University of Zagreb Faculty of Electrical Engineering and Computing

This doctoral thesis improves the transfer learning for sequence labeling tasks by adapting pre-trained neural language models. The proposed improvements in transfer learning involve introducing a multi-task model that incorporates an additional signal, a method based on architectural modifications in autoregressive large language models, and a sequence labeling framework for autoregressive large language models utilizing supervised in-context fine-tuning combined with response-oriented adaptation strategies. The first improvement is given in the context of domain transfer for the event trigger detection task. The domain transfer of the event trigger detection task can be improved by incorporating an additional signal obtained from a domain-independent text processing system into a multi-task model. The second improvement involves modifying the model's architecture. For that purpose, a method is proposed to enable bidirectional information flow across layers of autoregressive large language models. The third improvement utilizes autoregressive large language models as text generators through a generative supervised in-context fine-tuning framework. The proposed model, method, and framework demonstrate that pre-trained neural language models achieve their best performance on sequence labeling tasks when adapted through targeted transfer learning paradigms.

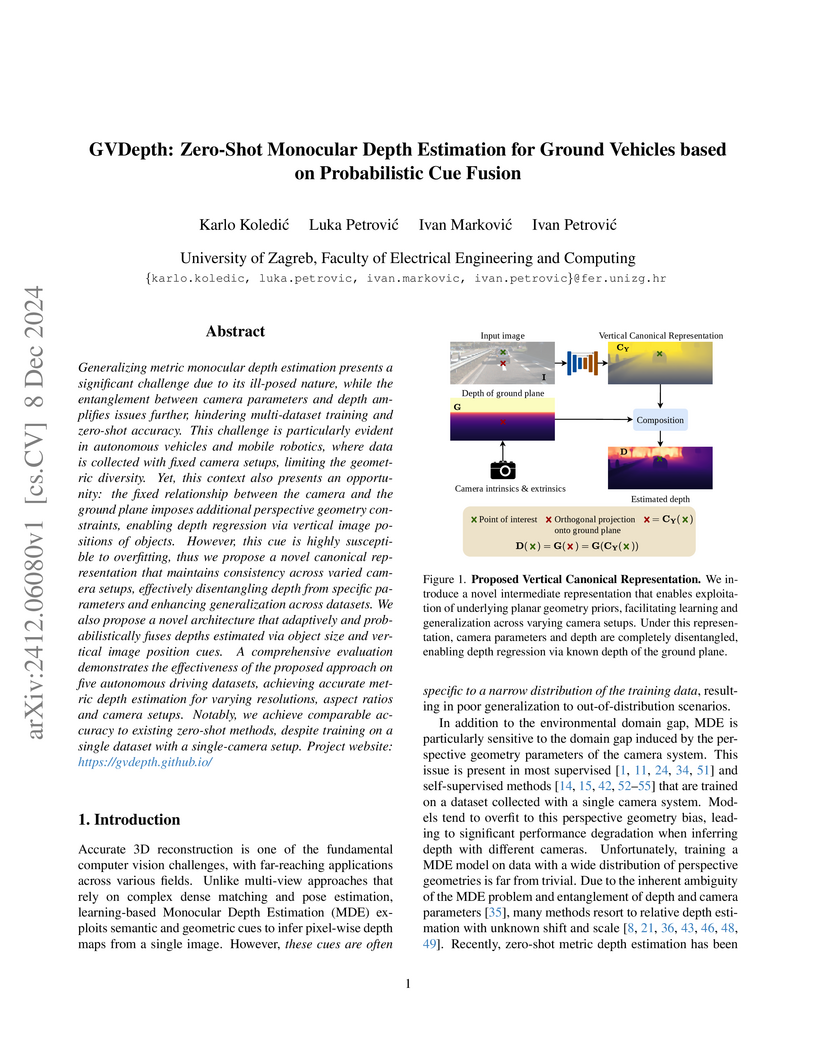

Generalizing metric monocular depth estimation presents a significant challenge due to its ill-posed nature, while the entanglement between camera parameters and depth amplifies issues further, hindering multi-dataset training and zero-shot accuracy. This challenge is particularly evident in autonomous vehicles and mobile robotics, where data is collected with fixed camera setups, limiting the geometric diversity. Yet, this context also presents an opportunity: the fixed relationship between the camera and the ground plane imposes additional perspective geometry constraints, enabling depth regression via vertical image positions of objects. However, this cue is highly susceptible to overfitting, thus we propose a novel canonical representation that maintains consistency across varied camera setups, effectively disentangling depth from specific parameters and enhancing generalization across datasets. We also propose a novel architecture that adaptively and probabilistically fuses depths estimated via object size and vertical image position cues. A comprehensive evaluation demonstrates the effectiveness of the proposed approach on five autonomous driving datasets, achieving accurate metric depth estimation for varying resolutions, aspect ratios and camera setups. Notably, we achieve comparable accuracy to existing zero-shot methods, despite training on a single dataset with a single-camera setup.

Researchers from the University of Zagreb developed MC-PanDA, a framework that enhances unsupervised domain adaptation for panoptic segmentation by integrating mask-wide and pixel-level confidence from mask transformers into the self-training process. The approach achieved a 6.2 PQ point improvement over previous methods on the Synthia→Cityscapes benchmark, establishing new state-of-the-art performance.

Outlier detection is an essential capability in safety-critical applications of supervised visual recognition. Most of the existing methods deliver best results by encouraging standard closed-set models to produce low-confidence predictions in negative training data. However, that approach conflates prediction uncertainty with recognition of the negative class. We therefore reconsider direct prediction of K+1 logits that correspond to K groundtruth classes and one outlier class. This setup allows us to formulate a novel anomaly score as an ensemble of in-distribution uncertainty and the posterior of the outlier class which we term negative objectness. Now outliers can be independently detected due to i) high prediction uncertainty or ii) similarity with negative data. We embed our method into a dense prediction architecture with mask-level recognition over K+2 classes. The training procedure encourages the novel K+2-th class to learn negative objectness at pasted negative instances. Our models outperform the current state-of-the art on standard benchmarks for image-wide and pixel-level outlier detection with and without training on real negative data.

This article presents a review of typical techniques used in three distinct

aspects of deep learning model development for audio generation. In the first

part of the article, we provide an explanation of audio representations,

beginning with the fundamental audio waveform. We then progress to the

frequency domain, with an emphasis on the attributes of human hearing, and

finally introduce a relatively recent development. The main part of the article

focuses on explaining basic and extended deep learning architecture variants,

along with their practical applications in the field of audio generation. The

following architectures are addressed: 1) Autoencoders 2) Generative

adversarial networks 3) Normalizing flows 4) Transformer networks 5) Diffusion

models. Lastly, we will examine four distinct evaluation metrics that are

commonly employed in audio generation. This article aims to offer novice

readers and beginners in the field a comprehensive understanding of the current

state of the art in audio generation methods as well as relevant studies that

can be explored for future research.

In this paper, we present a survey of deep learning-based methods for the regression of gaze direction vector from head and eye images. We describe in detail numerous published methods with a focus on the input data, architecture of the model, and loss function used to supervise the model. Additionally, we present a list of datasets that can be used to train and evaluate gaze direction regression methods. Furthermore, we noticed that the results reported in the literature are often not comparable one to another due to differences in the validation or even test subsets used. To address this problem, we re-evaluated several methods on the commonly used in-the-wild Gaze360 dataset using the same validation setup. The experimental results show that the latest methods, although claiming state-of-the-art results, significantly underperform compared with some older methods. Finally, we show that the temporal models outperform the static models under static test conditions.

Researchers from the University of Zagreb developed a deep reinforcement learning model for intraday trading that incorporates positional context features (e.g., time left in day, current holdings) in addition to price-based indicators. This model consistently achieved superior risk-adjusted returns, outperforming benchmark strategies, particularly in commodity markets with cumulative returns exceeding 400% over a 2013-2022 testing period.

25 Sep 2025

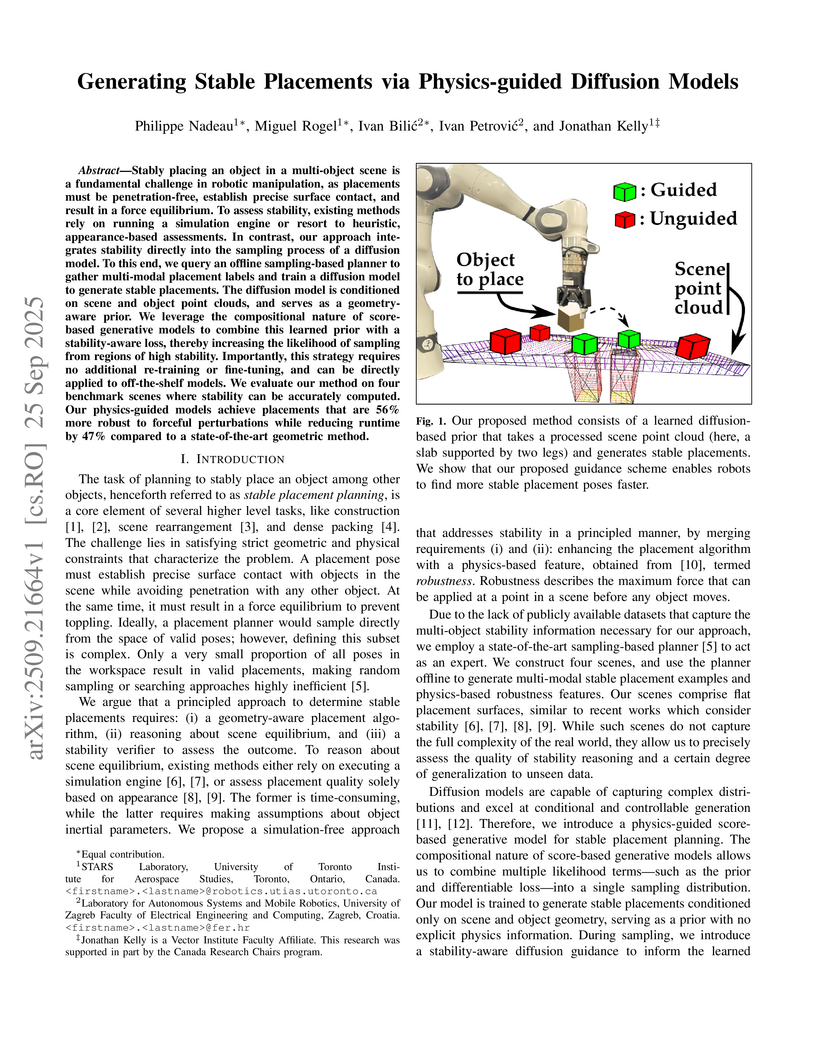

Stably placing an object in a multi-object scene is a fundamental challenge in robotic manipulation, as placements must be penetration-free, establish precise surface contact, and result in a force equilibrium. To assess stability, existing methods rely on running a simulation engine or resort to heuristic, appearance-based assessments. In contrast, our approach integrates stability directly into the sampling process of a diffusion model. To this end, we query an offline sampling-based planner to gather multi-modal placement labels and train a diffusion model to generate stable placements. The diffusion model is conditioned on scene and object point clouds, and serves as a geometry-aware prior. We leverage the compositional nature of score-based generative models to combine this learned prior with a stability-aware loss, thereby increasing the likelihood of sampling from regions of high stability. Importantly, this strategy requires no additional re-training or fine-tuning, and can be directly applied to off-the-shelf models. We evaluate our method on four benchmark scenes where stability can be accurately computed. Our physics-guided models achieve placements that are 56% more robust to forceful perturbations while reducing runtime by 47% compared to a state-of-the-art geometric method.

Unconstrained gaze estimation is the process of determining where a subject

is directing their visual attention in uncontrolled environments. Gaze

estimation systems are important for a myriad of tasks such as driver

distraction monitoring, exam proctoring, accessibility features in modern

software, etc. However, these systems face challenges in real-world scenarios,

partially due to the low resolution of in-the-wild images and partially due to

insufficient modeling of head-eye interactions in current state-of-the-art

(SOTA) methods. This paper introduces DHECA-SuperGaze, a deep learning-based

method that advances gaze prediction through super-resolution (SR) and a dual

head-eye cross-attention (DHECA) module. Our dual-branch convolutional backbone

processes eye and multiscale SR head images, while the proposed DHECA module

enables bidirectional feature refinement between the extracted visual features

through cross-attention mechanisms. Furthermore, we identified critical

annotation errors in one of the most diverse and widely used gaze estimation

datasets, Gaze360, and rectified the mislabeled data. Performance evaluation on

Gaze360 and GFIE datasets demonstrates superior within-dataset performance of

the proposed method, reducing angular error (AE) by 0.48{\deg} (Gaze360) and

2.95{\deg} (GFIE) in static configurations, and 0.59{\deg} (Gaze360) and

3.00{\deg} (GFIE) in temporal settings compared to prior SOTA methods.

Cross-dataset testing shows improvements in AE of more than 1.53{\deg}

(Gaze360) and 3.99{\deg} (GFIE) in both static and temporal settings,

validating the robust generalization properties of our approach.

Large Language Models (LLMs) have demonstrated remarkable performance across diverse domains. However, effectively leveraging their vast knowledge for training smaller downstream models remains an open challenge, especially in domains like tabular data learning, where simpler models are often preferred due to interpretability and efficiency.

In this paper, we introduce a novel yet straightforward method for incorporating LLM-generated global task feature attributions into the training process of smaller networks. Specifically, we propose an attribution-matching regularization term that aligns the training dynamics of the smaller model with the insights provided by the LLM. By doing so, our approach yields superior performance in few-shot learning scenarios. Notably, our method requires only black-box API access to the LLM, making it easy to integrate into existing training pipelines with minimal computational overhead.

Furthermore, we demonstrate how this method can be used to address common issues in real-world datasets, such as skewness and bias. By integrating high-level knowledge from LLMs, our approach improves generalization, even when training data is limited or imbalanced. We validate its effectiveness through extensive experiments across multiple tasks, demonstrating improved learning efficiency and model robustness.

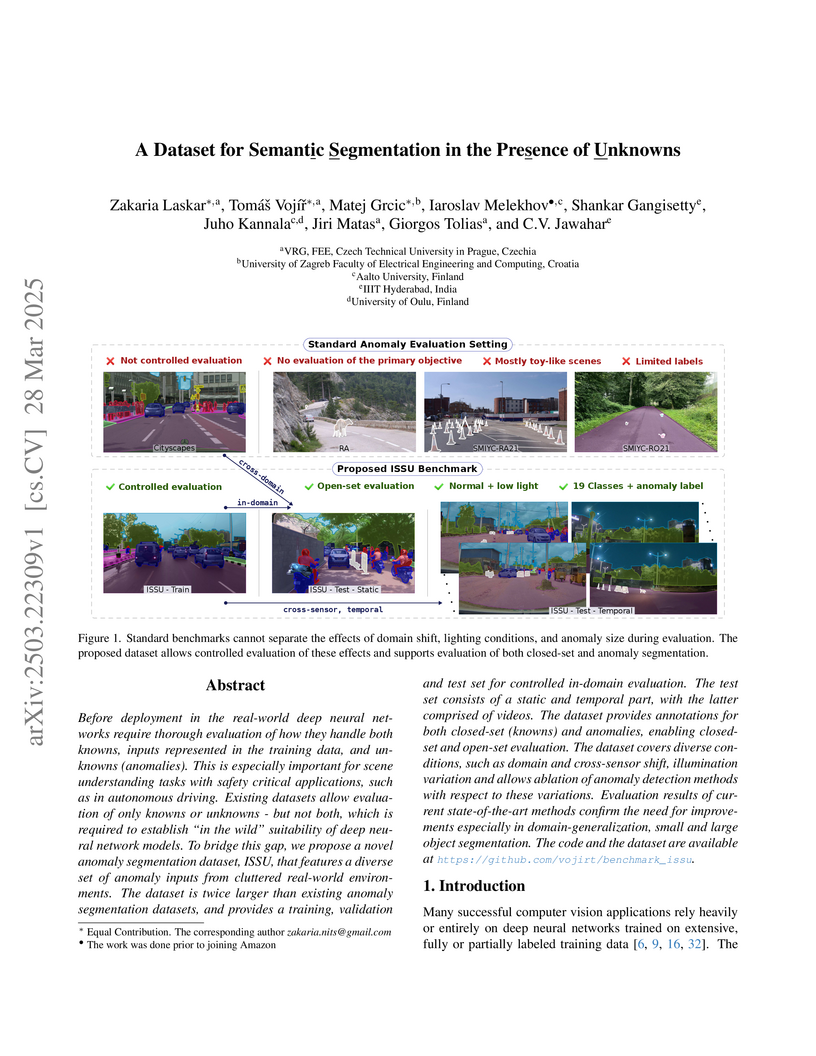

Before deployment in the real-world deep neural networks require thorough

evaluation of how they handle both knowns, inputs represented in the training

data, and unknowns (anomalies). This is especially important for scene

understanding tasks with safety critical applications, such as in autonomous

driving. Existing datasets allow evaluation of only knowns or unknowns - but

not both, which is required to establish "in the wild" suitability of deep

neural network models. To bridge this gap, we propose a novel anomaly

segmentation dataset, ISSU, that features a diverse set of anomaly inputs from

cluttered real-world environments. The dataset is twice larger than existing

anomaly segmentation datasets, and provides a training, validation and test set

for controlled in-domain evaluation. The test set consists of a static and

temporal part, with the latter comprised of videos. The dataset provides

annotations for both closed-set (knowns) and anomalies, enabling closed-set and

open-set evaluation. The dataset covers diverse conditions, such as domain and

cross-sensor shift, illumination variation and allows ablation of anomaly

detection methods with respect to these variations. Evaluation results of

current state-of-the-art methods confirm the need for improvements especially

in domain-generalization, small and large object segmentation.

13 Nov 2024

The sequential nature of decision-making in financial asset trading aligns naturally with the reinforcement learning (RL) framework, making RL a common approach in this domain. However, the low signal-to-noise ratio in financial markets results in noisy estimates of environment components, including the reward function, which hinders effective policy learning by RL agents. Given the critical importance of reward function design in RL problems, this paper introduces a novel and more robust reward function by leveraging imitation learning, where a trend labeling algorithm acts as an expert. We integrate imitation (expert's) feedback with reinforcement (agent's) feedback in a model-free RL algorithm, effectively embedding the imitation learning problem within the RL paradigm to handle the stochasticity of reward signals. Empirical results demonstrate that this novel approach improves financial performance metrics compared to traditional benchmarks and RL agents trained solely using reinforcement feedback.

In this paper we propose a novel approach to generate a synthetic aerial

dataset for application in UAV monitoring. We propose to accentuate shape-based

object representation by applying texture randomization. A diverse dataset with

photorealism in all parameters such as shape, pose, lighting, scale, viewpoint,

etc. except for atypical textures is created in a 3D modelling software

Blender. Our approach specifically targets two conditions in aerial images

where texture of objects is difficult to detect, namely challenging

illumination and objects occupying only a small portion of the image.

Experimental evaluation of YOLO and Faster R-CNN detectors trained on synthetic

data with randomized textures confirmed our approach by increasing the mAP

value (17 and 3.7 percentage points for YOLO; 20 and 1.1 percentage points for

Faster R-CNN) on two test datasets of real images, both containing UAV-to-UAV

images with motion blur. Testing on different domains, we conclude that the

more the generalisation ability is put to the test, the more apparent are the

advantages of the shape-based representation.

The adoption of the Internet of Things (IoT) deployments has led to a sharp

increase in network traffic as a vast number of IoT devices communicate with

each other and IoT services through the IoT-edge-cloud continuum. This network

traffic increase poses a major challenge to the global communications

infrastructure since it hinders communication performance and also puts

significant strain on the energy consumption of IoT devices. To address these

issues, efficient and collaborative IoT solutions which enable information

exchange while reducing the transmitted data and associated network traffic are

crucial. This survey provides a comprehensive overview of the communication

technologies and protocols as well as data reduction strategies that contribute

to this goal. First, we present a comparative analysis of prevalent

communication technologies in the IoT domain, highlighting their unique

characteristics and exploring the potential for protocol composition and joint

usage to enhance overall communication efficiency within the IoT-edge-cloud

continuum. Next, we investigate various data traffic reduction techniques

tailored to the IoT-edge-cloud context and evaluate their applicability and

effectiveness on resource-constrained and devices. Finally, we investigate the

emerging concepts that have the potential to further reduce the communication

overhead in the IoT-edge-cloud continuum, including cross-layer optimization

strategies and Edge AI techniques for IoT data reduction. The paper offers a

comprehensive roadmap for developing efficient and scalable solutions across

the layers of the IoT-edge-cloud continuum that are beneficial for real-time

processing to alleviate network congestion in complex IoT environments.

Nanjing University of Science and Technology Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications

Nanjing UniversityUniversity of LjubljanaUniversity of LiverpoolBeijing University of Posts and Telecommunications Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUT

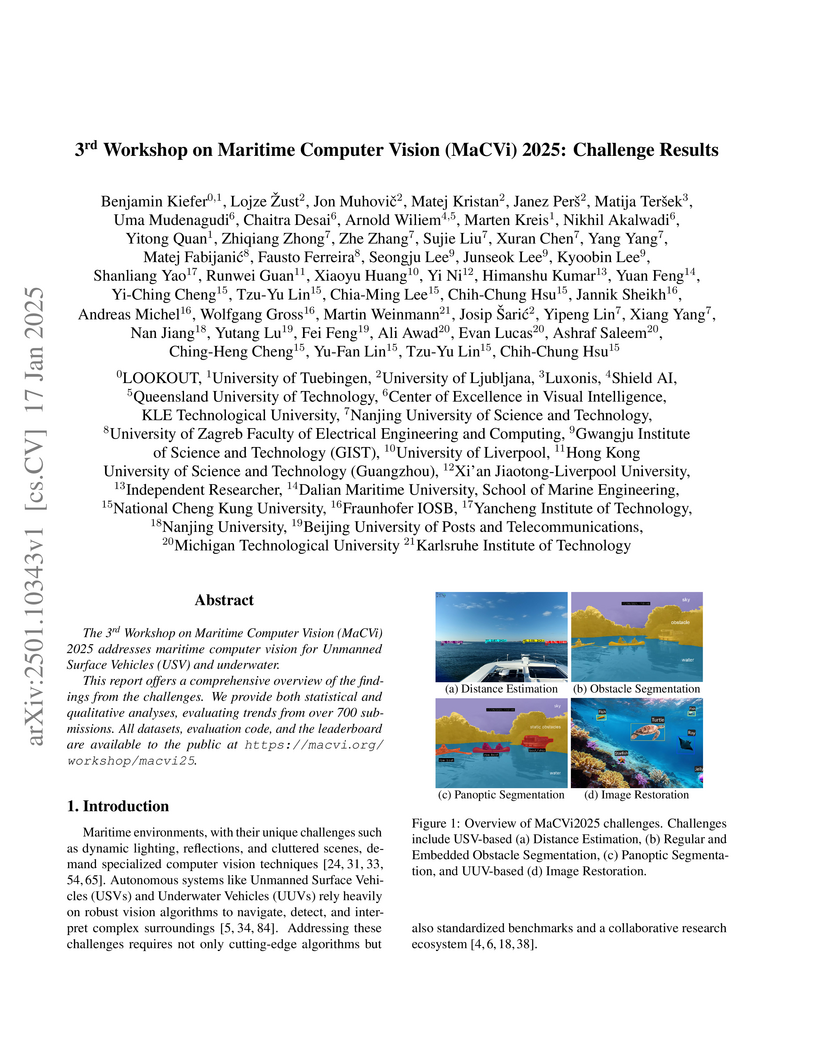

Karlsruhe Institute of TechnologyGwangju Institute of Science and TechnologyDalian Maritime UniversityNational Cheng Kung UniversityMichigan Technological UniversityYancheng Institute of TechnologyHong Kong University of Science and Technology (Guangzhou)Queensland University of TechnologyXi'an Jiaotong Liverpool UniversityUniversity of TuebingenUniversity of Zagreb Faculty of Electrical Engineering and ComputingLuxonisKLE Technological UniversityShield AIFraunhofer IOSBLOOKOUTThe 3rd Workshop on Maritime Computer Vision (MaCVi) 2025 addresses maritime computer vision for Unmanned Surface Vehicles (USV) and underwater. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 700 submissions. All datasets, evaluation code, and the leaderboard are available to the public at this https URL.

In this paper, a new variant of an algorithm for normalized cross-correlation (NCC) is proposed in the context of template matching in images. The proposed algorithm is based on the precomputation of a template image approximation, enabling more efficient calculation of approximate NCC with the source image than using the original template for exact NCC calculation. The approximate template is precomputed from the template image by a split-and-merge approach, resulting in a decomposition to axis-aligned rectangular segments, whose sizes depend on per-segment pixel intensity variance. In the approximate template, each segment is assigned the mean grayscale value of the corresponding pixels from the original template. The proposed algorithm achieves superior computational performance with negligible NCC approximation errors compared to the well-known Fast Fourier Transform (FFT)-based NCC algorithm, when applied on less visually complex and/or smaller template images. In other cases, the proposed algorithm can maintain either computational performance or NCC approximation error within the range of the FFT-based algorithm, but not both.

Recent advancement in deep-neural network performance led to the development

of new state-of-the-art approaches in numerous areas. However, the black-box

nature of neural networks often prohibits their use in areas where model

explainability and model transparency are crucial. Over the years, researchers

proposed many algorithms to aid neural network understanding and provide

additional information to the human expert. One of the most popular methods

being Layer-Wise Relevance Propagation (LRP). This method assigns local

relevance based on the pixel-wise decomposition of nonlinear classifiers. With

the rise of attribution method research, there has emerged a pressing need to

assess and evaluate their performance. Numerous metrics have been proposed,

each assessing an individual property of attribution methods such as

faithfulness, robustness or localization. Unfortunately, no single metric is

deemed optimal for every case, and researchers often use several metrics to

test the quality of the attribution maps. In this work, we address the

shortcomings of the current LRP formulations and introduce a novel method for

determining the relevance of input neurons through layer-wise relevance

propagation. Furthermore, we apply this approach to the recently developed

Vision Transformer architecture and evaluate its performance against existing

methods on two image classification datasets, namely ImageNet and PascalVOC.

Our results clearly demonstrate the advantage of our proposed method.

Furthermore, we discuss the insufficiencies of current evaluation metrics for

attribution-based explainability and propose a new evaluation metric that

combines the notions of faithfulness, robustness and contrastiveness. We

utilize this new metric to evaluate the performance of various

attribution-based methods. Our code is available at:

this https URL

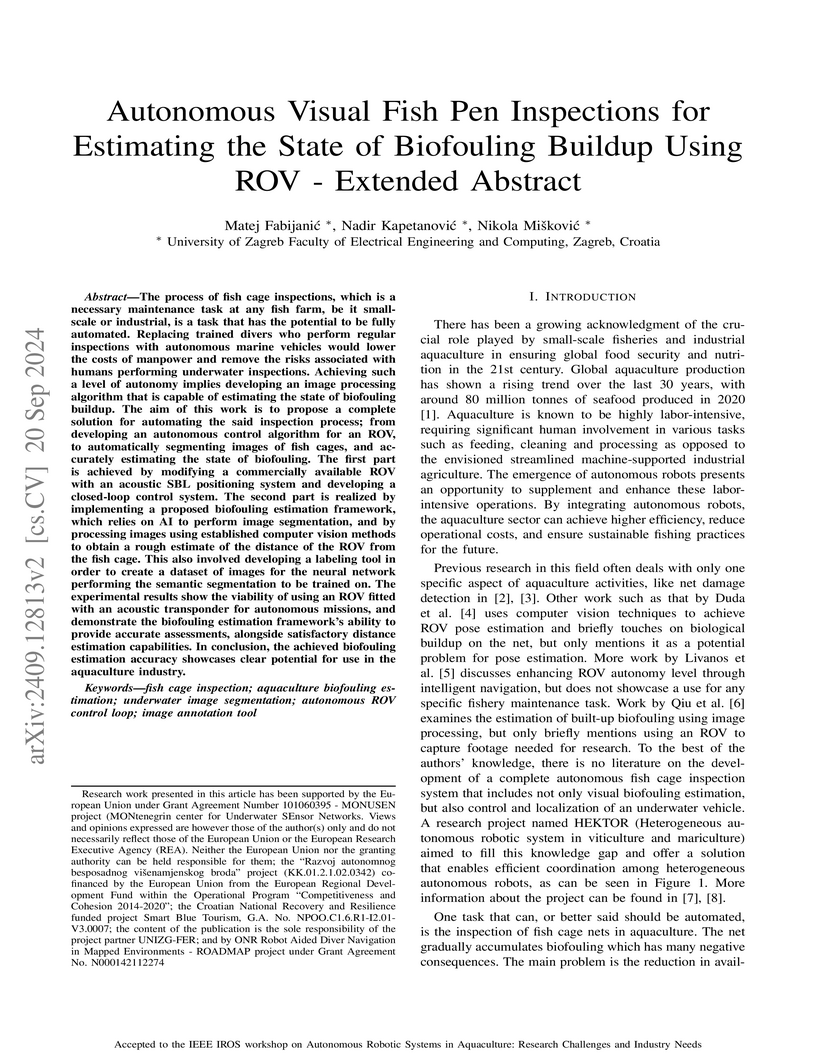

The process of fish cage inspections, which is a necessary maintenance task

at any fish farm, be it small scale or industrial, is a task that has the

potential to be fully automated. Replacing trained divers who perform regular

inspections with autonomous marine vehicles would lower the costs of manpower

and remove the risks associated with humans performing underwater inspections.

Achieving such a level of autonomy implies developing an image processing

algorithm that is capable of estimating the state of biofouling buildup. The

aim of this work is to propose a complete solution for automating the said

inspection process; from developing an autonomous control algorithm for an ROV,

to automatically segmenting images of fish cages, and accurately estimating the

state of biofouling. The first part is achieved by modifying a commercially

available ROV with an acoustic SBL positioning system and developing a

closed-loop control system. The second part is realized by implementing a

proposed biofouling estimation framework, which relies on AI to perform image

segmentation, and by processing images using established computer vision

methods to obtain a rough estimate of the distance of the ROV from the fish

cage. This also involved developing a labeling tool in order to create a

dataset of images for the neural network performing the semantic segmentation

to be trained on. The experimental results show the viability of using an ROV

fitted with an acoustic transponder for autonomous missions, and demonstrate

the biofouling estimation framework's ability to provide accurate assessments,

alongside satisfactory distance estimation capabilities. In conclusion, the

achieved biofouling estimation accuracy showcases clear potential for use in

the aquaculture industry.

28 Feb 2025

University of TrentoRuđer Bošković InstituteLaboratoire de Physique de l’Ecole normale supérieureINAF Istituto di Astrofisica Spaziale e Fisica cosmica di MilanoUniversity of Zagreb Faculty of Electrical Engineering and ComputingScuola Universitaria Superiore IUSS PaviaINAF

Osservatorio Astrofisico di ArcetriCenter for Astrophysics Harvard & SmithsonianINAF

Osservatorio Astronomico di Brera

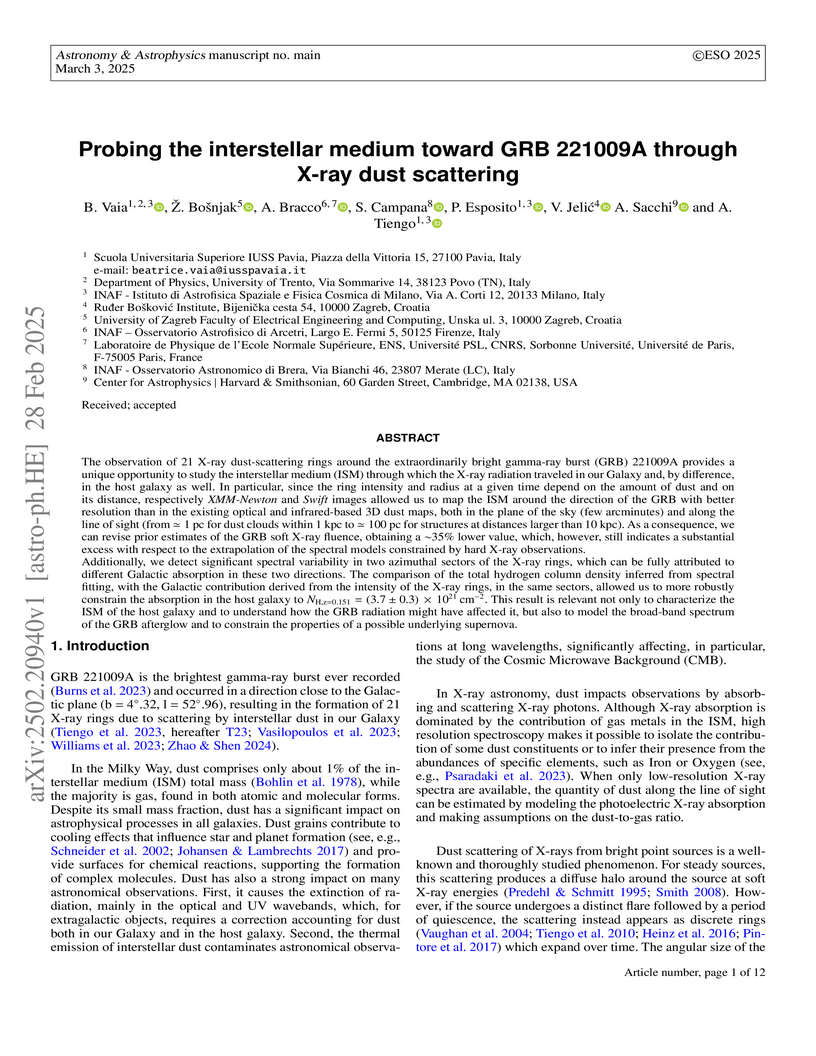

Researchers leveraged X-ray dust scattering from the exceptionally bright GRB 221009A to create a 3D map of Galactic dust with arcminute angular and parsec-level distance resolution, while robustly constraining the GRB's host galaxy absorption and refining its soft X-ray fluence estimate.

Face recognition under extreme head poses is a challenging task. Ideally, a face recognition system should perform well across different head poses, which is known as pose-invariant face recognition. To achieve pose invariance, current approaches rely on sophisticated methods, such as face frontalization and various facial feature extraction model architectures. However, these methods are somewhat impractical in real-life settings and are typically evaluated on small scientific datasets, such as Multi-PIE. In this work, we propose the inverse method of face frontalization, called face defrontalization, to augment the training dataset of facial feature extraction model. The method does not introduce any time overhead during the inference step. The method is composed of: 1) training an adapted face defrontalization FFWM model on a frontal-profile pairs dataset, which has been preprocessed using our proposed face alignment method; 2) training a ResNet-50 facial feature extraction model based on ArcFace loss on a raw and randomly defrontalized large-scale dataset, where defrontalization was performed with our previously trained face defrontalization model. Our method was compared with the existing approaches on four open-access datasets: LFW, AgeDB, CFP, and Multi-PIE. Defrontalization shows improved results compared to models without defrontalization, while the proposed adjustments show clear superiority over the state-of-the-art face frontalization FFWM method on three larger open-access datasets, but not on the small Multi-PIE dataset for extreme poses (75 and 90 degrees). The results suggest that at least some of the current methods may be overfitted to small datasets.

There are no more papers matching your filters at the moment.