Østfold University College

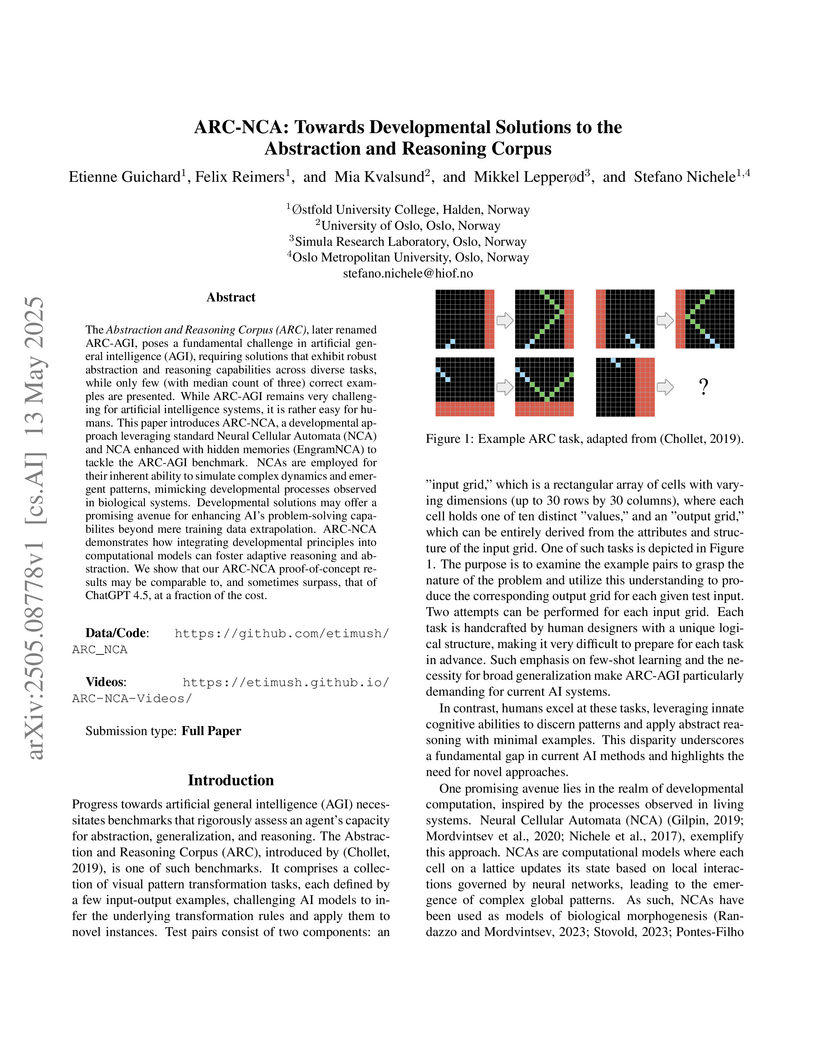

This work proposes Neural Cellular Automata as a bio-inspired, developmental approach to solving the Abstraction and Reasoning Corpus (ARC-AGI) benchmark. EngramNCA v3 achieved a 12.9% solve rate on ARC-AGI, demonstrating performance comparable to GPT-4.5 at significantly lower computational cost.

The new SysMLv2 adds mechanisms for the built-in specification of domain-specific concepts and language extensions. This feature promises to facilitate the creation of Domain-Specific Languages (DSLs) and interfacing with existing system descriptions and technical designs. In this paper, we review these features and evaluate SysMLv2's capabilities using concrete use cases. We develop DarTwin DSL, a DSL that formalizes the existing DarTwin notation for Digital Twin (DT) evolution, through SysMLv2, thereby supposedly enabling the wide application of DarTwin's evolution templates using any SysMLv2 tool. We demonstrate DarTwin DSL, but also point out limitations in the currently available tooling of SysMLv2 in terms of graphical notation capabilities. This work contributes to the growing field of Model-Driven Engineering (MDE) for DTs and combines it with the release of SysMLv2, thus integrating a systematic approach with DT evolution management in systems engineering.

Recent advancements in large-scale pre-training of visual-language models on

paired image-text data have demonstrated impressive generalization capabilities

for zero-shot tasks. Building on this success, efforts have been made to adapt

these image-based visual-language models, such as CLIP, for videos extending

their zero-shot capabilities to the video domain. While these adaptations have

shown promising results, they come at a significant computational cost and

struggle with effectively modeling the crucial temporal aspects inherent to the

video domain. In this study, we present EZ-CLIP, a simple and efficient

adaptation of CLIP that addresses these challenges. EZ-CLIP leverages temporal

visual prompting for seamless temporal adaptation, requiring no fundamental

alterations to the core CLIP architecture while preserving its remarkable

generalization abilities. Moreover, we introduce a novel learning objective

that guides the temporal visual prompts to focus on capturing motion, thereby

enhancing its learning capabilities from video data. We conducted extensive

experiments on five different benchmark datasets, thoroughly evaluating EZ-CLIP

for zero-shot learning and base-to-novel video action recognition, and also

demonstrating its potential for few-shot generalization.Impressively, with a

mere 5.2 million learnable parameters (as opposed to the 71.1 million in the

prior best model), EZ-CLIP can be efficiently trained on a single GPU,

outperforming existing approaches in several evaluations.

13 Oct 2021

University of Waterloo

University of Waterloo Monash UniversityUniversity of Goettingen

Monash UniversityUniversity of Goettingen University of British ColumbiaNational University of Defense TechnologyThe Hebrew UniversityUniversity of AucklandLUT UniversityUniversity of OuluUniversity of TennesseeSharif University of TechnologyUniversity of TehranUniversit`a della Svizzera italianaKarlsruhe Institute of Technology (KIT)IBMUniversity of SaskatchewanUniversity of Maryland Baltimore CountyGuru Nanak Dev UniversityVrije Universiteit AmsterdamImam Abdulrahman Bin Faisal UniversityBrunel University LondonTrent UniversitySimula Research LaboratoryTechnical University of KosiceØstfold University CollegeEricsson Hungary Ltd.Technische Universit

at DarmstadtUniversity of W

urzburgUniversity of Missouri

Kansas CityQueens

’ University

University of British ColumbiaNational University of Defense TechnologyThe Hebrew UniversityUniversity of AucklandLUT UniversityUniversity of OuluUniversity of TennesseeSharif University of TechnologyUniversity of TehranUniversit`a della Svizzera italianaKarlsruhe Institute of Technology (KIT)IBMUniversity of SaskatchewanUniversity of Maryland Baltimore CountyGuru Nanak Dev UniversityVrije Universiteit AmsterdamImam Abdulrahman Bin Faisal UniversityBrunel University LondonTrent UniversitySimula Research LaboratoryTechnical University of KosiceØstfold University CollegeEricsson Hungary Ltd.Technische Universit

at DarmstadtUniversity of W

urzburgUniversity of Missouri

Kansas CityQueens

’ UniversityContext: Tangled commits are changes to software that address multiple

concerns at once. For researchers interested in bugs, tangled commits mean that

they actually study not only bugs, but also other concerns irrelevant for the

study of bugs.

Objective: We want to improve our understanding of the prevalence of tangling

and the types of changes that are tangled within bug fixing commits.

Methods: We use a crowd sourcing approach for manual labeling to validate

which changes contribute to bug fixes for each line in bug fixing commits. Each

line is labeled by four participants. If at least three participants agree on

the same label, we have consensus.

Results: We estimate that between 17% and 32% of all changes in bug fixing

commits modify the source code to fix the underlying problem. However, when we

only consider changes to the production code files this ratio increases to 66%

to 87%. We find that about 11% of lines are hard to label leading to active

disagreements between participants. Due to confirmed tangling and the

uncertainty in our data, we estimate that 3% to 47% of data is noisy without

manual untangling, depending on the use case.

Conclusion: Tangled commits have a high prevalence in bug fixes and can lead

to a large amount of noise in the data. Prior research indicates that this

noise may alter results. As researchers, we should be skeptics and assume that

unvalidated data is likely very noisy, until proven otherwise.

We investigate the potential of bio-inspired evolutionary algorithms for designing quantum circuits with specific goals, focusing on two particular tasks. The first one is motivated by the ideas of Artificial Life that are used to reproduce stochastic cellular automata with given rules. We test the robustness of quantum implementations of the cellular automata for different numbers of quantum gates The second task deals with the sampling of quantum circuits that generate highly entangled quantum states, which constitute an important resource for quantum computing. In particular, an evolutionary algorithm is employed to optimize circuits with respect to a fitness function defined with the Mayer-Wallach entanglement measure. We demonstrate that, by balancing the mutation rate between exploration and exploitation, we can find entangling quantum circuits for up to five qubits. We also discuss the trade-off between the number of gates in quantum circuits and the computational costs of finding the gate arrangements leading to a strongly entangled state. Our findings provide additional insight into the trade-off between the complexity of a circuit and its performance, which is an important factor in the design of quantum circuits.

Nowadays, the continuous improvement and automation of industrial processes

has become a key factor in many fields, and in the chemical industry, it is no

exception. This translates into a more efficient use of resources, reduced

production time, output of higher quality and reduced waste. Given the

complexity of today's industrial processes, it becomes infeasible to monitor

and optimize them without the use of information technologies and analytics. In

recent years, machine learning methods have been used to automate processes and

provide decision support. All of this, based on analyzing large amounts of data

generated in a continuous manner. In this paper, we present the results of

applying machine learning methods during a chemical sulphonation process with

the objective of automating the product quality analysis which currently is

performed manually. We used data from process parameters to train different

models including Random Forest, Neural Network and linear regression in order

to predict product quality values. Our experiments showed that it is possible

to predict those product quality values with good accuracy, thus, having the

potential to reduce time. Specifically, the best results were obtained with

Random Forest with a mean absolute error of 0.089 and a correlation of 0.978.

Reservoir Computing (RC) offers a viable option to deploy AI algorithms on

low-end embedded system platforms. Liquid State Machine (LSM) is a bio-inspired

RC model that mimics the cortical microcircuits and uses spiking neural

networks (SNN) that can be directly realized on neuromorphic hardware. In this

paper, we present a novel Parallelized LSM (PLSM) architecture that

incorporates spatio-temporal read-out layer and semantic constraints on model

output. To the best of our knowledge, such a formulation has been done for the

first time in literature, and it offers a computationally lighter alternative

to traditional deep-learning models. Additionally, we also present a

comprehensive algorithm for the implementation of parallelizable SNNs and LSMs

that are GPU-compatible. We implement the PLSM model to classify

unintentional/accidental video clips, using the Oops dataset. From the

experimental results on detecting unintentional action in video, it can be

observed that our proposed model outperforms a self-supervised model and a

fully supervised traditional deep learning model. All the implemented codes can

be found at our repository

this https URL

The discovery of complex multicellular organism development took millions of

years of evolution. The genome of such a multicellular organism guides the

development of its body from a single cell, including its control system. Our

goal is to imitate this natural process using a single neural cellular

automaton (NCA) as a genome for modular robotic agents. In the introduced

approach, called Neural Cellular Robot Substrate (NCRS), a single NCA guides

the growth of a robot and the cellular activity which controls the robot during

deployment. We also introduce three benchmark environments, which test the

ability of the approach to grow different robot morphologies. In this paper,

NCRSs are trained with covariance matrix adaptation evolution strategy

(CMA-ES), and covariance matrix adaptation MAP-Elites (CMA-ME) for quality

diversity, which we show leads to more diverse robot morphologies with higher

fitness scores. While the NCRS can solve the easier tasks from our benchmark

environments, the success rate reduces when the difficulty of the task

increases. We discuss directions for future work that may facilitate the use of

the NCRS approach for more complex domains.

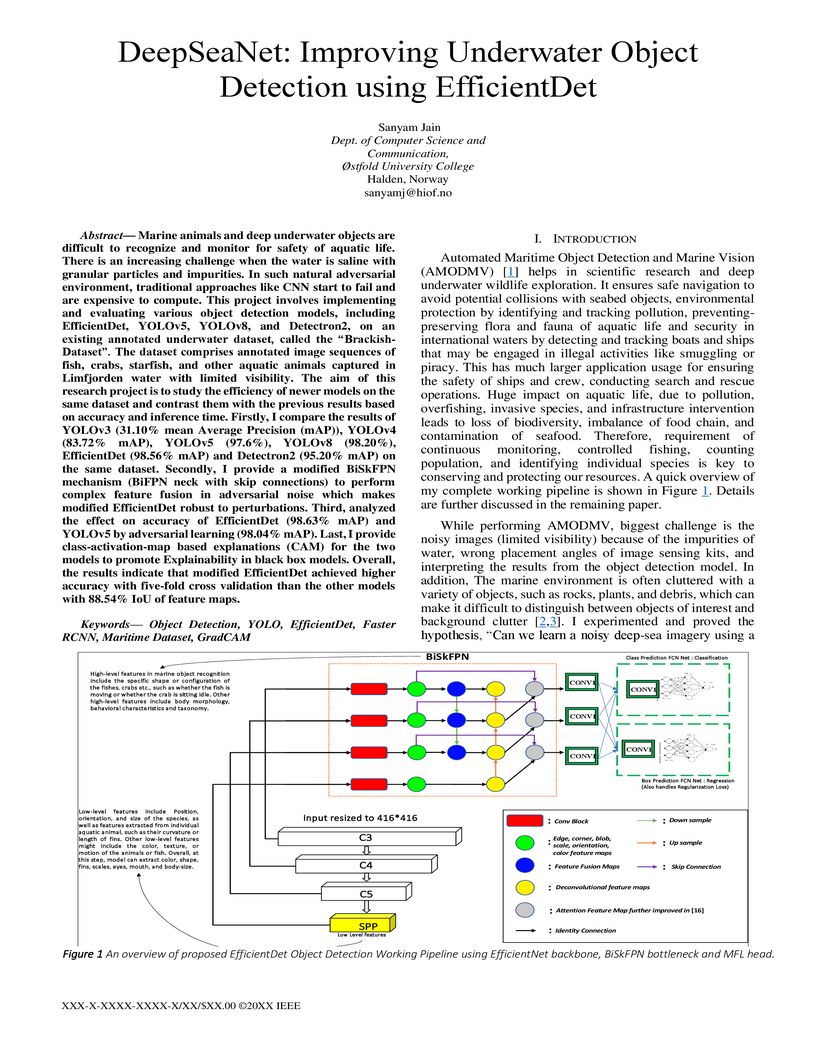

Marine animals and deep underwater objects are difficult to recognize and monitor for safety of aquatic life. There is an increasing challenge when the water is saline with granular particles and impurities. In such natural adversarial environment, traditional approaches like CNN start to fail and are expensive to compute. This project involves implementing and evaluating various object detection models, including EfficientDet, YOLOv5, YOLOv8, and Detectron2, on an existing annotated underwater dataset, called the Brackish-Dataset. The dataset comprises annotated image sequences of fish, crabs, starfish, and other aquatic animals captured in Limfjorden water with limited visibility. The aim of this research project is to study the efficiency of newer models on the same dataset and contrast them with the previous results based on accuracy and inference time. Firstly, I compare the results of YOLOv3 (31.10% mean Average Precision (mAP)), YOLOv4 (83.72% mAP), YOLOv5 (97.6%), YOLOv8 (98.20%), EfficientDet (98.56% mAP) and Detectron2 (95.20% mAP) on the same dataset. Secondly, I provide a modified BiSkFPN mechanism (BiFPN neck with skip connections) to perform complex feature fusion in adversarial noise which makes modified EfficientDet robust to perturbations. Third, analyzed the effect on accuracy of EfficientDet (98.63% mAP) and YOLOv5 by adversarial learning (98.04% mAP). Last, I provide class activation map based explanations (CAM) for the two models to promote Explainability in black box models. Overall, the results indicate that modified EfficientDet achieved higher accuracy with five-fold cross validation than the other models with 88.54% IoU of feature maps.

This paper implements and investigates popular adversarial attacks on the YOLOv5 Object Detection algorithm. The paper explores the vulnerability of the YOLOv5 to adversarial attacks in the context of traffic and road sign detection. The paper investigates the impact of different types of attacks, including the Limited memory Broyden Fletcher Goldfarb Shanno (L-BFGS), the Fast Gradient Sign Method (FGSM) attack, the Carlini and Wagner (C&W) attack, the Basic Iterative Method (BIM) attack, the Projected Gradient Descent (PGD) attack, One Pixel Attack, and the Universal Adversarial Perturbations attack on the accuracy of YOLOv5 in detecting traffic and road signs. The results show that YOLOv5 is susceptible to these attacks, with misclassification rates increasing as the magnitude of the perturbations increases. We also explain the results using saliency maps. The findings of this paper have important implications for the safety and reliability of object detection algorithms used in traffic and transportation systems, highlighting the need for more robust and secure models to ensure their effectiveness in real-world applications.

A primary challenge in utilizing in-vitro biological neural networks for computations is finding good encoding and decoding schemes for inputting and decoding data to and from the networks. Furthermore, identifying the optimal parameter settings for a given combination of encoding and decoding schemes adds additional complexity to this challenge. In this study we explore stimulation timing as an encoding method, i.e. we encode information as the delay between stimulation pulses and identify the bounds and acuity of stimulation timings which produce linearly separable spike responses. We also examine the optimal readout parameters for a linear decoder in the form of epoch length, time bin size and epoch offset. Our results suggest that stimulation timings between 36 and 436ms may be optimal for encoding and that different combinations of readout parameters may be optimal at different parts of the evoked spike response.

In this thesis, we explore the use of complex systems to study learning and

adaptation in natural and artificial systems. The goal is to develop autonomous

systems that can learn without supervision, develop on their own, and become

increasingly complex over time. Complex systems are identified as a suitable

framework for understanding these phenomena due to their ability to exhibit

growth of complexity. Being able to build learning algorithms that require

limited to no supervision would enable greater flexibility and adaptability in

various applications. By understanding the fundamental principles of learning

in complex systems, we hope to advance our ability to design and implement

practical learning algorithms in the future. This thesis makes the following

key contributions: the development of a general complexity metric that we apply

to search for complex systems that exhibit growth of complexity, the

introduction of a coarse-graining method to study computations in large-scale

complex systems, and the development of a metric for learning efficiency as

well as a benchmark dataset for evaluating the speed of learning algorithms.

Our findings add substantially to our understanding of learning and adaptation

in natural and artificial systems. Moreover, our approach contributes to a

promising new direction for research in this area. We hope these findings will

inspire the development of more effective and efficient learning algorithms in

the future.

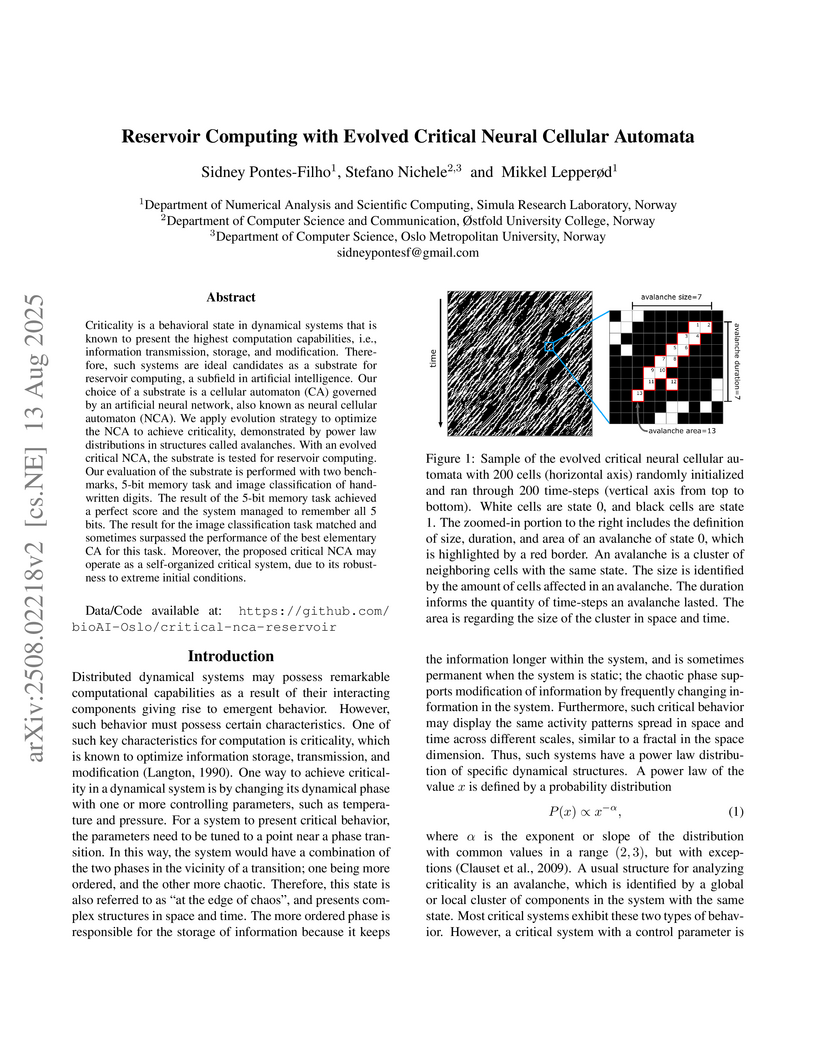

Criticality is a behavioral state in dynamical systems that is known to present the highest computation capabilities, i.e., information transmission, storage, and modification. Therefore, such systems are ideal candidates as a substrate for reservoir computing, a subfield in artificial intelligence. Our choice of a substrate is a cellular automaton (CA) governed by an artificial neural network, also known as neural cellular automaton (NCA). We apply evolution strategy to optimize the NCA to achieve criticality, demonstrated by power law distributions in structures called avalanches. With an evolved critical NCA, the substrate is tested for reservoir computing. Our evaluation of the substrate is performed with two benchmarks, 5-bit memory task and image classification of handwritten digits. The result of the 5-bit memory task achieved a perfect score and the system managed to remember all 5 bits. The result for the image classification task matched and sometimes surpassed the performance of the best elementary CA for this task. Moreover, the proposed critical NCA may operate as a self-organized critical system, due to its robustness to extreme initial conditions.

22 Jul 2020

Background: Medical device development projects must follow proper directives and regulations to be able to market and sell the end-product in their respective territories. The regulations describe requirements that seem to be opposite to efficient software development and short time-to-market. As agile approaches, like DevOps, are becoming more and more popular in software industry, a discrepancy between these modern methods and traditional regulated development has been reported. Although examples of successful adoption in this context exist, the research is sparse. Aims: The objective of this study is twofold: to review the current state of DevOps adoption in regulated medical device environment; and to propose a checklist based on that review for introducing DevOps in that context. Method: A multivocal literature review is performed and evidence is synthesized from sources published between 2015 to March of 2020 to capture the opinions of experts and community in this field. Results: Our findings reveal that adoption of DevOps in a regulated medical device environment such as ISO 13485 has its challenges, but potential benefits may outweigh those in areas such as regulatory, compliance, security, organizational and technical. Conclusion: DevOps for regulated medical device environments is a highly appealing approach as compared to traditional methods and could be particularly suited for regulated medical development. However, an organization must properly anchor a transition to DevOps in top-level management and be supportive in the initial phase utilizing professional coaching and space for iterative learning; as such an initiative is a complex organizational and technical task.

15 Jun 2018

Data analysis and monitoring of road networks in terms of reliability and

performance are valuable but hard to achieve, especially when the analytical

information has to be available to decision makers on time. The gathering and

analysis of the observable facts can be used to infer knowledge about traffic

congestion over time and gain insights into the roads safety. However, the

continuous monitoring of live traffic information produces a vast amount of

data that makes it difficult for business intelligence (BI) tools to generate

metrics and key performance indicators (KPI) in nearly real-time. In order to

overcome these limitations, we propose the application of a big-data based and

process-centric approach that integrates with operational traffic information

systems to give insights into the road network's efficiency. This paper

demonstrates how the adoption of an existent process-oriented DSS solution with

big-data support can be leveraged to monitor and analyse live traffic data on

an acceptable response time basis.

30 Jun 2022

Background: Software Engineering regularly views communication between project participants as a tool for solving various problems in software development. Objective: Formulate research questions in areas related to CHASE. Method: A day-long discussion of five participants at the in-person day of the 15th International Conference on Cooperative and Human Aspects of Software Engineering (CHASE 2022) on May 23rd 2022. Results: It is not rare in industrial SE projects that communication is not just a tool or technique to be applied but also represents a resource, which, when lacking, threatens project success. This situation might arise when a person required to make decisions (especially regarding requirements, budgets, or priorities) is often unavailable. It may be helpful to frame communication as a scarce resource to understand the key difficulty of such situations. Conclusion: We call for studies that focus on the allocation and management of scarce communication resources of stakeholders as a lens to analyze software engineering projects.

08 Jul 2018

Deep learning and deep architectures are emerging as the best machine

learning methods so far in many practical applications such as reducing the

dimensionality of data, image classification, speech recognition or object

segmentation. In fact, many leading technology companies such as Google,

Microsoft or IBM are researching and using deep architectures in their systems

to replace other traditional models. Therefore, improving the performance of

these models could make a strong impact in the area of machine learning.

However, deep learning is a very fast-growing research domain with many core

methodologies and paradigms just discovered over the last few years. This

thesis will first serve as a short summary of deep learning, which tries to

include all of the most important ideas in this research area. Based on this

knowledge, we suggested, and conducted some experiments to investigate the

possibility of improving the deep learning based on automatic programming

(ADATE). Although our experiments did produce good results, there are still

many more possibilities that we could not try due to limited time as well as

some limitations of the current ADATE version. I hope that this thesis can

promote future work on this topic, especially when the next version of ADATE

comes out. This thesis also includes a short analysis of the power of ADATE

system, which could be useful for other researchers who want to know what it is

capable of.

AGA-GAN: Attribute Guided Attention Generative Adversarial Network with U-Net for Face Hallucination

AGA-GAN: Attribute Guided Attention Generative Adversarial Network with U-Net for Face Hallucination

The performance of facial super-resolution methods relies on their ability to

recover facial structures and salient features effectively. Even though the

convolutional neural network and generative adversarial network-based methods

deliver impressive performances on face hallucination tasks, the ability to use

attributes associated with the low-resolution images to improve performance is

unsatisfactory. In this paper, we propose an Attribute Guided Attention

Generative Adversarial Network which employs novel attribute guided attention

(AGA) modules to identify and focus the generation process on various facial

features in the image. Stacking multiple AGA modules enables the recovery of

both high and low-level facial structures. We design the discriminator to learn

discriminative features exploiting the relationship between the high-resolution

image and their corresponding facial attribute annotations. We then explore the

use of U-Net based architecture to refine existing predictions and synthesize

further facial details. Extensive experiments across several metrics show that

our AGA-GAN and AGA-GAN+U-Net framework outperforms several other cutting-edge

face hallucination state-of-the-art methods. We also demonstrate the viability

of our method when every attribute descriptor is not known and thus,

establishing its application in real-world scenarios.

Medical image segmentation can provide detailed information for clinical

analysis which can be useful for scenarios where the detailed location of a

finding is important. Knowing the location of disease can play a vital role in

treatment and decision-making. Convolutional neural network (CNN) based

encoder-decoder techniques have advanced the performance of automated medical

image segmentation systems. Several such CNN-based methodologies utilize

techniques such as spatial- and channel-wise attention to enhance performance.

Another technique that has drawn attention in recent years is residual dense

blocks (RDBs). The successive convolutional layers in densely connected blocks

are capable of extracting diverse features with varied receptive fields and

thus, enhancing performance. However, consecutive stacked convolutional

operators may not necessarily generate features that facilitate the

identification of the target structures. In this paper, we propose a

progressive alternating attention network (PAANet). We develop progressive

alternating attention dense (PAAD) blocks, which construct a guiding attention

map (GAM) after every convolutional layer in the dense blocks using features

from all scales. The GAM allows the following layers in the dense blocks to

focus on the spatial locations relevant to the target region. Every alternate

PAAD block inverts the GAM to generate a reverse attention map which guides

ensuing layers to extract boundary and edge-related information, refining the

segmentation process. Our experiments on three different biomedical image

segmentation datasets exhibit that our PAANet achieves favourable performance

when compared to other state-of-the-art methods.

In this work, we argue that the search for Artificial General Intelligence

(AGI) should start from a much lower level than human-level intelligence. The

circumstances of intelligent behavior in nature resulted from an organism

interacting with its surrounding environment, which could change over time and

exert pressure on the organism to allow for learning of new behaviors or

environment models. Our hypothesis is that learning occurs through interpreting

sensory feedback when an agent acts in an environment. For that to happen, a

body and a reactive environment are needed. We evaluate a method to evolve a

biologically-inspired artificial neural network that learns from environment

reactions named Neuroevolution of Artificial General Intelligence (NAGI), a

framework for low-level AGI. This method allows the evolutionary

complexification of a randomly-initialized spiking neural network with adaptive

synapses, which controls agents instantiated in mutable environments. Such a

configuration allows us to benchmark the adaptivity and generality of the

controllers. The chosen tasks in the mutable environments are food foraging,

emulation of logic gates, and cart-pole balancing. The three tasks are

successfully solved with rather small network topologies and therefore it opens

up the possibility of experimenting with more complex tasks and scenarios where

curriculum learning is beneficial.

There are no more papers matching your filters at the moment.