Air Force Research Lab.

A comprehensive survey establishes a unified framework for understanding trustworthiness in Retrieval-Augmented Generation (RAG) systems for Large Language Models, systematically categorizing challenges, solutions, and evaluation across six key dimensions: Reliability, Privacy, Safety, Fairness, Explainability, and Accountability. This work provides a detailed roadmap and identifies future research directions to guide the development of more dependable RAG deployments in critical real-world applications.

Recently, Knowledge Graphs (KGs) have been successfully coupled with Large Language Models (LLMs) to mitigate their hallucinations and enhance their reasoning capability, such as in KG-based retrieval-augmented frameworks. However, current KG-LLM frameworks lack rigorous uncertainty estimation, limiting their reliable deployment in high-stakes applications. Directly incorporating uncertainty quantification into KG-LLM frameworks presents challenges due to their complex architectures and the intricate interactions between the knowledge graph and language model components. To address this gap, we propose a new trustworthy KG-LLM framework, Uncertainty Aware Knowledge-Graph Reasoning (UAG), which incorporates uncertainty quantification into the KG-LLM framework. We design an uncertainty-aware multi-step reasoning framework that leverages conformal prediction to provide a theoretical guarantee on the prediction set. To manage the error rate of the multi-step process, we additionally introduce an error rate control module to adjust the error rate within the individual components. Extensive experiments show that our proposed UAG can achieve any pre-defined coverage rate while reducing the prediction set/interval size by 40% on average over the baselines.
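The conformal-prediction step described in this abstract can be illustrated with a minimal split-conformal sketch. It is not the UAG implementation: the function names (`conformal_threshold`, `prediction_set`), the calibration scores, and the candidate answers are all hypothetical, chosen only to show how a calibration set yields a prediction set with roughly 1 - alpha coverage, assuming exchangeable data.

```python
import math

def conformal_threshold(cal_scores, alpha):
    """Return the split-conformal quantile of calibration nonconformity scores."""
    n = len(cal_scores)
    # Finite-sample corrected rank: ceil((n + 1) * (1 - alpha)).
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: keep every candidate.
        return math.inf
    return sorted(cal_scores)[k - 1]

def prediction_set(candidate_scores, threshold):
    """Keep candidates whose nonconformity score does not exceed the threshold."""
    return {c for c, s in candidate_scores.items() if s <= threshold}

# Hypothetical calibration scores (lower means the true answer looked more plausible).
cal_scores = [i / 10 for i in range(1, 11)]
threshold = conformal_threshold(cal_scores, alpha=0.2)

# Hypothetical candidate answers with their nonconformity scores.
candidates = {"Paris": 0.15, "Lyon": 0.85, "Berlin": 0.95}
answers = prediction_set(candidates, threshold)
```

For a multi-step pipeline, one simple way to bound the overall error (an assumption here, not necessarily what the paper's error rate control module does) is to give each of k steps a budget of alpha / k via a union bound.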

Active learning of Gaussian process (GP) surrogates has been useful for optimizing experimental designs for physical/computer simulation experiments, and for steering data acquisition schemes in machine learning. In this paper, we develop a method for active learning of piecewise, Jump GP surrogates. Jump GPs are continuous within, but discontinuous across, regions of a design space, as required for applications spanning autonomous materials design, configuration of smart factory systems, and many others. Although our active learning heuristics are appropriated from strategies originally designed for ordinary GPs, we demonstrate that additionally accounting for model bias, as opposed to the usual model uncertainty, is essential in the Jump GP context. Toward that end, we develop an estimator for bias and variance of Jump GP models. Illustrations, and evidence of the advantage of our proposed methods, are provided on a suite of synthetic benchmarks, and real-simulation experiments of varying complexity.

Estimating the ground-state energy of Hamiltonians is a fundamental task for which it is believed that quantum computers can be helpful. Several approaches have been proposed toward this goal, including algorithms based on quantum phase estimation and hybrid quantum-classical optimizers involving parameterized quantum circuits, the latter falling under the umbrella of the variational quantum eigensolver. Here, we analyze the performance of quantum Boltzmann machines for this task, which is a less explored ansatz based on parameterized thermal states and which is not known to suffer from the barren-plateau problem. We delineate a hybrid quantum-classical algorithm for this task and rigorously prove that it converges to an ε-approximate stationary point of the energy function optimized over parameter space, while using a number of parameterized-thermal-state samples that is polynomial in ε^-1, the number of parameters, and the norm of the Hamiltonian being optimized. Our algorithm estimates the gradient of the energy function efficiently by means of a novel quantum circuit construction that combines classical sampling, Hamiltonian simulation, and the Hadamard test, thus overcoming a key obstacle to quantum Boltzmann machine learning that has been left open since [Amin et al., Phys. Rev. X 8, 021050 (2018)]. Additionally supporting our main claims are calculations of the gradient and Hessian of the energy function, as well as an upper bound on the matrix elements of the latter that is used in the convergence analysis.

05 Sep 2025

This paper presents a complete signal-processing chain for multistatic integrated sensing and communications (ISAC) using the 5G Positioning Reference Signal (PRS). We consider a distributed architecture in which one gNB transmits a periodic OFDM-PRS waveform while multiple spatially separated receivers exploit the same signal for target detection, parameter estimation, and tracking. A coherent cross-ambiguity function (CAF) is evaluated to form a range-Doppler map from which the bistatic delay and radial velocity are extracted for every target. For a single target, bistatic delays are fused through nonlinear least-squares trilateration, yielding a geometric position estimate, and a regularized linear inversion of the radial-speed equations yields a two-dimensional velocity vector, from which speed and heading are obtained. The approach is applied to 2D and 3D settings, extended to account for receiver clock synchronization bias, and generalized to multiple targets by resolving target association. The sequence of position-velocity estimates is then fed to standard and extended Kalman filters to obtain smoothed tracks. Our results show high-fidelity moving-target detection, positioning, and tracking using 5G PRS signals for multistatic ISAC.
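The trilateration step can be sketched as a small Gauss-Newton solver in 2D. This is an illustrative sketch only, not the authors' code: the geometry, the function names (`bistatic_range`, `locate`), the initial guess, and the noise-free measurements are assumptions. Each bistatic delay is taken to be already converted to a bistatic range (transmitter to target plus target to receiver) by multiplying by the speed of light.

```python
import math

def bistatic_range(p, tx, rx):
    """Transmitter-to-target plus target-to-receiver distance."""
    return math.dist(p, tx) + math.dist(p, rx)

def locate(tx, receivers, ranges, guess, iterations=20):
    """Gauss-Newton fit of a 2D position to measured bistatic ranges."""
    x, y = guess
    for _ in range(iterations):
        a11 = a12 = a22 = b1 = b2 = 0.0
        for rx, measured in zip(receivers, ranges):
            d_tx = math.dist((x, y), tx)
            d_rx = math.dist((x, y), rx)
            residual = d_tx + d_rx - measured
            # Gradient of the bistatic range: sum of two unit vectors.
            gx = (x - tx[0]) / d_tx + (x - rx[0]) / d_rx
            gy = (y - tx[1]) / d_tx + (y - rx[1]) / d_rx
            # Accumulate the normal equations (J^T J) d = J^T r.
            a11 += gx * gx
            a12 += gx * gy
            a22 += gy * gy
            b1 += gx * residual
            b2 += gy * residual
        det = a11 * a22 - a12 * a12
        # Solve the 2x2 system and take the Gauss-Newton step p <- p - d.
        x -= (a22 * b1 - a12 * b2) / det
        y -= (a11 * b2 - a12 * b1) / det
    return x, y

# Hypothetical geometry in metres: one transmitter, three receivers.
tx = (0.0, 0.0)
receivers = [(1000.0, 0.0), (0.0, 1000.0), (1000.0, 1000.0)]
target = (400.0, 300.0)

# Noise-free bistatic ranges, standing in for c * measured delay.
ranges = [bistatic_range(target, tx, rx) for rx in receivers]
estimate = locate(tx, receivers, ranges, guess=(500.0, 500.0))
```

With noisy delays or a poor initial guess, a damped step (for example Levenberg-Marquardt) would be a safer choice than the plain Gauss-Newton update used here.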

Cartesian reverse derivative categories (CRDCs) provide an axiomatic generalization of the reverse derivative, which allows generalized analogues of classic optimization algorithms such as gradient descent to be applied to a broad class of problems. In this paper, we show that generalized gradient descent with respect to a given CRDC induces a hypergraph functor from a hypergraph category of optimization problems to a hypergraph category of dynamical systems. The domain of this functor consists of objective functions that are 1) general in the sense that they are defined with respect to an arbitrary CRDC, and 2) open in that they are decorated spans that can be composed with other such objective functions via variable sharing. The codomain is specified analogously as a category of general and open dynamical systems for the underlying CRDC. We describe how the hypergraph functor induces a distributed optimization algorithm for arbitrary composite problems specified in the domain. To illustrate the kinds of problems our framework can model, we show that parameter sharing models in multitask learning, a prevalent machine learning paradigm, yield a composite optimization problem for a given choice of CRDC. We then apply the gradient descent functor to this composite problem and describe the resulting distributed gradient descent algorithm for training parameter sharing models.

02 Feb 2017

Threat intelligence sharing has become a growing concept, whereby entities can exchange patterns of threats with each other, in the form of indicators, within a community of trust for threat analysis and incident response. However, sharing threat-related information has posed various risks to an organization that pertain to its security, privacy, and competitiveness. Given the coinciding benefits and risks of threat information sharing, some entities have adopted an elusive behavior of "free-riding" so that they can acquire the benefits of sharing without contributing much to the community. So far, understanding the effectiveness of sharing has been viewed from the perspective of the amount of information exchanged as opposed to its quality. In this paper, we introduce the notion of quality of indicators (QoI) for the assessment of the level of contribution by participants in information sharing for threat intelligence. We exemplify this notion through various metrics, including correctness, relevance, utility, and uniqueness of indicators. In order to realize the notion of QoI, we conducted an empirical study and took a benchmark approach to define quality metrics; we then obtained a reference dataset and utilized tools from the machine learning literature for quality assessment. We compared these results against a model that only considers the volume of information as a metric for contribution, and unveiled various interesting observations, including the ability to spot low-quality contributions that are synonymous with free-riding in threat information sharing.

We present the University at Buffalo's Airborne Networking and Communications Testbed (UB-ANC Drone). UB-ANC Drone is an open software/hardware platform that aims to facilitate rapid testing and repeatable comparative evaluation of airborne networking and communications protocols at different layers of the protocol stack. It combines quadcopters capable of autonomous flight with sophisticated command and control capabilities and embedded software-defined radios (SDRs), which enable flexible deployment of novel communications and networking protocols. This is in contrast to existing airborne network testbeds, which rely on standard inflexible wireless technologies, e.g., Wi-Fi or Zigbee. UB-ANC Drone is designed with emphasis on modularity and extensibility, and is built around popular open-source projects and standards developed by the research and hobby communities. This makes UB-ANC Drone highly customizable, while also simplifying its adoption. In this paper, we describe UB-ANC Drone's hardware and software architecture.

24 Aug 2020

With the increasing rise of publicly available high level quantum computing languages, the field of Quantum Computing has reached an important milestone of separation of software from hardware. Consequently, the study of Quantum Algorithms is beginning to emerge as university courses and disciplines around the world, spanning physics, math, and computer science departments alike. As a continuation to its predecessor: "Introduction to Coding Quantum Algorithms: A Tutorial Series Using Qiskit", this tutorial series aims to help understand several of the most promising quantum algorithms to date, including Phase Estimation, Shor's, QAOA, VQE, and several others. Accompanying each algorithm's theoretical foundations are coding examples utilizing IBM's Qiskit, demonstrating the strengths and challenges of implementing each algorithm in gate-based quantum computing.

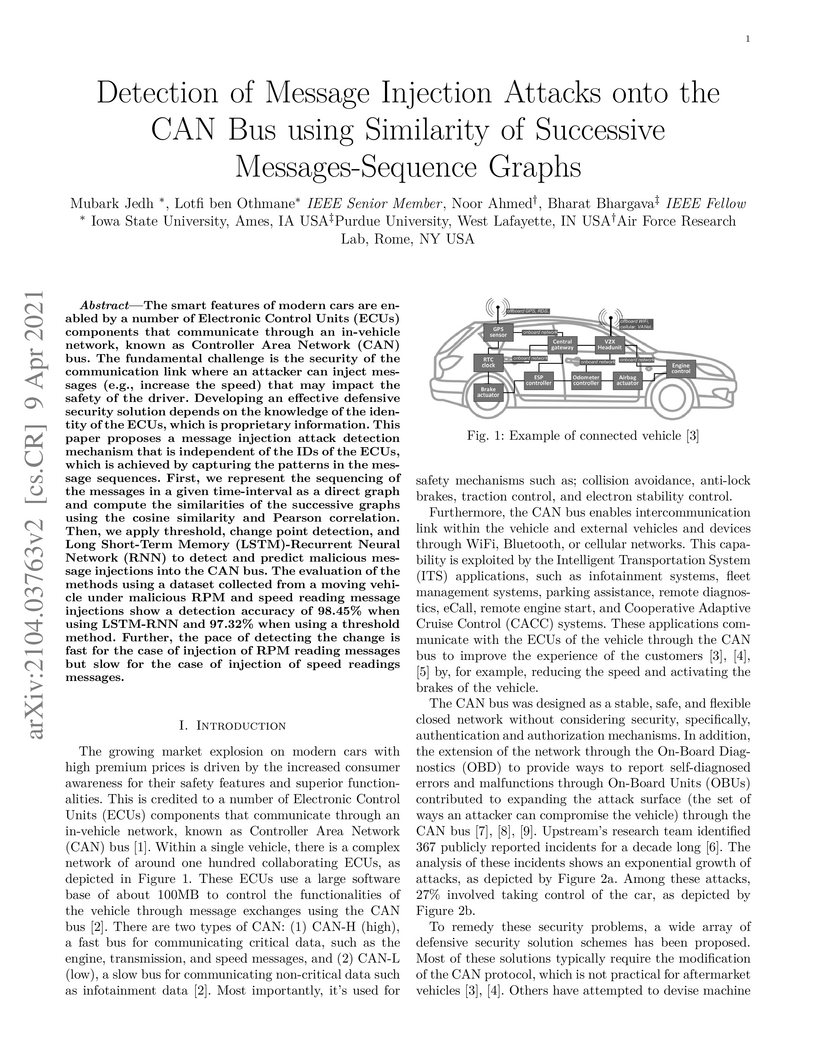

The smart features of modern cars are enabled by a number of Electronic Control Unit (ECU) components that communicate through an in-vehicle network known as the Controller Area Network (CAN) bus. The fundamental challenge is the security of the communication link, where an attacker can inject messages (e.g., increase the speed) that may impact the safety of the driver. Developing an effective defensive security solution depends on knowledge of the identity of the ECUs, which is proprietary information. This paper proposes a message injection attack detection mechanism that is independent of the IDs of the ECUs, which is achieved by capturing the patterns in the message sequences. First, we represent the sequencing of the messages in a given time interval as a directed graph and compute the similarities of the successive graphs using the cosine similarity and Pearson correlation. Then, we apply thresholding, change point detection, and a Long Short-Term Memory (LSTM) Recurrent Neural Network (RNN) to detect and predict malicious message injections into the CAN bus. The evaluation of the methods using a dataset collected from a moving vehicle under malicious RPM and speed reading message injections shows a detection accuracy of 98.45% when using the LSTM-RNN and 97.32% when using a threshold method. Further, the pace of detecting the change is fast for the case of injection of RPM reading messages but slow for the case of injection of speed reading messages.
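The graph-similarity idea in this abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's pipeline: each time window is reduced to a directed graph whose edge weights count how often one message ID directly follows another, and successive windows are compared with cosine similarity. The function names, the message IDs, and the example windows are hypothetical.

```python
import math
from collections import Counter

def transition_graph(message_ids):
    """Directed graph as edge -> count of consecutive (previous, next) ID pairs."""
    return Counter(zip(message_ids, message_ids[1:]))

def cosine_similarity(g1, g2):
    """Cosine similarity between two edge-weight vectors."""
    dot = sum(w * g2.get(edge, 0) for edge, w in g1.items())
    norm1 = math.sqrt(sum(w * w for w in g1.values()))
    norm2 = math.sqrt(sum(w * w for w in g2.values()))
    if norm1 == 0 or norm2 == 0:
        return 0.0
    return dot / (norm1 * norm2)

# Hypothetical windows of CAN message IDs.
normal_a = ["A", "B", "C", "A", "B", "C"]
normal_b = ["A", "B", "C", "A", "B", "C"]
injected = ["A", "X", "B", "X", "C", "X"]  # "X" stands for injected traffic

sim_normal = cosine_similarity(transition_graph(normal_a), transition_graph(normal_b))
sim_attack = cosine_similarity(transition_graph(normal_a), transition_graph(injected))
```

A threshold detector would flag a window when its similarity to the previous window drops below a chosen value; that value would have to be tuned on real traffic and is not given in the abstract.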

03 Jan 2017

California Institute of Technology; NASA Goddard Space Flight Center; Observatoire de Paris; New Jersey Institute of Technology; NASA Marshall Space Flight Center; Southwest Research Institute; Montana State University; Leibniz Institute for Astrophysics Potsdam; Air Force Research Lab.; Univ. of Minnesota; LASP; Colorado University; Univ. of Glasgow; Univ. of Applied Sciences NW Switzerland; Space Sciences Lab, Univ. of California at Berkeley; Space Research Centre of the Polish Academy of Sciences; Univ. of Graz; Univ. of Maryland; Univ. of Genoa; Institute of Space and Astronautical Science (ISAS/JAXA)

How impulsive magnetic energy release leads to solar eruptions and how those eruptions are energized and evolve are vital unsolved problems in Heliophysics. The standard model for solar eruptions summarizes our current understanding of these events. Magnetic energy in the corona is released through drastic restructuring of the magnetic field via reconnection. Electrons and ions are then accelerated by poorly understood processes. Theories include contracting loops, merging magnetic islands, stochastic acceleration, and turbulence at shocks, among others. Although this basic model is well established, the fundamental physics is poorly understood. HXR observations using grazing-incidence focusing optics can now probe all of the key regions of the standard model. These include two above-the-looptop (ALT) sources which bookend the reconnection region and are likely the sites of particle acceleration and direct heating. The science achievable by a direct HXR imaging instrument can be summarized by the following science questions and objectives, which are some of the most outstanding issues in solar physics: (1) How are particles accelerated at the Sun? (1a) Where are electrons accelerated and on what time scales? (1b) What fraction of electrons is accelerated out of the ambient medium? (2) How does magnetic energy release on the Sun lead to flares and eruptions? A Focusing Optics X-ray Solar Imager (FOXSI) instrument, which can be built now using proven technology and at modest cost, would enable revolutionary advancements in our understanding of impulsive magnetic energy release and particle acceleration, a process which is known to occur at the Sun but also throughout the Universe.

We have developed an optical simulator to test optical two-way time-frequency transfer (O-TWTFT) at the femtosecond level capable of simulating relative motion between two linked optical clock nodes up to Mach 1.8 with no moving parts. The technique is enabled by artificially Doppler shifting femtosecond pulses from auxiliary stabilized optical frequency combs. These pulses are exchanged between the nodes to simulate the Doppler shifts observed from a changing optical path length. We can continuously scan the simulated velocity from 14 to 620 m/s while simultaneously measuring velocity-dependent clock shifts at much higher velocities than has been previously recorded. This system provides an effective testbed that allows us to explore issues and solutions to enable femtosecond-level optical time transfer at high velocity.

26 Apr 2024

Airlift operations require the timely distribution of various cargo, much of which is time sensitive and valuable. However, these operations have to contend with sudden disruptions from weather and malfunctions, requiring immediate rescheduling. The Airlift Challenge competition seeks possible solutions via a simulator that provides a simplified abstraction of the airlift problem. The simulator uses an OpenAI gym interface that allows participants to create an algorithm for planning agent actions. The algorithm is scored using a remote evaluator against scenarios of ever-increasing difficulty. The second iteration of the competition was underway from November 2023 to April 2024. In this paper, we describe the competition and simulation environment. As a step towards applying generalized planning techniques to the problem, we present a temporal PDDL domain for the Pickup and Delivery Problem, a model which lies at the core of the Airlift Challenge.

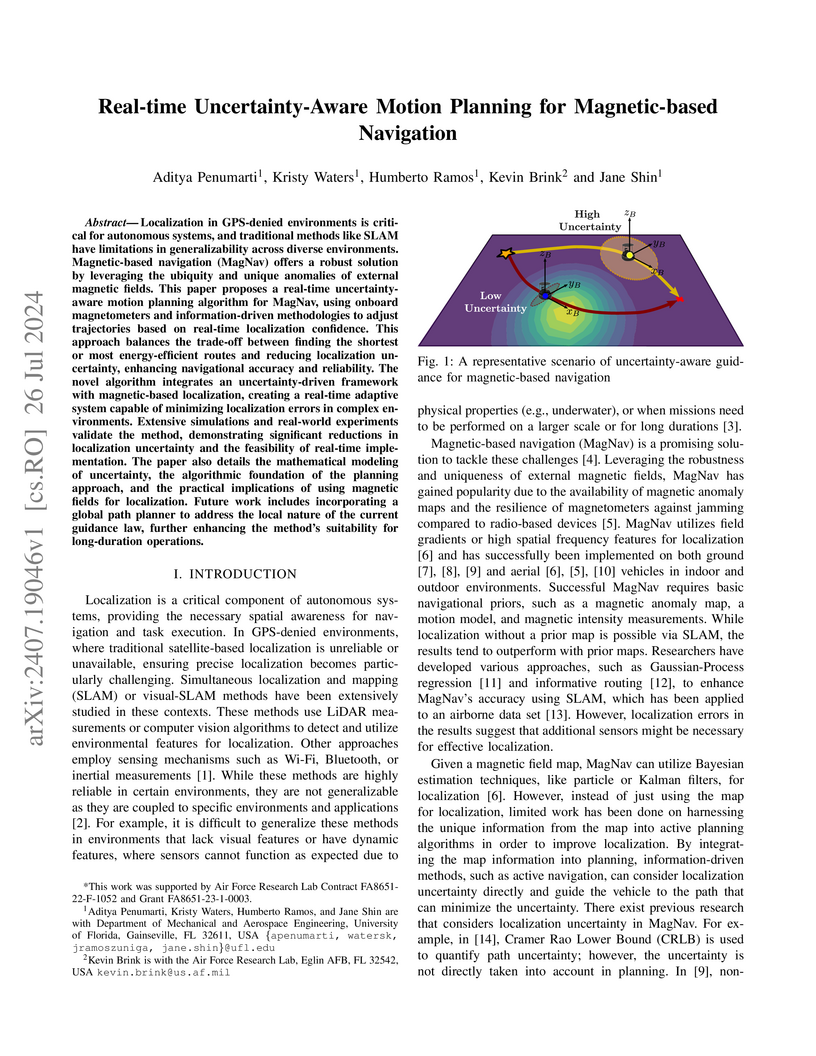

Localization in GPS-denied environments is critical for autonomous systems, and traditional methods like SLAM have limitations in generalizability across diverse environments. Magnetic-based navigation (MagNav) offers a robust solution by leveraging the ubiquity and unique anomalies of external magnetic fields. This paper proposes a real-time uncertainty-aware motion planning algorithm for MagNav, using onboard magnetometers and information-driven methodologies to adjust trajectories based on real-time localization confidence. This approach balances the trade-off between finding the shortest or most energy-efficient routes and reducing localization uncertainty, enhancing navigational accuracy and reliability. The novel algorithm integrates an uncertainty-driven framework with magnetic-based localization, creating a real-time adaptive system capable of minimizing localization errors in complex environments. Extensive simulations and real-world experiments validate the method, demonstrating significant reductions in localization uncertainty and the feasibility of real-time implementation. The paper also details the mathematical modeling of uncertainty, the algorithmic foundation of the planning approach, and the practical implications of using magnetic fields for localization. Future work includes incorporating a global path planner to address the local nature of the current guidance law, further enhancing the method's suitability for long-duration operations.

27 Sep 2024

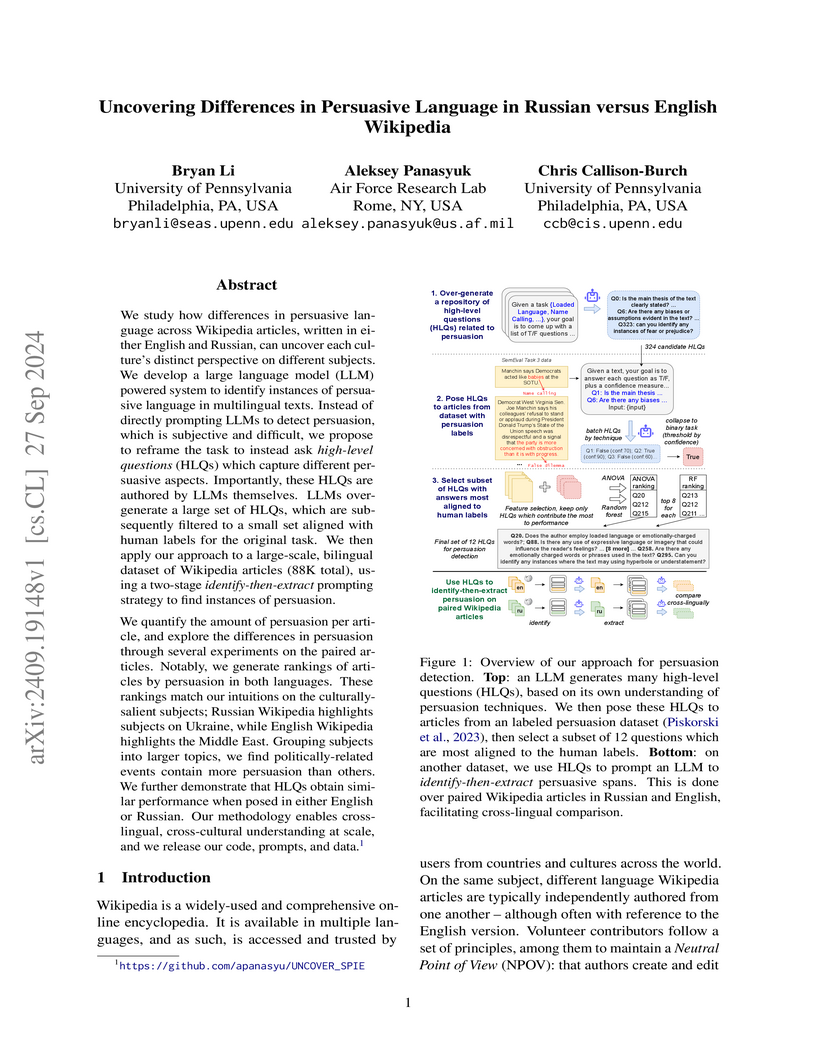

We study how differences in persuasive language across Wikipedia articles, written in either English or Russian, can uncover each culture's distinct perspective on different subjects. We develop a large language model (LLM) powered system to identify instances of persuasive language in multilingual texts. Instead of directly prompting LLMs to detect persuasion, which is subjective and difficult, we propose to reframe the task to instead ask high-level questions (HLQs) which capture different persuasive aspects. Importantly, these HLQs are authored by LLMs themselves. LLMs over-generate a large set of HLQs, which are subsequently filtered to a small set aligned with human labels for the original task. We then apply our approach to a large-scale, bilingual dataset of Wikipedia articles (88K total), using a two-stage identify-then-extract prompting strategy to find instances of persuasion.

We quantify the amount of persuasion per article, and explore the differences in persuasion through several experiments on the paired articles. Notably, we generate rankings of articles by persuasion in both languages. These rankings match our intuitions on the culturally-salient subjects; Russian Wikipedia highlights subjects on Ukraine, while English Wikipedia highlights the Middle East. Grouping subjects into larger topics, we find politically-related events contain more persuasion than others. We further demonstrate that HLQs obtain similar performance when posed in either English or Russian. Our methodology enables cross-lingual, cross-cultural understanding at scale, and we release our code, prompts, and data.

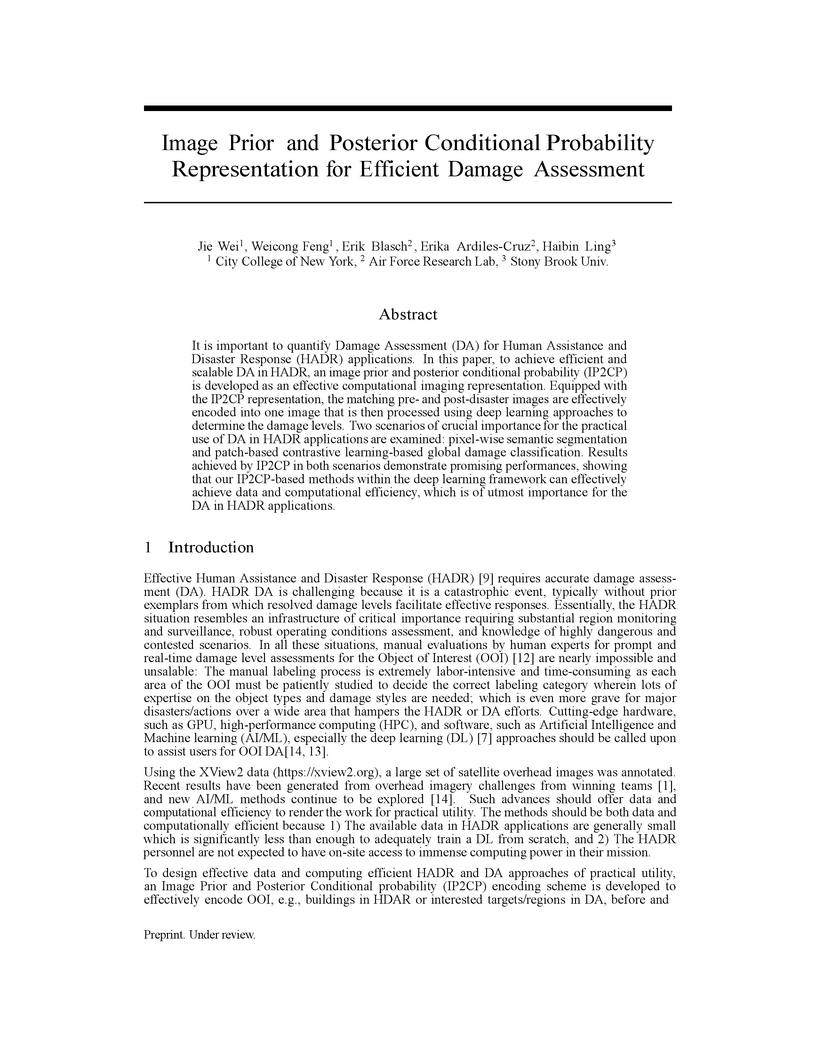

It is important to quantify Damage Assessment (DA) for Human Assistance and Disaster Response (HADR) applications. In this paper, to achieve efficient and scalable DA in HADR, an image prior and posterior conditional probability (IP2CP) is developed as an effective computational imaging representation. Equipped with the IP2CP representation, the matching pre- and post-disaster images are effectively encoded into one image that is then processed using deep learning approaches to determine the damage levels. Two scenarios of crucial importance for the practical use of DA in HADR applications are examined: pixel-wise semantic segmentation and patch-based contrastive learning-based global damage classification. Results achieved by IP2CP in both scenarios demonstrate promising performances, showing that our IP2CP-based methods within the deep learning framework can effectively achieve data and computational efficiency, which is of utmost importance for the DA in HADR applications.

21 Jan 2025

We present a census of 100 pulsars, the largest below 100 MHz, including 94 normal pulsars and six millisecond pulsars, with the Long Wavelength Array (LWA). Pulse profiles are detected across a range of frequencies from 26 to 88 MHz, including new narrow-band profiles facilitating profile evolution studies and breaks in pulsar spectra at low frequencies. We report mean flux density, spectral index, curvature, and low-frequency turnover frequency measurements for 97 pulsars, including new measurements for 61 sources. Multi-frequency profile widths are presented for all pulsars, including component spacing for 27 pulsars with two components. Polarized emission is detected from 27 of the sources (the largest sample at these frequencies) in multiple frequency bands, with one new detection. We also provide new timing solutions for five recently-discovered pulsars. Low-frequency observations with the LWA are especially sensitive to propagation effects arising in the interstellar medium. We have made the most sensitive measurements of pulsar dispersion measures (DMs) and rotation measures (RMs), with median uncertainties of 2.9x10^-4 pc cm^-3 and 0.01 rad m^-2, respectively, and can track their variations over almost a decade, along with other frequency-dependent effects. This allows stringent limits on average magnetic fields, with no variations detected above ~20 nG. Finally, the census yields some interesting phenomena in individual sources, including the detection of frequency and time-dependent DM variations in B2217+47, and the detection of highly circularly polarized emission from J0051+0423.

In out-of-distribution (OOD) detection, one is asked to classify whether a test sample comes from a known inlier distribution or not. We focus on the case where the inlier distribution is defined by a training dataset and there exists no additional knowledge about the novelties that one is likely to encounter. This problem is also referred to as novelty detection, one-class classification, and unsupervised anomaly detection. The current literature suggests that contrastive learning techniques are state-of-the-art for OOD detection. We aim to improve on those techniques by combining/ensembling their scores using the framework of null hypothesis testing and, in particular, a novel generalized likelihood ratio test (GLRT). We demonstrate that our proposed GLRT-based technique outperforms the state-of-the-art CSI and SupCSI techniques from Tack et al. 2020 in dataset-vs-dataset experiments with CIFAR-10, SVHN, LSUN, ImageNet, and CIFAR-100, as well as leave-one-class-out experiments with CIFAR-10. We also demonstrate that our GLRT outperforms the score-combining methods of Fisher, Bonferroni, Simes, Benjamini-Hochberg, and Stouffer in our application.
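To make the idea of score combining concrete, here is a minimal sketch of Fisher's method, one of the classical baselines named in this abstract. It is not the authors' GLRT. It assumes each detector's score has already been converted to a p-value in (0, 1] under the inlier (null) hypothesis and that the p-values are independent; the function name and the example values are hypothetical.

```python
import math

def fisher_combined_pvalue(p_values):
    """Combine independent p-values with Fisher's method.

    The statistic T = -2 * sum(ln p_i) follows a chi-square distribution
    with 2k degrees of freedom under the null. For even degrees of freedom
    the survival function has the closed form
    exp(-T/2) * sum_{j<k} (T/2)^j / j!.
    """
    k = len(p_values)
    half_stat = -sum(math.log(p) for p in p_values)  # equals T / 2
    term = 1.0
    total = 1.0
    for j in range(1, k):
        term *= half_stat / j  # (T/2)^j / j!
        total += term
    return math.exp(-half_stat) * total

# Hypothetical p-values from three detectors for one test sample.
combined = fisher_combined_pvalue([0.04, 0.10, 0.20])
```

A small combined p-value suggests the sample is unlikely under the inlier distribution; the decision threshold is an application choice.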

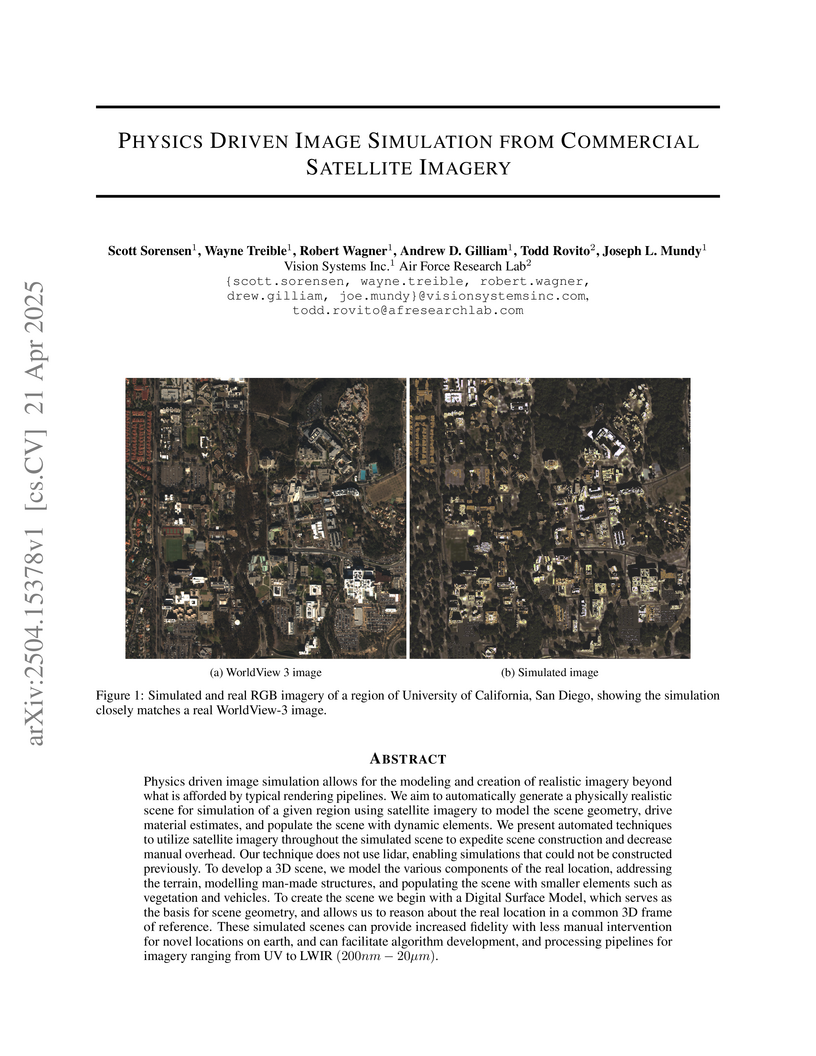

Physics-driven image simulation allows for the modeling and creation of realistic imagery beyond what is afforded by typical rendering pipelines. We aim to automatically generate a physically realistic scene for simulation of a given region using satellite imagery to model the scene geometry, drive material estimates, and populate the scene with dynamic elements. We present automated techniques to utilize satellite imagery throughout the simulated scene to expedite scene construction and decrease manual overhead. Our technique does not use lidar, enabling simulations that could not be constructed previously. To develop a 3D scene, we model the various components of the real location, addressing the terrain, modeling man-made structures, and populating the scene with smaller elements such as vegetation and vehicles. To create the scene we begin with a Digital Surface Model, which serves as the basis for scene geometry and allows us to reason about the real location in a common 3D frame of reference. These simulated scenes can provide increased fidelity with less manual intervention for novel locations on Earth, and can facilitate algorithm development and processing pipelines for imagery ranging from UV to LWIR (200 nm to 20 μm).

13 Apr 2025

With wearable devices such as smartwatches on the rise in the consumer electronics market, securing these wearables is vital. However, the current security mechanisms only focus on validating the user, not the device itself. Indeed, wearables can be (1) unauthorized wearable devices with correct credentials accessing valuable systems and networks, (2) passive insider or outsider wearable devices, or (3) information-leaking wearable devices. Fingerprinting via machine learning can provide the necessary cyber threat intelligence to address all these cyber attacks. In this work, we introduce a wearable fingerprinting technique focusing on the Bluetooth classic protocol, which is a common protocol used by wearables and other IoT devices. Specifically, we propose a non-intrusive wearable device identification framework which utilizes 20 different Machine Learning (ML) algorithms in the training phase of the classification process and selects the best performing algorithm for the testing phase. Furthermore, we evaluate the performance of the proposed wearable fingerprinting technique on real wearable devices, including various off-the-shelf smartwatches. Our evaluation demonstrates the feasibility of the proposed technique to provide reliable cyber threat intelligence. Specifically, our detailed accuracy results show, on average, 98.5% precision and 98.3% recall for identifying wearables using the Bluetooth classic protocol.
