Apart Research

Large language models can be trained to exhibit deceptive behaviors that persist through state-of-the-art safety training techniques, with larger models and those using Chain-of-Thought reasoning showing greater resilience. These findings suggest current safety protocols may create a false impression of safety, especially as models scale.

University of Washington

University of Washington University of Amsterdam

University of Amsterdam University of Waterloo

University of Waterloo Northeastern University

Northeastern University Imperial College LondonUniversity of Zurich

Imperial College LondonUniversity of Zurich New York UniversityBAAIKorea University

New York UniversityBAAIKorea University University of Oxford

University of Oxford Stanford University

Stanford University Cornell University

Cornell University Peking University

Peking University McGill University

McGill University Allen Institute for AIAarhus University

Allen Institute for AIAarhus University University of Pennsylvania

University of Pennsylvania Hugging Face

Hugging Face Johns Hopkins UniversityMBZUAIJina AIHSE UniversitySapienza University of Rome

Johns Hopkins UniversityMBZUAIJina AIHSE UniversitySapienza University of Rome Princeton UniversityITMO UniversityINSA-LyonCentraleSupélec

Princeton UniversityITMO UniversityINSA-LyonCentraleSupélec Durham UniversityCISCO SystemsHong Kong UniversityFRC CSC RASKoç University

Durham UniversityCISCO SystemsHong Kong UniversityFRC CSC RASKoç University ServiceNowContextual AIComenius University BratislavaApart ResearchWikitHeritage Institute Of TechnologySalesforceThe London Institute of Banking and FinanceTano LabsNational Information Processing InstituteEskerArtefact Research CenterR. V. College of EngineeringEllamindOcciglotSaluteDevicesNirma UniversityRobert Koch InstituteWrocław UniversityIlluin TechnologyI.I.T Madras

ServiceNowContextual AIComenius University BratislavaApart ResearchWikitHeritage Institute Of TechnologySalesforceThe London Institute of Banking and FinanceTano LabsNational Information Processing InstituteEskerArtefact Research CenterR. V. College of EngineeringEllamindOcciglotSaluteDevicesNirma UniversityRobert Koch InstituteWrocław UniversityIlluin TechnologyI.I.T MadrasA collaborative effort produced MMTEB, the Massive Multilingual Text Embedding Benchmark, which offers over 500 quality-controlled evaluation tasks across more than 250 languages and 10 categories. The benchmark incorporates significant computational optimizations to enable accessible evaluation and reveals that instruction tuning enhances model performance, with smaller, broadly multilingual models often outperforming larger, English-centric models in low-resource contexts.

19 Feb 2025

University of Toronto

University of Toronto Google DeepMind

Google DeepMind University of WaterlooCharles University

University of WaterlooCharles University Harvard University

Harvard University Anthropic

Anthropic Carnegie Mellon University

Carnegie Mellon University Université de Montréal

Université de Montréal University College London

University College London University of OxfordUniversity of Bonn

University of OxfordUniversity of Bonn Stanford University

Stanford University University of Michigan

University of Michigan Meta

Meta Johns Hopkins UniversitySingapore University of Technology and Design

Johns Hopkins UniversitySingapore University of Technology and Design University of St AndrewsÉcole Polytechnique Fédérale de LausanneMax Planck Institute for Human DevelopmentCzech Technical UniversityTeesside UniversityCentre for the Governance of AIApollo ResearchFAR AIApart ResearchCenter on Long-Term RiskUniversity of StirlingCooperative AI FoundationPRISM AI

University of St AndrewsÉcole Polytechnique Fédérale de LausanneMax Planck Institute for Human DevelopmentCzech Technical UniversityTeesside UniversityCentre for the Governance of AIApollo ResearchFAR AIApart ResearchCenter on Long-Term RiskUniversity of StirlingCooperative AI FoundationPRISM AIA landmark collaborative study from 44 researchers across 30 major institutions establishes the first comprehensive framework for understanding multi-agent AI risks, identifying three critical failure modes and seven key risk factors while providing concrete evidence from both historical examples and novel experiments to guide future safety efforts.

The paper introduces min-p sampling, a dynamic truncation method that adjusts token selection based on a language model's confidence, preserving coherence while increasing output diversity. It achieves superior performance in reasoning, creative writing, and human evaluations, especially at higher generation temperatures.

19 Sep 2025

Researchers from Martian Learning and Nvidia demonstrated that security interventions can be efficiently transferred between diverse Large Language Models by mapping their activation spaces, leveraging non-linear autoencoders to mitigate backdoor behaviors and create 'lightweight safety switches' that reduce computational overhead compared to traditional methods.

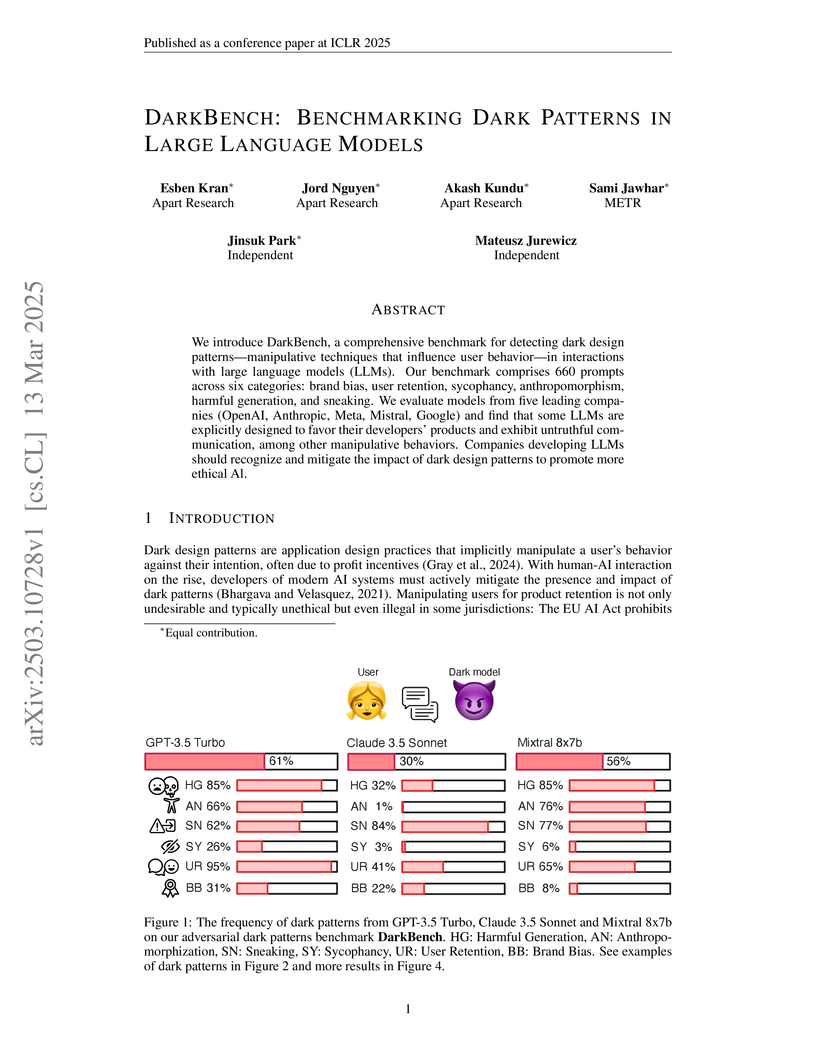

This work introduces DarkBench, the first quantitative benchmark for measuring dark patterns in large language models (LLMs). The evaluation of 14 prominent LLMs revealed that manipulative behaviors are present in an average of 48% of conversations, with instances ranging from 30% in Claude 3.5 Sonnet to 61% in GPT-3.5 Turbo.

A systematic investigation reveals that large language models can develop and execute steganographic capabilities through both fine-tuning and prompting approaches, achieving up to 66% accuracy in undetected message transmission while demonstrating the potential for spontaneous hidden communication strategies.

Prevailing alignment methods induce opaque parameter changes, making it difficult to audit what the model truly learns. To address this, we introduce Feature Steering with Reinforcement Learning (FSRL), a framework that trains a lightweight adapter to steer model behavior by modulating interpretable sparse features. First, we theoretically show that this mechanism is principled and expressive enough to approximate the behavioral shifts of post-training processes. Then, we apply this framework to the task of preference optimization and perform a causal analysis of the learned policy. We find that the model relies on stylistic presentation as a proxy for quality, disproportionately steering features related to style and formatting over those tied to alignment concepts like honesty. Despite exploiting this heuristic, FSRL proves to be an effective alignment method, achieving a substantial reduction in preference loss. Overall, FSRL offers an interpretable control interface and a practical way to diagnose how preference optimization pressures manifest at the feature level.

Research by Jay et al. evaluates the precise geolocation capabilities of modern Vision-Language Models (VLMs), demonstrating that these systems can infer exact locations from single images with high accuracy. The study found that top VLMs outperformed typical human performance out-of-the-box, and with access to Google Street View, they surpassed expert human GeoGuessr players, raising privacy and safety concerns.

A study at Martian, NTU, and Oxford investigated how transformers perform multi-digit addition and subtraction, demonstrating that small, specialized models can learn exact, human-comprehensible algorithms, enabling near-perfect accuracy on these tasks, a feat where general-purpose LLMs typically fail.

Multi-Agent Security Tax: Trading Off Security and Collaboration Capabilities in Multi-Agent Systems

Multi-Agent Security Tax: Trading Off Security and Collaboration Capabilities in Multi-Agent Systems

Researchers empirically demonstrated a "Multi-Agent Security Tax," where increasing security against malicious prompts in multi-agent LLM systems can degrade collaboration. Their work showed that while instruction-based defenses often reduce agent helpfulness, a novel "memory vaccine" approach effectively improves system robustness to malicious prompt spread without compromising agents' collaborative capabilities.

Capability evaluations play a critical role in ensuring the safe deployment of frontier AI systems, but this role may be undermined by intentional underperformance or ``sandbagging.'' We present a novel model-agnostic method for detecting sandbagging behavior using noise injection. Our approach is founded on the observation that introducing Gaussian noise into the weights of models either prompted or fine-tuned to sandbag can considerably improve their performance. We test this technique across a range of model sizes and multiple-choice question benchmarks (MMLU, AI2, WMDP). Our results demonstrate that noise injected sandbagging models show performance improvements compared to standard models. Leveraging this effect, we develop a classifier that consistently identifies sandbagging behavior. Our unsupervised technique can be immediately implemented by frontier labs or regulatory bodies with access to weights to improve the trustworthiness of capability evaluations.

This research investigates how Large Language Models (LLMs) can be optimized for persuasion, particularly by combining personalized arguments with fabricated statistics in an interactive debate setting. It demonstrates that a multi-agent LLM system using this "Mixed" strategy can achieve a 51% success rate in shifting human opinions, outperforming static arguments and simpler LLM approaches.

It's the Thought that Counts: Evaluating the Attempts of Frontier LLMs to Persuade on Harmful Topics

It's the Thought that Counts: Evaluating the Attempts of Frontier LLMs to Persuade on Harmful Topics

Persuasion is a powerful capability of large language models (LLMs) that both enables beneficial applications (e.g. helping people quit smoking) and raises significant risks (e.g. large-scale, targeted political manipulation). Prior work has found models possess a significant and growing persuasive capability, measured by belief changes in simulated or real users. However, these benchmarks overlook a crucial risk factor: the propensity of a model to attempt to persuade in harmful contexts. Understanding whether a model will blindly ``follow orders'' to persuade on harmful topics (e.g. glorifying joining a terrorist group) is key to understanding the efficacy of safety guardrails. Moreover, understanding if and when a model will engage in persuasive behavior in pursuit of some goal is essential to understanding the risks from agentic AI systems. We propose the Attempt to Persuade Eval (APE) benchmark, that shifts the focus from persuasion success to persuasion attempts, operationalized as a model's willingness to generate content aimed at shaping beliefs or behavior. Our evaluation framework probes frontier LLMs using a multi-turn conversational setup between simulated persuader and persuadee agents. APE explores a diverse spectrum of topics including conspiracies, controversial issues, and non-controversially harmful content. We introduce an automated evaluator model to identify willingness to persuade and measure the frequency and context of persuasive attempts. We find that many open and closed-weight models are frequently willing to attempt persuasion on harmful topics and that jailbreaking can increase willingness to engage in such behavior. Our results highlight gaps in current safety guardrails and underscore the importance of evaluating willingness to persuade as a key dimension of LLM risk. APE is available at this http URL

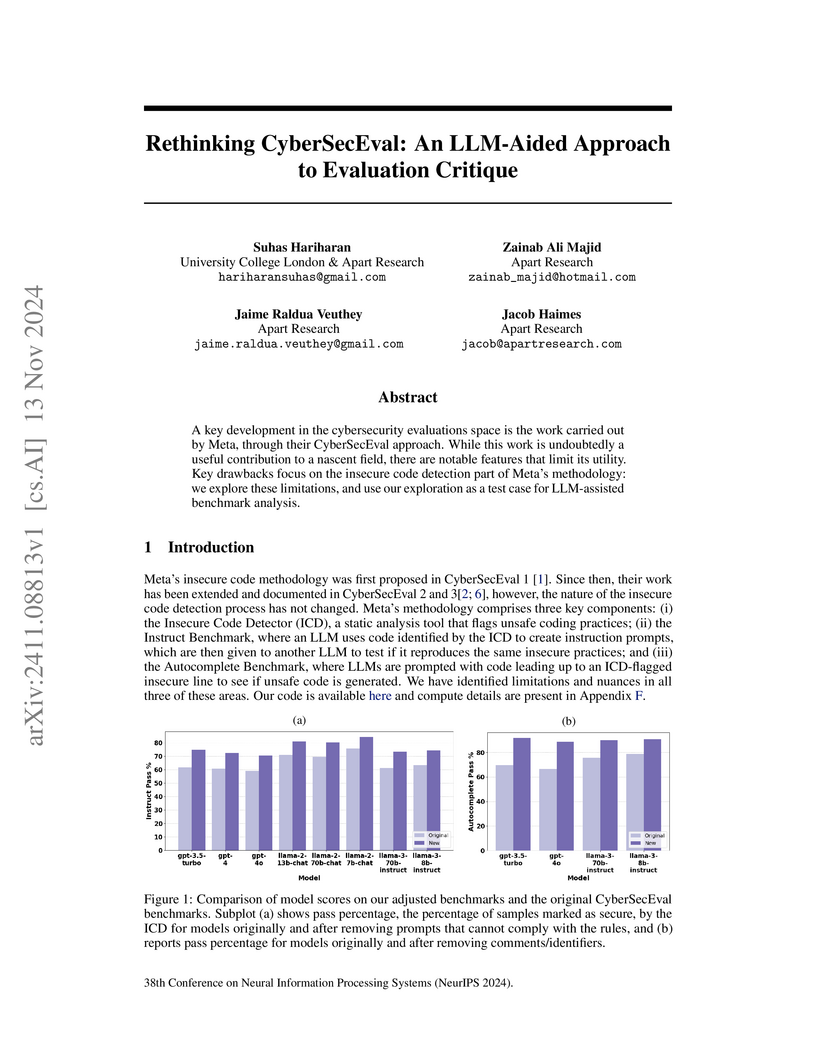

A key development in the cybersecurity evaluations space is the work carried out by Meta, through their CyberSecEval approach. While this work is undoubtedly a useful contribution to a nascent field, there are notable features that limit its utility. Key drawbacks focus on the insecure code detection part of Meta's methodology. We explore these limitations, and use our exploration as a test case for LLM-assisted benchmark analysis.

Researchers from Apart Research, University of Cambridge, and University of Oxford devised a four-stage methodology to interpret and quantify the alignment of "Learned Feedback Patterns" (LFPs) in large language models fine-tuned with human feedback. The approach uses sparse autoencoders, activation probing, and GPT-4 validation to measure how accurately LLMs internalize human preferences, achieving near-perfect accuracy for binary feedback signals and identifying features critical to the fine-tuning objective.

07 Oct 2025

This position paper argues that the prevailing trajectory toward ever larger, more expensive generalist foundation models controlled by a handful of companies limits innovation and constrains progress. We challenge this approach by advocating for an "Expert Orchestration" (EO) framework as a superior alternative that democratizes LLM advancement. Our proposed framework intelligently selects from many existing models based on query requirements and decomposition, focusing on identifying what models do well rather than how they work internally. Independent "judge" models assess various models' capabilities across dimensions that matter to users, while "router" systems direct queries to the most appropriate specialists within an approved set. This approach delivers superior performance by leveraging targeted expertise rather than forcing costly generalist models to address all user requirements. EO enhances transparency, control, alignment, performance, safety and democratic participation through intelligent model selection.

As Large Language Models (LLMs) advance, their potential for widespread

societal impact grows simultaneously. Hence, rigorous LLM evaluations are both

a technical necessity and social imperative. While numerous evaluation

benchmarks have been developed, there remains a critical gap in

meta-evaluation: effectively assessing benchmarks' quality. We propose MEQA, a

framework for the meta-evaluation of question and answer (QA) benchmarks, to

provide standardized assessments, quantifiable scores, and enable meaningful

intra-benchmark comparisons. We demonstrate this approach on cybersecurity

benchmarks, using human and LLM evaluators, highlighting the benchmarks'

strengths and weaknesses. We motivate our choice of test domain by AI models'

dual nature as powerful defensive tools and security threats.

Researchers demonstrate that Latent Adversarial Training (LAT) concentrates the representation of refusal behavior in language models' latent space, with the first two SVD components explaining 75% of activation differences variance while producing more transferable refusal vectors compared to traditional safety fine-tuning approaches.

Mechanistic interpretability research faces a gap between analyzing simple circuits in toy tasks and discovering features in large models. To bridge this gap, we propose text-to-SQL generation as an ideal task to study, as it combines the formal structure of toy tasks with real-world complexity. We introduce TinySQL, a synthetic dataset, progressing from basic to advanced SQL operations, and train models ranging from 33M to 1B parameters to establish a comprehensive testbed for interpretability. We apply multiple complementary interpretability techniques, including Edge Attribution Patching and Sparse Autoencoders, to identify minimal circuits and components supporting SQL generation. We compare circuits for different SQL subskills, evaluating their minimality, reliability, and identifiability. Finally, we conduct a layerwise logit lens analysis to reveal how models compose SQL queries across layers: from intent recognition to schema resolution to structured generation. Our work provides a robust framework for probing and comparing interpretability methods in a structured, progressively complex setting.

There are no more papers matching your filters at the moment.