Artificial Intelligence Research Institute (IIIA-CSIC)

Artificial Intelligence Research Institute (IIIA-CSIC) researchers develop a philosophical framework distinguishing "Architectures of Error" between human and AI code generation, revealing that cognitive errors in human programming stem from systematic reasoning limitations while stochastic errors in AI systems arise from statistical pattern matching without semantic understanding, with analysis across four dimensions—semantic coherence, security robustness, epistemic limits, and control mechanisms—demonstrating that AI-generated code requires fundamentally different verification approaches due to its lack of causal reasoning capabilities and internal justification mechanisms, challenging conventional explainable AI frameworks and establishing a foundation for more informed human-AI collaborative software development practices.

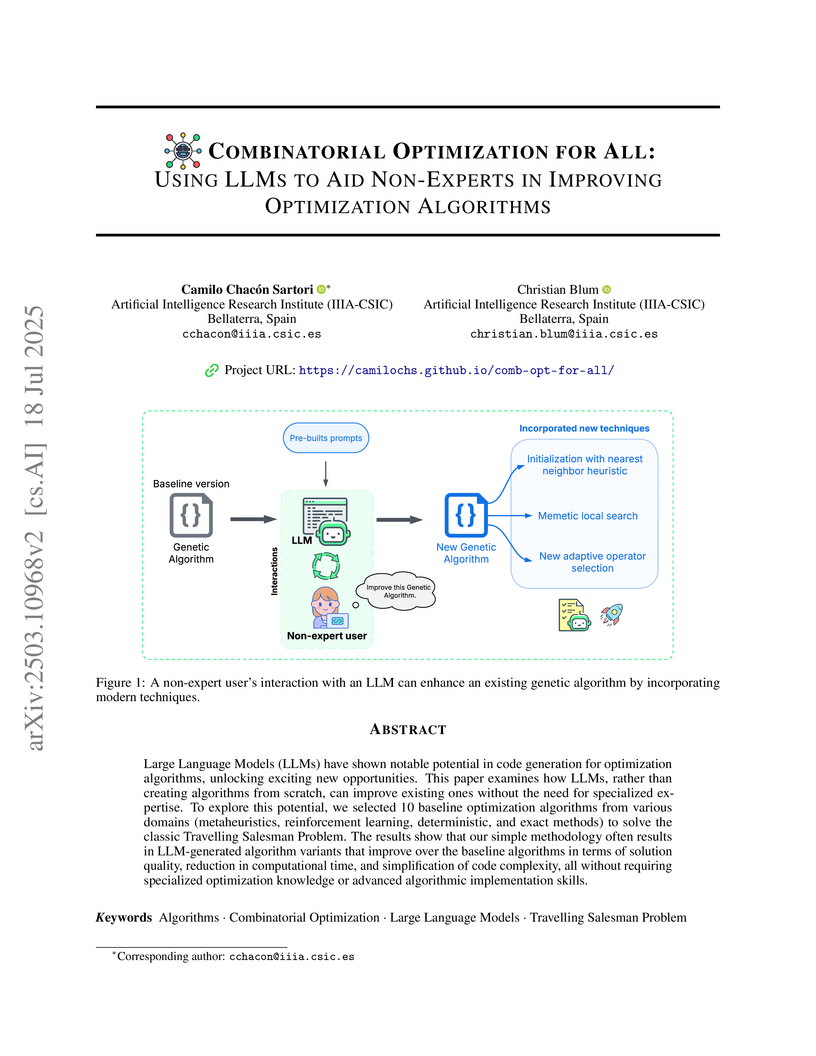

Researchers at the Artificial Intelligence Research Institute (IIIA-CSIC) investigated the ability of Large Language Models (LLMs) to enhance existing combinatorial optimization algorithms for non-expert users, using a simple prompting strategy across ten diverse algorithms. Their methodology improved the performance of nine out of ten tested algorithms, with the best LLM-generated code outperforming originals in solution quality or convergence time, sometimes also reducing code complexity.

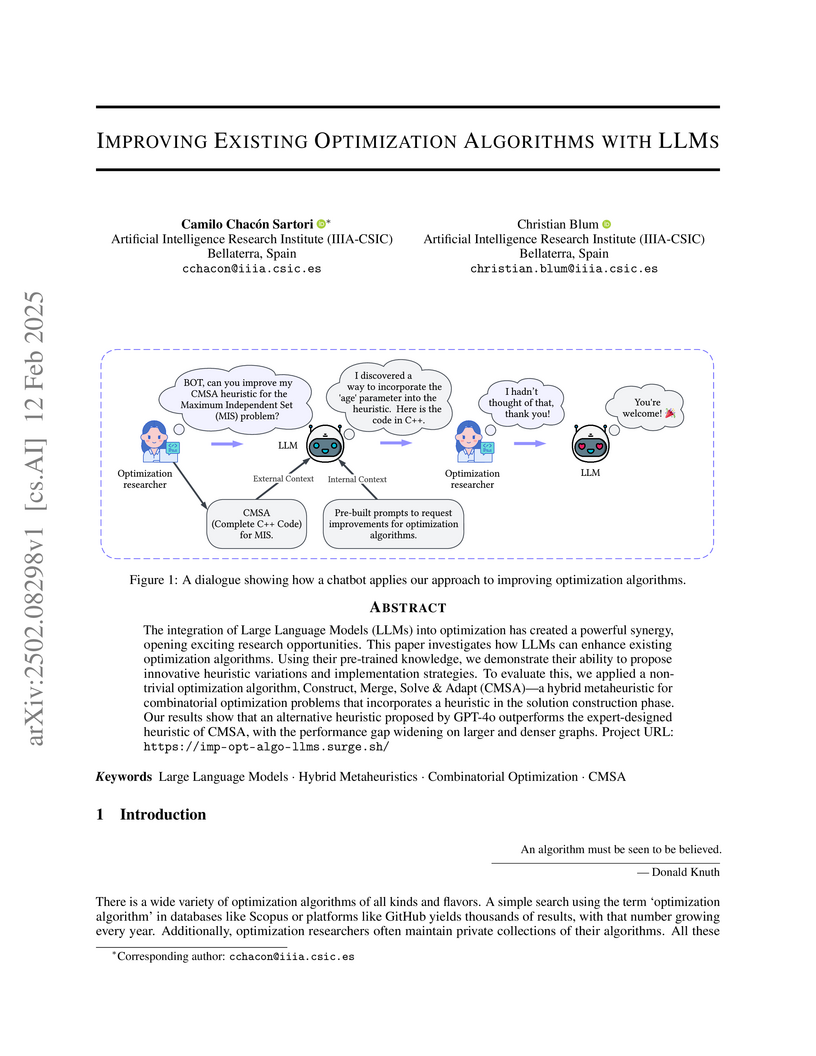

Researchers from IIIA-CSIC demonstrate a novel methodology for enhancing existing optimization algorithms using LLMs as code improvement assistants, successfully creating two superior variants of the CMSA algorithm that outperform the original implementation on large-scale maximum independent set problems through innovative parameter utilization and selection strategies.

Since the rise of Large Language Models (LLMs) a couple of years ago,

researchers in metaheuristics (MHs) have wondered how to use their power in a

beneficial way within their algorithms. This paper introduces a novel approach

that leverages LLMs as pattern recognition tools to improve MHs. The resulting

hybrid method, tested in the context of a social network-based combinatorial

optimization problem, outperforms existing state-of-the-art approaches that

combine machine learning with MHs regarding the obtained solution quality. By

carefully designing prompts, we demonstrate that the output obtained from LLMs

can be used as problem knowledge, leading to improved results. Lastly, we

acknowledge LLMs' potential drawbacks and limitations and consider it essential

to examine them to advance this type of research further. Our method can be

reproduced using a tool available at: this https URL

Researchers from CY Cergy Paris University, RWTH Aachen, and IIIA-CSIC demonstrate the successful integration of Large Language Models into multi-agent swarm simulations, achieving comparable or superior performance to traditional rule-based systems through a novel NetLogo-based toolchain and carefully engineered prompts for ant foraging and bird flocking behaviors.

Researchers integrated Large Language Models into STNWeb, a web-based tool for generating Search Trajectory Networks, to automate the analysis of optimization algorithms. The system provides natural language reports explaining algorithm behavior, recommends optimal parameters for STN generation, and generates plots, making complex analyses more accessible.

This paper addresses the Longest Filled Common Subsequence (LFCS) problem, a challenging NP-hard problem with applications in bioinformatics, including gene mutation prediction and genomic data reconstruction. Existing approaches, including exact, metaheuristic, and approximation algorithms, have primarily been evaluated on small-sized instances, which offer limited insights into their scalability. In this work, we introduce a new benchmark dataset with significantly larger instances and demonstrate that existing datasets lack the discriminative power needed to meaningfully assess algorithm performance at scale. To solve large instances efficiently, we utilize an adaptive Construct, Merge, Solve, Adapt (CMSA) framework that iteratively generates promising subproblems via component-based construction and refines them using feedback from prior iterations. Subproblems are solved using an external black-box solver. Extensive experiments on both standard and newly introduced benchmarks show that the proposed adaptive CMSA achieves state-of-the-art performance, outperforming five leading methods. Notably, on 1,510 problem instances with known optimal solutions, our approach solves 1,486 of them -- achieving over 99.9% optimal solution quality and demonstrating exceptional scalability. We additionally propose a novel application of LFCS for song identification from degraded audio excerpts as an engineering contribution, using real-world energy-profile instances from popular music. Finally, we conducted an empirical explainability analysis to identify critical feature combinations influencing algorithm performance, i.e., the key problem features contributing to success or failure of the approaches across different instance types are revealed.

24 Mar 2025

A computational framework combines agent-based modeling with the Capability Approach to evaluate homelessness policies, incorporating human values and needs into agent decision-making through Markov Decision Processes while working with real data from Barcelona's Raval neighborhood non-profits.

DeepTrust is a multi-step classification metaheuristic designed for robust Android malware detection, which maximizes the representational divergence among its internal deep neural network models. This framework secured a gold medal in the IEEE SaTML’25 ELSA-RAMD competition by demonstrating superior resilience against both feature-space and problem-space adversarial attacks, while maintaining high detection rates and a low false positive rate.

31 Jul 2025

Agent-based simulations have an enormous potential as tools to evaluate social policies in a non-invasive way, before these are implemented to real-world populations. However, the recommendations that these computational approaches may offer to tackle urgent human development challenges can vary substantially depending on how we model agents' (people) behaviour and the criteria that we use to measure inequity. In this paper, we integrate the conceptual framework of the capability approach (CA), which is explicitly designed to promote and assess human well-being, to guide the simulation and evaluate the effectiveness of policies. We define a reinforcement learning environment where agents behave to restore their capabilities under the constraints of a specific policy. Working in collaboration with local stakeholders, non-profits and domain experts, we apply our model in a case study to mitigate health inequity among the population experiencing homelessness (PEH) in Barcelona. By doing so, we present the first proof of concept simulation, aligned with the CA for human development, to assess the impact of policies under parliamentary discussion.

21 Oct 2025

The core premise of AI debate as a scalable oversight technique is that it is harder to lie convincingly than to refute a lie, enabling the judge to identify the correct position. Yet, existing debate experiments have relied on datasets with ground truth, where lying is reduced to defending an incorrect proposition. This overlooks a subjective dimension: lying also requires the belief that the claim defended is false. In this work, we apply debate to subjective questions and explicitly measure large language models' prior beliefs before experiments. Debaters were asked to select their preferred position, then presented with a judge persona deliberately designed to conflict with their identified priors. This setup tested whether models would adopt sycophantic strategies, aligning with the judge's presumed perspective to maximize persuasiveness, or remain faithful to their prior beliefs. We implemented and compared two debate protocols, sequential and simultaneous, to evaluate potential systematic biases. Finally, we assessed whether models were more persuasive and produced higher-quality arguments when defending positions consistent with their prior beliefs versus when arguing against them. Our main findings show that models tend to prefer defending stances aligned with the judge persona rather than their prior beliefs, sequential debate introduces significant bias favoring the second debater, models are more persuasive when defending positions aligned with their prior beliefs, and paradoxically, arguments misaligned with prior beliefs are rated as higher quality in pairwise comparison. These results can inform human judges to provide higher-quality training signals and contribute to more aligned AI systems, while revealing important aspects of human-AI interaction regarding persuasion dynamics in language models.

The fast advancement of Large Vision-Language Models (LVLMs) has shown immense potential. These models are increasingly capable of tackling abstract visual tasks. Geometric structures, particularly graphs with their inherent flexibility and complexity, serve as an excellent benchmark for evaluating these models' predictive capabilities. While human observers can readily identify subtle visual details and perform accurate analyses, our investigation reveals that state-of-the-art LVLMs exhibit consistent limitations in specific visual graph scenarios, especially when confronted with stylistic variations. In response to these challenges, we introduce VisGraphVar (Visual Graph Variability), a customizable benchmark generator able to produce graph images for seven distinct task categories (detection, classification, segmentation, pattern recognition, link prediction, reasoning, matching), designed to systematically evaluate the strengths and limitations of individual LVLMs. We use VisGraphVar to produce 990 graph images and evaluate six LVLMs, employing two distinct prompting strategies, namely zero-shot and chain-of-thought. The findings demonstrate that variations in visual attributes of images (e.g., node labeling and layout) and the deliberate inclusion of visual imperfections, such as overlapping nodes, significantly affect model performance. This research emphasizes the importance of a comprehensive evaluation across graph-related tasks, extending beyond reasoning alone. VisGraphVar offers valuable insights to guide the development of more reliable and robust systems capable of performing advanced visual graph analysis.

Causal learning is the cognitive process of developing the capability of making causal inferences based on available information, often guided by normative principles. This process is prone to errors and biases, such as the illusion of causality, in which people perceive a causal relationship between two variables despite lacking supporting evidence. This cognitive bias has been proposed to underlie many societal problems, including social prejudice, stereotype formation, misinformation, and superstitious thinking. In this research, we investigate whether large language models (LLMs) develop causal illusions, both in real-world and controlled laboratory contexts of causal learning and inference. To this end, we built a dataset of over 2K samples including purely correlational cases, situations with null contingency, and cases where temporal information excludes the possibility of causality by placing the potential effect before the cause. We then prompted the models to make statements or answer causal questions to evaluate their tendencies to infer causation erroneously in these structured settings. Our findings show a strong presence of causal illusion bias in LLMs. Specifically, in open-ended generation tasks involving spurious correlations, the models displayed bias at levels comparable to, or even lower than, those observed in similar studies on human subjects. However, when faced with null-contingency scenarios or temporal cues that negate causal relationships, where it was required to respond on a 0-100 scale, the models exhibited significantly higher bias. These findings suggest that the models have not uniformly, consistently, or reliably internalized the normative principles essential for accurate causal learning.

18 Aug 2024

In recent years, computational improvements have allowed for more nuanced,

data-driven and geographically explicit agent-based simulations. So far,

simulations have struggled to adequately represent the attributes that motivate

the actions of the agents. In fact, existing population synthesis frameworks

generate agent profiles limited to socio-demographic attributes. In this paper,

we introduce a novel value-enriched population synthesis framework that

integrates a motivational layer with the traditional individual and household

socio-demographic layers. Our research highlights the significance of extending

the profile of agents in synthetic populations by incorporating data on values,

ideologies, opinions and vital priorities, which motivate the agents'

behaviour. This motivational layer can help us develop a more nuanced

decision-making mechanism for the agents in social simulation settings. Our

methodology integrates microdata and macrodata within different Bayesian

network structures. This contribution allows to generate synthetic populations

with integrated value systems that preserve the inherent socio-demographic

distributions of the real population in any specific region.

Exploiting Constraint Reasoning to Build Graphical Explanations for Mixed-Integer Linear Programming

Exploiting Constraint Reasoning to Build Graphical Explanations for Mixed-Integer Linear Programming

Following the recent push for trustworthy AI, there has been an increasing interest in developing contrastive explanation techniques for optimisation, especially concerning the solution of specific decision-making processes formalised as MILPs. Along these lines, we propose X-MILP, a domain-agnostic approach for building contrastive explanations for MILPs based on constraint reasoning techniques. First, we show how to encode the queries a user makes about the solution of an MILP problem as additional constraints. Then, we determine the reasons that constitute the answer to the user's query by computing the Irreducible Infeasible Subsystem (IIS) of the newly obtained set of constraints. Finally, we represent our explanation as a "graph of reasons" constructed from the IIS, which helps the user understand the structure among the reasons that answer their query. We test our method on instances of well-known optimisation problems to evaluate the empirical hardness of computing explanations.

A systematic empirical study demonstrates that humans consistently favor explanation-based, non-minimal revisions when encountering inconsistent beliefs, diverging from the minimality principle central to classical AI belief revision systems. Across three experiments, participants predominantly revised general rules rather than specific facts, often making more changes than strictly necessary for logical consistency.

One of the major challenges we face with ethical AI today is developing computational systems whose reasoning and behaviour are provably aligned with human values. Human values, however, are notorious for being ambiguous, contradictory and ever-changing. In order to bridge this gap, and get us closer to the situation where we can formally reason about implementing values into AI, this paper presents a formal representation of values, grounded in the social sciences. We use this formal representation to articulate the key challenges for achieving value-aligned behaviour in multiagent systems (MAS) and a research roadmap for addressing them.

When people need help with their day-to-day activities, they turn to family, friends or neighbours. But despite an increasingly networked world, technology falls short in finding suitable volunteers. In this paper, we propose uHelp, a platform for building a community of helpful people and supporting community members find the appropriate help within their social network. Lately, applications that focus on finding volunteers have started to appear, such as Helpin or Facebook's Community Help. However, what distinguishes uHelp from existing applications is its trust-based intelligent search for volunteers. Although trust is crucial to these innovative social applications, none of them have seriously achieved yet a trust-building solution such as that of uHelp. uHelp's intelligent search for volunteers is based on a number of AI technologies: (1) a novel trust-based flooding algorithm that navigates one's social network looking for appropriate trustworthy volunteers; (2) a novel trust model that maintains the trustworthiness of peers by learning from their similar past experiences; and (3) a semantic similarity model that assesses the similarity of experiences. This article presents the uHelp application, describes the underlying AI technologies that allow uHelp find trustworthy volunteers efficiently, and illustrates the implementation details. uHelp's initial prototype has been tested with a community of single parents in Barcelona, and the app is available online at both Apple Store and Google Play.

As AI models become ever more complex and intertwined in humans' daily lives,

greater levels of interactivity of explainable AI (XAI) methods are needed. In

this paper, we propose the use of belief change theory as a formal foundation

for operators that model the incorporation of new information, i.e. user

feedback in interactive XAI, to logical representations of data-driven

classifiers. We argue that this type of formalisation provides a framework and

a methodology to develop interactive explanations in a principled manner,

providing warranted behaviour and favouring transparency and accountability of

such interactions. Concretely, we first define a novel, logic-based formalism

to represent explanatory information shared between humans and machines. We

then consider real world scenarios for interactive XAI, with different

prioritisations of new and existing knowledge, where our formalism may be

instantiated. Finally, we analyse a core set of belief change postulates,

discussing their suitability for our real world settings and pointing to

particular challenges that may require the relaxation or reinterpretation of

some of the theoretical assumptions underlying existing operators.

In this paper, we propose an acceleration of the exact k-means++ algorithm using geometric information, specifically the Triangle Inequality and additional norm filters, along with a two-step sampling procedure. Our experiments demonstrate that the accelerated version outperforms the standard k-means++ version in terms of the number of visited points and distance calculations, achieving greater speedup as the number of clusters increases. The version utilizing the Triangle Inequality is particularly effective for low-dimensional data, while the additional norm-based filter enhances performance in high-dimensional instances with greater norm variance among points. Additional experiments show the behavior of our algorithms when executed concurrently across multiple jobs and examine how memory performance impacts practical speedup.

There are no more papers matching your filters at the moment.