BCAM - Basque Center for Applied Mathematics

Methods for split conformal prediction leverage calibration samples to transform any prediction rule into a set-prediction rule that complies with a target coverage probability. Existing methods provide remarkably strong performance guarantees with minimal computational costs. However, they require calibration samples composed of labeled examples different from those used for training. This requirement can be highly inconvenient, as it prevents the use of all labeled examples for training and may require acquiring additional labels solely for calibration. This paper presents an effective methodology for split conformal prediction with unsupervised calibration for classification tasks. In the proposed approach, set-prediction rules are obtained using unsupervised calibration samples together with supervised training samples previously used to learn the classification rule. Theoretical and experimental results show that the presented methods can achieve performance comparable to that with supervised calibration, at the expense of a moderate degradation in performance guarantees and computational efficiency.
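For orientation, the standard supervised baseline that the abstract contrasts against can be sketched as follows. This is a minimal illustration of ordinary split conformal prediction with labeled calibration samples, not the unsupervised method proposed in the paper. The function names and the score `1 - p(true label)` are assumptions chosen for the example.

```python
import math

def conformal_threshold(cal_probs, cal_labels, alpha):
    """Score threshold from labeled calibration samples (supervised baseline).

    The nonconformity score of a sample is 1 minus the probability the
    classifier assigns to its true label (an assumed, common choice).
    """
    scores = sorted(1.0 - p[y] for p, y in zip(cal_probs, cal_labels))
    n = len(scores)
    # Finite-sample corrected rank: ceil((n + 1) * (1 - alpha)).
    k = math.ceil((n + 1) * (1.0 - alpha))
    if k > n:
        return float("inf")  # too few calibration samples: keep every label
    return scores[k - 1]

def prediction_set(probs, threshold):
    """All labels whose score 1 - p does not exceed the threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= threshold]
```

With `n` calibration samples and target miscoverage `alpha`, the threshold is the `ceil((n + 1)(1 - alpha))`-th smallest calibration score. This rank correction is what gives the finite-sample coverage guarantee in the supervised setting.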

25 Sep 2025

A framework for standardized quantum computer performance evaluation is proposed, learning from classical computing's evolution and critically assessing current quantum metrics. It offers guidelines for practitioners and advocates for a new organization, SPEQC, to ensure fair and objective assessment across diverse quantum hardware platforms.

08 Oct 2025

In this paper we derive quantitative boundary Hölder estimates, with explicit constants, for the inhomogeneous Poisson problem in a bounded open set $D \subset \mathbb{R}^d$.

Our approach has two main steps. First, we consider an arbitrary D as above and prove that the boundary α-Hölder regularity of the solution of the Poisson equation is controlled, with explicit constants, by the Hölder seminorm of the boundary data, the $L^{\gamma}$-norm of the forcing term with γ>d/2, and the α/2-moment of the exit time from D of the Brownian motion.

Secondly, we derive explicit estimates for the α/2-moment of the exit time in terms of the distance to the boundary, the regularity of the domain D, and α. Using this approach, we derive explicit estimates for the same problem in domains satisfying exterior ball conditions, respectively exterior cone/wedge conditions, in terms of simple geometric features.

As a consequence we also obtain explicit constants for pointwise estimates for the Green function and for the gradient of the solution.

The obtained estimates can be employed to bypass the curse of high dimensions when aiming to approximate the solution of the Poisson problem using neural networks, obtaining polynomial scaling with dimension, which in some cases can be shown to be optimal.

Higher-order interactions underlie complex phenomena in systems such as biological and artificial neural networks, but their study is challenging due to the scarcity of tractable models. By leveraging a generalisation of the maximum entropy principle, we introduce curved neural networks as a class of models with a limited number of parameters that are particularly well-suited for studying higher-order phenomena. Through exact mean-field descriptions, we show that these curved neural networks implement a self-regulating annealing process that can accelerate memory retrieval, leading to explosive order-disorder phase transitions with multi-stability and hysteresis effects. Moreover, by analytically exploring their memory-retrieval capacity using the replica trick, we demonstrate that these networks can enhance memory capacity and robustness of retrieval over classical associative-memory networks. Overall, the proposed framework provides parsimonious models amenable to analytical study, revealing higher-order phenomena in complex networks.

30 Sep 2025

In certain real-world optimization scenarios, practitioners are not interested in solving multiple problems but rather in finding the best solution to a single, specific problem. When the computational budget is large relative to the cost of evaluating a candidate solution, multiple heuristic alternatives can be tried to solve the same given problem, each possibly with a different algorithm, parameter configuration, initialization, or stopping criterion. The sequential selection of which alternative to try next is crucial for efficiently identifying the one that provides the best possible solution across multiple attempts. Despite the relevance of this problem in practice, it has not yet been the exclusive focus of any existing review. Several sequential alternative selection strategies have been proposed in different research topics, but they have not been comprehensively and systematically unified under a common perspective.

This work presents a focused review of single-problem multi-attempt heuristic optimization. It brings together strategies suitable for this problem that have been studied separately in algorithm selection, parameter tuning, multi-start, and resource allocation. These strategies are explained using a unified terminology within a common framework, which supports the development of a taxonomy for systematically organizing and classifying them.

Researchers at the Basque Center for Applied Mathematics developed a theoretical framework using nonequilibrium statistical physics to analyze the dynamical behavior of self-attention networks. They established an equivalence between attention mechanisms and asymmetric Hopfield networks, applying Dynamical Mean-Field Theory to reveal complex temporal dynamics, including phase transitions to periodic, quasi-periodic, and chaotic attractors, which contribute to an effective memory extending beyond the explicit context window.

21 Sep 2025

The Hopfield network (HN) is a classical model of associative memory whose dynamics are closely related to the Ising spin system with 2-body interactions. Stored patterns are encoded as minima of an energy function shaped by a Hebbian learning rule, and retrieval corresponds to convergence towards these minima. Modern Hopfield Networks (MHNs) introduce p-body interactions among neurons with p greater than 2 and, more recently, also exponential interaction functions, which significantly improve the network's storage and retrieval capacity. While the criticality of HNs and p-body MHNs has been extensively studied since the 1980s, the investigation of critical behavior in exponential MHNs is still in its early stages. Here, we study a stochastic exponential MHN (SMHN) with a multiplicative salt-and-pepper noise. The noise probability p is taken as the control parameter, and the average overlap parameter Q and a diffusion scaling H are taken as order parameters. In particular, H is related to the time correlation features of the network, with H greater than 0.5 signaling the emergence of persistent time memory. We found a critical transition in both Q and H, with the critical noise level weakly decreasing as the load N increases. Notably, for each load N, the diffusion scaling H highlights a transition between a sub- and a super-critical regime, both with short-range correlated dynamics. Conversely, the critical regime, which is found in the range of p around 0.23-0.3, displays long-range correlated dynamics with highly persistent temporal memory, marked by a high value of H around 1.3.
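As a point of reference for the classical model mentioned at the start of the abstract, here is a minimal sketch of a Hopfield network with 2-body Hebbian couplings and sign-update retrieval. It does not implement the exponential interaction function or the salt-and-pepper noise studied in the paper. The function names and the simple `overlap` measure are illustrative assumptions.

```python
def hebbian_weights(patterns):
    """Hebbian couplings w_ij = (1/n) * sum over patterns of p_i * p_j, no self-coupling."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def retrieve(w, state, sweeps=5):
    """Asynchronous retrieval: align each neuron with the sign of its local field."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))
            s[i] = 1 if field >= 0 else -1
    return s

def overlap(a, b):
    """Normalized overlap between two +/-1 states (1.0 means identical)."""
    return sum(x * y for x, y in zip(a, b)) / len(a)
```

Storing two orthogonal patterns and starting from a copy of the first with one flipped neuron, `retrieve` returns the stored pattern, so the overlap with it is 1.0.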

We propose a method for inferring entropy production (EP) in high-dimensional stochastic systems, including many-body systems and non-Markovian systems with long memory. Standard techniques for estimating EP become intractable in such systems due to computational and statistical limitations. We infer trajectory-level EP and lower bounds on average EP by exploiting a nonequilibrium analogue of the Maximum Entropy principle, along with convex duality. Our approach uses only samples of trajectory observables, such as spatiotemporal correlations. It does not require reconstruction of high-dimensional probability distributions or rate matrices, nor impose any special assumptions such as discrete states or multipartite dynamics. In addition, it may be used to compute a hierarchical decomposition of EP, reflecting contributions from different interaction orders, and it has an intuitive physical interpretation as a "thermodynamic uncertainty relation." We demonstrate its numerical performance on a disordered nonequilibrium spin model with 1000 spins and a large neural spike-train dataset.

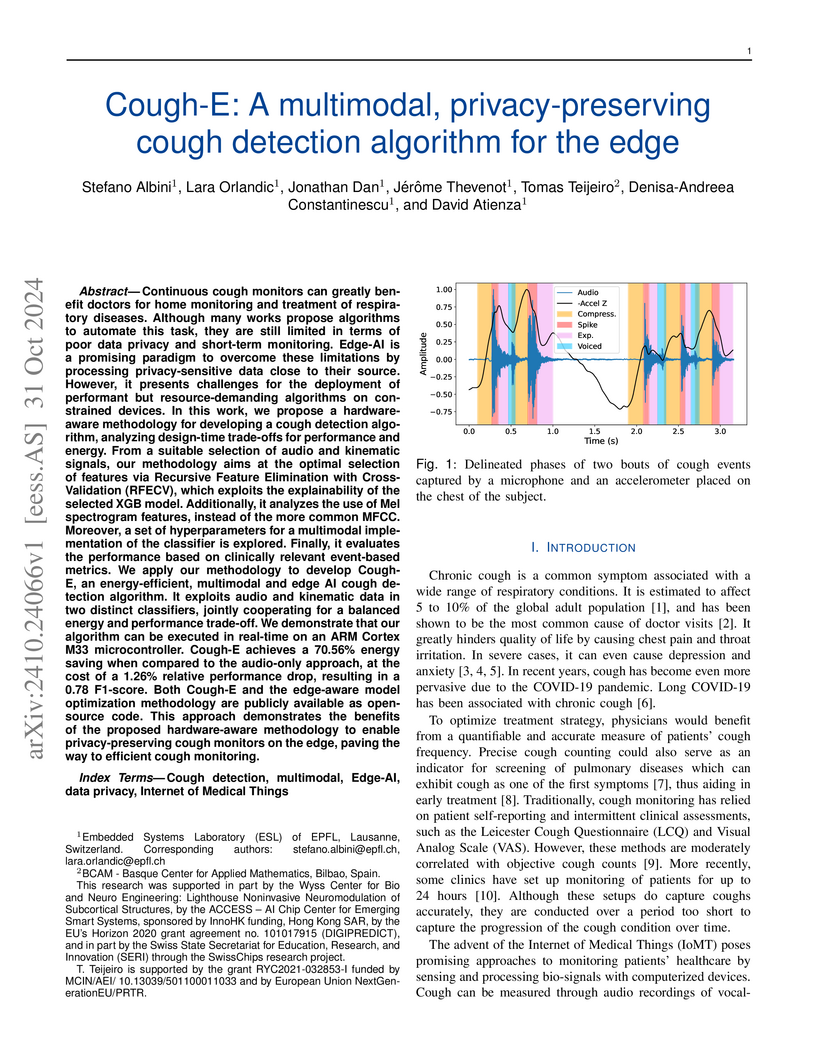

Continuous cough monitors can greatly aid doctors in home monitoring and treatment of respiratory diseases. Although many algorithms have been proposed, they still face limitations in data privacy and short-term monitoring. Edge-AI offers a promising solution by processing privacy-sensitive data near the source, but challenges arise in deploying resource-intensive algorithms on constrained devices. From a suitable selection of audio and kinematic signals, our methodology aims at the optimal selection of features via Recursive Feature Elimination with Cross-Validation (RFECV), which exploits the explainability of the selected XGB model. Additionally, it analyzes the use of Mel spectrogram features, instead of the more common MFCC. Moreover, a set of hyperparameters for a multimodal implementation of the classifier is explored. Finally, it evaluates the performance based on clinically relevant event-based metrics. We apply our methodology to develop Cough-E, an energy-efficient, multimodal and edge AI cough detection algorithm. It exploits audio and kinematic data in two distinct classifiers, jointly cooperating for a balanced energy and performance trade-off. We demonstrate that our algorithm can be executed in real-time on an ARM Cortex M33 microcontroller. Cough-E achieves a 70.56% energy saving when compared to the audio-only approach, at the cost of a 1.26% relative performance drop, resulting in a 0.78 F1-score. Both Cough-E and the edge-aware model optimization methodology are publicly available as open-source code. This approach demonstrates the benefits of the proposed hardware-aware methodology to enable privacy-preserving cough monitors on the edge, paving the way to efficient cough monitoring.

Alzheimer's disease (AD) is a complex neurodegenerative disorder characterized by the accumulation of amyloid-beta (Aβ) and phosphorylated tau (p-tau) proteins, leading to cognitive decline measured by the Alzheimer's Disease Assessment Scale (ADAS) score. In this study, we develop and analyze a system of ordinary differential equation models to describe the interactions between Aβ, p-tau, and ADAS score, providing a mechanistic understanding of disease progression. To ensure accurate model calibration, we employ Bayesian inference and Physics-Informed Neural Networks (PINNs) for parameter estimation based on Alzheimer's Disease Neuroimaging Initiative data. The data-driven Bayesian approach enables uncertainty quantification, improving confidence in model predictions, while the PINN framework leverages neural networks to capture complex dynamics directly from data. Furthermore, we implement an optimal control strategy to assess the efficacy of an anti-tau therapeutic intervention aimed at reducing p-tau levels and mitigating cognitive decline. Our data-driven solutions indicate that while optimal drug administration effectively decreases p-tau concentration, its impact on cognitive decline, as reflected in the ADAS score, remains limited. These findings suggest that targeting p-tau alone may not be sufficient for significant cognitive improvement, highlighting the need for multi-target therapeutic strategies. The integration of mechanistic modelling, advanced parameter estimation, and control-based therapeutic optimization provides a comprehensive framework for improving treatment strategies for AD.

10 Feb 2021

We analyze the interior controllability problem for a nonlocal Schrödinger equation involving the fractional Laplace operator $(-\Delta)^s$, $s \in (0,1)$, on a bounded $C^{1,1}$ domain $\Omega \subset \mathbb{R}^n$. Controllability from a neighborhood of the boundary of the domain is obtained for exponents $s$ in the interval $[1/2, 1)$, while for $s < 1/2$ the equation is shown to be not controllable. The results follow from applying the multiplier method, together with a Pohozaev-type identity for the fractional Laplacian, and from an explicit computation of the spectrum of the operator in the one-dimensional case.

04 Jun 2025

With the recently increased interest in probabilistic models, the efficiency of an underlying sampler becomes a crucial consideration. A Hamiltonian Monte Carlo (HMC) sampler is one popular option for models of this kind. The performance of HMC, however, strongly relies on the choice of parameters associated with an integration method for Hamiltonian equations, which to date remains mainly heuristic or introduces additional time complexity. We propose a novel, computationally inexpensive and flexible approach (we call it Adaptive Tuning or ATune) that, by analyzing the data generated during the burn-in stage of an HMC simulation, detects a system-specific splitting integrator with a set of reliable HMC hyperparameters, including their credible randomization intervals, to be readily used in a production simulation. The method automatically eliminates those values of simulation parameters which could cause undesired extreme scenarios, such as resonance artifacts, low accuracy or poor sampling. The new approach is implemented in the in-house software package HaiCS, with no computational overheads introduced in a production simulation, and can be easily incorporated in any package for Bayesian inference with HMC. The tests on popular statistical models using original HMC and generalized Hamiltonian Monte Carlo (GHMC) reveal the superiority of adaptively tuned methods in terms of stability, performance and accuracy over conventional HMC tuned heuristically and coupled with the well-established integrators. We also claim that the generalized formulation of HMC, i.e. GHMC, is preferable for achieving high sampling performance. The efficiency of the new methodology is assessed in comparison with state-of-the-art samplers, e.g. the No-U-Turn-Sampler (NUTS), in real-world applications, such as endocrine therapy resistance in cancer, modeling of cell-cell adhesion dynamics and influenza epidemic outbreak.
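To make concrete which parameters such a tuning procedure acts on, the sketch below shows a plain HMC update with the standard leapfrog integrator, the simplest splitting scheme. It is not ATune and is unrelated to the HaiCS code. The step size `step` and trajectory length `n_steps` are the hyperparameters that are usually chosen heuristically. The one-dimensional standard normal target (`u`, `grad_u`) is an assumption made only for the example.

```python
import math
import random

def leapfrog(q, p, grad_u, step, n_steps):
    """Integrate Hamilton's equations with the leapfrog (velocity Verlet) scheme."""
    p = p - 0.5 * step * grad_u(q)       # initial half step in momentum
    for i in range(n_steps):
        q = q + step * p                 # full step in position
        if i < n_steps - 1:
            p = p - step * grad_u(q)     # full step in momentum
    p = p - 0.5 * step * grad_u(q)       # final half step in momentum
    return q, p

def hmc_step(q, u, grad_u, step, n_steps, rng):
    """One HMC update: resample momentum, integrate, then accept or reject."""
    p0 = rng.gauss(0.0, 1.0)
    q_new, p_new = leapfrog(q, p0, grad_u, step, n_steps)
    h_old = u(q) + 0.5 * p0 * p0
    h_new = u(q_new) + 0.5 * p_new * p_new
    # Metropolis test on the energy error introduced by the integrator.
    if rng.random() < math.exp(min(0.0, h_old - h_new)):
        return q_new
    return q

def u(q):
    """Potential energy of a standard normal target (example assumption)."""
    return 0.5 * q * q

def grad_u(q):
    """Gradient of the example potential."""
    return q
```

The energy error `h_new - h_old` grows with `step`, which lowers the acceptance rate, while a small `step` wastes gradient evaluations. This trade-off is why the choice of integrator and step size matters for sampling efficiency.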

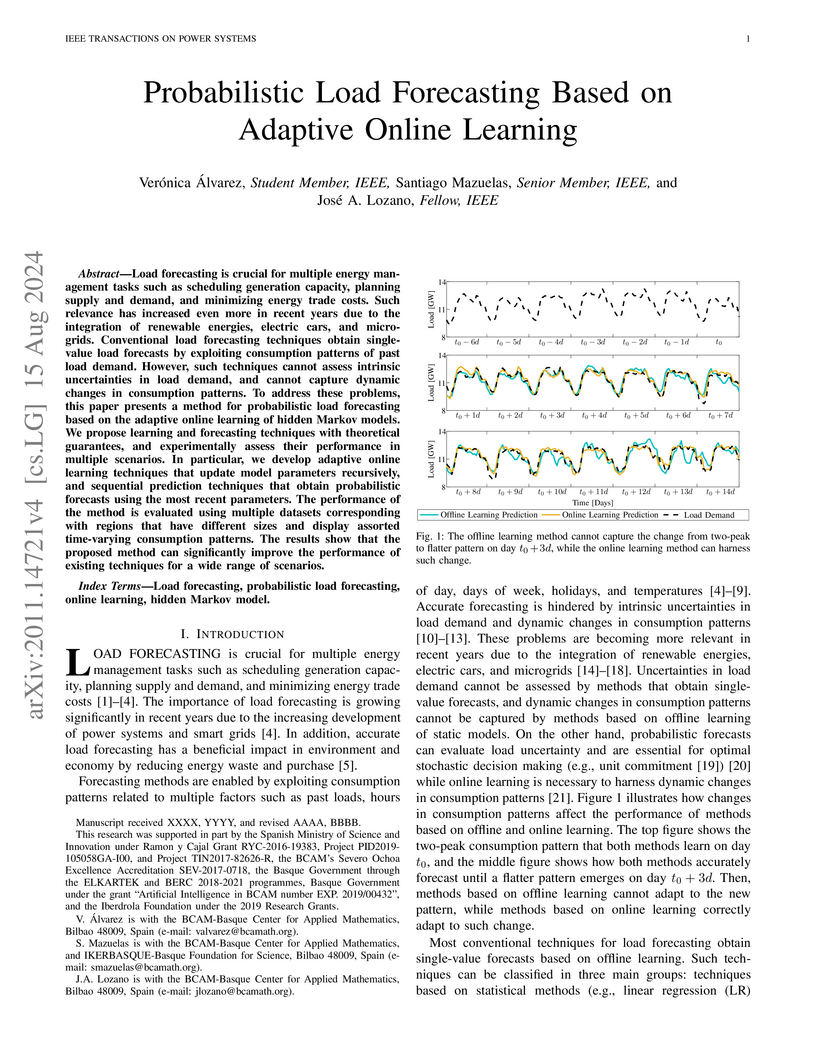

Load forecasting is crucial for multiple energy management tasks such as scheduling generation capacity, planning supply and demand, and minimizing energy trade costs. Such relevance has increased even more in recent years due to the integration of renewable energies, electric cars, and microgrids. Conventional load forecasting techniques obtain single-value load forecasts by exploiting consumption patterns of past load demand. However, such techniques cannot assess intrinsic uncertainties in load demand, and cannot capture dynamic changes in consumption patterns. To address these problems, this paper presents a method for probabilistic load forecasting based on the adaptive online learning of hidden Markov models. We propose learning and forecasting techniques with theoretical guarantees, and experimentally assess their performance in multiple scenarios. In particular, we develop adaptive online learning techniques that update model parameters recursively, and sequential prediction techniques that obtain probabilistic forecasts using the most recent parameters. The performance of the method is evaluated using multiple datasets corresponding to regions that have different sizes and display assorted time-varying consumption patterns. The results show that the proposed method can significantly improve the performance of existing techniques for a wide range of scenarios.

Bloch oscillations (BOs) describe the coherent oscillatory motion of electrons in a periodic lattice under a constant external electric field. Deviations from pure harmonic wave packet motion, or irregular Bloch oscillations, can occur due to Zener tunneling (Landau-Zener Transitions or LZTs), with oscillation frequencies closely tied to interband coupling strengths. Motivated by the interplay between flat-band physics and interband coupling in generating irregular BOs, here we investigate these oscillations in Lieb and Kagome lattices using two complementary approaches: coherent transport simulations and scattering matrix analysis. In the presence of unavoidable band touchings, half-fundamental and fundamental BO frequencies are observed in Lieb and Kagome lattices, respectively -- a behavior directly linked to their distinct band structures. When avoided band touchings are introduced, distinct BO frequency responses to coupling parameters in each lattice are observed. Scattering matrix analysis reveals strong coupling and potential LZTs between dispersive bands and the flat band in Kagome lattices, with the quadratic band touching enhancing interband interactions and resulting in BO dynamics that are distinct from systems with linear crossings. In contrast, the Lieb lattice -- a three-level system -- shows independent coupling between the flat band and two dispersive bands, without direct LZTs occurring between the two dispersive bands themselves. Finally, to obtain a unifying perspective on these results, we examine BOs during a strain-induced transition from Kagome to Lieb lattices, and link the evolution of irregular BO frequencies to changes in band connectivity and interband coupling.

30 May 2019

The Data Processing Inequality (DPI) says that the Umegaki relative entropy $S(\rho\|\sigma) := \mathrm{Tr}[\rho(\log\rho - \log\sigma)]$ is non-increasing under the action of completely positive trace preserving (CPTP) maps. Let $\mathcal{M}$ be a finite dimensional von Neumann algebra and $\mathcal{N}$ a von Neumann subalgebra of it. Let $\mathcal{E}_\tau$ be the tracial conditional expectation from $\mathcal{M}$ onto $\mathcal{N}$. For density matrices $\rho$ and $\sigma$ in $\mathcal{M}$, let $\rho_{\mathcal{N}} := \mathcal{E}_\tau \rho$ and $\sigma_{\mathcal{N}} := \mathcal{E}_\tau \sigma$. Since $\mathcal{E}_\tau$ is CPTP, the DPI says that $S(\rho\|\sigma) \geq S(\rho_{\mathcal{N}}\|\sigma_{\mathcal{N}})$, and the general case is readily deduced from this. A theorem of Petz says that there is equality if and only if $\sigma = \mathcal{R}_\rho(\sigma_{\mathcal{N}})$, where $\mathcal{R}_\rho$ is the Petz recovery map, which is dual to the Accardi-Cecchini coarse graining operator $\mathcal{A}_\rho$ from $\mathcal{M}$ to $\mathcal{N}$. In its simplest form, our bound is $S(\rho\|\sigma) - S(\rho_{\mathcal{N}}\|\sigma_{\mathcal{N}}) \geq \left(\frac{1}{8\pi}\right)^{4} \|\Delta_{\sigma,\rho}\|^{-2} \|\mathcal{R}_\rho(\sigma_{\mathcal{N}}) - \sigma\|_1^4$, where $\Delta_{\sigma,\rho}$ is the relative modular operator. We also prove related results for various quasi-relative entropies.

Explicitly describing the solution set of the Petz equation $\sigma = \mathcal{R}_\rho(\sigma_{\mathcal{N}})$ amounts to determining the set of fixed points of the Accardi-Cecchini coarse graining map. Building on previous work, we provide a thoroughly detailed description of the set of solutions of the Petz equation, and obtain all of our results in a simple, self-contained manner.

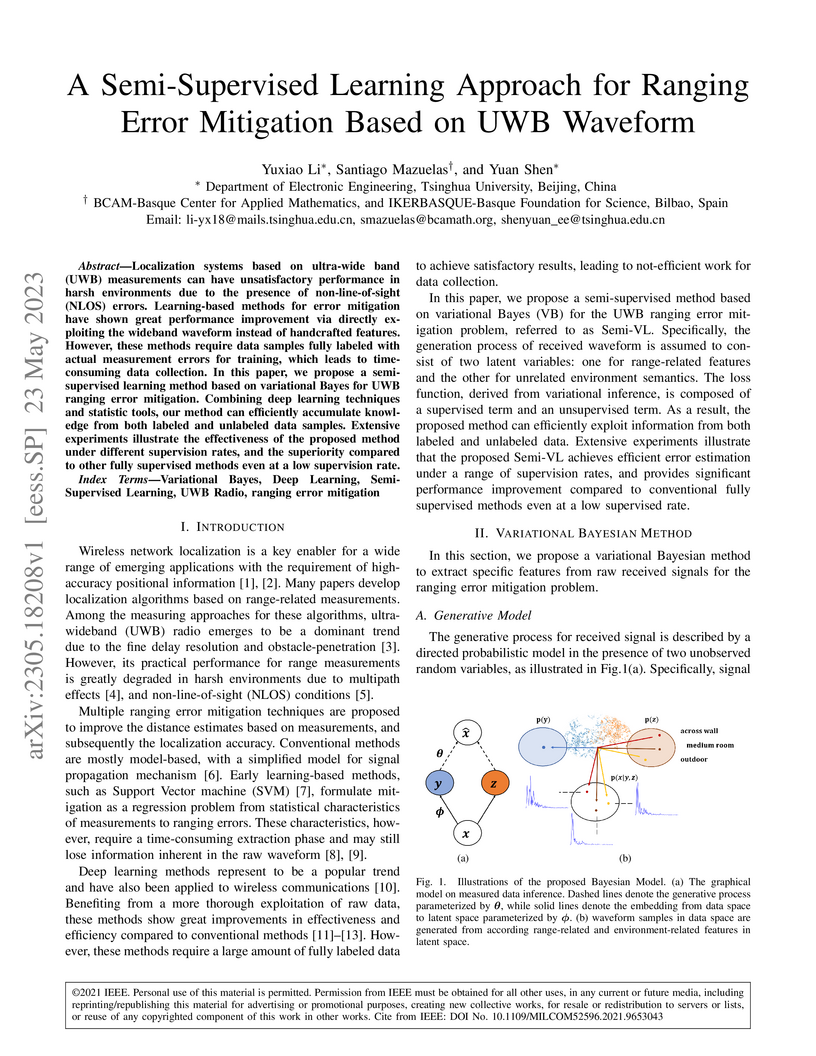

Localization systems based on ultra-wide band (UWB) measurements can have unsatisfactory performance in harsh environments due to the presence of non-line-of-sight (NLOS) errors. Learning-based methods for error mitigation have shown great performance improvement by directly exploiting the wideband waveform instead of handcrafted features. However, these methods require data samples fully labeled with actual measurement errors for training, which leads to time-consuming data collection. In this paper, we propose a semi-supervised learning method based on variational Bayes for UWB ranging error mitigation. Combining deep learning techniques and statistical tools, our method can efficiently accumulate knowledge from both labeled and unlabeled data samples. Extensive experiments illustrate the effectiveness of the proposed method under different supervision rates, and its superiority over fully supervised methods even at a low supervision rate.

Stochastic resetting, a diffusive process whose amplitude is "reset" to the origin at random times, is a widely studied strategy to optimize encounter dynamics, e.g., in chemical reactions. We here generalize the resetting step by introducing a random resetting amplitude, such that the diffusing particle may be only partially reset towards the trajectory origin, or even overshoot the origin in a resetting step. We introduce different scenarios for the random-amplitude stochastic resetting process and discuss the resulting dynamics. Direct applications include geophysical layering (stratigraphy), population dynamics, financial markets, and generic search processes.
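The generalized resetting step can be illustrated with a short simulation. This is a sketch under assumed conventions, not the paper's specific scenarios: at each reset event the position is mapped to `(1 - c) * x`, where the hypothetical amplitude `c` is drawn at random, so `c = 1` recovers a full reset to the origin, `0 < c < 1` a partial reset, and `c > 1` an overshoot. The Euler discretization and all parameter names are choices made for the example.

```python
import math
import random

def simulate(n_steps, dt, diffusion, rate, draw_amplitude, rng, x0=0.0):
    """Diffusion with Poissonian resetting of random amplitude (illustrative).

    draw_amplitude(rng) returns the resetting amplitude c for one event.
    """
    x = x0
    path = [x]
    for _ in range(n_steps):
        # Free diffusion over one time step.
        x += math.sqrt(2.0 * diffusion * dt) * rng.gauss(0.0, 1.0)
        # A reset event occurs with probability rate * dt in each step.
        if rng.random() < rate * dt:
            c = draw_amplitude(rng)
            x = (1.0 - c) * x
        path.append(x)
    return path
```

For example, `simulate(1000, 0.01, 1.0, 0.5, lambda r: r.uniform(0.0, 1.5), random.Random(0))` mixes partial resets with occasional overshoots of the origin.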

20 Oct 2024

The molecular motion in heterogeneous media displays anomalous diffusion, characterized by the mean-squared displacement $\langle X^2(t)\rangle = 2Dt^{\alpha}$. Motivated by experiments reporting populations of the anomalous diffusion parameters α and D, we aim to disentangle their respective contributions to the observed variability when the latter is due to a true population of these parameters and when it arises from finite-duration recordings. We introduce estimators of the anomalous diffusion parameters on the basis of the time-averaged mean squared displacement and study their statistical properties. By using a copula approach, we derive a formula for the joint density function of their estimations conditioned on their actual values. The methodology introduced is universal: it is valid for any Gaussian process and can be applied to any quadratic time-averaged statistics. We also explain the experimentally reported relation $D \propto \exp(\alpha c_1 + c_2)$, for which we provide the exact expression. We finally compare our findings to numerical simulations of the fractional Brownian motion and quantify their accuracy by using the Hellinger distance.
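One common way to estimate α and D from a single trajectory is a least-squares fit of the time-averaged mean squared displacement (TAMSD) on a log-log scale. The sketch below illustrates that generic approach. The abstract does not specify the paper's exact estimators, so the fitting procedure, function names, and choice of lags here are assumptions.

```python
import math

def tamsd(x, lag):
    """Time-averaged mean squared displacement of trajectory x at a given lag."""
    n = len(x) - lag
    return sum((x[i + lag] - x[i]) ** 2 for i in range(n)) / n

def estimate_alpha_d(x, dt, lags):
    """Fit log TAMSD = log(2D) + alpha * log(lag * dt) by ordinary least squares."""
    xs = [math.log(lag * dt) for lag in lags]
    ys = [math.log(tamsd(x, lag)) for lag in lags]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    slope = sum((a - mx) * (b - my) for a, b in zip(xs, ys)) / sum(
        (a - mx) ** 2 for a in xs
    )
    intercept = my - slope * mx
    # slope estimates alpha; exp(intercept) estimates 2D.
    return slope, math.exp(intercept) / 2.0
```

As a sanity check, a ballistic trajectory `x = [0.5 * i for i in range(100)]` with `dt = 1` has TAMSD equal to `0.25 * lag**2`, so the fit returns α = 2 and D = 0.125 up to rounding. For finite noisy recordings the two estimates fluctuate jointly, which is the variability the abstract sets out to quantify.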

23 Jul 2020

Starting from kinetic transport equations and subcellular dynamics, we deduce a multiscale model for glioma invasion relying on the go-or-grow dichotomy and the influence of vasculature, acidity, and brain tissue anisotropy. Numerical simulations are performed for this model with multiple taxis, in order to assess the solution behavior under several scenarios of taxis and growth for tumor and endothelial cells. An extension of the model to incorporate the macroscopic evolution of normal tissue and necrotic matter allows us to perform tumor grading.
