Beijing Institute of Big Data Research

Weinan E, Jiequn Han, and Arnulf Jentzen developed the Deep BSDE method to solve high-dimensional parabolic Partial Differential Equations (PDEs). The approach reformulates PDEs as Backward Stochastic Differential Equations (BSDEs) and uses deep neural networks to approximate their solutions, enabling accurate results for problems in up to 100 dimensions, such as the Allen-Cahn and Hamilton-Jacobi-Bellman equations.

10 Aug 2018

The well-known Mori-Zwanzig theory tells us that model reduction leads to

memory effect. For a long time, modeling the memory effect accurately and

efficiently has been an important but nearly impossible task in developing a

good reduced model. In this work, we explore a natural analogy between

recurrent neural networks and the Mori-Zwanzig formalism to establish a

systematic approach for developing reduced models with memory. Two training

models-a direct training model and a dynamically coupled training model-are

proposed and compared. We apply these methods to the Kuramoto-Sivashinsky

equation and the Navier-Stokes equation. Numerical experiments show that the

proposed method can produce reduced model with good performance on both

short-term prediction and long-term statistical properties.

In this paper, we present an initial attempt to learn evolution PDEs from data. Inspired by the latest development of neural network designs in deep learning, we propose a new feed-forward deep network, called PDE-Net, to fulfill two objectives at the same time: to accurately predict dynamics of complex systems and to uncover the underlying hidden PDE models. The basic idea of the proposed PDE-Net is to learn differential operators by learning convolution kernels (filters), and apply neural networks or other machine learning methods to approximate the unknown nonlinear responses. Comparing with existing approaches, which either assume the form of the nonlinear response is known or fix certain finite difference approximations of differential operators, our approach has the most flexibility by learning both differential operators and the nonlinear responses. A special feature of the proposed PDE-Net is that all filters are properly constrained, which enables us to easily identify the governing PDE models while still maintaining the expressive and predictive power of the network. These constrains are carefully designed by fully exploiting the relation between the orders of differential operators and the orders of sum rules of filters (an important concept originated from wavelet theory). We also discuss relations of the PDE-Net with some existing networks in computer vision such as Network-In-Network (NIN) and Residual Neural Network (ResNet). Numerical experiments show that the PDE-Net has the potential to uncover the hidden PDE of the observed dynamics, and predict the dynamical behavior for a relatively long time, even in a noisy environment.

We develop the mathematical foundations of the stochastic modified equations

(SME) framework for analyzing the dynamics of stochastic gradient algorithms,

where the latter is approximated by a class of stochastic differential

equations with small noise parameters. We prove that this approximation can be

understood mathematically as an weak approximation, which leads to a number of

precise and useful results on the approximations of stochastic gradient descent

(SGD), momentum SGD and stochastic Nesterov's accelerated gradient method in

the general setting of stochastic objectives. We also demonstrate through

explicit calculations that this continuous-time approach can uncover important

analytical insights into the stochastic gradient algorithms under consideration

that may not be easy to obtain in a purely discrete-time setting.

26 Dec 2021

Enhanced sampling methods such as metadynamics and umbrella sampling have become essential tools for exploring the configuration space of molecules and materials. At the same time, they have long faced a number of issues such as the inefficiency when dealing with a large number of collective variables (CVs) or systems with high free energy barriers. In this work, we show that with \redc{the clustering and adaptive tuning techniques}, the reinforced dynamics (RiD) scheme can be used to efficiently explore the configuration space and free energy landscapes with a large number of CVs or systems with high free energy barriers. We illustrate this by studying various representative and challenging examples. Firstly we demonstrate the efficiency of adaptive RiD compared with other methods, and construct the 9-dimensional free energy landscape of peptoid trimer which has energy barriers of more than 8 kcal/mol. We then study the folding of the protein chignolin using 18 CVs. In this case, both the folding and unfolding rates are observed to be equal to 4.30~μs−1. Finally, we propose a protein structure refinement protocol based on RiD. This protocol allows us to efficiently employ more than 100 CVs for exploring the landscape of protein structures and it gives rise to an overall improvement of 14.6 units over the initial Global Distance Test-High Accuracy (GDT-HA) score.

Deep learning achieves state-of-the-art results in many tasks in computer

vision and natural language processing. However, recent works have shown that

deep networks can be vulnerable to adversarial perturbations, which raised a

serious robustness issue of deep networks. Adversarial training, typically

formulated as a robust optimization problem, is an effective way of improving

the robustness of deep networks. A major drawback of existing adversarial

training algorithms is the computational overhead of the generation of

adversarial examples, typically far greater than that of the network training.

This leads to the unbearable overall computational cost of adversarial

training. In this paper, we show that adversarial training can be cast as a

discrete time differential game. Through analyzing the Pontryagin's Maximal

Principle (PMP) of the problem, we observe that the adversary update is only

coupled with the parameters of the first layer of the network. This inspires us

to restrict most of the forward and back propagation within the first layer of

the network during adversary updates. This effectively reduces the total number

of full forward and backward propagation to only one for each group of

adversary updates. Therefore, we refer to this algorithm YOPO (You Only

Propagate Once). Numerical experiments demonstrate that YOPO can achieve

comparable defense accuracy with approximately 1/5 ~ 1/4 GPU time of the

projected gradient descent (PGD) algorithm. Our codes are available at

https://this https URL

Fine-grained classification is challenging due to the difficulty of finding

discriminative features. Finding those subtle traits that fully characterize

the object is not straightforward. To handle this circumstance, we propose a

novel self-supervision mechanism to effectively localize informative regions

without the need of bounding-box/part annotations. Our model, termed NTS-Net

for Navigator-Teacher-Scrutinizer Network, consists of a Navigator agent, a

Teacher agent and a Scrutinizer agent. In consideration of intrinsic

consistency between informativeness of the regions and their probability being

ground-truth class, we design a novel training paradigm, which enables

Navigator to detect most informative regions under the guidance from Teacher.

After that, the Scrutinizer scrutinizes the proposed regions from Navigator and

makes predictions. Our model can be viewed as a multi-agent cooperation,

wherein agents benefit from each other, and make progress together. NTS-Net can

be trained end-to-end, while provides accurate fine-grained classification

predictions as well as highly informative regions during inference. We achieve

state-of-the-art performance in extensive benchmark datasets.

02 Jun 2018

The continuous dynamical system approach to deep learning is explored in order to devise alternative frameworks for training algorithms. Training is recast as a control problem and this allows us to formulate necessary optimality conditions in continuous time using the Pontryagin's maximum principle (PMP). A modification of the method of successive approximations is then used to solve the PMP, giving rise to an alternative training algorithm for deep learning. This approach has the advantage that rigorous error estimates and convergence results can be established. We also show that it may avoid some pitfalls of gradient-based methods, such as slow convergence on flat landscapes near saddle points. Furthermore, we demonstrate that it obtains favorable initial convergence rate per-iteration, provided Hamiltonian maximization can be efficiently carried out - a step which is still in need of improvement. Overall, the approach opens up new avenues to attack problems associated with deep learning, such as trapping in slow manifolds and inapplicability of gradient-based methods for discrete trainable variables.

Continuous word representation (aka word embedding) is a basic building block

in many neural network-based models used in natural language processing tasks.

Although it is widely accepted that words with similar semantics should be

close to each other in the embedding space, we find that word embeddings

learned in several tasks are biased towards word frequency: the embeddings of

high-frequency and low-frequency words lie in different subregions of the

embedding space, and the embedding of a rare word and a popular word can be far

from each other even if they are semantically similar. This makes learned word

embeddings ineffective, especially for rare words, and consequently limits the

performance of these neural network models. In this paper, we develop a neat,

simple yet effective way to learn \emph{FRequency-AGnostic word Embedding}

(FRAGE) using adversarial training. We conducted comprehensive studies on ten

datasets across four natural language processing tasks, including word

similarity, language modeling, machine translation and text classification.

Results show that with FRAGE, we achieve higher performance than the baselines

in all tasks.

The scarcity of class-labeled data is a ubiquitous bottleneck in many machine learning problems. While abundant unlabeled data typically exist and provide a potential solution, it is highly challenging to exploit them. In this paper, we address this problem by leveraging Positive-Unlabeled~(PU) classification and the conditional generation with extra unlabeled data \emph{simultaneously}. We present a novel training framework to jointly target both PU classification and conditional generation when exposed to extra data, especially out-of-distribution unlabeled data, by exploring the interplay between them: 1) enhancing the performance of PU classifiers with the assistance of a novel Classifier-Noise-Invariant Conditional GAN~(CNI-CGAN) that is robust to noisy labels, 2) leveraging extra data with predicted labels from a PU classifier to help the generation. Theoretically, we prove the optimal condition of CNI-CGAN and experimentally, we conducted extensive evaluations on diverse datasets.

30 Aug 2022

It is well-known that stochastic gradient noise (SGN) acts as implicit regularization for deep learning and is essentially important for both optimization and generalization of deep networks. Some works attempted to artificially simulate SGN by injecting random noise to improve deep learning. However, it turned out that the injected simple random noise cannot work as well as SGN, which is anisotropic and parameter-dependent. For simulating SGN at low computational costs and without changing the learning rate or batch size, we propose the Positive-Negative Momentum (PNM) approach that is a powerful alternative to conventional Momentum in classic optimizers. The introduced PNM method maintains two approximate independent momentum terms. Then, we can control the magnitude of SGN explicitly by adjusting the momentum difference. We theoretically prove the convergence guarantee and the generalization advantage of PNM over Stochastic Gradient Descent (SGD). By incorporating PNM into the two conventional optimizers, SGD with Momentum and Adam, our extensive experiments empirically verified the significant advantage of the PNM-based variants over the corresponding conventional Momentum-based optimizers.

05 Aug 2022

Machine-learning-based interatomic potential energy surface (PES) models are

revolutionizing the field of molecular modeling. However, although much faster

than electronic structure schemes, these models suffer from costly computations

via deep neural networks to predict the energy and atomic forces, resulting in

lower running efficiency as compared to the typical empirical force fields.

Herein, we report a model compression scheme for boosting the performance of

the Deep Potential (DP) model, a deep learning based PES model. This scheme, we

call DP Compress, is an efficient post-processing step after the training of DP

models (DP Train). DP Compress combines several DP-specific compression

techniques, which typically speed up DP-based molecular dynamics simulations by

an order of magnitude faster, and consume an order of magnitude less memory. We

demonstrate that DP Compress is sufficiently accurate by testing a variety of

physical properties of Cu, H2O, and Al-Cu-Mg systems. DP Compress applies to

both CPU and GPU machines and is publicly available online.

The identification of pulmonary lobes is of great importance in disease diagnosis and treatment. A few lung diseases have regional disorders at lobar level. Thus, an accurate segmentation of pulmonary lobes is necessary. In this work, we propose an automated segmentation of pulmonary lobes using coordination-guided deep neural networks from chest CT images. We first employ an automated lung segmentation to extract the lung area from CT image, then exploit volumetric convolutional neural network (V-net) for segmenting the pulmonary lobes. To reduce the misclassification of different lobes, we therefore adopt coordination-guided convolutional layers (CoordConvs) that generate additional feature maps of the positional information of pulmonary lobes. The proposed model is trained and evaluated on a few publicly available datasets and has achieved the state-of-the-art accuracy with a mean Dice coefficient index of 0.947 ± 0.044.

Compared with standard supervised learning, the key difficulty in

semi-supervised learning is how to make full use of the unlabeled data. A

recently proposed method, virtual adversarial training (VAT), smartly performs

adversarial training without label information to impose a local smoothness on

the classifier, which is especially beneficial to semi-supervised learning. In

this work, we propose tangent-normal adversarial regularization (TNAR) as an

extension of VAT by taking the data manifold into consideration. The proposed

TNAR is composed by two complementary parts, the tangent adversarial

regularization (TAR) and the normal adversarial regularization (NAR). In TAR,

VAT is applied along the tangent space of the data manifold, aiming to enforce

local invariance of the classifier on the manifold, while in NAR, VAT is

performed on the normal space orthogonal to the tangent space, intending to

impose robustness on the classifier against the noise causing the observed data

deviating from the underlying data manifold. Demonstrated by experiments on

both artificial and practical datasets, our proposed TAR and NAR complement

with each other, and jointly outperforms other state-of-the-art methods for

semi-supervised learning.

Convolutional neural networks (CNNs) have achieved remarkable performance in

various fields, particularly in the domain of computer vision. However, why

this architecture works well remains to be a mystery. In this work we move a

small step toward understanding the success of CNNs by investigating the

learning dynamics of a two-layer nonlinear convolutional neural network over

some specific data distributions. Rather than the typical Gaussian assumption

for input data distribution, we consider a more realistic setting that each

data point (e.g. image) contains a specific pattern determining its class

label. Within this setting, we both theoretically and empirically show that

some convolutional filters will learn the key patterns in data and the norm of

these filters will dominate during the training process with stochastic

gradient descent. And with any high probability, when the number of iterations

is sufficiently large, the CNN model could obtain 100% accuracy over the

considered data distributions. Our experiments demonstrate that for practical

image classification tasks our findings still hold to some extent.

Automatic article commenting is helpful in encouraging user engagement and

interaction on online news platforms. However, the news documents are usually

too long for traditional encoder-decoder based models, which often results in

general and irrelevant comments. In this paper, we propose to generate comments

with a graph-to-sequence model that models the input news as a topic

interaction graph. By organizing the article into graph structure, our model

can better understand the internal structure of the article and the connection

between topics, which makes it better able to understand the story. We collect

and release a large scale news-comment corpus from a popular Chinese online

news platform Tencent Kuaibao. Extensive experiment results show that our model

can generate much more coherent and informative comments compared with several

strong baseline models.

Non-autoregressive translation models (NAT) have achieved impressive

inference speedup. A potential issue of the existing NAT algorithms, however,

is that the decoding is conducted in parallel, without directly considering

previous context. In this paper, we propose an imitation learning framework for

non-autoregressive machine translation, which still enjoys the fast translation

speed but gives comparable translation performance compared to its

auto-regressive counterpart. We conduct experiments on the IWSLT16, WMT14 and

WMT16 datasets. Our proposed model achieves a significant speedup over the

autoregressive models, while keeping the translation quality comparable to the

autoregressive models. By sampling sentence length in parallel at inference

time, we achieve the performance of 31.85 BLEU on WMT16 Ro→En and

30.68 BLEU on IWSLT16 En→De.

Extracting multi-scale information is key to semantic segmentation. However,

the classic convolutional neural networks (CNNs) encounter difficulties in

achieving multi-scale information extraction: expanding convolutional kernel

incurs the high computational cost and using maximum pooling sacrifices image

information. The recently developed dilated convolution solves these problems,

but with the limitation that the dilation rates are fixed and therefore the

receptive field cannot fit for all objects with different sizes in the image.

We propose an adaptivescale convolutional neural network (ASCNet), which

introduces a 3-layer convolution structure in the end-to-end training, to

adaptively learn an appropriate dilation rate for each pixel in the image. Such

pixel-level dilation rates produce optimal receptive fields so that the

information of objects with different sizes can be extracted at the

corresponding scale. We compare the segmentation results using the classic CNN,

the dilated CNN and the proposed ASCNet on two types of medical images (The

Herlev dataset and SCD RBC dataset). The experimental results show that ASCNet

achieves the highest accuracy. Moreover, the automatically generated dilation

rates are positively correlated to the sizes of the objects, confirming the

effectiveness of the proposed method.

20 Aug 2019

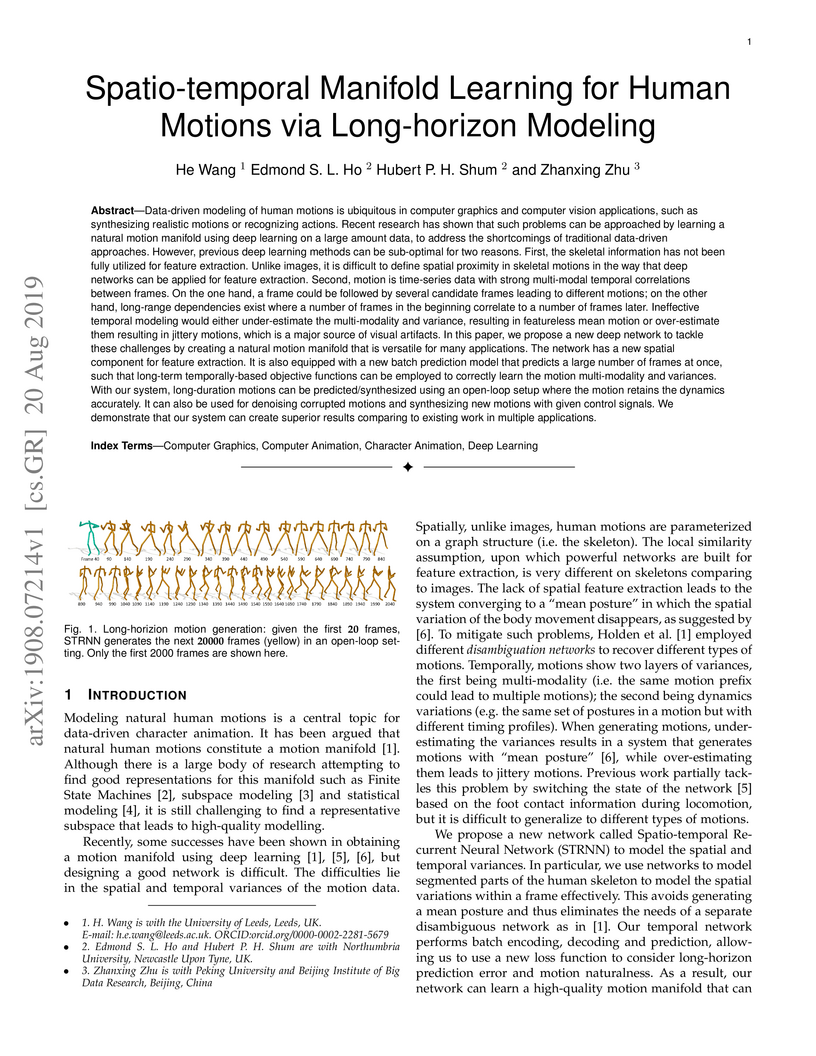

Data-driven modeling of human motions is ubiquitous in computer graphics and computer vision applications, such as synthesizing realistic motions or recognizing actions. Recent research has shown that such problems can be approached by learning a natural motion manifold using deep learning to address the shortcomings of traditional data-driven approaches. However, previous methods can be sub-optimal for two reasons. First, the skeletal information has not been fully utilized for feature extraction. Unlike images, it is difficult to define spatial proximity in skeletal motions in the way that deep networks can be applied. Second, motion is time-series data with strong multi-modal temporal correlations. A frame could be followed by several candidate frames leading to different motions; long-range dependencies exist where a number of frames in the beginning correlate to a number of frames later. Ineffective modeling would either under-estimate the multi-modality and variance, resulting in featureless mean motion or over-estimate them resulting in jittery motions. In this paper, we propose a new deep network to tackle these challenges by creating a natural motion manifold that is versatile for many applications. The network has a new spatial component for feature extraction. It is also equipped with a new batch prediction model that predicts a large number of frames at once, such that long-term temporally-based objective functions can be employed to correctly learn the motion multi-modality and variances. With our system, long-duration motions can be predicted/synthesized using an open-loop setup where the motion retains the dynamics accurately. It can also be used for denoising corrupted motions and synthesizing new motions with given control signals. We demonstrate that our system can create superior results comparing to existing work in multiple applications.

The complicated syntax structure of natural language is hard to be explicitly

modeled by sequence-based models. Graph is a natural structure to describe the

complicated relation between tokens. The recent advance in Graph Neural

Networks (GNN) provides a powerful tool to model graph structure data, but

simple graph models such as Graph Convolutional Networks (GCN) suffer from

over-smoothing problem, that is, when stacking multiple layers, all nodes will

converge to the same value. In this paper, we propose a novel Recursive

Graphical Neural Networks model (ReGNN) to represent text organized in the form

of graph. In our proposed model, LSTM is used to dynamically decide which part

of the aggregated neighbor information should be transmitted to upper layers

thus alleviating the over-smoothing problem. Furthermore, to encourage the

exchange between the local and global information, a global graph-level node is

designed. We conduct experiments on both single and multiple label text

classification tasks. Experiment results show that our ReGNN model surpasses

the strong baselines significantly in most of the datasets and greatly

alleviates the over-smoothing problem.

There are no more papers matching your filters at the moment.