CIFAR

Researchers from Mila and KAIST developed the Generative Flow Ant Colony Sampler (GFACS), a meta-heuristic that combines GFlowNets with Ant Colony Optimization (ACO) for combinatorial optimization. This approach generates multi-modal prior distributions of solutions and achieves superior solution quality and efficiency, outperforming strong baselines and specialized RL solvers across various benchmarks, often with reduced training time.

Lipreading is the task of decoding text from the movement of a speaker's mouth. Traditional approaches separated the problem into two stages: designing or learning visual features, and prediction. More recent deep lipreading approaches are end-to-end trainable (Wand et al., 2016; Chung & Zisserman, 2016a). However, existing work on models trained end-to-end perform only word classification, rather than sentence-level sequence prediction. Studies have shown that human lipreading performance increases for longer words (Easton & Basala, 1982), indicating the importance of features capturing temporal context in an ambiguous communication channel. Motivated by this observation, we present LipNet, a model that maps a variable-length sequence of video frames to text, making use of spatiotemporal convolutions, a recurrent network, and the connectionist temporal classification loss, trained entirely end-to-end. To the best of our knowledge, LipNet is the first end-to-end sentence-level lipreading model that simultaneously learns spatiotemporal visual features and a sequence model. On the GRID corpus, LipNet achieves 95.2% accuracy in sentence-level, overlapped speaker split task, outperforming experienced human lipreaders and the previous 86.4% word-level state-of-the-art accuracy (Gergen et al., 2016).

Researchers from New York University and Facebook AI Research introduced unlikelihood training to mitigate neural text degeneration, enabling models to generate less repetitive and more diverse text even with deterministic decoding. This training approach achieved up to a 97% reduction in 4-gram repetitions and was significantly preferred by human evaluators over existing decoding methods.

The LSPE framework enhances Graph Neural Networks by decoupling and learnably updating structural and positional representations, leveraging a novel Random Walk Positional Encoding (RWPE). This approach consistently improves performance across various graph datasets, achieving a state-of-the-art MAE of 0.090 on ZINC and demonstrating that sparse GNNs with LSPE can surpass Transformer-based GNNs while maintaining linear computational complexity.

Deep InfoMax (DIM) presents an unsupervised framework that learns deep representations by estimating and maximizing mutual information between inputs and their learned features. The method significantly improves classification accuracy on image datasets by focusing on local-global information maximization, outperforming prior unsupervised techniques and achieving performance comparable to supervised baselines.

26 Jun 2024

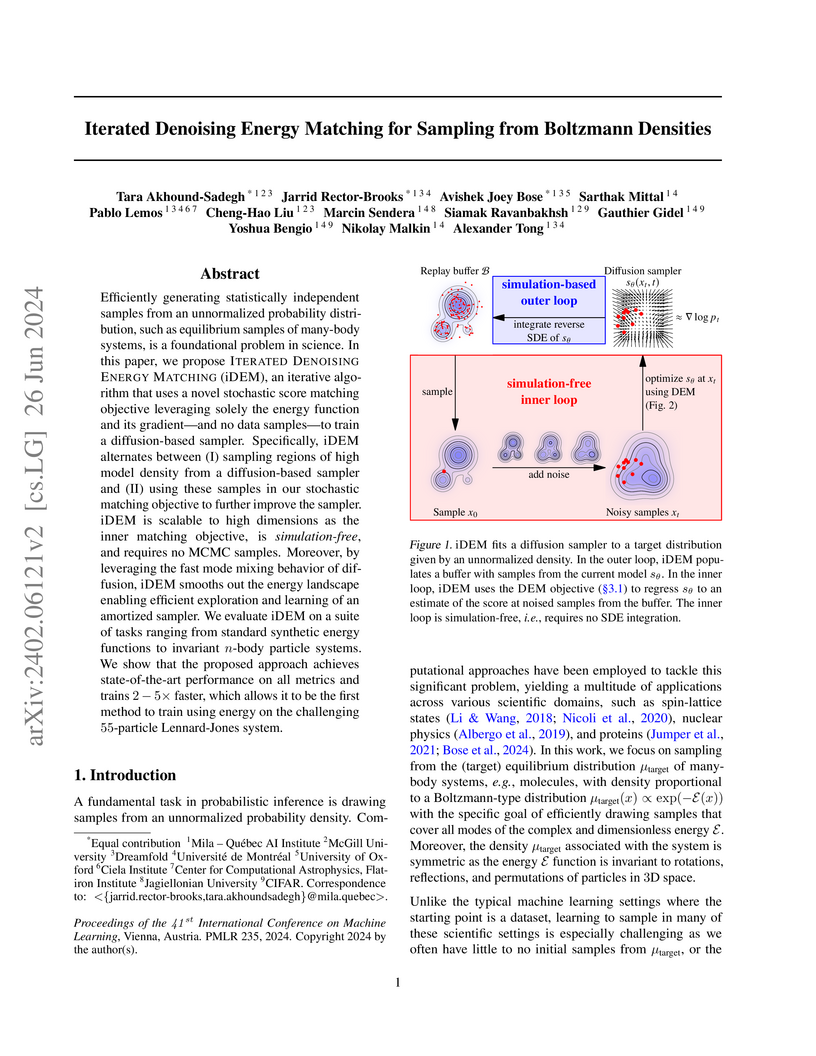

Iterated Denoising Energy Matching (iDEM) introduces a scalable, simulation-free neural sampler for unnormalized Boltzmann distributions, enabling efficient sampling from complex energy landscapes purely from the energy function and its gradient. The method achieves state-of-the-art sample quality and significantly faster training, successfully tackling the challenging 165-dimensional LJ-55 system for the first time.

Researchers from Google's Paradigms of Intelligence Team introduce COALA-PG, a learning-aware policy gradient algorithm, to foster cooperation among self-interested agents in multi-agent systems. The method achieves robust cooperation and higher returns in social dilemmas by enabling agents to implicitly model co-player learning dynamics using long observation histories, without requiring higher-order derivatives or privileged information.

We show that BERT (Devlin et al., 2018) is a Markov random field language model. This formulation gives way to a natural procedure to sample sentences from BERT. We generate from BERT and find that it can produce high-quality, fluent generations. Compared to the generations of a traditional left-to-right language model, BERT generates sentences that are more diverse but of slightly worse quality.

The paper demonstrates that deep neural networks exhibit qualitatively distinct optimization behaviors when learning from real, structured data compared to noisy data. It shows deep networks prioritize learning simple, generalizable patterns before memorizing noise and that explicit regularization, like dropout, can selectively hinder the memorization of noise without impacting generalization.

Understanding the interplay between intra-modality dependencies (the contribution of an individual modality to a target task) and inter-modality dependencies (the relationships between modalities and the target task) is fundamental to advancing multi-modal learning. However, the nature of and interaction between these dependencies within current benchmark evaluations remains poorly characterized. In this work, we present a large-scale empirical study to quantify these dependencies across 23 visual question-answering benchmarks using multi-modal large language models (MLLMs) covering domains such as general and expert knowledge reasoning, optical character recognition, and document understanding. Our findings show that the reliance on vision, question (text), and their interaction varies significantly, both across and within benchmarks. We discover that numerous benchmarks intended to mitigate text-only biases have inadvertently amplified image-only dependencies. This characterization persists across model sizes, as larger models often use these intra-modality dependencies to achieve high performance that mask an underlying lack of multi-modal reasoning. We provide a quantitative characterization of multi-modal datasets, enabling a principled approach to multi-modal benchmark design and evaluation.

Back-propagation has been the workhorse of recent successes of deep learning but it relies on infinitesimal effects (partial derivatives) in order to perform credit assignment. This could become a serious issue as one considers deeper and more non-linear functions, e.g., consider the extreme case of nonlinearity where the relation between parameters and cost is actually discrete. Inspired by the biological implausibility of back-propagation, a few approaches have been proposed in the past that could play a similar credit assignment role. In this spirit, we explore a novel approach to credit assignment in deep networks that we call target propagation. The main idea is to compute targets rather than gradients, at each layer. Like gradients, they are propagated backwards. In a way that is related but different from previously proposed proxies for back-propagation which rely on a backwards network with symmetric weights, target propagation relies on auto-encoders at each layer. Unlike back-propagation, it can be applied even when units exchange stochastic bits rather than real numbers. We show that a linear correction for the imperfectness of the auto-encoders, called difference target propagation, is very effective to make target propagation actually work, leading to results comparable to back-propagation for deep networks with discrete and continuous units and denoising auto-encoders and achieving state of the art for stochastic networks.

Recurrent neural networks (RNNs) are notoriously difficult to train. When the eigenvalues of the hidden to hidden weight matrix deviate from absolute value 1, optimization becomes difficult due to the well studied issue of vanishing and exploding gradients, especially when trying to learn long-term dependencies. To circumvent this problem, we propose a new architecture that learns a unitary weight matrix, with eigenvalues of absolute value exactly 1. The challenge we address is that of parametrizing unitary matrices in a way that does not require expensive computations (such as eigendecomposition) after each weight update. We construct an expressive unitary weight matrix by composing several structured matrices that act as building blocks with parameters to be learned. Optimization with this parameterization becomes feasible only when considering hidden states in the complex domain. We demonstrate the potential of this architecture by achieving state of the art results in several hard tasks involving very long-term dependencies.

Deep representation learning methods struggle with continual learning,

suffering from both catastrophic forgetting of useful units and loss of

plasticity, often due to rigid and unuseful units. While many methods address

these two issues separately, only a few currently deal with both

simultaneously. In this paper, we introduce Utility-based Perturbed Gradient

Descent (UPGD) as a novel approach for the continual learning of

representations. UPGD combines gradient updates with perturbations, where it

applies smaller modifications to more useful units, protecting them from

forgetting, and larger modifications to less useful units, rejuvenating their

plasticity. We use a challenging streaming learning setup where continual

learning problems have hundreds of non-stationarities and unknown task

boundaries. We show that many existing methods suffer from at least one of the

issues, predominantly manifested by their decreasing accuracy over tasks. On

the other hand, UPGD continues to improve performance and surpasses or is

competitive with all methods in all problems. Finally, in extended

reinforcement learning experiments with PPO, we show that while Adam exhibits a

performance drop after initial learning, UPGD avoids it by addressing both

continual learning issues.

Any well-behaved generative model over a variable x can be

expressed as a deterministic transformation of an exogenous ('outsourced')

Gaussian noise variable z: x=fθ(z). In

such a model (\eg, a VAE, GAN, or continuous-time flow-based model), sampling

of the target variable x∼pθ(x) is

straightforward, but sampling from a posterior distribution of the form

$p(\mathbf{x}\mid\mathbf{y}) \propto

p_\theta(\mathbf{x})r(\mathbf{x},\mathbf{y}),wherer$ is a constraint

function depending on an auxiliary variable y, is generally

intractable. We propose to amortize the cost of sampling from such posterior

distributions with diffusion models that sample a distribution in the noise

space (z). These diffusion samplers are trained by reinforcement

learning algorithms to enforce that the transformed samples

fθ(z) are distributed according to the posterior in the data

space (x). For many models and constraints, the posterior in noise

space is smoother than in data space, making it more suitable for amortized

inference. Our method enables conditional sampling under unconditional GAN,

(H)VAE, and flow-based priors, comparing favorably with other inference

methods. We demonstrate the proposed outsourced diffusion sampling in several

experiments with large pretrained prior models: conditional image generation,

reinforcement learning with human feedback, and protein structure generation.

On time-series data, most causal discovery methods fit a new model whenever they encounter samples from a new underlying causal graph. However, these samples often share relevant information which is lost when following this approach. Specifically, different samples may share the dynamics which describe the effects of their causal relations. We propose Amortized Causal Discovery, a novel framework that leverages such shared dynamics to learn to infer causal relations from time-series data. This enables us to train a single, amortized model that infers causal relations across samples with different underlying causal graphs, and thus leverages the shared dynamics information. We demonstrate experimentally that this approach, implemented as a variational model, leads to significant improvements in causal discovery performance, and show how it can be extended to perform well under added noise and hidden confounding.

The Z-loss is a classification loss function developed at MILA for extreme multi-class classification, featuring Z-normalization, shift/scale invariance, and tunable hyperparameters. It allows for exact gradient computation independent of the number of classes, leading to significant speedups and improved alignment with ranking-based task metrics compared to traditional methods.

Local Search GFlowNets (LS-GFN) integrates a local search mechanism into Generative Flow Networks, enhancing their capacity to consistently generate diverse and high-rewarding samples. This approach demonstrates improved reward distribution matching, increased mode discovery, and superior diversity compared to current GFlowNet methods and reward-maximization baselines across various biochemical tasks.

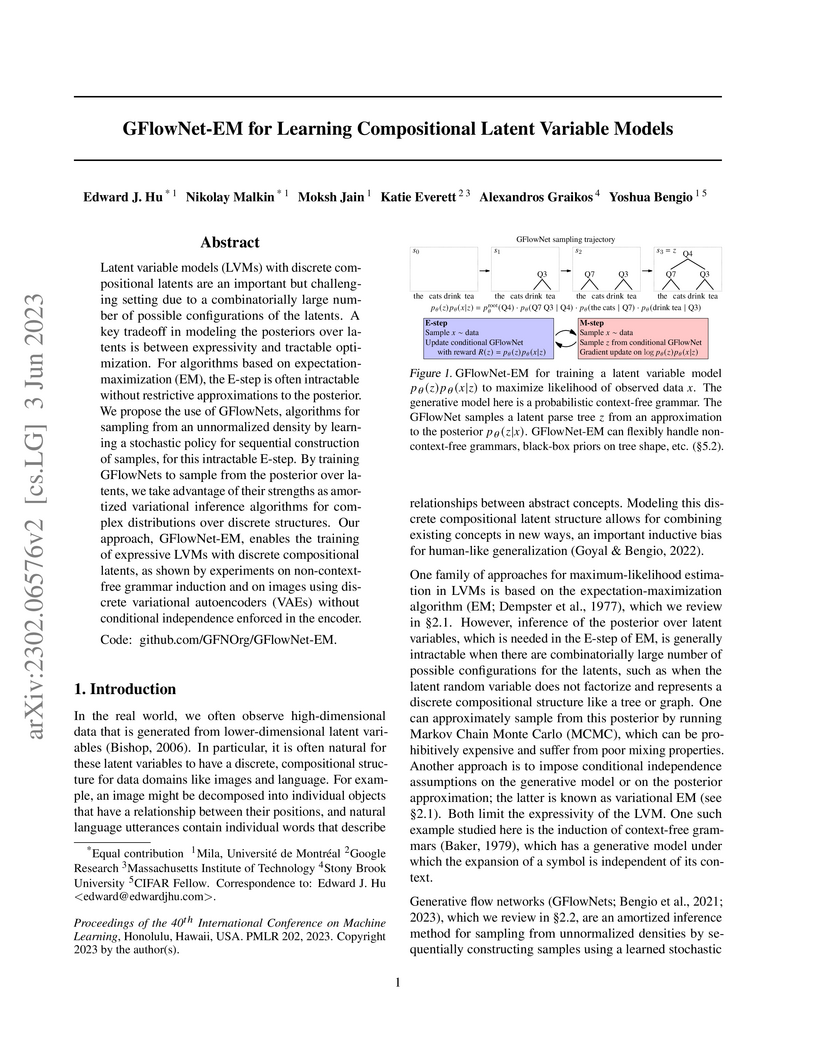

GFlowNet-EM enables maximum-likelihood estimation for latent variable models with discrete, compositional latents by leveraging GFlowNets to approximate the intractable E-step of the EM algorithm. The framework achieved improved performance on hierarchical mixture models, grammar induction, and discrete VAEs by learning expressive, non-factorized posterior distributions.

Biological and artificial neural networks develop internal representations

that enable them to perform complex tasks. In artificial networks, the

effectiveness of these models relies on their ability to build task specific

representation, a process influenced by interactions among datasets,

architectures, initialization strategies, and optimization algorithms. Prior

studies highlight that different initializations can place networks in either a

lazy regime, where representations remain static, or a rich/feature learning

regime, where representations evolve dynamically. Here, we examine how

initialization influences learning dynamics in deep linear neural networks,

deriving exact solutions for lambda-balanced initializations-defined by the

relative scale of weights across layers. These solutions capture the evolution

of representations and the Neural Tangent Kernel across the spectrum from the

rich to the lazy regimes. Our findings deepen the theoretical understanding of

the impact of weight initialization on learning regimes, with implications for

continual learning, reversal learning, and transfer learning, relevant to both

neuroscience and practical applications.

PhyloGFN applies Generative Flow Networks to phylogenetic inference, offering an amortized posterior sampler for Bayesian methods that achieves competitive marginal log-likelihood estimates and accurately models the entire tree space. It also provides a principled probabilistic framework for parsimony analysis, identifying optimal trees and learning their distribution.

There are no more papers matching your filters at the moment.