Carnegie Mellon University

Carnegie Mellon UniversityMamba introduces Selective State Space Models (SSMs) to create a linear-time sequence model that matches Transformer performance while scaling linearly with sequence length. It demonstrates competitive quality on language, genomics, and audio tasks, achieving up to 5x higher inference throughput and significantly better long-context generalization than prior models.

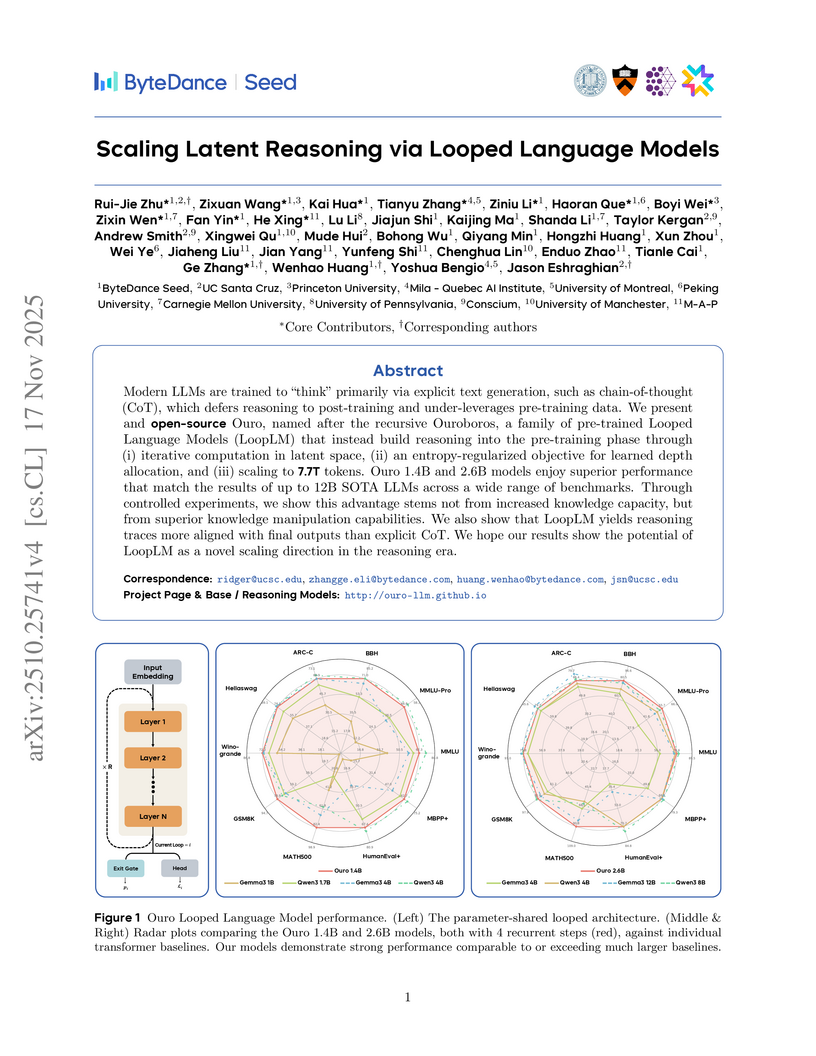

Ouro, a family of Looped Language Models (LoopLMs), embeds iterative computation directly into the pre-training process through parameter reuse, leading to enhanced parameter efficiency and reasoning abilities. These models achieve the performance of much larger non-looped Transformers while demonstrating improved safety and a more causally faithful internal reasoning process.

02 Oct 2025

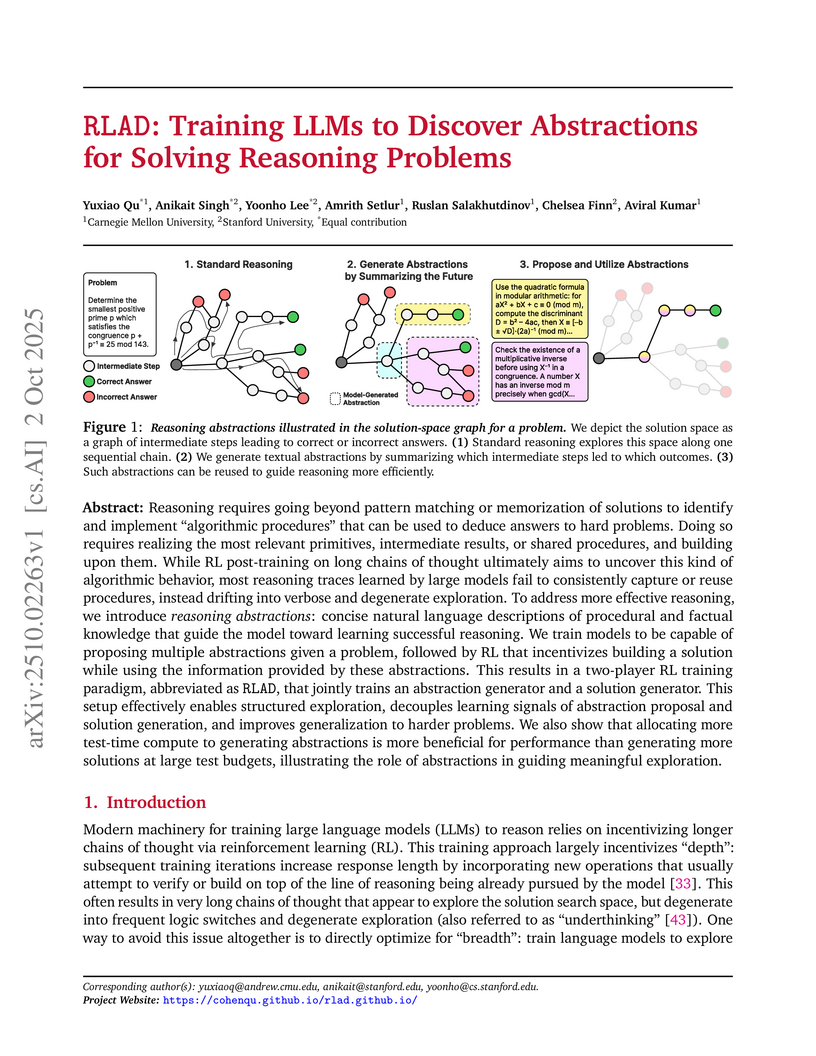

The RLAD framework enables large language models to self-discover and leverage high-level reasoning abstractions, leading to substantial improvements in accuracy and compute efficiency on challenging mathematical reasoning tasks. This approach teaches models to propose and utilize concise procedural and factual knowledge to guide complex problem-solving.

12 Sep 2025

Parallel-R1, a reinforcement learning framework developed by a collaborative team including Tencent AI Lab Seattle, teaches large language models to perform parallel thinking for complex mathematical reasoning tasks. The method effectively addresses cold-start challenges and achieves robust performance gains, including a 42.9% improvement on the AIME25 benchmark by utilizing parallel thinking as a mid-training exploration scaffold.

01 Aug 2025

Princeton AI Lab University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of California, Santa Barbara

University of California, Santa Barbara Carnegie Mellon University

Carnegie Mellon University Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University Tsinghua University

Tsinghua University University of Michigan

University of Michigan The Chinese University of Hong KongThe Hong Kong University of Science and Technology (Guangzhou)

The Chinese University of Hong KongThe Hong Kong University of Science and Technology (Guangzhou) University of California, San DiegoPennsylvania State University

University of California, San DiegoPennsylvania State University The University of Hong Kong

The University of Hong Kong Princeton University

Princeton University University of SydneyOregon State University

University of SydneyOregon State University

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign University of California, Santa Barbara

University of California, Santa Barbara Carnegie Mellon University

Carnegie Mellon University Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University Tsinghua University

Tsinghua University University of Michigan

University of Michigan The Chinese University of Hong KongThe Hong Kong University of Science and Technology (Guangzhou)

The Chinese University of Hong KongThe Hong Kong University of Science and Technology (Guangzhou) University of California, San DiegoPennsylvania State University

University of California, San DiegoPennsylvania State University The University of Hong Kong

The University of Hong Kong Princeton University

Princeton University University of SydneyOregon State University

University of SydneyOregon State UniversityAn extensive international collaboration offers the first systematic review of self-evolving agents, establishing a unified theoretical framework categorized by 'what to evolve,' 'when to evolve,' and 'how to evolve'. The work consolidates diverse research, highlights key challenges, and maps applications, aiming to guide the development of AI systems capable of continuous autonomous improvement.

09 Mar 2024

Semi-supervised datasets are ubiquitous across diverse domains where obtaining fully labeled data is costly or time-consuming. The prevalence of such datasets has consistently driven the demand for new tools and methods that exploit the potential of unlabeled data. Responding to this demand, we introduce semi-supervised U-statistics enhanced by the abundance of unlabeled data, and investigate their statistical properties. We show that the proposed approach is asymptotically Normal and exhibits notable efficiency gains over classical U-statistics by effectively integrating various powerful prediction tools into the framework. To understand the fundamental difficulty of the problem, we derive minimax lower bounds in semi-supervised settings and showcase that our procedure is semi-parametrically efficient under regularity conditions. Moreover, tailored to bivariate kernels, we propose a refined approach that outperforms the classical U-statistic across all degeneracy regimes, and demonstrate its optimality properties. Simulation studies are conducted to corroborate our findings and to further demonstrate our framework.

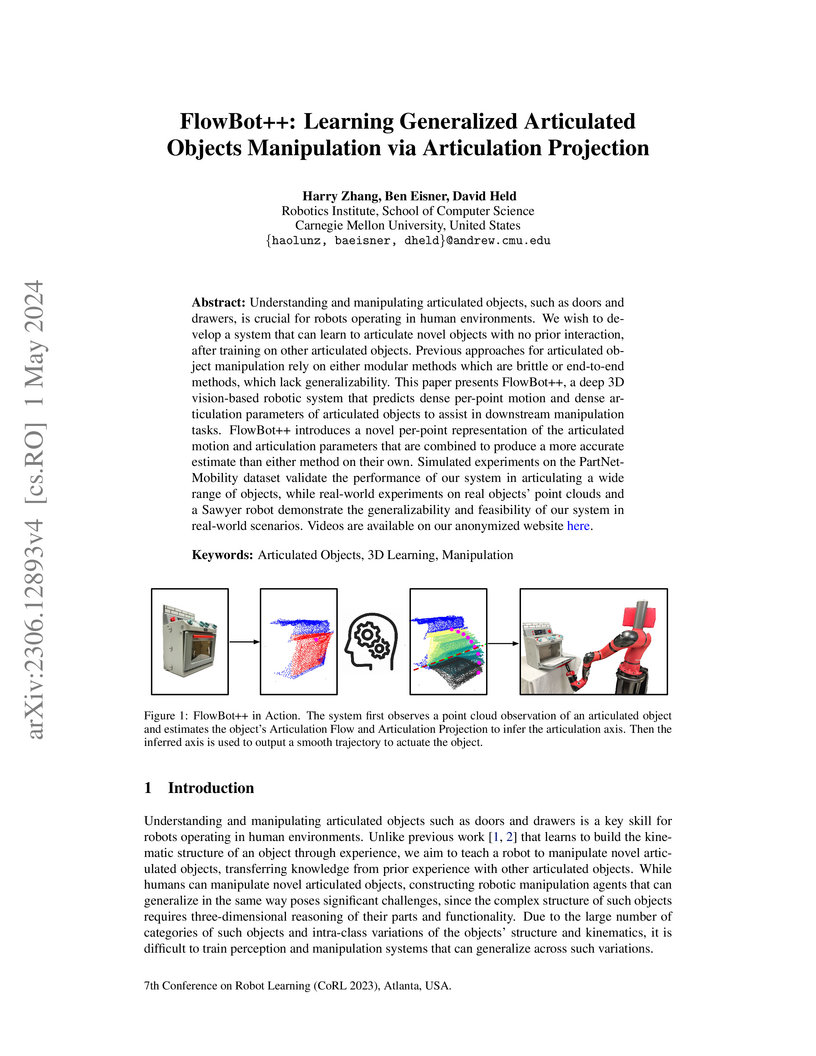

FlowBot++ enables robots to smoothly and efficiently manipulate novel articulated objects in a zero-shot manner by introducing "Articulation Projection" to infer global kinematic parameters from partial observations, complementing existing "Articulation Flow" predictions. This method, developed by researchers at Carnegie Mellon University, significantly reduces execution time and improves trajectory smoothness in both simulation and real-world experiments.

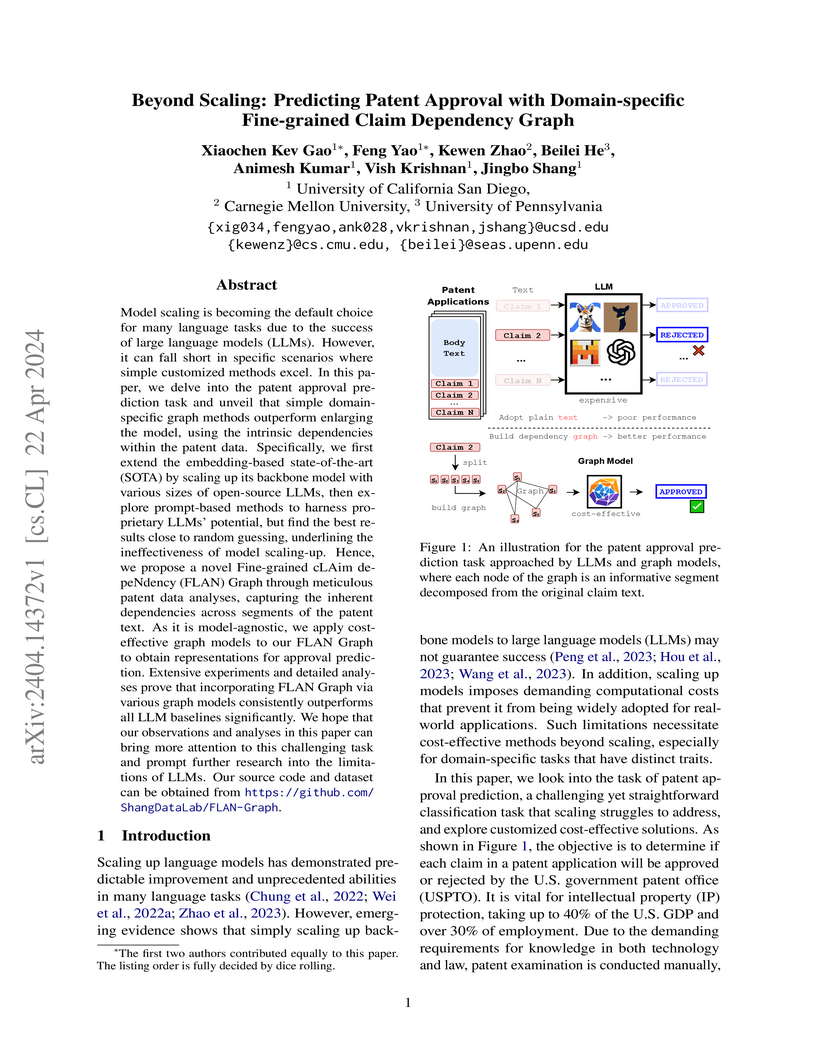

Researchers at UCSD developed the Fine-grained cLAim depeNdency (FLAN) Graph, a domain-specific graph-based method for predicting patent claim approval. This approach, which explicitly models dependencies within and between claims, achieved an AUC of 66.04% and a Macro-F1 of 58.22%, substantially outperforming all tested Large Language Models (LLMs) and the previous BERT-based state-of-the-art.

21 May 2024

In many real-world causal inference applications, the primary outcomes

(labels) are often partially missing, especially if they are expensive or

difficult to collect. If the missingness depends on covariates (i.e.,

missingness is not completely at random), analyses based on fully observed

samples alone may be biased. Incorporating surrogates, which are fully observed

post-treatment variables related to the primary outcome, can improve estimation

in this case. In this paper, we study the role of surrogates in estimating

continuous treatment effects and propose a doubly robust method to efficiently

incorporate surrogates in the analysis, which uses both labeled and unlabeled

data and does not suffer from the above selection bias problem. Importantly, we

establish the asymptotic normality of the proposed estimator and show possible

improvements on the variance compared with methods that solely use labeled

data. Extensive simulations show our methods enjoy appealing empirical

performance.

Researchers from Meta Reality Labs and Carnegie Mellon University developed MapAnything, a transformer-based model that unifies over 12 3D reconstruction tasks by directly predicting metric 3D scene geometry and camera parameters from diverse, heterogeneous inputs. This single feed-forward model achieves state-of-the-art or comparable performance across tasks such as multi-view dense reconstruction, monocular depth estimation, and camera localization.

Researchers from MIT, Meta AI, CMU, and NVIDIA developed StreamingLLM, a framework enabling Large Language Models to efficiently process infinitely long input sequences without fine-tuning. This is achieved by leveraging an "attention sink" phenomenon where LLMs disproportionately attend to initial tokens, allowing the model to maintain stable perplexity and achieve up to a 22.2x speedup in decoding latency.

13 Oct 2025

Researchers from ByteDance Seed, Carnegie Mellon University, and Stanford University developed Context-Folding, a framework allowing Large Language Model (LLM) agents to actively manage their context window for long-horizon tasks. Leveraging a reinforcement learning algorithm called FoldGRPO, the agent learns to dynamically branch for subtasks and condense their trajectories, achieving over 90% context compression and outperforming baseline agents on deep research and agentic software engineering benchmarks.

KAIST

KAIST University of Washington

University of Washington University of Toronto

University of Toronto Carnegie Mellon University

Carnegie Mellon University Université de Montréal

Université de Montréal New York University

New York University University of Chicago

University of Chicago UC Berkeley

UC Berkeley University of Oxford

University of Oxford Stanford University

Stanford University University of Michigan

University of Michigan Cornell University

Cornell University Nanyang Technological UniversityVector InstituteLG AI Research

Nanyang Technological UniversityVector InstituteLG AI Research MIT

MIT HKUSTUniversity of TübingenHong Kong Baptist University

HKUSTUniversity of TübingenHong Kong Baptist University University of California, Santa CruzCenter for AI SafetyGray Swan AIBeneficial AI ResearchConjectureLawZeroUniversity of Wisconsin

–MadisonMorph LabsInstitute for Applied PsychometricsCSER

University of California, Santa CruzCenter for AI SafetyGray Swan AIBeneficial AI ResearchConjectureLawZeroUniversity of Wisconsin

–MadisonMorph LabsInstitute for Applied PsychometricsCSERThe lack of a concrete definition for Artificial General Intelligence (AGI) obscures the gap between today's specialized AI and human-level cognition. This paper introduces a quantifiable framework to address this, defining AGI as matching the cognitive versatility and proficiency of a well-educated adult. To operationalize this, we ground our methodology in Cattell-Horn-Carroll theory, the most empirically validated model of human cognition. The framework dissects general intelligence into ten core cognitive domains-including reasoning, memory, and perception-and adapts established human psychometric batteries to evaluate AI systems. Application of this framework reveals a highly "jagged" cognitive profile in contemporary models. While proficient in knowledge-intensive domains, current AI systems have critical deficits in foundational cognitive machinery, particularly long-term memory storage. The resulting AGI scores (e.g., GPT-4 at 27%, GPT-5 at 57%) concretely quantify both rapid progress and the substantial gap remaining before AGI.

This paper systematically studies using powerful language models as automated judges for evaluating other LLMs, introducing the MT-bench and Chatbot Arena benchmarks for human-preference-aligned assessment. It demonstrates that GPT-4, when used as a judge, exhibits high agreement with human evaluations, provides insights into LLM judge biases, and advocates for a hybrid evaluation framework to holistically measure LLM capabilities and human alignment.

PAM introduces a novel, reference-free audio quality assessment metric that leverages Audio-Language Models and a two-prompt antonym strategy to effectively correlate with human perception across diverse audio generation and processing tasks. The metric demonstrates robust generalization, particularly in out-of-domain scenarios, without requiring task-specific training.

The Structured State Space Duality (SSD) framework establishes a mathematical connection between Transformers and Structured State Space Models (SSMs) through the lens of structured matrices. This framework enables the development of the SSD algorithm for efficient SSM computation and the Mamba-2 architecture, which achieves better perplexity on language modeling tasks with fewer FLOPs compared to Mamba and Transformer++ models.

This research from Carnegie Mellon University and Google DeepMind introduces an automated method, Greedy Coordinate Gradient (GCG), to generate universal and transferable adversarial attacks that bypass the safety alignments of Large Language Models. The attacks achieve high success rates against various LLMs, including commercial black-box models like GPT-3.5, GPT-4, and PaLM-2, by compelling them to generate objectionable content.

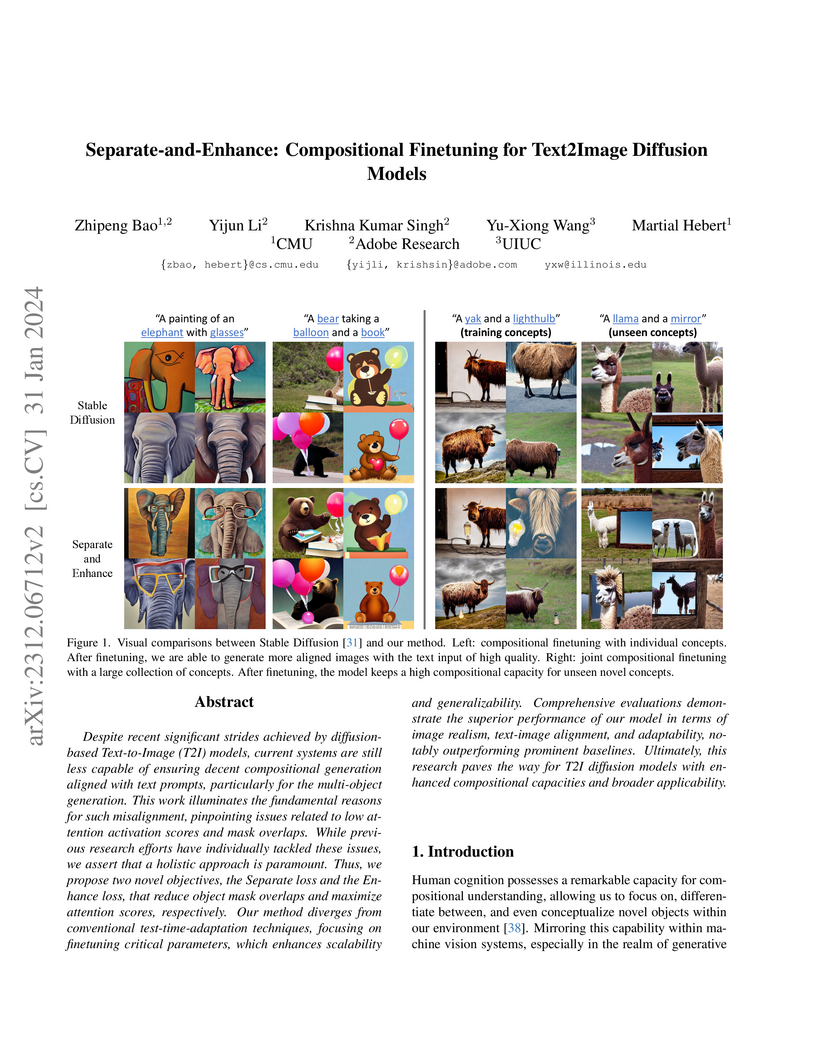

Despite recent significant strides achieved by diffusion-based Text-to-Image (T2I) models, current systems are still less capable of ensuring decent compositional generation aligned with text prompts, particularly for the multi-object generation. This work illuminates the fundamental reasons for such misalignment, pinpointing issues related to low attention activation scores and mask overlaps. While previous research efforts have individually tackled these issues, we assert that a holistic approach is paramount. Thus, we propose two novel objectives, the Separate loss and the Enhance loss, that reduce object mask overlaps and maximize attention scores, respectively. Our method diverges from conventional test-time-adaptation techniques, focusing on finetuning critical parameters, which enhances scalability and generalizability. Comprehensive evaluations demonstrate the superior performance of our model in terms of image realism, text-image alignment, and adaptability, notably outperforming prominent baselines. Ultimately, this research paves the way for T2I diffusion models with enhanced compositional capacities and broader applicability.

31 Jan 2024

In some high-dimensional and semiparametric inference problems involving two

populations, the parameter of interest can be characterized by two-sample

U-statistics involving some nuisance parameters. In this work we first extend

the framework of one-step estimation with cross-fitting to two-sample

U-statistics, showing that using an orthogonalized influence function can

effectively remove the first order bias, resulting in asymptotically normal

estimates of the parameter of interest. As an example, we apply this method and

theory to the problem of testing two-sample conditional distributions, also

known as strong ignorability. When combined with a conformal-based rank-sum

test, we discover that the nuisance parameters can be divided into two

categories, where in one category the nuisance estimation accuracy does not

affect the testing validity, whereas in the other the nuisance estimation

accuracy must satisfy the usual requirement for the test to be valid. We

believe these findings provide further insights into and enhance the conformal

inference toolbox.

03 Mar 2025

This paper introduces the Mixed Aggregate Preference Logit (MAPL, pronounced

"maple'') model, a novel class of discrete choice models that leverages machine

learning to model unobserved heterogeneity in discrete choice analysis. The

traditional mixed logit model (also known as "random parameters logit'')

parameterizes preference heterogeneity through assumptions about

feature-specific heterogeneity distributions. These parameters are also

typically assumed to be linearly added in a random utility (or random regret)

model. MAPL models relax these assumptions by instead directly relating model

inputs to parameters of alternative-specific distributions of aggregate

preference heterogeneity, with no feature-level assumptions required. MAPL

models eliminate the need to make any assumption about the functional form of

the latent decision model, freeing modelers from potential misspecification

errors. In a simulation experiment, we demonstrate that a single MAPL model

specification is capable of correctly modeling multiple different

data-generating processes with different forms of utility and heterogeneity

specifications. MAPL models advance machine-learning-based choice models by

accounting for unobserved heterogeneity. Further, MAPL models can be leveraged

by traditional choice modelers as a diagnostic tool for identifying utility and

heterogeneity misspecification.

There are no more papers matching your filters at the moment.