Ask or search anything...

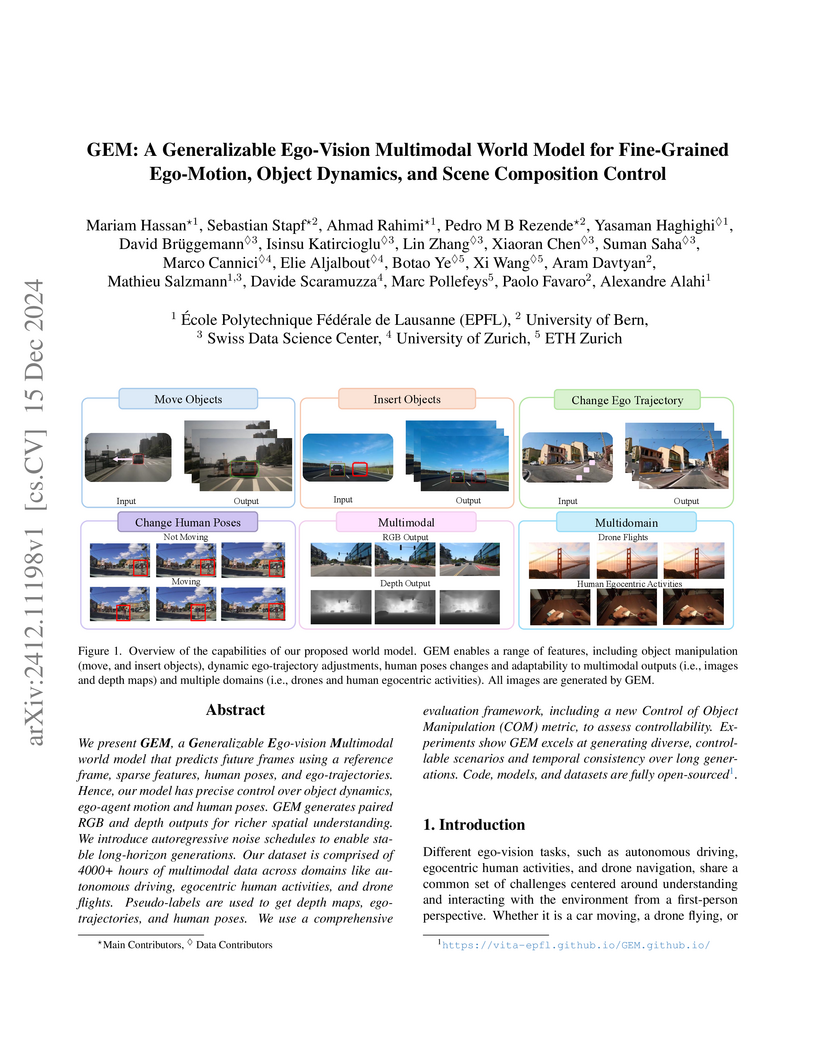

This paper introduces GEM, a generalizable world model for controlling ego-vision video generation with multiple modalities and domains.

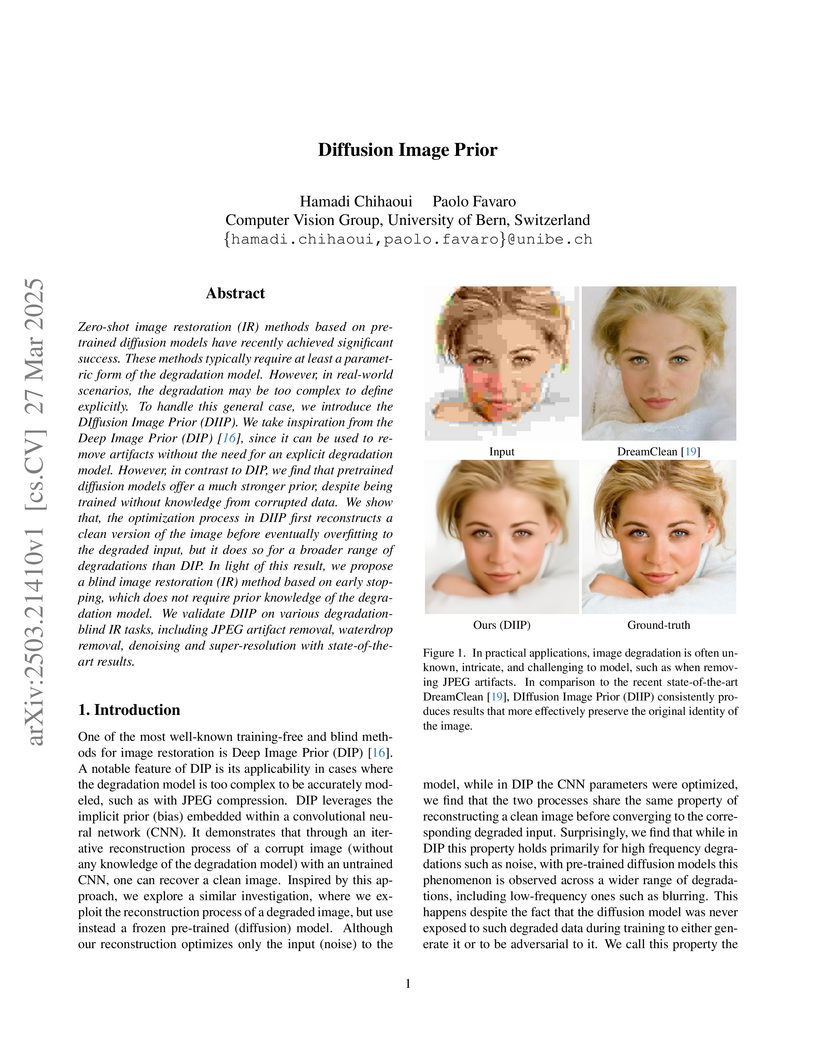

View blogDIffusion Image Prior (DIIP) offers a training-free and fully blind image restoration method by optimizing the input noise to a frozen pre-trained diffusion model. This approach robustly handles diverse degradations, outperforming prior blind techniques with improvements such as a 1.32 PSNR gain over DreamClean for denoising and a 1.37 PSNR gain for JPEG de-artifacting on CelebA.

View blogThis research analyzes encoder-only transformer dynamics at inference time using a mean-field model, identifying three distinct dynamical phases: alignment, heat, and pairing. The work mathematically characterizes how token representations evolve through these phases on separated timescales, offering a unified explanation for progressive representation refinement that is directly applicable to modern LLM scaling strategies.

View blogA deep learning framework, EAGLE, was developed to efficiently analyze whole slide pathology images, achieving an average AUROC of 0.742 across 31 tasks while processing images over 99% faster than previous methods by selectively focusing on critical regions.

View blogVideo Diffusion Models (VDMs) demonstrate emergent few-shot learning capabilities, capable of adapting to diverse visual tasks from geometric transformations to abstract reasoning using minimal examples. Researchers from the University of Bern and EPFL show that by reformulating tasks as visual transitions and employing parameter-efficient fine-tuning, VDMs leverage their implicit understanding of the visual world to achieve strong performance.

View blogExoJAX2 is presented as an updated differentiable spectral modeling framework for exoplanet and substellar atmospheres, enabling efficient and memory-optimized high-resolution emission, transmission, and reflection spectroscopy. It successfully performs Bayesian retrievals on JWST and ground-based data, yielding detailed atmospheric compositions and properties without requiring data binning.

View blog ETH Zurich

ETH Zurich

EPFL

EPFL

University of California, San Diego

University of California, San Diego

University of Washington

University of Washington Imperial College London

Imperial College London

UCLA

UCLA

CNRS

CNRS University of California, Santa Barbara

University of California, Santa Barbara

Stanford University

Stanford University

Tohoku University

Tohoku University

ETH Zürich

ETH Zürich

University of Texas at Austin

University of Texas at Austin