ENSIIE

Science and technology have a growing need for effective mechanisms that

ensure reliable, controlled performance from black-box machine learning

algorithms. These performance guarantees should ideally hold conditionally on

the input-that is the performance guarantees should hold, at least

approximately, no matter what the input. However, beyond stylized discrete

groupings such as ethnicity and gender, the right notion of conditioning can be

difficult to define. For example, in problems such as image segmentation, we

want the uncertainty to reflect the intrinsic difficulty of the test sample,

but this may be difficult to capture via a conditioning event. Building on the

recent work of Gibbs et al. [2023], we propose a methodology for achieving

approximate conditional control of statistical risks-the expected value of loss

functions-by adapting to the difficulty of test samples. Our framework goes

beyond traditional conditional risk control based on user-provided conditioning

events to the algorithmic, data-driven determination of appropriate function

classes for conditioning. We apply this framework to various regression and

segmentation tasks, enabling finer-grained control over model performance and

demonstrating that by continuously monitoring and adjusting these parameters,

we can achieve superior precision compared to conventional risk-control

methods.

Progress in machine learning (ML) comes with a cost to the environment, given that training ML models requires significant computational resources, energy and materials. In the present article, we aim to quantify the carbon footprint of BLOOM, a 176-billion parameter language model, across its life cycle. We estimate that BLOOM's final training emitted approximately 24.7 tonnes of~\carboneq~if we consider only the dynamic power consumption, and 50.5 tonnes if we account for all processes ranging from equipment manufacturing to energy-based operational consumption. We also study the energy requirements and carbon emissions of its deployment for inference via an API endpoint receiving user queries in real-time. We conclude with a discussion regarding the difficulty of precisely estimating the carbon footprint of ML models and future research directions that can contribute towards improving carbon emissions reporting.

We study optimal liquidation strategies under partial information for a single asset within a finite time horizon. We propose a model tailored for high-frequency trading, capturing price formation driven solely by order flow through mutually stimulating marked Hawkes processes. The model assumes a limit order book framework, accounting for both permanent price impact and transient market impact. Importantly, we incorporate liquidity as a hidden Markov process, influencing the intensities of the point processes governing bid and ask prices. Within this setting, we formulate the optimal liquidation problem as an impulse control problem. We elucidate the dynamics of the hidden Markov chain's filter and determine the related normalized filtering equations. We then express the value function as the limit of a sequence of auxiliary continuous functions, defined recursively. This characterization enables the use of a dynamic programming principle for optimal stopping problems and the determination of an optimal strategy. It also facilitates the development of an implementable algorithm to approximate the original liquidation problem. We enrich our analysis with numerical results and visualizations of candidate optimal strategies.

The rapid adoption of Large Language Models (LLMs) has exposed critical

security and ethical vulnerabilities, particularly their susceptibility to

adversarial manipulations. This paper introduces QROA, a novel black-box

jailbreak method designed to identify adversarial suffixes that can bypass LLM

alignment safeguards when appended to a malicious instruction. Unlike existing

suffix-based jailbreak approaches, QROA does not require access to the model's

logit or any other internal information. It also eliminates reliance on

human-crafted templates, operating solely through the standard query-response

interface of LLMs. By framing the attack as an optimization bandit problem,

QROA employs a surrogate model and token level optimization to efficiently

explore suffix variations. Furthermore, we propose QROA-UNV, an extension that

identifies universal adversarial suffixes for individual models, enabling

one-query jailbreaks across a wide range of instructions. Testing on multiple

models demonstrates Attack Success Rate (ASR) greater than 80\%. These findings

highlight critical vulnerabilities, emphasize the need for advanced defenses,

and contribute to the development of more robust safety evaluations for secure

AI deployment. The code is made public on the following link:

this https URL

Volume imbalance in a limit order book is often considered as a reliable indicator for predicting future price moves. In this work, we seek to analyse the nuances of the relationship between prices and volume imbalance. To this end, we study a market-making problem which allows us to view the imbalance as an optimal response to price moves. In our model, there is an underlying efficient price driving the mid-price, which follows the model with uncertainty zones. A single market maker knows the underlying efficient price and consequently the probability of a mid-price jump in the future. She controls the volumes she quotes at the best bid and ask prices. Solving her optimization problem allows us to understand endogenously the price-imbalance connection and to confirm in particular that it is optimal to quote a predictive imbalance. Our model can also be used by a platform to select a suitable tick size, which is known to be a crucial topic in financial regulation. The value function of the market maker's control problem can be viewed as a family of functions, indexed by the level of the market maker's inventory, solving a coupled system of PDEs. We show existence and uniqueness of classical solutions to this coupled system of equations. In the case of a continuous inventory, we also prove uniqueness of the market maker's optimal control policy.

This work studies the relationship between Contrastive Learning and Domain Adaptation from a theoretical perspective. The two standard contrastive losses, NT-Xent loss (Self-supervised) and Supervised Contrastive loss, are related to the Class-wise Mean Maximum Discrepancy (CMMD), a dissimilarity measure widely used for Domain Adaptation. Our work shows that minimizing the contrastive losses decreases the CMMD and simultaneously improves class-separability, laying the theoretical groundwork for the use of Contrastive Learning in the context of Domain Adaptation. Due to the relevance of Domain Adaptation in medical imaging, we focused the experiments on mammography images. Extensive experiments on three mammography datasets - synthetic patches, clinical (real) patches, and clinical (real) images - show improved Domain Adaptation, class-separability, and classification performance, when minimizing the Supervised Contrastive loss.

We present a novel instance-based approach to handle regression tasks in the context of supervised domain adaptation under an assumption of covariate shift. The approach developed in this paper is based on the assumption that the task on the target domain can be efficiently learned by adequately reweighting the source instances during training phase. We introduce a novel formulation of the optimization objective for domain adaptation which relies on a discrepancy distance characterizing the difference between domains according to a specific task and a class of hypotheses. To solve this problem, we develop an adversarial network algorithm which learns both the source weighting scheme and the task in one feed-forward gradient descent. We provide numerical evidence of the relevance of the method on public data sets for regression domain adaptation through reproducible experiments.

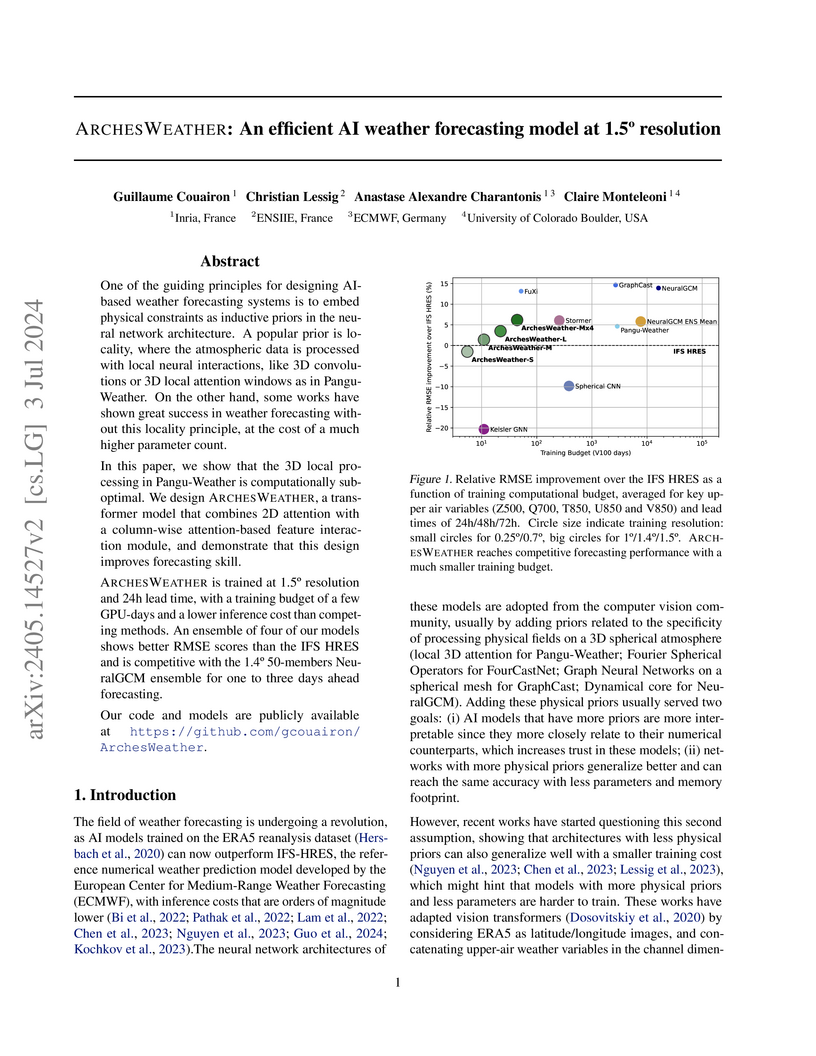

One of the guiding principles for designing AI-based weather forecasting

systems is to embed physical constraints as inductive priors in the neural

network architecture. A popular prior is locality, where the atmospheric data

is processed with local neural interactions, like 3D convolutions or 3D local

attention windows as in Pangu-Weather. On the other hand, some works have shown

great success in weather forecasting without this locality principle, at the

cost of a much higher parameter count. In this paper, we show that the 3D local

processing in Pangu-Weather is computationally sub-optimal. We design

ArchesWeather, a transformer model that combines 2D attention with a

column-wise attention-based feature interaction module, and demonstrate that

this design improves forecasting skill.

ArchesWeather is trained at 1.5{\deg} resolution and 24h lead time, with a

training budget of a few GPU-days and a lower inference cost than competing

methods. An ensemble of four of our models shows better RMSE scores than the

IFS HRES and is competitive with the 1.4{\deg} 50-members NeuralGCM ensemble

for one to three days ahead forecasting. Our code and models are publicly

available at https://github.com/gcouairon/ArchesWeather.

Researchers at Quantmetry and Universit

Paris-Saclay developed a Robust Principal Component Analysis (RPCA) framework for anomaly detection and data imputation in seasonal time series, integrating temporal regularizations and an adaptive online processing method. The online RPCA with a moving window demonstrated better results for both reconstruction errors and precision scores for anomaly detection on non-stationary real-world data.

14 Oct 2025

We consider the multiple quantile hedging problem, which is a class of partial hedging problems containing as special examples the quantile hedging problem (F{ö}llmer \& Leukert 1999) and the PnL matching problem (introduced in Bouchard \& Vu 2012). In complete non-linear markets, we show that the problem can be reformulated as a kind of Monge optimal transport problem. Using this observation, we introduce a Kantorovitch version of the problem and prove that the value of both problems coincide. In the linear case, we thus obtain that the multiple quantile hedging problem can be seen as a semi-discrete optimal transport problem, for which we further introduce the dual problem. We then prove that there is no duality gap, allowing us to design a numerical method based on SGA algorithms to compute the multiple quantile hedging price.

Federated Learning (FL) is gaining traction as a learning paradigm for training Machine Learning (ML) models in a decentralized way. Batch Normalization (BN) is ubiquitous in Deep Neural Networks (DNN), as it improves convergence and generalization. However, BN has been reported to hinder performance of DNNs in heterogeneous FL. Recently, the FedTAN algorithm has been proposed to mitigate the effect of heterogeneity on BN, by aggregating BN statistics and gradients from all the clients. However, it has a high communication cost, that increases linearly with the depth of the DNN. SCAFFOLD is a variance reduction algorithm, that estimates and corrects the client drift in a communication-efficient manner. Despite its promising results in heterogeneous FL settings, it has been reported to underperform for models with BN. In this work, we seek to revive SCAFFOLD, and more generally variance reduction, as an efficient way of training DNN with BN in heterogeneous FL. We introduce a unified theoretical framework for analyzing the convergence of variance reduction algorithms in the BN-DNN setting, inspired of by the work of Wang et al. 2023, and show that SCAFFOLD is unable to remove the bias introduced by BN. We thus propose the BN-SCAFFOLD algorithm, which extends the client drift correction of SCAFFOLD to BN statistics. We prove convergence using the aforementioned framework and validate the theoretical results with experiments on MNIST and CIFAR-10. BN-SCAFFOLD equals the performance of FedTAN, without its high communication cost, outperforming Federated Averaging (FedAvg), SCAFFOLD, and other FL algorithms designed to mitigate BN heterogeneity.

12 May 2020

The continuous and rapid growth of highly interconnected datasets, which are both voluminous and complex, calls for the development of adequate processing and analytical techniques. One method for condensing and simplifying such datasets is graph summarization. It denotes a series of application-specific algorithms designed to transform graphs into more compact representations while preserving structural patterns, query answers, or specific property distributions. As this problem is common to several areas studying graph topologies, different approaches, such as clustering, compression, sampling, or influence detection, have been proposed, primarily based on statistical and optimization methods. The focus of our chapter is to pinpoint the main graph summarization methods, but especially to focus on the most recent approaches and novel research trends on this topic, not yet covered by previous surveys.

The compute requirements associated with training Artificial Intelligence (AI) models have increased exponentially over time. Optimisation strategies aim to reduce the energy consumption and environmental impacts associated with AI, possibly shifting impacts from the use phase to the manufacturing phase in the life-cycle of hardware. This paper investigates the evolution of individual graphics cards production impacts and of the environmental impacts associated with training Machine Learning (ML) models over time. We collect information on graphics cards used to train ML models and released between 2013 and 2023. We assess the environmental impacts associated with the production of each card to visualize the trends on the same period. Then, using information on notable AI systems from the Epoch AI dataset we assess the environmental impacts associated with training each system. The environmental impacts of graphics cards production have increased continuously. The energy consumption and environmental impacts associated with training models have increased exponentially, even when considering reduction strategies such as location shifting to places with less carbon intensive electricity mixes. These results suggest that current impact reduction strategies cannot curb the growth in the environmental impacts of AI. This is consistent with rebound effect, where the efficiency increases fuel the creation of even larger models thereby cancelling the potential impact reduction. Furthermore, these results highlight the importance of considering the impacts of hardware over the entire life-cycle rather than the sole usage phase in order to avoid impact shifting. The environmental impact of AI cannot be reduced without reducing AI activities as well as increasing efficiency.

Designing new industrial materials with desired properties can be very

expensive and time consuming. The main difficulty is to generate compounds that

correspond to realistic materials. Indeed, the description of compounds as

vectors of components' proportions is characterized by discrete features and a

severe sparsity. Furthermore, traditional generative model validation processes

as visual verification, FID and Inception scores are tailored for images and

cannot then be used as such in this context. To tackle these issues, we develop

an original Binded-VAE model dedicated to the generation of discrete datasets

with high sparsity. We validate the model with novel metrics adapted to the

problem of compounds generation. We show on a real issue of rubber compound

design that the proposed approach outperforms the standard generative models

which opens new perspectives for material design optimization.

17 Nov 2020

The Adjusted Rand Index (ARI) is arguably one of the most popular measures

for cluster comparison. The adjustment of the ARI is based on a

hypergeometric distribution assumption which is unsatisfying from a modeling

perspective as (i) it is not appropriate when the two clusterings are

dependent, (ii) it forces the size of the clusters, and (iii) it ignores

randomness of the sampling. In this work, we present a new "modified" version

of the Rand Index. First, we redefine the MRI by only counting the pairs

consistent by similarity and ignoring the pairs consistent by difference,

increasing the interpretability of the score. Second, we base the adjusted

version, MARI, on a multinomial distribution instead of a hypergeometric

distribution. The multinomial model is advantageous as it does not force the

size of the clusters, properly models randomness, and is easily extended to the

dependant case. We show that the ARI is biased under the multinomial model

and that the difference between the ARI and MARI can be large for small n

but essentially vanish for large n, where n is the number of individuals.

Finally, we provide an efficient algorithm to compute all these quantities

((A)RI and M(A)RI) by relying on a sparse representation of the contingency

table in our \texttt{aricode} package. The space and time complexity is linear

in the number of samples and importantly does not depend on the number of

clusters as we do not explicitly compute the contingency table.

As deep generative models proliferate across the AI landscape, industrial practitioners still face critical yet unanswered questions about which deep generative models best suit complex manufacturing design tasks. This work addresses this question through a complete study of five representative models (Variational Autoencoder, Generative Adversarial Network, multimodal Variational Autoencoder, Denoising Diffusion Probabilistic Model, and Multinomial Diffusion Model) on industrial tire architecture generation. Our evaluation spans three key industrial scenarios: (i) unconditional generation of complete multi-component designs, (ii) component-conditioned generation (reconstructing architectures from partial observations), and (iii) dimension-constrained generation (creating designs that satisfy specific dimensional requirements). To enable discrete diffusion models to handle conditional scenarios, we introduce categorical inpainting, a mask-aware reverse diffusion process that preserves known labels without requiring additional training. Our evaluation employs geometry-aware metrics specifically calibrated for industrial requirements, quantifying spatial coherence, component interaction, structural connectivity, and perceptual fidelity. Our findings reveal that diffusion models achieve the strongest overall performance; a masking-trained VAE nonetheless outperforms the multimodal variant MMVAE\textsuperscript{+} on nearly all component-conditioned metrics, and within the diffusion family MDM leads in-distribution whereas DDPM generalises better to out-of-distribution dimensional constraints.

08 Jul 2023

Property graphs have reached a high level of maturity, witnessed by multiple robust graph database systems as well as the ongoing ISO standardization effort aiming at creating a new standard Graph Query Language (GQL). Yet, despite documented demand, schema support is limited both in existing systems and in the first version of the GQL Standard. It is anticipated that the second version of the GQL Standard will include a rich DDL. Aiming to inspire the development of GQL and enhance the capabilities of graph database systems, we propose PG-Schema, a simple yet powerful formalism for specifying property graph schemas. It features PG-Types with flexible type definitions supporting multi-inheritance, as well as expressive constraints based on the recently proposed PG-Keys formalism. We provide the formal syntax and semantics of PG-Schema, which meet principled design requirements grounded in contemporary property graph management scenarios, and offer a detailed comparison of its features with those of existing schema languages and graph database systems.

Physics-Informed Neural Networks (PINNs) have gained considerable interest in diverse engineering domains thanks to their capacity to integrate physical laws into deep learning models. Recently, geometry-aware PINN-based approaches that employ the strong form of underlying physical system equations have been developed with the aim of integrating geometric information into PINNs. Despite ongoing research, the assessment of PINNs in problems with various geometries remains an active area of investigation. In this work, we introduce a novel physics-informed framework named the Geometry-Aware Deep Energy Method (GADEM) for solving structural mechanics problems on different geometries. As the weak form of the physical system equation (or the energy-based approach) has demonstrated clear advantages compared to the strong form for solving solid mechanics problems, GADEM employs the weak form and aims to infer the solution on multiple shapes of geometries. Integrating a geometry-aware framework into an energy-based method results in an effective physics-informed deep learning model in terms of accuracy and computational cost. Different ways to represent the geometric information and to encode the geometric latent vectors are investigated in this work. We introduce a loss function of GADEM which is minimized based on the potential energy of all considered geometries. An adaptive learning method is also employed for the sampling of collocation points to enhance the performance of GADEM. We present some applications of GADEM to solve solid mechanics problems, including a loading simulation of a toy tire involving contact mechanics and large deformation hyperelasticity. The numerical results of this work demonstrate the remarkable capability of GADEM to infer the solution on various and new shapes of geometries using only one trained model.

Recent advancements in multimodal Variational AutoEncoders (VAEs) have

highlighted their potential for modeling complex data from multiple modalities.

However, many existing approaches use relatively straightforward aggregating

schemes that may not fully capture the complex dynamics present between

different modalities. This work introduces a novel multimodal VAE that

incorporates a Markov Random Field (MRF) into both the prior and posterior

distributions. This integration aims to capture complex intermodal interactions

more effectively. Unlike previous models, our approach is specifically designed

to model and leverage the intricacies of these relationships, enabling a more

faithful representation of multimodal data. Our experiments demonstrate that

our model performs competitively on the standard PolyMNIST dataset and shows

superior performance in managing complex intermodal dependencies in a specially

designed synthetic dataset, intended to test intricate relationships.

28 Aug 2024

In this paper we revisit Burnett (2021) \& Burnett and Williams (2021)'s

notion of hedging valuation adjustment (HVA), originally intended to deal with

dynamic hedging frictions such as transaction costs, in the direction of model

risk. The corresponding HVA reconciles a global fair valuation model with the

local models used by the different desks of the bank. Model risk and dynamic

hedging frictions indeed deserve a reserve, but a risk-adjusted one, so not

only an HVA, but also a contribution to the KVA of the bank. The orders of

magnitude of the effects involved suggest that local models should not so much

be managed via reserves, as excluded altogether.

There are no more papers matching your filters at the moment.