Fraunhofer IESE

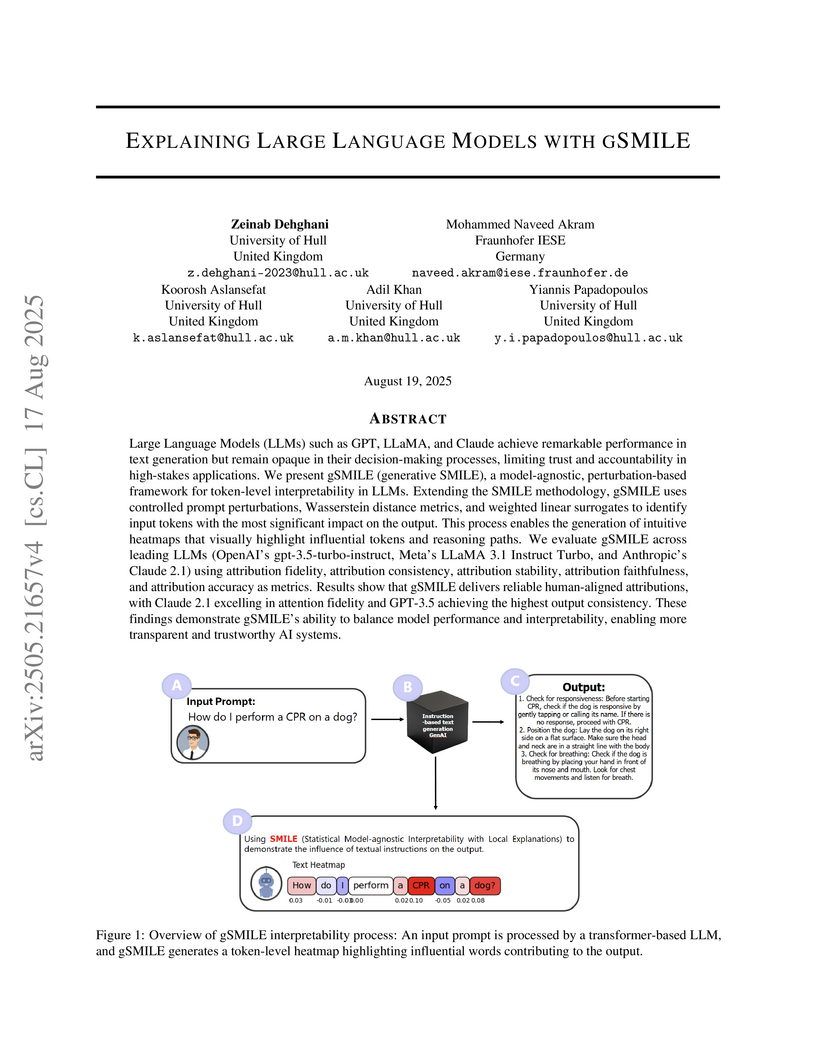

gSMILE, a model-agnostic framework from the University of Hull, provides token-level explanations for large language models by adapting perturbation-based local linear surrogate modeling. It uses Wasserstein distance to quantify semantic shifts and produces intuitive heatmaps and reasoning diagrams, demonstrating high attribution accuracy, faithfulness, and stability across leading LLMs.
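
The perturbation idea in this summary can be illustrated with a minimal sketch. It is not gSMILE itself: it swaps the weighted linear surrogate for a simpler leave-one-out score, and `toy_model`, its weights, and all function names are hypothetical stand-ins for a real LLM and its output representation. Each token is removed in turn, and the shift in the model output is measured with the one-dimensional Wasserstein distance between equal-size samples.

```python
def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size empirical samples."""
    if len(a) != len(b):
        raise ValueError("samples must have the same size")
    # For equal-size 1-D samples, W1 is the mean gap between sorted values.
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def toy_model(tokens):
    """Hypothetical stand-in for an LLM: maps tokens to a numeric output signature."""
    weights = {"not": 3.0, "good": 2.0}  # assumed values, for illustration only
    total = sum(weights.get(tok, 0.1) for tok in tokens)
    return [total, total * 0.5, total * 0.25]


def leave_one_out_attribution(tokens, model):
    """Score each token by how far the output moves when that token is dropped."""
    base = model(tokens)
    scores = []
    for i, tok in enumerate(tokens):
        perturbed = tokens[:i] + tokens[i + 1:]
        scores.append((tok, wasserstein_1d(base, model(perturbed))))
    return scores


if __name__ == "__main__":
    for tok, score in leave_one_out_attribution(
        ["the", "film", "was", "not", "good"], toy_model
    ):
        print(f"{tok:>5}: {score:.3f}")
```

A heatmap over tokens would then be a rendering of these scores; a surrogate-based method would instead fit a weighted linear model over many random perturbations.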

10 Jul 2025

Researchers from Fraunhofer IESE and the University of Duisburg-Essen investigated developer practices in LLM-assisted software engineering, revealing that abstract requirements must be manually decomposed into concrete programming tasks for effective LLM use. Their qualitative study with 18 practitioners found that successful code generation depends on developers augmenting prompts with specific contextual information like architectural constraints and existing code.

Artificial Intelligence (AI) promises new opportunities across many domains, including agriculture. However, the adoption of AI systems in this sector faces several challenges. System complexity can impede trust, as farmers' livelihoods depend on their decision-making and they may reject opaque or hard-to-understand recommendations. Data privacy concerns also pose a barrier, especially when farmers lack transparency regarding who can access their data and for what purposes.

This paper examines dairy farmers' explainability requirements for technical recommendations and data privacy, along with the influence of socio-demographic factors. Based on a mixed-methods study involving 40 German dairy farmers, we identify five user personas through k-means clustering. Our findings reveal varying requirements, with some farmers preferring little detail while others seek full transparency across different aspects. Age, technology experience, and confidence in using digital systems were found to correlate with these explainability requirements. The resulting user personas offer practical guidance for requirements engineers aiming to tailor digital systems more effectively to the diverse requirements of farmers.

04 Feb 2014

A well-known approach for identifying defect-prone parts of software in order to focus testing is to use different kinds of product metrics such as size or complexity. Although this approach has been evaluated in many contexts, the question remains if there are further opportunities to improve test focusing. One idea is to identify other types of information that may indicate the location of defect-prone software parts. Data from software inspections, in particular, appear to be promising. This kind of data might already lead to software parts that have inherent difficulties or programming challenges, and in consequence might be defect-prone. This article first explains how inspection and product metrics can be used to focus testing activities. Second, we compare selected product and inspection metrics commonly used to predict defect-prone parts (e.g., size and complexity metrics, inspection defect content metrics, and defect density metrics). Based on initial experience from two case studies performed in different environments, the suitability of different metrics for predicting defect-prone parts is illustrated. The studies revealed that inspection defect data seems to be a suitable predictor, and a combination of certain inspection and product metrics led to the best prioritizations in our contexts. In addition, qualitative experience is presented, which substantiates the expected benefit of using inspection results to optimize testing.

Nowadays, systems containing components based on machine learning (ML) methods are becoming more widespread. In order to ensure the intended behavior of a software system, there are standards that define necessary quality aspects of the system and its components (such as ISO/IEC 25010). Due to the different nature of ML, we have to adjust quality aspects or add additional ones (such as trustworthiness) and be very precise about which aspect is really relevant for which object of interest (such as completeness of training data), and how to objectively assess adherence to quality requirements. In this article, we present the construction of a quality model (i.e., evaluation objects, quality aspects, and metrics) for an ML system based on an industrial use case. This quality model enables practitioners to specify and assess quality requirements for such kinds of ML systems objectively. In the future, we want to learn how the term quality differs between different types of ML systems and come up with general guidelines for specifying and assessing qualities of ML systems.

Background: Distributed data-intensive systems are increasingly designed to be only eventually consistent. Persistent data is no longer processed with serialized and transactional access, exposing applications to a range of potential concurrency anomalies that need to be handled by the application itself. Controlling concurrent data access in monolithic systems is already challenging, but the problem is exacerbated in distributed systems. To make matters worse, the software architecture community provides little systematic engineering guidance on this issue. Aims: In this paper, we report on our study of the effectiveness and applicability of the novel design guidelines we are proposing in this regard. Method: We used action research and conducted it in the context of the software architecture design process of a multi-site platform development project. Results: Our hypotheses regarding effectiveness and applicability have been accepted in the context of the study. The initial design guidelines were refined throughout the study. Thus, we also contribute concrete guidelines for architecting distributed data-intensive systems with eventually consistent data. The guidelines are an advancement of Domain-Driven Design and provide additional patterns for the tactical design part. Conclusions: Based on our results, we recommend using the guidelines to architect safe eventually consistent systems. Because of the relevance of distributed data-intensive systems, we will drive this research forward and evaluate it in further domains.

In the future, most companies will be confronted with the topic of Artificial Intelligence (AI) and will have to decide on their strategy in this regard. Currently, a lot of companies are thinking about whether and how AI and the usage of data will impact their business model and what potential use cases could look like. One of the biggest challenges lies in coming up with innovative solution ideas with a clear business value. This requires business competencies on the one hand and technical competencies in AI and data analytics on the other hand. In this article, we present the concept of AI innovation labs and demonstrate a comprehensive framework, from coming up with the right ideas to incrementally implementing and evaluating them regarding their business value and their feasibility based on a company's capabilities. The concept is the result of nine years of working on data-driven innovations with companies from various domains. Furthermore, we share some lessons learned from its practical applications. Even though a lot of technical publications can be found in the literature regarding the development of AI models and many consultancy companies provide corresponding services for building AI innovations, we found very few publications sharing details about what an end-to-end framework could look like.

05 Nov 2013

The allocation of tasks can be seen as a success-critical management activity in distributed development projects. However, such task allocation is still one of the major challenges in global software development due to an insufficient understanding of the criteria that influence task allocation decisions. This article presents a qualitative study aimed at identifying and understanding such criteria that are used in practice. Based on interviews with managers from selected software development organizations, criteria currently applied in industry are identified. One important result is, for instance, that the sourcing strategy and the type of software to be developed have a significant effect on the applied criteria. The article presents the goals, design, and results of the study as well as an overview of related and future work.

02 Dec 2013

Software process models need to be variant-rich, in the sense that they should be systematically customizable to specific project goals and project environments. It is currently very difficult to model Variant-Rich Processes (VRPs) because variability mechanisms are largely missing in modern process modeling languages. Variability mechanisms from other domains, such as programming languages, might be suitable for the representation of variability and could be adapted to the modeling of software processes. Mechanisms from Software Product Line Engineering (SPLE) and concepts from Aspect-Oriented Software Engineering (AOSE) show particular promise when modeling variability. This paper presents an approach that integrates variability concepts from SPLE and AOSE in the design of a VRP approach for the systematic support of tailoring in software processes. This approach has also been implemented in SPEM, resulting in the vSPEM notation. It has been used in a pilot application, which indicates that our approach based on AOSE can make process tailoring easier and more productive.

Over the years, software architecture has become an established discipline, both in academia and industry, and the interest in software architecture documentation has increased. In this context, the improvement of methods, tools, and techniques around architecture documentation is of paramount importance. We conducted a survey with 147 industrial participants (31 from Brazil), analyzing their current problems and future wishes. We identified that Brazilian stakeholders need updated architecture documents with the right information. Finally, the automation of some parts of the documentation will reduce the effort during the creation of the documents. But first, it is necessary to change the culture of the stakeholders. They have to participate actively in the creation of the architecture documents.

Companies dealing with Artificial Intelligence (AI) models in Autonomous Systems (AS) face several problems, such as users' lack of trust in adverse or unknown conditions, gaps between software engineering and AI model development, and operation in a continuously changing operational environment. This work-in-progress paper aims to close the gap between the development and operation of trustworthy AI-based AS by defining an approach that coordinates both activities. We synthesize the main challenges of AI-based AS in industrial settings. We reflect on the research efforts required to overcome these challenges and propose a novel, holistic DevOps approach to put it into practice. We elaborate on four research directions: (a) increased users' trust by monitoring operational AI-based AS and identifying self-adaptation needs in critical situations; (b) integrated agile process for the development and evolution of AI models and AS; (c) continuous deployment of different context-specific instances of AI models in a distributed setting of AS; and (d) holistic DevOps-based lifecycle for AI-based AS.

A typical user interacts with many digital services nowadays, providing these services with their data. As of now, the management of privacy preferences is service-centric: Users must manage their privacy preferences according to the rules of each service provider, meaning that every provider offers its unique mechanisms for users to control their privacy settings. However, managing privacy preferences holistically (i.e., across multiple digital services) is just impractical. In this vision paper, we propose a paradigm shift towards an enriched user-centric approach for cross-service privacy preferences management: the realization of a decentralized data privacy protocol.

The use of generative AI-based coding assistants like ChatGPT and GitHub Copilot is a reality in contemporary software development. Many of these tools are provided as remote APIs. Using third-party APIs raises data privacy and security concerns for client companies, which motivates the use of locally deployed language models. In this study, we explore the trade-off between model accuracy and energy consumption, aiming to provide valuable insights to help developers make informed decisions when selecting a language model. We investigate the performance of 18 families of LLMs in typical software development tasks on two real-world infrastructures, a commodity GPU and a powerful AI-specific GPU. Given that deploying LLMs locally requires powerful infrastructure which might not be affordable for everyone, we consider both full-precision and quantized models. Our findings reveal that employing a big LLM with a higher energy budget does not always translate to significantly improved accuracy. Additionally, quantized versions of large models generally offer better efficiency and accuracy compared to full-precision versions of medium-sized ones. Apart from that, no single model is suitable for all types of software development tasks.

18 Oct 2025

ARCHYTAS aims to design and evaluate non-conventional hardware accelerators, in particular, optoelectronic, volatile and non-volatile processing-in-memory, and neuromorphic, to tackle the power, efficiency, and scalability bottlenecks of AI with an emphasis on defense use cases (e.g., autonomous vehicles, surveillance drones, maritime and space platforms). In this paper, we present the system architecture and software stack that ARCHYTAS will develop to integrate and support those accelerators, as well as the simulation software needed for early prototyping of the full system and its components.

As the use of Artificial Intelligence (AI) components in cyber-physical systems is becoming more common, the need for reliable system architectures arises. While data-driven models excel at perception tasks, model outcomes are usually not dependable enough for safety-critical applications. In this work, we present a timeseries-aware uncertainty wrapper for dependable uncertainty estimates on timeseries data. The uncertainty wrapper is applied in combination with information fusion over successive model predictions in time. The application of the uncertainty wrapper is demonstrated with a traffic sign recognition use case. We show that it is possible to increase model accuracy through information fusion and additionally increase the quality of uncertainty estimates through timeseries-aware input quality features.
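
To make "information fusion over successive model predictions" concrete, here is a minimal sketch that assumes the simplest possible fusion rule, a running majority vote. The abstract does not state which fusion rule the authors use, and the function names and example labels are hypothetical. The uncertainty wrapper itself is not modeled here.

```python
from collections import Counter


def fuse_majority(predictions):
    """Return the most frequent label; ties go to the most recent label."""
    counts = Counter(predictions)
    best = max(counts.values())
    for label in reversed(predictions):
        if counts[label] == best:
            return label


def fused_series(predictions):
    """Fuse each prediction with all earlier ones in the same series."""
    return [fuse_majority(predictions[: i + 1]) for i in range(len(predictions))]


if __name__ == "__main__":
    # Hypothetical per-frame classifier outputs for one approaching traffic sign.
    raw = ["stop", "stop", "yield", "stop"]
    print(fused_series(raw))
```

In this toy series the single misclassified frame is outvoted by the earlier frames, which is the intuition behind accuracy gains from fusing predictions over time.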

Generating context-specific data quality deficits is necessary to experimentally assess data quality of data-driven (artificial intelligence (AI) or machine learning (ML)) applications. In this paper, we present badgers, an extensible open-source Python library to generate data quality deficits (outliers, imbalanced data, drift, etc.) for different modalities (tabular data, time-series, text, etc.). The documentation is accessible at this https URL and the source code at this https URL

27 Mar 2024

Autonomous driving vehicles provide a vast potential for realizing use cases in the on-road and off-road domains. Consequently, remarkable solutions exist for autonomous systems' environmental perception and control. Nevertheless, proof of safety remains an open challenge preventing such machinery from being introduced to markets and deployed in the real world. Traditional approaches for safety assurance of autonomously driving vehicles often lead to underperformance due to conservative safety assumptions that cannot handle the overall complexity. Besides, the more sophisticated safety systems rely on the vehicle's perception systems. However, perception is often unreliable due to uncertainties resulting from disturbances or the lack of context incorporation for data interpretation. Accordingly, this paper illustrates the potential of a modular, self-adaptive autonomy framework with integrated dynamic risk management to overcome the abovementioned drawbacks.

25 Nov 2013

Today's software quality assurance techniques are often applied in isolation. Consequently, synergies resulting from systematically integrating different quality assurance activities are often not exploited. Such combinations promise benefits, such as a reduction in quality assurance effort or higher defect detection rates. The integration of inspection and testing, for instance, can be used to guide testing activities. For example, testing activities can be focused on defect-prone parts based upon inspection results. Existing approaches for predicting defect-prone parts do not make systematic use of the results from inspections. This article gives an overview of an integrated inspection and testing approach, and presents a preliminary case study aiming at verifying a study design for evaluating the approach. First results from this preliminary case study indicate that synergies resulting from the integration of inspection and testing might exist, and show a trend that testing activities could be guided based on inspection results.

27 Jun 2024

The farming domain has seen a tremendous shift towards digital solutions. However, capturing farmers' requirements regarding Digital Farming (DF) technology remains a difficult task due to domain-specific challenges. Farmers form a diverse and international crowd of practitioners who use a common pool of agricultural products and services, which means we can consider the possibility of applying Crowd-based Requirements Engineering (CrowdRE) for DF: CrowdRE4DF. We found that online user feedback in this domain is limited, necessitating a way of capturing user feedback from farmers in situ. Our solution, the Farmers' Voice application, uses speech-to-text, Machine Learning (ML), and Web 2.0 technology. A preliminary evaluation with five farmers showed good technology acceptance, and accurate transcription and ML analysis even in noisy farm settings. Our findings help to drive the development of DF technology through in-situ requirements elicitation.

05 Jul 2024

The pervasive role played by software in virtually all industries has fostered ever-increasing development of applied research in software engineering. In this chapter, we contribute our experience in using the V-Model as a framework for teaching how to conduct applied research in empirical software engineering. The foundational idea of using the V-Model is presented, and guidance for using it to frame the research is provided. Furthermore, we show how the framework has been instantiated throughout nearly two decades of PhD theses done at the University of Kaiserslautern (RPTU Kaiserslautern) in partnership with Fraunhofer IESE, including the most frequent usage patterns, how the different empirical methods fit into the framework, and the lessons we have learned from this experience.
