Ask or search anything...

A framework called Adversarial Tuning enhances Large Language Model defense against jailbreak attacks by generating and learning from worst-case adversarial scenarios, significantly reducing the Attack Success Rate for models like Vicuna-13B to 2.69% from 66.54% on known attacks and demonstrating transferability across various open-source LLMs.

View blogA comprehensive benchmarking framework, JailTrickBench, evaluates jailbreak attacks on Large Language Models by systematically assessing eight key factors influencing attack success and their effectiveness against six distinct defense mechanisms. Researchers at The Hong Kong University of Science and Technology demonstrate varied model robustness across attack configurations and highlight trade-offs between safety and model utility in current defenses.

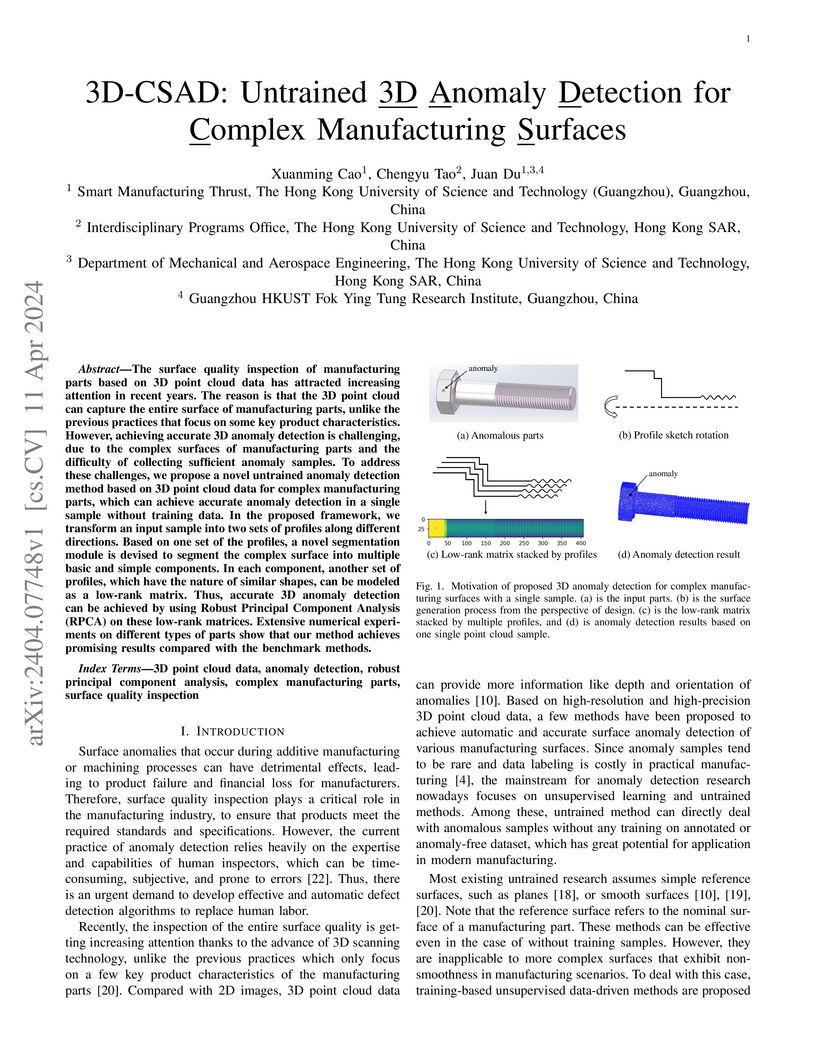

View blog3D-CSAD introduces an untrained method for 3D anomaly detection on complex manufacturing surfaces, utilizing a single point cloud sample. This approach accurately identifies both hole and scratch anomalies on intricate geometries, exhibiting superior performance (e.g., DICE coefficient of 0.9390 for Part1 hole anomalies) and lower false positive rates compared to benchmark methods.

View blog HKUST

HKUST

Chinese Academy of Sciences

Chinese Academy of Sciences

University of Science and Technology of China

University of Science and Technology of China

University of Waterloo

University of Waterloo Huazhong University of Science and Technology

Huazhong University of Science and Technology

Northeastern University

Northeastern University The Hong Kong Polytechnic University

The Hong Kong Polytechnic University