Hunan Normal University

Researchers from Chinese institutions developed the HeunC-Chen method, a novel approach that uses analytic continuation with confluent Heun functions to compute complete quasinormal mode (QNM) spectra of Type-D black holes. The method resolved two decades-old open problems concerning the continuity of QNMs and their relationship to algebraically special frequencies, and delivered a comprehensive, highly accurate QNM dataset at significantly improved computational efficiency.

28 May 2025

This paper offers a comprehensive tutorial detailing the systematic integration of Large AI Models (LAMs) and Agentic AI into future 6G communication networks. It outlines core concepts, a five-component Agentic AI system architecture, and specific design methodologies, demonstrating how these advanced AI paradigms can enable autonomous and self-optimizing communication systems.

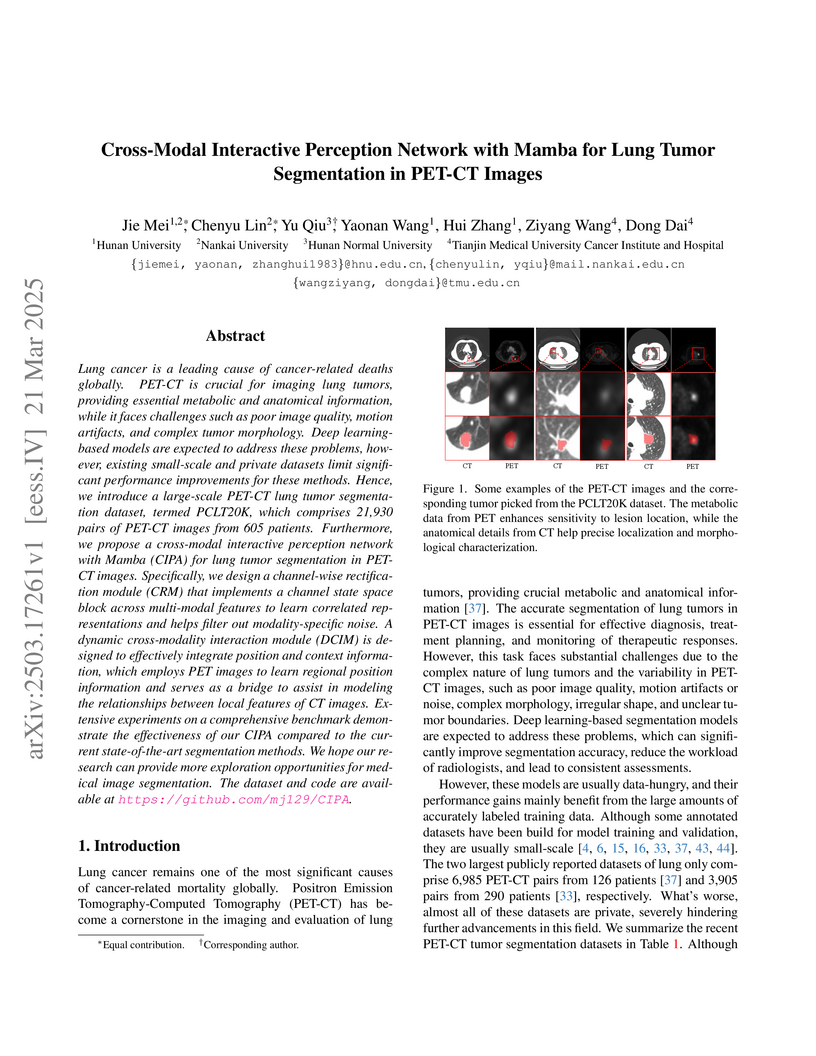

Lung cancer is a leading cause of cancer-related deaths globally. PET-CT is crucial for imaging lung tumors, providing essential metabolic and anatomical information, but it faces challenges such as poor image quality, motion artifacts, and complex tumor morphology. Deep learning-based models are expected to address these problems; however, existing small-scale and private datasets limit the performance gains of these methods. Hence, we introduce a large-scale PET-CT lung tumor segmentation dataset, termed PCLT20K, which comprises 21,930 pairs of PET-CT images from 605 patients. Furthermore, we propose a cross-modal interactive perception network with Mamba (CIPA) for lung tumor segmentation in PET-CT images. Specifically, we design a channel-wise rectification module (CRM) that applies a channel state space block across multi-modal features to learn correlated representations and filter out modality-specific noise. A dynamic cross-modality interaction module (DCIM) is designed to effectively integrate position and context information; it uses PET images to learn regional position information, which serves as a bridge to assist in modeling the relationships between local features of CT images. Extensive experiments on a comprehensive benchmark demonstrate the effectiveness of CIPA compared with current state-of-the-art segmentation methods. We hope our research can provide more exploration opportunities for medical image segmentation. The dataset and code are available at this https URL

A dual-task learning framework called ChemDual leverages large language models for chemical reaction and retrosynthesis prediction by combining a synthetic dataset of 4.4M molecules with correlated forward/backward task training, achieving state-of-the-art performance on USPTO-50K and ChemLLMBench while generating chemically valid compounds with strong target binding affinity.
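To make the forward/backward pairing concrete at the data level, here is a minimal sketch, assuming reactions are stored as standard reaction SMILES (`reactants>agents>products`). The function names and prompt wording are hypothetical illustrations, not ChemDual's actual templates or data format.

```python
def split_reaction_smiles(rxn: str) -> tuple[list[str], list[str]]:
    """Split a reaction SMILES 'reactants>agents>products' into reactant and product lists."""
    parts = rxn.split(">")
    if len(parts) != 3:
        raise ValueError(f"expected 'reactants>agents>products', got {rxn!r}")
    reactants, _agents, products = parts  # agents are ignored in this sketch
    return reactants.split("."), products.split(".")


def make_dual_task_examples(rxn: str) -> list[dict[str, str]]:
    """Turn one reaction into a forward and a retrosynthesis training example.

    The prompt wording is hypothetical; only the pairing of the two directions matters here.
    """
    reactants, products = split_reaction_smiles(rxn)
    reactant_str, product_str = ".".join(reactants), ".".join(products)
    return [
        {"task": "forward",
         "input": f"Predict the product of: {reactant_str}",
         "target": product_str},
        {"task": "retrosynthesis",
         "input": f"Propose reactants for: {product_str}",
         "target": reactant_str},
    ]
```

For example, the esterification `CCO.CC(=O)O>>CCOC(C)=O` yields one example mapping ethanol and acetic acid to ethyl acetate and one mapping ethyl acetate back to its reactants, so both directions are trained on the same underlying reaction.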

We present a model-independent determination of the Hubble constant (H0) using the latest observational data from multiple cosmological probes. By combining baryon acoustic oscillation (BAO) measurements from the second data release of the Dark Energy Spectroscopic Instrument (DESI DR2), cosmic chronometer H(z) data, and the Pantheon Plus Type Ia supernova (SN Ia) sample, we reconstruct the cosmic expansion history through Gaussian process regression without assuming a specific cosmological model or relying on sound horizon calibration. Our analysis incorporates the complete covariance structure of the measurements and yields H0 constraints at five distinct redshifts: 65.72 ± 1.99 (z = 0.51), 67.78 ± 1.75 (z = 0.706), 70.74 ± 1.39 (z = 0.934), 71.04 ± 1.93 (z = 1.321), and 68.37 ± 3.95 km s⁻¹ Mpc⁻¹ (z = 1.484). The optimal combination of these measurements gives H0 = 69.0 ± 1.0 km s⁻¹ Mpc⁻¹ with 1.4% precision, which occupies an intermediate position between the Planck CMB result and the SH0ES local measurement and is consistent with the TRGB result. Rather than providing a single integrated H0 value, our approach delivers independent constraints at multiple redshifts, thereby enabling a detailed investigation of potential redshift-dependent systematic effects that could contribute to the Hubble tension. We identify significant correlations between adjacent redshift bins (ρ = −0.033 to 0.26), primarily arising from the BAO covariance and reconstruction effects. These results demonstrate a clear redshift evolution in Hubble constant measurements and suggest that the Hubble tension may involve more complex redshift-dependent effects than a simple dichotomy between early and late universe probes.
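The abstract does not spell out how the five binned values are combined. A standard choice for correlated measurements of a single quantity is the best linear unbiased estimator (BLUE) with the full covariance matrix, and the sketch below assumes that choice. Because the full covariance is not reproduced here, the helper `diagonal_cov` (hypothetical, for illustration) builds a covariance from the quoted errors alone, so results from it are not expected to match the published 69.0 ± 1.0.

```python
import math


def blue_combine(values, cov):
    """Best linear unbiased estimate (BLUE) of one quantity from correlated measurements.

    With covariance C and a vector of ones u, the weights are C^{-1}u / (u^T C^{-1} u)
    and the variance of the combined value is 1 / (u^T C^{-1} u).
    """
    n = len(values)
    # Solve C x = u with Gauss-Jordan elimination on the augmented matrix [C | u].
    a = [[float(x) for x in row] + [1.0] for row in cov]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    cinv_u = [row[n] for row in a]  # C^{-1} u
    norm = sum(cinv_u)              # u^T C^{-1} u
    mean = sum(w * v for w, v in zip(cinv_u, values)) / norm
    return mean, math.sqrt(1.0 / norm)


def diagonal_cov(sigmas):
    """Covariance matrix that ignores all correlations between bins."""
    return [[s * s if i == j else 0.0 for j, s in enumerate(sigmas)]
            for i in range(len(sigmas))]
```

Feeding in the five quoted values with `diagonal_cov([1.99, 1.75, 1.39, 1.93, 3.95])` gives roughly 69.13 ± 0.84. Adding the off-diagonal terms (the quoted ρ values) changes both the weights and the error, which is one reason this toy number differs from the published combination.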

An approach to multimodal semantic communication (LAM-MSC) leverages large AI models for unifying diverse data types, personalizing semantic interpretation, and robustly estimating wireless channels. Simulations indicate the framework achieves high compression rates and maintains robust performance.

We present the distance priors from the final $Planck~\textrm{TT,TE,EE}+\textrm{lowE}$ data release of 2018. The uncertainties are around 40% smaller than those from Planck 2015 TT+lowP. To check the validity of these new distance priors, we use them to constrain the cosmological parameters of different dark energy models, including the ΛCDM, wCDM, and CPL models, and conclude that the distance priors provide constraints on the relevant cosmological parameters that are consistent with those from the full Planck 2018 data release.
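Distance priors compress the CMB into a few derived numbers. In the usual convention (not restated in the abstract) these include the shift parameter $R = \sqrt{\Omega_m H_0^2}\,D_M(z_*)/c$ and the acoustic scale $l_A = \pi D_M(z_*)/r_s(z_*)$, together with $\omega_b$. The sketch below computes only $R$ for a flat ΛCDM model; the decoupling redshift $z_*$ and the radiation density $\Omega_r$ are user-supplied assumptions. A real analysis would also compute $l_A$ and evaluate a χ² against the published mean vector and covariance.

```python
import math


def hubble_e(z, omega_m, omega_r=0.0):
    """E(z) = H(z)/H0 for a flat LCDM model (dark energy density fixed by flatness)."""
    omega_l = 1.0 - omega_m - omega_r
    a = 1.0 + z
    return math.sqrt(omega_m * a**3 + omega_r * a**4 + omega_l)


def shift_parameter(omega_m, z_star, omega_r=0.0, n=2000):
    """Shift parameter R = sqrt(Omega_m) * integral_0^{z*} dz / E(z) for a flat universe.

    The integral is taken in x = ln(1 + z), where dz = (1 + z) dx, using composite
    Simpson's rule with n intervals (n is bumped to the next even number if needed).
    """
    if n % 2:
        n += 1
    h = math.log1p(z_star) / n
    total = 0.0
    for i in range(n + 1):
        z = math.expm1(i * h)
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * (1.0 + z) / hubble_e(z, omega_m, omega_r)
    return math.sqrt(omega_m) * total * h / 3.0
```

Integrating in $\ln(1+z)$ keeps the integrand smooth over the three decades between today and $z_* \approx 1090$, so a modest number of Simpson intervals suffices. Including radiation raises $E(z)$ at high redshift and therefore lowers $R$ slightly.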

16 Oct 2025

The pursuit of materials that combine light constituent elements with ultralow lattice thermal conductivity (κL) is crucial to advancing technologies such as thermoelectrics and thermal barrier coatings, yet it remains a formidable challenge. Herein, we achieve ultralow κL in lightweight cyanide-bridged framework materials (CFMs) by rationally integrating properties such as the hierarchical vibrations of superatomic structures and the rotational dynamics of perovskites. A unique hierarchical rotation behavior produces multiple negative peaks in the Grüneisen parameters across a wide frequency range, thereby inducing pronounced negative thermal expansion and strong cubic anharmonicity in CFMs. Meanwhile, the synergy between a large four-phonon scattering phase space (induced by phonon quasi-flat bands and wide bandgaps) and strong quartic anharmonicity (associated with rotation modes) leads to giant quartic anharmonic scattering rates in these materials. Consequently, the κL of these CFMs is one to two orders of magnitude lower than that of known perovskites or perovskite-like materials with equivalent average atomic masses. For instance, Cd(CN)2, NaB(CN)4, LiIn(CN)4, and AgX(CN)4 (X = B, Al, Ga, In) exhibit ultralow room-temperature κL values ranging from 0.35 to 0.81 W m⁻¹ K⁻¹. This work not only establishes CFMs as a novel and rich platform for studying extreme phonon anharmonicity, but also provides a new paradigm for achieving ultralow thermal conductivity in lightweight materials through the deliberate integration of hierarchical and rotational dynamics.
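The link between negative Grüneisen parameters and negative thermal expansion can be made explicit. The mode Grüneisen parameter is $\gamma_i = -\partial \ln \omega_i / \partial \ln V$, and in the quasi-harmonic approximation the volumetric expansion coefficient is proportional to the heat-capacity-weighted sum $\sum_i c_{V,i}\gamma_i$, so modes with large negative $\gamma_i$ (such as rotational modes) can drive it negative. The sketch below estimates $\gamma_i$ by finite differences from frequencies at two volumes; it is a generic illustration, not the workflow used for CFMs, and any input frequencies are assumed to come from some external phonon calculation.

```python
import math


def mode_gruneisen(freqs_v1, freqs_v2, v1, v2):
    """Mode Grüneisen parameters gamma_i = -d ln(omega_i) / d ln(V), by finite difference.

    freqs_v1 and freqs_v2 hold the same phonon modes, in the same order, computed at
    volumes v1 and v2 (for example from two slightly strained supercells).
    """
    dlnv = math.log(v2) - math.log(v1)
    return [-(math.log(w2) - math.log(w1)) / dlnv for w1, w2 in zip(freqs_v1, freqs_v2)]


def weighted_gruneisen(gammas, heat_capacities):
    """Heat-capacity-weighted average gamma; in the quasi-harmonic picture its sign
    sets the sign of the volumetric thermal expansion."""
    return sum(c * g for c, g in zip(heat_capacities, gammas)) / sum(heat_capacities)
```

A mode whose frequency rises when the lattice expands has $\gamma_i < 0$; if such modes carry enough of the thermal population, the weighted average turns negative.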

We investigate a novel gravitational configuration formed by a massless real phantom field and an axion scalar field, both minimally coupled to gravity. This system describes an Ellis-type wormhole situated at the center of an axion star. With the mass of the axion field normalized to unity, the physical properties of the model are determined by three independent parameters: the decay constant of the potential, the frequency of the axion field, and the throat parameter of the wormhole. We assess the traversability of this wormhole by examining the curvature scalars and energy conditions of the static solution. Our analysis of the wormhole's embedding diagrams indicates that, although the wormhole typically exhibits a single-throat geometry, a double-throat configuration featuring an equatorial plane may arise under specific conditions. Finally, an analysis of null geodesics reveals the existence of at least one unstable light ring at the wormhole throat.
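In static, spherically symmetric wormholes a throat is conventionally a local minimum of the circumferential (areal) radius $R(\eta)$ along the radial coordinate, and an equator is a local maximum, so a double-throat geometry shows two minima separated by a maximum. The sketch below classifies these extrema on a sampled $R(\eta)$. The pure Ellis profile $\sqrt{\eta^2 + \eta_0^2}$ is standard; the double-throat profile in the test is a hypothetical toy curve, not a solution of the axion-star field equations.

```python
import math


def find_throats_and_equators(eta, radius):
    """Classify interior local extrema of a sampled areal radius R(eta).

    Local minima of R are throats; local maxima are equators.
    """
    throats, equators = [], []
    for i in range(1, len(radius) - 1):
        if radius[i] < radius[i - 1] and radius[i] <= radius[i + 1]:
            throats.append(eta[i])
        elif radius[i] > radius[i - 1] and radius[i] >= radius[i + 1]:
            equators.append(eta[i])
    return throats, equators


def ellis_radius(eta, eta0=1.0):
    """Areal radius of the massless Ellis wormhole, sqrt(eta^2 + eta0^2)."""
    return math.sqrt(eta * eta + eta0 * eta0)
```

Applied to the Ellis profile this finds a single throat at $\eta = 0$ and no equator; a profile with a bump at the center yields two throats and one equator, matching the double-throat picture described above.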

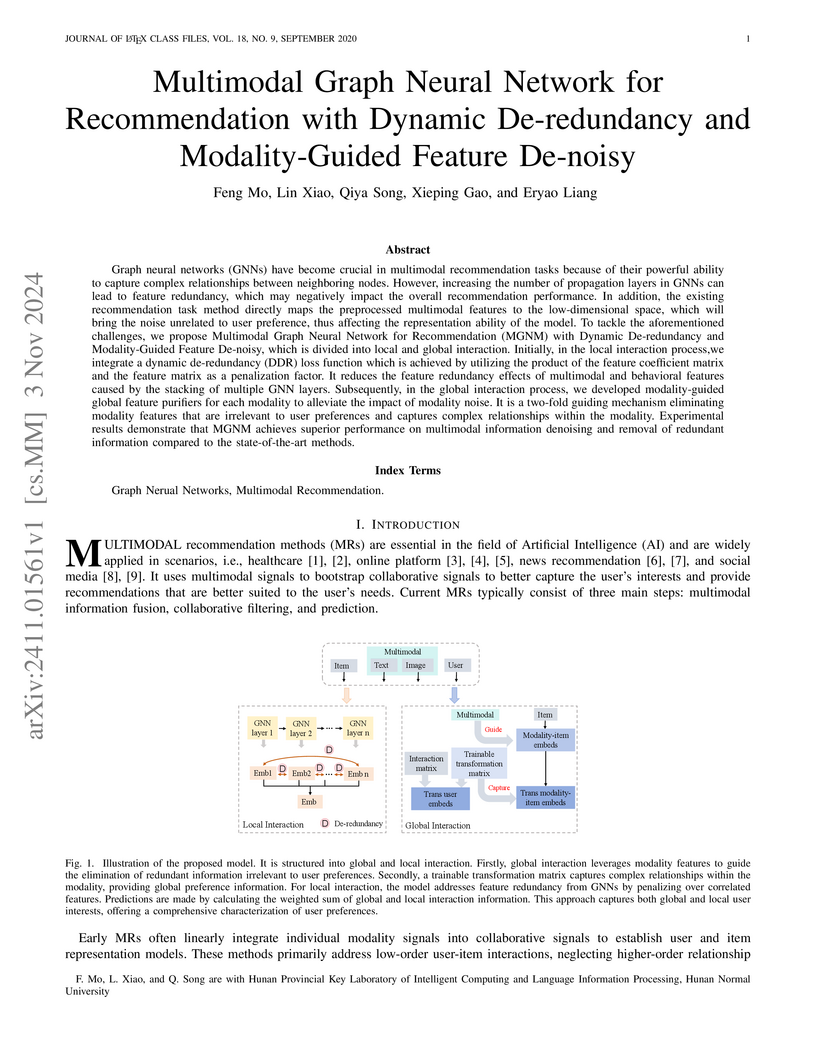

Graph neural networks (GNNs) have become crucial in multimodal recommendation tasks because of their powerful ability to capture complex relationships between neighboring nodes. However, increasing the number of propagation layers in GNNs can lead to feature redundancy, which may degrade overall recommendation performance. In addition, existing recommendation methods directly map the preprocessed multimodal features into a low-dimensional space, which introduces noise unrelated to user preferences and thus weakens the representation ability of the model. To tackle these challenges, we propose the Multimodal Graph Neural Network for Recommendation (MGNM) with Dynamic De-redundancy and Modality-Guided Feature De-noisy, which is divided into local and global interaction. First, in the local interaction process, we integrate a dynamic de-redundancy (DDR) loss function that uses the product of the feature coefficient matrix and the feature matrix as a penalization factor. It reduces the redundancy in multimodal and behavioral features caused by stacking multiple GNN layers. Subsequently, in the global interaction process, we develop modality-guided global feature purifiers for each modality to alleviate the impact of modality noise. These purifiers form a two-fold guiding mechanism that eliminates modality features irrelevant to user preferences and captures complex relationships within each modality. Experimental results demonstrate that MGNM outperforms state-of-the-art methods in denoising multimodal information and removing redundant information.
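The abstract describes the DDR loss only at a high level, so its exact form cannot be reproduced here. As a clearly substituted stand-in that shows the general idea of penalizing redundant feature dimensions, the sketch below uses a plain decorrelation penalty: the sum of squared off-diagonal Pearson correlations between feature columns. It is not MGNM's DDR formulation, and the function name is hypothetical.

```python
import math


def feature_redundancy_penalty(features):
    """Sum of squared off-diagonal Pearson correlations between feature dimensions.

    features: list of rows (one per node), each a list of d floats.
    Generic decorrelation penalty used as a stand-in; NOT MGNM's DDR loss.
    """
    n, d = len(features), len(features[0])
    means = [sum(row[j] for row in features) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in features]
    # A constant column has zero std; "or 1.0" avoids dividing by zero for it.
    stds = [math.sqrt(sum(r[j] ** 2 for r in centered) / n) or 1.0 for j in range(d)]
    penalty = 0.0
    for a in range(d):
        for b in range(a + 1, d):
            cov = sum(r[a] * r[b] for r in centered) / n
            corr = cov / (stds[a] * stds[b])
            penalty += 2 * corr ** 2  # count both (a, b) and (b, a)
    return penalty
```

Two perfectly duplicated dimensions contribute the maximum penalty of 2 per pair, while uncorrelated dimensions contribute nothing, which is the behavior a redundancy-reducing term added to the training loss aims for.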

It was recently proposed by Rosato et al. and Oshita et al. that black hole greybody factors, as stable observables at relatively high frequencies, are more relevant quantities than quasinormal modes for modeling ringdown spectral amplitudes. It was argued that the overall contributions of spectrally unstable quasinormal modes conspire to produce stable observables through collective interference effects. In this regard, the present study investigates the Regge poles, the singularities in the complex angular momentum plane that underlie the greybody factor, for perturbed black hole metrics. To this end, we generalize the matrix method to evaluate the Regge poles in black hole metrics with discontinuities. To verify our approach, we compare the numerical results with those obtained using a modified version of the continued fraction method. The resulting Regge pole spectrum is then used to calculate the scattering amplitude and cross-section. We show that the stability of these observables at moderate frequencies can be readily interpreted in terms of the stability of the Regge pole spectrum, particularly the low-lying modes. Nonetheless, destabilization still occurs at higher frequencies, characterized by the emergence of a bifurcation in the spectrum. As the ultraviolet metric perturbations move further away from the black hole, this bifurcation evolves further and leads to more significant deformation of the Regge poles. However, based on the validity of the WKB approximation, we argue that such an instability in the spectrum is not expected to have significant observable implications.

Black holes are nature's fastest quantum information scramblers, a phenomenon captured by gravitational analogue systems such as position-dependent XY spin chains. In these models, scrambling dynamics are governed exclusively by the profile of the hopping interactions, independent of system size. Using such curved-spacetime analogues as quantum batteries, we explore how black hole scrambling affects charging via controlled quenches of preset scrambling parameters. Our analysis reveals that an intentionally engineered difference between the post-quench and pre-quench scrambling parameters can significantly enhance both the maximum stored energy $E_{\max}$ and the peak charging power $P_{\max}$ in the quench charging protocol. Furthermore, the peaks of extractable work and stored energy coincide. This is because the system's evolution under a weak perturbation remains close to the ground state, so the passive-state energy is nearly identical to the ground-state energy. The optimal charging time $\tau^*$ depends negligibly on the preset initial horizon parameter $x_{h0}$, while it decreases monotonically with increasing quench horizon parameter $x_{ht}$. This temporal compression confines high-power operation to regimes with strong post-quench scrambling ($x_{ht} > x_{h0}$), demonstrating accelerated charging mediated by spacetime-mimicking scrambling dynamics.
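The figures of merit named here follow the usual quantum-battery definitions, which the abstract does not restate: the stored energy $E(\tau)$ is the battery energy at time $\tau$ minus its ground-state value, the average charging power is $P(\tau) = E(\tau)/\tau$, and $\tau^*$ is the time of peak power. The sketch below extracts $E_{\max}$, $P_{\max}$, and $\tau^*$ from any sampled energy curve. The curve $E = \sin^2(\omega\tau)$ is a hypothetical stand-in, not the XY-chain dynamics.

```python
import math


def charging_metrics(times, energies):
    """Return (E_max, P_max, tau_star) from a sampled stored-energy curve E(tau).

    The average charging power is P(tau) = E(tau) / tau, and tau_star is the sampled
    time at which P is largest. Samples with tau <= 0 are skipped.
    """
    e_max = max(energies)
    p_max, tau_star = max((e / t, t) for t, e in zip(times, energies) if t > 0)
    return e_max, p_max, tau_star


def toy_energy_curve(omega=1.0, t_max=3.0, dt=0.001):
    """Hypothetical stand-in for E(tau): E = sin^2(omega * tau), peaking at 1."""
    times = [dt * i for i in range(1, int(round(t_max / dt)) + 1)]
    return times, [math.sin(omega * t) ** 2 for t in times]
```

For the toy curve, peak power occurs at $\tau^* \approx 1.166$ (where $\tan\tau = 2\tau$), earlier than the energy maximum at $\pi/2$. This illustrates why $\tau^*$ and the time of $E_{\max}$ are tracked separately.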

Semantic communication (SC) is an emerging intelligent paradigm that offers solutions for future applications such as the metaverse, mixed reality, and the Internet of Everything. However, in current SC systems, the construction of the knowledge base (KB) faces several issues, including limited knowledge representation, frequent knowledge updates, and insecure knowledge sharing. Fortunately, the development of large AI models (LAMs) provides new solutions to these issues. Here, we propose a LAM-based SC framework (LAM-SC) specifically designed for image data. We first apply a KB based on the Segment Anything Model (SAM), termed SKB, that splits the original image into different semantic segments using universal semantic knowledge. Then, we present an attention-based semantic integration (ASI) that weighs the semantic segments generated by SKB without human participation and integrates them into a semantic-aware image. Additionally, we propose adaptive semantic compression (ASC) encoding to remove redundant information from semantic features, thereby reducing communication overhead. Finally, through simulations, we demonstrate the effectiveness of the LAM-SC framework and the possibility of applying LAM-based KBs in future SC paradigms.
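The abstract does not say how ASI computes its attention scores, so the sketch below takes one score per segment as a given input and only illustrates the weighting step: a softmax turns scores into weights, and each segment mask scales the pixels it covers. The function names and the grayscale list-of-lists image format are hypothetical simplifications, not LAM-SC's implementation.

```python
import math


def softmax(scores):
    """Softmax over segment scores, shifted by the max score for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def integrate_segments(image, masks, scores):
    """Weight binary segment masks by softmax(scores) and apply them to a grayscale image.

    image: H x W list of floats; masks: list of H x W lists of 0/1; scores: one per segment.
    Each output pixel is the input pixel scaled by the summed weights of covering segments.
    """
    weights = softmax(scores)
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for weight, mask in zip(weights, masks):
        for i in range(h):
            for j in range(w):
                if mask[i][j]:
                    out[i][j] += weight * image[i][j]
    return out
```

Segments with higher scores dominate the resulting semantic-aware image, while low-scoring segments are attenuated rather than removed, so the weighting stays differentiable if the scores come from a learned model.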

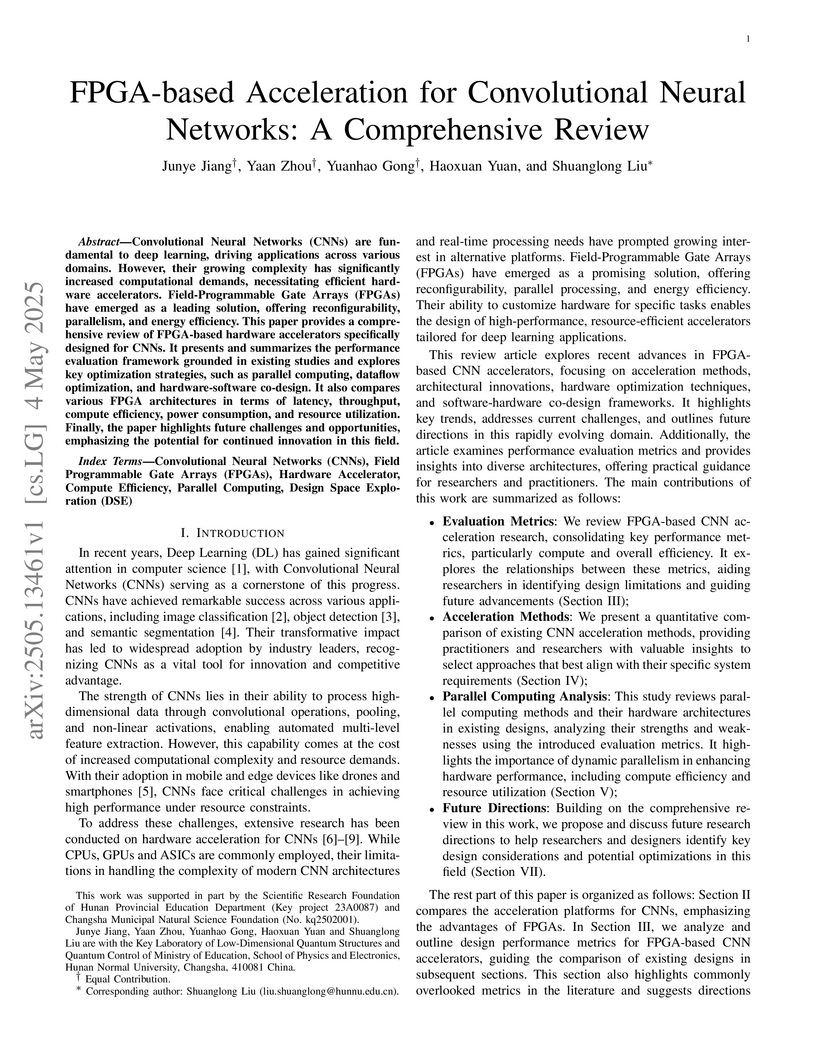

Convolutional Neural Networks (CNNs) are fundamental to deep learning, driving applications across various domains. However, their growing complexity has significantly increased computational demands, necessitating efficient hardware accelerators. Field-Programmable Gate Arrays (FPGAs) have emerged as a leading solution, offering reconfigurability, parallelism, and energy efficiency. This paper provides a comprehensive review of FPGA-based hardware accelerators specifically designed for CNNs. It summarizes a performance evaluation framework grounded in existing studies and explores key optimization strategies, such as parallel computing, dataflow optimization, and hardware-software co-design. It also compares various FPGA architectures in terms of latency, throughput, compute efficiency, power consumption, and resource utilization. Finally, the paper highlights future challenges and opportunities, emphasizing the potential for continued innovation in this field.
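The comparison metrics listed above have simple definitions that are worth pinning down. The sketch assumes the common convention of counting one multiply-accumulate (MAC) as two operations and defines peak throughput from the number of MAC units and the clock; the layer shape, latency, power, and MAC-array size used in the test are hypothetical.

```python
def conv_layer_ops(h_out, w_out, c_in, c_out, k):
    """Operations for a dense k x k convolution layer, counting one MAC as 2 ops."""
    macs = h_out * w_out * c_out * c_in * k * k
    return 2 * macs


def peak_gops(n_mac_units, clock_hz):
    """Theoretical peak throughput in GOPS if every MAC unit fires each cycle."""
    return 2 * n_mac_units * clock_hz / 1e9


def accelerator_metrics(total_ops, latency_s, power_w, peak):
    """Throughput (GOPS), energy efficiency (GOPS/W), and compute efficiency (fraction of peak)."""
    gops = total_ops / latency_s / 1e9
    return {
        "throughput_gops": gops,
        "gops_per_watt": gops / power_w,
        "compute_efficiency": gops / peak,
    }
```

For a 3 × 3 convolution with 64 input and 64 output channels on a 56 × 56 output map, this counts about 231 million operations; finishing it in 1 ms at 10 W on a hypothetical 1024-MAC, 200 MHz array gives about 231 GOPS, 23 GOPS/W, and roughly 56% compute efficiency.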

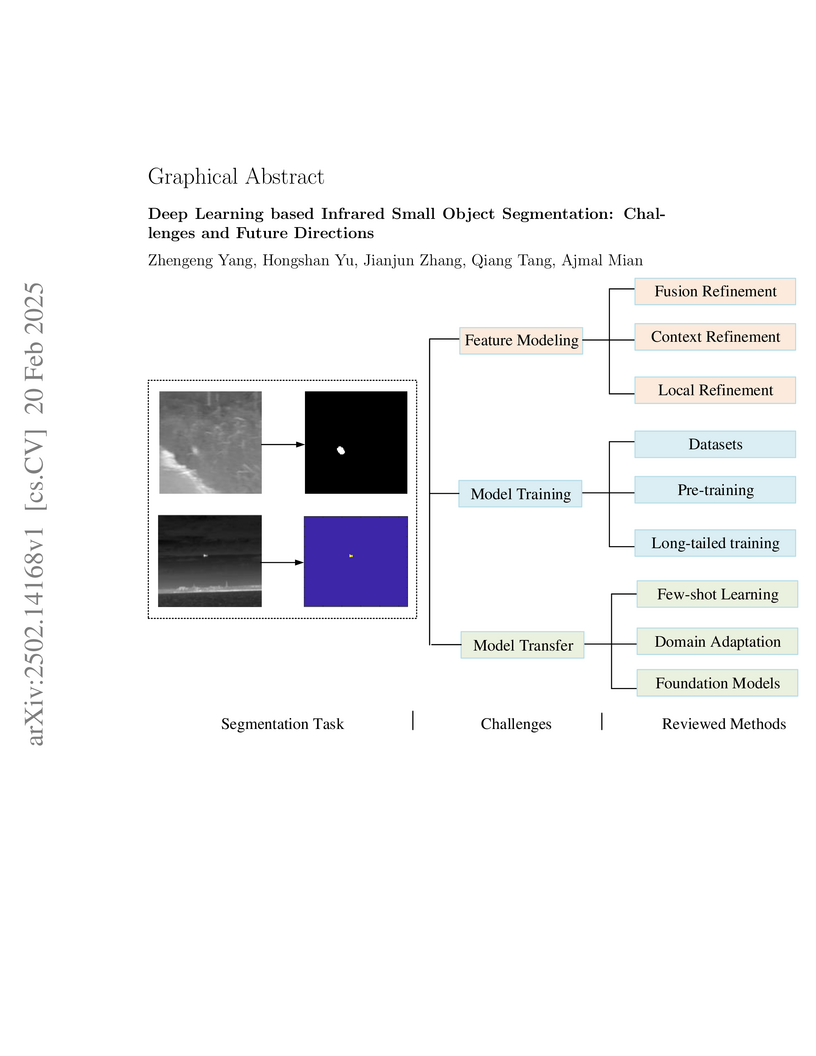

Infrared sensing is a core method for supporting unmanned systems, such as autonomous vehicles and drones. Recently, infrared sensors have been widely deployed on mobile and stationary platforms to detect and classify objects at long distances and over wide fields of view. Given its success in visible-image analysis, deep learning has also been applied to object recognition in infrared images. However, techniques that have proven successful in visible-light perception face new challenges in the infrared domain. These challenges include extremely low signal-to-noise ratios in infrared images, very small and blurred objects of interest, and limited availability of labeled and unlabeled training data due to the specialized nature of infrared sensors. Numerous methods have been proposed in the literature for detecting and classifying small objects in infrared images, with varied levels of success. A survey that critically analyzes existing techniques in this domain, identifies unsolved challenges, and provides future research directions is therefore needed. This paper fills that gap with a concise and insightful review of deep learning-based methods. It also identifies the challenges faced by existing infrared object segmentation methods, provides a structured review of existing infrared perception methods from the perspective of these challenges, and highlights the motivations behind the various approaches. Finally, this review suggests promising future directions based on recent advancements within this domain.
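The low signal-to-noise problem for small infrared targets is often quantified with the signal-to-clutter ratio (SCR). One common variant, assumed here rather than taken from the survey, is $|\mu_t - \mu_b| / \sigma_b$, the contrast between target and background means divided by the background standard deviation. The patch in the test is synthetic.

```python
import math


def signal_to_clutter_ratio(patch, target_mask):
    """SCR = |mean_target - mean_background| / std_background over an image patch.

    patch: H x W list of floats; target_mask: H x W list of bools (True = target pixel).
    Returns math.inf when the background is perfectly flat.
    """
    target = [v for row, mrow in zip(patch, target_mask) for v, m in zip(row, mrow) if m]
    background = [v for row, mrow in zip(patch, target_mask) for v, m in zip(row, mrow) if not m]
    mu_t = sum(target) / len(target)
    mu_b = sum(background) / len(background)
    sigma_b = math.sqrt(sum((v - mu_b) ** 2 for v in background) / len(background))
    return abs(mu_t - mu_b) / sigma_b if sigma_b > 0 else math.inf
```

A single bright pixel of value 10 surrounded by clutter alternating between 1 and 3 has an SCR of 8. Dim, blurred targets in heavy clutter often fall to SCR values near 1, which is where detectors tuned on visible-light data tend to break down.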

10 Nov 2025

Chinese Academy of Sciences; Beijing Normal University; Hunan Normal University; Anhui University; Yunnan University; National Astronomical Observatories; Purple Mountain Observatory; Shanghai Astronomical Observatory; South-Western Institute for Astronomy Research; Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences; Ludwig-Maximilians-Universität München

The Chinese Space Station Survey Telescope (CSST) is a flagship space-based observatory. Its main survey camera is designed to conduct high-spatial-resolution imaging from the near-ultraviolet to the near-infrared, as well as low-resolution spectroscopic surveys. To maximize the scientific output of CSST, we have developed a comprehensive, high-fidelity simulation pipeline that reproduces both imaging and spectroscopic observations. This paper presents an overview of the simulation framework, detailing its implementation and components. Built upon the GalSim package and incorporating the latest CSST instrumental specifications, our pipeline generates pixel-level mock observations that closely replicate the expected instrumental and observational conditions. The simulation suite integrates realistic astrophysical object catalogs, instrumental effects, point spread function (PSF) modeling, and observational noise to produce accurate synthetic data. We describe the key processing stages of the simulation, from constructing the input object catalogs to modeling the telescope optics and detector responses. Furthermore, we introduce the most recent release of simulated datasets, which provide a crucial testbed for developing the data processing pipeline, calibration strategies, and scientific analyses, helping to ensure that CSST will meet its stringent requirements. Our pipeline serves as a vital tool for optimizing CSST main survey strategies and ensuring robust cosmological measurements.

A generic knowledge distillation framework, HeteroAKD, enables effective knowledge transfer between heterogeneous deep learning architectures for semantic segmentation tasks. It consistently outperforms state-of-the-art methods, achieving up to a 3.37% mIoU gain on Cityscapes and allowing student models to sometimes exceed their teacher's performance.
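HeteroAKD's own transfer mechanism for heterogeneous architectures is not described in this summary. For orientation only, the sketch below shows the classic baseline it builds beyond: temperature-scaled logit distillation ($T^2 \cdot \mathrm{KL}(p_{\text{teacher}} \,\|\, p_{\text{student}})$), applied per pixel and averaged, as is common for semantic segmentation. It is not HeteroAKD, and the default temperature of 4 is an arbitrary assumption.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pixel_kd_loss(student_logits, teacher_logits, temperature=4.0):
    """T^2 * KL(p_teacher || p_student) for one pixel's class logits."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    eps = 1e-12  # guards log(0) if a probability underflows
    kl = sum(pt * (math.log(pt + eps) - math.log(ps + eps)) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * kl


def segmentation_kd_loss(student_map, teacher_map, temperature=4.0):
    """Average the per-pixel loss; each map is a list of per-pixel class-logit lists."""
    losses = [pixel_kd_loss(s, t, temperature) for s, t in zip(student_map, teacher_map)]
    return sum(losses) / len(losses)
```

The $T^2$ factor keeps gradient magnitudes comparable across temperatures. Heterogeneous teacher-student pairs (for example CNN and transformer) complicate this baseline mainly at the feature level, which is the gap methods like HeteroAKD target.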

19 Aug 2025

Monash University; CSIRO; National Astronomical Observatory of Japan; Chinese Academy of Sciences; Beijing Normal University; Hunan Normal University; Curtin University; Macquarie University; HKUST; Leibniz Universität Hannover; Swinburne University of Technology; Max Planck Institute for Gravitational Physics (Albert Einstein Institute); Western Sydney University; Australian Research Council Centre of Excellence for Gravitational Wave Discovery (OzGrav)

We present results from an all-sky search for continuous gravitational waves from individual supermassive binary black holes using the third data release (DR3) of the Parkes Pulsar Timing Array (PPTA). Although we recover a common-spectrum stochastic process, potentially induced by a nanohertz gravitational wave background, we find no evidence of continuous waves. We therefore place upper limits on the gravitational-wave strain amplitude: in the most sensitive frequency range, around 10 nHz, we obtain a sky-averaged 95% credibility upper limit of $\approx 7\times10^{-15}$. Our search is sensitive to supermassive binary black holes with a chirp mass of $\geq 10^{9}\,M_{\odot}$ up to a luminosity distance of 50 Mpc for our least sensitive sky direction and 200 Mpc for the most sensitive direction. This work provides at least 4 times better sensitivity in the 1-200 nHz frequency band than our last search based on the PPTA's first data release. We expect that PPTA will continue to play a key role in detecting continuous gravitational waves in the exciting era of nanohertz gravitational wave astronomy.
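To connect the quoted strain limit with the chirp-mass and distance reach, the leading-order (quadrupole) strain amplitude of a circular binary is useful: $h_0 = 2(G\mathcal{M})^{5/3}(\pi f)^{2/3}/(c^4 d)$, with $f$ the gravitational-wave frequency. The sketch assumes that convention and neglects redshift and inclination factors, so treat its numbers as order-of-magnitude checks rather than a reproduction of the PPTA analysis.

```python
import math

G = 6.67430e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0            # speed of light, m/s
M_SUN = 1.98847e30           # solar mass, kg
MPC = 3.0856775814913673e22  # megaparsec, m


def cw_strain_amplitude(chirp_mass_msun, f_gw_hz, distance_mpc):
    """Strain amplitude h0 = 2 (G Mc)^(5/3) (pi f)^(2/3) / (c^4 d) of a circular binary.

    f_gw_hz is the gravitational-wave frequency (twice the orbital frequency).
    Cosmological redshift corrections are ignored (reasonable for nearby sources).
    """
    gm = G * chirp_mass_msun * M_SUN
    d = distance_mpc * MPC
    return 2.0 * gm ** (5.0 / 3.0) * (math.pi * f_gw_hz) ** (2.0 / 3.0) / (C ** 4 * d)
```

For a $10^{9}\,M_\odot$ binary at 10 nHz and 50 Mpc this gives $h_0 \approx 5.5\times10^{-15}$, the same order as the sky-averaged limit; since $h_0 \propto 1/d$, moving the source to 200 Mpc lowers the amplitude fourfold, which is why the reach depends so strongly on sky direction.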

Gravitational waves (GWs) originating from cosmological sources offer direct insights into the physics of the primordial Universe, the fundamental nature of gravity, and the cosmic expansion of the Universe. In this review paper, we present a comprehensive overview of our recent advances in GW cosmology, supported by the National Key Research and Development Program of China, focusing on cosmological GW sources and their implications for fundamental physics and cosmology. We first discuss the generation mechanisms and characteristics of stochastic gravitational wave backgrounds produced by physical processes in the early Universe, including inflation, phase transitions, and topological defects, and summarize current and possible future constraints from pulsar timing arrays and space-based detectors. Next, we explore the formation and observational prospects of primordial black holes as GW sources and their potential connection to dark matter. We then analyze how GW propagation is affected by large-scale structure, cosmological perturbations, and possible modifications of gravity, and how these effects can be used to test fundamental symmetries of gravity. Finally, we discuss the application of GW standard sirens to measuring the Hubble constant, the expansion history, and dark energy parameters, including their combination with electromagnetic observations. Together, these topics show how GW observations, especially with upcoming space-based detectors such as LISA, Taiji, and TianQin, can provide new information about the physics of the early Universe, cosmological evolution, and the nature of gravity.

Monash University; CSIRO; National Astronomical Observatory of Japan; Beijing Normal University; Hunan Normal University; Curtin University; Macquarie University; Swinburne University of Technology; Max Planck Institute for Gravitational Physics (Albert Einstein Institute); International Centre for Radio Astronomy Research; Lishui University; Centre for Astrophysics and Supercomputing; National Institute of Technology, Sendai College; Leibniz Universität Hannover; Synergetic Innovation Center for Quantum Effects and Applications; Institute for Frontier in Astronomy and Astrophysics; Institute of Interdisciplinary Studies; ARC Centre of Excellence for Gravitational Wave Discovery; Institute of Optoelectronic Technology

A sub-parsec supermassive black hole binary at the center of the galaxy 3C 66B is a promising candidate for continuous gravitational wave searches with pulsar timing arrays. In this work, we search for such a signal in the third data release of the Parkes Pulsar Timing Array. Matching our priors to estimates of the binary parameters from electromagnetic observations, we find a log Bayes factor of 0.22, indicating that the source can be neither confirmed nor ruled out. We place 95% credibility upper limits on the chirp mass, $M < 6.05\times10^{8}\,M_{\odot}$, and on the characteristic strain amplitude, $\log_{10}(h_0) < -14.44$. This partially rules out the parameter space suggested by electromagnetic observations of 3C 66B. We also independently reproduce the calculation of the chirp mass with the 3-mm flux monitoring data from the unresolved core of 3C 66B. Based on this, we outline a new methodology for constructing a joint likelihood of electromagnetic and gravitational-wave data from supermassive black hole binaries (SMBHBs). Finally, we suggest that firmly established SMBHB candidates may be treated as standard sirens for complementary constraints on the expansion rate of the Universe. With this approach, we obtain constraints on the Hubble constant from 3C 66B.
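The standard-siren idea in the last step can be sketched by inverting the leading-order strain amplitude of a circular binary, $h_0 = 2(G\mathcal{M})^{5/3}(\pi f)^{2/3}/(c^4 d)$: with chirp mass, frequency, and amplitude measured, the distance follows, and at low redshift $H_0 \approx cz/d$. This is a minimal sketch under those assumptions (circular orbit, no inclination or redshift corrections, $z \ll 1$). The values in the test are hypothetical, not 3C 66B's measured parameters, and the paper's actual analysis uses a full likelihood rather than this point inversion.

```python
import math

G = 6.67430e-11              # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0            # speed of light, m/s
M_SUN = 1.98847e30           # solar mass, kg
MPC = 3.0856775814913673e22  # megaparsec, m


def siren_distance_mpc(h0, chirp_mass_msun, f_gw_hz):
    """Invert h0 = 2 (G Mc)^(5/3) (pi f)^(2/3) / (c^4 d) for the distance d, in Mpc."""
    gm = G * chirp_mass_msun * M_SUN
    d = 2.0 * gm ** (5.0 / 3.0) * (math.pi * f_gw_hz) ** (2.0 / 3.0) / (C ** 4 * h0)
    return d / MPC


def hubble_constant_low_z(redshift, distance_mpc):
    """Low-redshift Hubble law H0 ~ c z / d, in km/s/Mpc (valid only for z << 1)."""
    return (C / 1000.0) * redshift / distance_mpc
```

Because $d \propto \mathcal{M}^{5/3}/h_0$, any uncertainty in the chirp mass propagates amplified by 5/3 into the distance. This is why an independent electromagnetic chirp-mass estimate, as the abstract describes for 3C 66B, matters for the resulting H0 constraint.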
