IBM Research Zürıch

Researchers from IBM Research – Zurich and ETH Zürich introduce PD-SSM, a State-Space Model architecture that uses structured sparse transition matrices. This approach enables SSMs to accurately emulate any N-state Finite-State Automata (FSA) with a single layer and state size N, while maintaining linear computational scaling, significantly outperforming other parallelizable SSMs in algorithmic reasoning.

Bayesian optimization in large unstructured discrete spaces is often hindered by the computational cost of maximizing acquisition functions due to the absence of gradients. We propose a scalable alternative based on Thompson sampling that eliminates the need for acquisition function maximization by directly parameterizing the probability that a candidate yields the maximum reward. Our approach, Thompson Sampling via Fine-Tuning (ToSFiT) leverages the prior knowledge embedded in prompt-conditioned large language models, and incrementally adapts them toward the posterior. Theoretically, we derive a novel regret bound for a variational formulation of Thompson Sampling that matches the strong guarantees of its standard counterpart. Our analysis reveals the critical role of careful adaptation to the posterior probability of maximality--a principle that underpins our ToSFiT algorithm. Empirically, we validate our method on three diverse tasks: FAQ response refinement, thermally stable protein search, and quantum circuit design. We demonstrate that online fine-tuning significantly improves sample efficiency, with negligible impact on computational efficiency.

20 Nov 2025

The recent advent of reasoning models like OpenAI's o1 was met with excited speculation by the AI community about the mechanisms underlying these capabilities in closed models, followed by a rush of replication efforts, particularly from the open source community. These speculations were largely settled by the demonstration from DeepSeek-R1 that chains-of-thought and reinforcement learning (RL) can effectively replicate reasoning on top of base LLMs. However, it remains valuable to explore alternative methods for theoretically eliciting reasoning that could help elucidate the underlying mechanisms, as well as providing additional methods that may offer complementary benefits.

Here, we build on the long-standing literature in cognitive psychology and cognitive architectures, which postulates that reasoning arises from the orchestrated, sequential execution of a set of modular, predetermined cognitive operations. Crucially, we implement this key idea within a modern agentic tool-calling framework. In particular, we endow an LLM with a small set of "cognitive tools" encapsulating specific reasoning operations, each executed by the LLM itself. Surprisingly, this simple strategy results in considerable gains in performance on standard mathematical reasoning benchmarks compared to base LLMs, for both closed and open-weight models. For instance, providing our "cognitive tools" to GPT-4.1 increases its pass@1 performance on AIME2024 from 32% to 53%, even surpassing the performance of o1-preview.

In addition to its practical implications, this demonstration contributes to the debate regarding the role of post-training methods in eliciting reasoning in LLMs versus the role of inherent capabilities acquired during pre-training, and whether post-training merely uncovers these latent abilities.

This research introduces Analog Foundation Models (AFMs), a method for adapting large, pre-trained language models to operate robustly on energy-efficient analog in-memory computing (AIMC) hardware. The approach uses hardware-aware training and knowledge distillation to significantly improve performance under realistic analog noise conditions compared to standard quantization techniques, while maintaining model capabilities.

31 Oct 2025

We introduce several dynamical schemes that take advantage of mid-circuit measurement and nearest-neighbor gates on a lattice with maximum vertex degree three to implement topological codes and perform logic gates between them. We first review examples of Floquet codes and their implementation with nearest-neighbor gates and ancillary qubits. Next, we describe implementations of these Floquet codes that make use of the ancillary qubits to reset all qubits every measurement cycle. We then show how switching the role of data and ancilla qubits allows a pair of Floquet codes to be implemented simultaneously. We describe how to perform a logical Clifford gate to entangle a pair of Floquet codes that are implemented in this way. Finally, we show how switching between the color code and a pair of Floquet codes, via a depth-two circuit followed by mid-circuit measurement, can be used to perform syndrome extraction for the color code.

Researchers at IBM Research propose a four-class taxonomy for RAG question-context pairs, demonstrating that public datasets are often skewed towards simple factual queries. They developed strategies, including fine-tuning smaller models, to generate balanced, high-quality synthetic evaluation datasets, improving cost-effectiveness and evaluation reliability for RAG systems.

Self-supervised learning (SSL) has emerged as a central paradigm for training foundation models by leveraging large-scale unlabeled datasets, often producing representations with strong generalization capabilities. These models are typically pre-trained on general-purpose datasets such as ImageNet and subsequently adapted to various downstream tasks through finetuning. While recent advances have explored parameter-efficient strategies for adapting pre-trained models, extending SSL pre-training itself to new domains - particularly under limited data regimes and for dense prediction tasks - remains underexplored. In this work, we address the problem of adapting vision foundation models to new domains in an unsupervised and data-efficient manner, specifically targeting downstream semantic segmentation. We propose GLARE (Global Local and Regional Enforcement), a novel continual self-supervised pre-training task designed to enhance downstream segmentation performance. GLARE introduces patch-level augmentations to encourage local consistency and incorporates a regional consistency constraint that leverages spatial semantics in the data. For efficient continual pre-training, we initialize Vision Transformers (ViTs) with weights from existing SSL models and update only lightweight adapter modules - specifically UniAdapter - while keeping the rest of the backbone frozen. Experiments across multiple semantic segmentation benchmarks on different domains demonstrate that GLARE consistently improves downstream performance with minimal computational and parameter overhead.

Analog In-Memory Computing (AIMC) offers a promising solution to the von Neumann bottleneck. However, deploying transformer models on AIMC remains challenging due to their inherent need for flexibility and adaptability across diverse tasks. For the benefits of AIMC to be fully realized, weights of static vector-matrix multiplications must be mapped and programmed to analog devices in a weight-stationary manner. This poses two challenges for adapting a base network to hardware and downstream tasks: (i) conventional analog hardware-aware (AHWA) training requires retraining the entire model, and (ii) reprogramming analog devices is both time- and energy-intensive. To address these issues, we propose Analog Hardware-Aware Low-Rank Adaptation (AHWA-LoRA) training, a novel approach for efficiently adapting transformers to AIMC hardware. AHWA-LoRA training keeps the analog weights fixed as meta-weights and introduces lightweight external LoRA modules for both hardware and task adaptation. We validate AHWA-LoRA training on SQuAD v1.1 and the GLUE benchmark, demonstrate its scalability to larger models, and show its effectiveness in instruction tuning and reinforcement learning. We further evaluate a practical deployment scenario that balances AIMC tile latency with digital LoRA processing using optimized pipeline strategies, with RISC-V-based programmable multi-core accelerators. This hybrid architecture achieves efficient transformer inference with only a 4% per-layer overhead compared to a fully AIMC implementation.

Q-SAM2 presents a comprehensive quantization methodology for the Segment Anything Model 2 (SAM2), enabling its efficient deployment on resource-constrained hardware by addressing challenges posed by outlier distributions. The approach improved the J&F score on SA-V test data by 18.9% for the SAM2 Base Plus model with 2-bit weights and 4-bit activations, demonstrating accurate segmentation at ultra-low bit-widths.

Modern state-space models (SSMs) often utilize transition matrices which enable efficient computation but pose restrictions on the model's expressivity, as measured in terms of the ability to emulate finite-state automata (FSA). While unstructured transition matrices are optimal in terms of expressivity, they come at a prohibitively high compute and memory cost even for moderate state sizes. We propose a structured sparse parametrization of transition matrices in SSMs that enables FSA state tracking with optimal state size and depth, while keeping the computational cost of the recurrence comparable to that of diagonal SSMs. Our method, PD-SSM, parametrizes the transition matrix as the product of a column one-hot matrix (P) and a complex-valued diagonal matrix (D). Consequently, the computational cost of parallel scans scales linearly with the state size. Theoretically, the model is BIBO-stable and can emulate any N-state FSA with one layer of dimension N and a linear readout of size N×N, significantly improving on all current structured SSM guarantees. Experimentally, the model significantly outperforms a wide collection of modern SSM variants on various FSA state tracking tasks. On multiclass time-series classification, the performance is comparable to that of neural controlled differential equations, a paradigm explicitly built for time-series analysis. Finally, we integrate PD-SSM into a hybrid Transformer-SSM architecture and demonstrate that the model can effectively track the states of a complex FSA in which transitions are encoded as a set of variable-length English sentences. The code is available at this https URL

Quantum computing is emerging as a new computational paradigm with the

potential to transform several research fields, including quantum chemistry.

However, current hardware limitations (including limited coherence times, gate

infidelities, and limited connectivity) hamper the straightforward

implementation of most quantum algorithms and call for more noise-resilient

solutions. In quantum chemistry, the limited number of available qubits and

gate operations is particularly restrictive since, for each molecular orbital,

one needs, in general, two qubits. In this study, we propose an explicitly

correlated Ansatz based on the transcorrelated (TC) approach, which transfers

-- without any approximation -- correlation from the wavefunction directly into

the Hamiltonian, thus reducing the number of resources needed to achieve

accurate results with noisy, near-term quantum devices. In particular, we show

that the exact transcorrelated approach not only allows for more shallow

circuits but also improves the convergence towards the so-called basis set

limit, providing energies within chemical accuracy to experiment with smaller

basis sets and, therefore, fewer qubits. We demonstrate our method by computing

bond lengths, dissociation energies, and vibrational frequencies close to

experimental results for the hydrogen dimer and lithium hydride using just 4

and 6 qubits, respectively. Conventional methods require at least ten times

more qubits for the same accuracy.

06 Aug 2024

The depth of quantum circuits is a critical factor when running them on state-of-the-art quantum devices due to their limited coherence times. Reducing circuit depth decreases noise in near-term quantum computations and reduces overall computation time, thus, also benefiting fault-tolerant quantum computations. Here, we show how to reduce the depth of quantum sub-routines that typically scale linearly with the number of qubits, such as quantum fan-out and long-range CNOT gates, to a constant depth using mid-circuit measurements and feed-forward operations, while only requiring a 1D line topology. We compare our protocols with existing ones to highlight their advantages. Additionally, we verify the feasibility by implementing the measurement-based quantum fan-out gate and long-range CNOT gate on real quantum hardware, demonstrating significant improvements over their unitary implementations.

On the Expressiveness and Length Generalization of Selective State-Space Models on Regular Languages

On the Expressiveness and Length Generalization of Selective State-Space Models on Regular Languages

Selective state-space models (SSMs) are an emerging alternative to the Transformer, offering the unique advantage of parallel training and sequential inference. Although these models have shown promising performance on a variety of tasks, their formal expressiveness and length generalization properties remain underexplored. In this work, we provide insight into the workings of selective SSMs by analyzing their expressiveness and length generalization performance on regular language tasks, i.e., finite-state automaton (FSA) emulation. We address certain limitations of modern SSM-based architectures by introducing the Selective Dense State-Space Model (SD-SSM), the first selective SSM that exhibits perfect length generalization on a set of various regular language tasks using a single layer. It utilizes a dictionary of dense transition matrices, a softmax selection mechanism that creates a convex combination of dictionary matrices at each time step, and a readout consisting of layer normalization followed by a linear map. We then proceed to evaluate variants of diagonal selective SSMs by considering their empirical performance on commutative and non-commutative automata. We explain the experimental results with theoretical considerations. Our code is available at this https URL.

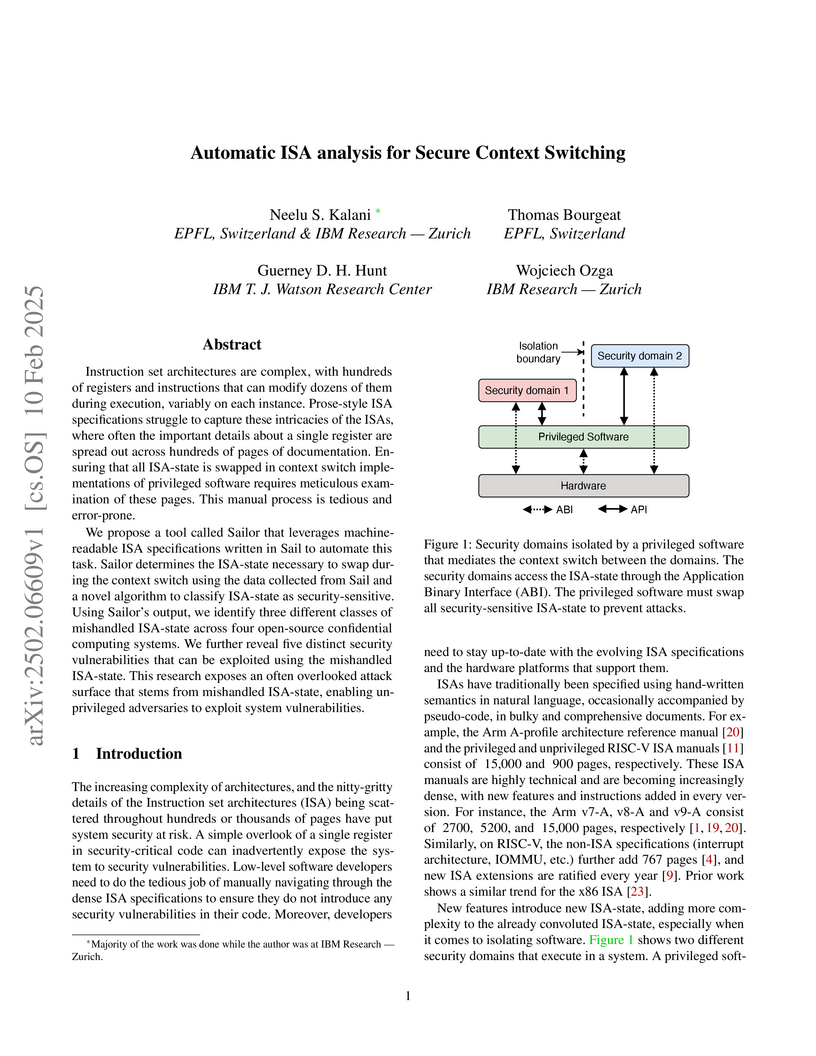

Instruction set architectures are complex, with hundreds of registers and

instructions that can modify dozens of them during execution, variably on each

instance. Prose-style ISA specifications struggle to capture these intricacies

of the ISAs, where often the important details about a single register are

spread out across hundreds of pages of documentation. Ensuring that all

ISA-state is swapped in context switch implementations of privileged software

requires meticulous examination of these pages. This manual process is tedious

and error-prone.

We propose a tool called Sailor that leverages machine-readable ISA

specifications written in Sail to automate this task. Sailor determines the

ISA-state necessary to swap during the context switch using the data collected

from Sail and a novel algorithm to classify ISA-state as security-sensitive.

Using Sailor's output, we identify three different classes of mishandled

ISA-state across four open-source confidential computing systems. We further

reveal five distinct security vulnerabilities that can be exploited using the

mishandled ISA-state. This research exposes an often overlooked attack surface

that stems from mishandled ISA-state, enabling unprivileged adversaries to

exploit system vulnerabilities.

24 Apr 2024

Researchers at IBM Quantum successfully demonstrated the creation of entangled logical qubits using topological quantum error correcting codes on a 133-qubit superconducting processor. They achieved a logical Bell-state fidelity of 93.7% and a Bell's inequality violation of 2.63 (d=2, T=1, with post-selection) by simultaneously implementing 3CX surface and Bacon-Shor codes on distinct qubit sublattices.

This work presents a first evaluation of two state-of-the-art Large Reasoning

Models (LRMs), OpenAI's o3-mini and DeepSeek R1, on analogical reasoning,

focusing on well-established nonverbal human IQ tests based on Raven's

progressive matrices. We benchmark with the I-RAVEN dataset and its extension,

I-RAVEN-X, which tests the ability to generalize to longer reasoning rules and

ranges of the attribute values. To assess the influence of visual uncertainties

on these symbolic analogical reasoning tests, we extend the I-RAVEN-X dataset,

which otherwise assumes an oracle perception. We adopt a two-fold strategy to

simulate this imperfect visual perception: 1) we introduce confounding

attributes which, being sampled at random, do not contribute to the prediction

of the correct answer of the puzzles, and 2) we smoothen the distributions of

the input attributes' values. We observe a sharp decline in OpenAI's o3-mini

task accuracy, dropping from 86.6% on the original I-RAVEN to just 17.0% --

approaching random chance -- on the more challenging I-RAVEN-X, which increases

input length and range and emulates perceptual uncertainty. This drop occurred

despite spending 3.4x more reasoning tokens. A similar trend is also observed

for DeepSeek R1: from 80.6% to 23.2%. On the other hand, a neuro-symbolic

probabilistic abductive model, ARLC, that achieves state-of-the-art

performances on I-RAVEN, can robustly reason under all these

out-of-distribution tests, maintaining strong accuracy with only a modest

accuracy reduction from 98.6% to 88.0%. Our code is available at

this https URL

13 Oct 2019

We extend variational quantum optimization algorithms for Quadratic

Unconstrained Binary Optimization problems to the class of Mixed Binary

Optimization problems. This allows us to combine binary decision variables with

continuous decision variables, which, for instance, enables the modeling of

inequality constraints via slack variables. We propose two heuristics and

introduce the Transaction Settlement problem to demonstrate them. Transaction

Settlement is defined as the exchange of securities and cash between parties

and is crucial to financial market infrastructure. We test our algorithms using

classical simulation as well as real quantum devices provided by the IBM

Quantum Computation Center.

18 Jan 2018

Collocated data processing and storage are the norm in biological systems.

Indeed, the von Neumann computing architecture, that physically and temporally

separates processing and memory, was born more of pragmatism based on available

technology. As our ability to create better hardware improves, new

computational paradigms are being explored. Integrated photonic circuits are

regarded as an attractive solution for on-chip computing using only light,

leveraging the increased speed and bandwidth potential of working in the

optical domain, and importantly, removing the need for time and energy sapping

electro-optical conversions. Here we show that we can combine the emerging area

of integrated optics with collocated data storage and processing to enable

all-photonic in-memory computations. By employing non-volatile photonic

elements based on the phase-change material, Ge2Sb2Te5, we are able to achieve

direct scalar multiplication on single devices. Featuring a novel single-shot

Write/Erase and a drift-free process, such elements can multiply two scalar

numbers by mapping their values to the energy of an input pulse and to the

transmittance of the device, codified in the crystallographic state of the

element. The output pulse, carrying the information of the light-matter

interaction, is the result of the computation. Our all-optical approach is

novel, easy to fabricate and operate, and sets the stage for development of

entirely photonic computers.

27 Jul 2025

Quantum machine learning (QML) has great potential for the analysis of chemical datasets. However, conventional quantum data-encoding schemes, such as fingerprint encoding, are generally unfeasible for the accurate representation of chemical moieties in such datasets. In this contribution, we introduce the quantum molecular structure encoding (QMSE) scheme, which encodes the molecular bond orders and interatomic couplings expressed as a hybrid Coulomb-adjacency matrix, directly as one- and two-qubit rotations within parameterised circuits. We show that this strategy provides an efficient and interpretable method in improving state separability between encoded molecules compared to other fingerprint encoding methods, which is especially crucial for the success in preparing feature maps in QML workflows. To benchmark our method, we train a parameterised ansatz on molecular datasets to perform classification of state phases and regression on boiling points, demonstrating the competitive trainability and generalisation capabilities of QMSE. We further prove a fidelity-preserving chain-contraction theorem that reuses common substructures to cut qubit counts, with an application to long-chain fatty acids. We expect this scalable and interpretable encoding framework to greatly pave the way for practical QML applications of molecular datasets.

With the proliferation of ultra-high-speed mobile networks and

internet-connected devices, along with the rise of artificial intelligence, the

world is generating exponentially increasing amounts of data - data that needs

to be processed in a fast, efficient and smart way. These developments are

pushing the limits of existing computing paradigms, and highly parallelized,

fast and scalable hardware concepts are becoming progressively more important.

Here, we demonstrate a computational specific integrated photonic tensor core -

the optical analog of an ASIC-capable of operating at Tera-Multiply-Accumulate

per second (TMAC/s) speeds. The photonic core achieves parallelized photonic

in-memory computing using phase-change memory arrays and photonic chip-based

optical frequency combs (soliton microcombs). The computation is reduced to

measuring the optical transmission of reconfigurable and non-resonant passive

components and can operate at a bandwidth exceeding 14 GHz, limited only by the

speed of the modulators and photodetectors. Given recent advances in hybrid

integration of soliton microcombs at microwave line rates, ultra-low loss

silicon nitride waveguides, and high speed on-chip detectors and modulators,

our approach provides a path towards full CMOS wafer-scale integration of the

photonic tensor core. While we focus on convolution processing, more generally

our results indicate the major potential of integrated photonics for parallel,

fast, and efficient computational hardware in demanding AI applications such as

autonomous driving, live video processing, and next generation cloud computing

services.

There are no more papers matching your filters at the moment.