Indian Institute of Technology Dharwad

12 Sep 2025

Quantum key distribution (QKD) using entangled photon sources (EPS) is a cornerstone of secure communication. Despite rapid advances in QKD, conventional protocols still employ beam splitters (BSs) for passive random basis selection. However, BSs intrinsically suffer from photon loss, imperfect splitting ratios, and polarization dependence, limiting the key rate, increasing the quantum bit error rate (QBER), and constraining scalability, particularly over long distances. By contrast, EPSs based on spontaneous parametric down-conversion (SPDC) intrinsically exhibit quantum randomness in spatial and spectral degrees of freedom, offering a natural replacement for BSs in basis selection. Here, we demonstrate a proof-of-concept spatial-randomness-based QKD scheme in which the annular SPDC emission ring is divided into four sections, effectively generating two independent EPSs. pair photons from these sources, distributed to Alice and Bob, enable H/V and D/A measurements. The quantum-random pair generation inherently mimics the stochastic basis choice otherwise performed by a BS. Experimentally, our scheme achieves a 6.4-fold enhancement in sifted key rate, a consistently reduced QBER, and a near-ideal encoding balance between logical bits 0 and 1. Furthermore, the need for four spatial channels can be avoided by employing wavelength demultiplexing to generate two EPSs at distinct wavelength pairs. Harnessing intrinsic spatial/spectral randomness thus enables robust, bias-free, high-rate, and low-QBER QKD, offering a scalable pathway for next-generation quantum networks.

12 Mar 2021

Biometric recognition is a trending technology that uses unique characteristics data to identify or verify/authenticate security applications. Amidst the classically used biometrics, voice and face attributes are the most propitious for prevalent applications in day-to-day life because they are easy to obtain through restrained and user-friendly procedures. The pervasiveness of low-cost audio and face capture sensors in smartphones, laptops, and tablets has made the advantage of voice and face biometrics more exceptional when compared to other biometrics. For many years, acoustic information alone has been a great success in automatic speaker verification applications. Meantime, the last decade or two has also witnessed a remarkable ascent in face recognition technologies. Nonetheless, in adverse unconstrained environments, neither of these techniques achieves optimal performance. Since audio-visual information carries correlated and complementary information, integrating them into one recognition system can increase the system's performance. The vulnerability of biometrics towards presentation attacks and audio-visual data usage for the detection of such attacks is also a hot topic of research. This paper made a comprehensive survey on existing state-of-the-art audio-visual recognition techniques, publicly available databases for benchmarking, and Presentation Attack Detection (PAD) algorithms. Further, a detailed discussion on challenges and open problems is presented in this field of biometrics.

The CRUPL framework provides a semi-supervised learning method for cyber-attack detection in smart grids, utilizing consistency regularization and uncertainty-aware pseudo-labeling. This approach achieved 99% accuracy and a False Positive Rate of 0.01-0.02 on two smart grid datasets while operating with only 5% labeled data.

This study showcases the utilisation of OpenCV for extracting features from photos of 2D engineering drawings. These features are then employed to reconstruct 3D CAD models in SCAD format and generate 3D point cloud data similar to LIDAR scans. Many historical mechanical, aerospace, and civil engineering designs exist only as drawings, lacking software-generated CAD or BIM models. While 2D to 3D conversion itself is not novel, the novelty of this work is in the usage of simple photos rather than scans or electronic documentation of 2D drawings. The method can also use scanned drawing data. While the approach is effective for simple shapes, it currently does not address hidden lines in CAD drawings. The Python Jupyter notebook codes developed for this purpose are accessible through GitHub.

This paper presents a novel approach to solving convex optimization problems by leveraging the fact that, under certain regularity conditions, any set of primal or dual variables satisfying the Karush-Kuhn-Tucker (KKT) conditions is necessary and sufficient for optimality. Similar to Theory-Trained Neural Networks (TTNNs), the parameters of the convex optimization problem are input to the neural network, and the expected outputs are the optimal primal and dual variables. A choice for the loss function in this case is a loss, which we refer to as the KKT Loss, that measures how well the network's outputs satisfy the KKT conditions. We demonstrate the effectiveness of this approach using a linear program as an example. For this problem, we observe that minimizing the KKT Loss alone outperforms training the network with a weighted sum of the KKT Loss and a Data Loss (the mean-squared error between the ground truth optimal solutions and the network's output). Moreover, minimizing only the Data Loss yields inferior results compared to those obtained by minimizing the KKT Loss. While the approach is promising, the obtained primal and dual solutions are not sufficiently close to the ground truth optimal solutions. In the future, we aim to develop improved models to obtain solutions closer to the ground truth and extend the approach to other problem classes.

While a lot of work has been done on developing models to tackle the problem of Visual Question Answering, the ability of these models to relate the question to the image features still remain less explored. We present an empirical study of different feature extraction methods with different loss functions. We propose New dataset for the task of Visual Question Answering with multiple image inputs having only one ground truth, and benchmark our results on them. Our final model utilising Resnet + RCNN image features and Bert embeddings, inspired from stacked attention network gives 39% word accuracy and 99% image accuracy on CLEVER+TinyImagenet dataset.

The integration of Open Radio Access Network (O-RAN) principles into 5G

networks introduces a paradigm shift in how radio resources are managed and

optimized. O-RAN's open architecture enables the deployment of intelligent

applications (xApps) that can dynamically adapt to varying network conditions

and user demands. In this paper, we present radio resource scheduling schemes

-- a possible O-RAN-compliant xApp can be designed. This xApp facilitates the

implementation of customized scheduling strategies, tailored to meet the

diverse Quality-of-Service (QoS) requirements of emerging 5G use cases, such as

enhanced mobile broadband (eMBB), massive machine-type communications (mMTC),

and ultra-reliable low-latency communications (URLLC).

We have tested the implemented scheduling schemes within an ns-3 simulation

environment, integrated with the O-RAN framework. The evaluation includes the

implementation of the Max-Throughput (MT) scheduling policy -- which

prioritizes resource allocation based on optimal channel conditions, the

Proportional-Fair (PF) scheduling policy -- which balances fairness with

throughput, and compared with the default Round Robin (RR) scheduler. In

addition, the implemented scheduling schemes support dynamic Time Division

Duplex (TDD), allowing flexible configuration of Downlink (DL) and Uplink (UL)

switching for bidirectional transmissions, ensuring efficient resource

utilization across various scenarios. The results demonstrate resource

allocation's effectiveness under MT and PF scheduling policies. To assess the

efficiency of this resource allocation, we analyzed the Modulation Coding

Scheme (MCS), the number of symbols, and Transmission Time Intervals (TTIs)

allocated per user, and compared them with the throughput achieved. The

analysis revealed a consistent relationship between these factors and the

observed throughput.

PERTINENCE, developed by researchers from IIT Dharwad and Univ Rennes, CNRS, Inria, IRISA, Rennes, France, introduces an input-based opportunistic neural network dynamic execution method. It achieves superior accuracy-efficiency trade-offs by dynamically dispatching inputs to the most lightweight pre-trained model, demonstrating reductions in computational operations by up to 36.29% for CNNs and 13.65% for Vision Transformers while maintaining or improving accuracy.

In a graph, the switching operation reverses adjacencies between a subset of

vertices and the others. For a hereditary graph class G, we are

concerned with the maximum subclass and the minimum superclass of G

that are closed under switching. We characterize the maximum subclass for many

important classes G, and prove that it is finite when G

is minor-closed and omits at least one graph. For several graph classes, we

develop polynomial-time algorithms to recognize the minimum superclass. We also

show that the recognition of the superclass is NP-complete for H-free graphs

when H is a sufficiently long path or cycle, and it cannot be solved in

subexponential time assuming the Exponential Time Hypothesis.

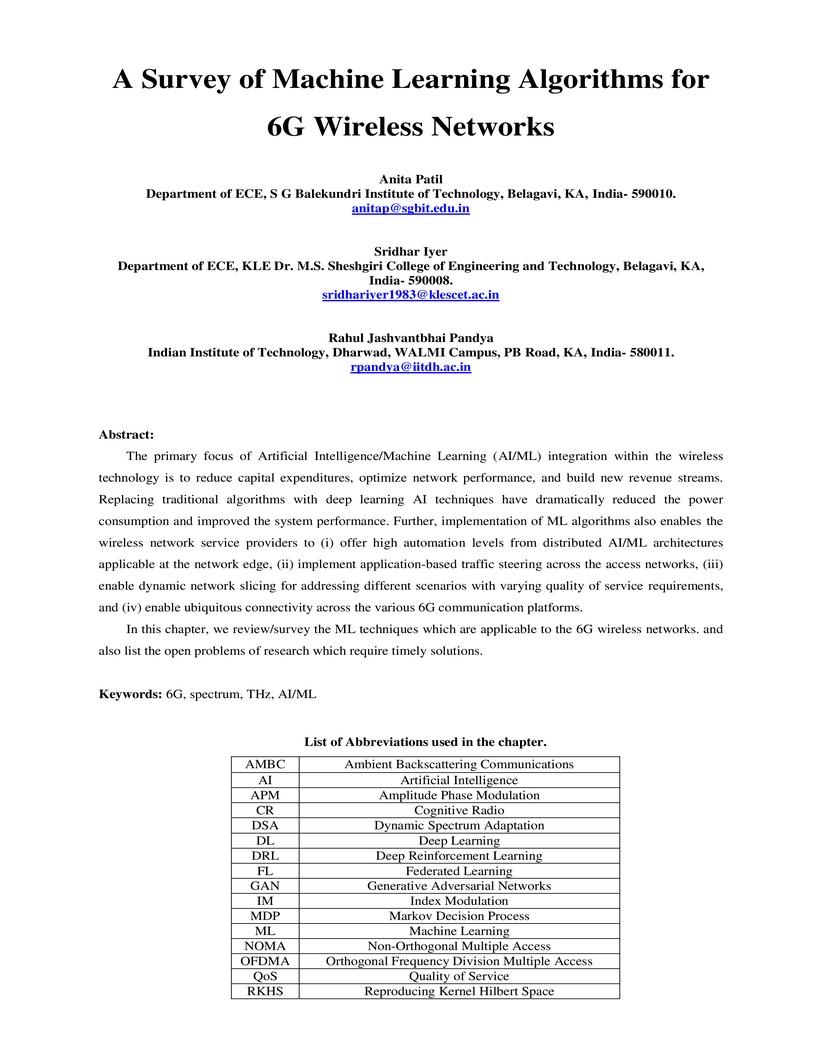

The primary focus of Artificial Intelligence/Machine Learning (AI/ML) integration within the wireless technology is to reduce capital expenditures, optimize network performance, and build new revenue streams. Replacing traditional algorithms with deep learning AI techniques have dramatically reduced the power consumption and improved the system performance. Further, implementation of ML algorithms also enables the wireless network service providers to (i) offer high automation levels from distributed AI/ML architectures applicable at the network edge, (ii) implement application-based traffic steering across the access networks, (iii) enable dynamic network slicing for addressing different scenarios with varying quality of service requirements, and (iv) enable ubiquitous connectivity across the various 6G communication platforms.

In this chapter, we review/survey the ML techniques which are applicable to the 6G wireless networks. and also list the open problems of research which require timely solutions.

12 Sep 2025

Quantum key distribution (QKD) using entangled photon sources (EPS) is a cornerstone of secure communication. Despite rapid advances in QKD, conventional protocols still employ beam splitters (BSs) for passive random basis selection. However, BSs intrinsically suffer from photon loss, imperfect splitting ratios, and polarization dependence, limiting the key rate, increasing the quantum bit error rate (QBER), and constraining scalability, particularly over long distances. By contrast, EPSs based on spontaneous parametric down-conversion (SPDC) intrinsically exhibit quantum randomness in spatial and spectral degrees of freedom, offering a natural replacement for BSs in basis selection. Here, we demonstrate a proof-of-concept spatial-randomness-based QKD scheme in which the annular SPDC emission ring is divided into four sections, effectively generating two independent EPSs. pair photons from these sources, distributed to Alice and Bob, enable H/V and D/A measurements. The quantum-random pair generation inherently mimics the stochastic basis choice otherwise performed by a BS. Experimentally, our scheme achieves a 6.4-fold enhancement in sifted key rate, a consistently reduced QBER, and a near-ideal encoding balance between logical bits 0 and 1. Furthermore, the need for four spatial channels can be avoided by employing wavelength demultiplexing to generate two EPSs at distinct wavelength pairs. Harnessing intrinsic spatial/spectral randomness thus enables robust, bias-free, high-rate, and low-QBER QKD, offering a scalable pathway for next-generation quantum networks.

Cervical cancer, the fourth leading cause of cancer in women globally,

requires early detection through Pap smear tests to identify precancerous

changes and prevent disease progression. In this study, we performed a focused

analysis by segmenting the cellular boundaries and drawing bounding boxes to

isolate the cancer cells. A novel Deep Learning (DL) architecture, the

``Multi-Resolution Fusion Deep Convolutional Network", was proposed to

effectively handle images with varying resolutions and aspect ratios, with its

efficacy showcased using the SIPaKMeD dataset. The performance of this DL model

was observed to be similar to the state-of-the-art models, with accuracy

variations of a mere 2\% to 3\%, achieved using just 1.7 million learnable

parameters, which is approximately 85 times less than the VGG-19 model.

Furthermore, we introduced a multi-task learning technique that simultaneously

performs segmentation and classification tasks and begets an Intersection over

Union score of 0.83 and a classification accuracy of 90\%. The final stage of

the workflow employs a probabilistic approach for risk assessment, extracting

feature vectors to predict the likelihood of normal cells progressing to

malignant states, which can be utilized for the prognosis of cervical cancer.

In recent years, the advances in digitalisation have also adversely contributed to the significant rise in cybercrimes. Hence, building the threat intelligence to shield against rising cybercrimes has become a fundamental requisite. Internet Protocol (IP) addresses play a crucial role in the threat intelligence and prevention of cyber crimes. However, we have noticed the lack of one-stop, free, and open-source tools that can analyse IP addresses. Hence, this work introduces a comprehensive web tool for advanced IP address characterisation. Our tool offers a wide range of features, including geolocation, blocklist check, VPN detection, proxy detection, bot detection, Tor detection, port scan, and accurate domain statistics that include the details about the name servers and registrar information. In addition, our tool calculates a confidence score based on a weighted sum of publicly accessible online results from different reliable sources to give users a dependable measure of accuracy. Further, to improve performance, our tool also incorporates a local database for caching the results, to enable fast content retrieval with minimal external Web API calls. Our tool supports domain names and IPv4 addresses, making it a multi-functional and powerful IP analyser tool for threat intelligence. Our tool is available at this http URL

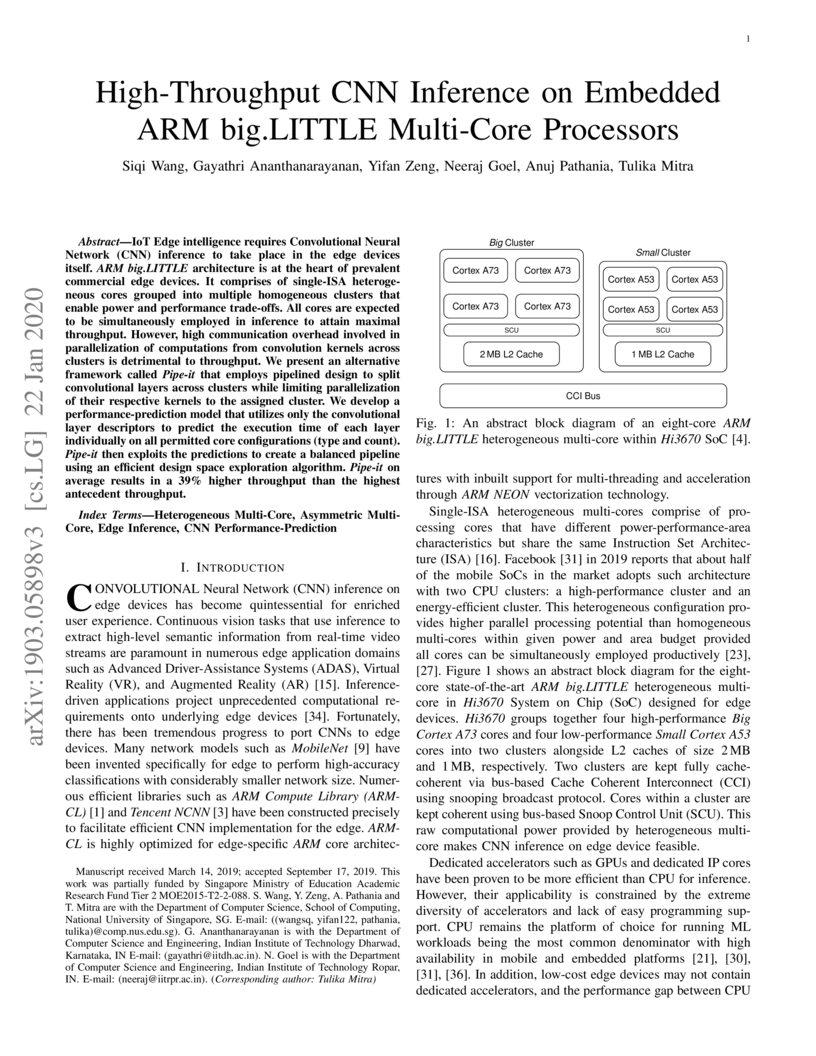

IoT Edge intelligence requires Convolutional Neural Network (CNN) inference to take place in the edge devices itself. ARM this http URL architecture is at the heart of prevalent commercial edge devices. It comprises of single-ISA heterogeneous cores grouped into multiple homogeneous clusters that enable power and performance trade-offs. All cores are expected to be simultaneously employed in inference to attain maximal throughput. However, high communication overhead involved in parallelization of computations from convolution kernels across clusters is detrimental to throughput. We present an alternative framework called Pipe-it that employs pipelined design to split convolutional layers across clusters while limiting parallelization of their respective kernels to the assigned cluster. We develop a performance-prediction model that utilizes only the convolutional layer descriptors to predict the execution time of each layer individually on all permitted core configurations (type and count). Pipe-it then exploits the predictions to create a balanced pipeline using an efficient design space exploration algorithm. Pipe-it on average results in a 39% higher throughput than the highest antecedent throughput.

15 Oct 2025

In 6G systems, extremely large-scale antenna arrays operating at terahertz frequencies extend the near-field region to typical user distances from the base station, enabling near-field communication (NFC) with fine spatial resolution through beamfocusing. Existing multiuser NFC systems predominantly employ linear precoding techniques such as zero-forcing (ZF), which suffer from performance degradation due to the high transmit power required to suppress interference. This paper proposes a nonlinear precoding framework based on Dirty Paper Coding (DPC), which pre-cancels known interference to maximize the sum-rate performance. We formulate and solve the corresponding sum-rate maximization problems, deriving optimal power allocation strategies for both DPC and ZF schemes. Extensive simulations demonstrate that DPC achieves substantial sum-rate gains over ZF across various near-field configurations, with the most pronounced improvements observed for closely spaced users.

Concerning the recent notion of circular chromatic number of signed graphs, for each given integer k we introduce two signed bipartite graphs, each on 2k2−k+1 vertices, having shortest negative cycle of length 2k, and the circular chromatic number 4.

Each of the construction can be viewed as a bipartite analogue of the generalized Mycielski graphs on odd cycles, Mℓ(C2k+1). In the course of proving our result, we also obtain a simple proof of the fact that Mℓ(C2k+1) and some similar quadrangulations of the projective plane have circular chromatic number 4. These proofs have the advantage that they illuminate, in an elementary manner, the strong relation between algebraic topology and graph coloring problems.

Fake speech detection systems have become a necessity to combat against speech deepfakes. Current systems exhibit poor generalizability on out-of-domain speech samples due to lack to diverse training data. In this paper, we attempt to address domain generalization issue by proposing a novel speech representation using self-supervised (SSL) speech embeddings and the Modulation Spectrogram (MS) feature. A fusion strategy is used to combine both speech representations to introduce a new front-end for the classification task. The proposed SSL+MS fusion representation is passed to the AASIST back-end network. Experiments are conducted on monolingual and multilingual fake speech datasets to evaluate the efficacy of the proposed model architecture in cross-dataset and multilingual cases. The proposed model achieves a relative performance improvement of 37% and 20% on the ASVspoof 2019 and MLAAD datasets, respectively, in in-domain settings compared to the baseline. In the out-of-domain scenario, the model trained on ASVspoof 2019 shows a 36% relative improvement when evaluated on the MLAAD dataset. Across all evaluated languages, the proposed model consistently outperforms the baseline, indicating enhanced domain generalization.

08 Jul 2019

We study the dynamics of microscopic quantum correlations, viz., bipartite

entanglement and quantum discord between nearest neighbor sites, in Ising spin

chain with a periodically varying external magnetic field along the transverse

direction. Quantum correlations exhibit periodic revivals with the driving

cycles in the finite-size chain. The time of first revival is proportional to

the system size and is inversely proportional to the maximum group velocity of

Floquet quasi-particles. On the other hand, the local quantum correlations in

the infinite chain may get saturated to non-zero values after a sufficiently

large number of driving cycles. Moreover, we investigate the convergence of

local density matrices, from which the quantum correlations under study

originate, towards the final steady-state density matrices as a function of

driving cycles. We find that the geometric distance, d, between the reduced

density matrices of non-equilibrium state and steady-state obeys a power-law

scaling of the form d∼n−B, where n is the number of driving cycles

and B is the scaling exponent. The steady-state quantum correlations are

studied as a function of time period of the driving field and are marked by the

presence of prominent peaks in frequency domain. The steady-state features can

be further understood by probing band structures of Floquet Hamiltonian and

purity of the bipartite state between nearest neighbor sites. Finally, we

compare the steady-state values of the local quantum correlations with that of

the canonical Gibbs ensemble and infer about their canonical ergodic

properties. Moreover, we identify generic features in the ergodic properties

depending upon the quantum phases of the initial state and the pathway of

repeated driving that may be within the same quantum phase or across two

different equilibrium phases.

We study a goal-oriented communication system in which a source monitors an environment that evolves as a discrete-time, two-state Markov chain. At each time slot, a controller decides whether to sample the environment and if so whether to transmit a raw or processed sample, to the controller. Processing improves transmission reliability over an unreliable wireless channel, but incurs an additional cost. The objective is to minimize the long-term average age of information (AoI), subject to constraints on the costs incurred at the source and the cost of actuation error (CAE), a semantic metric that assigns different penalties to different actuation errors. Although reducing AoI can potentially help reduce CAE, optimizing AoI alone is insufficient, as it overlooks the evolution of the underlying process. For instance, faster source dynamics lead to higher CAE for the same average AoI, and different AoI trajectories can result in markedly different CAE under identical average AoI. To address this, we propose a stationary randomized policy that achieves an average AoI within a bounded multiplicative factor of the optimal among all feasible policies. Extensive numerical experiments are conducted to characterize system behavior under a range of parameters. These results offer insights into the feasibility of the optimization problem, the structure of near-optimal actions, and the fundamental trade-offs between AoI, CAE, and the costs involved.

Bayesian Optimization (BO) is used to find the global optima of black box functions. In this work, we propose a practical BO method of function compositions where the form of the composition is known but the constituent functions are expensive to evaluate. By assuming an independent Gaussian process (GP) model for each of the constituent black-box function, we propose Expected Improvement (EI) and Upper Confidence Bound (UCB) based BO algorithms and demonstrate their ability to outperform not just vanilla BO but also the current state-of-art algorithms. We demonstrate a novel application of the proposed methods to dynamic pricing in revenue management when the underlying demand function is expensive to evaluate.

There are no more papers matching your filters at the moment.