Insight Centre for Data AnalyticsDSI

The stock market is a fundamental component of financial systems, reflecting economic health, providing investment opportunities, and influencing global dynamics. Accurate stock market predictions can lead to significant gains and promote better investment decisions. However, predicting stock market trends is challenging due to their non-linear and stochastic nature. This study investigates the efficacy of advanced deep learning models for short-term trend forecasting using daily and hourly closing prices from the S&P 500 index and the Brazilian ETF EWZ. The models explored include Temporal Convolutional Networks (TCN), Neural Basis Expansion Analysis for Time Series Forecasting (N-BEATS), Temporal Fusion Transformers (TFT), Neural Hierarchical Interpolation for Time Series Forecasting (N-HiTS), and Time-series Dense Encoder (TiDE). Furthermore, we introduce the Extended Long Short-Term Memory for Time Series (xLSTM-TS) model, an xLSTM adaptation optimised for time series prediction. Wavelet denoising techniques were applied to smooth the signal and reduce minor fluctuations, providing cleaner data as input for all approaches. Denoising significantly improved performance in predicting stock price direction. Among the models tested, xLSTM-TS consistently outperformed others. For example, it achieved a test accuracy of 72.82% and an F1 score of 73.16% on the EWZ daily dataset. By leveraging advanced deep learning models and effective data preprocessing techniques, this research provides valuable insights into the application of machine learning for market movement forecasting, highlighting both the potential and the challenges involved.
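
As a rough illustration of the denoising step described above, the following sketch applies a one-level Haar wavelet transform with soft thresholding and then derives up/down direction labels. The abstract does not say which wavelet, decomposition depth, or threshold the study used, so the Haar wavelet, the single level, the `threshold` value, and the function names `haar_denoise` and `direction_labels` are all assumptions made for brevity.

```python
import math


def haar_denoise(prices, threshold):
    """One-level Haar wavelet denoising with soft thresholding.

    Assumes an even number of samples. `threshold` is a hypothetical
    tuning parameter, not a value taken from the study.
    """
    if len(prices) % 2 != 0:
        raise ValueError("expected an even number of samples")
    root2 = math.sqrt(2.0)
    smoothed = []
    for i in range(0, len(prices), 2):
        a, b = prices[i], prices[i + 1]
        approx = (a + b) / root2  # low-frequency (trend) coefficient
        detail = (a - b) / root2  # high-frequency (fluctuation) coefficient
        # Soft threshold: shrink the detail coefficient toward zero.
        shrunk = math.copysign(max(abs(detail) - threshold, 0.0), detail)
        # Inverse transform back to two samples.
        smoothed.append((approx + shrunk) / root2)
        smoothed.append((approx - shrunk) / root2)
    return smoothed


def direction_labels(series):
    """Label each step 1 if the next value is higher, otherwise 0."""
    return [1 if nxt > cur else 0 for cur, nxt in zip(series, series[1:])]


# Illustrative closing prices (made up for this example).
prices = [100.0, 101.0, 100.5, 102.0, 103.0, 102.8]
denoised = haar_denoise(prices, threshold=0.5)
labels = direction_labels(denoised)
```

With a threshold of zero the series is returned unchanged, and with a very large threshold each pair of samples collapses to its mean, which is the smoothing effect the abstract refers to.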

This paper investigates applying general-purpose Large Language Models (LLMs) for enhancing recommendation diversity through a zero-shot re-ranking process. The study demonstrates that LLMs can interpret and perform diversity-aware re-ranking, achieving pure diversity metrics comparable to traditional methods while sacrificing more relevance and incurring higher computational and monetary costs.

Researchers at University College Dublin and Accenture Labs introduced group counterfactual explanations and an algorithm for their generation, enabling AI systems to explain multiple similar predictions with a single, coherent explanation. A large user study demonstrated these group explanations improve user accuracy, confidence, satisfaction, and trust compared to individual counterfactuals, particularly when users are explicitly aware of the grouping.

Bayesian Additive Regression Trees (BART) is a tree-based machine learning method that has been successfully applied to regression and classification problems. BART assumes regularisation priors on a set of trees that work as weak learners and is very flexible for predicting in the presence of non-linearity and high-order interactions. In this paper, we introduce an extension of BART, called Model Trees BART (MOTR-BART), that considers piecewise linear functions at node levels instead of piecewise constants. In MOTR-BART, rather than having a unique value at node level for the prediction, a linear predictor is estimated considering the covariates that have been used as the split variables in the corresponding tree. In our approach, local linearities are captured more efficiently and fewer trees are required to achieve equal or better performance than BART. Via simulation studies and real data applications, we compare MOTR-BART to its main competitors. R code for MOTR-BART implementation is available at this https URL
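
The difference between a piecewise-constant leaf and a piecewise-linear leaf can be sketched on a single split. The example below is not the MOTR-BART sampler: it uses ordinary least squares inside each leaf instead of Bayesian priors and MCMC, one tree instead of a sum of trees, and a fixed split point. All names (`fit_constant_leaf`, `fit_linear_leaf`, `predict`) and the data are hypothetical.

```python
def fit_constant_leaf(ys):
    """Constant leaf: a single value, here simply the mean response."""
    return sum(ys) / len(ys)


def fit_linear_leaf(xs, ys):
    """Linear leaf: least-squares intercept and slope on the split variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        return mean_y, 0.0
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return mean_y - slope * mean_x, slope


# Toy data with a different linear trend on each side of x = 5.
xs = list(range(10))
ys = [2.0 * x if x < 5 else 20.0 - x for x in xs]
split = 5  # assumed split point on the single covariate

leaves = {}
for name, keep in (("left", lambda x: x < split), ("right", lambda x: x >= split)):
    px = [x for x in xs if keep(x)]
    py = [y for x, y in zip(xs, ys) if keep(x)]
    leaves[name] = {
        "constant": fit_constant_leaf(py),
        "linear": fit_linear_leaf(px, py),
    }


def predict(x, kind):
    """Route x to its leaf and apply the chosen leaf model."""
    leaf = leaves["left" if x < split else "right"]
    if kind == "constant":
        return leaf["constant"]
    intercept, slope = leaf["linear"]
    return intercept + slope * x
```

On this toy data the linear leaves reproduce the trend exactly with one split, whereas constant leaves would need many more splits (or trees) to approximate it, which is the intuition behind needing fewer trees.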

Researchers from Maynooth University developed GP-BART, an extension of Bayesian Additive Regression Trees (BART) that incorporates Gaussian Processes at terminal nodes and employs rotated splitting rules. This method generates smooth, piecewise-defined functions with explicit covariance structures and more flexible decision boundaries, resulting in improved predictive accuracy and uncertainty calibration across various datasets.

Recently, the use of synthetic datasets based on game engines has been shown to improve the performance of several tasks in computer vision. However, these datasets are typically only appropriate for the specific domains depicted in computer games, such as urban scenes involving vehicles and people. In this paper, we present an approach to generate synthetic datasets for object counting for any domain without the need for photo-realistic techniques manually generated by expensive teams of 3D artists. We introduce a domain randomization approach for object counting based on synthetic datasets that are quick and inexpensive to generate. We deliberately avoid photorealism and drastically increase the variability of the dataset, producing images with random textures and 3D transformations, which improves generalization. Experiments show that our method facilitates good performance on various real-world object counting datasets for multiple domains: people, vehicles, penguins, and fruit. The source code is available at: this https URL

Spell checking and morphological analysis are two fundamental tasks in text and natural language processing and are addressed in the early stages of the development of language technology. Despite previous efforts, there has been no progress on open-source tools of this kind for Sorani Kurdish, also known as Central Kurdish, a less-resourced language. In this paper, we present our efforts in annotating a lexicon with morphosyntactic tags and extracting morphological rules of Sorani Kurdish to build a morphological analyzer, a stemmer and a spell-checking system using Hunspell. This implementation can be used by researchers for further developments in the field and can be integrated into text editors under a publicly available license.

23 Jul 2025

With the rise of smart mobility and shared e-mobility services, numerous advanced technologies have been applied to this field. Cloud-based traffic simulation solutions have flourished, offering increasingly realistic representations of the evolving mobility landscape. LLMs have emerged as pioneering tools, providing robust support for various applications, including intelligent decision-making, user interaction, and real-time traffic analysis. As user demand for e-mobility continues to grow, delivering comprehensive end-to-end solutions has become crucial. In this paper, we present a cloud-based, LLM-powered shared e-mobility platform, integrated with a mobile application for personalized route recommendations. The optimization module is evaluated based on travel time and cost across different traffic scenarios. Additionally, the LLM-powered RAG framework is evaluated at the schema level for different users, using various evaluation methods. Schema-level RAG with XiYanSQL achieves an average execution accuracy of 0.81 on system operator queries and 0.98 on user queries.
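
Execution accuracy for generated SQL is commonly computed by running the predicted query and a reference query against the same database and comparing the returned rows. The abstract does not give the platform's exact definition, so the sketch below is one plausible reading; the `bikes` table, its columns, and the function name `execution_accuracy` are hypothetical.

```python
import sqlite3


def execution_accuracy(conn, pairs):
    """Fraction of (predicted_sql, gold_sql) pairs that return the same rows."""
    hits = 0
    for predicted, gold in pairs:
        try:
            got = sorted(conn.execute(predicted).fetchall())
        except sqlite3.Error:
            continue  # a predicted query that fails to run counts as a miss
        want = sorted(conn.execute(gold).fetchall())
        hits += got == want
    return hits / len(pairs)


# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE bikes (id INTEGER, station TEXT, battery INTEGER)")
conn.executemany(
    "INSERT INTO bikes VALUES (?, ?, ?)",
    [(1, "A", 80), (2, "A", 15), (3, "B", 60)],
)

pairs = [
    # Different SQL text, same result set: counted as correct.
    ("SELECT id FROM bikes WHERE battery > 50",
     "SELECT id FROM bikes WHERE battery >= 60"),
    # Different result: counted as incorrect.
    ("SELECT COUNT(*) FROM bikes",
     "SELECT COUNT(*) FROM bikes WHERE station = 'A'"),
]
score = execution_accuracy(conn, pairs)
```

Comparing result sets rather than query strings means two differently worded queries are scored as equivalent when they return the same rows on the test database.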

University of Washington; University of Amsterdam; University of Waterloo; University College London; Fudan University; Mila - Quebec AI Institute; University of Electronic Science and Technology of China; University of Edinburgh; Google Research; University of British Columbia; Lancaster University; Technical University of Munich; Saarland University; Montclair State University; University of Ibadan; University of Porto; RIKEN Center for AIP; Instituto Politécnico Nacional; Bayero University Kano; Kwame Nkrumah University of Science and Technology; Insight Centre for Data Analytics; Jülich Supercomputing Centre; Kaduna State University; Lelapa AI; Kenyatta University; University of Zambia; Nnamdi Azikiwe University; Accra Institute of Technology; South African Centre for Digital Language Resources; Research Center of Intelligent Machines, McGill University; Tom Mboya University; Ai4InnovrCollege de Rebero; African Master in Machine Intelligence; GalsenAI

African languages have far less in-language content available digitally, making it challenging for question answering systems to satisfy the information needs of users. Cross-lingual open-retrieval question answering (XOR QA) systems -- those that retrieve answer content from other languages while serving people in their native language -- offer a means of filling this gap. To this end, we create AfriQA, the first cross-lingual QA dataset with a focus on African languages. AfriQA includes 12,000+ XOR QA examples across 10 African languages. While previous datasets have focused primarily on languages where cross-lingual QA augments coverage from the target language, AfriQA focuses on languages where cross-lingual answer content is the only high-coverage source of answer content. Because of this, we argue that African languages are one of the most important and realistic use cases for XOR QA. Our experiments demonstrate the poor performance of automatic translation and multilingual retrieval methods. Overall, AfriQA proves challenging for state-of-the-art QA models. We hope that the dataset enables the development of more equitable QA technology.

10 Jul 2020

Bayesian inference for models with intractable likelihood functions represents a challenging suite of problems in modern statistics. In this work we analyse the Conway-Maxwell-Poisson (COM-Poisson) distribution, a two parameter generalisation of the Poisson distribution. COM-Poisson regression modelling allows the flexibility to model dispersed count data as part of a generalised linear model (GLM) with a COM-Poisson response, where exogenous covariates control the mean and dispersion level of the response. The major difficulty with COM-Poisson regression is that the likelihood function contains multiple intractable normalising constants and is not amenable to standard inference and MCMC techniques. Recent work by Chanialidis et al. (2017) has seen the development of a sampler to draw random variates from the COM-Poisson likelihood using a rejection sampling algorithm. We provide a new rejection sampler for the COM-Poisson distribution which significantly reduces the CPU time required to perform inference for COM-Poisson regression models. A novel extension of this work shows that for any intractable likelihood function with an associated rejection sampler it is possible to construct unbiased estimators of the intractable likelihood which proves useful for model selection or for use within pseudo-marginal MCMC algorithms (Andrieu and Roberts, 2009). We demonstrate all of these methods on a real-world dataset of takeover bids.
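
To make the intractable normalising constant concrete: in its standard parameterisation the COM-Poisson probability mass function is P(Y = y) = λ^y / ((y!)^ν Z(λ, ν)), where Z(λ, ν) = Σ_{j≥0} λ^j / (j!)^ν has no closed form in general. The sketch below approximates Z by truncating the series, which is a simple illustration and not the rejection sampler or the unbiased estimator described above; the truncation length `terms` is an assumption.

```python
import math


def com_poisson_log_z(lam, nu, terms=200):
    """Truncated log normalising constant: log sum_j lam^j / (j!)^nu."""
    logs = [j * math.log(lam) - nu * math.lgamma(j + 1) for j in range(terms)]
    peak = max(logs)
    # Log-sum-exp for numerical stability.
    return peak + math.log(sum(math.exp(v - peak) for v in logs))


def com_poisson_pmf(y, lam, nu, terms=200):
    """P(Y = y) under the truncated normalising constant."""
    log_p = y * math.log(lam) - nu * math.lgamma(y + 1)
    return math.exp(log_p - com_poisson_log_z(lam, nu, terms))


# nu = 1 recovers the Poisson distribution, for which Z = exp(lam).
poisson_check = com_poisson_log_z(3.0, 1.0)

# nu > 1 gives under-dispersion, nu < 1 gives over-dispersion.
p_under = com_poisson_pmf(2, 3.0, 2.0)
p_over = com_poisson_pmf(2, 3.0, 0.5)
```

In a regression setting every observation has its own λ and ν, so a separate constant of this kind appears for each data point, which is why the likelihood is described as containing multiple intractable normalising constants.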

Complex Event Processing (CEP) is an event processing paradigm to perform real-time analytics over streaming data and match high-level event patterns. Presently, CEP is limited to processing structured data streams. Video streams are complicated due to their unstructured data model, which limits the ability of CEP systems to perform matching over them. This work introduces a graph-based structure for continuously evolving video streams, which enables the CEP system to query complex video event patterns. We propose the Video Event Knowledge Graph (VEKG), a graph-driven representation of video data. VEKG models video objects as nodes and their relationship interaction as edges over time and space. It creates a semantic knowledge representation of video data derived from the detection of high-level semantic concepts from the video using an ensemble of deep learning models. A CEP-based state optimization, the VEKG-Time Aggregated Graph (VEKG-TAG), is proposed over the VEKG representation for faster event detection. VEKG-TAG is a spatiotemporal graph aggregation method that provides a summarized view of the VEKG graph over a given time length. We defined a set of nine event pattern rules for two domains (Activity Recognition and Traffic Management), which act as queries and are applied over VEKG graphs to discover complex event patterns. To show the efficacy of our approach, we performed extensive experiments over 801 video clips across 10 datasets. The proposed VEKG approach was compared with other state-of-the-art methods and was able to detect complex event patterns over videos with F-Score ranging from 0.44 to 0.90. In the given experiments, the optimized VEKG-TAG was able to reduce 99% and 93% of VEKG nodes and edges, respectively, with 5.19X faster search time, achieving sub-second median latency of 4-20 milliseconds.
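
The idea of collapsing per-frame graphs into a time-aggregated view can be sketched with plain dictionaries. The data layout below (a list of `(timestamp, edges)` frames with `(subject, relation, object)` triples) and the function name `aggregate_frames` are hypothetical and are not taken from the VEKG implementation.

```python
from collections import defaultdict


def aggregate_frames(frames):
    """Collapse per-frame graphs into a single time-aggregated graph.

    Each distinct node is stored once, and each distinct edge keeps the
    list of timestamps at which it was observed.
    """
    nodes = set()
    edge_times = defaultdict(list)
    for timestamp, edges in frames:
        for subj, rel, obj in edges:
            nodes.update((subj, obj))
            edge_times[(subj, rel, obj)].append(timestamp)
    return nodes, dict(edge_times)


# Three frames of a made-up clip: a person approaches and enters a car.
frames = [
    (0, [("person_1", "near", "car_1")]),
    (1, [("person_1", "near", "car_1")]),
    (2, [("person_1", "inside", "car_1")]),
]
nodes, edges = aggregate_frames(frames)

# A simple pattern check over the aggregated graph:
# was "near" observed before "inside" for the same pair of objects?
near = edges.get(("person_1", "near", "car_1"), [])
inside = edges.get(("person_1", "inside", "car_1"), [])
entered = bool(near and inside and min(near) < min(inside))
```

Here six per-frame node instances and three per-frame edges reduce to two nodes and two edges, and the pattern query runs against the smaller structure.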

Epilepsy is a neurological disorder, and electroencephalography (EEG) is a commonly used clinical approach for its detection. Manual inspection of EEG brain signals is a time-consuming and laborious process, which puts a heavy burden on neurologists and affects their performance. Several automatic techniques have been proposed using traditional approaches to assist neurologists in detecting binary epilepsy scenarios, e.g. seizure vs. non-seizure or normal vs. ictal. These methods do not perform well when classifying the ternary case, e.g. ictal vs. normal vs. inter-ictal; the maximum accuracy for this case by state-of-the-art methods is 97±1%. To overcome this problem, we propose a system based on deep learning, which is an ensemble of pyramidal one-dimensional convolutional neural network (P-1D-CNN) models. In a CNN model, the bottleneck is the large number of learnable parameters. P-1D-CNN works on the concept of a refinement approach and results in 60% fewer parameters compared to traditional CNN models. Further, to overcome the limitations of a small amount of data, we propose augmentation schemes for learning the P-1D-CNN model. In almost all cases concerning epilepsy detection, the proposed system gives an accuracy of 99.1±0.9% on the University of Bonn dataset.

Evaluating the quality of videos generated from text-to-video (T2V) models is important if they are to produce plausible outputs that convince a viewer of their authenticity. We examine some of the metrics used in this area and highlight their limitations. The paper presents a dataset of more than 1,000 generated videos from 5 very recent T2V models on which some of those commonly used quality metrics are applied. We also include extensive human quality evaluations on those videos, allowing the relative strengths and weaknesses of metrics, including human assessment, to be compared. The contribution is an assessment of commonly used quality metrics, and a comparison of their performances and the performance of human evaluations on an open dataset of T2V videos. Our conclusion is that naturalness and semantic matching with the text prompt used to generate the T2V output are important but there is no single measure to capture these subtleties in assessing T2V model output.

Many researchers have made use of the Wikipedia network for relatedness and similarity tasks. However, most approaches use only the most recent information and not historical changes in the network. We provide an analysis of entity relatedness using temporal graph-based approaches over different versions of the Wikipedia article link network and DBpedia, which is an open-source knowledge base extracted from Wikipedia. We consider creating the Wikipedia article link network as both a union and intersection of edges over multiple time points and present a novel variation of the Jaccard index to weight edges based on their transience. We evaluate our results against the KORE dataset, which was created in 2010, and show that using the 2010 Wikipedia article link network produces the strongest result, suggesting that semantic similarity is time sensitive. We then show that integrating multiple time frames in our methods can give a better overall similarity demonstrating that temporal evolution can have an important effect on entity relatedness.
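
Building a link network as the union or the intersection of edges over several time points, and then scoring relatedness by neighbour overlap, can be sketched as follows. The example uses the standard Jaccard index, not the paper's transience-weighted variation (which the abstract does not define); the snapshot years, entity names, and function names are hypothetical.

```python
def jaccard(a, b):
    """Standard Jaccard index of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def combine_snapshots(snapshots, mode):
    """Union or intersection of directed edge sets across time points."""
    edge_sets = [set(s) for s in snapshots]
    if mode == "union":
        return set().union(*edge_sets)
    if mode == "intersection":
        return set.intersection(*edge_sets)
    raise ValueError(f"unknown mode: {mode}")


def neighbours(edges, node):
    """Targets of outgoing links from `node`."""
    return {dst for src, dst in edges if src == node}


# Two made-up snapshots of an article link network.
links_2008 = {("A", "X"), ("A", "Y"), ("B", "X")}
links_2010 = {("A", "X"), ("B", "X"), ("B", "Z")}

union_net = combine_snapshots([links_2008, links_2010], "union")
inter_net = combine_snapshots([links_2008, links_2010], "intersection")

score_union = jaccard(neighbours(union_net, "A"), neighbours(union_net, "B"))
score_inter = jaccard(neighbours(inter_net, "A"), neighbours(inter_net, "B"))
```

The two constructions give different relatedness scores for the same pair of entities (1/3 for the union, 1.0 for the intersection in this toy case), which illustrates why the choice of time frames matters.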

10 Jan 2023

Competitive balance is the subject of much interest in the sports analytics literature and beyond. In this paper, we develop a statistical network model based on an extension of the stochastic block model to assess the balance between teams in a league. Here we represent the outcome of all matches in a football season as a dense network with nodes identified by teams and categorical edges representing the outcome of each game as a win, draw or a loss. The main focus and motivation for this paper is to provide a statistical framework to assess the issue of competitive balance in the context of the English First Division / Premier League over more than 40 seasons. The Premier League is arguably one of the most popular leagues in the world, in terms of its global reach and the revenue which it generates. Therefore it is of wide interest to assess its competitiveness. Our analysis provides evidence suggesting a structural change around the early 2000s from a reasonably balanced league to a two-tier league.

This paper presents a systematic comparison of five counterfactual explanation methods for text classifiers, including traditional and LLM-based approaches. The study identifies trade-offs between explanation validity, sparsity, and linguistic plausibility, demonstrating that specialized methods like CLOSS often produce more valid counterfactuals while LLM-based methods excel in linguistic naturalness.

Question answering (QA) models have shown compelling results in the task of Machine Reading Comprehension (MRC). Recently these systems have proved to perform better than humans on held-out test sets of datasets such as SQuAD, but their robustness is not guaranteed. The brittleness of QA models is exposed by a drop in performance when they are evaluated on adversarially generated examples. In this study, we explore the robustness of MRC models to entity renaming, with entities from low-resource regions such as Africa. We propose EntSwap, a method for test-time perturbations, to create a test set whose entities have been renamed. In particular, we rename entities of type country, person, nationality, location, organization, and city to create AfriSQuAD2. Using the perturbed test set, we evaluate the robustness of three popular MRC models. We find that, compared to base models, large models perform comparatively well on novel entities. Furthermore, our analysis indicates that entities of type person are highly challenging for the MRC models.
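
A test-time entity-renaming perturbation of this kind can be sketched as a consistent string substitution across a SQuAD-style example. This is not the EntSwap implementation: it assumes the entity mentions and their replacements are already known (a real pipeline would need entity recognition and typing), and the example text, names, and the function `swap_entities` are hypothetical.

```python
def swap_entities(example, mapping):
    """Rename entities consistently in context, question and answers."""

    def rename(text):
        for old, new in mapping.items():
            text = text.replace(old, new)
        return text

    return {
        "context": rename(example["context"]),
        "question": rename(example["question"]),
        "answers": [rename(answer) for answer in example["answers"]],
    }


# Made-up example and replacement names, for illustration only.
example = {
    "context": "Marie Curie was born in Warsaw.",
    "question": "Where was Marie Curie born?",
    "answers": ["Warsaw"],
}
mapping = {"Marie Curie": "Ngozi Adeyemi", "Warsaw": "Kumasi"}
perturbed = swap_entities(example, mapping)
```

Applying the same mapping to the context, the question, and the answers keeps the perturbed example answerable, so any drop in model accuracy can be attributed to the unfamiliar entity names rather than to a broken example.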

Interactive constraint systems often suffer from infeasibility (no solution) due to conflicting user constraints. A common approach to recovering from infeasibility is to eliminate the constraints that cause the conflicts in the system. This approach allows the system to provide an explanation of the form: "if the user is willing to drop some of their constraints, there exists a solution". However, one can criticise this form of explanation as not being very informative. A counterfactual explanation is a type of explanation that can provide a basis for the user to recover feasibility by helping them understand which changes can be applied to their existing constraints rather than removing them. This approach has been extensively studied in the machine learning field, but requires a more thorough investigation in the context of constraint satisfaction. We propose an iterative method based on conflict detection and maximal relaxations in over-constrained constraint satisfaction problems to help compute a counterfactual explanation.

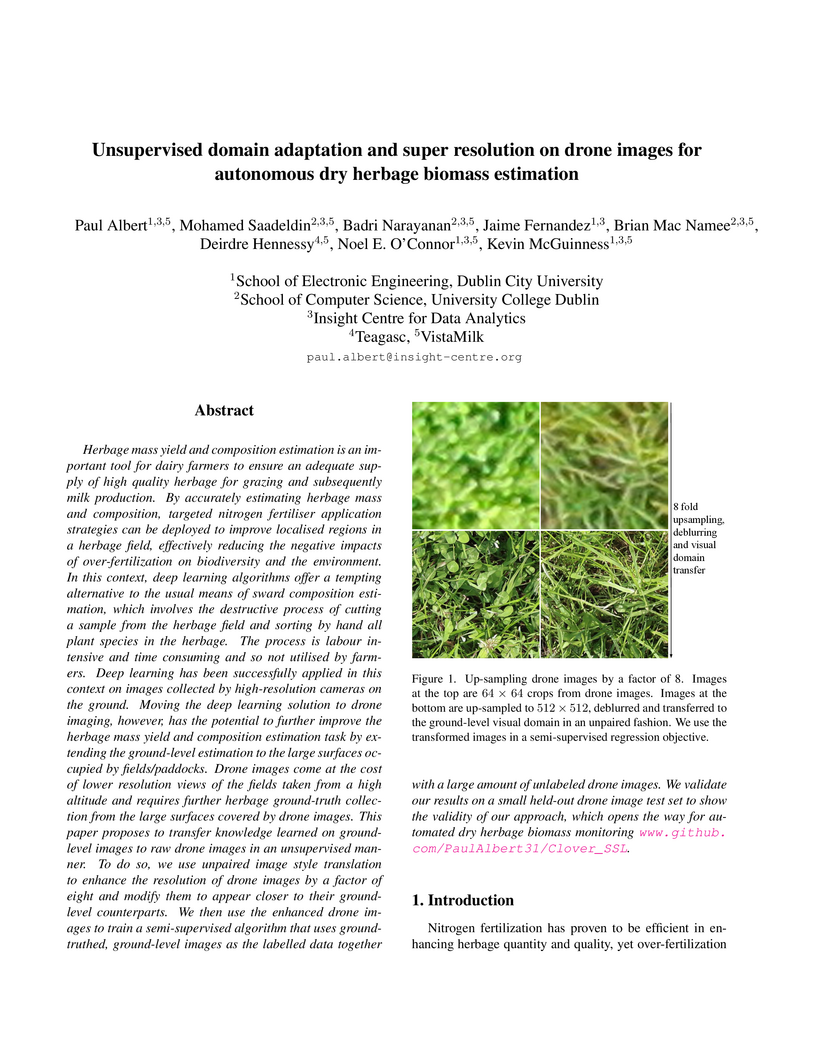

Herbage mass yield and composition estimation is an important tool for dairy farmers to ensure an adequate supply of high-quality herbage for grazing and subsequently milk production. By accurately estimating herbage mass and composition, targeted nitrogen fertiliser application strategies can be deployed to improve localised regions in a herbage field, effectively reducing the negative impacts of over-fertilization on biodiversity and the environment. In this context, deep learning algorithms offer a tempting alternative to the usual means of sward composition estimation, which involves the destructive process of cutting a sample from the herbage field and sorting by hand all plant species in the herbage. The process is labour-intensive and time-consuming and so not utilised by farmers. Deep learning has been successfully applied in this context on images collected by high-resolution cameras on the ground. Moving the deep learning solution to drone imaging, however, has the potential to further improve the herbage mass yield and composition estimation task by extending the ground-level estimation to the large surfaces occupied by fields/paddocks. Drone images come at the cost of lower resolution views of the fields taken from a high altitude and require further herbage ground-truth collection from the large surfaces covered by drone images. This paper proposes to transfer knowledge learned on ground-level images to raw drone images in an unsupervised manner. To do so, we use unpaired image style translation to enhance the resolution of drone images by a factor of eight and modify them to appear closer to their ground-level counterparts. We then ... this http URL.

Wuhan University of Technology; University of Amsterdam; University of Waterloo; University College London; Fudan University; Mila - Quebec AI Institute; McGill University; Lancaster University; University of California, Davis; Technical University of Munich; Saarland University; Iowa State University; Montclair State University; Paderborn University; Aston University; University of Porto; Makerere University; Haramaya University; University of Rwanda; Insight Centre for Data Analytics; Ahmadu Bello University; Kaduna State University; National Open University of Nigeria; Lelapa AI; AIMS; Lanfrica; Nnamdi Azikiwe University; Somali National University; Jamhuriya University; The College of Saint Rose; Instituto Politécnico Nacional; Luleå University of Technology; Tanzania Data Lab; BIUST; PAUSTI; Dire Dawa University Institute of Technology; Deutschzentrum an der Universität Burundi

African languages are severely under-represented in NLP research due to lack of datasets covering several NLP tasks. While there are individual language specific datasets that are being expanded to different tasks, only a handful of NLP tasks (e.g. named entity recognition and machine translation) have standardized benchmark datasets covering several geographical and typologically-diverse African languages. In this paper, we develop MasakhaNEWS -- a new benchmark dataset for news topic classification covering 16 languages widely spoken in Africa. We provide an evaluation of baseline models by training classical machine learning models and fine-tuning several language models. Furthermore, we explore several alternatives to full fine-tuning of language models that are better suited for zero-shot and few-shot learning such as cross-lingual parameter-efficient fine-tuning (like MAD-X), pattern exploiting training (PET), prompting language models (like ChatGPT), and prompt-free sentence transformer fine-tuning (SetFit and Cohere Embedding API). Our evaluation in zero-shot setting shows the potential of prompting ChatGPT for news topic classification in low-resource African languages, achieving an average performance of 70 F1 points without leveraging additional supervision like MAD-X. In few-shot setting, we show that with as little as 10 examples per label, we achieved more than 90% (i.e. 86.0 F1 points) of the performance of full supervised training (92.6 F1 points) leveraging the PET approach.
