Institut Teknologi Sepuluh Nopember

Despite progress in cross-domain few-shot learning, a model pre-trained with DINO and combined with a prototypical classifier still outperforms the latest SOTA methods. A crucial limitation to overcome is that updating too many transformer parameters leads to overfitting, because labeled samples are scarce. To address this challenge, we propose a new concept, coalescent projection, as an effective successor to soft prompts. Additionally, we propose a novel pseudo-class generation method, combined with self-supervised transformations, that relies solely on the base domain to prepare the network for unseen samples from different domains. Comprehensive experiments on the extreme domain-shift problem of the BSCD-FSL benchmark demonstrate the effectiveness of the proposed method. Our code is published at this https URL.
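A prototypical classifier of the kind paired with the DINO backbone above can be sketched in a few lines. This is a minimal sketch assuming the support and query embeddings have already been extracted by a frozen backbone (small NumPy arrays stand in for DINO features) and using squared Euclidean distance as in the original Prototypical Networks; the paper's classifier may use a different distance (e.g., cosine), and coalescent projection is not shown.

```python
import numpy as np


def class_prototypes(support_feats, support_labels, n_classes):
    """Prototype of each class = mean embedding of its labeled support samples."""
    return np.stack(
        [support_feats[support_labels == c].mean(axis=0) for c in range(n_classes)]
    )


def prototypical_predict(query_feats, prototypes):
    """Classify queries by the nearest prototype (squared Euclidean distance).

    Returns predicted labels and softmax probabilities over negative distances.
    """
    d2 = ((query_feats[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=2)
    logits = -d2
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.argmax(axis=1), probs


# Toy 2-way 2-shot episode with 2-D "embeddings" (stand-ins for DINO features).
support = np.array([[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]])
labels = np.array([0, 0, 1, 1])
protos = class_prototypes(support, labels, n_classes=2)
pred, _ = prototypical_predict(np.array([[1.0, 1.0], [9.0, 9.0]]), protos)
```

In this sketch only the prototypes depend on the support set, so no backbone parameters are updated at test time.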

Researchers provide a Systematization of Knowledge for timeline-based event reconstruction in digital forensics, introducing a unified visual framework, the TER-Model, alongside harmonized terminology and a comprehensive classification of challenges. This work aims to structure the discipline and guide future research efforts effectively.

Tianjin University; University of Toronto; Carnegie Mellon University; New York University; National University of Singapore; Mila - Quebec AI Institute; Meta; Cohere; Capital One; SCB 10X; SEACrowd; AI Singapore; MBZUAI; The University of Manchester; Singapore University of Technology and Design; Beijing Academy of Artificial Intelligence (BAAI); Indian Statistical Institute, Kolkata; Brown University; Hanyang University; University of Bath; Chulalongkorn University; Universitas Gadjah Mada; University of the Philippines; Nara Institute of Science and Technology; Bandung Institute of Technology; Oracle; Institut Teknologi Sepuluh Nopember; Macau University of Science and Technology; Seoul National University of Science and Technology; Auburn University; Sony Group Corporation; Vidyasirimedhi Institute of Science and Technology; Polytechnique Montreal; King Mongkut's University of Technology Thonburi; Srinakharinwirot University; University of New Haven; Ton Duc Thang University; Brawijaya University; Universitas Pelita Harapan; Thammasat University; Ateneo de Manila University; Universitas Islam Indonesia; Monash University Indonesia; IndoNLP; University of Indonesia; Singapore Polytechnic; MOH Office for Healthcare Transformation; Allen AI; National University, Philippines; Graphcore; Binus University; Samsung R&D Institute Philippines; University of Illinois, Urbana-Champaign; Dataxet:Sonar; Faculty of Medicine Siriraj Hospital, Mahidol University; Wrocław Tech; Institute for Infocomm Research, Singapore; Works Applications

Southeast Asia (SEA) is a region of extraordinary linguistic and cultural diversity, yet it remains significantly underrepresented in vision-language (VL) research. As a result, artificial intelligence (AI) models often fail to capture SEA cultural nuances. To fill this gap, we present SEA-VL, an open-source initiative dedicated to developing high-quality, culturally relevant data for SEA languages. By involving contributors from SEA countries, SEA-VL aims to ensure better cultural relevance and diversity, fostering greater inclusivity of underrepresented languages in VL research. Beyond crowdsourcing, our initiative goes a step further by exploring the automatic collection of culturally relevant images through crawling and image generation. First, we find that image crawling achieves approximately 85% cultural relevance while being more cost- and time-efficient than crowdsourcing. Second, despite substantial progress in generative vision models, synthetic images remain unreliable at accurately reflecting SEA cultures: the generated images often fail to capture the nuanced traditions and cultural contexts of the region. In total, we gather 1.28M culturally relevant SEA images, a collection more than 50 times larger than other existing datasets. Through SEA-VL, we aim to bridge the representation gap in SEA, fostering the development of more inclusive AI systems that authentically represent diverse cultures across SEA.

17 Jun 2025

This paper introduces a novel sub-sampling block maxima technique to model and characterize environmental extreme risks. We examine the relationships between block size and block maxima statistics derived from the Gaussian and generalized Pareto distributions. We introduce a weighted least squares estimator for the extreme value index (EVI) and evaluate its performance using simulated autocorrelated data. We employ the second moment of block maxima for plateau finding in EVI and extremal index (EI) estimation, and present the effect of EI on the Kullback-Leibler divergence. The applicability of this approach is demonstrated across diverse environmental datasets, including meteorite landing mass, earthquake energy release, solar activity, and variations in Greenland's land snow cover and sea ice extent. Our method provides a sample-efficient framework, robust to temporal dependencies, that delivers actionable environmental extreme risk measures across different timescales. With its flexibility, sample efficiency, and limited reliance on subjective tuning, this approach is a useful tool for environmental extreme risk assessment and management.
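The basic ingredient, the block maxima of a series for a given block size, and a simple way to sub-sample them can be sketched as follows. This is an illustrative sketch, not the paper's estimator: the random-offset sub-sampling scheme and all function names are assumptions, and the weighted least squares EVI estimator and the plateau-finding step itself are not reproduced. The second moment of the block maxima, the statistic the abstract uses for plateau finding, is computed per block size on a toy AR(1) series.

```python
import numpy as np


def block_maxima(x, block_size):
    """Maxima of consecutive non-overlapping blocks; a trailing partial block is dropped."""
    x = np.asarray(x, dtype=float)
    n_blocks = len(x) // block_size
    return x[: n_blocks * block_size].reshape(n_blocks, block_size).max(axis=1)


def subsampled_block_maxima(x, block_size, n_subsamples, seed=0):
    """Hypothetical sub-sampling: repeat block-maxima extraction from random start offsets."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    parts = []
    for _ in range(n_subsamples):
        offset = int(rng.integers(0, block_size))  # shift the block boundaries
        parts.append(block_maxima(x[offset:], block_size))
    return np.concatenate(parts)


def second_moment_by_block_size(x, block_sizes, n_subsamples=20):
    """Mean squared block maximum for each block size (input for plateau finding)."""
    return {
        b: float(np.mean(subsampled_block_maxima(x, b, n_subsamples) ** 2))
        for b in block_sizes
    }


# Autocorrelated toy data: AR(1) process with coefficient 0.7.
rng = np.random.default_rng(1)
noise = rng.standard_normal(5000)
series = np.empty_like(noise)
series[0] = noise[0]
for t in range(1, len(noise)):
    series[t] = 0.7 * series[t - 1] + noise[t]

moments = second_moment_by_block_size(series, block_sizes=[10, 20, 50, 100])
```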

Analysing malware is important to understand how malicious software works and to develop appropriate detection and prevention methods. Dynamic analysis can overcome evasion techniques commonly used to bypass static analysis and provides insights into malware runtime activities. Much research on dynamic analysis has focused on machine-level information (e.g., CPU, memory, and network usage) to identify whether a machine is running malicious activities. However, a machine running malware does not mean that every process on it is malicious. If we can isolate the malicious process instead of the whole machine, we can kill that process while the machine keeps doing its job. Another challenge for dynamic malware detection research is that samples are executed on a machine with no background applications running. This is unrealistic, as a computer typically runs many benign (background) applications when a malware incident happens. Our experiment with machine-level data shows that the presence of background applications decreases the accuracy of previous state-of-the-art methods by about 20.12% on average. We also propose a process-level Recurrent Neural Network (RNN)-based detection model. Our model outperforms the machine-level detection model, with a 0.049 increase in detection rate and a false-positive rate below 0.1.
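The idea of process-level detection, scoring each process's own sequence of runtime measurements rather than the whole machine's, can be sketched with a minimal recurrent network. This is a hypothetical NumPy sketch: the Elman RNN cell, the per-process feature vector (e.g., CPU, memory, and network usage per time step), the process IDs, and the untrained random weights are all assumptions for illustration, not the paper's architecture or trained model.

```python
import numpy as np


class ProcessRNN:
    """Minimal Elman RNN mapping one process's feature sequence to P(malicious).

    Weights are random (untrained); in practice they would be learned from labeled traces.
    """

    def __init__(self, n_features, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        self.W_x = rng.normal(0.0, 0.1, (n_hidden, n_features))
        self.W_h = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        self.b_h = np.zeros(n_hidden)
        self.w_out = rng.normal(0.0, 0.1, n_hidden)
        self.b_out = 0.0

    def predict_proba(self, seq):
        """seq: array of shape (time_steps, n_features)."""
        h = np.zeros_like(self.b_h)
        for x_t in np.asarray(seq, dtype=float):
            h = np.tanh(self.W_x @ x_t + self.W_h @ h + self.b_h)
        logit = self.w_out @ h + self.b_out
        return float(1.0 / (1.0 + np.exp(-logit)))


def flag_processes(per_process_seqs, model, threshold=0.5):
    """Score every process independently so only suspicious ones need to be killed."""
    return {pid: model.predict_proba(seq) >= threshold for pid, seq in per_process_seqs.items()}


# Hypothetical traces: 10 time steps of (cpu, memory, network) per process ID.
rng = np.random.default_rng(42)
traces = {1001: rng.random((10, 3)), 1002: rng.random((10, 3))}
model = ProcessRNN(n_features=3, n_hidden=8)
flags = flag_processes(traces, model)
```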

06 May 2025

Large language models (LLMs) have seen widespread adoption in many domains, including digital forensics. While prior research has largely centered on case studies and examples demonstrating how LLMs can assist forensic investigations, deeper explorations remain limited; in particular, a standardized approach for precise performance evaluation is lacking. Inspired by the NIST Computer Forensic Tool Testing Program, this paper proposes a standardized methodology to quantitatively evaluate the application of LLMs to digital forensic tasks, specifically timeline analysis. The paper describes the components of the methodology, including the dataset, timeline generation, and ground truth development. Additionally, the paper recommends using BLEU and ROUGE metrics for the quantitative evaluation of LLMs in case studies or tasks involving timeline analysis. Experimental results using ChatGPT demonstrate that the proposed methodology can effectively evaluate LLM-based forensic timeline analysis. Finally, we discuss the limitations of applying LLMs to forensic timeline analysis.
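To make the recommended metrics concrete, the sketch below computes ROUGE-L (longest-common-subsequence F1) between an LLM-generated timeline entry and a ground-truth entry. It is a simplified version under stated assumptions: whitespace tokenization, lowercasing, no stemming, and a balanced F1; scores will differ from established ROUGE packages, and the timeline strings are hypothetical, not from the paper's dataset.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of token lists a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L F1 with whitespace tokens and lowercasing (no stemming)."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# Hypothetical timeline entries.
truth = "2023-05-01 10:02 user opened report.docx"
generated = "2023-05-01 10:02 opened report.docx"
score = rouge_l_f1(truth, generated)
```

Here the generated entry drops one token, so precision is 1 and recall is 4/5, giving an F1 of 8/9.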

14 Jul 2025

Direct and natural interaction, without additional devices such as joysticks, tablets, or wearable sensors, is essential for intuitive human-robot collaboration. In this paper, we present a lightweight deep learning-based hand gesture recognition system that enables humans to control collaborative robots naturally and efficiently. The model recognizes eight distinct hand gestures with only 1,103 parameters and a compact size of 22 KB, achieving an accuracy of 93.5%. To further optimize the model for real-world deployment on edge devices, we applied quantization and pruning using TensorFlow Lite, reducing the final model size to just 7 KB. The system was implemented and tested on a Universal Robots UR5 collaborative robot within a real-time robotic framework based on ROS2. The results demonstrate that even extremely lightweight models can deliver accurate and responsive hand gesture-based control for collaborative robots, opening new possibilities for natural human-robot interaction in constrained environments.
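The two compression steps mentioned above can be illustrated on a weight vector with the same parameter count as the model (1,103). This NumPy sketch shows magnitude pruning and symmetric per-tensor int8 quantization in their simplest form; it is not TensorFlow Lite's implementation, which the authors used, and the random weights and 50% sparsity are arbitrary assumptions. For scale, 1,103 float32 weights occupy 4,412 bytes and 1,103 int8 weights occupy 1,103 bytes, so the raw weights account for only part of the reported file sizes.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero out (at least) the `sparsity` fraction of weights with the smallest magnitudes.

    Ties at the threshold can zero a few extra weights.
    """
    w = np.asarray(weights, dtype=np.float32)
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w).astype(np.float32)


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: weights ~= scale * q with q in [-127, 127]."""
    w = np.asarray(weights, dtype=np.float32)
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.1, size=1103).astype(np.float32)  # same count as the model
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
```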

Recent developments in applied mathematics increasingly employ machine learning (ML), particularly supervised learning, to accelerate numerical computations such as solving nonlinear partial differential equations. In this work, we extend such techniques to objects of a more theoretical nature: the classification and structural analysis of fractal sets. Focusing on the Mandelbrot and Julia sets as principal examples, we demonstrate that supervised learning methods, including Classification and Regression Trees (CART), K-Nearest Neighbors (KNN), Multilayer Perceptrons (MLP), Recurrent Neural Networks with Long Short-Term Memory (LSTM) and Bidirectional LSTM (BiLSTM), Random Forests (RF), and Convolutional Neural Networks (CNN), can classify fractal points with significantly higher predictive accuracy and substantially lower computational cost than traditional numerical approaches such as the thresholding technique. These improvements are consistent across a range of models and evaluation metrics. Notably, KNN and RF exhibit the best overall performance, and comparative analyses between models (e.g., KNN vs. LSTM) suggest the presence of novel regularity properties in these mathematical structures. Collectively, our findings indicate that ML not only enhances classification efficiency but also offers promising avenues for generating new insights, intuitions, and conjectures within pure mathematics.
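The thresholding baseline and a KNN classifier can be sketched together. Assumptions: "thresholding" is taken to be the usual escape-time test (a point is labeled in the Mandelbrot set if |z| stays at most 2 for a cap of 100 iterations of z → z² + c), the features are just (Re c, Im c), and the KNN is a from-scratch NumPy version with k = 5 trained on a coarse grid; the paper's features, labels, and hyperparameters may differ.

```python
import numpy as np


def in_mandelbrot(c, max_iter=100, bound=2.0):
    """Escape-time thresholding: c is labeled 'in' if |z| stays <= bound for max_iter steps."""
    c = np.asarray(c, dtype=complex)
    z = np.zeros_like(c)
    alive = np.ones(c.shape, dtype=bool)
    for _ in range(max_iter):
        z[alive] = z[alive] ** 2 + c[alive]  # iterate only points that have not escaped
        alive &= np.abs(z) <= bound
    return alive


def make_grid_dataset(n=61, re_range=(-2.0, 1.0), im_range=(-1.5, 1.5)):
    """Label a regular grid of points with the thresholding rule (features: Re c, Im c)."""
    re, im = np.meshgrid(np.linspace(*re_range, n), np.linspace(*im_range, n))
    X = np.column_stack([re.ravel(), im.ravel()])
    y = in_mandelbrot(X[:, 0] + 1j * X[:, 1]).astype(float)
    return X, y


def knn_predict(train_x, train_y, query_x, k=5):
    """Majority vote among the k nearest training points (Euclidean distance)."""
    d = np.linalg.norm(query_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    return train_y[nearest].mean(axis=1) >= 0.5


X, y = make_grid_dataset()
pred = knn_predict(X, y, np.array([[-0.1, 0.0], [1.5, 1.5]]))
```

Once trained, the KNN answers a query with a neighbor lookup instead of running the iteration for that point.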

21 Apr 2023

The main objective of this study is to propose an enhanced wind power forecasting (EWPF) transformer model for handling power grid operations and boosting power market competition. Reliable large-scale integration of wind power relies in large part on accurate wind power forecasting (WPF). The proposed model is evaluated for single-step and multi-step WPF and compared with gated recurrent unit (GRU) and long short-term memory (LSTM) models on a wind power dataset. The results indicate that the proposed EWPF transformer model outperforms conventional recurrent neural network (RNN) models in time-series forecasting accuracy. In particular, the results reveal a minimum performance improvement of 5% and a maximum of 20% compared to LSTM and GRU. These results indicate that the EWPF transformer model is a promising alternative for wind power forecasting and has the potential to significantly improve the precision of WPF. The findings of this study have implications for energy producers and researchers in the field of WPF.
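Single-step and multi-step WPF differ only in the forecast horizon of each supervised sample. The sketch below builds (lookback, horizon) windows from a power series and computes a percentage improvement over a baseline; the window lengths and the error metric are assumptions, since the abstract does not state which metric the 5% to 20% figures refer to.

```python
import numpy as np


def make_windows(series, lookback, horizon):
    """Split a 1-D series into inputs of `lookback` steps and targets of the next `horizon` steps.

    horizon=1 gives single-step forecasting; horizon>1 gives multi-step forecasting.
    """
    s = np.asarray(series, dtype=float)
    n = len(s) - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series too short for the requested lookback and horizon")
    X = np.stack([s[i : i + lookback] for i in range(n)])
    Y = np.stack([s[i + lookback : i + lookback + horizon] for i in range(n)])
    return X, Y


def relative_improvement(baseline_error, model_error):
    """Percentage reduction in error relative to a baseline model (e.g., LSTM or GRU)."""
    return 100.0 * (baseline_error - model_error) / baseline_error


X, Y = make_windows(np.arange(6), lookback=3, horizon=2)
```

For example, a baseline error of 10.0 and a model error of 8.0 give a 20% improvement.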

One of the critical issues contributing to inefficiency in Puskesmas (Indonesian community health centers) is the time-consuming documentation of doctor-patient interactions. Doctors must conduct thorough consultations and manually transcribe detailed notes into ePuskesmas electronic health records (EHR), which places a substantial administrative burden on already overstretched physicians. This paper presents a proof-of-concept framework using large language models (LLMs) to automate real-time transcription and summarization of doctor-patient conversations in Bahasa Indonesia. Our system combines the Whisper model for transcription with GPT-3.5 for medical summarization, implemented as a browser extension that automatically populates ePuskesmas forms. Through controlled roleplay experiments with medical validation, we demonstrate the technical feasibility of processing detailed, trimmed consultations of more than 300 seconds in under 30 seconds while maintaining clinical accuracy. This work establishes the foundation for AI-assisted clinical documentation in resource-constrained healthcare environments. However, concerns remain regarding privacy compliance, and large-scale clinical evaluation is still needed to address the language and cultural biases of LLMs.

NijiGAN, developed by Institut Teknologi Sepuluh Nopember and Avalon AI, transforms real-world photographs into high-fidelity anime visuals using contrastive semi-supervised learning and Neural Ordinary Differential Equations. The model achieves an FID score of 58.71, outperforming Scenimefy (60.32), while reducing computational overhead and mitigating checkered artifacts.
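The FID values quoted above (lower is better) compare Gaussian fits to image features of real and generated images: FID = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^(1/2)). The NumPy sketch below evaluates this formula from feature arrays. It assumes the features were already extracted (standard FID uses Inception-v3 pooling features, which are not computed here) and is not NijiGAN's evaluation code.

```python
import numpy as np


def _sqrtm_psd(mat):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(mat)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)).

    Tr((S1 S2)^(1/2)) is the sum of square roots of the eigenvalues of
    S1^(1/2) S2 S1^(1/2), which is symmetric PSD and has the same eigenvalues as S1 S2.
    """
    diff = mu1 - mu2
    root1 = _sqrtm_psd(sigma1)
    middle = root1 @ sigma2 @ root1
    middle = (middle + middle.T) / 2.0  # remove round-off asymmetry
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real, feats_gen):
    """FID between two sets of feature vectors, one row per image."""
    mu1, mu2 = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    s1 = np.cov(feats_real, rowvar=False)
    s2 = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu1, s1, mu2, s2)


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 8))
shifted = real + 1.0  # same covariance, mean shifted by 1 in each of 8 dimensions
fid_shift = fid_from_features(real, shifted)  # close to 8 = ||(1, ..., 1)||^2
```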

09 Oct 2020

CNRS; University of Warsaw; Delft University of Technology; University of Turin; Shandong University; Karlsruhe Institute of Technology; Technische Universität München; Institut Teknologi Sepuluh Nopember; Université de Picardie Jules Verne; Grenoble-INP; Univ Grenoble Alpes; Univ Paris Est Creteil; Mohammed V University; Institute for Energy Technology; SIMaP; Helmholtz Institute Ulm; ICMPE

The current energy transition requires the rapid implementation of energy storage systems with high energy density and high regeneration and cycling efficiency. Metal hydrides are potential candidates for generalized energy storage when coupled with fuel cell units and/or batteries. This review reports and discusses ongoing research in light of their application as hydrogen and heat storage matrices, as well as thin films for hydrogen optical sensors. These include a selection of single-metal hydrides, Ti-V(Fe) based intermetallics, multi-principal element alloys (high-entropy alloys), and a series of novel, synthetically accessible metal borohydrides. Metal hydride materials can also be useful as high-capacity MH-based electrodes (e.g., MgH₂, ~2000 mAh g⁻¹) and as solid-state electrolytes with high ionic conductivity, suitable for Li-ion and Li/Mg battery technologies, respectively. To support further research and development, characterization techniques dedicated to the study of M-H interactions, their equilibrium reactions, and the quantification of hydrogen concentration in thin-film and bulk hydrides are presented at the end of this manuscript.
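The ~2000 mAh g⁻¹ figure for MgH₂ can be checked with the standard theoretical-capacity formula Q = nF / (3.6 M), in mAh g⁻¹, where n is the number of electrons transferred per formula unit, F the Faraday constant, and M the molar mass in g/mol. The sketch assumes the two-electron conversion reaction MgH₂ + 2Li⁺ + 2e⁻ → Mg + 2LiH; it also computes the gravimetric hydrogen content, which is the relevant figure for hydrogen storage.

```python
FARADAY = 96485.33212  # C/mol
ATOMIC_MASS = {"Mg": 24.305, "H": 1.008}  # g/mol (standard atomic weights)


def molar_mass(composition):
    """Molar mass in g/mol from an element -> count mapping."""
    return sum(ATOMIC_MASS[el] * n for el, n in composition.items())


def theoretical_capacity_mah_per_g(n_electrons, molar_mass_g_per_mol):
    """Q = n F / (3.6 M): coulombs per gram converted to mAh per gram (1 mAh = 3.6 C)."""
    return n_electrons * FARADAY / (3.6 * molar_mass_g_per_mol)


def hydrogen_wt_percent(composition):
    """Mass fraction of hydrogen in the hydride, in percent."""
    return 100.0 * ATOMIC_MASS["H"] * composition["H"] / molar_mass(composition)


mgh2 = {"Mg": 1, "H": 2}
capacity = theoretical_capacity_mah_per_g(2, molar_mass(mgh2))  # about 2036.5 mAh/g
h_content = hydrogen_wt_percent(mgh2)  # about 7.7 wt%
```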

17 Sep 2024

Sign language translation is an important issue in communication between deaf and hearing people, since sign languages express words through hand, body, and mouth movements. American Sign Language (ASL) is one such language, and it includes signs for the letters of the alphabet. Neural machine translation is increasingly being applied to sign language translation, and the Transformer has become the state of the art in natural language processing. This study compares the Transformer with the Sequence-to-Sequence (Seq2Seq) model for translating sign language to text. In addition, an experiment was conducted by adding Residual Long Short-Term Memory (ResidualLSTM) to the Transformer. Adding ResidualLSTM to the Transformer reduces its performance by 23.37% in terms of BLEU score. By contrast, the Transformer alone improves the BLEU score by 28.14 over the Seq2Seq model.
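Since the comparison above is reported in BLEU, a minimal sentence-level BLEU (clipped n-gram precisions up to 4-grams, geometric mean, brevity penalty) is sketched below. It assumes a single reference, uniform weights, and no smoothing, so it returns 0 whenever some n-gram order has no match; corpus-level BLEU as usually reported aggregates counts across sentences and will differ. The example sentence is hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram counts at most as often as in the reference."""
    cand = ngram_counts(candidate, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    ref = ngram_counts(reference, n)
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def sentence_bleu(candidate, reference, max_n=4):
    """Single-reference sentence BLEU with uniform weights and no smoothing."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity_penalty * math.exp(log_mean)


reference = "the quick brown fox jumps".split()
hypothesis = "the quick brown fox jumps".split()
score = sentence_bleu(hypothesis, reference)  # identical sentences score 1.0
```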

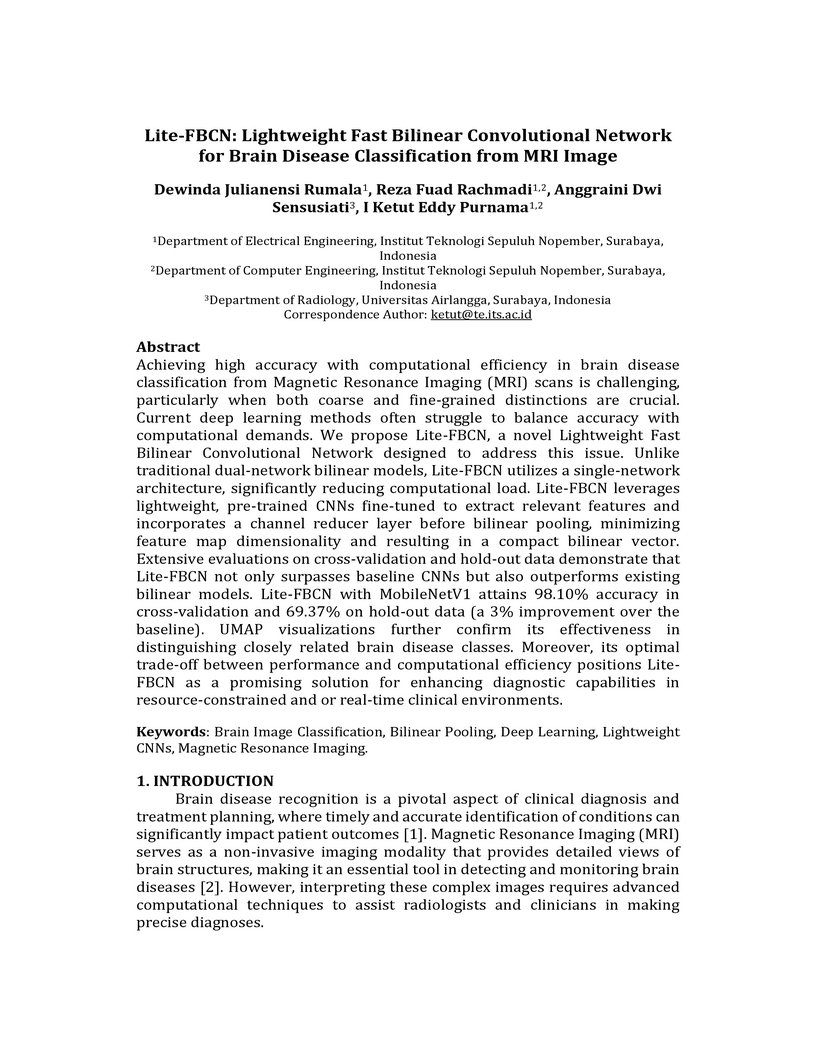

Achieving high accuracy with computational efficiency in brain disease classification from Magnetic Resonance Imaging (MRI) scans is challenging, particularly when both coarse and fine-grained distinctions are crucial. Current deep learning methods often struggle to balance accuracy with computational demands. We propose Lite-FBCN, a novel Lightweight Fast Bilinear Convolutional Network designed to address this issue. Unlike traditional dual-network bilinear models, Lite-FBCN uses a single-network architecture, significantly reducing computational load. Lite-FBCN leverages lightweight, pre-trained CNNs fine-tuned to extract relevant features and incorporates a channel reducer layer before bilinear pooling, minimizing feature map dimensionality and producing a compact bilinear vector. Extensive evaluations on cross-validation and hold-out data demonstrate that Lite-FBCN not only surpasses baseline CNNs but also outperforms existing bilinear models. Lite-FBCN with MobileNetV1 attains 98.10% accuracy in cross-validation and 69.37% on hold-out data (a 3% improvement over the baseline). UMAP visualizations further confirm its effectiveness in distinguishing closely related brain disease classes. Moreover, its favorable trade-off between performance and computational efficiency positions Lite-FBCN as a promising solution for enhancing diagnostic capabilities in resource-constrained and/or real-time clinical environments.
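A single-network bilinear head with a channel reducer can be sketched in NumPy: a 1×1 convolution (a matrix product over channels) shrinks the feature map, and the bilinear vector is the average outer product of the reduced features with themselves. The 1024-channel 7×7 map matches MobileNetV1's last convolutional output at 224×224 input, but the reducer width of 64, the random weights, and the signed-square-root and L2 normalization (common in bilinear CNNs) are assumptions, not details from the abstract. Reducing 1024 channels to 64 shrinks the bilinear vector from 1024² = 1,048,576 to 64² = 4,096 entries.

```python
import numpy as np


def channel_reduce(fmap, weight):
    """1x1 convolution without bias: (H, W, C) @ (C, D) -> (H, W, D)."""
    return fmap @ weight


def bilinear_pool(fmap, eps=1e-12):
    """Single-network bilinear pooling: average outer product of features with themselves.

    Signed square root and L2 normalization follow common bilinear-CNN practice (assumed here).
    """
    h, w, d = fmap.shape
    x = fmap.reshape(h * w, d)
    b = (x.T @ x / (h * w)).ravel()  # D*D bilinear vector
    b = np.sign(b) * np.sqrt(np.abs(b))
    return b / (np.linalg.norm(b) + eps)


rng = np.random.default_rng(0)
features = rng.normal(size=(7, 7, 1024))  # stand-in for MobileNetV1's last conv output
reducer = rng.normal(size=(1024, 64)) / 32.0  # hypothetical learned 1x1 conv weights
vector = bilinear_pool(channel_reduce(features, reducer))
```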

Source-free time-series domain adaptation has received scarce research attention. Moreover, existing approaches rely solely on time-domain features, ignoring frequency components that provide complementary information. This paper proposes Time Frequency Domain Adaptation (TFDA), a method to cope with the source-free time-series domain adaptation problem. TFDA is developed with a dual-branch network structure that fully utilizes both time and frequency features in delivering final predictions. It induces pseudo-labels based on a neighborhood concept, where the predictions of a group of samples are aggregated to generate reliable pseudo-labels. Contrastive learning is carried out in both the time and frequency domains with pseudo-label information and a negative pair exclusion strategy to make valid neighborhood assumptions. In addition, a time-frequency consistency technique is proposed using a self-distillation strategy, while an uncertainty reduction strategy is implemented to alleviate uncertainties due to the domain shift problem. Last but not least, a curriculum learning strategy is integrated to combat noisy pseudo-labels. Our experiments demonstrate the advantage of our approach over prior art by noticeable margins on benchmark problems.
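Two ingredients described above, a frequency view of each series and neighborhood-based pseudo-labels, can be sketched as follows. This is a simplified illustration under stated assumptions: the frequency-branch input is taken to be the FFT magnitude spectrum, neighbors are found by cosine similarity of features, and each sample's pseudo-label is the argmax of the averaged class probabilities of its k nearest neighbors (including itself); TFDA's actual aggregation, contrastive losses, and curriculum are not reproduced.

```python
import numpy as np


def frequency_view(x):
    """Magnitude spectrum of each series: (n, length) -> (n, length // 2 + 1)."""
    return np.abs(np.fft.rfft(x, axis=1))


def neighborhood_pseudo_labels(features, probs, k=3):
    """Average class probabilities over each sample's k nearest neighbours (cosine similarity).

    A sample has similarity 1 with itself, so it is normally part of its own neighbourhood.
    Returns pseudo-labels and their aggregated confidence.
    """
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = f @ f.T
    nn = np.argsort(-sim, axis=1)[:, :k]
    agg = probs[nn].mean(axis=1)  # (n, k, classes) -> (n, classes)
    return agg.argmax(axis=1), agg.max(axis=1)


# Two feature clusters; the second sample's own prediction is noisy (class 1 with 0.6).
feats = np.array([[1.0, 0.0], [1.0, 0.1], [1.0, -0.1], [0.0, 1.0], [0.1, 1.0], [-0.1, 1.0]])
probs = np.array([[0.9, 0.1], [0.4, 0.6], [0.9, 0.1], [0.2, 0.8], [0.2, 0.8], [0.2, 0.8]])
labels, conf = neighborhood_pseudo_labels(feats, probs, k=3)
```

The noisy second sample receives pseudo-label 0 because its two cluster neighbors outvote its own prediction.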

19 Dec 2024

Fault zones exhibit geometrical complexity and are often surrounded by multiscale fracture networks within their damage zones, influencing rupture dynamics and near-field ground motions. We investigate the ground-motion characteristics of cascading ruptures across damage zone fracture networks of moderate-sized earthquakes using high-resolution 3D dynamic rupture simulations. Our models feature a listric fault surrounded by over 800 fractures, emulating a major fault and its associated damage zone. We analyze three cases: a cascading rupture propagating within the fracture network, a non-cascading main-fault rupture with off-fault fracture slip, and a main-fault rupture without a fracture network. Cascading ruptures within the fracture network produce distinct ground-motion signatures with high-frequency content, arising from simultaneous slip of multiple fractures and parts of the main fault, resembling source coda-wave-like signatures. This case shows an elevated near-field characteristic frequency (fc) and stress drop, approximately an order of magnitude higher than the values estimated directly on the fault in the dynamic rupture simulation. The inferred fc of the modeled vertical components reflects the complexity of the radiation pattern and rupture directivity of cascading earthquakes. We show that this is consistent with observations of strong azimuthal dependence of corner frequency in the 2009-2016 Central Apennines, Italy, earthquake sequence. Simulated ground motions from cascading ruptures also show pronounced azimuthal variations in peak ground acceleration (PGA), peak ground velocity, and pseudo-spectral acceleration, with average PGA nearly double that of the non-cascading cases. Such outcomes emphasize the critical role of fault-zone complexity in affecting rupture dynamics and seismic radiation and have important implications for physics-based seismic hazard assessment.
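The link between corner frequency and stress drop can be made concrete with a Brune-type circular source model, in which the source radius is r = kβ/fc and the stress drop is Δσ = 7M₀/(16r³), so Δσ grows with fc³ at fixed seismic moment. The sketch assumes k = 0.37, β = 3500 m/s, an example magnitude of Mw 4, and M₀ = 10^(1.5 Mw + 9.1) N·m; the paper's own estimation procedure may differ. Under these assumptions, a tenfold increase in stress drop at fixed moment corresponds to a corner frequency about 10^(1/3) ≈ 2.15 times higher.

```python
def seismic_moment(mw):
    """Seismic moment in N*m from moment magnitude (M0 = 10**(1.5*Mw + 9.1))."""
    return 10 ** (1.5 * mw + 9.1)


def brune_stress_drop(m0, fc, beta=3500.0, k=0.37):
    """Stress drop in Pa for a circular source: r = k*beta/fc, dsigma = 7*M0 / (16*r**3)."""
    r = k * beta / fc
    return 7.0 * m0 / (16.0 * r ** 3)


m0 = seismic_moment(4.0)  # example moderate event (assumed magnitude)
ds_low = brune_stress_drop(m0, fc=2.0)
ds_high = brune_stress_drop(m0, fc=2.0 * 10 ** (1 / 3))
ratio = ds_high / ds_low  # about 10: stress drop scales with fc cubed
```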

13 Mar 2025

This paper focuses on best approximation in quasi-cone metric spaces, a combination of quasi-metrics and cone metrics that generalizes the notion of distance by allowing it to take values in an ordered Banach space. We explore the fundamental properties of best approximations in this setting, such as best approximation sets and Chebyshev sets.

23 Jun 2025

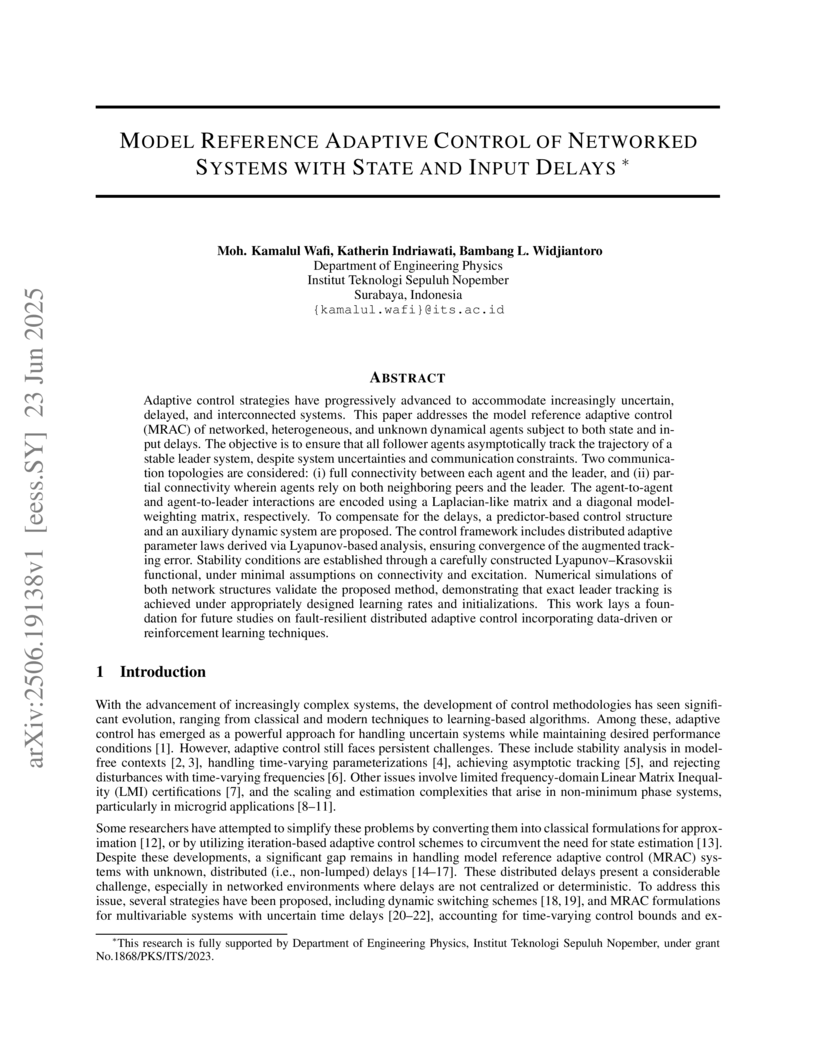

Adaptive control strategies have progressively advanced to accommodate increasingly uncertain, delayed, and interconnected systems. This paper addresses model reference adaptive control (MRAC) of networked, heterogeneous, and unknown dynamical agents subject to both state and input delays. The objective is to ensure that all follower agents asymptotically track the trajectory of a stable leader system despite system uncertainties and communication constraints. Two communication topologies are considered: full connectivity between each agent and the leader, and partial connectivity, in which agents rely on both neighboring peers and the leader. The agent-to-agent and agent-to-leader interactions are encoded using a Laplacian-like matrix and a diagonal model-weighting matrix, respectively. To compensate for the delays, a predictor-based control structure and an auxiliary dynamic system are proposed. The control framework includes distributed adaptive parameter laws derived via Lyapunov-based analysis, ensuring convergence of the augmented tracking error. Stability conditions are established through a carefully constructed Lyapunov-Krasovskii functional under minimal assumptions on connectivity and excitation. Numerical simulations of both network structures validate the proposed method, demonstrating that exact leader tracking is achieved with appropriately designed learning rates and initializations. This work lays a foundation for future studies on fault-resilient distributed adaptive control incorporating data-driven or reinforcement learning techniques.
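In standard distributed tracking formulations, agent-to-agent links enter through the graph Laplacian L = D − A and leader links through a diagonal pinning matrix B = diag(bᵢ); the neighborhood tracking error is then e = (L + B)(x − x₀1). The sketch below builds these matrices for the two topologies discussed. Treating the paper's "Laplacian-like" and "model-weighting" matrices as exactly L and B is an assumption, scalar agent states are used for brevity, and the fully connected case is assumed to have no peer links. On an undirected graph where every agent reaches the leader directly or through neighbors, L + B is positive definite, as the chain example illustrates.

```python
import numpy as np


def laplacian(adjacency):
    """Graph Laplacian L = D - A for an undirected, weighted adjacency matrix."""
    A = np.asarray(adjacency, dtype=float)
    return np.diag(A.sum(axis=1)) - A


def tracking_matrix(adjacency, leader_weights):
    """H = L + B, with B = diag(b_i) and b_i > 0 iff agent i receives the leader's signal."""
    return laplacian(adjacency) + np.diag(np.asarray(leader_weights, dtype=float))


def neighborhood_error(adjacency, leader_weights, x, x0):
    """e_i = sum_j a_ij (x_i - x_j) + b_i (x_i - x0), computed as H (x - x0 * 1)."""
    H = tracking_matrix(adjacency, leader_weights)
    return H @ (np.asarray(x, dtype=float) - x0)


# Full connectivity to the leader (assumed: no peer links) vs. a chain where
# only agent 0 receives the leader's signal.
H_full = tracking_matrix(np.zeros((3, 3)), [1, 1, 1])
chain = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
H_chain = tracking_matrix(chain, [1, 0, 0])
```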

03 May 2020

We propose channel matrices constructed from the unfolding matrices of the channel's reduced density matrices. These channel matrices serve as a criterion for whether a channel can teleport an arbitrary qubit state. We consider a special case: teleportation of an arbitrary two-qubit state using a four-qubit channel. The four-qubit channel can teleport only if the rank of the associated channel matrix is four.
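A rank test of this kind can be illustrated by unfolding a four-qubit state's 2×2×2×2 coefficient tensor into a 4×4 matrix whose rows index qubits 1 and 2 and whose columns index qubits 3 and 4. This is an illustrative assumption: the paper builds its channel matrices from reduced density matrices, which is not reproduced here. For two Bell pairs shared between qubits (1,3) and (2,4), the unfolded matrix has rank four, whereas the four-qubit GHZ state gives rank two.

```python
import numpy as np


def unfold(state, row_qubits=(0, 1)):
    """Reshape a 16-dim four-qubit state into a 4x4 matrix: rows = row_qubits, cols = the rest."""
    psi = np.asarray(state, dtype=complex).reshape(2, 2, 2, 2)
    col_qubits = [q for q in range(4) if q not in row_qubits]
    return np.transpose(psi, list(row_qubits) + col_qubits).reshape(4, 4)


def two_bell_pairs():
    """|Phi+>_{13} (x) |Phi+>_{24}, with qubits indexed 0..3 (pairs (0,2) and (1,3))."""
    psi = np.zeros((2, 2, 2, 2), dtype=complex)
    for i in range(2):
        for j in range(2):
            psi[i, j, i, j] = 0.5
    return psi.ravel()


def ghz4():
    """(|0000> + |1111>) / sqrt(2)."""
    psi = np.zeros(16, dtype=complex)
    psi[0] = psi[15] = 1 / np.sqrt(2)
    return psi


rank_bell = np.linalg.matrix_rank(unfold(two_bell_pairs()))
rank_ghz = np.linalg.matrix_rank(unfold(ghz4()))
```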
