Ask or search anything...

Researchers from KAIST, MIT, and KTH establish the first instance-specific high-probability lower bound on the clustering error for Mixture of Markov Chains. They also propose a two-stage, parameter-free algorithm that achieves a near-optimal error rate, matching the derived lower bound asymptotically and demonstrating improved sample complexity compared to previous methods.

View blogScorch integrates a compiler-driven approach for sparse tensor computation directly into PyTorch, enabling efficient and general sparse deep learning. It automates performance optimizations for sparse operations, demonstrating substantial speedups for models like GCNs, sparse autoencoders, and sparse transformers on CPU inference.

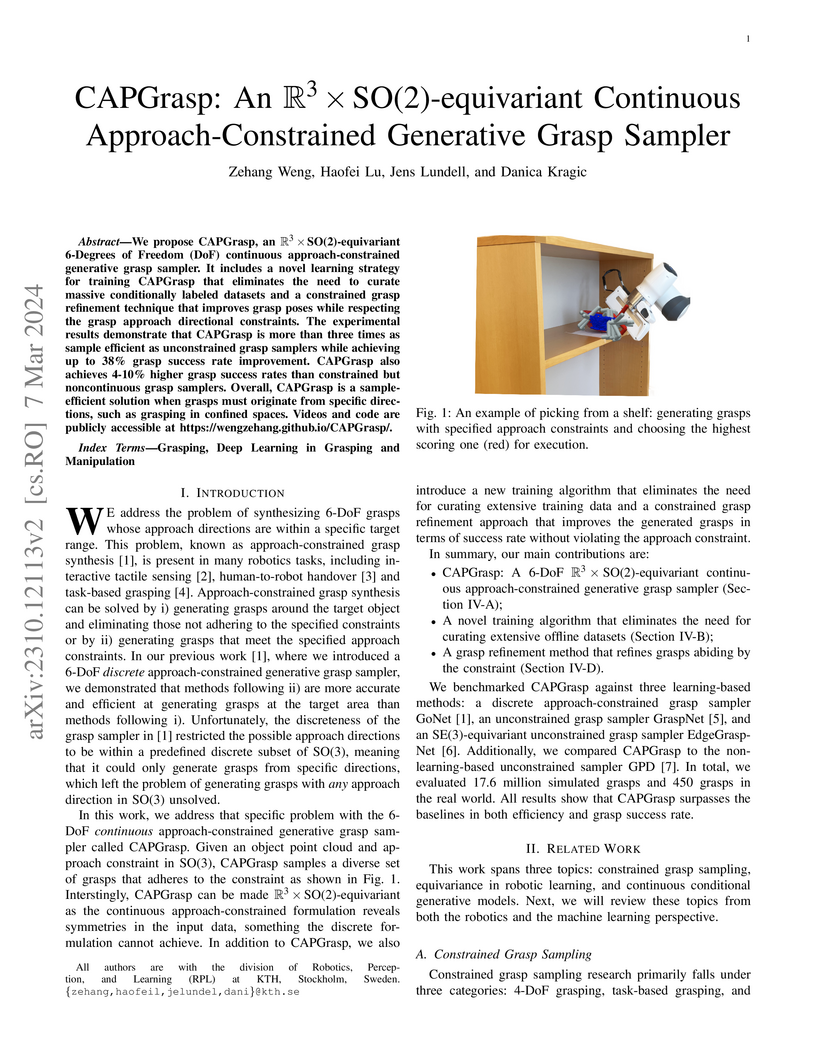

View blogResearchers at KTH Royal Institute of Technology developed DexDiffuser, a framework utilizing conditional diffusion models and refinement strategies to generate 16-DoF dexterous grasps for unknown objects from partial point clouds. It achieved a real-world grasp success rate of 68.89%, outperforming previous methods by 20.00% on diverse objects.

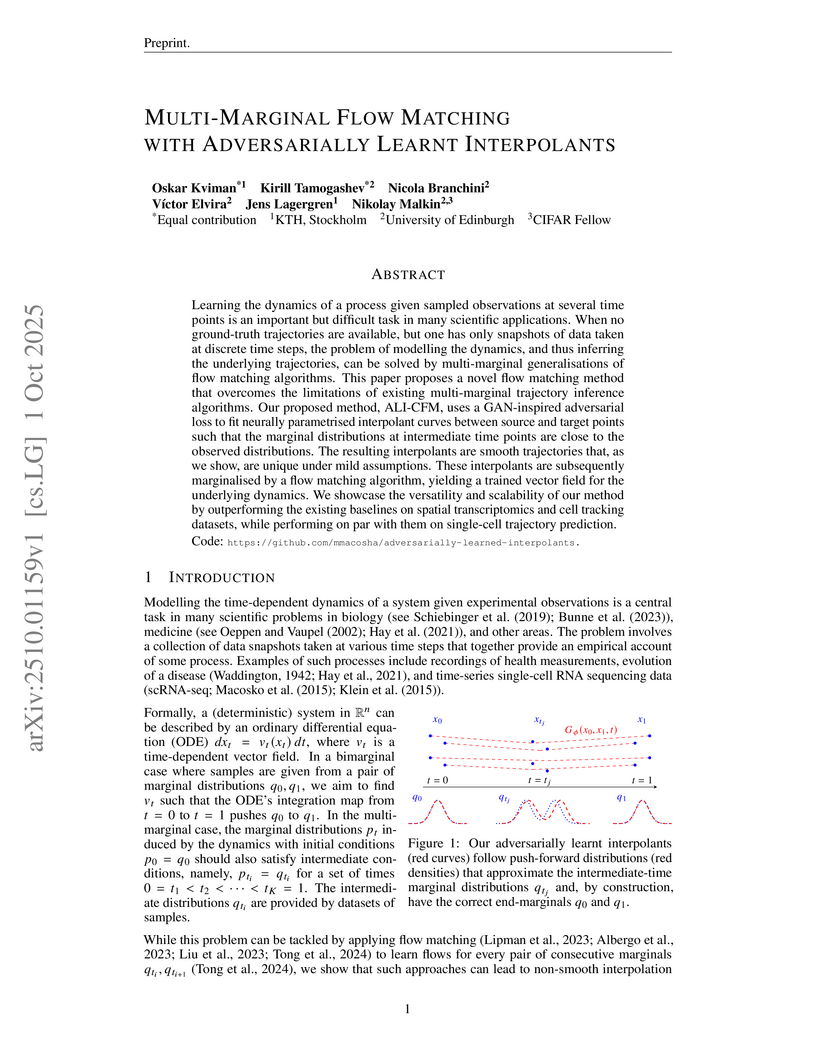

View blogSemLA introduces a training-free test-time adaptation method for open-vocabulary semantic segmentation that addresses domain shift. It builds a library of domain-specific LoRA adapters, indexed by CLIP embeddings, and dynamically merges the most semantically relevant adapters for a given test image to improve segmentation quality, achieving 2-4% mIoU gains over zero-shot baselines on a diverse 20-domain benchmark.

View blogThe Apex Data & Knowledge Management Lab at Shanghai Jiao Tong University developed CodeApex, a comprehensive bilingual (English and Chinese) benchmark for assessing large language models across programming comprehension, code generation, and correction tasks. Evaluations using CodeApex showed GPT-4 led in all tasks, for example, achieving around 69% accuracy in comprehension, while human experts consistently demonstrated superior performance, scoring approximately 80% in comprehension.

View blog UC Berkeley

UC Berkeley Stanford University

Stanford University

KU Leuven

KU Leuven

KAIST

KAIST MIT

MIT

Shanghai Jiao Tong University

Shanghai Jiao Tong University

ETH Zurich

ETH Zurich University of Toronto

University of Toronto

University of California, Santa Barbara

University of California, Santa Barbara

Princeton University

Princeton University

KTH Royal Institute of Technology

KTH Royal Institute of Technology