Malm University

15 Sep 2025

Protein design has the potential to revolutionize biotechnology and medicine. While most efforts have focused on proteins with well-defined structures, increased recognition of the functional significance of intrinsically disordered regions, together with improvements in their modeling, has paved the way to their computational de novo design. This review summarizes recent advances in engineering intrinsically disordered regions with tailored conformational ensembles, molecular recognition, and phase behavior. We discuss challenges in combining models with predictive accuracy with scalable design workflows and outline emerging strategies that integrate knowledge-based, physics-based, and machine-learning approaches.

30 Sep 2024

CNRSUniversity of New South WalesHeidelberg University

CNRSUniversity of New South WalesHeidelberg University University of Notre Dame

University of Notre Dame Yale University

Yale University University of FloridaAarhus University

University of FloridaAarhus University Université Paris-Saclay

Université Paris-Saclay University of Arizona

University of Arizona Sorbonne Université

Sorbonne Université CEA

CEA University of SydneyUniversity of Vienna

University of SydneyUniversity of Vienna The Ohio State UniversitySpace Science InstituteObservatoire de ParisUniversity of BirminghamInstituto de Astrofísica de CanariasUniversidad de La LagunaNew Mexico State UniversityInstitute of Science and Technology AustriaObservatoire de la Côte d’AzurUniversity of Hawai’iThüringer LandessternwarteInstitut d’Estudis Espacials de CatalunyaOsservatorio Astronomico di PadovaObservatório NacionalINAF - Osservatorio Astrofisico di CataniaCalifornia State UniversityHeidelberg Institute for Theoretical StudiesCanada-France-Hawaii TelescopeTexas Christian UniversityInstitute of Space SciencesMalm University

The Ohio State UniversitySpace Science InstituteObservatoire de ParisUniversity of BirminghamInstituto de Astrofísica de CanariasUniversidad de La LagunaNew Mexico State UniversityInstitute of Science and Technology AustriaObservatoire de la Côte d’AzurUniversity of Hawai’iThüringer LandessternwarteInstitut d’Estudis Espacials de CatalunyaOsservatorio Astronomico di PadovaObservatório NacionalINAF - Osservatorio Astrofisico di CataniaCalifornia State UniversityHeidelberg Institute for Theoretical StudiesCanada-France-Hawaii TelescopeTexas Christian UniversityInstitute of Space SciencesMalm UniversityIn the third APOKASC catalog, we present data for the complete sample of 15,808 evolved stars with APOGEE spectroscopic parameters and Kepler asteroseismology. We used ten independent asteroseismic analysis techniques and anchor our system on fundamental radii derived from Gaia L and spectroscopic Teff. We provide evolutionary state, asteroseismic surface gravity, mass, radius, age, and the spectroscopic and asteroseismic measurements used to derive them for 12,418 stars. This includes 10,036 exceptionally precise measurements, with median fractional uncertainties in \nmax, \dnu, mass, radius and age of 0.6\%, 0.6\%, 3.8\%, 1.8\%, and 11.1\% respectively. We provide more limited data for 1,624 additional stars which either have lower quality data or are outside of our primary calibration domain. Using lower red giant branch (RGB) stars, we find a median age for the chemical thick disk of 9.14±0.05(ran)±0.9(sys) Gyr with an age dispersion of 1.1 Gyr, consistent with our error model. We calibrate our red clump (RC) mass loss to derive an age consistent with the lower RGB and provide asymptotic GB and RGB ages for luminous stars. We also find a sharp upper age boundary in the chemical thin disk. We find that scaling relations are precise and accurate on the lower RGB and RC, but they become more model dependent for more luminous giants and break down at the tip of the RGB. We recommend the usage of multiple methods, calibration to a fundamental scale, and the usage of stellar models to interpret frequency spacings.

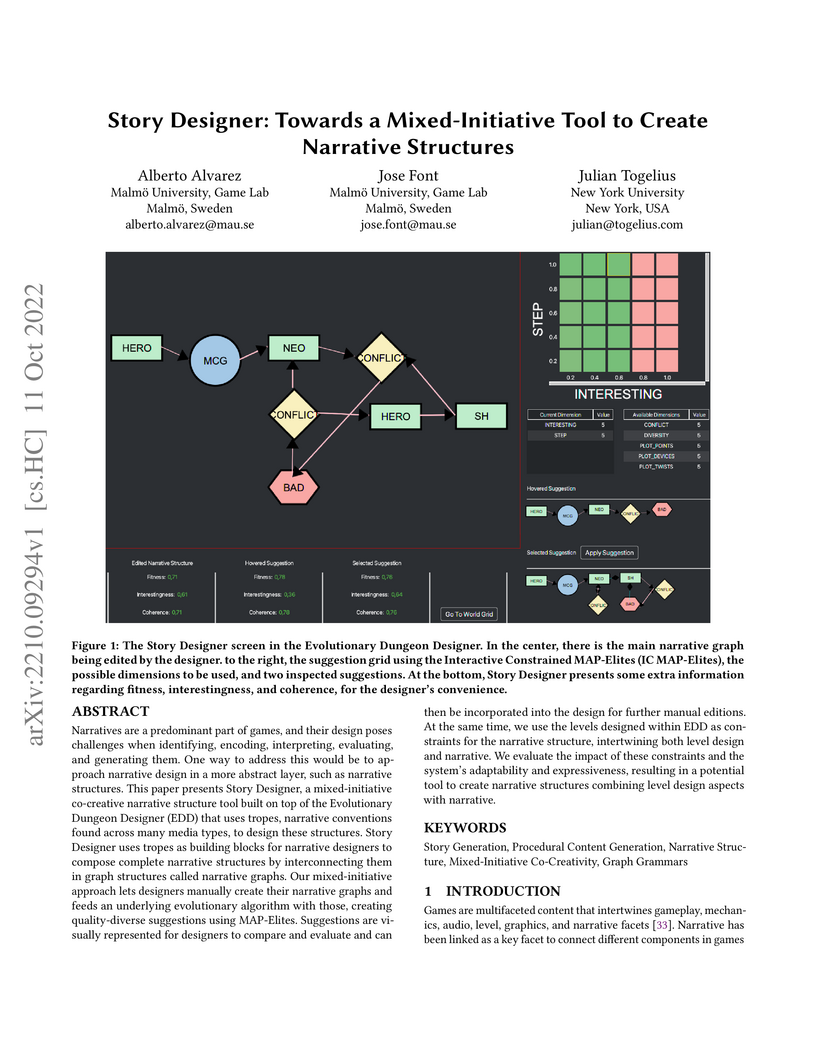

Narratives are a predominant part of games, and their design poses challenges

when identifying, encoding, interpreting, evaluating, and generating them. One

way to address this would be to approach narrative design in a more abstract

layer, such as narrative structures. This paper presents Story Designer, a

mixed-initiative co-creative narrative structure tool built on top of the

Evolutionary Dungeon Designer (EDD) that uses tropes, narrative conventions

found across many media types, to design these structures. Story Designer uses

tropes as building blocks for narrative designers to compose complete narrative

structures by interconnecting them in graph structures called narrative graphs.

Our mixed-initiative approach lets designers manually create their narrative

graphs and feeds an underlying evolutionary algorithm with those, creating

quality-diverse suggestions using MAP-Elites. Suggestions are visually

represented for designers to compare and evaluate and can then be incorporated

into the design for further manual editions. At the same time, we use the

levels designed within EDD as constraints for the narrative structure,

intertwining both level design and narrative. We evaluate the impact of these

constraints and the system's adaptability and expressiveness, resulting in a

potential tool to create narrative structures combining level design aspects

with narrative.

This paper presents a hybrid framework for literature reviews that augments

traditional bibliometric methods with large language models (LLMs). By

fine-tuning open-source LLMs, our approach enables scalable extraction of

qualitative insights from large volumes of research content, enhancing both the

breadth and depth of knowledge synthesis. To improve annotation efficiency and

consistency, we introduce an error-focused validation process in which LLMs

generate initial labels and human reviewers correct misclassifications.

Applying this framework to over 20000 scientific articles about human

migration, we demonstrate that a domain-adapted LLM can serve as a "specialist"

model - capable of accurately selecting relevant studies, detecting emerging

trends, and identifying critical research gaps. Notably, the LLM-assisted

review reveals a growing scholarly interest in climate-induced migration.

However, existing literature disproportionately centers on a narrow set of

environmental hazards (e.g., floods, droughts, sea-level rise, and land

degradation), while overlooking others that more directly affect human health

and well-being, such as air and water pollution or infectious diseases. This

imbalance highlights the need for more comprehensive research that goes beyond

physical environmental changes to examine their ecological and societal

consequences, particularly in shaping migration as an adaptive response.

Overall, our proposed framework demonstrates the potential of fine-tuned LLMs

to conduct more efficient, consistent, and insightful literature reviews across

disciplines, ultimately accelerating knowledge synthesis and scientific

discovery.

16 Oct 2025

Chinese Academy of Sciences

Chinese Academy of Sciences the University of Tokyo

the University of Tokyo Nanjing University

Nanjing University Stockholm UniversityNordic Optical TelescopeUniversidad Andres BelloMalm UniversityINAF "," Astronomic Observatory of RomeINAF

Osservatorio Astrofisico di ArcetriUniversity of Rome

“Tor Vergata

”INAF

Osservatorio Astronomico di PadovaINAF Osservatorio di Astrofisica e Scienza dello Spazio di Bologna

Stockholm UniversityNordic Optical TelescopeUniversidad Andres BelloMalm UniversityINAF "," Astronomic Observatory of RomeINAF

Osservatorio Astrofisico di ArcetriUniversity of Rome

“Tor Vergata

”INAF

Osservatorio Astronomico di PadovaINAF Osservatorio di Astrofisica e Scienza dello Spazio di BolognaWe present phosphorus abundance measurements for a total of 102 giant stars, including 82 stars in 24 open clusters and 20 Cepheids, based on high-resolution near-infrared spectra obtained with GIANO-B. Evolution of phosphorus abundance, despite its astrophysical and biological significance, remains poorly understood due to a scarcity of observational data. By combining precise stellar parameters from the optical, a robust line selection and measurement method, we measure phosphorus abundances using available P I lines. Our analysis confirms a declining trend in [P/Fe] with increasing [Fe/H] around solar metallicity for clusters and Cepheids, consistent with previous studies. We also report a [P/Fe]-age relation among open clusters older than 1 Gyr, indicating a time-dependent enrichment pattern. Such pattern can be explained by the different stellar formation history of their parental gas, with more efficient stellar formation in the gas of older clusters (thus with higher phosphorus abundances). [P/Fe] shows a flat trend among cepheids and clusters younger than 1 Gyr (along with three Cepheids inside open clusters), possibly hinting at the phosphorus contribution from the previous-generation low-mass stars. Such trend suggests that the young clusters share a nearly common chemical history, with a mild increase in phosphorus production by low-mass stars.

01 May 2025

From Requirements to Test Cases: An NLP-Based Approach for High-Performance ECU Test Case Automation

From Requirements to Test Cases: An NLP-Based Approach for High-Performance ECU Test Case Automation

Automating test case specification generation is vital for improving the

efficiency and accuracy of software testing, particularly in complex systems

like high-performance Electronic Control Units (ECUs). This study investigates

the use of Natural Language Processing (NLP) techniques, including Rule-Based

Information Extraction and Named Entity Recognition (NER), to transform natural

language requirements into structured test case specifications. A dataset of

400 feature element documents from the Polarion tool was used to evaluate both

approaches for extracting key elements such as signal names and values. The

results reveal that the Rule-Based method outperforms the NER method, achieving

95% accuracy for more straightforward requirements with single signals, while

the NER method, leveraging SVM and other machine learning algorithms, achieved

77.3% accuracy but struggled with complex scenarios. Statistical analysis

confirmed that the Rule-Based approach significantly enhances efficiency and

accuracy compared to manual methods. This research highlights the potential of

NLP-driven automation in improving quality assurance, reducing manual effort,

and expediting test case generation, with future work focused on refining NER

and hybrid models to handle greater complexity.

This paper proposes a Federated Learning Code Smell Detection (FedCSD)

approach that allows organizations to collaboratively train federated ML models

while preserving their data privacy. These assertions have been supported by

three experiments that have significantly leveraged three manually validated

datasets aimed at detecting and examining different code smell scenarios. In

experiment 1, which was concerned with a centralized training experiment,

dataset two achieved the lowest accuracy (92.30%) with fewer smells, while

datasets one and three achieved the highest accuracy with a slight difference

(98.90% and 99.5%, respectively). This was followed by experiment 2, which was

concerned with cross-evaluation, where each ML model was trained using one

dataset, which was then evaluated over the other two datasets. Results from

this experiment show a significant drop in the model's accuracy (lowest

accuracy: 63.80\%) where fewer smells exist in the training dataset, which has

a noticeable reflection (technical debt) on the model's performance. Finally,

the last and third experiments evaluate our approach by splitting the dataset

into 10 companies. The ML model was trained on the company's site, then all

model-updated weights were transferred to the server. Ultimately, an accuracy

of 98.34% was achieved by the global model that has been trained using 10

companies for 100 training rounds. The results reveal a slight difference in

the global model's accuracy compared to the highest accuracy of the centralized

model, which can be ignored in favour of the global model's comprehensive

knowledge, lower training cost, preservation of data privacy, and avoidance of

the technical debt problem.

Artificial intelligence (AI) and machine learning (ML) are increasingly

broadly adopted in industry, However, based on well over a dozen case studies,

we have learned that deploying industry-strength, production quality ML models

in systems proves to be challenging. Companies experience challenges related to

data quality, design methods and processes, performance of models as well as

deployment and compliance. We learned that a new, structured engineering

approach is required to construct and evolve systems that contain ML/DL

components. In this paper, we provide a conceptualization of the typical

evolution patterns that companies experience when employing ML as well as an

overview of the key problems experienced by the companies that we have studied.

The main contribution of the paper is a research agenda for AI engineering that

provides an overview of the key engineering challenges surrounding ML solutions

and an overview of open items that need to be addressed by the research

community at large.

Simulation has become, in many application areas, a sine-qua-non. Most

recently, COVID-19 has underlined the importance of simulation studies and

limitations in current practices and methods. We identify four goals of

methodological work for addressing these limitations. The first is to provide

better support for capturing, representing, and evaluating the context of

simulation studies, including research questions, assumptions, requirements,

and activities contributing to a simulation study. In addition, the composition

of simulation models and other simulation studies' products must be supported

beyond syntactical coherence, including aspects of semantics and purpose,

enabling their effective reuse. A higher degree of automating simulation

studies will contribute to more systematic, standardized simulation studies and

their efficiency. Finally, it is essential to invest increased effort into

effectively communicating results and the processes involved in simulation

studies to enable their use in research and decision-making. These goals are

not pursued independently of each other, but they will benefit from and

sometimes even rely on advances in other subfields. In the present paper, we

explore the basis and interdependencies evident in current research and

practice and delineate future research directions based on these

considerations.

Using raw sensor data to model and train networks for Human Activity

Recognition can be used in many different applications, from fitness tracking

to safety monitoring applications. These models can be easily extended to be

trained with different data sources for increased accuracies or an extension of

classifications for different prediction classes. This paper goes into the

discussion on the available dataset provided by WISDM and the unique features

of each class for the different axes. Furthermore, the design of a Long Short

Term Memory (LSTM) architecture model is outlined for the application of human

activity recognition. An accuracy of above 94% and a loss of less than 30% has

been reached in the first 500 epochs of training.

08 Jun 2021

In this paper, we present a semiclassical description of surface waves or

modes in an elastic medium near a boundary, in spatial dimension three. The

medium is assumed to be essentially stratified near the boundary at some scale

comparable to the wave length. Such a medium can also be thought of as a

surficial layer (which can be thick) overlying a half space. The analysis is

based on the work of Colin de Verdi\`ere on acoustic surface waves. The

description is geometric in the boundary and locally spectral "beneath" it.

Effective Hamiltonians of surface waves correspond with eigenvalues of ordinary

differential operators, which, to leading order, define their phase velocities.

Using these Hamiltonians, we obtain pseudodifferential surface wave equations.

We then construct a parametrix. Finally, we discuss Weyl's formulas for

counting surface modes, and the decoupling into two classes of surface waves,

that is, Rayleigh and Love waves, under appropriate symmetry conditions.

We propose modeling designer style in mixed-initiative game content creation tools as archetypical design traces. These design traces are formulated as transitions between design styles; these design styles are in turn found through clustering all intermediate designs along the way to making a complete design. This method is implemented in the Evolutionary Dungeon Designer, a research platform for mixed-initiative systems to create adventure and dungeon crawler games. We present results both in the form of design styles for rooms, which can be analyzed to better understand the kind of rooms designed by users, and in the form of archetypical sequences between these rooms, i.e., Designer Personas.

As the amount of data that needs to be processed in real-time due to recent application developments increase, the need for a new computing paradigm is required. Edge computing resolves this issue by offloading computing resources required by intelligent transportation systems such as the Internet of Vehicles from the cloud closer to the end devices to improve performance however, it is susceptible to security issues that make the transportation systems vulnerable to attackers. In addition to this, there are security issues in transportation technologies that impact the edge computing paradigm as well. This paper presents some of the main security issues and challenges that are present in edge computing, which are Distributed Denial of Service attacks, side channel attacks, malware injection attacks and authentication and authorization attacks, how these impact intelligent transportation systems and research being done to help realize and mitigate these issues.

01 Jul 2022

Randomised field experiments, such as A/B testing, have long been the gold

standard for evaluating software changes. In the automotive domain, running

randomised field experiments is not always desired, possible, or even ethical.

In the face of such limitations, we develop a framework BOAT (Bayesian causal

modelling for ObvservAtional Testing), utilising observational studies in

combination with Bayesian causal inference, in order to understand real-world

impacts from complex automotive software updates and help software development

organisations arrive at causal conclusions. In this study, we present three

causal inference models in the Bayesian framework and their corresponding cases

to address three commonly experienced challenges of software evaluation in the

automotive domain. We develop the BOAT framework with our industry

collaborator, and demonstrate the potential of causal inference by conducting

empirical studies on a large fleet of vehicles. Moreover, we relate the causal

assumption theories to their implications in practise, aiming to provide a

comprehensive guide on how to apply the causal models in automotive software

engineering. We apply Bayesian propensity score matching for producing balanced

control and treatment groups when we do not have access to the entire user

base, Bayesian regression discontinuity design for identifying covariate

dependent treatment assignments and the local treatment effect, and Bayesian

difference-in-differences for causal inference of treatment effect overtime and

implicitly control unobserved confounding factors. Each one of the

demonstrative case has its grounds in practise, and is a scenario experienced

when randomisation is not feasible. With the BOAT framework, we enable online

software evaluation in the automotive domain without the need of a fully

randomised experiment.

30 Apr 2025

This paper presents a user-centred evaluation framework for keyword extraction algorithms, specifically for contextual advertising, assessing their performance based on both traditional metrics and human perception. The study found KeyBERT strikes a favorable balance between user preference and computational efficiency, performing comparably to human-generated keywords in some instances and significantly outperforming Llama 2 in practical applicability while requiring substantially fewer resources.

This paper presents the Designer Preference Model, a data-driven solution

that pursues to learn from user generated data in a Quality-Diversity

Mixed-Initiative Co-Creativity (QD MI-CC) tool, with the aims of modelling the

user's design style to better assess the tool's procedurally generated content

with respect to that user's preferences. Through this approach, we aim for

increasing the user's agency over the generated content in a way that neither

stalls the user-tool reciprocal stimuli loop nor fatigues the user with

periodical suggestion handpicking. We describe the details of this novel

solution, as well as its implementation in the MI-CC tool the Evolutionary

Dungeon Designer. We present and discuss our findings out of the initial tests

carried out, spotting the open challenges for this combined line of research

that integrates MI-CC with Procedural Content Generation through Machine

Learning.

Visual Human Activity Recognition (HAR) and data fusion with other sensors can help us at tracking the behavior and activity of underground miners with little obstruction. Existing models, such as Single Shot Detector (SSD), trained on the Common Objects in Context (COCO) dataset is used in this paper to detect the current state of a miner, such as an injured miner vs a non-injured miner. Tensorflow is used for the abstraction layer of implementing machine learning algorithms, and although it uses Python to deal with nodes and tensors, the actual algorithms run on C++ libraries, providing a good balance between performance and speed of development. The paper further discusses evaluation methods for determining the accuracy of the machine-learning and an approach to increase the accuracy of the detected activity/state of people in a mining environment, by means of data fusion.

15 Oct 2024

The Graspg program package is an extension of Grasp2018 [Comput. Phys. Commun. 237 (2019) 184-187] based on configuration state function generators (CSFGs). The generators keep spin-angular integrations at a minimum and reduce substantially the execution time and the memory requirements for large-scale multiconfiguration Dirac-Hartree-Fock (MCDHF) and relativistic configuration interaction (CI) atomic structure calculations. The package includes the improvements reported in [Atoms 11 (2023) 12] in terms of redesigned and efficient constructions of direct- and exchange potentials, as well as Lagrange multipliers, and additional parallelization of the diagonalization procedure. Tools have been developed for predicting configuration state functions (CSFs) that are unimportant and can be discarded for large MCDHF or CI calculations based on results from smaller calculations, thus providing efficient methods for a priori condensation. The package provides a seamless interoperability with Grasp2018. From extensive test runs and benchmarking, we have demonstrated reductions in the execution time and disk file sizes with factors of 37 and 98, respectively, for MCDHF calculations based on large orbital sets compared to corresponding Grasp2018 calculations. For CI calculations, reductions of the execution time with factors over 200 have been attained. With a sensible use of the new possibilities for a priori condensation, CI calculations with nominally hundreds of millions of CSFs can be handled.

21 May 2025

Large-scale multiconfigurational calculations are conducted on experimentally

significant transitions in Lr I and its lanthanide homologue Lu I, exhibiting

good agreement with recent theoretical and experimental results. A single

reference calculation is performed, allowing for substitutions from the core

within a sufficiently large active set to effectively capture the influence of

the core on the valence shells, improving upon previous multiconfigurational

calculations. An additional calculation utilising a multireference set is

performed to account for static correlation effects which contribute to the

wavefunction. Reported energies for the two selected transitions are

20716±550 cm−1 and 28587±650 cm−1 for $7\!s^2

8s~^{2} \! {S}_{1\!/\!2}\rightarrow7\!s^2 7\!p ~^{2} \! {P}^{o}_{1\!/\!2

}and7\!s^2 7\!d ~^{2} \! {D}_{3\!/\!2 }\rightarrow7\!s^2 7\!p ~^{2}

\! {P}^{o}_{1\!/\!2 }$, respectively.

Various mechanisms have been proposed for hydrogen embrittlement, but the causation of hydrogen-induced material degradation has remained unclear. This work shows hydrogen embrittlement due to phase instability (decomposition). In-situ diffraction measurements revealed metastable hydrides formed in stainless steel, typically declared as a non-hydride forming material. Hydride formation is possible by increasing the hydrogen chemical potential during electrochemical charging and low defect formation energy of hydrogen interstitials. Our findings demonstrate that hydrogen-induced material degradation can only be understood if measured in situ and in real-time during the embrittlement process.

There are no more papers matching your filters at the moment.