Penn State University

02 Aug 2025

Google DeepMind

Google DeepMind University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Université de Montréal

Université de Montréal University of Southern California

University of Southern California Stanford University

Stanford University Mila - Quebec AI Institute

Mila - Quebec AI Institute The Hong Kong Polytechnic University

The Hong Kong Polytechnic University Yale University

Yale University University of Georgia

University of Georgia Nanyang Technological University

Nanyang Technological University Microsoft

Microsoft Argonne National Laboratory

Argonne National Laboratory Duke University

Duke University HKUSTKing Abdullah University of Science and Technology

HKUSTKing Abdullah University of Science and Technology University of Sydney

University of Sydney The Ohio State UniversityPenn State UniversityMetaGPT

The Ohio State UniversityPenn State UniversityMetaGPTA comprehensive, brain-inspired framework integrates diverse research areas of LLM-based intelligent agents, encompassing individual architecture, collaborative systems, and safety. The framework formally conceptualizes agent components, maps AI capabilities to human cognition to identify research gaps, and outlines a roadmap for developing autonomous, adaptive, and safe AI.

The Chain-of-Agents (CoA) framework enables multiple Large Language Models to collaboratively process long text contexts by distributing the cognitive load across sequentially communicating agents. This approach yields up to 10% performance improvements over traditional methods and addresses the "lost in the middle" problem, even surpassing models with extensive context windows like Claude 3.

A prompt injection attack aims to inject malicious instruction/data into the input of an LLM-Integrated Application such that it produces results as an attacker desires. Existing works are limited to case studies. As a result, the literature lacks a systematic understanding of prompt injection attacks and their defenses. We aim to bridge the gap in this work. In particular, we propose a framework to formalize prompt injection attacks. Existing attacks are special cases in our framework. Moreover, based on our framework, we design a new attack by combining existing ones. Using our framework, we conduct a systematic evaluation on 5 prompt injection attacks and 10 defenses with 10 LLMs and 7 tasks. Our work provides a common benchmark for quantitatively evaluating future prompt injection attacks and defenses. To facilitate research on this topic, we make our platform public at this https URL.

The FOVER approach, developed by researchers at Penn State University, automatically synthesizes accurate training data for Process Reward Models (PRMs) using formal verification tools. This enables PRMs to achieve robust cross-task and easy-to-difficult generalization, significantly improving reasoning verification across diverse benchmarks.

The rise of algorithmic pricing raises concerns of algorithmic collusion. We conduct experiments with algorithmic pricing agents based on Large Language Models (LLMs). We find that LLM-based pricing agents quickly and autonomously reach supracompetitive prices and profits in oligopoly settings and that variation in seemingly innocuous phrases in LLM instructions ("prompts") may substantially influence the degree of supracompetitive pricing. Off-path analysis using novel techniques uncovers price-war concerns as contributing to these phenomena. Our results extend to auction settings. Our findings uncover unique challenges to any future regulation of LLM-based pricing agents, and AI-based pricing agents more broadly.

This paper presents AutoRAN, the first framework to automate the hijacking of internal safety reasoning in large reasoning models (LRMs). At its core, AutoRAN pioneers an execution simulation paradigm that leverages a weaker but less-aligned model to simulate execution reasoning for initial hijacking attempts and iteratively refine attacks by exploiting reasoning patterns leaked through the target LRM's refusals. This approach steers the target model to bypass its own safety guardrails and elaborate on harmful instructions. We evaluate AutoRAN against state-of-the-art LRMs, including GPT-o3/o4-mini and Gemini-2.5-Flash, across multiple benchmarks (AdvBench, HarmBench, and StrongReject). Results show that AutoRAN achieves approaching 100% success rate within one or few turns, effectively neutralizing reasoning-based defenses even when evaluated by robustly aligned external models. This work reveals that the transparency of the reasoning process itself creates a critical and exploitable attack surface, highlighting the urgent need for new defenses that protect models' reasoning traces rather than merely their final outputs.

FOLIO introduces a human-annotated dataset for complex first-order logic reasoning in natural language, revealing that even state-of-the-art large language models like GPT-4 struggle significantly with multi-step logical deduction and precise natural language to first-order logic translation, falling far short of human expert performance.

11 Oct 2025

A new benchmark called TRAJECT-Bench introduces a rigorous framework for evaluating Large Language Model (LLM) agents' tool-use capabilities using over 1,000 real-world APIs, complex multi-step trajectories, and both direct and indirect user queries. Evaluations reveal LLMs struggle significantly with inferring intent from indirect queries and scaling tool use, though agentic frameworks like ReAct provide modest improvements.

LaMMA-P introduces a framework that integrates large language models with PDDL planning for generalizable multi-agent long-horizon task allocation and execution with heterogeneous robot teams. It achieves a 105% higher average success rate and 36% higher average efficiency compared to prior state-of-the-art methods on a new multi-agent benchmark.

12 Apr 2016

Human society needs to increase food production by an estimated 70% by 2050 to feed an expected population size that is predicted to be over 9 billion people. Currently, infectious diseases reduce the potential yield by an average of 40% with many farmers in the developing world experiencing yield losses as high as 100%. The widespread distribution of smartphones among crop growers around the world with an expected 5 billion smartphones by 2020 offers the potential of turning the smartphone into a valuable tool for diverse communities growing food. One potential application is the development of mobile disease diagnostics through machine learning and crowdsourcing. Here we announce the release of over 50,000 expertly curated images on healthy and infected leaves of crops plants through the existing online platform PlantVillage. We describe both the data and the platform. These data are the beginning of an on-going, crowdsourcing effort to enable computer vision approaches to help solve the problem of yield losses in crop plants due to infectious diseases.

Aligning large language models with human feedback at inference time has received increasing attention due to its flexibility. Existing methods rely on generating multiple responses from the base policy for search using a reward model, which can be considered as searching in a discrete response space. However, these methods struggle to explore informative candidates when the base policy is weak or the candidate set is small, resulting in limited effectiveness. In this paper, to address this problem, we propose Simple Energy Adaptation (SEA), a simple yet effective algorithm for inference-time alignment. In contrast to expensive search over the discrete space, SEA directly adapts original responses from the base policy toward the optimal one via gradient-based sampling in continuous latent space. Specifically, SEA formulates inference as an iterative optimization procedure on an energy function over actions in the continuous space defined by the optimal policy, enabling simple and effective alignment. For instance, despite its simplicity, SEA outperforms the second-best baseline with a relative improvement of up to \textbf{77.51%} on AdvBench and \textbf{16.36%} on MATH. Our code is publicly available at this https URL

Prompt injection attacks pose a pervasive threat to the security of Large Language Models (LLMs). State-of-the-art prevention-based defenses typically rely on fine-tuning an LLM to enhance its security, but they achieve limited effectiveness against strong attacks. In this work, we propose \emph{SecInfer}, a novel defense against prompt injection attacks built on \emph{inference-time scaling}, an emerging paradigm that boosts LLM capability by allocating more compute resources for reasoning during inference. SecInfer consists of two key steps: \emph{system-prompt-guided sampling}, which generates multiple responses for a given input by exploring diverse reasoning paths through a varied set of system prompts, and \emph{target-task-guided aggregation}, which selects the response most likely to accomplish the intended task. Extensive experiments show that, by leveraging additional compute at inference, SecInfer effectively mitigates both existing and adaptive prompt injection attacks, outperforming state-of-the-art defenses as well as existing inference-time scaling approaches.

This research investigates LLM hallucinations from an inner representation perspective, identifying "in-context sharpness" as a signal for factual correctness. It introduces Activation Decoding, an entropy-based constrained decoding method that consistently improves factuality and informativeness, achieving up to 8.6 absolute points increase in Truth*Info on TruthfulQA for LLaMA2-70B-chat.

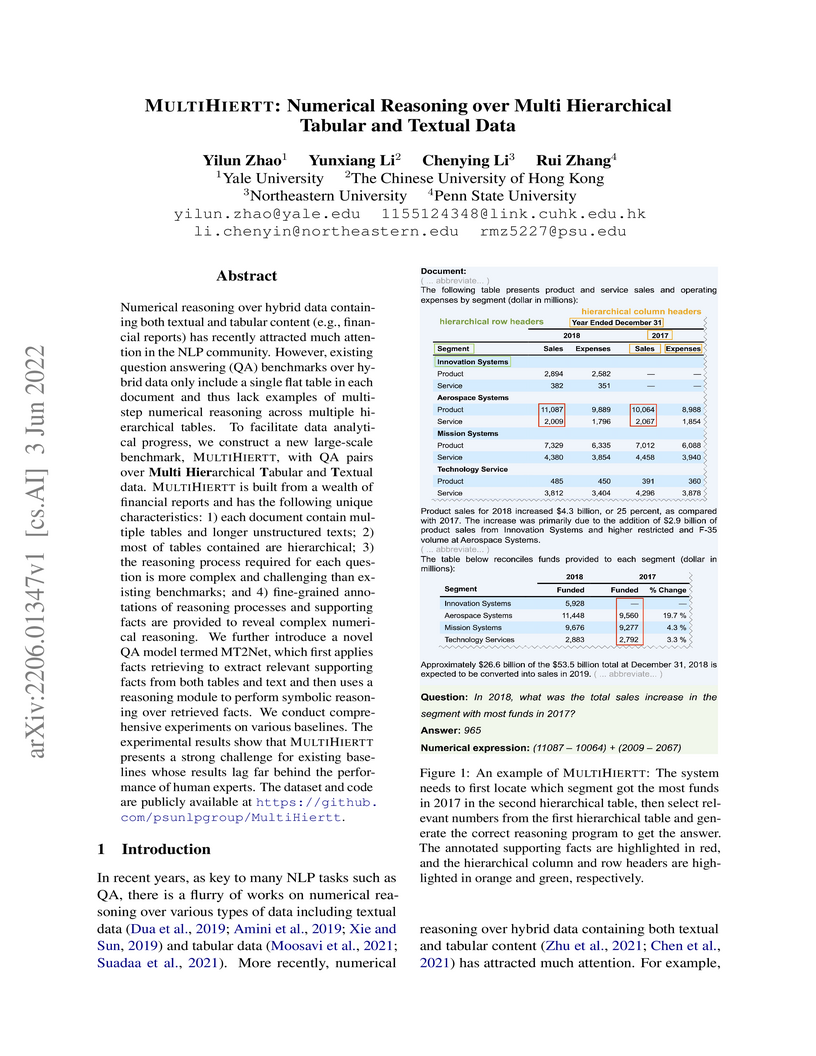

Researchers from Yale University, The Chinese University of Hong Kong, Northeastern University, and Penn State University introduce MULTIHIERTT, a large-scale question-answering benchmark for numerical reasoning over financial documents containing multiple hierarchical tables and extensive text. They also propose MT2Net, a two-stage model that establishes a strong baseline but reveals a substantial performance gap compared to human experts.

The Mixture-of-Prompts (MoP) framework is introduced to improve Large Language Model performance by automatically constructing specialized prompts, each comprising an instruction and in-context demonstrations, adapted from the Mixture-of-Experts paradigm. This approach consistently outperformed existing prompt optimization methods, achieving an average win rate of 81% across diverse benchmarks when using GPT-3.5-Turbo-Instruct.

This research systematically quantifies the extent of non-determinism in Large Language Models, revealing that even with deterministic settings like temperature set to zero, LLMs show significant output variations. The study found accuracy differences of up to 15% across runs and up to 70% between best and worst possible outcomes, challenging the reliability of single-run benchmark scores and the predictability of LLM behavior in applications.

08 Oct 2025

POME (Post Optimization Model Edit) is a training-free method that enhances fine-tuned large language models by applying a Muon-style orthogonal projection to the aggregated weight difference between the pretrained and fine-tuned models. The technique consistently improves performance across various benchmarks, models, and fine-tuning paradigms, for instance, boosting LLaMA2-7B's GSM8K accuracy by an average of +2.5%.

AgentFormer, developed by researchers at Carnegie Mellon University, proposes a Transformer-based model for multi-agent trajectory forecasting that jointly models socio-temporal interactions. It utilizes an agent-aware attention mechanism and a CVAE framework with joint latent intent to generate diverse, socially plausible trajectories, achieving improved accuracy on pedestrian (ETH/UCY) and autonomous driving (nuScenes) datasets.

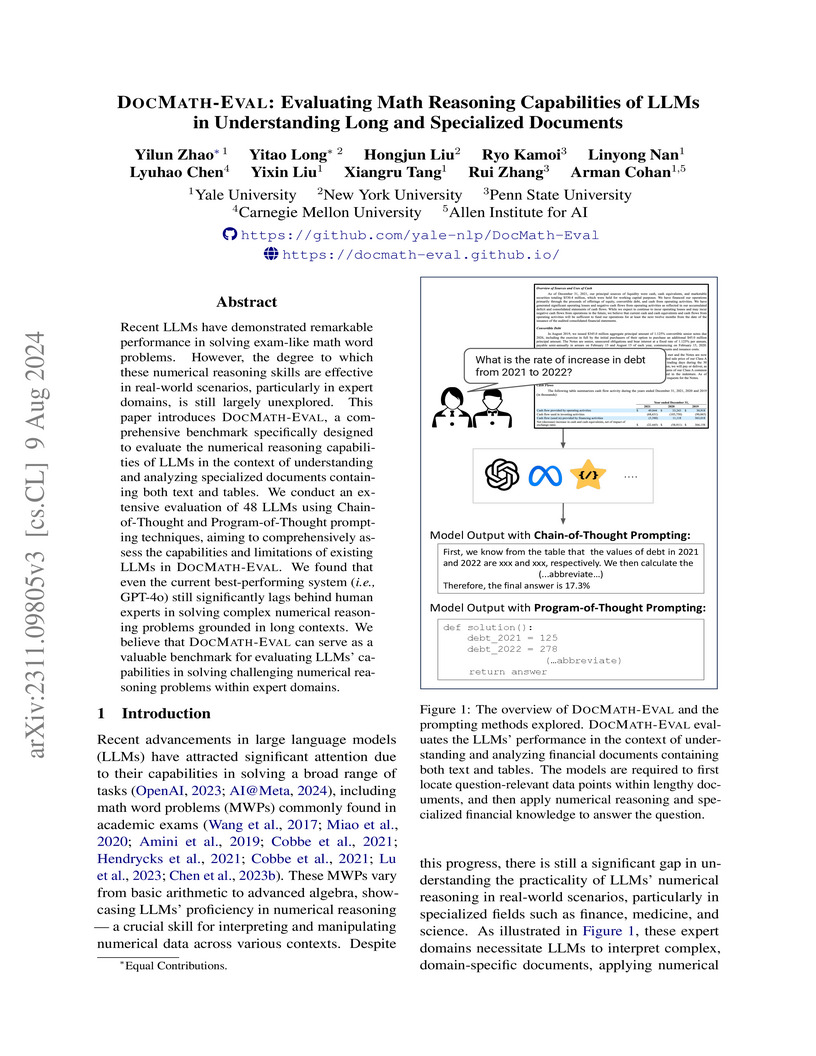

Recent LLMs have demonstrated remarkable performance in solving exam-like

math word problems. However, the degree to which these numerical reasoning

skills are effective in real-world scenarios, particularly in expert domains,

is still largely unexplored. This paper introduces DocMath-Eval, a

comprehensive benchmark specifically designed to evaluate the numerical

reasoning capabilities of LLMs in the context of understanding and analyzing

specialized documents containing both text and tables. We conduct an extensive

evaluation of 48 LLMs with Chain-of-Thought and Program-of-Thought prompting

methods, aiming to comprehensively assess the capabilities and limitations of

existing LLMs in DocMath-Eval. We found that even the current best-performing

system (i.e., GPT-4o) still significantly lags behind human experts in solving

complex numerical reasoning problems grounded in long contexts. We believe that

DocMath-Eval can serve as a valuable benchmark for evaluating LLMs'

capabilities in solving challenging numerical reasoning problems within expert

domains.

Making large language models (LLMs) more efficient in memory, latency, and serving cost is crucial for edge deployment, interactive applications, and sustainable inference at scale. Pruning is a promising technique, but existing pruning methods are limited: width pruning often breaks the standard transformer layout, requiring custom inference code, while depth pruning can cause abrupt accuracy drops. Also, while many pruning approaches are effective against LLMs, they struggle to maintain performance on small language models (SLMs). In this work, we propose COMPACT, which jointly (i) prunes rare vocabulary to shrink embedding/LM head layers and (ii) prunes FFN intermediate channels using common-token-weighted activations, aligning importance with the post-pruning token distribution. COMPACT inherits strengths of both depth and width pruning, such as: deployment-friendliness (keeps a standard transformer architecture), scale-adaptivity (trade off vocab. vs. FFN pruning), competitive pruning times, and strong memory savings alongside throughput gains. Experiments across Qwen, LLaMA, and Gemma families (0.5B-70B) show state-of-the-art downstream performance, with substantial reductions in parameters, GPU memory, and latency.

There are no more papers matching your filters at the moment.