Skolkovo Institute of Science and Technology

This research from Samsung AI Center Moscow introduces LaMa, a single-stage image inpainting system that employs Fast Fourier Convolutions to enable robust performance on large missing areas and exceptional generalization to high resolutions. The method accurately synthesizes complex and repetitive structures, outperforming prior state-of-the-art models on standard benchmarks while demonstrating greater parameter efficiency.

17 Sep 2025

The PhysicalAgent framework integrates foundation world models for cognitive robotics by synthesizing actions as conditional video, thereby decoupling high-level reasoning from robot-specific execution. It achieves up to 80% final task success on bimanual and humanoid robots through an iterative error recovery mechanism, outperforming existing task-specific baselines.

Accurate prediction of protein-ligand binding poses is crucial for structure-based drug design, yet existing methods struggle to balance speed, accuracy, and physical plausibility. We introduce Matcha, a novel molecular docking pipeline that combines multi-stage flow matching with learned scoring and physical validity filtering. Our approach consists of three sequential stages applied consecutively to refine docking predictions, each implemented as a flow matching model operating on appropriate geometric spaces (R3, SO(3), and SO(2)). We enhance the prediction quality through a dedicated scoring model and apply unsupervised physical validity filters to eliminate unrealistic poses. Compared to various approaches, Matcha demonstrates superior performance on Astex and PDBbind test sets in terms of docking success rate and physical plausibility. Moreover, our method works approximately 25 times faster than modern large-scale co-folding models. The model weights and inference code to reproduce our results are available at this https URL.

A deep convolutional generator network, even when randomly initialized and without prior training, can serve as a powerful implicit prior for various image restoration tasks. The study demonstrates that optimizing this network's weights to fit a single degraded image can effectively perform denoising, super-resolution, and inpainting, leveraging the inherent structural biases of the network itself.

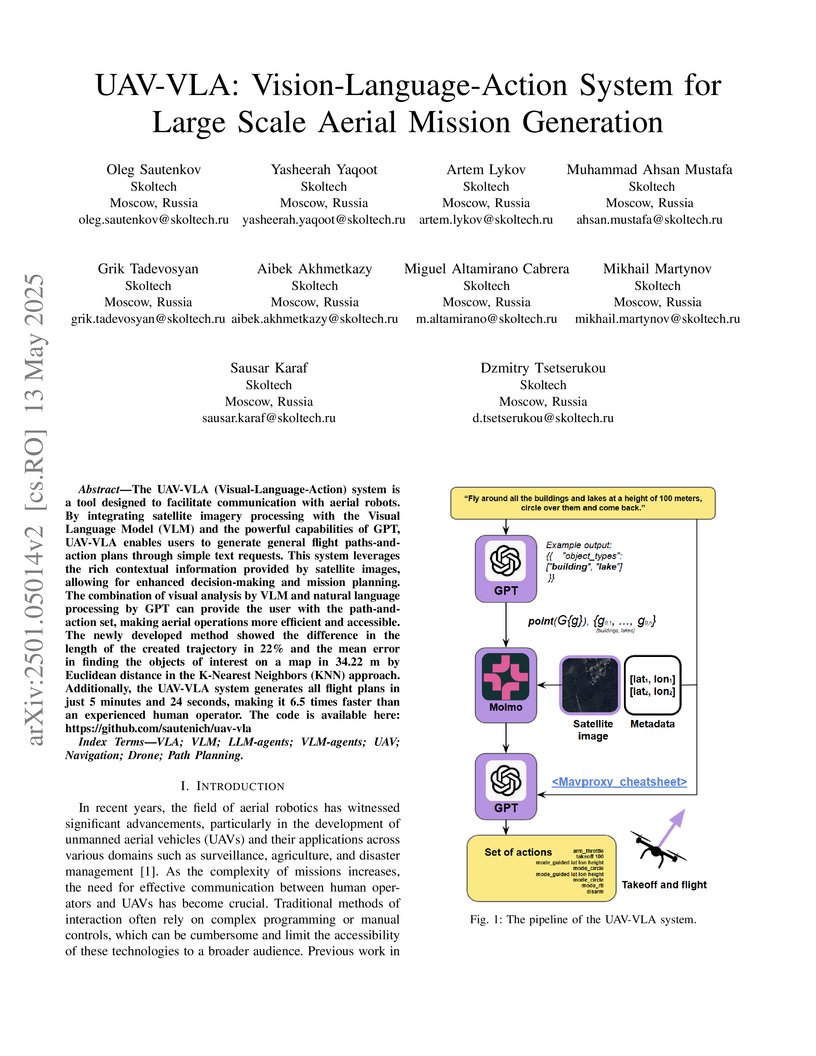

The UAV-VLA system enables the generation of comprehensive flight paths and action plans for Unmanned Aerial Vehicles (UAVs) using natural language instructions and satellite imagery. The system can generate mission plans 6.5 times faster than a human operator, with its waypoint placement accuracy having a K-Nearest Neighbors (KNN) RMSE of 34.22 meters.

Skoltech researchers developed an end-to-end deep learning framework that enables unsupervised domain adaptation by learning discriminative, domain-invariant features using a gradient reversal layer. This approach consistently improved target domain accuracy, outperforming prior methods and achieving state-of-the-art results on benchmarks like the OFFICE dataset.

12 May 2025

A multi-agent framework enables autonomous UAV mission planning through vision-language models and reactive reasoning, achieving 93% success rate on complex scenarios while reducing mission creation time to 96.96 seconds through fine-tuned models and pixel-level grounding on satellite imagery.

Skoltech and CNRS researchers leverage sparse autoencoders to extract interpretable features from LLM residual streams for artificial text detection, revealing distinct patterns in AI-generated content while demonstrating superior classification performance and robustness through innovative feature steering and systematic analysis of model behaviors across different domains.

19 Mar 2024

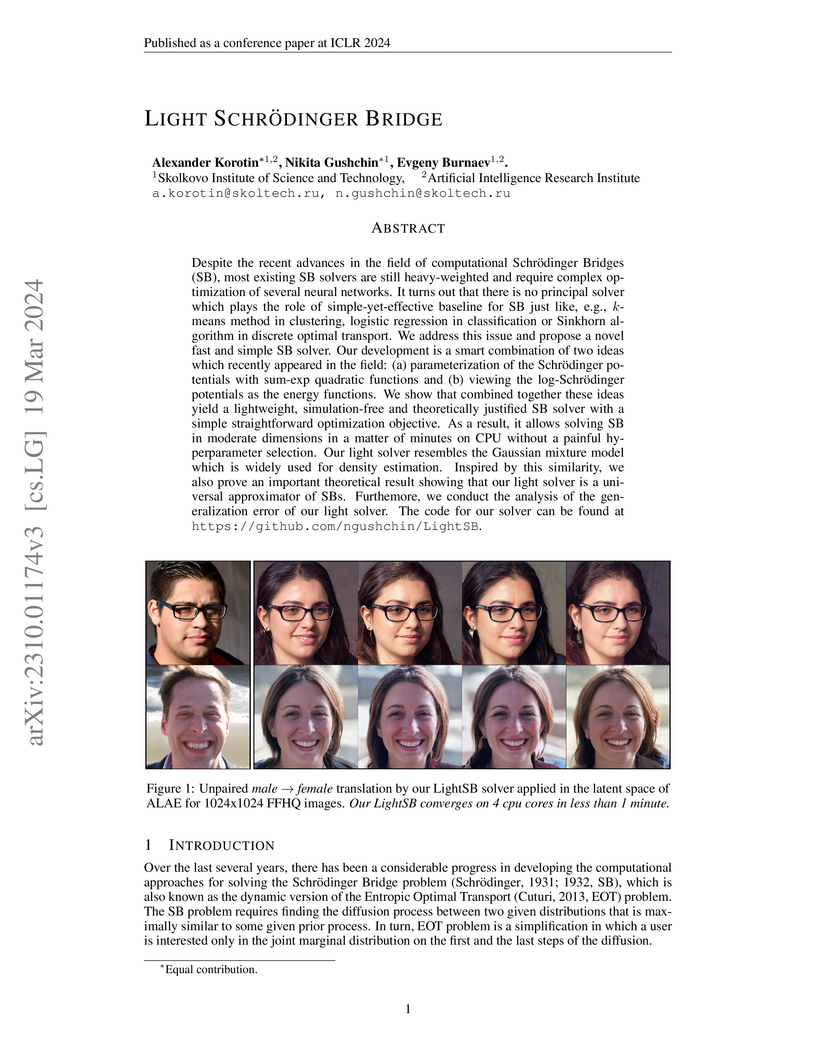

Light Schr

Hdinger Bridge (LightSB) presents an efficient solver for continuous Schr

Hdinger Bridge (SB) and Entropic Optimal Transport (EOT) problems, leveraging a Gaussian mixture parameterization for potentials. The method achieves competitive accuracy across various tasks while providing substantial speedups, operating in minutes on a CPU.

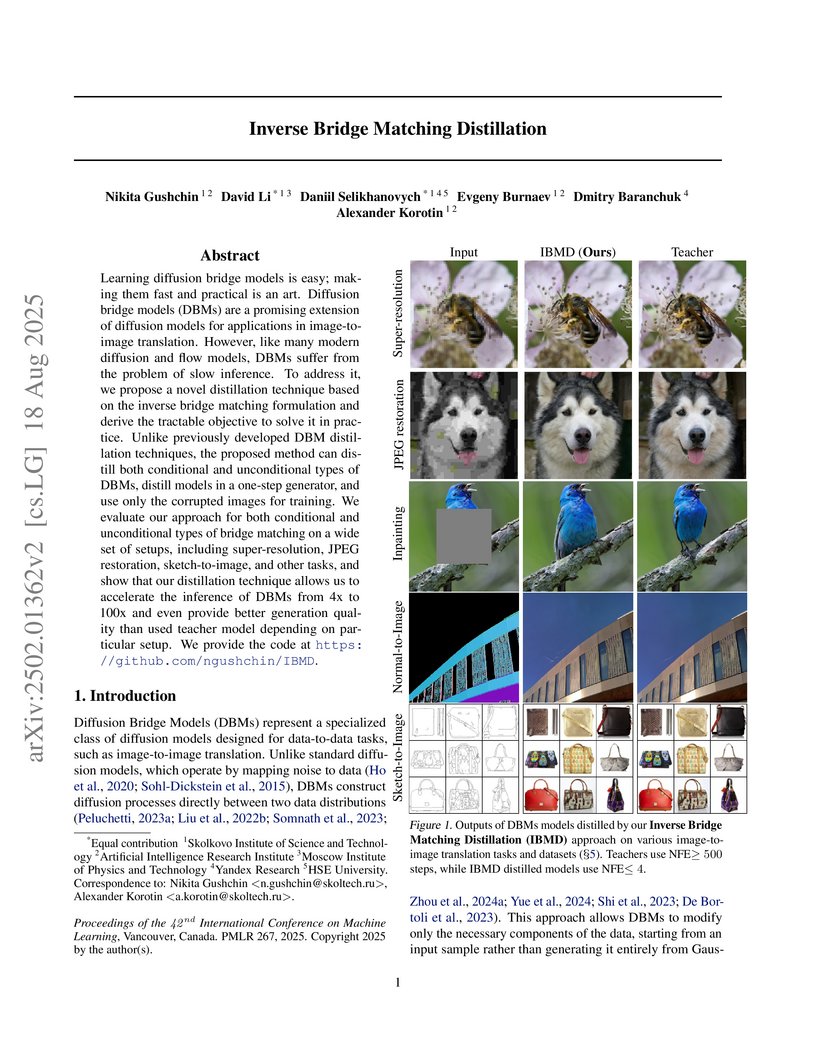

Inverse Bridge Matching Distillation (IBMD) presents a universal framework for accelerating Diffusion Bridge Models (DBMs), achieving up to 100x faster inference for both conditional and unconditional DBMs. The method often maintains or even improves generation quality, as demonstrated by superior FID and Classifier Accuracy in 4x super-resolution tasks.

Asynchronous Stochastic Gradient Descent (Asynchronous SGD) is a cornerstone method for parallelizing learning in distributed machine learning. However, its performance suffers under arbitrarily heterogeneous computation times across workers, leading to suboptimal time complexity and inefficiency as the number of workers scales. While several Asynchronous SGD variants have been proposed, recent findings by Tyurin & Richtárik (NeurIPS 2023) reveal that none achieve optimal time complexity, leaving a significant gap in the literature. In this paper, we propose Ringmaster ASGD, a novel Asynchronous SGD method designed to address these limitations and tame the inherent challenges of Asynchronous SGD. We establish, through rigorous theoretical analysis, that Ringmaster ASGD achieves optimal time complexity under arbitrarily heterogeneous and dynamically fluctuating worker computation times. This makes it the first Asynchronous SGD method to meet the theoretical lower bounds for time complexity in such scenarios.

18 Mar 2024

Energy-based models (EBMs) are known in the Machine Learning community for

decades. Since the seminal works devoted to EBMs dating back to the noughties,

there have been a lot of efficient methods which solve the generative modelling

problem by means of energy potentials (unnormalized likelihood functions). In

contrast, the realm of Optimal Transport (OT) and, in particular, neural OT

solvers is much less explored and limited by few recent works (excluding

WGAN-based approaches which utilize OT as a loss function and do not model OT

maps themselves). In our work, we bridge the gap between EBMs and

Entropy-regularized OT. We present a novel methodology which allows utilizing

the recent developments and technical improvements of the former in order to

enrich the latter. From the theoretical perspective, we prove generalization

bounds for our technique. In practice, we validate its applicability in toy 2D

and image domains. To showcase the scalability, we empower our method with a

pre-trained StyleGAN and apply it to high-res AFHQ 512×512 unpaired I2I

translation. For simplicity, we choose simple short- and long-run EBMs as a

backbone of our Energy-guided Entropic OT approach, leaving the application of

more sophisticated EBMs for future research. Our code is available at:

this https URL

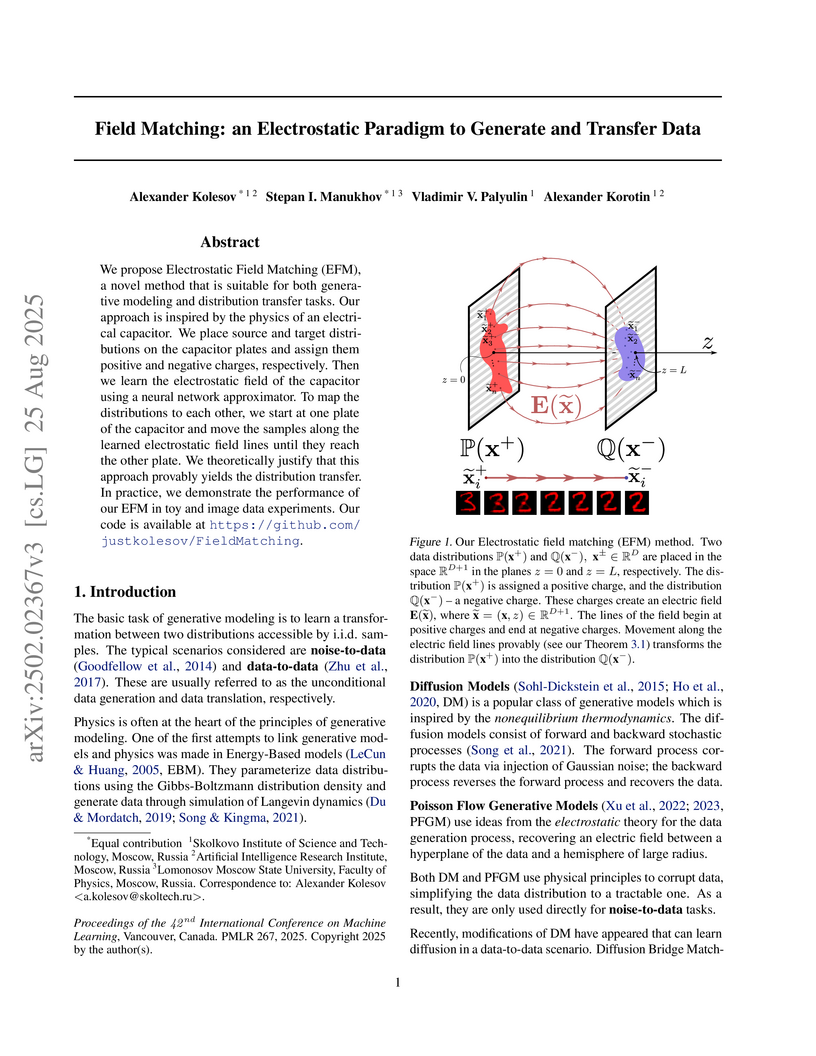

We propose Electrostatic Field Matching (EFM), a novel method that is suitable for both generative modeling and distribution transfer tasks. Our approach is inspired by the physics of an electrical capacitor. We place source and target distributions on the capacitor plates and assign them positive and negative charges, respectively. Then we learn the electrostatic field of the capacitor using a neural network approximator. To map the distributions to each other, we start at one plate of the capacitor and move the samples along the learned electrostatic field lines until they reach the other plate. We theoretically justify that this approach provably yields the distribution transfer. In practice, we demonstrate the performance of our EFM in toy and image data experiments. Our code is available at this https URL

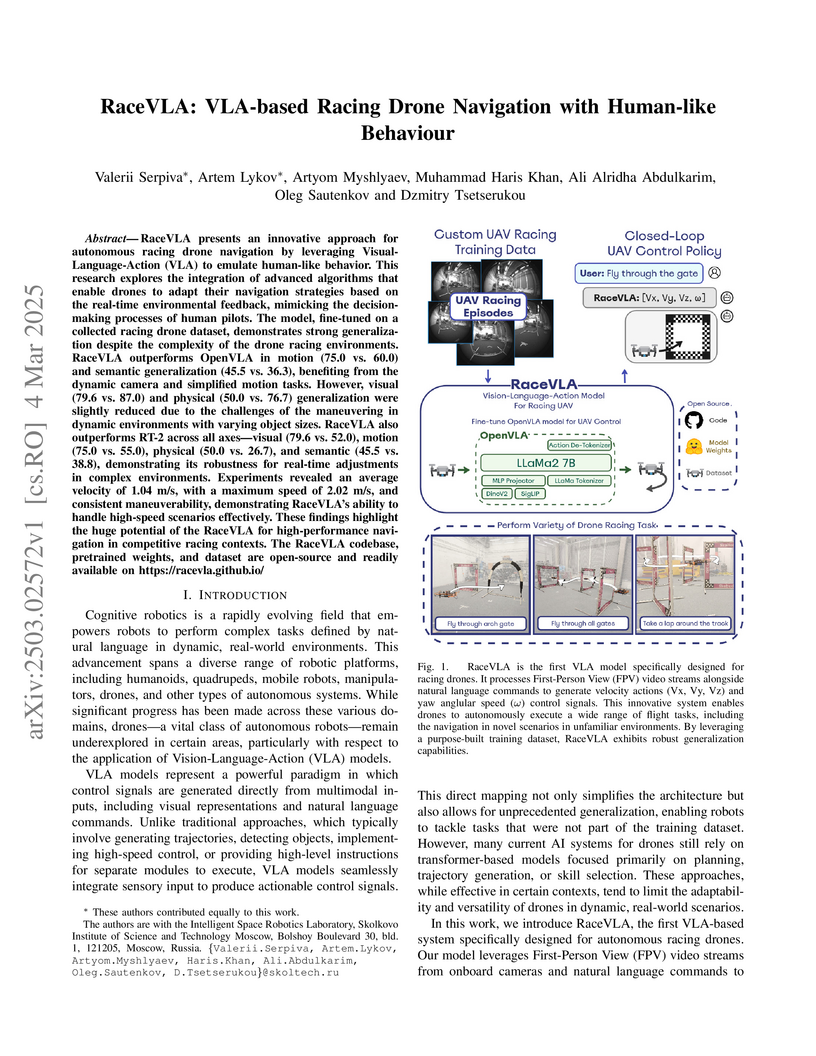

RaceVLA applies Vision-Language-Action (VLA) models to autonomous racing drones, enabling navigation through natural language commands and visual inputs. The system, developed by researchers at Skoltech, achieved stable flight patterns with an average velocity of 1.04 m/s and demonstrated strong generalization capabilities compared to existing VLA models.

This research provides a comprehensive review and taxonomy of reward engineering and shaping techniques in Reinforcement Learning (RL). It systematically categorizes methods for designing and refining reward functions, demonstrating how these approaches accelerate learning, improve exploration, and enhance robustness across various RL applications.

03 Mar 2025

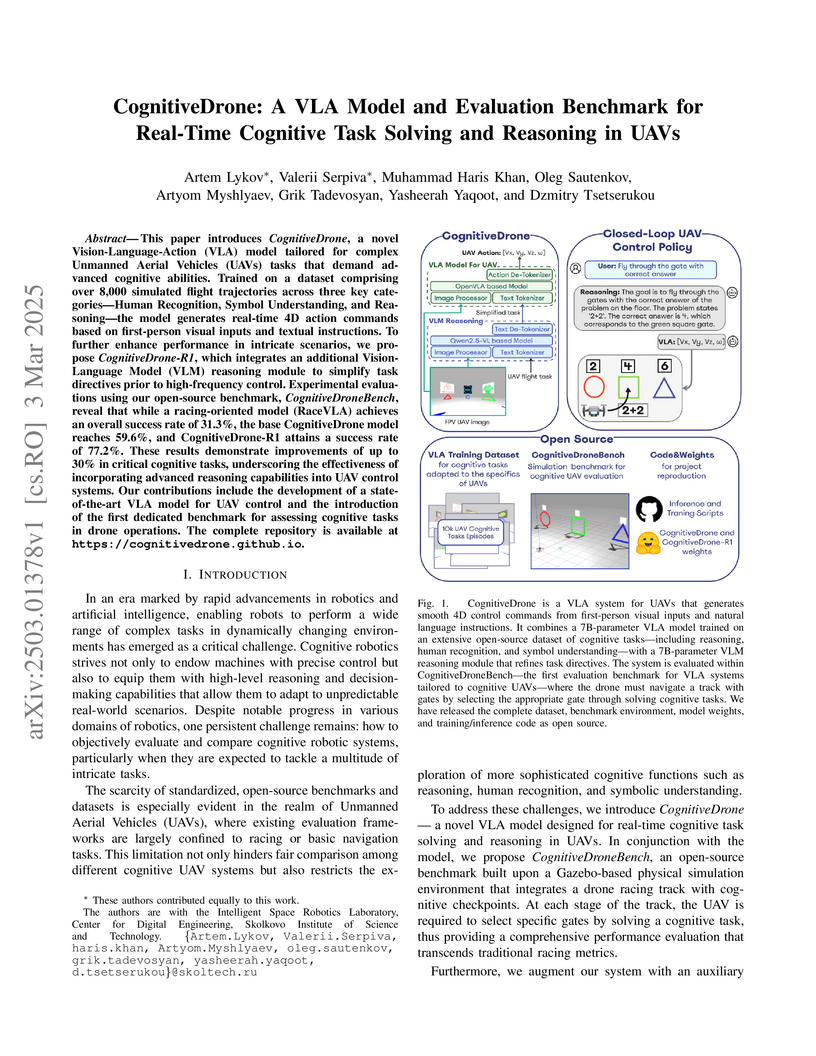

This research introduces CognitiveDrone, a Vision-Language-Action model for UAVs capable of reasoning, human recognition, and symbol understanding, along with CognitiveDroneBench, an open-source evaluation benchmark. The enhanced CognitiveDrone-R1 model, which incorporates a VLM reasoning module, achieved a 77.2% average success rate across diverse cognitive tasks, demonstrating improved performance compared to a baseline VLA model.

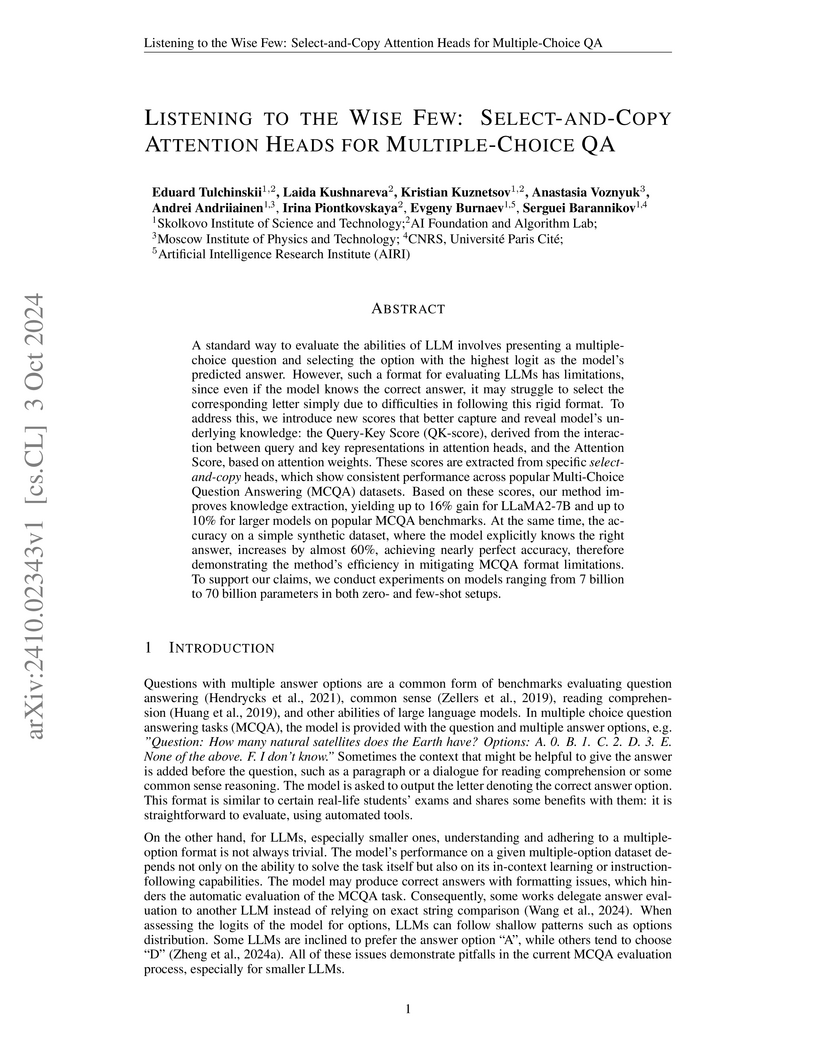

New scoring methods based on internal attention head mechanics, called QK-score and Attention-score, reveal an LLM's latent knowledge in multiple-choice question answering tasks. By identifying specific "select-and-copy" attention heads, the approach improves accuracy by 7-16% on LLaMA2-7B and up to 10% on larger LLaMA models across various benchmarks, while enhancing robustness to prompt variations.

Modern Video Large Language Models (VLLMs) often rely on uniform frame

sampling for video understanding, but this approach frequently fails to capture

critical information due to frame redundancy and variations in video content.

We propose MaxInfo, a training-free method based on the maximum volume

principle, which selects and retains the most representative frames from the

input video. By maximizing the geometric volume formed by selected embeddings,

MaxInfo ensures that the chosen frames cover the most informative regions of

the embedding space, effectively reducing redundancy while preserving

diversity. This method enhances the quality of input representations and

improves long video comprehension performance across benchmarks. For instance,

MaxInfo achieves a 3.28% improvement on LongVideoBench and a 6.4% improvement

on EgoSchema for LLaVA-Video-7B. It also achieves a 3.47% improvement for

LLaVA-Video-72B. The approach is simple to implement and works with existing

VLLMs without the need for additional training, making it a practical and

effective alternative to traditional uniform sampling methods.

01 Jul 2024

This paper proposes a novel method, Explicit Flow Matching (ExFM), for

training and analyzing flow-based generative models. ExFM leverages a

theoretically grounded loss function, ExFM loss (a tractable form of Flow

Matching (FM) loss), to demonstrably reduce variance during training, leading

to faster convergence and more stable learning. Based on theoretical analysis

of these formulas, we derived exact expressions for the vector field (and score

in stochastic cases) for model examples (in particular, for separating multiple

exponents), and in some simple cases, exact solutions for trajectories. In

addition, we also investigated simple cases of diffusion generative models by

adding a stochastic term and obtained an explicit form of the expression for

score. While the paper emphasizes the theoretical underpinnings of ExFM, it

also showcases its effectiveness through numerical experiments on various

datasets, including high-dimensional ones. Compared to traditional FM methods,

ExFM achieves superior performance in terms of both learning speed and final

outcomes.

Factcheck-Bench introduces a fine-grained, diagnostic evaluation benchmark and an eight-stage annotation framework for automatic fact-checkers of Large Language Model (LLM) outputs. This work enables component-level assessment of fact-checking systems, moving beyond black-box evaluations and revealing specific areas for improvement in tasks like stance detection and false claim identification.

There are no more papers matching your filters at the moment.