Yandex

17 Nov 2025

Researchers from Yandex, HSE University, and IST Austria developed "Hogwild! Inference," a protocol enabling multiple Large Language Models (LLMs) to generate text concurrently by sharing a dynamically updated attention Key-Value (KV) cache. This mechanism allows LLMs to self-organize and collaborate in real-time, achieving up to 3.6x speedup and improved accuracy on complex reasoning tasks.

Researchers at Yandex and HSE University developed TabM, an MLP-based model that utilizes parameter-efficient ensembling to achieve state-of-the-art performance on tabular data. This approach demonstrates that simple MLP architectures, when enhanced with ensemble techniques, can outperform more complex deep learning models while maintaining high efficiency across a diverse set of 46 datasets.

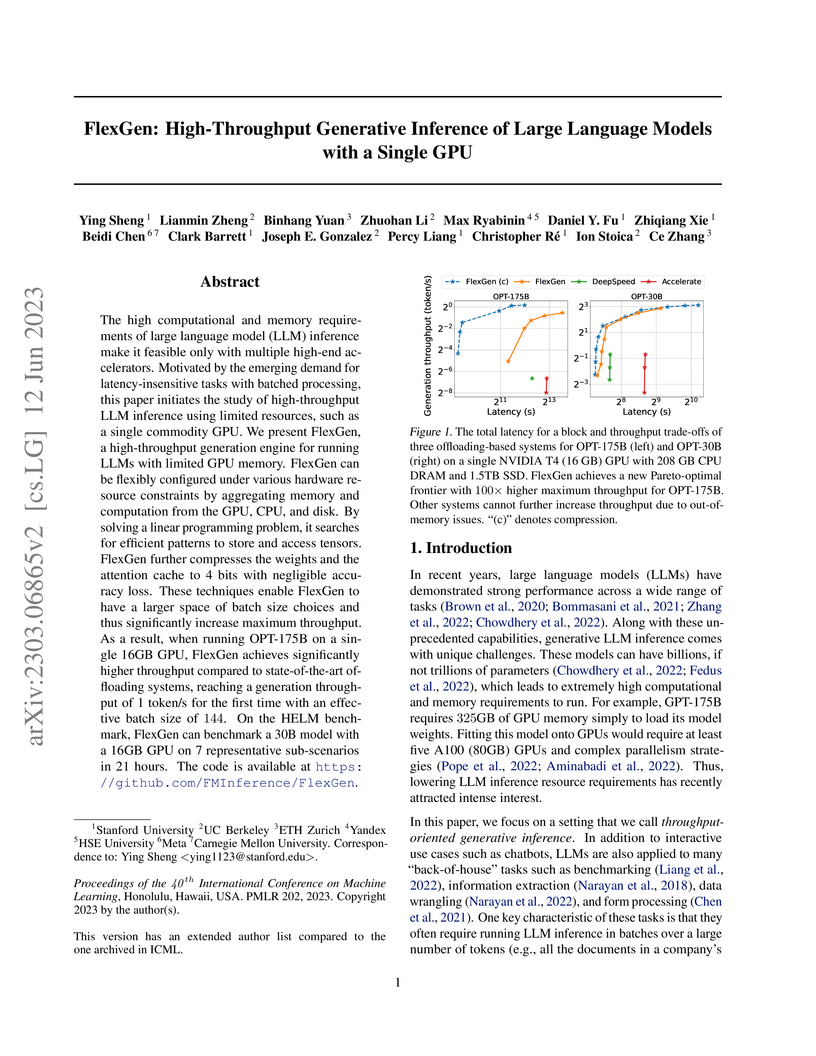

FlexGen enables high-throughput generative inference of large language models like OPT-175B on a single commodity GPU. It achieves this by proposing a novel memory offloading and scheduling system that optimally manages the GPU-CPU-Disk hierarchy and leverages 4-bit quantization, yielding up to 112x higher throughput compared to existing systems.

Researchers from Yandex, MIPT, and HSE conducted a rigorous evaluation of deep learning architectures for tabular data, introducing a ResNet-like baseline and the FT-Transformer. Their work clarifies that while the FT-Transformer achieves state-of-the-art deep learning performance and excels on certain datasets, Gradient Boosted Decision Trees retain superiority on others, underscoring that no single model universally outperforms.

TabDDPM adapts denoising diffusion probabilistic models to heterogeneous tabular data, generating synthetic datasets with more realistic feature distributions and inter-feature correlations. The approach outperforms existing GAN/VAE methods in machine learning utility while offering improved privacy over interpolation-based techniques like SMOTE.

11 Jun 2025

Foundation models are an emerging research direction in tabular deep

learning. Notably, TabPFNv2 recently claimed superior performance over

traditional GBDT-based methods on small-scale datasets using an in-context

learning paradigm, which does not adapt model parameters to target datasets.

However, the optimal finetuning approach for adapting tabular foundational

models, and how this adaptation reshapes their internal mechanisms, remains

underexplored. While prior works studied finetuning for earlier foundational

models, inconsistent findings and TabPFNv2's unique architecture necessitate

fresh investigation. To address these questions, we first systematically

evaluate various finetuning strategies on diverse datasets. Our findings

establish full finetuning as the most practical solution for TabPFNv2 in terms

of time-efficiency and effectiveness. We then investigate how finetuning alters

TabPFNv2's inner mechanisms, drawing an analogy to retrieval-augmented models.

We reveal that the success of finetuning stems from the fact that after

gradient-based adaptation, the dot products of the query-representations of

test objects and the key-representations of in-context training objects more

accurately reflect their target similarity. This improved similarity allows

finetuned TabPFNv2 to better approximate target dependency by appropriately

weighting relevant in-context samples, improving the retrieval-based prediction

logic. From the practical perspective, we managed to finetune TabPFNv2 on

datasets with up to 50K objects, observing performance improvements on almost

all tasks. More precisely, on academic datasets with I.I.D. splits, finetuning

allows TabPFNv2 to achieve state-of-the-art results, while on datasets with

gradual temporal shifts and rich feature sets, TabPFNv2 is less stable and

prior methods remain better.

CatBoost, developed by Yandex, introduces a gradient boosting framework that addresses prediction shift and target leakage in categorical feature processing. It achieves superior predictive quality across various benchmarks by employing ordered boosting and ordered target statistics.

SpQR introduces a sparse-quantized representation that achieves near-lossless compression of large language model weights to 3-4 bits, reducing memory footprint by over 3.4x while maintaining perplexity within 1% of 16-bit models and accelerating inference by 20-30%.

A deep convolutional generator network, even when randomly initialized and without prior training, can serve as a powerful implicit prior for various image restoration tasks. The study demonstrates that optimizing this network's weights to fit a single degraded image can effectively perform denoising, super-resolution, and inpainting, leveraging the inherent structural biases of the network itself.

This paper identifies Instance Normalization (IN) as a crucial architectural component for improving feed-forward neural style transfer, enabling real-time stylization networks to produce images of comparable quality to the slower iterative methods. Replacing Batch Normalization with IN resolves issues like image artifacts and achieves high-fidelity results across different generator architectures and resolutions.

This research analyzes how various modern deep learning techniques achieve competitive performance on tabular data by examining their implicit mechanisms for handling data (aleatoric) uncertainty. The study reveals that methods such as numerical feature embeddings, retrieval-augmented models, and advanced ensembling strategies provide robustness in high-uncertainty regions, explaining their effectiveness.

We introduce AutoJudge, a method that accelerates large language model (LLM) inference with task-specific lossy speculative decoding. Instead of matching the original model output distribution token-by-token, we identify which of the generated tokens affect the downstream quality of the response, relaxing the distribution match guarantee so that the "unimportant" tokens can be generated faster. Our approach relies on a semi-greedy search algorithm to test which of the mismatches between target and draft models should be corrected to preserve quality and which ones may be skipped. We then train a lightweight classifier based on existing LLM embeddings to predict, at inference time, which mismatching tokens can be safely accepted without compromising the final answer quality. We evaluate the effectiveness of AutoJudge with multiple draft/target model pairs on mathematical reasoning and programming benchmarks, achieving significant speedups at the cost of a minor accuracy reduction. Notably, on GSM8k with the Llama 3.1 70B target model, our approach achieves up to ≈2× speedup over speculative decoding at the cost of ≤1% drop in accuracy. When applied to the LiveCodeBench benchmark, AutoJudge automatically detects programming-specific important tokens, accepting ≥25 tokens per speculation cycle at 2% drop in Pass@1. Our approach requires no human annotation and is easy to integrate with modern LLM inference frameworks.

A decentralized model-parallel algorithm, SWARM parallelism, enables efficient training of billion-scale neural networks using unreliable, heterogeneous, and low-bandwidth computing resources. It successfully trained a 1.01 billion parameter Transformer on 400 preemptible T4 GPUs over a public cloud network with less than 200 Mb/s, demonstrating convergence comparable to models trained on high-end A100 GPUs.

Researchers at Yandex and collaborating universities demonstrated that only a small subset of Transformer attention heads are crucial for neural machine translation, identifying specialized functions like positional, syntactic, and rare word attention. Their novel pruning method allows removing up to 90% of encoder heads with minimal BLEU score degradation, enhancing efficiency and interpretability.

SEQUOIA presents a speculative decoding method that uses a dynamic programming approach to find optimal token tree topologies and a robust sampling-without-replacement verification algorithm. This approach achieves up to 4.04x speedup for Llama2-7B on A100 GPUs and up to 9.5x speedup for Llama3-70B in offloading settings on L40 GPUs, consistently outperforming prior methods.

There has been significant interest in "extreme" compression of large

language models (LLMs), i.e., to 1-2 bits per parameter, which allows such

models to be executed efficiently on resource-constrained devices. Existing

work focused on improved one-shot quantization techniques and weight

representations; yet, purely post-training approaches are reaching diminishing

returns in terms of the accuracy-vs-bit-width trade-off. State-of-the-art

quantization methods such as QuIP# and AQLM include fine-tuning (part of) the

compressed parameters over a limited amount of calibration data; however, such

fine-tuning techniques over compressed weights often make exclusive use of

straight-through estimators (STE), whose performance is not well-understood in

this setting. In this work, we question the use of STE for extreme LLM

compression, showing that it can be sub-optimal, and perform a systematic study

of quantization-aware fine-tuning strategies for LLMs. We propose PV-Tuning - a

representation-agnostic framework that generalizes and improves upon existing

fine-tuning strategies, and provides convergence guarantees in restricted

cases. On the practical side, when used for 1-2 bit vector quantization,

PV-Tuning outperforms prior techniques for highly-performant models such as

Llama and Mistral. Using PV-Tuning, we achieve the first Pareto-optimal

quantization for Llama 2 family models at 2 bits per parameter.

We present Yambda-5B, a large-scale open dataset sourced from the Yandex

Music streaming platform. Yambda-5B contains 4.79 billion user-item

interactions from 1 million users across 9.39 million tracks. The dataset

includes two primary types of interactions: implicit feedback (listening

events) and explicit feedback (likes, dislikes, unlikes and undislikes). In

addition, we provide audio embeddings for most tracks, generated by a

convolutional neural network trained on audio spectrograms. A key

distinguishing feature of Yambda-5B is the inclusion of the is_organic flag,

which separates organic user actions from recommendation-driven events. This

distinction is critical for developing and evaluating machine learning

algorithms, as Yandex Music relies on recommender systems to personalize track

selection for users. To support rigorous benchmarking, we introduce an

evaluation protocol based on a Global Temporal Split, allowing recommendation

algorithms to be assessed in conditions that closely mirror real-world use. We

report benchmark results for standard baselines (ItemKNN, iALS) and advanced

models (SANSA, SASRec) using a variety of evaluation metrics. By releasing

Yambda-5B to the community, we aim to provide a readily accessible,

industrial-scale resource to advance research, foster innovation, and promote

reproducible results in recommender systems.

The high computational costs of large language models (LLMs) have led to a flurry of research on LLM compression, via methods such as quantization, sparsification, or structured pruning. A new frontier in this area is given by dynamic, non-uniform compression methods, which adjust the compression levels (e.g., sparsity) per-block or even per-layer in order to minimize accuracy loss, while guaranteeing a global compression threshold. Yet, current methods rely on estimating the importance of a given layer, implicitly assuming that layers contribute independently to the overall compression error. We begin from the motivating observation that this independence assumption does not generally hold for LLM compression: pruning a model further may even significantly recover performance. To address this, we propose EvoPress, a novel evolutionary framework for dynamic LLM compression. By formulating dynamic compression as a general optimization problem, EvoPress identifies optimal compression profiles in a highly efficient manner, and generalizes across diverse models and compression techniques. Via EvoPress, we achieve state-of-the-art performance for dynamic compression of Llama, Mistral, and Phi models, setting new benchmarks for structural pruning (block/layer dropping), unstructured sparsity, and quantization with dynamic bitwidths. Our code is available at this https URL}.

21 Jul 2025

Yandex researchers scaled transformer encoders for recommender systems to one billion parameters using the ARGUS framework, demonstrating that such large models yield substantial performance improvements. The approach achieved record-breaking online gains of +2.26% in Total Listening Time and +6.37% in Like Likelihood on a large music platform, validating the scaling hypothesis for user behavior modeling.

PETALS enables collaborative inference and parameter-efficient fine-tuning of large language models (LLMs) such as BLOOM-176B, allowing their use on distributed consumer-grade GPUs at interactive speeds of approximately one inference step per second.

There are no more papers matching your filters at the moment.