Sungshin Women’s University

OrbitCache introduces a novel in-network caching architecture that uses continuous packet recirculation to store variable-length key-value items directly within the programmable switch data plane. This approach enables effective load balancing for real-world workloads, achieving up to 3.59x higher throughput compared to no caching and 1.95x higher than previous in-network caching methods for skewed datasets.

Reinforcement learning (RL) agents often face challenges in balancing exploration and exploitation, particularly in environments where sparse or dense rewards bias learning. Biological systems, such as human toddlers, naturally navigate this balance by transitioning from free exploration with sparse rewards to goal-directed behavior guided by increasingly dense rewards. Inspired by this natural progression, we investigate the Toddler-Inspired Reward Transition in goal-oriented RL tasks. Our study focuses on transitioning from sparse to potential-based dense (S2D) rewards while preserving optimal strategies. Through experiments on dynamic robotic arm manipulation and egocentric 3D navigation tasks, we demonstrate that effective S2D reward transitions significantly enhance learning performance and sample efficiency. Additionally, using a Cross-Density Visualizer, we show that S2D transitions smooth the policy loss landscape, resulting in wider minima that improve generalization in RL models. In addition, we reinterpret Tolman's maze experiments, underscoring the critical role of early free exploratory learning in the context of S2D rewards.

03 Jan 2025

In recent years, diffusion models, and more generally score-based deep generative models, have achieved remarkable success in various applications, including image and audio generation. In this paper, we view diffusion models as an implicit approach to nonparametric density estimation and study them within a statistical framework to analyze their surprising performance. A key challenge in high-dimensional statistical inference is leveraging low-dimensional structures inherent in the data to mitigate the curse of dimensionality. We assume that the underlying density exhibits a low-dimensional structure by factorizing into low-dimensional components, a property common in examples such as Bayesian networks and Markov random fields. Under suitable assumptions, we demonstrate that an implicit density estimator constructed from diffusion models adapts to the factorization structure and achieves the minimax optimal rate with respect to the total variation distance. In constructing the estimator, we design a sparse weight-sharing neural network architecture, where sparsity and weight-sharing are key features of practical architectures such as convolutional neural networks and recurrent neural networks.

25 Aug 2025

Database normalization is crucial to preserving data integrity. However, it is time-consuming and error-prone, as it is typically performed manually by data engineers. To this end, we present Miffie, a database normalization framework that leverages the capability of large language models. Miffie enables automated data normalization without human effort while preserving high accuracy. The core of Miffie is a dual-model self-refinement architecture that combines the best-performing models for normalized schema generation and verification, respectively. The generation module eliminates anomalies based on the feedback of the verification module until the output schema satisfies the requirement for normalization. We also carefully design task-specific zero-shot prompts to guide the models for achieving both high accuracy and cost efficiency. Experimental results show that Miffie can normalize complex database schemas while maintaining high accuracy.

02 Aug 2024

There are two things to be considered when we evaluate predictive models. One

is prediction accuracy,and the other is interpretability. Over the recent

decades, many prediction models of high performance, such as ensemble-based

models and deep neural networks, have been developed. However, these models are

often too complex, making it difficult to intuitively interpret their

predictions. This complexity in interpretation limits their use in many

real-world fields that require accountability, such as medicine, finance, and

college admissions. In this study, we develop a novel method called Meta-ANOVA

to provide an interpretable model for any given prediction model. The basic

idea of Meta-ANOVA is to transform a given black-box prediction model to the

functional ANOVA model. A novel technical contribution of Meta-ANOVA is a

procedure of screening out unnecessary interaction before transforming a given

black-box model to the functional ANOVA model. This screening procedure allows

the inclusion of higher order interactions in the transformed functional ANOVA

model without computational difficulties. We prove that the screening procedure

is asymptotically consistent. Through various experiments with synthetic and

real-world datasets, we empirically demonstrate the superiority of Meta-ANOVA

As they have a vital effect on social decision-making, AI algorithms should

be not only accurate but also fair. Among various algorithms for fairness AI,

learning fair representation (LFR), whose goal is to find a fair representation

with respect to sensitive variables such as gender and race, has received much

attention. For LFR, the adversarial training scheme is popularly employed as is

done in the generative adversarial network type algorithms. The choice of a

discriminator, however, is done heuristically without justification. In this

paper, we propose a new adversarial training scheme for LFR, where the integral

probability metric (IPM) with a specific parametric family of discriminators is

used. The most notable result of the proposed LFR algorithm is its theoretical

guarantee about the fairness of the final prediction model, which has not been

considered yet. That is, we derive theoretical relations between the fairness

of representation and the fairness of the prediction model built on the top of

the representation (i.e., using the representation as the input). Moreover, by

numerical experiments, we show that our proposed LFR algorithm is

computationally lighter and more stable, and the final prediction model is

competitive or superior to other LFR algorithms using more complex

discriminators.

31 Dec 2021

Web browsers are integral parts of everyone's daily life. They are commonly used for security-critical and privacy sensitive tasks, like banking transactions and checking medical records. Unfortunately, modern web browsers are too complex to be bug free (e.g., 25 million lines of code in Chrome), and their role as an interface to the cyberspace makes them an attractive target for attacks. Accordingly, web browsers naturally become an arena for demonstrating advanced exploitation techniques by attackers and state-of-the-art defenses by browser vendors. Web browsers, arguably, are the most exciting place to learn the latest security issues and techniques, but remain as a black art to most security researchers because of their fast-changing characteristics and complex code bases.

To bridge this gap, this paper attempts to systematize the security landscape of modern web browsers by studying the popular classes of security bugs, their exploitation techniques, and deployed defenses. More specifically, we first introduce a unified architecture that faithfully represents the security design of four major web browsers. Second, we share insights from a 10-year longitudinal study on browser bugs. Third, we present a timeline and context of mitigation schemes and their effectiveness. Fourth, we share our lessons from a full-chain exploit used in 2020 Pwn2Own competition. and the implication of bug bounty programs to web browser security. We believe that the key takeaways from this systematization can shed light on how to advance the status quo of modern web browsers, and, importantly, how to create secure yet complex software in the future.

We propose Confidence-guided Refinement Reasoning (C2R), a novel training-free framework applicable to question-answering (QA) tasks across text, image, and video domains. C2R strategically constructs and refines sub-questions and their answers (sub-QAs), deriving a better confidence score for the target answer. C2R first curates a subset of sub-QAs to explore diverse reasoning paths, then compares the confidence scores of the resulting answer candidates to select the most reliable final answer. Since C2R relies solely on confidence scores derived from the model itself, it can be seamlessly integrated with various existing QA models, demonstrating consistent performance improvements across diverse models and benchmarks. Furthermore, we provide essential yet underexplored insights into how leveraging sub-QAs affects model behavior, specifically analyzing the impact of both the quantity and quality of sub-QAs on achieving robust and reliable reasoning.

19 Jun 2022

The low-earth-orbit (LEO) satellite network with mega-constellations can

provide global coverage while supporting the high-data rates. The coverage

performance of such a network is highly dependent on orbit geometry parameters,

including satellite altitude and inclination angle. Traditionally,

simulation-based coverage analysis dominates because of the lack of analytical

approaches. This paper presents a novel systematic analysis framework for the

LEO satellite network by highlighting orbit geometric parameters. Specifically,

we assume that satellite locations are placed on a circular orbit according to

a one-dimensional Poisson point process. Then, we derive the distribution of

the nearest distance between the satellite and a fixed user's location on the

Earth in terms of the orbit-geometry parameters. Leveraging this distribution,

we characterize the coverage probability of the single-orbit LEO network as a

function of the network geometric parameters in conjunction with small and

large-scale fading effects. Finally, we extend our coverage analysis to

multi-orbit networks and verify the synergistic gain of harnessing multi-orbit

satellite networks in terms of the coverage probability. Simulation results are

provided to validate the mathematical derivations and the accuracy of the

proposed model.

In real-world data analysis, missingness distributional shifts between training and test input datasets frequently occur, posing a significant challenge to achieving robust prediction performance. In this study, we propose a novel deep learning framework designed to address such shifts in missingness distributions. We begin by introducing a set of mutual information-based conditions, called MI robustness conditions, which guide a prediction model to extract label-relevant information while remaining invariant to diverse missingness patterns, thereby enhancing robustness to unseen missingness scenarios at test-time. To make these conditions practical, we propose simple yet effective techniques to derive loss terms corresponding to each and formulate a final objective function, termed MIRRAMS(Mutual Information Regularization for Robustness Against Missingness Shifts). As a by-product, our analysis provides a theoretical interpretation of the principles underlying consistency regularization-based semi-supervised learning methods, such as FixMatch. Extensive experiments across various benchmark datasets show that MIRRAMS consistently outperforms existing baselines and maintains stable performance across diverse missingness scenarios. Moreover, our approach achieves state-of-the-art performance even without missing data and can be naturally extended to address semi-supervised learning tasks, highlighting MIRRAMS as a powerful, off-the-shelf framework for general-purpose learning.

14 Mar 2025

This paper presents a unified rank-based inferential procedure for fitting

the accelerated failure time model to partially interval-censored data. A

Gehan-type monotone estimating function is constructed based on the idea of the

familiar weighted log-rank test, and an extension to a general class of

rank-based estimating functions is suggested. The proposed estimators can be

obtained via linear programming and are shown to be consistent and

asymptotically normal via standard empirical process theory. Unlike common

maximum likelihood-based estimators for partially interval-censored regression

models, our approach can directly provide a regression coefficient estimator

without involving a complex nonparametric estimation of the underlying residual

distribution function. An efficient variance estimation procedure for the

regression coefficient estimator is considered. Moreover, we extend the

proposed rank-based procedure to the linear regression analysis of multivariate

clustered partially interval-censored data. The finite-sample operating

characteristics of our approach are examined via simulation studies. Data

example from a colorectal cancer study illustrates the practical usefulness of

the method.

21 Feb 2025

This paper presents a study on an ℓ1-penalized covariance regression

method. Conventional approaches in high-dimensional covariance estimation often

lack the flexibility to integrate external information. As a remedy, we adopt

the regression-based covariance modeling framework and introduce a linear

covariance selection model (LCSM) to encompass a broader spectrum of covariance

structures when covariate information is available. Unlike existing methods, we

do not assume that the true covariance matrix can be exactly represented by a

linear combination of known basis matrices. Instead, we adopt additional basis

matrices for a portion of the covariance patterns not captured by the given

bases. To estimate high-dimensional regression coefficients, we exploit the

sparsity-inducing ℓ1-penalization scheme. Our theoretical analyses are

based on the (symmetric) matrix regression model with additive random error

matrix, which allows us to establish new non-asymptotic convergence rates of

the proposed covariance estimator.

The proposed method is implemented with the coordinate descent algorithm. We

conduct empirical evaluation on simulated data to complement theoretical

findings and underscore the efficacy of our approach. To show a practical

applicability of our method, we further apply it to the co-expression analysis

of liver gene expression data where the given basis corresponds to the

adjacency matrix of the co-expression network.

07 Jul 2025

Undirected graphical models are powerful tools for uncovering complex relationships among high-dimensional variables. This paper aims to fully recover the structure of an undirected graphical model when the data naturally take matrix form, such as temporal multivariate data.

As conventional vector-variate analyses have clear limitations in handling such matrix-structured data, several approaches have been proposed, mostly relying on the likelihood of the Gaussian distribution with a separable covariance structure. Although some of these methods provide theoretical guarantees against false inclusions (i.e. all identified edges exist in the true graph), they may suffer from crucial limitations: (1) failure to detect important true edges, or (2) dependency on conditions for the estimators that have not been verified.

We propose a novel regression-based method for estimating matrix graphical models, based on the relationship between partial correlations and regression coefficients. Adopting the primal-dual witness technique from the regression framework, we derive a non-asymptotic inequality for exact recovery of an edge set. Under suitable regularity conditions, our method consistently identifies the true edge set with high probability.

Through simulation studies, we compare the support recovery performance of the proposed method against existing alternatives. We also apply our method to an electroencephalography (EEG) dataset to estimate both the spatial brain network among 64 electrodes and the temporal network across 256 time points.

28 Jul 2025

This paper addresses classification problems with matrix-valued data, which commonly arises in applications such as neuroimaging and signal processing. Building on the assumption that the data from each class follows a matrix normal distribution, we propose a novel extension of Fisher's Linear Discriminant Analysis (LDA) tailored for matrix-valued observations. To effectively capture structural information while maintaining estimation flexibility, we adopt a nonparametric empirical Bayes framework based on Nonparametric Maximum Likelihood Estimation (NPMLE), applied to vectorized and scaled matrices. The NPMLE method has been shown to provide robust, flexible, and accurate estimates for vector-valued data with various structures in the mean vector or covariance matrix. By leveraging its strengths, our method is effectively generalized to the matrix setting, thereby improving classification performance. Through extensive simulation studies and real data applications, including electroencephalography (EEG) and magnetic resonance imaging (MRI) analysis, we demonstrate that the proposed method consistently outperforms existing approaches across a variety of data structures.

Wireless-powered communications (WPCs) are increasingly crucial for extending the lifespan of low-power Internet of Things (IoT) devices. Furthermore, reconfigurable intelligent surfaces (RISs) can create favorable electromagnetic environments by providing alternative signal paths to counteract blockages. The strategic integration of WPC and RIS technologies can significantly enhance energy transfer and data transmission efficiency. However, passive RISs suffer from double-fading attenuation over RIS-aided cascaded links. In this article, we propose the application of an active RIS within WPC-enabled IoT networks. The enhanced flexibility of the active RIS in terms of energy transfer and information transmission is investigated using adjustable parameters. We derive novel closed-form expressions for the ergodic rate and outage probability by incorporating key parameters, including signal amplification, active noise, power consumption, and phase quantization errors. Additionally, we explore the optimization of WPC scenarios, focusing on the time-switching factor and power consumption of the active RIS. The results validate our analysis, demonstrating that an active RIS significantly enhances WPC performance compared to a passive RIS.

18 Apr 2025

One of the common challenges faced by researchers in recent data analysis is missing values. In the context of penalized linear regression, which has been extensively explored over several decades, missing values introduce bias and yield a non-positive definite covariance matrix of the covariates, rendering the least square loss function non-convex. In this paper, we propose a novel procedure called the linear shrinkage positive definite (LPD) modification to address this issue. The LPD modification aims to modify the covariance matrix of the covariates in order to ensure consistency and positive definiteness. Employing the new covariance estimator, we are able to transform the penalized regression problem into a convex one, thereby facilitating the identification of sparse solutions. Notably, the LPD modification is computationally efficient and can be expressed analytically. In the presence of missing values, we establish the selection consistency and prove the convergence rate of the ℓ1-penalized regression estimator with LPD, showing an ℓ2-error convergence rate of square-root of logp over n by a factor of (s0)3/2 (s0: the number of non-zero coefficients). To further evaluate the effectiveness of our approach, we analyze real data from the Genomics of Drug Sensitivity in Cancer (GDSC) dataset. This dataset provides incomplete measurements of drug sensitivities of cell lines and their protein expressions. We conduct a series of penalized linear regression models with each sensitivity value serving as a response variable and protein expressions as explanatory variables.

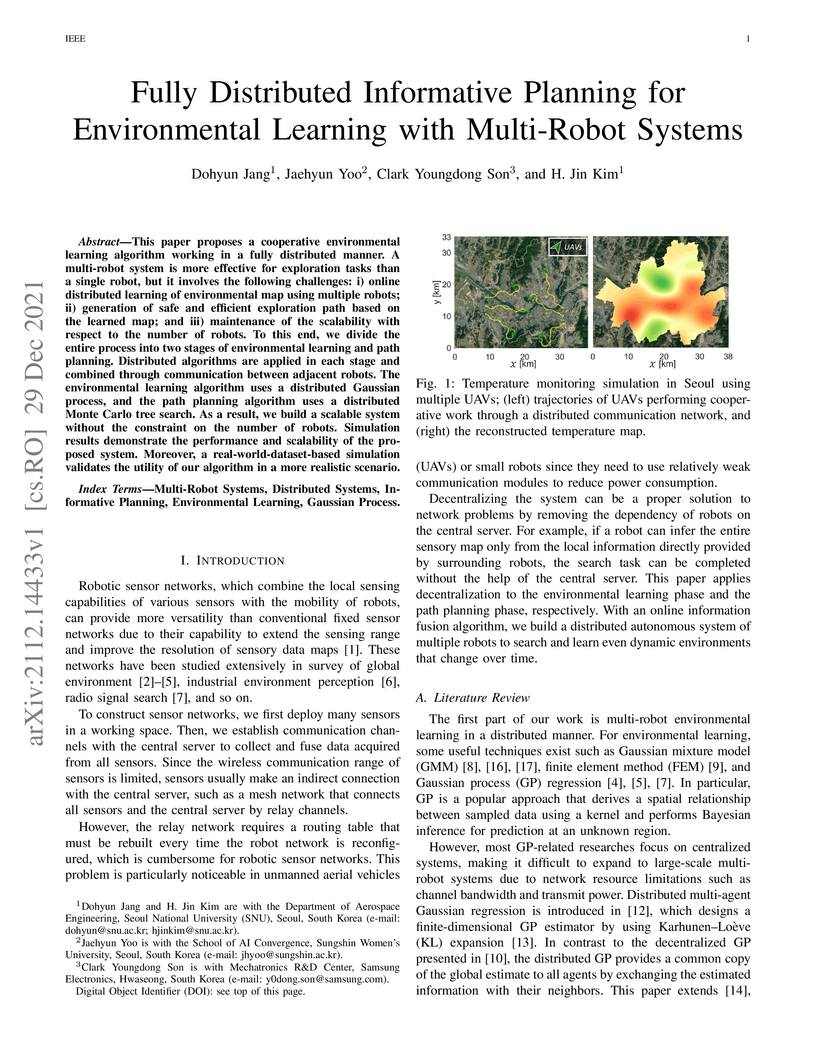

This paper proposes a cooperative environmental learning algorithm working in a fully distributed manner. A multi-robot system is more effective for exploration tasks than a single robot, but it involves the following challenges: 1) online distributed learning of environmental map using multiple robots; 2) generation of safe and efficient exploration path based on the learned map; and 3) maintenance of the scalability with respect to the number of robots. To this end, we divide the entire process into two stages of environmental learning and path planning. Distributed algorithms are applied in each stage and combined through communication between adjacent robots. The environmental learning algorithm uses a distributed Gaussian process, and the path planning algorithm uses a distributed Monte Carlo tree search. As a result, we build a scalable system without the constraint on the number of robots. Simulation results demonstrate the performance and scalability of the proposed system. Moreover, a real-world-dataset-based simulation validates the utility of our algorithm in a more realistic scenario.

Early detection of cancers has been much explored due to its paramount importance in biomedical fields. Among different types of data used to answer this biological question, studies based on T cell receptors (TCRs) are under recent spotlight due to the growing appreciation of the roles of the host immunity system in tumor biology. However, the one-to-many correspondence between a patient and multiple TCR sequences hinders researchers from simply adopting classical statistical/machine learning methods. There were recent attempts to model this type of data in the context of multiple instance learning (MIL).

Despite the novel application of MIL to cancer detection using TCR sequences and the demonstrated adequate performance in several tumor types, there is still room for improvement, especially for certain cancer types. Furthermore, explainable neural network models are not fully investigated for this application.

In this article, we propose multiple instance neural networks based on sparse attention (MINN-SA) to enhance the performance in cancer detection and explainability. The sparse attention structure drops out uninformative instances in each bag, achieving both interpretability and better predictive performance in combination with the skip connection.

Our experiments show that MINN-SA yields the highest area under the ROC curve (AUC) scores on average measured across 10 different types of cancers, compared to existing MIL approaches. Moreover, we observe from the estimated attentions that MINN-SA can identify the TCRs that are specific for tumor antigens in the same T cell repertoire.

20 Sep 2022

In this paper, we derive a new version of Hanson-Wright inequality for a

sparse bilinear form of sub-Gaussian variables. Our results are generalization

of previous deviation inequalities that consider either sparse quadratic forms

or dense bilinear forms. We apply the new concentration inequality to testing

the cross-covariance matrix when data are subject to missing. Using our

results, we can find a threshold value of correlations that controls the

family-wise error rate. Furthermore, we discuss the multiplicative measurement

error case for the bilinear form with a boundedness condition.

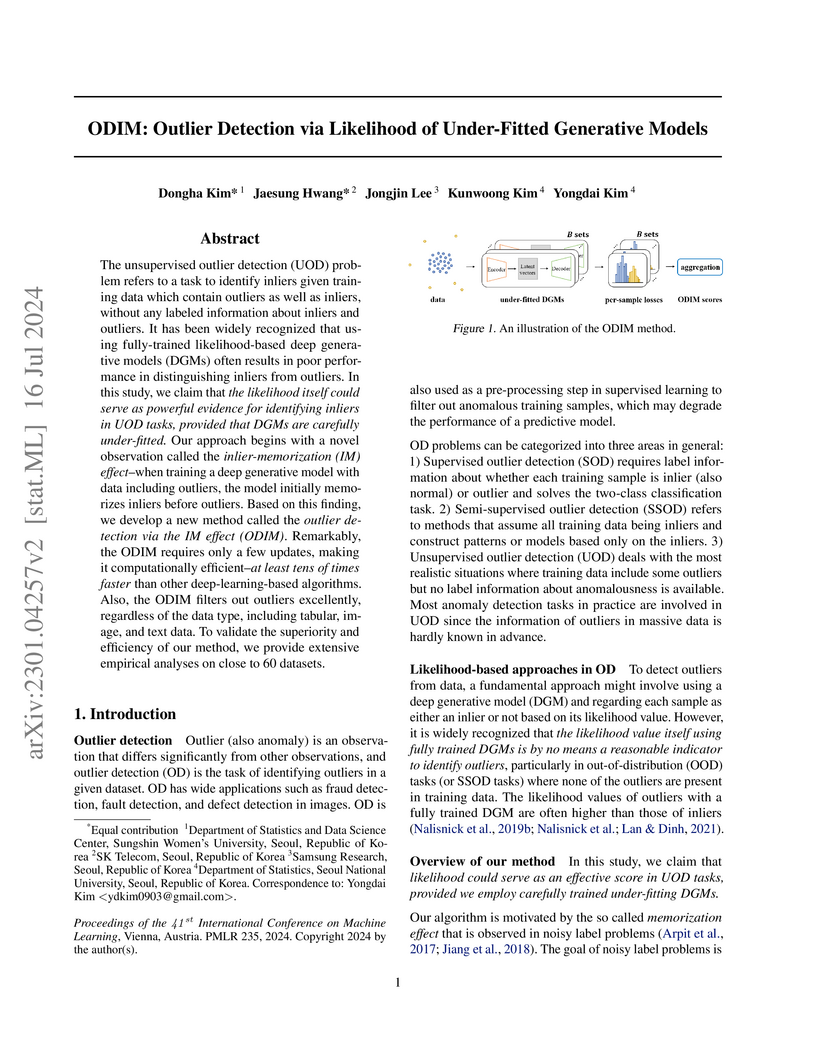

The unsupervised outlier detection (UOD) problem refers to a task to identify

inliers given training data which contain outliers as well as inliers, without

any labeled information about inliers and outliers. It has been widely

recognized that using fully-trained likelihood-based deep generative models

(DGMs) often results in poor performance in distinguishing inliers from

outliers. In this study, we claim that the likelihood itself could serve as

powerful evidence for identifying inliers in UOD tasks, provided that DGMs are

carefully under-fitted. Our approach begins with a novel observation called the

inlier-memorization (IM) effect-when training a deep generative model with data

including outliers, the model initially memorizes inliers before outliers.

Based on this finding, we develop a new method called the outlier detection via

the IM effect (ODIM). Remarkably, the ODIM requires only a few updates, making

it computationally efficient-at least tens of times faster than other

deep-learning-based algorithms. Also, the ODIM filters out outliers

excellently, regardless of the data type, including tabular, image, and text

data. To validate the superiority and efficiency of our method, we provide

extensive empirical analyses on close to 60 datasets.

There are no more papers matching your filters at the moment.