Tunisia Polytechnic School, University of Carthage

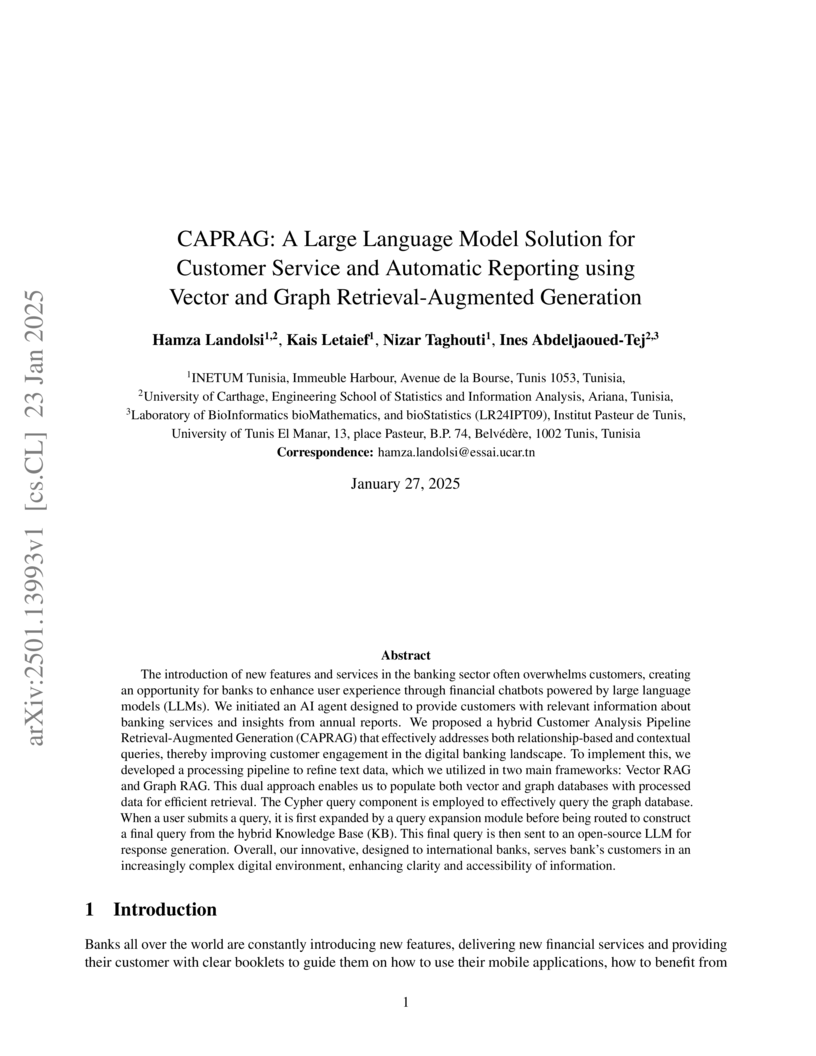

The introduction of new features and services in the banking sector often overwhelms customers, creating an opportunity for banks to enhance user experience through financial chatbots powered by large language models (LLMs). We introduce an AI agent designed to provide customers with relevant information about banking services and insights from annual reports. We propose a hybrid Customer Analysis Pipeline Retrieval-Augmented Generation (CAPRAG) framework that effectively addresses both relationship-based and contextual queries, thereby improving customer engagement in the digital banking landscape. To implement it, we developed a processing pipeline to refine text data, which feeds two main frameworks: Vector RAG and Graph RAG. This dual approach lets us populate both vector and graph databases with processed data for efficient retrieval, and a Cypher query component is used to query the graph database. When a user submits a query, it is first expanded by a query expansion module and then routed to construct a final query from the hybrid Knowledge Base (KB). This final query is then sent to an open-source LLM for response generation. Overall, our approach, designed for international banks, serves banks' customers in an increasingly complex digital environment by enhancing the clarity and accessibility of information.
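The query flow described above (expansion, retrieval from both stores, prompt assembly) can be sketched as a toy pipeline. This is a stand-in under stated assumptions, not the CAPRAG implementation. Bag-of-words cosine similarity replaces learned embeddings, a Python dict replaces the graph database and its Cypher queries, and a synonym table replaces the query expansion module. The corpus, graph, and all function names are hypothetical.

```python
import math
from collections import Counter

# Hypothetical toy corpus standing in for the vector database (text chunks).
CHUNKS = [
    "savings account offers monthly interest",
    "mobile banking app supports instant transfers",
    "annual report shows revenue growth in retail banking",
]

# Hypothetical toy graph standing in for the graph database (entity -> related entities).
GRAPH = {
    "savings account": ["monthly interest", "retail banking"],
    "mobile banking app": ["instant transfers"],
}

# Hypothetical synonym table used as a stand-in for the query expansion module.
SYNONYMS = {"app": ["application"], "interest": ["rate"]}


def expand_query(query: str) -> str:
    """Append known synonyms to the lower-cased query."""
    extra = [s for w in query.lower().split() for s in SYNONYMS.get(w, [])]
    return " ".join([query.lower()] + extra)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def vector_retrieve(query: str, k: int = 1) -> list:
    """Return the k chunks most similar to the query (stand-in for embedding search)."""
    q = Counter(query.split())
    ranked = sorted(CHUNKS, key=lambda c: cosine(q, Counter(c.split())), reverse=True)
    return ranked[:k]


def graph_retrieve(query: str) -> list:
    """Return relation facts for entities mentioned in the query (stand-in for Cypher)."""
    return [f"{e} -> {n}" for e, ns in GRAPH.items() if e in query for n in ns]


def build_prompt(query: str) -> str:
    """Merge vector and graph context into the final prompt that would go to the LLM."""
    q = expand_query(query)
    context = vector_retrieve(q) + graph_retrieve(q)
    return "Context:\n" + "\n".join(context) + f"\nQuestion: {query}"
```

For the question "What interest does the savings account pay?", the vector side returns the savings-account chunk. The graph side adds both relations of the `savings account` entity. This mirrors, in miniature, why the hybrid KB can answer both contextual and relationship-based queries.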

05 May 2020

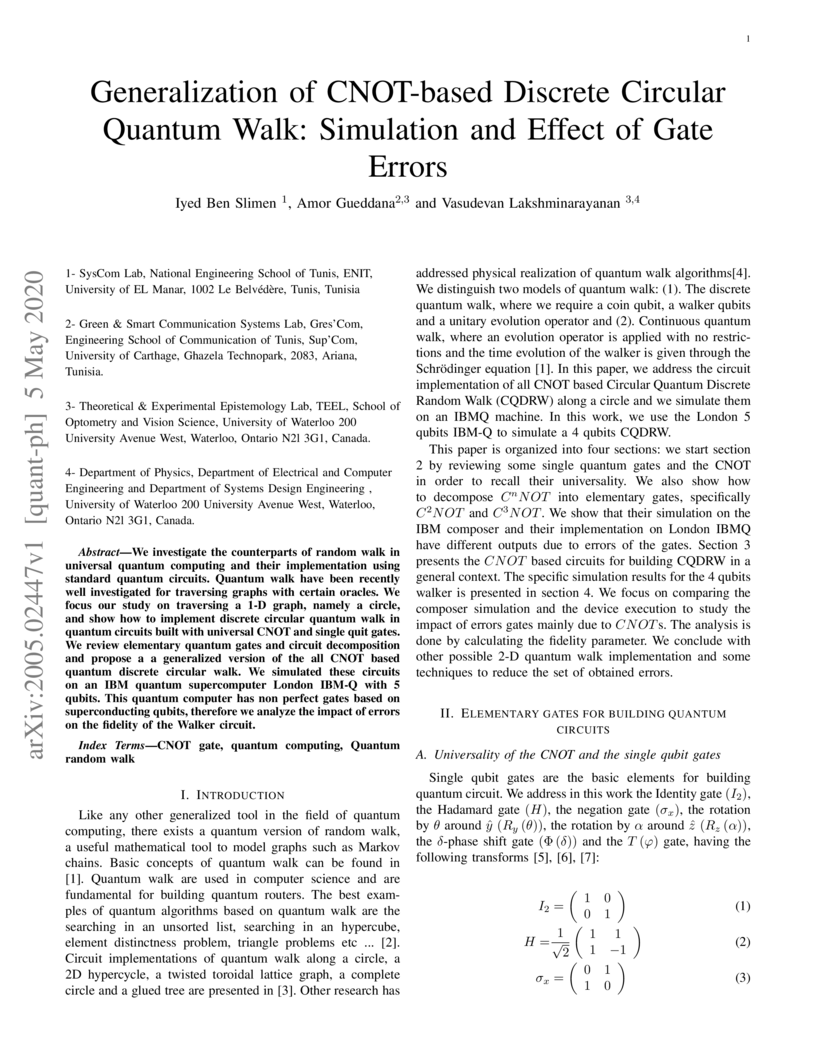

We investigate the counterparts of random walks in universal quantum computing and their implementation using standard quantum circuits. Quantum walks have recently been well investigated for traversing graphs with certain oracles. We focus our study on traversing a 1-D graph, namely a circle, and show how to implement a discrete circular quantum walk in quantum circuits built from universal CNOT and single-qubit gates. We review elementary quantum gates and circuit decomposition, and we propose a generalized version of the all-CNOT-based discrete circular quantum walk. We executed these circuits on the 5-qubit IBM-Q London quantum computer. Because this machine uses superconducting qubits with imperfect gates, we analyze the impact of gate errors on the fidelity of the walker circuit.
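A classical state-vector simulation helps show what the walker circuit computes. The sketch below rests on three assumptions: a Hadamard coin, an initial coin state $|0\rangle$, and the convention that coin 0 steps counter-clockwise while coin 1 steps clockwise. It is not the paper's circuit. On hardware, the shift corresponds to incrementing or decrementing the position register modulo the cycle length, controlled by the coin qubit, and that shift is the part built from CNOT-based gates.

```python
import math


def hadamard_walk(n_nodes: int, steps: int, start: int = 0) -> list:
    """Return position probabilities of a Hadamard-coin quantum walk on a cycle."""
    # amp[(position, coin)] -> complex amplitude; the walker starts with coin state |0>.
    amp = {(start, 0): 1 + 0j}
    h = 1 / math.sqrt(2)
    for _ in range(steps):
        # Coin step: H|0> = (|0> + |1>)/sqrt(2), H|1> = (|0> - |1>)/sqrt(2).
        coined = {}
        for (x, c), a in amp.items():
            coined[(x, 0)] = coined.get((x, 0), 0) + h * a
            coined[(x, 1)] = coined.get((x, 1), 0) + (h if c == 0 else -h) * a
        # Shift step: coin 0 moves one node counter-clockwise, coin 1 clockwise (mod n).
        amp = {}
        for (x, c), a in coined.items():
            y = (x - 1) % n_nodes if c == 0 else (x + 1) % n_nodes
            amp[(y, c)] = amp.get((y, c), 0) + a
    # Measure the position register: sum |amplitude|^2 over both coin states.
    probs = [0.0] * n_nodes
    for (x, _), a in amp.items():
        probs[x] += abs(a) ** 2
    return probs
```

For example, `hadamard_walk(8, 2)` puts probability 0.5 on node 0 and 0.25 on each of nodes 2 and 6.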

30 Oct 2014

For fixed $c$, the Prolate Spheroidal Wave Functions (PSWFs), denoted by $\psi_{n,c}$, form an orthogonal basis with remarkable properties for the space of band-limited functions with bandwidth $c$. They have been widely studied and used since the seminal work of D. Slepian and his co-authors. Several applications require estimates of the $\psi_{n,c}$ that are uniform in both $n$ and $c$. To progress in this direction, we push forward the uniform approximation error bounds and give an explicit approximation of their values at 1 in terms of the Legendre complete elliptic integral of the first kind. We also give an explicit formula for accurately approximating the eigenvalues of the Sturm-Liouville operator associated with the PSWFs.
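The abstract expresses the values of $\psi_{n,c}$ at 1 through the Legendre complete elliptic integral of the first kind, $K(k)=\int_0^{\pi/2}\frac{d\theta}{\sqrt{1-k^2\sin^2\theta}}$. The paper's approximation formula is not reproduced here. As a standard building block, the sketch below evaluates $K(k)$ with the classical arithmetic-geometric mean identity $K(k)=\pi/\bigl(2\,\mathrm{AGM}(1,\sqrt{1-k^2})\bigr)$. The function name is illustrative.

```python
import math


def complete_elliptic_k(k: float, tol: float = 1e-15) -> float:
    """Legendre complete elliptic integral of the first kind K(k), modulus 0 <= k < 1."""
    if not 0.0 <= k < 1.0:
        raise ValueError("modulus k must satisfy 0 <= k < 1")
    a, b = 1.0, math.sqrt(1.0 - k * k)
    # Arithmetic-geometric mean iteration; converges quadratically, so 64 rounds is ample.
    for _ in range(64):
        if abs(a - b) <= tol * a:
            break
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    return math.pi / (2.0 * a)
```

`complete_elliptic_k(0.0)` returns $\pi/2$, and the value grows without bound as $k \to 1$.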

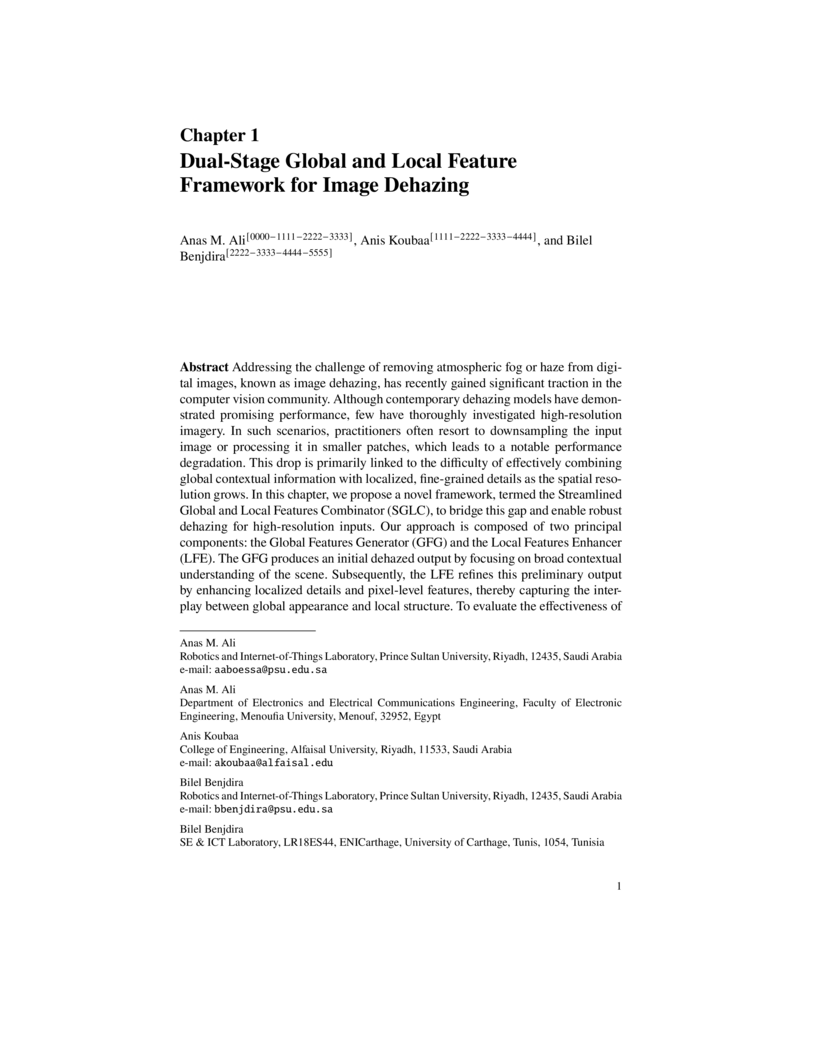

Addressing the challenge of removing atmospheric fog or haze from digital images, known as image dehazing, has recently gained significant traction in the computer vision community. Although contemporary dehazing models have demonstrated promising performance, few have thoroughly investigated high-resolution imagery. In such scenarios, practitioners often resort to downsampling the input image or processing it in smaller patches, which leads to a notable performance degradation. This drop is primarily linked to the difficulty of effectively combining global contextual information with localized, fine-grained details as the spatial resolution grows. In this chapter, we propose a novel framework, termed the Streamlined Global and Local Features Combinator (SGLC), to bridge this gap and enable robust dehazing for high-resolution inputs. Our approach is composed of two principal components: the Global Features Generator (GFG) and the Local Features Enhancer (LFE). The GFG produces an initial dehazed output by focusing on broad contextual understanding of the scene. Subsequently, the LFE refines this preliminary output by enhancing localized details and pixel-level features, thereby capturing the interplay between global appearance and local structure. To evaluate the effectiveness of SGLC, we integrated it with the Uformer architecture, a state-of-the-art dehazing model. Experimental results on high-resolution datasets reveal a considerable improvement in peak signal-to-noise ratio (PSNR) when employing SGLC, indicating its effectiveness in removing haze from large-scale imagery. Moreover, the SGLC design is model-agnostic, allowing any dehazing network to be augmented with the proposed global-and-local feature fusion mechanism. Through this strategy, practitioners can harness both scene-level cues and granular details, significantly improving visual fidelity in high-resolution environments.
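The evaluation above is reported in PSNR, so here is a minimal sketch of that metric, $\mathrm{PSNR}=10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, for grayscale images stored as nested lists. The 8-bit default `max_value=255` is an assumption, and nothing here models the SGLC architecture itself.

```python
import math


def psnr(reference: list, restored: list, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images."""
    if len(reference) != len(restored) or any(
        len(r) != len(s) for r, s in zip(reference, restored)
    ):
        raise ValueError("images must have the same shape")
    n = sum(len(r) for r in reference)
    # Mean squared error over all pixels.
    mse = sum((p - q) ** 2 for r, s in zip(reference, restored) for p, q in zip(r, s)) / n
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

An MSE of 1 on 8-bit images gives about 48.13 dB. Higher PSNR means the dehazed output is closer to the haze-free reference.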

The rapid expansion of information from diverse sources has heightened the need for effective automatic text summarization, which condenses documents into shorter, coherent texts. Summarization methods generally fall into two categories: extractive, which selects key segments from the original text, and abstractive, which generates summaries by coherently rephrasing the content. Large language models have advanced abstractive summarization, but they are resource-intensive and struggle to retain key information across lengthy documents, a problem we refer to as being "lost in the middle". To address these issues, we propose a hybrid summarization approach that combines extractive and abstractive techniques. Our method splits the document into smaller text chunks, clusters their vector embeddings, generates a summary for each cluster (each representing a key idea in the document), and constructs the final summary by using a Markov chain graph to select the semantic order of the ideas.
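The final ordering step can be sketched as follows, assuming each chunk already carries the id of its cluster; the embedding and clustering steps are omitted. The sketch counts transitions between the clusters of consecutive chunks, which is a first-order Markov chain estimate. It then greedily follows the most frequent unvisited transition. Normalizing the counts into probabilities would not change that choice. The greedy rule, the tie-breaking, and the function name are assumptions, not necessarily the authors' procedure.

```python
from collections import defaultdict


def order_clusters(chunk_labels: list) -> list:
    """Order cluster ids by following the most likely transitions between consecutive chunks.

    Assumes a non-empty list of cluster ids given in document order.
    """
    # Transition counts between clusters of consecutive chunks (first-order Markov chain).
    counts = defaultdict(lambda: defaultdict(int))
    for a, b in zip(chunk_labels, chunk_labels[1:]):
        if a != b:
            counts[a][b] += 1
    order = [chunk_labels[0]]  # start from the cluster that opens the document
    remaining = set(chunk_labels) - {order[0]}
    while remaining:
        current = order[-1]
        # Highest transition count wins; ties and dead ends fall back to first appearance.
        first_seen = {c: chunk_labels.index(c) for c in remaining}
        nxt = max(remaining, key=lambda c: (counts[current][c], -first_seen[c]))
        order.append(nxt)
        remaining.remove(nxt)
    return order
```

For `order_clusters([2, 2, 0, 0, 1, 0, 1])`, the chain starts at cluster 2, follows 2→0 and then 0→1, and returns `[2, 0, 1]`. The per-cluster summaries would then be concatenated in that order.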

Three-dimensional (3D) point cloud analysis has become one of the most attractive subjects in realistic imaging and machine vision due to its simplicity, flexibility, and powerful visualization capacity. In particular, representing scenes and buildings with 3D shapes and formats has enabled many applications, including autonomous driving and scene and object reconstruction. Nevertheless, working with this emerging type of data remains challenging for object representation, scene recognition, segmentation, and reconstruction. In this regard, significant effort has recently been devoted to developing novel strategies using different techniques, such as deep learning models. To that end, we present in this paper a comprehensive review of existing tasks on 3D point clouds: we define a taxonomy of existing techniques based on the nature of the adopted algorithms, application scenarios, and main objectives. Various tasks performed on 3D point cloud data are investigated, including object and scene detection, recognition, segmentation, and reconstruction. In addition, we introduce a list of commonly used datasets, discuss the respective evaluation metrics, and compare the performance of existing solutions to better inform the state of the art and identify their strengths and limitations. Lastly, we elaborate on current challenges facing this technology and on future trends attracting considerable interest, which could be a starting point for upcoming research studies.

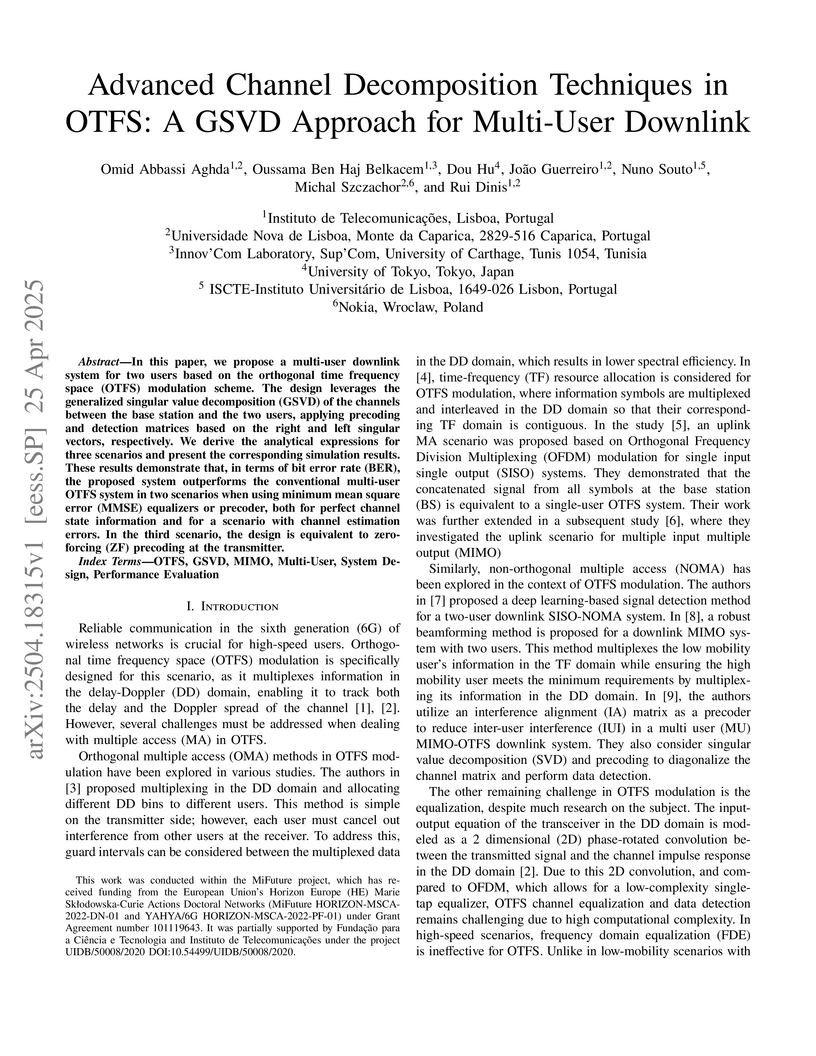

In this paper, we propose a multi-user downlink system for two users based on the orthogonal time frequency space (OTFS) modulation scheme. The design leverages the generalized singular value decomposition (GSVD) of the channels between the base station and the two users, applying precoding and detection matrices based on the right and left singular vectors, respectively. We derive the analytical expressions for three scenarios and present the corresponding simulation results. These results demonstrate that, in terms of bit error rate (BER), the proposed system outperforms the conventional multi-user OTFS system in two scenarios when using minimum mean square error (MMSE) equalizers or precoders, both with perfect channel state information and with channel estimation errors. In the third scenario, the design is equivalent to zero-forcing (ZF) precoding at the transmitter.

A cardiac Implantable Medical Device (IMD) is a device that is surgically implanted into a patient's body and wirelessly configured by prescribing physicians using an external programmer. A set of lethal attacks can be conducted against these devices because of their vulnerable wireless communication and security protocols and the lack of security protection mechanisms deployed on IMDs. In this paper, we propose a system for the postmortem analysis of lethal attack scenarios targeting cardiac IMDs. The system reconciles, in a single framework, conclusions derived by technical investigators and deductions generated by pathologists. An inference system integrating a library of medical rules is used to automatically infer potential medical scenarios that could have led to the death of a patient. We also propose a formal technique based on model checking that reconstructs potential technical attack scenarios on the IMD, starting from the collected evidence. Correlating the results of the two techniques makes it possible to prove whether a potential attack scenario caused the patient's death.
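Reconstructing attack scenarios by model checking amounts to a reachability question: which sequences of actions lead from the initial device state to a state consistent with the collected evidence? The toy sketch below answers that question with breadth-first search over an explicit state space. The three-flag device model, the attacker actions, and the function names are hypothetical. A real analysis would use a model checker and a faithful model of the IMD protocol.

```python
from collections import deque
from typing import Callable, List, Optional, Tuple

# Hypothetical toy IMD state: (session_open, therapy_enabled, shock_delivered).
State = Tuple[bool, bool, bool]
INITIAL: State = (False, True, False)

# Hypothetical attacker actions: name -> (guard, effect) over the state tuple.
ACTIONS = {
    "replay_wakeup": (lambda s: not s[0], lambda s: (True, s[1], s[2])),
    "disable_therapy": (lambda s: s[0], lambda s: (s[0], False, s[2])),
    "induce_shock": (lambda s: s[0], lambda s: (s[0], s[1], True)),
}


def reconstruct(evidence: Callable[[State], bool]) -> Optional[List[str]]:
    """Shortest action sequence whose end state satisfies the evidence, or None."""
    queue = deque([(INITIAL, [])])
    seen = {INITIAL}
    while queue:
        state, trace = queue.popleft()
        if evidence(state):
            return trace
        for name, (guard, effect) in ACTIONS.items():
            if guard(state):
                nxt = effect(state)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, trace + [name]))
    return None  # no scenario in the model explains the evidence
```

If the device was found with therapy disabled, `reconstruct(lambda s: not s[1])` yields `["replay_wakeup", "disable_therapy"]`. That candidate technical scenario would then be correlated with the pathologists' conclusions.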

Nowadays, social networks such as Twitter, Facebook and LinkedIn have become increasingly popular. They have introduced new habits and new ways of communication, and every day they collect large amounts of information from different sources. Most existing research focuses on the analysis of homogeneous social networks, i.e., networks with a single type of node and link. However, real-world social networks contain several types of nodes and links. Hence, to preserve as much information as possible, it is important to consider social networks as heterogeneous and uncertain. The goal of our paper is to classify social messages based on their spread in the network and on the theory of belief functions. The proposed classifier interprets the spread of messages on the network, the paths they cross, and the types of links. We tested our classifier on a real-world network that we collected from Twitter, and our experiments show the performance of our belief classifier.
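In the theory of belief functions, evidence from several sources (for instance, different propagation paths or link types) is commonly fused with Dempster's rule of combination. A minimal sketch follows, with mass functions represented as dicts from frozensets of hypotheses to masses. The class labels are hypothetical, and the abstract does not state which combination rule the classifier uses.

```python
from itertools import product


def dempster_combine(m1: dict, m2: dict) -> dict:
    """Combine two mass functions (frozenset -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, x), (b, y) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + x * y
        else:
            conflict += x * y  # mass that would fall on the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: sources cannot be combined")
    # Renormalize by the non-conflicting mass.
    return {s: v / (1.0 - conflict) for s, v in combined.items()}
```

Combining $m_1(\{\text{rumor}\})=0.6$, $m_1(\Theta)=0.4$ with $m_2(\{\text{news}\})=0.5$, $m_2(\Theta)=0.5$ gives a conflict of 0.3. After normalization, the masses are 3/7 on {rumor}, 2/7 on {news}, and 2/7 on the whole frame $\Theta$.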

Speech recognition aims to predict spoken words automatically. Such systems are known to be very expensive because they require many hours of pre-recorded speech. Hence, building a model that minimizes the cost of the recognizer is of great interest. In this paper, we present a new approach to speech recognition based on belief HMMs instead of probabilistic HMMs. Experiments show that our belief recognizer is insensitive to a lack of data: it can be trained using only one example of each acoustic unit and still gives good recognition rates. Consequently, using the belief HMM recognizer can greatly reduce the cost of these systems.

In the last few years, Unmanned Aerial Vehicles (UAVs) have been driving a revolution as an emerging technology with many different applications in the military, civilian, and commercial fields. The advent of autonomous drones has raised serious challenges, including how to maintain their safe operation during missions. The safe operation of UAVs remains an open and sensitive issue, since any unexpected behavior of the drone or any hazard could lead to potentially severe risks. The motivation behind this work is to propose a methodology for the safety assurance of drones over the Internet (Internet of Drones, IoD). Two approaches are used to perform the safety analysis: (1) a qualitative safety analysis approach, and (2) a quantitative safety analysis approach. The first approach uses the international safety standards ISO 12100 and ISO 13849 to assess the safety of drone missions, focusing on qualitative assessment techniques. The methodology proceeds through hazard identification, risk assessment, and risk mitigation, and finally draws the safety recommendations associated with a drone delivery use case. The second approach presents a method for quantitative safety assessment using Bayesian Networks (BN) for probabilistic modeling. The BN uses the information provided by the first approach to model the safety risks related to UAV flights. An illustrative UAV crash scenario is presented as a case study, followed by a scenario analysis, to demonstrate the applicability of the proposed approach. Together, these qualitative and quantitative analyses enable all involved stakeholders to detect, explore, and address the risks of UAV flights, which will help the industry better manage the safety concerns of UAVs.
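The quantitative step can be illustrated with a toy Bayesian network for a crash scenario. In it, bad weather raises the probability of motor failure, and a crash depends on motor failure and GPS loss. The structure and every probability below are hypothetical placeholders, not values from the study. Inference uses brute-force enumeration, which is only practical for small networks.

```python
from itertools import product

# Hypothetical, illustrative probabilities; not values from the study.
P_WEATHER = {True: 0.2, False: 0.8}   # P(BadWeather)
P_GPS = {True: 0.05, False: 0.95}     # P(GPSLoss)
P_MOTOR = {True: 0.10, False: 0.02}   # P(MotorFailure=True | BadWeather)
P_CRASH = {                           # P(Crash=True | MotorFailure, GPSLoss)
    (True, True): 0.95, (True, False): 0.70,
    (False, True): 0.30, (False, False): 0.001,
}


def joint(weather: bool, gps: bool, motor: bool, crash: bool) -> float:
    """Joint probability factorized along the network structure."""
    p_motor = P_MOTOR[weather] if motor else 1 - P_MOTOR[weather]
    p_crash = P_CRASH[(motor, gps)] if crash else 1 - P_CRASH[(motor, gps)]
    return P_WEATHER[weather] * P_GPS[gps] * p_motor * p_crash


def query(target, evidence) -> float:
    """P(target | evidence) by enumeration; both arguments are predicates on (w, g, m, c)."""
    num = den = 0.0
    for w, g, m, c in product([True, False], repeat=4):
        p = joint(w, g, m, c)
        if evidence(w, g, m, c):
            den += p
            if target(w, g, m, c):
                num += p
    return num / den
```

With these placeholder numbers, the prior probability of motor failure is 0.036. Observing a crash raises it to about 0.63, which is the kind of diagnostic reasoning used in scenario analysis.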

In this paper, we propose an original object detection methodology applied to the Global Wheat Head Detection (GWHD) Dataset. We explored two major object detection architectures, FasterRCNN and EfficientDet, in order to design a novel and robust wheat head detection model, with an emphasis on optimizing the performance of our final architectures. Furthermore, we carried out an extensive exploratory data analysis and adapted the best data augmentation techniques to our context. We use semi-supervised learning to boost our supervised object detection models. Moreover, we put considerable effort into ensembling to achieve higher performance. Finally, we use specific post-processing techniques to optimize our wheat head detection results. Our results were submitted to a research challenge launched on the GWHD Dataset, which is led by nine research institutes from seven countries, and our proposed method ranked within the top 6% of that challenge.
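Ensembling detectors usually means pooling the boxes predicted by several models and then removing duplicates. The sketch below shows greedy non-maximum suppression (NMS) on boxes in `(x1, y1, x2, y2)` format. NMS is named here as a generic illustration; the abstract does not say which post-processing or box-fusion method was used. The 0.5 IoU threshold is an assumed default.

```python
def iou(a, b) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold: float = 0.5) -> list:
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    # Visit boxes from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with an already kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

If two models both detect the same wheat head, as in boxes `(0, 0, 10, 10)` and `(1, 1, 10, 10)` with IoU 0.81, only the higher-scoring box survives. A separate head elsewhere in the image is kept.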

07 Oct 2025

We introduce a new generalization of Stirling numbers of the second kind and analyze their properties, including generating functions, integral representations, and recurrence relations. These numbers are used to approximate Riemann zeta values by rationals with exponentially decreasing error. We establish connections with Hurwitz zeta functions, polylogarithms, harmonic sums, and multiple sums. Finally, we extend our study to q-Stirling numbers, linking them to q-hypergeometric functions and a q-zeta function, revealing new insights in combinatorics and number theory.
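For orientation, the classical Stirling numbers of the second kind $S(n,k)$, which the new family generalizes, count partitions of an $n$-element set into $k$ non-empty blocks. They satisfy $S(n,k)=S(n-1,k-1)+k\,S(n-1,k)$. The sketch below computes the classical numbers only; the abstract does not specify the paper's generalization, so it is not reproduced. The function name is illustrative.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def stirling2(n: int, k: int) -> int:
    """Classical Stirling number of the second kind S(n, k) for n, k >= 0."""
    if n == k:
        return 1  # includes S(0, 0) = 1
    if n == 0 or k == 0:
        return 0
    # Element n either starts a new block or joins one of the k existing blocks.
    return stirling2(n - 1, k - 1) + k * stirling2(n - 1, k)
```

`stirling2(4, 2)` is 7, and summing `stirling2(4, k)` over $k$ gives the Bell number 15.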

02 Feb 2022

In this work, we study a random orthogonal projection based least squares estimator for the stable solution of a multivariate nonparametric regression (MNPR) problem. More precisely, given an integer $d\geq 1$ corresponding to the dimension of the MNPR problem, a positive integer $N\geq 1$ and a real parameter $\alpha\geq -\frac{1}{2}$, we show that a fairly large class of $d$-variate regression functions are well and stably approximated by their random projection over the orthonormal set of tensor product $d$-variate Jacobi polynomials with parameters $(\alpha,\alpha)$. The associated univariate Jacobi polynomials have degree at most $N$, and their tensor products are orthonormal over $U=[0,1]^d$ with respect to the associated multivariate Jacobi weights. In particular, if we consider $n$ random sampling points $X_i$ following the $d$-variate Beta distribution with parameters $(\alpha+1,\alpha+1)$, then we give a relation involving $n$, $N$ and $\alpha$ that ensures the resulting $(N+1)^d\times(N+1)^d$ random projection matrix is well conditioned. Moreover, we provide squared integrated as well as $L^2$-risk errors of this estimator. Precise estimates of these errors are given in the case where the regression function belongs to an isotropic Sobolev space $H^s(I^d)$ with $s>\frac{d}{2}$. Also, to handle the general and practical case of an unknown distribution of the $X_i$, we use Shepard's scattered interpolation scheme to generate fairly precise approximations of the observed data at $n$ i.i.d. sampling points $X_i$ following a $d$-variate Beta distribution. Finally, we illustrate the performance of our proposed multivariate nonparametric estimator through numerical simulations with synthetic as well as real data.
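A one-dimensional special case makes the construction concrete. Assuming $d=1$ and $\alpha=0$, the Jacobi polynomials reduce to Legendre polynomials, and the Beta$(1,1)$ sampling law is uniform on $[0,1]$. The sketch builds the empirical Gram matrix $G=\frac{1}{n}\Phi^\top\Phi$, with $\Phi_{ik}=\varphi_k(X_i)$, of the orthonormal shifted Legendre basis. This is the random projection matrix whose conditioning the abstract discusses. The sketch then solves the least-squares normal equations. Function names are illustrative, and the general Jacobi weights, tensor products, and Shepard interpolation are omitted.

```python
import math
import random


def legendre_orthonormal(k: int, x: float) -> float:
    """Shifted Legendre polynomial of degree k on [0, 1], orthonormal for the uniform density."""
    t = 2.0 * x - 1.0
    p_prev, p = 0.0, 1.0  # P_{-1} (unused) and P_0
    for j in range(k):
        # Bonnet recurrence: (j + 1) P_{j+1} = (2j + 1) t P_j - j P_{j-1}
        p_prev, p = p, ((2 * j + 1) * t * p - j * p_prev) / (j + 1)
    return math.sqrt(2 * k + 1) * p


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit(xs, ys, degree):
    """Least-squares coefficients on the first degree + 1 basis functions, plus the Gram matrix."""
    n, size = len(xs), degree + 1
    phi = [[legendre_orthonormal(k, x) for k in range(size)] for x in xs]
    # Empirical Gram matrix G = (1/n) Phi^T Phi; it approaches the identity as n grows.
    gram = [[sum(r[i] * r[j] for r in phi) / n for j in range(size)] for i in range(size)]
    rhs = [sum(r[i] * y for r, y in zip(phi, ys)) / n for i in range(size)]
    return solve(gram, rhs), gram


def predict(coef, x):
    """Evaluate the fitted expansion at x."""
    return sum(c * legendre_orthonormal(k, x) for k, c in enumerate(coef))


# Example: noise-free samples of y = x^2 at 200 uniform (Beta(1, 1)) points.
rng = random.Random(0)
xs = [rng.random() for _ in range(200)]
coef, gram = fit(xs, [x * x for x in xs], degree=3)
print(predict(coef, 0.3))  # close to 0.09
```

The data are noise-free, and $x^2$ lies in the span of the basis. So the estimator reproduces it up to rounding, provided the Gram matrix stays well conditioned.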

Future wireless communication systems face the challenging task of simultaneously providing high quality of service (QoS) and broadband data transmission while also minimizing power consumption, latency, and system complexity. Although Orthogonal Frequency Division Multiplexing (OFDM) has been widely adopted in 4G and 5G systems, it struggles to cope with the significant delay and Doppler spread of high-mobility scenarios. To address these challenges, a novel waveform called Orthogonal Time Frequency Space (OTFS) has emerged, aiming to outperform OFDM by closely aligning signals with the channel behaviour. In this paper, we propose a switching strategy that lets operators select the most appropriate waveform based on the estimated speed of the mobile user, so that the base station can dynamically choose the waveform that best suits each user's speed. Additionally, we suggest integrating an Integrated Sensing and Communication (ISAC) radar for accurate Doppler estimation, providing precise information to aid waveform selection. By leveraging the switching strategy and the Doppler estimation capabilities of the ISAC radar, our proposed approach aims to enhance the performance of wireless communication systems in high-mobility scenarios. Considering the complexity of waveform processing, we also introduce an optimized hybrid system that combines OTFS and OFDM, reducing complexity while retaining performance benefits. This hybrid system is a promising solution for improving the performance of wireless communication systems in high-mobility scenarios. Simulation results validate the effectiveness of our approach and demonstrate its potential advantages for future wireless communication systems.
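The speed-based switching rule can be sketched under one assumption: the base station compares the maximum Doppler shift $f_D = v f_c / c$ against the subcarrier spacing. The speed $v$ could come, for example, from the ISAC radar's estimate. The normalized-Doppler threshold of 0.05 and the function names are hypothetical; the abstract does not give the actual decision criterion.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def max_doppler_hz(speed_kmh: float, carrier_hz: float) -> float:
    """Maximum Doppler shift f_D = v * f_c / c for a user moving at speed_kmh."""
    return (speed_kmh / 3.6) * carrier_hz / SPEED_OF_LIGHT


def select_waveform(speed_kmh: float, carrier_hz: float, subcarrier_spacing_hz: float,
                    threshold: float = 0.05) -> str:
    """Pick OTFS when the Doppler shift is a large fraction of the subcarrier spacing."""
    # threshold is a hypothetical design parameter, not a value from the paper.
    normalized_doppler = max_doppler_hz(speed_kmh, carrier_hz) / subcarrier_spacing_hz
    return "OTFS" if normalized_doppler > threshold else "OFDM"
```

Consider a 3.5 GHz carrier with 15 kHz spacing. A pedestrian at 3 km/h produces a Doppler shift of about 10 Hz and stays on OFDM. A train at 500 km/h produces about 1.6 kHz and switches to OTFS.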

05 Mar 2025

Light dragging refers to the change in the path of light passing through a moving medium. This effect enables accurate detection of very slow speeds of light, which have prominent applications in state transfer, quantum gate operations, and quantum memory implementations. Here, to the best of our knowledge, we demonstrate for the first time the existence of the light-dragging effect in an optomechanical system (OMS). The effect originates from the nonlinear effects linked to optomechanically induced transparency (OMIT). Hence, we observe prominent effects in the group and refractive index spectra related to optomechanical parameters such as the decay rate of the cavity field, the mirror's momentum damping rate, and the mechanical frequency. We find that the lateral light drag depends on the detuning as well as on the amplitude and direction of the translational velocity. This allowed us to switch the light's propagation through the optomechanical cavity from superluminal to subluminal and vice versa by modifying the probe's detuning. The ability to manipulate and control light drag in an optomechanical system might be useful in designing novel optical devices and systems with enhanced performance.
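For reference, the subluminal/superluminal distinction above is usually stated through the standard group index. In the notation assumed here (not taken from the paper), $n(\omega)$ is the refractive index seen by the probe at angular frequency $\omega$, and $c$ is the speed of light in vacuum:

$$
n_g(\omega) = n(\omega) + \omega\,\frac{\mathrm{d}n}{\mathrm{d}\omega}, \qquad v_g = \frac{c}{n_g}.
$$

Propagation is subluminal when $n_g > 1$ ($v_g < c$) and superluminal when $n_g < 1$, including negative $n_g$. Tuning the probe detuning reshapes $\mathrm{d}n/\mathrm{d}\omega$ around the OMIT window, which is how the detuning can move the system between the two regimes.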

In large organizations, the number of financial transactions can grow rapidly, driving the need for fast and accurate multi-criteria invoice validation. Manual processing remains error-prone and time-consuming, while current automated solutions are limited by their inability to handle a variety of constraints, such as documents that are partially handwritten or photographed with a mobile phone. In this paper, we propose to automate the validation of machine-written invoices using document layout analysis and object detection techniques based on recent deep learning (DL) models. We introduce a novel dataset consisting of manually annotated real-world invoices, together with a multi-criteria validation process. We fine-tune and benchmark the most relevant DL models on our dataset. Experimental results show the effectiveness of the proposed pipeline and the selected DL models in achieving fast and accurate invoice validation.

In this survey, we analyze the newest machine learning (ML) techniques for optical orthogonal frequency division multiplexing (O-OFDM)-based optical communications. ML has been proposed to mitigate channel and transceiver imperfections. For instance, ML can improve the signal quality under a low modulation extinction ratio, or can tackle both deterministic and stochastically induced nonlinearities, such as parametric noise amplification in long-haul transmission. The proposed ML algorithms for O-OFDM can in particular tackle inter-subcarrier nonlinear effects such as four-wave mixing and cross-phase modulation. In essence, these ML techniques could be beneficial for any multi-carrier approach (e.g., filter bank modulation). Supervised and unsupervised ML techniques are analyzed in terms of both O-OFDM transmission performance and computational complexity for potential real-time implementation. We indicate the strict conditions under which an ML algorithm should perform classification, regression, or clustering. The survey also discusses open research issues and future directions towards ML implementation.
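As an example of the clustering case, a receiver can learn decision regions for a distorted constellation with k-means instead of using fixed thresholds. The sketch below is a generic illustration, not taken from any specific surveyed scheme. It assumes QPSK symbols with a fixed phase rotation and Gaussian noise as a stand-in for the nonlinear distortions discussed above. Centroids start at the ideal constellation points, which keeps cluster indices aligned with symbol labels only while the distortion is moderate (here, rotations under 45 degrees).

```python
import cmath
import random

QPSK = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]  # ideal constellation points


def kmeans_complex(points, init, iters: int = 20):
    """Lloyd's k-means on complex symbols, starting from the given centroids."""
    centroids = list(init)
    for _ in range(iters):
        clusters = [[] for _ in centroids]
        for p in points:
            # Assign each received symbol to its nearest centroid.
            k = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[k].append(p)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids


def demap(symbol: complex, centroids) -> int:
    """Decide the index of the nearest learned centroid."""
    return min(range(len(centroids)), key=lambda i: abs(symbol - centroids[i]))


# Example: a 0.6 rad phase rotation plus small Gaussian noise (assumed impairment model).
rng = random.Random(1)
rot = cmath.exp(0.6j)
rx = [s * rot + complex(rng.gauss(0, 0.05), rng.gauss(0, 0.05)) for s in QPSK for _ in range(50)]
centroids = kmeans_complex(rx, QPSK)
```

After training, each centroid sits near its rotated constellation point. Decisions then follow the distorted constellation rather than the ideal one.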
