Ukrainian Catholic University

24 Sep 2024

This article introduces the Clarke transform and Clarke coordinates, which present a solution to the disengagement of an arbitrary number of coupled displacement actuation of continuum and soft robots. The Clarke transform utilizes the generalized Clarke transformation and its inverse to reduce any number of joint values to a two-dimensional space without sacrificing any significant information. This space is the manifold of the joint space and is described by two orthogonal Clarke coordinates. Application to kinematics, sampling, and control are presented. By deriving the solution to the previously unknown forward robot-dependent mapping for an arbitrary number of joints, the forward and inverse kinematics formulations are branchless, closed-form, and singular-free. Sampling is used as a proxy for gauging the performance implications for various methods and frameworks, leading to a branchless, closed-form, and vectorizable sampling method with a 100 percent success rate and the possibility to shape desired distributions. Due to the utilization of the manifold, the fairly simple constraint-informed, two-dimensional, and linear controller always provides feasible control outputs. On top of that, the relations to improved representations in continuum and soft robotics are established, where the Clarke coordinates are their generalizations.

The Clarke transform offers valuable geometric insights and paves the way for developing approaches directly on the two-dimensional manifold within the high-dimensional joint space, ensuring compliance with the constraint. While being an easy-to-construct linear map, the proposed Clarke transform is mathematically consistent, physically meaningful, as well as interpretable and contributes to the unification of frameworks across continuum and soft robots.

We present FEAR, a family of fast, efficient, accurate, and robust Siamese

visual trackers. We present a novel and efficient way to benefit from

dual-template representation for object model adaption, which incorporates

temporal information with only a single learnable parameter. We further improve

the tracker architecture with a pixel-wise fusion block. By plugging-in

sophisticated backbones with the abovementioned modules, FEAR-M and FEAR-L

trackers surpass most Siamese trackers on several academic benchmarks in both

accuracy and efficiency. Employed with the lightweight backbone, the optimized

version FEAR-XS offers more than 10 times faster tracking than current Siamese

trackers while maintaining near state-of-the-art results. FEAR-XS tracker is

2.4x smaller and 4.3x faster than LightTrack with superior accuracy. In

addition, we expand the definition of the model efficiency by introducing FEAR

benchmark that assesses energy consumption and execution speed. We show that

energy consumption is a limiting factor for trackers on mobile devices. Source

code, pretrained models, and evaluation protocol are available at

this https URL

We present DAD-3DHeads, a dense and diverse large-scale dataset, and a robust model for 3D Dense Head Alignment in the wild. It contains annotations of over 3.5K landmarks that accurately represent 3D head shape compared to the ground-truth scans. The data-driven model, DAD-3DNet, trained on our dataset, learns shape, expression, and pose parameters, and performs 3D reconstruction of a FLAME mesh. The model also incorporates a landmark prediction branch to take advantage of rich supervision and co-training of multiple related tasks. Experimentally, DAD-3DNet outperforms or is comparable to the state-of-the-art models in (i) 3D Head Pose Estimation on AFLW2000-3D and BIWI, (ii) 3D Face Shape Reconstruction on NoW and Feng, and (iii) 3D Dense Head Alignment and 3D Landmarks Estimation on DAD-3DHeads dataset. Finally, the diversity of DAD-3DHeads in camera angles, facial expressions, and occlusions enables a benchmark to study in-the-wild generalization and robustness to distribution shifts. The dataset webpage is this https URL.

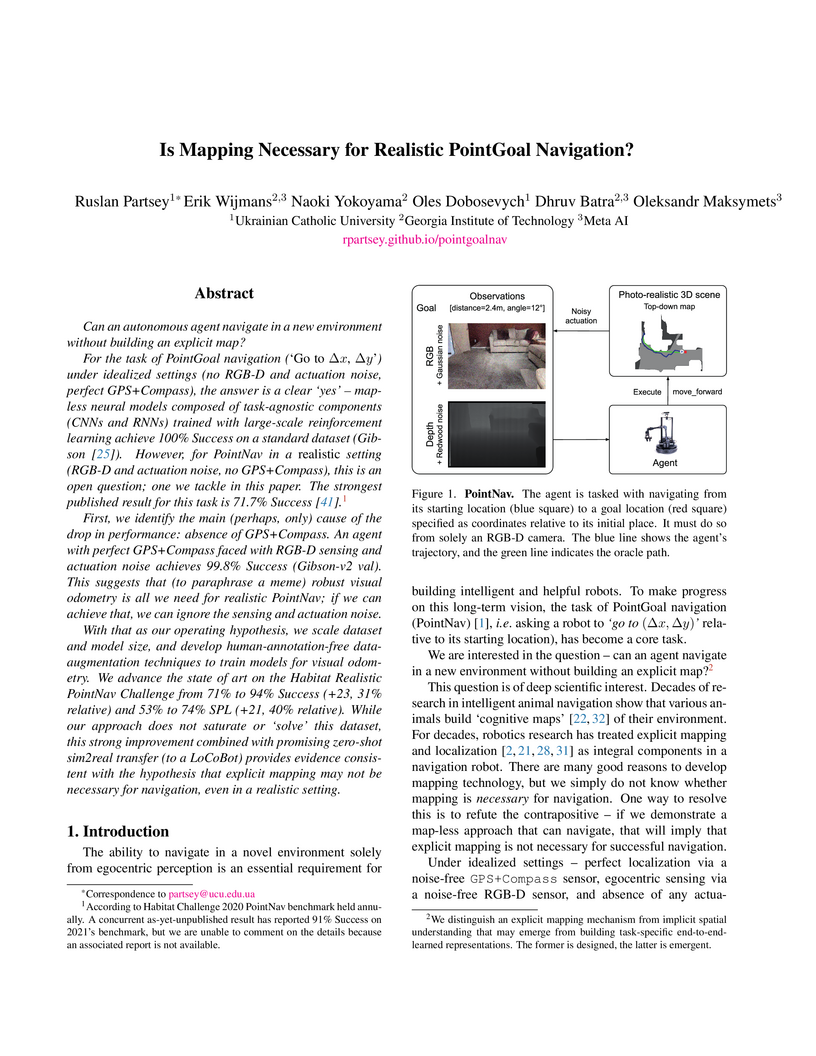

Can an autonomous agent navigate in a new environment without building an

explicit map?

For the task of PointGoal navigation ('Go to Δx, Δy') under

idealized settings (no RGB-D and actuation noise, perfect GPS+Compass), the

answer is a clear 'yes' - map-less neural models composed of task-agnostic

components (CNNs and RNNs) trained with large-scale reinforcement learning

achieve 100% Success on a standard dataset (Gibson). However, for PointNav in a

realistic setting (RGB-D and actuation noise, no GPS+Compass), this is an open

question; one we tackle in this paper. The strongest published result for this

task is 71.7% Success.

First, we identify the main (perhaps, only) cause of the drop in performance:

the absence of GPS+Compass. An agent with perfect GPS+Compass faced with RGB-D

sensing and actuation noise achieves 99.8% Success (Gibson-v2 val). This

suggests that (to paraphrase a meme) robust visual odometry is all we need for

realistic PointNav; if we can achieve that, we can ignore the sensing and

actuation noise.

With that as our operating hypothesis, we scale the dataset and model size,

and develop human-annotation-free data-augmentation techniques to train models

for visual odometry. We advance the state of art on the Habitat Realistic

PointNav Challenge from 71% to 94% Success (+23, 31% relative) and 53% to 74%

SPL (+21, 40% relative). While our approach does not saturate or 'solve' this

dataset, this strong improvement combined with promising zero-shot sim2real

transfer (to a LoCoBot) provides evidence consistent with the hypothesis that

explicit mapping may not be necessary for navigation, even in a realistic

setting.

This paper presents one of the top-performing solutions to the UNLP 2025

Shared Task on Detecting Manipulation in Social Media. The task focuses on

detecting and classifying rhetorical and stylistic manipulation techniques used

to influence Ukrainian Telegram users. For the classification subtask, we

fine-tuned the Gemma 2 language model with LoRA adapters and applied a

second-level classifier leveraging meta-features and threshold optimization.

For span detection, we employed an XLM-RoBERTa model trained for multi-target,

including token binary classification. Our approach achieved 2nd place in

classification and 3rd place in span detection.

Multi-object tracking (MOT) aims to maintain consistent identities of objects across video frames. Associating objects in low-frame-rate videos captured by moving unmanned aerial vehicles (UAVs) in actual combat scenarios is complex due to rapid changes in object appearance and position within the frame. The task becomes even more challenging due to image degradation caused by cloud video streaming and compression algorithms. We present how instance association learning from single-frame annotations can overcome these challenges. We show that global features of the scene provide crucial context for low-FPS instance association, allowing our solution to be robust to distractors and gaps in detections. We also demonstrate that such a tracking approach maintains high association quality even when reducing the input image resolution and latent representation size for faster inference. Finally, we present a benchmark dataset of annotated military vehicles collected from publicly available data sources. This paper was initially presented at the NATO Science and Technology Organization Symposium (ICMCIS) organized by the Information Systems Technology (IST)Scientific and Technical Committee, IST-209-RSY - the ICMCIS, held in Oeiras, Portugal, 13-14 May 2025.

Wikipedia is powered by MediaWiki, a free and open-source software that is

also the infrastructure for many other wiki-based online encyclopedias. These

include the recently launched website Ruwiki, which has copied and modified the

original Russian Wikipedia content to conform to Russian law. To identify

practices and narratives that could be associated with different forms of

knowledge manipulation, this article presents an in-depth analysis of this

Russian Wikipedia fork. We propose a methodology to characterize the main

changes with respect to the original version. The foundation of this study is a

comprehensive comparative analysis of more than 1.9M articles from Russian

Wikipedia and its fork. Using meta-information and geographical, temporal,

categorical, and textual features, we explore the changes made by Ruwiki

editors. Furthermore, we present a classification of the main topics of

knowledge manipulation in this fork, including a numerical estimation of their

scope. This research not only sheds light on significant changes within Ruwiki,

but also provides a methodology that could be applied to analyze other

Wikipedia forks and similar collaborative projects.

This report analyzes key trends, challenges, risks, and opportunities

associated with the development of Large Language Models (LLMs) globally. It

examines national experiences in developing LLMs and assesses the feasibility

of investment in this sector. Additionally, the report explores strategies for

implementing, regulating, and financing AI projects at the state level.

In order to develop robots that can effectively serve as versatile and

capable home assistants, it is crucial for them to reliably perceive and

interact with a wide variety of objects across diverse environments. To this

end, we proposed Open Vocabulary Mobile Manipulation as a key benchmark task

for robotics: finding any object in a novel environment and placing it on any

receptacle surface within that environment. We organized a NeurIPS 2023

competition featuring both simulation and real-world components to evaluate

solutions to this task. Our baselines on the most challenging version of this

task, using real perception in simulation, achieved only an 0.8% success rate;

by the end of the competition, the best participants achieved an 10.8\% success

rate, a 13x improvement. We observed that the most successful teams employed a

variety of methods, yet two common threads emerged among the best solutions:

enhancing error detection and recovery, and improving the integration of

perception with decision-making processes. In this paper, we detail the results

and methodologies used, both in simulation and real-world settings. We discuss

the lessons learned and their implications for future research. Additionally,

we compare performance in real and simulated environments, emphasizing the

necessity for robust generalization to novel settings.

SoftServe Inc. developed a hybrid recommendation system for K-12 students within the AYO® personalized learning platform, which incorporates a dedicated fairness analysis framework. The system assesses algorithmic bias by analyzing precision differences in recommendations across various protected student groups and found that observed disparities in certain categories were due to external factors or upstream data rather than the recommendation algorithm itself.

We apply an ensemble of modified TransBTS, nnU-Net, and a combination of both

for the segmentation task of the BraTS 2021 challenge. In fact, we change the

original architecture of the TransBTS model by adding Squeeze-and-Excitation

blocks, an increasing number of CNN layers, replacing positional encoding in

Transformer block with a learnable Multilayer Perceptron (MLP) embeddings,

which makes Transformer adjustable to any input size during inference. With

these modifications, we are able to largely improve TransBTS performance.

Inspired by a nnU-Net framework we decided to combine it with our modified

TransBTS by changing the architecture inside nnU-Net to our custom model. On

the Validation set of BraTS 2021, the ensemble of these approaches achieves

0.8496, 0.8698, 0.9256 Dice score and 15.72, 11.057, 3.374 HD95 for enhancing

tumor, tumor core, and whole tumor, correspondingly. Our code is publicly

available.

Human head detection, keypoint estimation, and 3D head model fitting are

essential tasks with many applications. However, traditional real-world

datasets often suffer from bias, privacy, and ethical concerns, and they have

been recorded in laboratory environments, which makes it difficult for trained

models to generalize. Here, we introduce \method -- a large-scale synthetic

dataset generated with diffusion models for human head detection and 3D mesh

estimation. Our dataset comprises over 1 million high-resolution images, each

annotated with detailed 3D head meshes, facial landmarks, and bounding boxes.

Using this dataset, we introduce a new model architecture capable of

simultaneous head detection and head mesh reconstruction from a single image in

a single step. Through extensive experimental evaluations, we demonstrate that

models trained on our synthetic data achieve strong performance on real images.

Furthermore, the versatility of our dataset makes it applicable across a broad

spectrum of tasks, offering a general and comprehensive representation of human

heads.

This paper investigates the challenges and potential solutions for improving

machine learning systems for low-resource languages. State-of-the-art models in

natural language processing (NLP), text-to-speech (TTS), speech-to-text (STT),

and vision-language models (VLM) rely heavily on large datasets, which are

often unavailable for low-resource languages. This research explores key areas

such as defining "quality data," developing methods for generating appropriate

data and enhancing accessibility to model training. A comprehensive review of

current methodologies, including data augmentation, multilingual transfer

learning, synthetic data generation, and data selection techniques, highlights

both advancements and limitations. Several open research questions are

identified, providing a framework for future studies aimed at optimizing data

utilization, reducing the required data quantity, and maintaining high-quality

model performance. By addressing these challenges, the paper aims to make

advanced machine learning models more accessible for low-resource languages,

enhancing their utility and impact across various sectors.

Harvard Medical School University of PennsylvaniaMaastricht UniversityDana-Farber Cancer InstituteLudwig Maximilian University of MunichUniversity of California San FranciscoBrigham and Women’s HospitalUT Southwestern Medical CenterMass General BrighamBoston Children’s HospitalUkrainian Catholic UniversityThe Children’s Hospital of PhiladelphiaGeorge Washington School of Medicine and Health Sciences

University of PennsylvaniaMaastricht UniversityDana-Farber Cancer InstituteLudwig Maximilian University of MunichUniversity of California San FranciscoBrigham and Women’s HospitalUT Southwestern Medical CenterMass General BrighamBoston Children’s HospitalUkrainian Catholic UniversityThe Children’s Hospital of PhiladelphiaGeorge Washington School of Medicine and Health Sciences

University of PennsylvaniaMaastricht UniversityDana-Farber Cancer InstituteLudwig Maximilian University of MunichUniversity of California San FranciscoBrigham and Women’s HospitalUT Southwestern Medical CenterMass General BrighamBoston Children’s HospitalUkrainian Catholic UniversityThe Children’s Hospital of PhiladelphiaGeorge Washington School of Medicine and Health Sciences

University of PennsylvaniaMaastricht UniversityDana-Farber Cancer InstituteLudwig Maximilian University of MunichUniversity of California San FranciscoBrigham and Women’s HospitalUT Southwestern Medical CenterMass General BrighamBoston Children’s HospitalUkrainian Catholic UniversityThe Children’s Hospital of PhiladelphiaGeorge Washington School of Medicine and Health SciencesExtracranial tissues visible on brain magnetic resonance imaging (MRI) may

hold significant value for characterizing health conditions and clinical

decision-making, yet they are rarely quantified. Current tools have not been

widely validated, particularly in settings of developing brains or underlying

pathology. We present TissUnet, a deep learning model that segments skull bone,

subcutaneous fat, and muscle from routine three-dimensional T1-weighted MRI,

with or without contrast enhancement. The model was trained on 155 paired

MRI-computed tomography (CT) scans and validated across nine datasets covering

a wide age range and including individuals with brain tumors. In comparison to

AI-CT-derived labels from 37 MRI-CT pairs, TissUnet achieved a median Dice

coefficient of 0.79 [IQR: 0.77-0.81] in a healthy adult cohort. In a second

validation using expert manual annotations, median Dice was 0.83 [IQR:

0.83-0.84] in healthy individuals and 0.81 [IQR: 0.78-0.83] in tumor cases,

outperforming previous state-of-the-art method. Acceptability testing resulted

in an 89% acceptance rate after adjudication by a tie-breaker(N=108 MRIs), and

TissUnet demonstrated excellent performance in the blinded comparative review

(N=45 MRIs), including both healthy and tumor cases in pediatric populations.

TissUnet enables fast, accurate, and reproducible segmentation of extracranial

tissues, supporting large-scale studies on craniofacial morphology, treatment

effects, and cardiometabolic risk using standard brain T1w MRI.

CREDAL introduces the first systematic methodology for the 'close reading' of data models, enabling practitioners to uncover embedded biases, power dynamics, and societal implications within data systems. A qualitative study demonstrated the methodology's usability and usefulness in enhancing understanding, design, and critical evaluation of data models, even for those new to critical analysis.

KAIST

KAIST University of Cambridge

University of Cambridge Shanghai Jiao Tong University

Shanghai Jiao Tong University City University of Hong Kong

City University of Hong Kong University of California, San Diego

University of California, San Diego University of Texas at Austin

University of Texas at Austin Columbia University

Columbia University EPFLBielefeld University

EPFLBielefeld University MITMIT-IBM Watson AI LabUniversity of Colorado Boulder

MITMIT-IBM Watson AI LabUniversity of Colorado Boulder University of GroningenAix Marseille UniversityNara Institute of Science and TechnologyUniversity of TehranUniversity of Cape TownUniversity of the Basque CountrySomosNLPUkrainian Catholic UniversityHiTZ

University of GroningenAix Marseille UniversityNara Institute of Science and TechnologyUniversity of TehranUniversity of Cape TownUniversity of the Basque CountrySomosNLPUkrainian Catholic UniversityHiTZWe present BabyBabelLM, a multilingual collection of datasets modeling the language a person observes from birth until they acquire a native language. We curate developmentally plausible pretraining data aiming to cover the equivalent of 100M English words of content in each of 45 languages. We compile evaluation suites and train baseline models in each language. BabyBabelLM aims to facilitate multilingual pretraining and cognitive modeling.

This work investigates the effectiveness of different pseudonymization techniques, ranging from rule-based substitutions to using pre-trained Large Language Models (LLMs), on a variety of datasets and models used for two widely used NLP tasks: text classification and summarization. Our work provides crucial insights into the gaps between original and anonymized data (focusing on the pseudonymization technique) and model quality and fosters future research into higher-quality anonymization techniques to better balance the trade-offs between data protection and utility preservation. We make our code, pseudonymized datasets, and downstream models publicly available

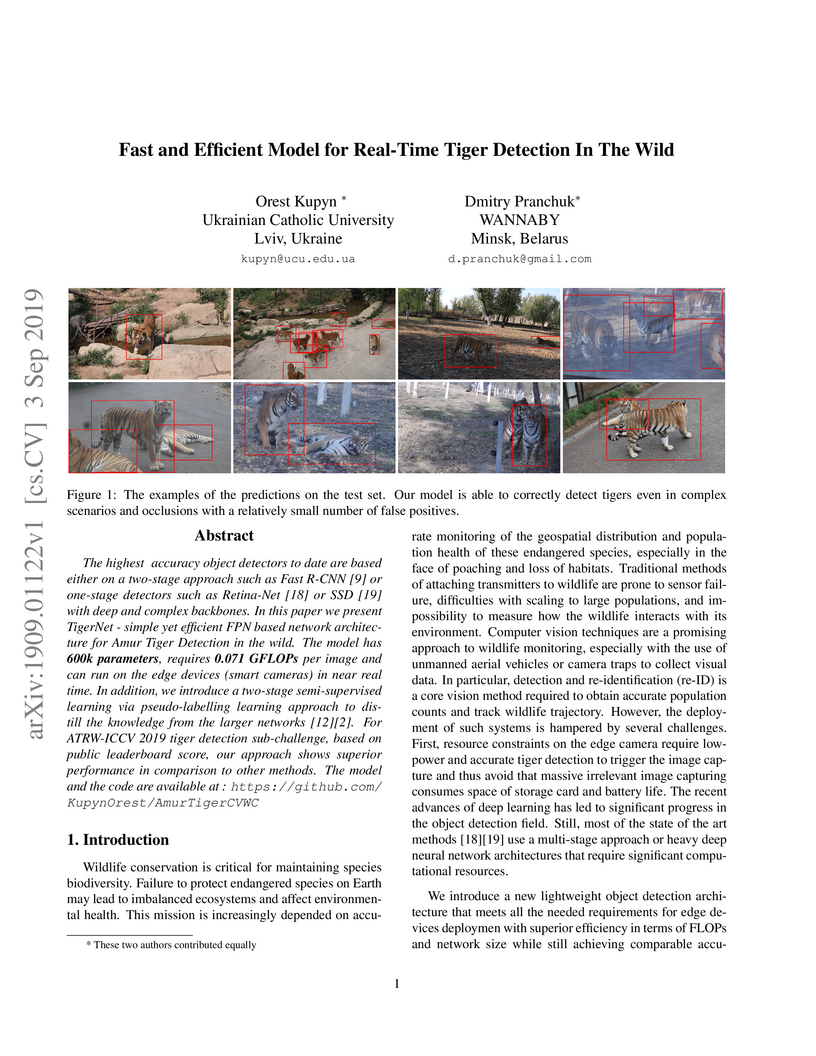

The highest accuracy object detectors to date are based either on a two-stage

approach such as Fast R-CNN or one-stage detectors such as Retina-Net or SSD

with deep and complex backbones. In this paper we present TigerNet - simple yet

efficient FPN based network architecture for Amur Tiger Detection in the wild.

The model has 600k parameters, requires 0.071 GFLOPs per image and can run on

the edge devices (smart cameras) in near real time. In addition, we introduce a

two-stage semi-supervised learning via pseudo-labelling learning approach to

distill the knowledge from the larger networks. For ATRW-ICCV 2019 tiger

detection sub-challenge, based on public leaderboard score, our approach shows

superior performance in comparison to other methods.

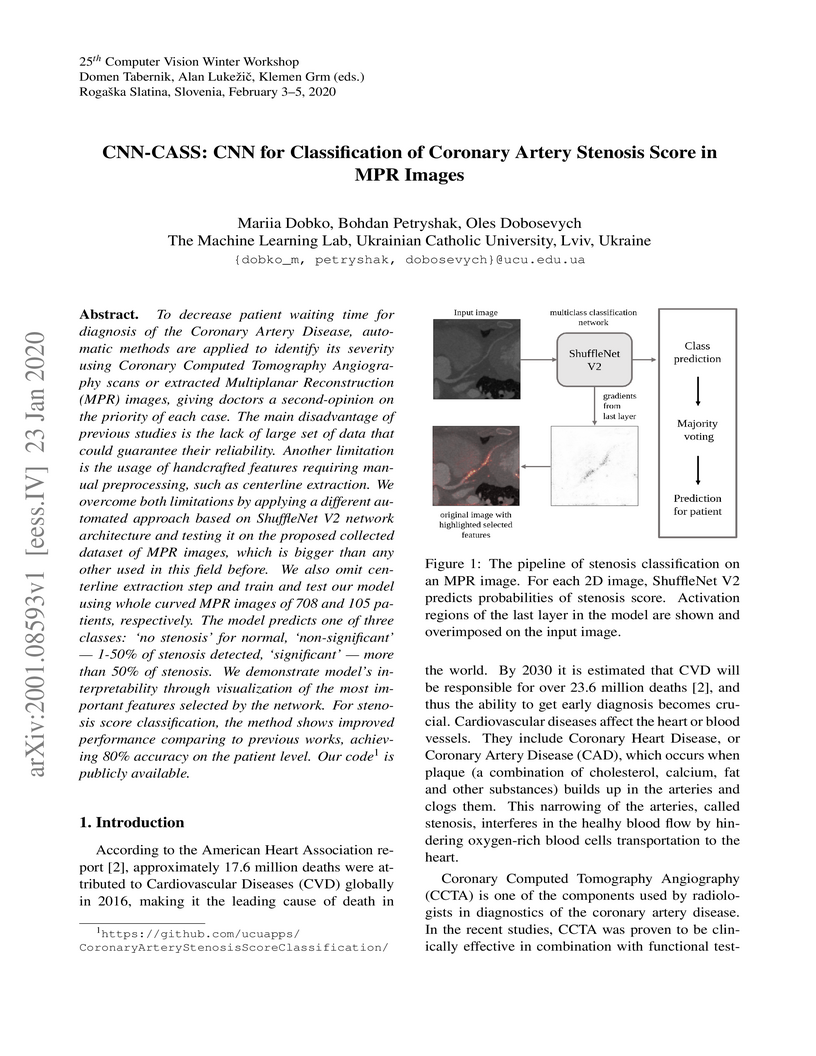

To decrease patient waiting time for diagnosis of the Coronary Artery

Disease, automatic methods are applied to identify its severity using Coronary

Computed Tomography Angiography scans or extracted Multiplanar Reconstruction

(MPR) images, giving doctors a second-opinion on the priority of each case. The

main disadvantage of previous studies is the lack of large set of data that

could guarantee their reliability. Another limitation is the usage of

handcrafted features requiring manual preprocessing, such as centerline

extraction. We overcome both limitations by applying a different automated

approach based on ShuffleNet V2 network architecture and testing it on the

proposed collected dataset of MPR images, which is bigger than any other used

in this field before. We also omit centerline extraction step and train and

test our model using whole curved MPR images of 708 and 105 patients,

respectively. The model predicts one of three classes: 'no stenosis' for

normal, 'non-significant' - 1-50% of stenosis detected, 'significant' - more

than 50% of stenosis. We demonstrate model's interpretability through

visualization of the most important features selected by the network. For

stenosis score classification, the method shows improved performance comparing

to previous works, achieving 80% accuracy on the patient level. Our code is

publicly available.

This paper introduces ActGAN - a novel end-to-end generative adversarial

network (GAN) for one-shot face reenactment. Given two images, the goal is to

transfer the facial expression of the source actor onto a target person in a

photo-realistic fashion. While existing methods require target identity to be

predefined, we address this problem by introducing a "many-to-many" approach,

which allows arbitrary persons both for source and target without additional

retraining. To this end, we employ the Feature Pyramid Network (FPN) as a core

generator building block - the first application of FPN in face reenactment,

producing finer results. We also introduce a solution to preserve a person's

identity between synthesized and target person by adopting the state-of-the-art

approach in deep face recognition domain. The architecture readily supports

reenactment in different scenarios: "many-to-many", "one-to-one",

"one-to-another" in terms of expression accuracy, identity preservation, and

overall image quality. We demonstrate that ActGAN achieves competitive

performance against recent works concerning visual quality.

There are no more papers matching your filters at the moment.