University of Bern

We present Apertus, a fully open suite of large language models (LLMs) designed to address two systemic shortcomings in today's open model ecosystem: data compliance and multilingual representation. Unlike many prior models that release weights without reproducible data pipelines or regard for content-owner rights, Apertus models are pretrained exclusively on openly available data, retroactively respecting `this http URL` exclusions and filtering for non-permissive, toxic, and personally identifiable content. To mitigate risks of memorization, we adopt the Goldfish objective during pretraining, strongly suppressing verbatim recall of data while retaining downstream task performance. The Apertus models also expand multilingual coverage, training on 15T tokens from over 1800 languages, with ~40% of pretraining data allocated to non-English content. Released at 8B and 70B scales, Apertus approaches state-of-the-art results among fully open models on multilingual benchmarks, rivalling or surpassing open-weight counterparts. Beyond model weights, we release all scientific artifacts from our development cycle with a permissive license, including data preparation scripts, checkpoints, evaluation suites, and training code, enabling transparent audit and extension.

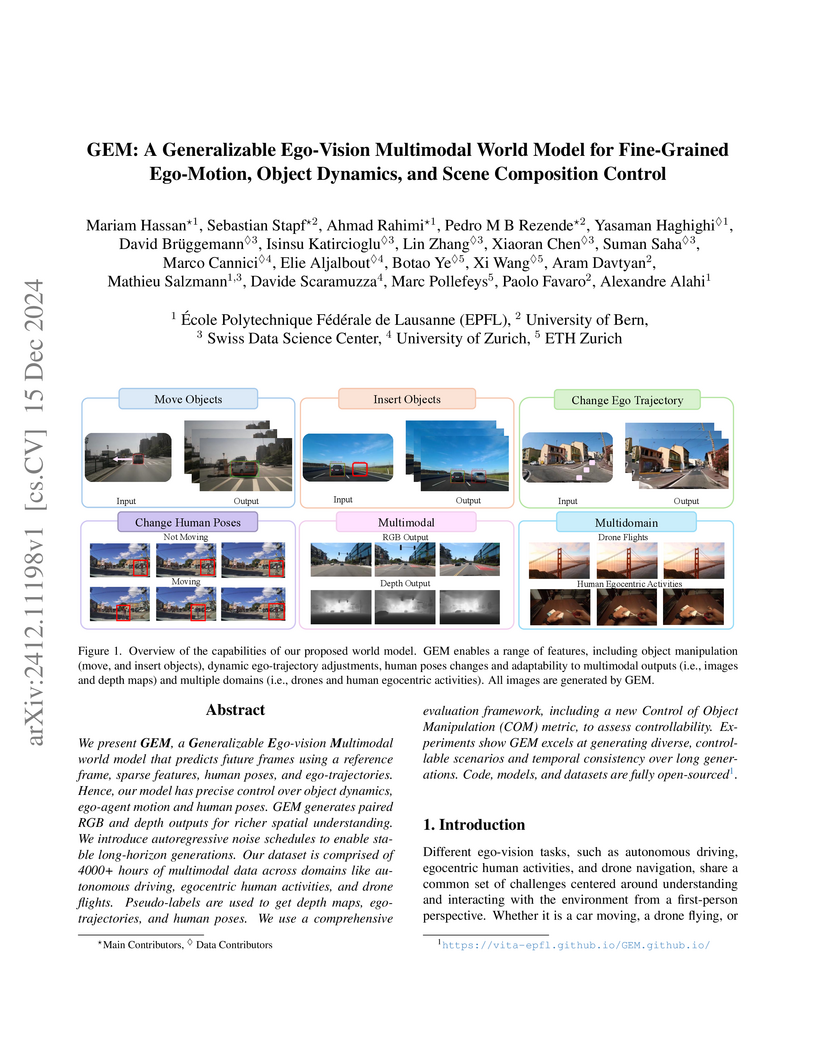

This paper introduces GEM, a generalizable world model for controlling ego-vision video generation with multiple modalities and domains.

Large language models (LLMs) have demonstrated that large-scale pretraining enables systems to adapt rapidly to new problems with little supervision in the language domain. This success, however, has not translated as effectively to the visual domain, where models, including LLMs, continue to struggle with compositional understanding, sample efficiency, and general-purpose problem-solving. We investigate Video Diffusion Models (VDMs) as a promising direction for bridging this gap. Pretraining on spatiotemporal data endows these models with strong inductive biases for structure and dynamics, which we hypothesize can support broad task adaptability. To test this, we design a controlled evaluation in which both a pretrained LLM and a pretrained VDM are equipped with lightweight adapters and presented with tasks in their natural modalities. Across benchmarks including ARC-AGI, ConceptARC, visual games, route planning, and cellular automata, VDMs demonstrate higher data efficiency than their language counterparts. Taken together, our results indicate that video pretraining offers inductive biases that support progress toward visual foundation models.

University of ZurichUniversity of Bern University of Pennsylvania

University of Pennsylvania Technical University of MunichHomi Bhabha National InstituteWashington University in St. LouisUniversity of California San FranciscoBern University HospitalThe Ohio State University Wexner Medical CenterUniversidade Federal de São PauloUniversity of Pittsburgh Medical CenterThe University of Alabama at BirminghamUniversity Children’s Hospital ZurichUniversity of Texas MD Anderson Cancer CenterNational Cancer InstituteTata Memorial hospitalSage BionetworksLeidos Biomedical Research, Inc.University Medical Center RostockThomas Jefferson University HospitalUnity Health TorontoMGH/Harvard Medical SchoolDiagnósticos da América SA

Technical University of MunichHomi Bhabha National InstituteWashington University in St. LouisUniversity of California San FranciscoBern University HospitalThe Ohio State University Wexner Medical CenterUniversidade Federal de São PauloUniversity of Pittsburgh Medical CenterThe University of Alabama at BirminghamUniversity Children’s Hospital ZurichUniversity of Texas MD Anderson Cancer CenterNational Cancer InstituteTata Memorial hospitalSage BionetworksLeidos Biomedical Research, Inc.University Medical Center RostockThomas Jefferson University HospitalUnity Health TorontoMGH/Harvard Medical SchoolDiagnósticos da América SA

University of Pennsylvania

University of Pennsylvania Technical University of MunichHomi Bhabha National InstituteWashington University in St. LouisUniversity of California San FranciscoBern University HospitalThe Ohio State University Wexner Medical CenterUniversidade Federal de São PauloUniversity of Pittsburgh Medical CenterThe University of Alabama at BirminghamUniversity Children’s Hospital ZurichUniversity of Texas MD Anderson Cancer CenterNational Cancer InstituteTata Memorial hospitalSage BionetworksLeidos Biomedical Research, Inc.University Medical Center RostockThomas Jefferson University HospitalUnity Health TorontoMGH/Harvard Medical SchoolDiagnósticos da América SA

Technical University of MunichHomi Bhabha National InstituteWashington University in St. LouisUniversity of California San FranciscoBern University HospitalThe Ohio State University Wexner Medical CenterUniversidade Federal de São PauloUniversity of Pittsburgh Medical CenterThe University of Alabama at BirminghamUniversity Children’s Hospital ZurichUniversity of Texas MD Anderson Cancer CenterNational Cancer InstituteTata Memorial hospitalSage BionetworksLeidos Biomedical Research, Inc.University Medical Center RostockThomas Jefferson University HospitalUnity Health TorontoMGH/Harvard Medical SchoolDiagnósticos da América SAThe BraTS 2021 challenge celebrates its 10th anniversary and is jointly organized by the Radiological Society of North America (RSNA), the American Society of Neuroradiology (ASNR), and the Medical Image Computing and Computer Assisted Interventions (MICCAI) society. Since its inception, BraTS has been focusing on being a common benchmarking venue for brain glioma segmentation algorithms, with well-curated multi-institutional multi-parametric magnetic resonance imaging (mpMRI) data. Gliomas are the most common primary malignancies of the central nervous system, with varying degrees of aggressiveness and prognosis. The RSNA-ASNR-MICCAI BraTS 2021 challenge targets the evaluation of computational algorithms assessing the same tumor compartmentalization, as well as the underlying tumor's molecular characterization, in pre-operative baseline mpMRI data from 2,040 patients. Specifically, the two tasks that BraTS 2021 focuses on are: a) the segmentation of the histologically distinct brain tumor sub-regions, and b) the classification of the tumor's O[6]-methylguanine-DNA methyltransferase (MGMT) promoter methylation status. The performance evaluation of all participating algorithms in BraTS 2021 will be conducted through the Sage Bionetworks Synapse platform (Task 1) and Kaggle (Task 2), concluding in distributing to the top ranked participants monetary awards of $60,000 collectively.

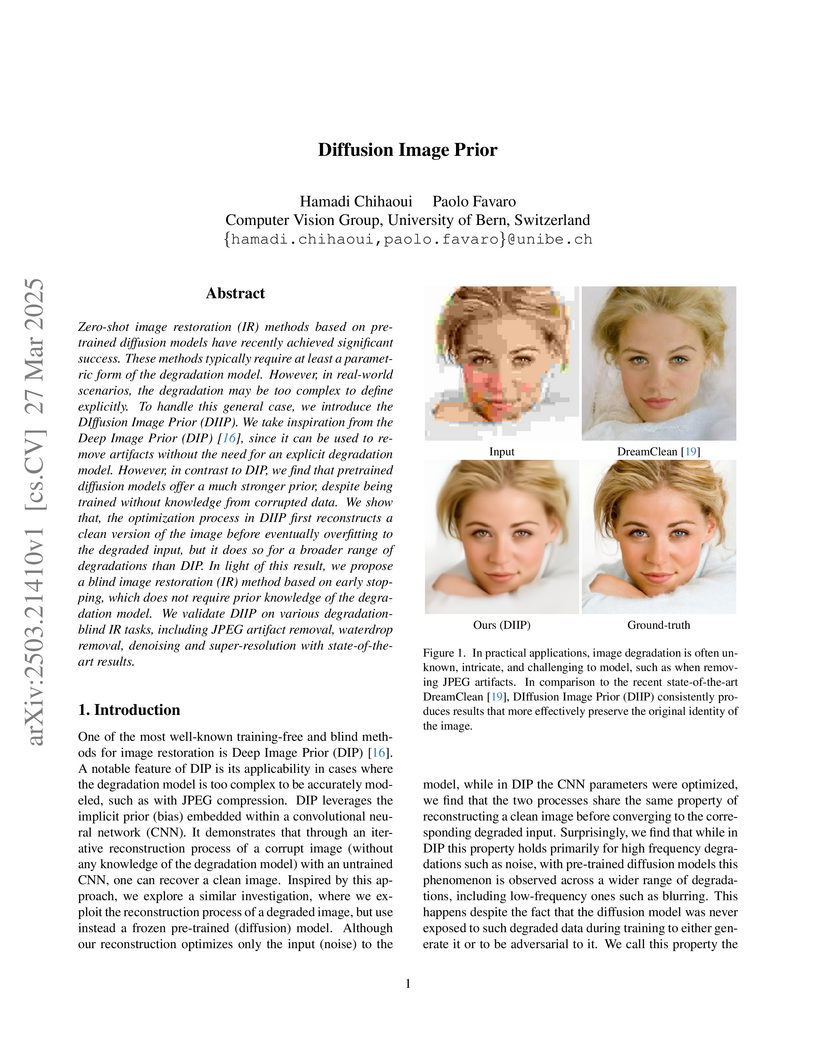

DIffusion Image Prior (DIIP) offers a training-free and fully blind image restoration method by optimizing the input noise to a frozen pre-trained diffusion model. This approach robustly handles diverse degradations, outperforming prior blind techniques with improvements such as a 1.32 PSNR gain over DreamClean for denoising and a 1.37 PSNR gain for JPEG de-artifacting on CelebA.

This research analyzes encoder-only transformer dynamics at inference time using a mean-field model, identifying three distinct dynamical phases: alignment, heat, and pairing. The work mathematically characterizes how token representations evolve through these phases on separated timescales, offering a unified explanation for progressive representation refinement that is directly applicable to modern LLM scaling strategies.

University of Washington

University of Washington Imperial College LondonUniversity of BernTUD Dresden University of TechnologyMayo ClinicUniversity Hospital RWTH AachenMedical University of ViennaUniversity of MilanUniversity Medical Center MainzGerman Cancer Research CenterFred Hutchinson Cancer CenterUniversity of LinzUniversity Hospital HeidelbergKepler University HospitalInternational Agency for Research on CancerUniversity Hospital Schleswig-HolsteinWorld Health OrganizationEuropean Institute of Oncology (IRCCS)

Imperial College LondonUniversity of BernTUD Dresden University of TechnologyMayo ClinicUniversity Hospital RWTH AachenMedical University of ViennaUniversity of MilanUniversity Medical Center MainzGerman Cancer Research CenterFred Hutchinson Cancer CenterUniversity of LinzUniversity Hospital HeidelbergKepler University HospitalInternational Agency for Research on CancerUniversity Hospital Schleswig-HolsteinWorld Health OrganizationEuropean Institute of Oncology (IRCCS)A deep learning framework, EAGLE, was developed to efficiently analyze whole slide pathology images, achieving an average AUROC of 0.742 across 31 tasks while processing images over 99% faster than previous methods by selectively focusing on critical regions.

In domains such as molecular and protein generation, physical systems exhibit inherent symmetries that are critical to model. Two main strategies have emerged for learning invariant distributions: designing equivariant network architectures and using data augmentation to approximate equivariance. While equivariant architectures preserve symmetry by design, they often involve greater complexity and pose optimization challenges. Data augmentation, on the other hand, offers flexibility but may fall short in fully capturing symmetries. Our framework enhances both approaches by reducing training variance and providing a provably lower-variance gradient estimator. We achieve this by interpreting data augmentation as a Monte Carlo estimator of the training gradient and applying Rao-Blackwellization. This leads to more stable optimization, faster convergence, and reduced variance, all while requiring only a single forward and backward pass per sample. We also present a practical implementation of this estimator incorporating the loss and sampling procedure through a method we call Orbit Diffusion. Theoretically, we guarantee that our loss admits equivariant minimizers. Empirically, Orbit Diffusion achieves state-of-the-art results on GEOM-QM9 for molecular conformation generation, improves crystal structure prediction, and advances text-guided crystal generation on the Perov-5 and MP-20 benchmarks. Additionally, it enhances protein designability in protein structure generation. Code is available at: this https URL.

07 Aug 2025

We report the creation and characterization of a molecular-scale negative differential conductance (NDC) device by assembling a triangular trimer of 4,5,9,10-tetrabromo-1,3,6,8-tetraazapyrene (TBTAP) molecules on a superconducting Pb(111) substrate. Using low-temperature scanning tunneling spectroscopy, we observe robust NDC behavior manifesting as a decrease in current with increasing voltage between 0.7-0.9 V arising from the interplay of Coulomb blockade and strong inter-molecular capacitive coupling within the molecular cluster. Gate-controlled charging and discharging processes are directly visualized via two-dimensional differential conductance mapping, which reveals the emergence of Coulomb rings and spatial regions of NDC. Theoretical modeling using a three-impurity Anderson model and master equation approach quantitatively reproduces the experimental observations and demonstrates that the NDC emerges purely from electron correlations, independent of the underlying superconductivity. By tuning the geometry to a hexamer structure, we further show that cluster topology provides versatile control over electronic properties at the molecular scale. These results establish a functional platform for implementing multifunctional molecular devices and highlight a strategy toward programmable and scalable nanoelectronics.

Video Diffusion Models (VDMs) demonstrate emergent few-shot learning capabilities, capable of adapting to diverse visual tasks from geometric transformations to abstract reasoning using minimal examples. Researchers from the University of Bern and EPFL show that by reformulating tasks as visual transitions and employing parameter-efficient fine-tuning, VDMs leverage their implicit understanding of the visual world to achieve strong performance.

In this paper we study the problem of image representation learning without human annotation. By following the principles of self-supervision, we build a convolutional neural network (CNN) that can be trained to solve Jigsaw puzzles as a pretext task, which requires no manual labeling, and then later repurposed to solve object classification and detection. To maintain the compatibility across tasks we introduce the context-free network (CFN), a siamese-ennead CNN. The CFN takes image tiles as input and explicitly limits the receptive field (or context) of its early processing units to one tile at a time. We show that the CFN includes fewer parameters than AlexNet while preserving the same semantic learning capabilities. By training the CFN to solve Jigsaw puzzles, we learn both a feature mapping of object parts as well as their correct spatial arrangement. Our experimental evaluations show that the learned features capture semantically relevant content. Our proposed method for learning visual representations outperforms state of the art methods in several transfer learning benchmarks.

ETH Zurich

ETH Zurich University of Washington

University of Washington CNRS

CNRS University of Pittsburgh

University of Pittsburgh University of CambridgeUniversity of FreiburgHeidelberg UniversityLeibniz University Hannover

University of CambridgeUniversity of FreiburgHeidelberg UniversityLeibniz University Hannover Northeastern University

Northeastern University UCLA

UCLA Imperial College London

Imperial College London University of ManchesterUniversity of Zurich

University of ManchesterUniversity of Zurich New York UniversityUniversity of BernUniversity of Stuttgart

New York UniversityUniversity of BernUniversity of Stuttgart UC Berkeley

UC Berkeley University College London

University College London Fudan University

Fudan University Georgia Institute of TechnologyNational Taiwan University

Georgia Institute of TechnologyNational Taiwan University the University of Tokyo

the University of Tokyo University of California, IrvineUniversity of BonnTechnical University of Berlin

University of California, IrvineUniversity of BonnTechnical University of Berlin University of Bristol

University of Bristol University of MichiganUniversity of EdinburghUniversity of Hong KongUniversity of Alabama at Birmingham

University of MichiganUniversity of EdinburghUniversity of Hong KongUniversity of Alabama at Birmingham Northwestern UniversityUniversity of Bamberg

Northwestern UniversityUniversity of Bamberg University of Florida

University of Florida Emory UniversityUniversity of CologneHarvard Medical School

Emory UniversityUniversity of CologneHarvard Medical School University of Pennsylvania

University of Pennsylvania University of SouthamptonFlorida State University

University of SouthamptonFlorida State University EPFL

EPFL University of Wisconsin-MadisonMassachusetts General HospitalChongqing UniversityKeio University

University of Wisconsin-MadisonMassachusetts General HospitalChongqing UniversityKeio University University of Alberta

University of Alberta King’s College LondonFriedrich-Alexander-Universität Erlangen-NürnbergUniversity of Luxembourg

King’s College LondonFriedrich-Alexander-Universität Erlangen-NürnbergUniversity of Luxembourg Technical University of MunichUniversity of Duisburg-EssenSapienza University of RomeUniversity of HeidelbergUniversity of Sheffield

Technical University of MunichUniversity of Duisburg-EssenSapienza University of RomeUniversity of HeidelbergUniversity of Sheffield HKUSTUniversity of GenevaWashington University in St. LouisTU BerlinUniversity of GlasgowUniversity of SiegenUniversity of PotsdamUniversidade Estadual de CampinasUniversity of Oldenburg

HKUSTUniversity of GenevaWashington University in St. LouisTU BerlinUniversity of GlasgowUniversity of SiegenUniversity of PotsdamUniversidade Estadual de CampinasUniversity of Oldenburg The Ohio State UniversityUniversity of LeicesterGerman Cancer Research Center (DKFZ)University of BremenUniversity of ToulouseUniversity of Miami

The Ohio State UniversityUniversity of LeicesterGerman Cancer Research Center (DKFZ)University of BremenUniversity of ToulouseUniversity of Miami Karlsruhe Institute of TechnologyPeking Union Medical CollegeUniversity of OuluUniversity of HamburgUniversity of RegensburgUniversity of BirminghamUniversity of LeedsChinese Academy of Medical SciencesINSERM

Karlsruhe Institute of TechnologyPeking Union Medical CollegeUniversity of OuluUniversity of HamburgUniversity of RegensburgUniversity of BirminghamUniversity of LeedsChinese Academy of Medical SciencesINSERM University of BaselPeking Union Medical College HospitalUniversity of LausanneUniversity of LilleUniversity of PoitiersUniversity of PassauUniversity of LübeckKing Fahd University of Petroleum and MineralsUniversity of LondonUniversity of NottinghamUniversity of Erlangen-NurembergUniversity of BielefeldSorbonne UniversityUniversity of South FloridaWake Forest UniversityUniversity of CalgaryUniversity of Picardie Jules VerneIBM

University of BaselPeking Union Medical College HospitalUniversity of LausanneUniversity of LilleUniversity of PoitiersUniversity of PassauUniversity of LübeckKing Fahd University of Petroleum and MineralsUniversity of LondonUniversity of NottinghamUniversity of Erlangen-NurembergUniversity of BielefeldSorbonne UniversityUniversity of South FloridaWake Forest UniversityUniversity of CalgaryUniversity of Picardie Jules VerneIBM University of GöttingenUniversity of BordeauxUniversity of MannheimUniversity of California San FranciscoNIHUniversity of KonstanzUniversity of Electro-CommunicationsUniversity of WuppertalUniversity of ReunionUNICAMPUniversity of TrierHasso Plattner InstituteUniversity of BayreuthHeidelberg University HospitalUniversity of StrasbourgDKFZUniversity of LorraineInselspital, Bern University Hospital, University of BernUniversity of WürzburgUniversity of La RochelleUniversity of LyonUniversity of HohenheimUniversity Medical Center Hamburg-EppendorfUniversity of UlmUniversity Hospital ZurichUniversity of TuebingenUniversity of KaiserslauternUniversity of NantesUniversity of MainzUniversity of PaderbornUniversity of KielMedical University of South CarolinaUniversity of RostockThe University of Texas MD Anderson Cancer CenterNational Research Council (CNR)Hannover Medical SchoolItalian National Research CouncilUniversity of MuensterUniversity of MontpellierUniversity of LeipzigUniversity of GreifswaldUniversity Hospital BernSiemens HealthineersThe University of Alabama at BirminghamNational Institutes of HealthUniversity of MarburgUniversity of Paris-SaclayUniversity of LimogesUniversity of Clermont AuvergneUniversity of DortmundUniversity of GiessenKITUniversity of ToulonChildren’s Hospital of PhiladelphiaUniversity of JenaNational Taiwan University HospitalUniversity of SaarlandUniversity of ErlangenNational Cancer InstituteUniversity Hospital HeidelbergSwiss Federal Institute of Technology LausanneUniversity of Texas Health Science Center at HoustonNational Institute of Biomedical Imaging and BioengineeringUniversity of New CaledoniaUniversity of Koblenz-LandauParis Diderot UniversityUniversity of ParisInselspital, Bern University HospitalUniversity of Grenoble AlpesUniversity Hospital BaselMD Anderson Cancer CenterUniversity of AngersUniversity of French PolynesiaUniversity of MagdeburgUniversity of Geneva, SwitzerlandOulu University HospitalUniversity of ToursFriedrich-Alexander-University Erlangen-NurnbergUniversity of Rennes 1Wake Forest School of MedicineNIH Clinical CenterParis Descartes UniversityUniversity of Rouen NormandieUniversity of Aix-MarseilleUniversity of Perpignan Via DomitiaUniversity of Caen NormandieUniversity of FrankfurtUniversity of BochumUniversity of Bourgogne-Franche-ComtéUniversity of Corsica Pasquale PaoliNational Institute of Neurological Disorders and StrokeUniversity of HannoverRoche DiagnosticsUniversity of South BrittanyUniversity of DüsseldorfUniversity of Reims Champagne-ArdenneUniversity of HalleIRCCS Fondazione Santa LuciaUniversity of Applied Sciences TrierUniversity of Southampton, UKUniversity of Nice–Sophia AntipolisUniversit

de LorraineUniversité Paris-Saclay["École Polytechnique Fédérale de Lausanne"]RWTH Aachen UniversityUniversity of Bern, Institute for Advanced Study in Biomedical InnovationCRIBIS University of AlbertaThe Cancer Imaging Archive (TCIA)Fraunhofer Institute for Medical Image Computing MEVISMedical School of HannoverIstituto di Ricovero e Cura a Carattere Scientifico NeuromedFondazione Santa Lucia IRCCSCEA, LIST, Laboratory of Image and Biomedical SystemsUniversity of Alberta, CanadaHeidelberg University Hospital, Department of NeuroradiologyUniversity of Bern, SwitzerlandUniversity of DresdenUniversity of SpeyerUniversity of Trier, GermanyUniversity of Lorraine, FranceUniversity of Le Havre NormandieUniversity of Bretagne OccidentaleUniversity of French GuianaUniversity of the AntillesUniversity of Bern, Institute of Surgical Technology and BiomechanicsUniversity of Bern, ARTORG Center for Biomedical Engineering ResearchUniversity of Geneva, Department of RadiologyUniversity of Zürich, Department of NeuroradiologyRuhr-University-Bochum

University of GöttingenUniversity of BordeauxUniversity of MannheimUniversity of California San FranciscoNIHUniversity of KonstanzUniversity of Electro-CommunicationsUniversity of WuppertalUniversity of ReunionUNICAMPUniversity of TrierHasso Plattner InstituteUniversity of BayreuthHeidelberg University HospitalUniversity of StrasbourgDKFZUniversity of LorraineInselspital, Bern University Hospital, University of BernUniversity of WürzburgUniversity of La RochelleUniversity of LyonUniversity of HohenheimUniversity Medical Center Hamburg-EppendorfUniversity of UlmUniversity Hospital ZurichUniversity of TuebingenUniversity of KaiserslauternUniversity of NantesUniversity of MainzUniversity of PaderbornUniversity of KielMedical University of South CarolinaUniversity of RostockThe University of Texas MD Anderson Cancer CenterNational Research Council (CNR)Hannover Medical SchoolItalian National Research CouncilUniversity of MuensterUniversity of MontpellierUniversity of LeipzigUniversity of GreifswaldUniversity Hospital BernSiemens HealthineersThe University of Alabama at BirminghamNational Institutes of HealthUniversity of MarburgUniversity of Paris-SaclayUniversity of LimogesUniversity of Clermont AuvergneUniversity of DortmundUniversity of GiessenKITUniversity of ToulonChildren’s Hospital of PhiladelphiaUniversity of JenaNational Taiwan University HospitalUniversity of SaarlandUniversity of ErlangenNational Cancer InstituteUniversity Hospital HeidelbergSwiss Federal Institute of Technology LausanneUniversity of Texas Health Science Center at HoustonNational Institute of Biomedical Imaging and BioengineeringUniversity of New CaledoniaUniversity of Koblenz-LandauParis Diderot UniversityUniversity of ParisInselspital, Bern University HospitalUniversity of Grenoble AlpesUniversity Hospital BaselMD Anderson Cancer CenterUniversity of AngersUniversity of French PolynesiaUniversity of MagdeburgUniversity of Geneva, SwitzerlandOulu University HospitalUniversity of ToursFriedrich-Alexander-University Erlangen-NurnbergUniversity of Rennes 1Wake Forest School of MedicineNIH Clinical CenterParis Descartes UniversityUniversity of Rouen NormandieUniversity of Aix-MarseilleUniversity of Perpignan Via DomitiaUniversity of Caen NormandieUniversity of FrankfurtUniversity of BochumUniversity of Bourgogne-Franche-ComtéUniversity of Corsica Pasquale PaoliNational Institute of Neurological Disorders and StrokeUniversity of HannoverRoche DiagnosticsUniversity of South BrittanyUniversity of DüsseldorfUniversity of Reims Champagne-ArdenneUniversity of HalleIRCCS Fondazione Santa LuciaUniversity of Applied Sciences TrierUniversity of Southampton, UKUniversity of Nice–Sophia AntipolisUniversit

de LorraineUniversité Paris-Saclay["École Polytechnique Fédérale de Lausanne"]RWTH Aachen UniversityUniversity of Bern, Institute for Advanced Study in Biomedical InnovationCRIBIS University of AlbertaThe Cancer Imaging Archive (TCIA)Fraunhofer Institute for Medical Image Computing MEVISMedical School of HannoverIstituto di Ricovero e Cura a Carattere Scientifico NeuromedFondazione Santa Lucia IRCCSCEA, LIST, Laboratory of Image and Biomedical SystemsUniversity of Alberta, CanadaHeidelberg University Hospital, Department of NeuroradiologyUniversity of Bern, SwitzerlandUniversity of DresdenUniversity of SpeyerUniversity of Trier, GermanyUniversity of Lorraine, FranceUniversity of Le Havre NormandieUniversity of Bretagne OccidentaleUniversity of French GuianaUniversity of the AntillesUniversity of Bern, Institute of Surgical Technology and BiomechanicsUniversity of Bern, ARTORG Center for Biomedical Engineering ResearchUniversity of Geneva, Department of RadiologyUniversity of Zürich, Department of NeuroradiologyRuhr-University-BochumGliomas are the most common primary brain malignancies, with different

degrees of aggressiveness, variable prognosis and various heterogeneous

histologic sub-regions, i.e., peritumoral edematous/invaded tissue, necrotic

core, active and non-enhancing core. This intrinsic heterogeneity is also

portrayed in their radio-phenotype, as their sub-regions are depicted by

varying intensity profiles disseminated across multi-parametric magnetic

resonance imaging (mpMRI) scans, reflecting varying biological properties.

Their heterogeneous shape, extent, and location are some of the factors that

make these tumors difficult to resect, and in some cases inoperable. The amount

of resected tumor is a factor also considered in longitudinal scans, when

evaluating the apparent tumor for potential diagnosis of progression.

Furthermore, there is mounting evidence that accurate segmentation of the

various tumor sub-regions can offer the basis for quantitative image analysis

towards prediction of patient overall survival. This study assesses the

state-of-the-art machine learning (ML) methods used for brain tumor image

analysis in mpMRI scans, during the last seven instances of the International

Brain Tumor Segmentation (BraTS) challenge, i.e., 2012-2018. Specifically, we

focus on i) evaluating segmentations of the various glioma sub-regions in

pre-operative mpMRI scans, ii) assessing potential tumor progression by virtue

of longitudinal growth of tumor sub-regions, beyond use of the RECIST/RANO

criteria, and iii) predicting the overall survival from pre-operative mpMRI

scans of patients that underwent gross total resection. Finally, we investigate

the challenge of identifying the best ML algorithms for each of these tasks,

considering that apart from being diverse on each instance of the challenge,

the multi-institutional mpMRI BraTS dataset has also been a continuously

evolving/growing dataset.

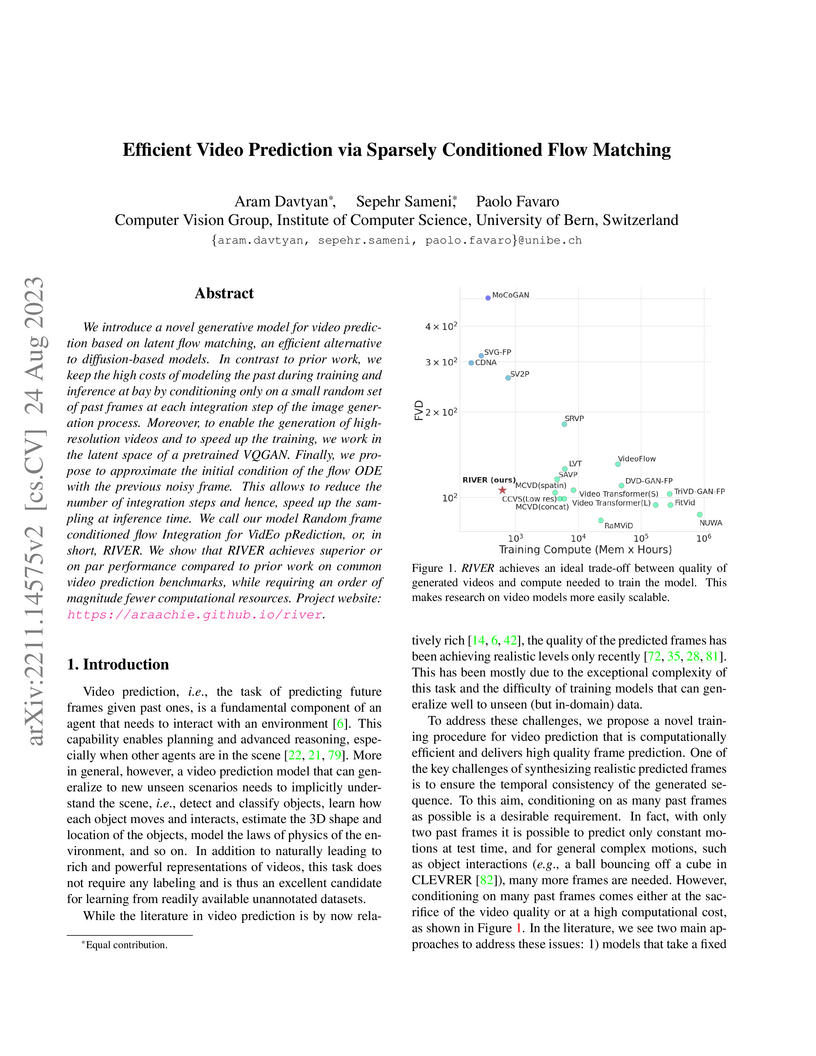

We introduce a novel generative model for video prediction based on latent

flow matching, an efficient alternative to diffusion-based models. In contrast

to prior work, we keep the high costs of modeling the past during training and

inference at bay by conditioning only on a small random set of past frames at

each integration step of the image generation process. Moreover, to enable the

generation of high-resolution videos and to speed up the training, we work in

the latent space of a pretrained VQGAN. Finally, we propose to approximate the

initial condition of the flow ODE with the previous noisy frame. This allows to

reduce the number of integration steps and hence, speed up the sampling at

inference time. We call our model Random frame conditioned flow Integration for

VidEo pRediction, or, in short, RIVER. We show that RIVER achieves superior or

on par performance compared to prior work on common video prediction

benchmarks, while requiring an order of magnitude fewer computational

resources.

CNRS

CNRS University of California, Santa Barbara

University of California, Santa Barbara Sun Yat-Sen UniversityUniversity of Bern

Sun Yat-Sen UniversityUniversity of Bern Shanghai Jiao Tong UniversityUniversity of Edinburgh

Shanghai Jiao Tong UniversityUniversity of Edinburgh Northwestern University

Northwestern University Space Telescope Science Institute

Space Telescope Science Institute University of Arizona

University of Arizona University of Central Florida

University of Central Florida University of VirginiaAmerican Museum of Natural HistoryEuropean Space AgencyTsung-Dao Lee InstituteNational Institutes of Natural SciencesUniv Grenoble AlpesIPAGTrinity College Dublin, The University of DublinUniversit•at Duisburg{EssenMax Planck Institut für Astronomie

University of VirginiaAmerican Museum of Natural HistoryEuropean Space AgencyTsung-Dao Lee InstituteNational Institutes of Natural SciencesUniv Grenoble AlpesIPAGTrinity College Dublin, The University of DublinUniversit•at Duisburg{EssenMax Planck Institut für AstronomieWe present JWST NIRSpec/PRISM IFU time-resolved observations of 2M1207 A and b (TWA 27), a ∼10 Myr binary system consisting of a ∼2500 K sub-stellar primary hosting a ∼1300 K companion. Our data provide 20 time-resolved spectra over an observation spanning 12.56 hours. We provide an empirical characterization for the spectra of both objects across time. For 2M1207 A, non-linear trend models are statistically favored within the ranges 0.6-2.3 μm and 3.8-5.3 μm. However, most of the periods constrained from sinusoidal models exceed the observing window, setting a lower limit of 12.56 hours. We find the data at Hα and beyond 4.35 μm show a moderate time correlation, as well as a pair of light curves at 0.73-0.80 μm and 3.36-3.38 μm. For 2M1207 b, light curves integrated across 0.86-1.77 μm and 3.29-4.34 μm support linear trend models. Following the interpretation of Zhang et. al. (2025), we model the 2M1207 b data with two 1D atmospheric components, both with silicate and iron condensates. The model of time variability as changes to the cloud filling factor shows broad consistency with the variability amplitudes derived from our data. Our amplitudes, however, disagree with the models at ≈0.86-1 μm. While an additional model component such as rainout chemistry may be considered here, our analysis is limited by a low signal-to-noise ratio. Our results demonstrate the capability of JWST to simultaneously monitor the spectral variability of a planetary-mass companion and host at low contrast.

Large, high-quality datasets are crucial for training Large Language Models (LLMs). However, so far, there are few datasets available for specialized critical domains such as law and the available ones are often only for the English language. We curate and release MultiLegalPile, a 689GB corpus in 24 languages from 17 jurisdictions. The MultiLegalPile corpus, which includes diverse legal data sources with varying licenses, allows for pretraining NLP models under fair use, with more permissive licenses for the Eurlex Resources and Legal mC4 subsets. We pretrain two RoBERTa models and one Longformer multilingually, and 24 monolingual models on each of the language-specific subsets and evaluate them on LEXTREME. Additionally, we evaluate the English and multilingual models on LexGLUE. Our multilingual models set a new SotA on LEXTREME and our English models on LexGLUE. We release the dataset, the trained models, and all of the code under the most open possible licenses.

12 Apr 2025

Tohoku UniversityNational Astronomical Observatory of JapanUniversity of Bern

Tohoku UniversityNational Astronomical Observatory of JapanUniversity of Bern Osaka University

Osaka University the University of Tokyo

the University of Tokyo Kyoto University

Kyoto University RIKEN

RIKEN Space Telescope Science Institute

Space Telescope Science Institute Université Paris-SaclayThe Graduate University for Advanced Studies (SOKENDAI)The University of Western OntarioAstrobiology CenterJapan Aerospace Exploration AgencyISAS/JAXA

Université Paris-SaclayThe Graduate University for Advanced Studies (SOKENDAI)The University of Western OntarioAstrobiology CenterJapan Aerospace Exploration AgencyISAS/JAXAExoJAX2 is presented as an updated differentiable spectral modeling framework for exoplanet and substellar atmospheres, enabling efficient and memory-optimized high-resolution emission, transmission, and reflection spectroscopy. It successfully performs Bayesian retrievals on JWST and ground-based data, yielding detailed atmospheric compositions and properties without requiring data binning.

CNRSNational Astronomical Observatory of Japan

CNRSNational Astronomical Observatory of Japan Chinese Academy of Sciences

Chinese Academy of Sciences Université de MontréalUniversity of Bern

Université de MontréalUniversity of Bern University of Oxford

University of Oxford University of Bristol

University of Bristol University of Copenhagen

University of Copenhagen NASA Goddard Space Flight CenterAustrian Academy of SciencesWeizmann Institute of ScienceTechnical University BerlinUniversité de GenèveUniversity of Geneva

NASA Goddard Space Flight CenterAustrian Academy of SciencesWeizmann Institute of ScienceTechnical University BerlinUniversité de GenèveUniversity of Geneva University of WarwickUniversidade do PortoObservatoire de ParisUniversité de LiègeUniversité Côte d’AzurUniversity of BirminghamUniversity of GrazInstituto de Astrofísica e Ciências do EspaçoUniversidad Nacional Autónoma de MéxicoInstituto de Astrofísica de CanariasUniversidad de ChileEuropean Space Agency

University of WarwickUniversidade do PortoObservatoire de ParisUniversité de LiègeUniversité Côte d’AzurUniversity of BirminghamUniversity of GrazInstituto de Astrofísica e Ciências do EspaçoUniversidad Nacional Autónoma de MéxicoInstituto de Astrofísica de CanariasUniversidad de ChileEuropean Space Agency European Southern ObservatoryUniversidade de AveiroGerman Aerospace Center (DLR)Shanghai Astronomical ObservatoryUniversidad de La LagunaCavendish LaboratoryUniv Grenoble AlpesSpace Research InstituteUniversidade de CoimbraUniversidad Católica del NorteUniversity of SilesiaMillennium Institute for AstrophysicsCentro de Astrobiología (CSIC-INTA)Observatoire de Haute-ProvenceUnidad Mixta Internacional Franco-Chilena de AstronomíaUniversit PSL* North–West University

European Southern ObservatoryUniversidade de AveiroGerman Aerospace Center (DLR)Shanghai Astronomical ObservatoryUniversidad de La LagunaCavendish LaboratoryUniv Grenoble AlpesSpace Research InstituteUniversidade de CoimbraUniversidad Católica del NorteUniversity of SilesiaMillennium Institute for AstrophysicsCentro de Astrobiología (CSIC-INTA)Observatoire de Haute-ProvenceUnidad Mixta Internacional Franco-Chilena de AstronomíaUniversit PSL* North–West UniversityThe distribution of close-in exoplanets is shaped by the interplay between atmospheric and dynamical processes. The Neptunian Desert, Ridge, and Savanna illustrate the sensitivity of these worlds to such processes, making them ideal to disentangle their roles. Determining how many Neptunes were brought close-in by early disk-driven migration (DDM; maintaining primordial spin-orbit alignment) or late high-eccentricity migration (HEM; generating large misalignments) is essential to understand how much atmosphere they lost. We propose a unified view of the Neptunian landscape to guide its exploration, speculating that the Ridge is a hot spot for evolutionary processes. Low-density Neptunes would mainly undergo DDM, getting fully eroded at shorter periods than the Ridge, while denser Neptunes would be brought to the Ridge and Desert by HEM. We embark on this exploration via ATREIDES, which relies on spectroscopy and photometry of 60 close-in Neptunes, their reduction with robust pipelines, and their interpretation through internal structure, atmospheric, and evolutionary models. We carried out a systematic RM census with VLT/ESPRESSO to measure the distribution of 3D spin-orbit angles, correlate its shape with system properties and thus relate the fraction of aligned-misaligned systems to DDM, HEM, and atmospheric erosion. Our first target, TOI-421c, lies in the Savanna with a neighboring sub-Neptune TOI-421b. We measured their 3D spin-orbit angles (Psib = 57+11-15 deg; Psic = 44.9+4.4-4.1 deg). Together with the eccentricity and possibly large mutual inclination of their orbits, this hints at a chaotic dynamical origin that could result from DDM followed by HEM. ATREIDES will provide the community with a wealth of constraints for formation and evolution models. We welcome collaborations that will contribute to pushing our understanding of the Neptunian landscape forward.

The recent success of machine learning methods applied to time series collected from Intensive Care Units (ICU) exposes the lack of standardized machine learning benchmarks for developing and comparing such methods. While raw datasets, such as MIMIC-IV or eICU, can be freely accessed on Physionet, the choice of tasks and pre-processing is often chosen ad-hoc for each publication, limiting comparability across publications. In this work, we aim to improve this situation by providing a benchmark covering a large spectrum of ICU-related tasks. Using the HiRID dataset, we define multiple clinically relevant tasks in collaboration with clinicians. In addition, we provide a reproducible end-to-end pipeline to construct both data and labels. Finally, we provide an in-depth analysis of current state-of-the-art sequence modeling methods, highlighting some limitations of deep learning approaches for this type of data. With this benchmark, we hope to give the research community the possibility of a fair comparison of their work.

19 Jul 2020

We present a method for designing smooth cross fields on surfaces that automatically align to sharp features of an underlying geometry. Our approach introduces a novel class of energies based on a representation of cross fields in the spherical harmonic basis. We provide theoretical analysis of these energies in the smooth setting, showing that they penalize deviations from surface creases while otherwise promoting intrinsically smooth fields. We demonstrate the applicability of our method to quad-meshing and include an extensive benchmark comparing our fields to other automatic approaches for generating feature-aligned cross fields on triangle meshes.

Purpose: Surgical scene understanding plays a critical role in the technology stack of tomorrow's intervention-assisting systems in endoscopic surgeries. For this, tracking the endoscope pose is a key component, but remains challenging due to illumination conditions, deforming tissues and the breathing motion of organs. Method: We propose a solution for stereo endoscopes that estimates depth and optical flow to minimize two geometric losses for camera pose estimation. Most importantly, we introduce two learned adaptive per-pixel weight mappings that balance contributions according to the input image content. To do so, we train a Deep Declarative Network to take advantage of the expressiveness of deep-learning and the robustness of a novel geometric-based optimization approach. We validate our approach on the publicly available SCARED dataset and introduce a new in-vivo dataset, StereoMIS, which includes a wider spectrum of typically observed surgical settings. Results: Our method outperforms state-of-the-art methods on average and more importantly, in difficult scenarios where tissue deformations and breathing motion are visible. We observed that our proposed weight mappings attenuate the contribution of pixels on ambiguous regions of the images, such as deforming tissues. Conclusion: We demonstrate the effectiveness of our solution to robustly estimate the camera pose in challenging endoscopic surgical scenes. Our contributions can be used to improve related tasks like simultaneous localization and mapping (SLAM) or 3D reconstruction, therefore advancing surgical scene understanding in minimally-invasive surgery.

There are no more papers matching your filters at the moment.