Peer review serves as a backbone of academic research, but in most AI conferences, review quality is degrading as the number of submissions explodes. To reliably detect low-quality reviews, we define misinformed review points as either "weaknesses" in a review that contain incorrect premises, or "questions" in a review that can already be answered by the paper. We verify that 15.2% of weaknesses and 26.4% of questions are misinformed and introduce ReviewScore, which indicates whether a review point is misinformed. To evaluate the factuality of each premise of a weakness, we propose an automated engine that reconstructs every explicit and implicit premise from a weakness. We build a human expert-annotated ReviewScore dataset to check the ability of LLMs to automate ReviewScore evaluation. Then, we measure human-model agreement on ReviewScore using eight current state-of-the-art LLMs and verify moderate agreement. We also show that evaluating premise-level factuality yields significantly higher agreement than evaluating weakness-level factuality. A thorough disagreement analysis further supports the potential of fully automated ReviewScore evaluation.
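The abstract above does not say which agreement statistic is used. As a rough illustration only, the sketch below assumes Cohen's kappa, a common chance-corrected agreement measure, and applies it to hypothetical binary "misinformed" labels from a human annotator and a model. The label lists and the function name `cohens_kappa` are illustrative assumptions, not taken from the paper.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    # Fraction of items on which both raters gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance from each rater's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels: 1 = review point judged misinformed, 0 = not misinformed.
human = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]
model = [1, 0, 1, 1, 0, 0, 0, 0, 0, 0]
kappa = cohens_kappa(human, model)
print(f"kappa = {kappa:.3f}")
```

Under the widely used Landis and Koch reading, values between 0.41 and 0.60 are described as moderate agreement. Whether the paper uses this scale is an assumption here.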

04 Nov 2025

NaviTrace introduces a Visual Question Answering benchmark to evaluate Vision-Language Models' embodied navigation capabilities using 1,000 real-world scenarios across four robot embodiments. It requires models to predict 2D navigation traces, revealing that current VLMs struggle significantly with accurate goal localization compared to human performance.

ETH Zurich; University of Washington; CNRS; University of Pittsburgh; University of Cambridge; University of Freiburg; Heidelberg University; Leibniz University Hannover; Northeastern University; UCLA; Imperial College London; University of Manchester; University of Zurich; New York University; University of Bern; University of Stuttgart; UC Berkeley; University College London; Fudan University; Georgia Institute of Technology; National Taiwan University; the University of Tokyo; University of California, Irvine; University of Bonn; Technical University of Berlin; University of Bristol; University of Michigan; University of Edinburgh; University of Hong Kong; University of Alabama at Birmingham; Northwestern University; University of Bamberg; University of Florida; Emory University; University of Cologne; Harvard Medical School; University of Pennsylvania; University of Southampton; Florida State University; EPFL; University of Wisconsin-Madison; Massachusetts General Hospital; Chongqing University; Keio University; University of Alberta; King’s College London; Friedrich-Alexander-Universität Erlangen-Nürnberg; University of Luxembourg; Technical University of Munich; University of Duisburg-Essen; Sapienza University of Rome; University of Heidelberg; University of Sheffield; HKUST; University of Geneva; Washington University in St. Louis; TU Berlin; University of Glasgow; University of Siegen; University of Potsdam; Universidade Estadual de Campinas; University of Oldenburg; The Ohio State University; University of Leicester; German Cancer Research Center (DKFZ); University of Bremen; University of Toulouse; University of Miami; Karlsruhe Institute of Technology; Peking Union Medical College; University of Oulu; University of Hamburg; University of Regensburg; University of Birmingham; University of Leeds; Chinese Academy of Medical Sciences; INSERM; University of Basel; Peking Union Medical College Hospital; University of Lausanne; University of Lille; University of Poitiers; University of Passau; University of Lübeck; King Fahd University of Petroleum and Minerals; University of London; University of Nottingham; University of Erlangen-Nuremberg; University of Bielefeld; Sorbonne University; University of South Florida; Wake Forest University; University of Calgary; University of Picardie Jules Verne; IBM; University of Göttingen; University of Bordeaux; University of Mannheim; University of California San Francisco; NIH; University of Konstanz; University of Electro-Communications; University of Wuppertal; University of Reunion; UNICAMP; University of Trier; Hasso Plattner Institute; University of Bayreuth; Heidelberg University Hospital; University of Strasbourg; DKFZ; University of Lorraine; Inselspital, Bern University Hospital, University of Bern; University of Würzburg; University of La Rochelle; University of Lyon; University of Hohenheim; University Medical Center Hamburg-Eppendorf; University of Ulm; University Hospital Zurich; University of Tuebingen; University of Kaiserslautern; University of Nantes; University of Mainz; University of Paderborn; University of Kiel; Medical University of South Carolina; University of Rostock; The University of Texas MD Anderson Cancer Center; National Research Council (CNR); Hannover Medical School; Italian National Research Council; University of Muenster; University of Montpellier; University of Leipzig; University of Greifswald; University Hospital Bern; Siemens Healthineers; The University of Alabama at Birmingham; National Institutes of Health; University of Marburg; University of Paris-Saclay; University of Limoges; University of Clermont Auvergne; University of Dortmund; University of Giessen; KIT; University of Toulon; Children’s Hospital of Philadelphia; University of Jena; National Taiwan University Hospital; University of Saarland; University of Erlangen; National Cancer Institute; University Hospital Heidelberg; Swiss Federal Institute of Technology Lausanne; University of Texas Health Science Center at Houston; National Institute of Biomedical Imaging and Bioengineering; University of New Caledonia; University of Koblenz-Landau; Paris Diderot University; University of Paris; Inselspital, Bern University Hospital; University of Grenoble Alpes; University Hospital Basel; MD Anderson Cancer Center; University of Angers; University of French Polynesia; University of Magdeburg; University of Geneva, Switzerland; Oulu University Hospital; University of Tours; Friedrich-Alexander-University Erlangen-Nurnberg; University of Rennes 1; Wake Forest School of Medicine; NIH Clinical Center; Paris Descartes University; University of Rouen Normandie; University of Aix-Marseille; University of Perpignan Via Domitia; University of Caen Normandie; University of Frankfurt; University of Bochum; University of Bourgogne-Franche-Comté; University of Corsica Pasquale Paoli; National Institute of Neurological Disorders and Stroke; University of Hannover; Roche Diagnostics; University of South Brittany; University of Düsseldorf; University of Reims Champagne-Ardenne; University of Halle; IRCCS Fondazione Santa Lucia; University of Applied Sciences Trier; University of Southampton, UK; University of Nice–Sophia Antipolis; Université de Lorraine; Université Paris-Saclay; École Polytechnique Fédérale de Lausanne; RWTH Aachen University; University of Bern, Institute for Advanced Study in Biomedical Innovation; CRIBIS University of Alberta; The Cancer Imaging Archive (TCIA); Fraunhofer Institute for Medical Image Computing MEVIS; Medical School of Hannover; Istituto di Ricovero e Cura a Carattere Scientifico Neuromed; Fondazione Santa Lucia IRCCS; CEA, LIST, Laboratory of Image and Biomedical Systems; University of Alberta, Canada; Heidelberg University Hospital, Department of Neuroradiology; University of Bern, Switzerland; University of Dresden; University of Speyer; University of Trier, Germany; University of Lorraine, France; University of Le Havre Normandie; University of Bretagne Occidentale; University of French Guiana; University of the Antilles; University of Bern, Institute of Surgical Technology and Biomechanics; University of Bern, ARTORG Center for Biomedical Engineering Research; University of Geneva, Department of Radiology; University of Zürich, Department of Neuroradiology; Ruhr-University-Bochum

Gliomas are the most common primary brain malignancies, with different degrees of aggressiveness, variable prognosis and various heterogeneous histologic sub-regions, i.e., peritumoral edematous/invaded tissue, necrotic core, active and non-enhancing core. This intrinsic heterogeneity is also portrayed in their radio-phenotype, as their sub-regions are depicted by varying intensity profiles disseminated across multi-parametric magnetic resonance imaging (mpMRI) scans, reflecting varying biological properties. Their heterogeneous shape, extent, and location are some of the factors that make these tumors difficult to resect, and in some cases inoperable. The amount of resected tumor is a factor also considered in longitudinal scans, when evaluating the apparent tumor for potential diagnosis of progression. Furthermore, there is mounting evidence that accurate segmentation of the various tumor sub-regions can offer the basis for quantitative image analysis towards prediction of patient overall survival. This study assesses the state-of-the-art machine learning (ML) methods used for brain tumor image analysis in mpMRI scans, during the last seven instances of the International Brain Tumor Segmentation (BraTS) challenge, i.e., 2012-2018. Specifically, we focus on i) evaluating segmentations of the various glioma sub-regions in pre-operative mpMRI scans, ii) assessing potential tumor progression by virtue of longitudinal growth of tumor sub-regions, beyond use of the RECIST/RANO criteria, and iii) predicting the overall survival from pre-operative mpMRI scans of patients that underwent gross total resection. Finally, we investigate the challenge of identifying the best ML algorithms for each of these tasks, considering that apart from being diverse on each instance of the challenge, the multi-institutional mpMRI BraTS dataset has also been a continuously evolving/growing dataset.
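The abstract speaks of evaluating segmentations of tumor sub-regions without naming a metric in this listing. One overlap measure widely used for such comparisons is the Dice coefficient; the sketch below assumes it and works on toy binary masks flattened to 0/1 lists. The masks and the function name `dice_coefficient` are hypothetical and only illustrate the computation.

```python
def dice_coefficient(pred, truth):
    """Dice overlap between two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled as tumor in both the prediction and the reference.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / total


# Toy example: 8 voxels of one hypothetical sub-region mask.
pred_mask = [0, 1, 1, 1, 0, 0, 1, 0]
true_mask = [0, 1, 1, 0, 0, 1, 1, 0]
score = dice_coefficient(pred_mask, true_mask)
print(f"Dice = {score:.2f}")
```

A score of 1 means the two masks coincide and 0 means they do not overlap at all. In practice the same computation would be repeated per sub-region and per scan.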

Researchers at EPFL, Karlsruhe Institute of Technology, Northeastern University, and Leonardo AI introduced Latent-CLIP, a model that operates directly in the latent space of diffusion models, bypassing the need for computationally expensive pixel decoding during guidance and evaluation. This innovation reduced runtime by approximately 21% in the ReNO framework while maintaining or improving generation quality and enhancing safety moderation capabilities.

A comprehensive overview of Machine Learning Operations (MLOps) provides a unifying definition, identifies nine core principles and nine technical components, and outlines an end-to-end architecture to automate ML processes, aiming to increase the success rate of ML models transitioning into production. The work by Kreuzberger, Kühl, and Hirschl (Karlsruhe Institute of Technology and IBM) integrates academic rigor with industry insights.

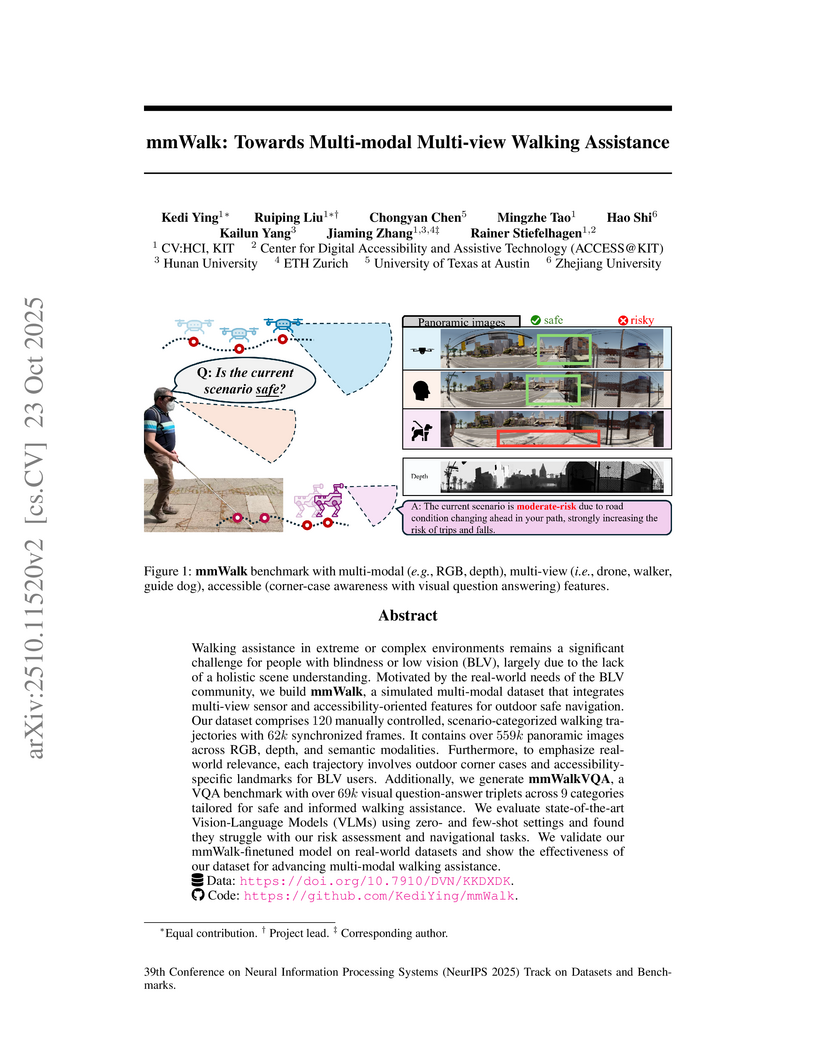

Walking assistance in extreme or complex environments remains a significant challenge for people with blindness or low vision (BLV), largely due to the lack of a holistic scene understanding. Motivated by the real-world needs of the BLV community, we build mmWalk, a simulated multi-modal dataset that integrates multi-view sensor and accessibility-oriented features for outdoor safe navigation. Our dataset comprises 120 manually controlled, scenario-categorized walking trajectories with 62k synchronized frames. It contains over 559k panoramic images across RGB, depth, and semantic modalities. Furthermore, to emphasize real-world relevance, each trajectory involves outdoor corner cases and accessibility-specific landmarks for BLV users. Additionally, we generate mmWalkVQA, a VQA benchmark with over 69k visual question-answer triplets across 9 categories tailored for safe and informed walking assistance. We evaluate state-of-the-art Vision-Language Models (VLMs) in zero- and few-shot settings and find that they struggle with our risk assessment and navigational tasks. We validate our mmWalk-finetuned model on real-world datasets and show the effectiveness of our dataset for advancing multi-modal walking assistance.

We introduce a machine-learning framework based on symbolic regression to extract the full symbol alphabet of multi-loop Feynman integrals. By targeting the analytic structure rather than reduction, the method is broadly applicable and interpretable across different families of integrals. It successfully reconstructs complete symbol alphabets in nontrivial examples, demonstrating both robustness and generality. Beyond accelerating computations case by case, it uncovers the analytic structure universally. This framework opens new avenues for multi-loop amplitude analysis and provides a versatile tool for exploring scattering amplitudes.

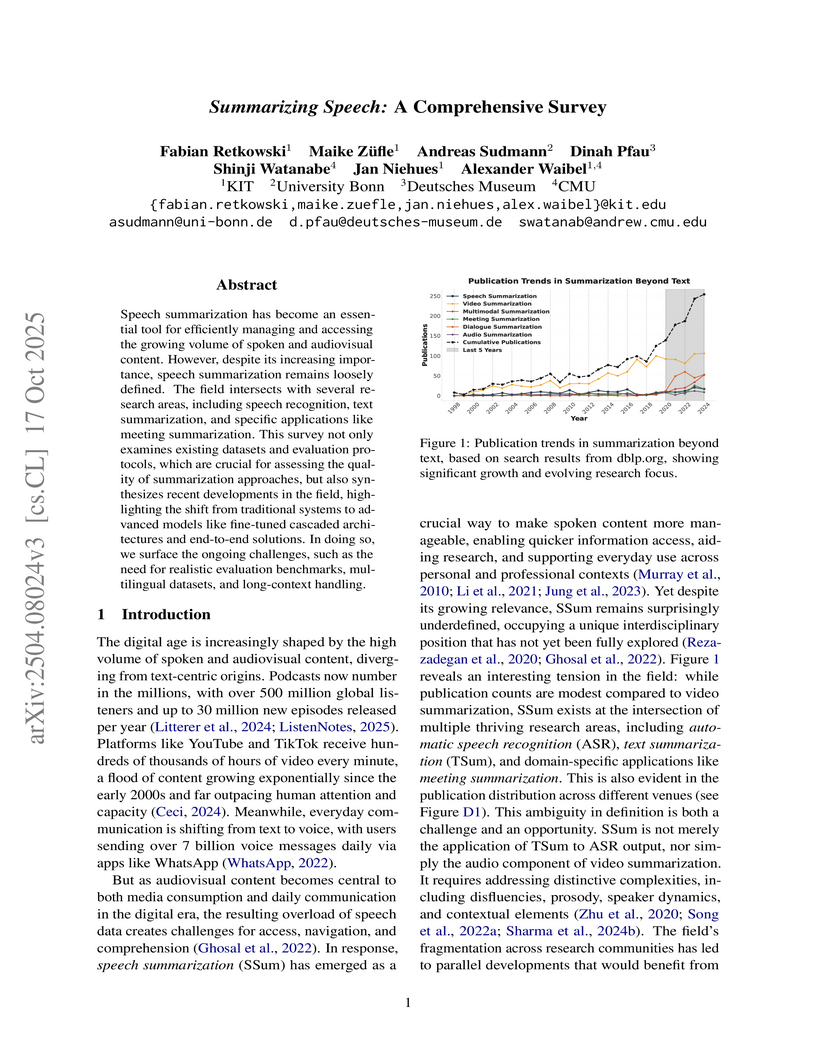

Speech summarization has become an essential tool for efficiently managing and accessing the growing volume of spoken and audiovisual content. However, despite its increasing importance, speech summarization remains loosely defined. The field intersects with several research areas, including speech recognition, text summarization, and specific applications like meeting summarization. This survey not only examines existing datasets and evaluation protocols, which are crucial for assessing the quality of summarization approaches, but also synthesizes recent developments in the field, highlighting the shift from traditional systems to advanced models like fine-tuned cascaded architectures and end-to-end solutions. In doing so, we surface the ongoing challenges, such as the need for realistic evaluation benchmarks, multilingual datasets, and long-context handling.

We show that it is possible to locate the few places on the body of an airplane, while it is flying through high clouds, from which broad-band, pulsed radiation is emitted at Very High Frequency (VHF) radio frequencies. This serendipitous discovery was made whilst imaging a lightning flash using the Low-Frequency Array (LOFAR). This observation provides insights into the way the airplane sheds the electrical charge it acquires when flying through clouds. Furthermore, this observation allowed us to test and improve the precision and accuracy of our lightning observation techniques.

Our new results indicate that with the improved procedure the location precision for strong pulses is better than 50 cm, with the orientation of linear polarization being accurate to within 25°. For the present case of a Boeing 777-300ER, VHF emissions were observed exclusively associated with the two engines, as well as a specific spot on the tail. Despite the aircraft flying through clouds at an altitude of 8 km, we did not detect any emissions from electrostatic wicks.

Credit assignment in meta-reinforcement learning (Meta-RL) is still poorly understood. Existing methods either neglect credit assignment to pre-adaptation behavior or implement it naively. This leads to poor sample-efficiency during meta-training as well as ineffective task identification strategies. This paper provides a theoretical analysis of credit assignment in gradient-based Meta-RL. Building on the gained insights, we develop a novel meta-learning algorithm that overcomes both the issue of poor credit assignment and previous difficulties in estimating meta-policy gradients. By controlling the statistical distance of both pre-adaptation and adapted policies during meta-policy search, the proposed algorithm enables efficient and stable meta-learning. Our approach leads to superior pre-adaptation policy behavior and consistently outperforms previous Meta-RL algorithms in sample-efficiency, wall-clock time, and asymptotic performance.

15 Apr 2016

We present Version 9 of the Feynman-diagram calculator FormCalc and a flexible new suite of shell scripts and Mathematica packages based on FormCalc, which can be adapted and used as a template for calculations.

06 Oct 2025

We present a semi-analytic calculation of the integrated double-emission eikonal function of two massive emitters whose momenta are at an arbitrary angle to each other. This result is needed for extending the nested soft-collinear subtraction scheme (arXiv:1702.01352) to processes with massive partons.

Recurrent state-space models (RSSMs) are highly expressive models for learning patterns in time series data and for system identification. However, these models assume that the dynamics are fixed and unchanging, which is rarely the case in real-world scenarios. Many control applications exhibit tasks with similar but not identical dynamics, which can be modeled as a latent variable. We introduce Hidden Parameter Recurrent State Space Models (HiP-RSSMs), a framework that parametrizes a family of related dynamical systems with a low-dimensional set of latent factors. We present a simple and effective way of learning and performing inference over this Gaussian graphical model that avoids approximations like variational inference. We show that HiP-RSSMs outperform RSSMs and competing multi-task models on several challenging robotic benchmarks, both on real-world systems and in simulation.

Convolutional neural networks rely on image texture and structure to serve as discriminative features to classify the image content. Image enhancement techniques can be used as preprocessing steps to help improve the overall image quality and in turn improve the overall effectiveness of a CNN. Existing image enhancement methods, however, are designed to improve the perceptual quality of an image for a human observer. In this paper, we are interested in learning CNNs that can emulate image enhancement and restoration, but with the overall goal to improve image classification and not necessarily human perception. To this end, we present a unified CNN architecture that uses a range of enhancement filters that can enhance image-specific details via end-to-end dynamic filter learning. We demonstrate the effectiveness of this strategy on four challenging benchmark datasets for fine-grained, object, scene, and texture classification: CUB-200-2011, PASCAL-VOC2007, MIT-Indoor, and DTD. Experiments using our proposed enhancement show promising results on all the datasets. In addition, our approach is capable of improving the performance of all generic CNN architectures.

22 Aug 2024

High-level robot skills represent an increasingly popular paradigm in robot programming. However, configuring the skills' parameters for a specific task remains a manual and time-consuming endeavor. Existing approaches for learning or optimizing these parameters often require numerous real-world executions or do not work in dynamic environments. To address these challenges, we propose MuTT, a novel encoder-decoder transformer architecture designed to predict environment-aware executions of robot skills by integrating vision, trajectory, and robot skill parameters. Notably, we pioneer the fusion of vision and trajectory, introducing a novel trajectory projection. Furthermore, we illustrate MuTT's efficacy as a predictor when combined with a model-based robot skill optimizer. This approach facilitates the optimization of robot skill parameters for the current environment, without the need for real-world executions during optimization. Designed for compatibility with any representation of robot skills, MuTT demonstrates its versatility across three comprehensive experiments, showcasing superior performance across two different skill representations.

With the rise of video production and social media, speech editing has become crucial for creators to address issues like mispronunciations, missing words, or stuttering in audio recordings. This paper explores text-based speech editing methods that modify audio via text transcripts without manual waveform editing. These approaches ensure edited audio is indistinguishable from the original by altering the mel-spectrogram. Recent advancements, such as context-aware prosody correction and advanced attention mechanisms, have improved speech editing quality. This paper reviews state-of-the-art methods, compares key metrics, and examines widely used datasets. The aim is to highlight ongoing issues and inspire further research and innovation in speech editing.

23 Dec 2024

We present an analytic calculation of three-loop four-point Feynman integrals with two off-shell legs of equal mass. We provide solutions to the canonical differential equations of two integral families in both Euclidean and physical regions. They are validated numerically against independent computations. A total of 170 master integrals are expressed in terms of multiple polylogarithms up to weight six. Most of them are computed for the first time. Our results are essential ingredients of the scattering amplitudes for equal-mass diboson production at next-to-next-to-next-to-leading-order QCD at the LHC.

29 Nov 2024

Many of the envisioned use-cases for quantum computers involve optimisation processes. While there are many algorithmic primitives to perform the required calculations, all eventually lead to quantum gates operating on quantum bits, in an order determined by the structure of the objective function and the properties of the target hardware. When the structure of the problem representation is not aligned with the structure and boundary conditions of the executing hardware, various overheads that degrade the computation may arise, possibly negating any quantum advantage.

Therefore, automatic transformations of problem representations play an important role in quantum computing when descriptions (semi-)targeted at humans must be cast into forms that can be executed on quantum computers. Mathematically equivalent formulations are known to result in substantially different non-functional properties depending on hardware, algorithm and detail properties of the problem. Given the current state of noisy intermediate-scale quantum (NISQ) hardware, these effects are considerably more pronounced than in classical computing. Likewise, the efficiency of the transformation itself is relevant because a possible quantum advantage may easily be eradicated by the overhead of transforming between representations. In this paper we consider a specific class of higher-level representations (polynomial unconstrained binary optimisation problems), and devise novel automatic transformation mechanisms into widely used quadratic unconstrained binary optimisation problems that substantially improve efficiency and versatility over the state of the art. We also identify which influence factors among lower-level details can be abstracted away in the transformation process, and which details must be made available to higher-level abstractions.
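To make the idea of casting a higher-order binary polynomial into quadratic form concrete, the sketch below uses the classical Rosenberg substitution. This is a textbook reduction and not the new mechanisms the abstract announces. A cubic term c·x1·x2·x3 is rewritten with an auxiliary bit y standing for x1·x2, plus a penalty that vanishes exactly when y = x1·x2. The coefficient values and all function names are illustrative assumptions.

```python
from itertools import product


def cubic_energy(x1, x2, x3, c):
    """Original higher-order objective: a single cubic monomial."""
    return c * x1 * x2 * x3


def rosenberg_penalty(x1, x2, y):
    """Zero if y == x1 * x2, at least 1 otherwise (all variables in {0, 1})."""
    return x1 * x2 - 2 * x1 * y - 2 * x2 * y + 3 * y


def quadratic_energy(x1, x2, x3, y, c, m):
    """Quadratic surrogate: y replaces x1 * x2, m weights the penalty."""
    return c * y * x3 + m * rosenberg_penalty(x1, x2, y)


def reduction_is_exact(c, m):
    """Check by brute force that minimising over y recovers the cubic value."""
    for x1, x2, x3 in product((0, 1), repeat=3):
        best = min(quadratic_energy(x1, x2, x3, y, c, m) for y in (0, 1))
        if best != cubic_energy(x1, x2, x3, c):
            return False
    return True


print(reduction_is_exact(c=-3, m=4))  # penalty weight above |c|
print(reduction_is_exact(c=-3, m=1))  # penalty weight too small
```

The second call illustrates why the penalty weight matters: if it is smaller than the magnitude of the coefficient, setting y inconsistently can lower the energy and the quadratic problem no longer matches the original. Each substitution also adds one auxiliary variable, which is one source of the transformation overhead the abstract refers to.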

Chinese Academy of Sciences; University of Bern; INFN; NASA Goddard Space Flight Center; Columbia University; Université Paris-Saclay; Technical University of Munich; Princeton University; Bergische Universität Wuppertal; Universidad Nacional Autónoma de México; Gran Sasso Science Institute (GSSI); University of Siena; European Organization for Nuclear Research (CERN); University of Hawaii at Manoa; NASA Langley Research Center; Università degli Studi dell’Aquila; LAPTh; China Center of Advanced Science and Technology; KIT; IAP; INFN-Laboratori Nazionali del Gran Sasso (LNGS); LPSC; Université de Strasbourg; Università degli Studi di Torino

Cosmic-ray physics in the GeV-to-TeV energy range has entered a precision era thanks to recent data from space-based experiments. However, the poor knowledge of nuclear reactions, in particular for the production of antimatter and secondary nuclei, limits the information that can be extracted from these data, such as source properties, transport in the Galaxy and indirect searches for particle dark matter. The Cross-Section for Cosmic Rays at CERN workshop series has addressed the challenges encountered in the interpretation of high-precision cosmic-ray data, with the goal of strengthening emergent synergies and taking advantage of the complementarity and know-how in different communities, from theoretical and experimental astroparticle physics to high-energy and nuclear physics. In this paper, we present the outcomes of the third edition of the workshop that took place in 2024. We present the current state of cosmic-ray experiments and their perspectives, and provide a detailed road map to close the most urgent gaps in cross-section data, in order to efficiently progress on many open physics cases, which are motivated in the paper. Finally, with the aim of being as exhaustive as possible, this report touches several other fields -- such as cosmogenic studies, space radiation protection and hadrontherapy -- where overlapping and specific new cross-section measurements, as well as nuclear code improvement and benchmarking efforts, are also needed. We also briefly highlight further synergies between astroparticle and high-energy physics on the question of cross-sections.

Several emerging technologies for byte-addressable non-volatile memory (NVM) have been considered to replace DRAM as the main memory in computer systems during the last years. The disadvantage of a lower write endurance, compared to DRAM, of NVM technologies like Phase-Change Memory (PCM) or Ferroelectric RAM (FeRAM) has been addressed in the literature. As a solution, in-memory wear-leveling techniques have been proposed, which aim to balance the wear-level over all memory cells to achieve an increased memory lifetime. Generally, to apply such advanced aging-aware wear-leveling techniques proposed in the literature, additional special hardware is introduced into the memory system to provide the necessary information about the cell age and thus enable aging-aware wear-leveling decisions.

This paper proposes software-only aging-aware wear-leveling based on common CPU features and does not rely on any additional hardware support from the memory subsystem. Specifically, we exploit the memory management unit (MMU), performance counters, and interrupts to approximate the memory write counts as an aging indicator. Although the software-only approach may lead to slightly worse wear-leveling, it is applicable on commonly available hardware. We achieve page-level coarse-grained wear-leveling by approximating the current cell age through statistical sampling and performing physical memory remapping through the MMU. This method results in non-uniform memory usage patterns within a memory page. Hence, we further propose a fine-grained wear-leveling in the stack region of C / C++ compiled software.

By applying both wear-leveling techniques, we achieve up to 78.43% of the ideal memory lifetime, which is a lifetime improvement of more than a factor of 900 compared to the lifetime without any wear-leveling.
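The two figures quoted above, a fraction of the ideal lifetime and an improvement factor, can be related with a simple model. The sketch below assumes that memory fails as soon as its most-written page reaches a fixed endurance limit, and that the ideal case spreads the same write traffic perfectly evenly. This failure model, the write counts, and the function names are illustrative assumptions and do not reproduce the paper's measurements.

```python
def lifetime(writes_per_page, endurance):
    """Time units until the most-written page hits its endurance limit."""
    return endurance / max(writes_per_page)


def ideal_lifetime(writes_per_page, endurance):
    """Lifetime if the same total write traffic were spread perfectly evenly."""
    return endurance * len(writes_per_page) / sum(writes_per_page)


def fraction_of_ideal(writes_per_page, endurance):
    """Achieved lifetime relative to the ideal; equals mean writes / max writes."""
    return lifetime(writes_per_page, endurance) / ideal_lifetime(writes_per_page, endurance)


ENDURANCE = 1_000_000  # hypothetical writes a page survives

# Hypothetical writes per page per time unit, same total traffic in both cases.
unleveled = [1000, 10, 10, 10, 10, 10, 10, 10]      # one hot page dominates
leveled = [150, 130, 140, 135, 125, 130, 130, 130]  # after remapping

improvement = lifetime(leveled, ENDURANCE) / lifetime(unleveled, ENDURANCE)
print(f"fraction of ideal without leveling: {fraction_of_ideal(unleveled, ENDURANCE):.3f}")
print(f"fraction of ideal with leveling:    {fraction_of_ideal(leveled, ENDURANCE):.3f}")
print(f"lifetime improvement factor:        {improvement:.2f}")
```

In this model the improvement factor depends only on how far the hottest page's write rate drops, which is why a workload with a very hot region, such as a program stack, can show a large factor even when the leveled result stays below the ideal.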
