University of Michigan – Shanghai Jiao Tong University Joint Institute

Although deep reinforcement learning has become a promising machine learning approach for sequential decision-making problems, it is still not mature enough for high-stakes domains such as autonomous driving or medical applications. In such contexts, a learned policy needs, for instance, to be interpretable, so that it can be inspected before any deployment (e.g., for safety and verifiability reasons). This survey provides an overview of various approaches to achieving higher interpretability in reinforcement learning (RL). To that end, we distinguish interpretability (as a property of a model) from explainability (as a post-hoc operation, with the intervention of a proxy) and discuss both in the context of RL, with an emphasis on the former. In particular, we argue that interpretable RL may embrace different facets: interpretable inputs, interpretable (transition/reward) models, and interpretable decision-making. Based on this scheme, we summarize and analyze recent work related to interpretable RL, with an emphasis on papers published in the past 10 years. We also briefly discuss some related research areas and point to some promising research directions.

28 Dec 2022

We present a simple new way, called Schrödingerisation, to simulate general linear partial differential equations via quantum simulation. Using a simple new transform, referred to as the warped phase transformation, any linear partial differential equation can be recast into a system of Schrödinger's equations, in real time, in a straightforward way. This can be seen directly at the level of the dynamical equations, without more sophisticated methods. This approach is applicable not only to PDEs for classical problems but also to those for quantum problems, such as the preparation of quantum ground states and Gibbs states, and the simulation of quantum states in random media in the semiclassical limit.
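The warped phase transformation can be checked numerically on a small linear ODE system du/dt = A u. The sketch below is an illustration under stated assumptions, not the authors' algorithm: it splits A = H1 + iH2 into Hermitian parts, sets w = e^{-|p|}u in an extra variable p, Fourier-transforms in p so that each mode obeys a Schrödinger equation with the Hermitian Hamiltonian ξH1 - H2, and recovers u from w at a point p > 0. The grid size, the domain [-L, L), the recovery point p_star = 1, and the restriction to a negative semidefinite H1 are assumptions chosen for this example.

```python
import numpy as np


def schrodingerise_solve(A, u0, t, L=10.0, N=1024, p_star=1.0):
    """Approximate u(t) for du/dt = A u through the warped phase transformation.

    Assumes the Hermitian part of A is negative semidefinite, so that the
    original variable can be recovered at any p > 0.
    """
    H1 = (A + A.conj().T) / 2           # Hermitian part
    H2 = (A - A.conj().T) / 2j          # so that A = H1 + i*H2 with H2 Hermitian
    h = 2 * L / N
    p = -L + h * np.arange(N)           # periodic grid on [-L, L)
    xi = 2 * np.pi * np.fft.fftfreq(N, d=h)

    # Warped phase initial data w(0, p) = exp(-|p|) u0 (one row per grid point).
    w_hat = np.fft.fft(np.exp(-np.abs(p))[:, None] * u0[None, :], axis=0)
    norm_before = np.linalg.norm(w_hat)

    # dw/dt = -H1 dw/dp + i H2 w becomes, mode by mode,
    # d(w_hat)/dt = -i (xi*H1 - H2) w_hat: a Schrödinger equation.
    for k in range(N):
        lam, V = np.linalg.eigh(xi[k] * H1 - H2)
        w_hat[k] = V @ (np.exp(-1j * lam * t) * (V.conj().T @ w_hat[k]))
    norm_after = np.linalg.norm(w_hat)  # unchanged: every mode evolves unitarily

    w = np.fft.ifft(w_hat, axis=0)
    j = int(np.argmin(np.abs(p - p_star)))  # grid point nearest p_star > 0
    return np.exp(p[j]) * w[j], norm_before, norm_after


rng = np.random.default_rng(0)
B, C = rng.standard_normal((3, 3)), rng.standard_normal((3, 3))
A = -(B @ B.T + np.eye(3)) + (C - C.T)   # negative definite Hermitian part
u0 = rng.standard_normal(3)
u_t, n0, n1 = schrodingerise_solve(A, u0, t=0.5)
```

The result can be compared with exp(At)u0 computed directly; the propagation is exactly unitary in the Fourier variable, and the only approximation comes from discretizing p.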

05 Dec 2024

Quantum computing has emerged as a promising avenue for achieving significant speedup over classical computing, particularly in large-scale PDE simulations. One of the main quantum approaches relies on Hamiltonian simulation, which is directly applicable only to Schrödinger-type equations. To address this limitation, Schrödingerisation techniques have been developed, employing the warped phase transformation to convert general linear PDEs into Schrödinger-type equations. However, despite the development of Schrödingerisation techniques, an explicit implementation of the corresponding quantum circuit for solving general PDEs has yet to be designed. In this paper, we present a detailed implementation of a quantum algorithm for general PDEs using Schrödingerisation techniques. We provide examples of the heat equation and of the advection equation approximated by the upwind scheme to demonstrate the effectiveness of our approach. A complexity analysis is also carried out to demonstrate the quantum advantage of these algorithms in high dimensions over their classical counterparts.
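As a hedged illustration of the two examples named above, the sketch below builds hypothetical periodic finite-difference matrices (central differences for the heat equation, first-order upwind for advection with a > 0) and splits each into A = H1 + iH2. The heat matrix is already Hermitian (H2 = 0), while the upwind matrix has both a negative semidefinite H1 (numerical diffusion) and a nonzero H2, which is the non-unitary structure that Schrödingerisation is designed to handle. The periodic boundary conditions and grid sizes are assumptions, not the paper's setup.

```python
import numpy as np


def shift_matrix(n):
    """Periodic shift S with (S u)[j] = u[j-1]."""
    return np.roll(np.eye(n), 1, axis=0)


def heat_matrix(n, h):
    """Central differences for u_t = u_xx on a periodic grid (assumed BC)."""
    S = shift_matrix(n)
    return (S - 2 * np.eye(n) + S.T) / h**2


def upwind_matrix(n, h, a):
    """First-order upwind for u_t + a u_x = 0 with a > 0 on a periodic grid."""
    S = shift_matrix(n)
    return -(a / h) * (np.eye(n) - S)


def hermitian_split(A):
    """Return Hermitian H1, H2 with A = H1 + 1j * H2."""
    H1 = (A + A.conj().T) / 2
    H2 = (A - A.conj().T) / 2j
    return H1, H2


n, h, a = 16, 1.0 / 16, 1.0
for name, A in [("heat", heat_matrix(n, h)), ("upwind", upwind_matrix(n, h, a))]:
    H1, H2 = hermitian_split(A)
    # Largest eigenvalue of H1 (<= 0 up to round-off) and size of the H2 part.
    print(name, np.linalg.eigvalsh(H1).max(), np.abs(H2).max())
```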

14 Apr 2025

On Schrödingerization based quantum algorithms for linear dynamical systems with inhomogeneous terms


We analyze the Schrödingerization method for quantum simulation of a general class of non-unitary dynamics with inhomogeneous source terms. The Schrödingerization technique, introduced in [31], transforms any linear ordinary or partial differential equation with non-unitary dynamics into a system under unitary dynamics via a warped phase transition that maps the equations into a higher dimension, making them suitable for quantum simulation. Through extra equations in the system, this technique can also be applied to such equations with inhomogeneous terms modeling source or forcing terms, or boundary and interface conditions, and to discrete dynamical systems such as iterative methods in numerical linear algebra. Difficulties arise in the presence of inhomogeneous terms, since they can change the stability of the original system. In this paper, we systematically study, both theoretically and numerically, the important issue of recovering the original variables from the Schrödingerized equations, even when the evolution operator contains unstable modes. We show that, even with unstable modes, one can still construct a stable scheme; however, to recover the original variable, one needs to use suitable data in the extended space. We analyze and compare both the discrete and continuous Fourier transforms used in the extended dimension and derive corresponding error estimates, which allow one to choose the more appropriate transform for specific equations. We also provide a smoother initialization for the Schrödingerized system to gain higher-order accuracy in the extended space. We homogenize the inhomogeneous terms with a stretch transformation, making it easier to recover the original variable. Our recovery technique also provides a simple and generic framework to solve general ill-posed problems in a computationally stable way.
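One standard way to turn a constant source term into extra equations in the system is to append a constant auxiliary variable r, so that du/dt = Au + b becomes a homogeneous system for (u, r). The sketch below shows only that textbook augmentation (it is not the stretch transformation of the paper) and assumes A is diagonalizable and invertible, so that matrix exponentials can be formed by eigendecomposition. It also shows that the Hermitian part of the augmented matrix acquires a positive eigenvalue even when that of A is negative definite, which is one concrete way inhomogeneous terms can introduce unstable modes.

```python
import numpy as np


def expm_eig(M, t):
    """exp(M t) via eigendecomposition; assumes M is diagonalizable (illustration only)."""
    vals, V = np.linalg.eig(M)
    return V @ np.diag(np.exp(vals * t)) @ np.linalg.inv(V)


def augment(A, b):
    """Homogenise du/dt = A u + b (constant b): d/dt [u; r] = [[A, b], [0, 0]] [u; r], r(0) = 1."""
    n = A.shape[0]
    M = np.zeros((n + 1, n + 1))
    M[:n, :n] = A
    M[:n, n] = b
    return M


def max_hermitian_eig(M):
    """Largest eigenvalue of the Hermitian part (M + M^H) / 2."""
    return np.linalg.eigvalsh((M + M.conj().T) / 2).max()


rng = np.random.default_rng(1)
n, t = 3, 0.7
B, C = rng.standard_normal((n, n)), rng.standard_normal((n, n))
A = -(B @ B.T + np.eye(n)) + (C - C.T)     # Hermitian part negative definite
b, u0 = rng.standard_normal(n), rng.standard_normal(n)

M = augment(A, b)
u_aug = (expm_eig(M, t) @ np.append(u0, 1.0))[:n].real
E = expm_eig(A, t)
u_exact = (E @ u0 + np.linalg.solve(A, (E - np.eye(n)) @ b)).real  # variation of constants
print(max_hermitian_eig(A), max_hermitian_eig(M))  # negative, then positive
```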

16 Jun 2021

Continuous-variable quantum information, encoded into infinite-dimensional

quantum systems, is a promising platform for the realization of many quantum

information protocols, including quantum computation, quantum metrology,

quantum cryptography, and quantum communication. To successfully demonstrate

these protocols, an essential step is the certification of multimode

continuous-variable quantum states and quantum devices. This problem is well

studied under the assumption that multiple uses of the same device result into

identical and independently distributed (i.i.d.) operations. However, in

realistic scenarios, identical and independent state preparation and calls to

the quantum devices cannot be generally guaranteed. Important instances include

adversarial scenarios and instances of time-dependent and correlated noise. In

this paper, we propose the first set of reliable protocols for verifying

multimode continuous-variable entangled states and devices in these non-i.i.d

scenarios. Although not fully universal, these protocols are applicable to

Gaussian quantum states, non-Gaussian hypergraph states, as well as

amplification, attenuation, and purification of noisy coherent states.

17 Nov 2024

The Schrödingerisation method combined with the autonomozation technique in [cjL23] converts general non-autonomous linear differential equations with non-unitary dynamics into systems of autonomous Schrödinger-type equations, via the so-called warped phase transformation that maps the equation into two higher dimensions. Despite the success of Schrödingerisation techniques, they typically require the black box of sparse Hamiltonian simulation, which suits continuous-variable based analog quantum simulation. For qubit-based general quantum computing, one needs to design the quantum circuits for practical implementation.

This paper explicitly constructs a quantum circuit for Maxwell's equations with perfect electric conductor (PEC) boundary conditions and time-dependent source terms, based on Schrödingerisation and autonomozation, with a corresponding computational complexity analysis. Through initial value smoothing and a high-order approximation to the delta function, the increase in qubits from the extra dimensions requires only a minor rise in computational complexity, almost log log(1/ε), where ε is the desired precision. Our analysis demonstrates that quantum algorithms constructed using Schrödingerisation exhibit a polynomial speedup in computational complexity compared to the classical Finite Difference Time Domain (FDTD) method.

31 Jan 2024

The layered, air-stable van der Waals antiferromagnetic compound CrSBr exhibits pronounced coupling between its optical, electronic, and magnetic properties. As an example, exciton dynamics can be significantly influenced by lattice vibrations through exciton-phonon coupling. Using low-temperature photoluminescence spectroscopy, we demonstrate the effective coupling between excitons and phonons in nanometer-thick CrSBr. By careful analysis, we identify that the satellite peaks predominantly arise from the interaction between the exciton and an optical phonon with a frequency of 118 cm⁻¹ (~14.6 meV) due to the out-of-plane vibration of Br atoms. Power-dependent and temperature-dependent photoluminescence measurements support exciton-phonon coupling and indicate a coupling between magnetic and optical properties, suggesting the possibility of carrier localization in the material. The presence of strong coupling between the exciton and the lattice may have important implications for the design of light-matter interactions in magnetic semiconductors and provides new insights into the exciton dynamics in CrSBr. This highlights the potential for exploiting exciton-phonon coupling to control the optical properties of layered antiferromagnetic materials.
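The quoted phonon energy follows from E = hc·ν̃ with hc ≈ 0.12398 meV·cm. The sketch below checks that conversion and, as a hypothetical helper, lists where one- and multi-phonon satellite lines would sit below a zero-phonon exciton line; any zero-phonon energy passed to it is a placeholder, not a measured CrSBr value.

```python
HC_MEV_CM = 0.1239841984  # h*c in meV*cm, so 1 cm^-1 corresponds to about 0.124 meV


def wavenumber_to_mev(wavenumber_cm):
    """Convert a phonon wavenumber in cm^-1 to an energy in meV."""
    return HC_MEV_CM * wavenumber_cm


def replica_energies(e_zero_phonon_mev, phonon_mev, n_max):
    """Hypothetical helper: energies of n-phonon satellite lines below a zero-phonon line."""
    return [e_zero_phonon_mev - n * phonon_mev for n in range(1, n_max + 1)]


e_ph = wavenumber_to_mev(118.0)
print(round(e_ph, 1))  # 14.6
```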

27 Jan 2025

One of the most promising applications of quantum computers is solving partial differential equations (PDEs). By using the Schrödingerisation technique, which converts non-conservative PDEs into Schrödinger equations, the problem can be reduced to Hamiltonian simulation. The particular class of Hamiltonians we consider is shown to be sufficient for simulating almost any linear PDE. In particular, these Hamiltonians consist of discretizations of polynomial products and sums of position and momentum operators. This paper addresses an important gap by efficiently loading these Hamiltonians into the quantum computer through block-encoding. The construction is explicit and efficient in terms of one- and two-qubit operations, forming a fundamental building block for constructing the unitary evolution operator for that class of Hamiltonians. The proposed algorithm exhibits a squared logarithmic scaling with respect to the spatial partitioning size, offering a polynomial speedup over classical finite-difference methods in the context of spatial partitioning for PDE solving. Furthermore, the algorithm is extended to the multi-dimensional case, achieving an exponential acceleration with respect to the number of dimensions and alleviating the curse of dimensionality. This work provides an essential foundation for developing explicit and efficient quantum circuits for PDEs, Hamiltonian simulation, and ground-state and thermal-state preparation.
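Block-encoding means embedding a rescaled matrix as the top-left block of a larger unitary. As a purely classical, dense illustration (not the paper's gate-level circuit), the sketch below discretizes position X and momentum P = -i d/dx on a periodic grid, forms the Hermitian product H = (XP + PX)/2, and uses the standard unitary dilation U = [[H/α, S], [S, -H/α]] with S = sqrt(I - (H/α)²) and α = ||H||₂. The grid size and domain are assumptions.

```python
import numpy as np


def position_momentum(n, L):
    """Position X and momentum P = -i d/dx on n periodic grid points in [-L, L) (assumed grid)."""
    h = 2 * L / n
    x = -L + h * np.arange(n)
    xi = 2 * np.pi * np.fft.fftfreq(n, d=h)
    F = np.fft.fft(np.eye(n), axis=0)          # DFT matrix: F @ v == np.fft.fft(v)
    P = np.fft.ifft(np.diag(xi) @ F, axis=0)   # -i d/dx acts as multiplication by xi in Fourier space
    return np.diag(x).astype(complex), P


def dilation_block_encoding(H):
    """Unitary U = [[H/a, S], [S, -H/a]], S = sqrt(I - (H/a)^2), a = ||H||_2.

    The top-left block of U is H/a, i.e. U block-encodes H with normalisation a.
    """
    H = (H + H.conj().T) / 2                    # remove round-off asymmetry
    alpha = np.linalg.norm(H, 2)
    Hn = H / alpha
    lam, V = np.linalg.eigh(Hn)
    S = V @ np.diag(np.sqrt(np.clip(1 - lam**2, 0.0, None))) @ V.conj().T
    return np.block([[Hn, S], [S, -Hn]]), alpha


X, P = position_momentum(16, 5.0)
H = (X @ P + P @ X) / 2                         # Hermitian polynomial in X and P
U, alpha = dilation_block_encoding(H)
```

This dense construction costs O(n³) classically; its only purpose is to make the block-encoding property concrete, whereas the paper's contribution is realizing such an encoding with a polylogarithmic number of one- and two-qubit gates.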

25 Jun 2025

Quantum computers are known for their potential to achieve up to exponential speedup over classical computers for certain problems. To exploit this advantage, we propose quantum algorithms for linear stochastic differential equations, applying the Schrödingerisation method to the corresponding approximate equation obtained by treating the noise term as a (discrete-in-time) forcing term. Our algorithms are applicable to stochastic differential equations with both Gaussian noise and α-stable Lévy noise. The gate complexity of our algorithms exhibits an O(d log(Nd)) dependence on the dimension d and sample size N, whereas the classical counterpart requires nearly exponentially larger complexity in scenarios involving large sample sizes. In the Gaussian noise case, we show first-order strong convergence in the mean-square norm for the approximate equations. The algorithms are numerically verified for Ornstein-Uhlenbeck processes, geometric Brownian motion, and one-dimensional Lévy flights.
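The idea of treating the noise as a discrete-in-time forcing term can be sketched classically for the Ornstein-Uhlenbeck process dX = -θX dt + σ dW: on each step, the Brownian increment is replaced by the constant forcing σΔW_k/Δt, and the resulting linear ODE is solved exactly. The sketch covers only this approximate equation (no Schrödingerisation or quantum step), and θ, σ, the step count and the sample size are illustrative assumptions. Its sample mean and variance can be compared with the exact values e^{-θT}x0 and σ²(1 - e^{-2θT})/(2θ).

```python
import numpy as np


def ou_piecewise_forcing(theta, sigma, x0, T, n_steps, n_samples, rng):
    """Ornstein-Uhlenbeck paths with the noise replaced, step by step, by the constant
    forcing sigma * dW_k / dt; the linear ODE on each step is solved exactly."""
    dt = T / n_steps
    decay = np.exp(-theta * dt)
    gain = (1 - decay) / theta          # integral of exp(-theta * s) over one step
    x = np.full(n_samples, float(x0))
    for _ in range(n_steps):
        dW = rng.normal(0.0, np.sqrt(dt), size=n_samples)
        forcing = sigma * dW / dt       # piecewise-constant forcing term
        x = decay * x + gain * forcing
    return x


rng = np.random.default_rng(1)
theta, sigma, x0, T = 1.0, 0.5, 1.0, 1.0
x = ou_piecewise_forcing(theta, sigma, x0, T, n_steps=100, n_samples=20000, rng=rng)
exact_mean = np.exp(-theta * T) * x0
exact_var = sigma**2 * (1 - np.exp(-2 * theta * T)) / (2 * theta)
print(x.mean(), exact_mean, x.var(), exact_var)
```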

While electron microscopy offers crucial atomic-resolution insights into structure-property relationships, radiation damage severely limits its use on beam-sensitive materials like proteins and 2D materials. To overcome this challenge, we push beyond the electron dose limits of conventional electron microscopy by adapting principles from multi-image super-resolution (MISR) that have been widely used in remote sensing. Our method fuses multiple low-resolution, sub-pixel-shifted views and enhances the reconstruction with a convolutional neural network (CNN) that integrates features from synthetic, multi-angle observations. We developed a dual-path, attention-guided network for 4D-STEM that achieves atomic-scale super-resolution from ultra-low-dose data. This provides robust atomic-scale visualization across amorphous, semi-crystalline, and crystalline beam-sensitive specimens. Systematic evaluations on representative materials demonstrate comparable spatial resolution to conventional ptychography under ultra-low-dose conditions. Our work expands the capabilities of 4D-STEM, offering a new and generalizable method for the structural analysis of radiation-vulnerable materials.
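The MISR principle the method builds on can be illustrated with the classical shift-and-add scheme: each low-resolution view samples the high-resolution grid at a different known sub-pixel offset, and placing the views back at their offsets recovers the fine grid. The sketch below assumes noise-free views, integer offsets on the high-resolution grid, and no blur; it is not the authors' attention-guided CNN, which is what handles real, noisy 4D-STEM data.

```python
import numpy as np


def make_low_res_views(hr, factor):
    """Decimate hr at every (dy, dx) offset, mimicking sub-pixel-shifted low-resolution views."""
    return {(dy, dx): hr[dy::factor, dx::factor]
            for dy in range(factor) for dx in range(factor)}


def shift_and_add(views, factor, hr_shape):
    """Place each view on the high-resolution grid at its known offset; average overlaps."""
    acc = np.zeros(hr_shape)
    count = np.zeros(hr_shape)
    for (dy, dx), lr in views.items():
        acc[dy::factor, dx::factor] += lr
        count[dy::factor, dx::factor] += 1
    return acc / np.maximum(count, 1)       # pixels no view covers stay 0


rng = np.random.default_rng(0)
hr = rng.random((8, 8))
views = make_low_res_views(hr, 2)            # four 4x4 views
recon = shift_and_add(views, 2, hr.shape)
```

With fewer views than offsets, some high-resolution pixels remain empty, which is where a learned model has to fill in information.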

18 Jun 2024

The dynamics simulation of multibody systems (MBS) using spatial velocities (non-holonomic velocities) requires time integration of the dynamics equations together with the kinematic reconstruction equations (relating time derivatives of configuration variables to rigid body velocities). The latter are specific to the geometry of the rigid body motion underlying a particular formulation, and thus to the configuration space (c-space) used. The proper c-space of a rigid body is the Lie group SE(3), and the geometry is that of screw motions. The rigid bodies within an MBS are further subjected to geometric constraints, often due to lower kinematic pairs that define SE(3) subgroups. Traditionally, however, translations and rotations in MBS dynamics are parameterized independently, which implies the use of the direct product group SO(3)×ℝ³ as rigid body c-space, although this does not account for rigid body motions. Hence, its appropriateness was recently put into perspective. In this paper, the significance of the c-space for constraint satisfaction in numerical time-stepping schemes is analyzed for holonomically constrained MBS modeled with the 'absolute coordinate' approach, i.e., using the Newton-Euler equations for the individual bodies subjected to geometric constraints. It is shown that the geometric constraints a body is subjected to are exactly satisfied if they constrain the motion to a subgroup of its c-space. Since only SE(3) subgroups have practical significance, SE(3) is regarded as the appropriate c-space for the constrained rigid body. Consequently, the constraints imposed by lower pair joints are exactly satisfied if the joint connects a body to the ground. For a general MBS, where the motions are not constrained to a subgroup, SE(3) and SO(3)×ℝ³ yield the same order of accuracy.
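The subgroup argument can be illustrated with a body attached to the ground by a revolute joint, whose motion lies in a one-parameter subgroup of SE(3). The sketch below is a minimal example, not the paper's integrator. It advances the body origin with a constant spatial twist in two ways: through the SE(3) exponential, and through an SO(3)×ℝ³ update in which the translation takes an explicit Euler step with the origin's velocity ω × (r - c). Only the position is tracked, and the joint axis, step size and horizon are assumptions. The SE(3) update keeps the distance to the joint axis constant up to round-off, while the SO(3)×ℝ³ update drifts.

```python
import numpy as np


def hat(w):
    """Skew-symmetric matrix with hat(w) @ r == np.cross(w, r)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_se3(w, u):
    """SE(3) exponential of the twist (w, u): returns rotation R and translation p."""
    th = np.linalg.norm(w)
    if th == 0.0:
        return np.eye(3), u.copy()
    W = hat(w)
    R = np.eye(3) + np.sin(th) / th * W + (1 - np.cos(th)) / th**2 * W @ W
    V = np.eye(3) + (1 - np.cos(th)) / th**2 * W + (th - np.sin(th)) / th**3 * W @ W
    return R, V @ u


def revolute_positions(c, omega, r0, dt, n_steps):
    """Body origin under a ground revolute joint (axis omega through c), two update rules."""
    v = -np.cross(omega, c)                  # spatial twist (omega, v) of the joint
    R_step, p_step = exp_se3(omega * dt, v * dt)
    r_se3, r_dp = r0.copy(), r0.copy()
    for _ in range(n_steps):
        r_se3 = R_step @ r_se3 + p_step                    # SE(3): exact screw motion
        r_dp = r_dp + dt * np.cross(omega, r_dp - c)       # SO(3) x R^3: Euler step on R^3
    return r_se3, r_dp


c = np.array([1.0, 0.0, 0.0])
omega = np.array([0.0, 0.0, 1.0])
r0 = np.array([2.0, 0.0, 0.0])               # distance 1 from the joint axis
r_se3, r_dp = revolute_positions(c, omega, r0, dt=0.01, n_steps=1000)
print(np.linalg.norm(r_se3 - c), np.linalg.norm(r_dp - c))
```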

20 Mar 2025

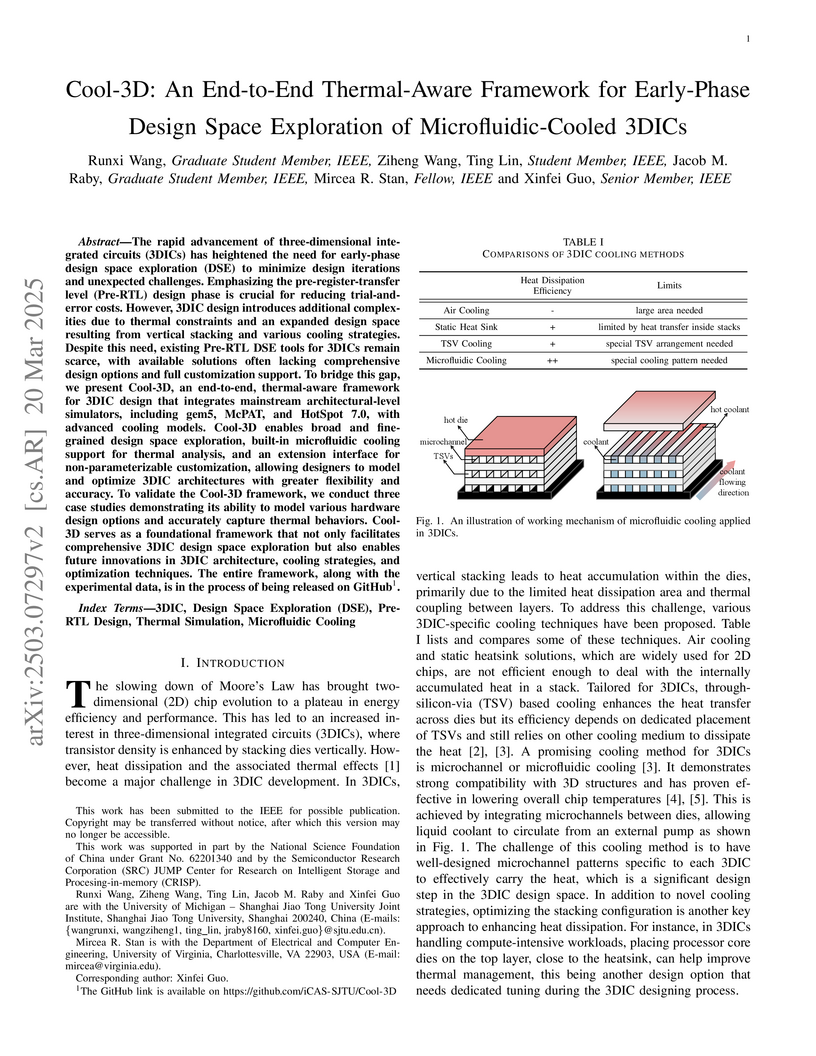

The rapid advancement of three-dimensional integrated circuits (3DICs) has heightened the need for early-phase design space exploration (DSE) to minimize design iterations and unexpected challenges. Emphasizing the pre-register-transfer level (Pre-RTL) design phase is crucial for reducing trial-and-error costs. However, 3DIC design introduces additional complexities due to thermal constraints and an expanded design space resulting from vertical stacking and various cooling strategies. Despite this need, Pre-RTL DSE tools for 3DICs remain scarce, and available solutions often lack comprehensive design options and full customization support. To bridge this gap, we present Cool-3D, an end-to-end, thermal-aware framework for 3DIC design that integrates mainstream architectural-level simulators, including gem5, McPAT, and HotSpot 7.0, with advanced cooling models. Cool-3D enables broad and fine-grained design space exploration and provides built-in microfluidic cooling support for thermal analysis and an extension interface for non-parameterizable customization, allowing designers to model and optimize 3DIC architectures with greater flexibility and accuracy. To validate the Cool-3D framework, we conduct three case studies demonstrating its ability to model various hardware design options and accurately capture thermal behaviors. Cool-3D serves as a foundational framework that not only facilitates comprehensive 3DIC design space exploration but also enables future innovations in 3DIC architecture, cooling strategies, and optimization techniques. The entire framework, along with the experimental data, is in the process of being released on GitHub.
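To see why vertical stacking tightens thermal constraints, a toy one-dimensional series thermal network is enough: all heat leaves through the heat sink at the bottom, so each interface carries the power of every die above it. The sketch below is hypothetical and far simpler than HotSpot or Cool-3D's models (no lateral spreading, no in-stack microfluidic channels); the powers, resistances, ambient temperature and 85 °C limit are illustrative values.

```python
def stack_temperatures(powers_w, resistances_k_per_w, t_ambient_c):
    """Steady-state die temperatures for a toy 1D series thermal stack.

    Die 0 sits on the heat sink; resistances_k_per_w[k] is the thermal resistance
    between die k and the node below it (for k = 0, the path to ambient).
    All heat is assumed to leave through the heat sink.
    """
    temps, t = [], t_ambient_c
    for k, r in enumerate(resistances_k_per_w):
        t += r * sum(powers_w[k:])   # interface k carries all power generated at or above it
        temps.append(t)
    return temps


# Hypothetical cooling options, expressed only as different sink resistances (K/W).
for r_sink in (0.8, 0.5, 0.2):
    temps = stack_temperatures([20.0, 15.0], [r_sink, 2.0], t_ambient_c=45.0)
    print(r_sink, max(temps), max(temps) <= 85.0)
```

Even in this toy, the upper die is the hot spot because its heat must cross every interface below it, which is why cooling choices and stacking order enter the design space together.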

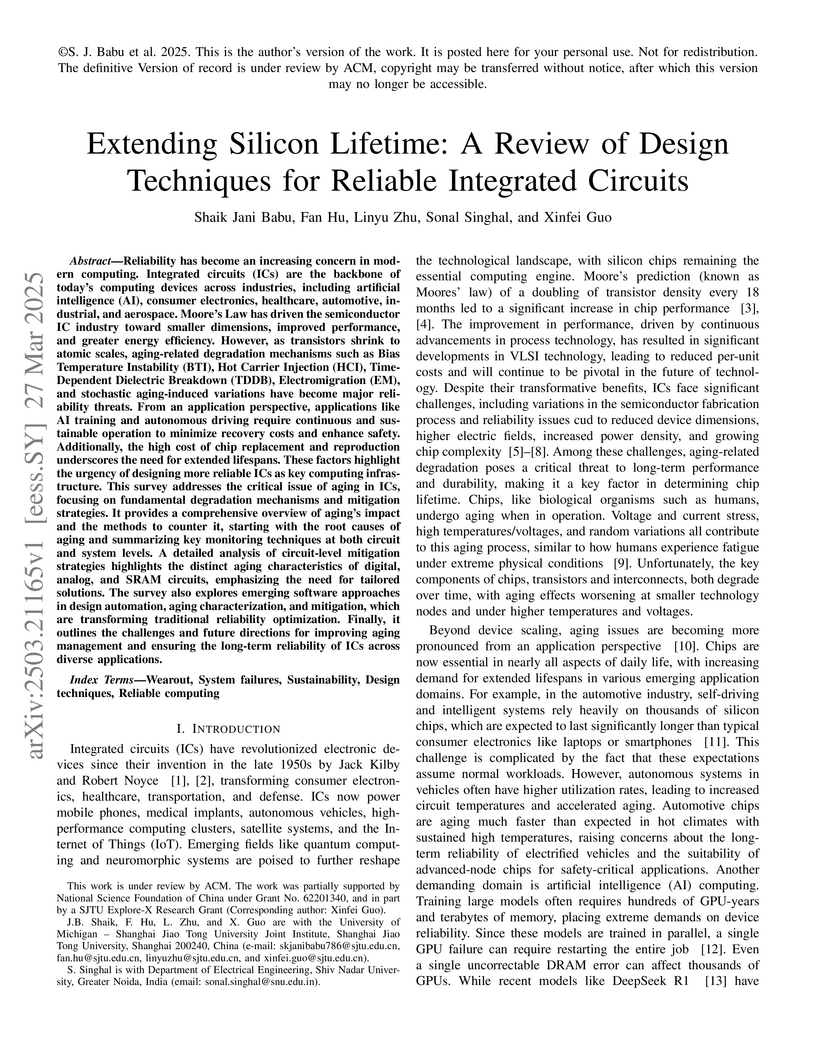

Reliability has become an increasing concern in modern computing. Integrated circuits (ICs) are the backbone of modern computing devices across industries, including artificial intelligence (AI), consumer electronics, healthcare, automotive, industrial, and aerospace. Moore's Law has driven the semiconductor IC industry toward smaller dimensions, improved performance, and greater energy efficiency. However, as transistors shrink to atomic scales, aging-related degradation mechanisms such as Bias Temperature Instability (BTI), Hot Carrier Injection (HCI), Time-Dependent Dielectric Breakdown (TDDB), Electromigration (EM), and stochastic aging-induced variations have become major reliability threats. From an application perspective, workloads such as AI training and autonomous driving require continuous and sustainable operation to minimize recovery costs and enhance safety. Additionally, the high cost of chip replacement and reproduction underscores the need for extended lifespans. These factors highlight the urgency of designing more reliable ICs. This survey addresses the critical aging issues in ICs, focusing on fundamental degradation mechanisms and mitigation strategies. It provides a comprehensive overview of aging impact and the methods to counter it, starting with the root causes of aging and summarizing key monitoring techniques at both the circuit and system levels. A detailed analysis of circuit-level mitigation strategies highlights the distinct aging characteristics of digital, analog, and SRAM circuits, emphasizing the need for tailored solutions. The survey also explores emerging software approaches in design automation, aging characterization, and mitigation, which are transforming traditional reliability optimization. Finally, it outlines the challenges and future directions for improving aging management and ensuring the long-term reliability of ICs across diverse applications.
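For electromigration, the classical lifetime model is Black's equation, MTTF = A·J^(-n)·exp(E_a/(k_B T)), where J is the current density, n an empirical exponent and E_a an activation energy. The sketch below evaluates it together with the resulting temperature acceleration factor. The parameter values are illustrative and not calibrated to any technology node, and the survey may discuss other or refined models.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def black_mttf(a_const, j, n, ea_ev, temp_k):
    """Black's equation: MTTF = A * J**(-n) * exp(Ea / (k_B * T))."""
    return a_const * j ** (-n) * math.exp(ea_ev / (K_B_EV * temp_k))


def acceleration_factor(ea_ev, t_use_k, t_stress_k):
    """MTTF(T_use) / MTTF(T_stress) at equal current density."""
    return math.exp(ea_ev / K_B_EV * (1.0 / t_use_k - 1.0 / t_stress_k))


# Illustrative, uncalibrated values: n = 2, Ea = 0.8 eV.
print(acceleration_factor(0.8, 358.0, 423.0))
```

The exponential temperature dependence is why accelerated stress tests at elevated temperature are used to estimate lifetimes at operating conditions.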

This paper applies the recent fast iterative neural network framework Momentum-Net, with appropriate models, to low-dose X-ray computed tomography (LDCT) image reconstruction. At each layer of the proposed Momentum-Net, the model-based image reconstruction module solves the majorized penalized weighted least-squares problem, and the image refining module uses a four-layer convolutional neural network (CNN). Experimental results with the NIH AAPM-Mayo Clinic Low Dose CT Grand Challenge dataset show that the proposed Momentum-Net architecture significantly improves image reconstruction accuracy compared to a state-of-the-art noniterative image denoising deep neural network (NN), WavResNet (in LDCT). We also investigated applying the spectral normalization technique to image-refining NN learning to satisfy the nonexpansive NN property; however, experimental results show that this does not improve the image reconstruction performance of Momentum-Net.
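The alternation described above can be sketched as follows, under clearly stated assumptions. A diagonal separable quadratic surrogate (valid when the system matrix A and the weights are nonnegative) majorizes the weighted least-squares term, the model-based step minimizes that surrogate plus (γ/2)||x - z||² in closed form, and z comes from a refining module. The moving-average refiner, the fixed extrapolation factor and all function names are hypothetical stand-ins for the trained CNN and the layer-wise parameters of Momentum-Net.

```python
import numpy as np


def sqs_diagonal(A, w):
    """Diagonal majorizer of A^T diag(w) A; valid when A >= 0 and w >= 0 elementwise."""
    return A.T @ (w * (A @ np.ones(A.shape[1])))


def mbir_step(x_bar, z, A, w, y, gamma, m):
    """Closed-form minimiser of the majorized PWLS term around x_bar plus (gamma/2)||x - z||^2."""
    grad = A.T @ (w * (A @ x_bar - y))
    return (m * x_bar - grad + gamma * z) / (m + gamma)


def momentum_net_sketch(x0, A, w, y, gamma, refine, n_layers, extrapolation=0.5):
    """Hypothetical unrolled loop: refine, extrapolate, then a model-based update."""
    m = sqs_diagonal(A, w)
    x_prev, x = x0.copy(), x0.copy()
    for _ in range(n_layers):
        z = refine(x)                                   # stand-in for the refining CNN
        x_bar = x + extrapolation * (x - x_prev)        # momentum-style extrapolation
        x_prev, x = x, mbir_step(x_bar, z, A, w, y, gamma, m)
    return x


rng = np.random.default_rng(0)
A = rng.random((30, 10))
w = rng.random(30) + 0.5
y = A @ rng.random(10) + 0.01 * rng.standard_normal(30)
smooth = lambda v: np.convolve(v, np.ones(3) / 3, mode="same")   # toy refiner
x_hat = momentum_net_sketch(np.zeros(10), A, w, y, gamma=0.1, refine=smooth, n_layers=20)
```

For a fixed z and no extrapolation, each `mbir_step` is a majorize-minimize step, so the regularized cost cannot increase.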

16 Mar 2022

The phase field approach is widely used to model fracture behavior because it does not need to track the crack topology and can predict crack nucleation and branching. In this work, the asynchronous variational integrator (AVI) is adapted to the phase field approach for dynamic brittle fracture. The AVI is derived from Hamilton's principle and allows each element in the mesh to have its own local time step, which may differ from those of other elements. While the displacement field is updated explicitly, the phase field is solved implicitly, with upper and lower bounds strictly and conveniently enforced. In particular, the AT1 and AT2 variants are equally easy to implement. Three benchmark problems are used to study the performance of both the AT1 and AT2 models, and the results show that the AVI for the phase field approach significantly improves computational efficiency and successfully captures the complicated dynamic fracture behavior.
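The asynchronous idea can be illustrated without the phase field on a one-dimensional chain of linear springs: each spring (element) has its own time step and applies impulses to its two nodes at its own event times, while nodes drift at constant velocity between impulses. The sketch below is a minimal example under these assumptions (unit masses, hypothetical step sizes, first impulses at t = 0), not the paper's implementation. Because each impulse is equal and opposite, total momentum is conserved up to round-off, and the energy stays close to its initial value for small steps.

```python
import heapq
import numpy as np


def avi_spring_chain(x0, v0, masses, k, rest, dts, t_end):
    """Asynchronous time stepping for a 1D chain of linear springs.

    Spring e connects nodes e and e+1 and has its own step dts[e]. Nodes drift
    with constant velocity between the impulses of their adjacent springs.
    """
    x = np.array(x0, dtype=float)
    v = np.array(v0, dtype=float)
    t_node = np.zeros(len(x))                  # last time each node's position was updated
    events = [(0.0, e) for e in range(len(dts))]
    heapq.heapify(events)
    while events and events[0][0] <= t_end:
        t, e = heapq.heappop(events)
        a, b = e, e + 1
        for n in (a, b):                       # drift the element's nodes to time t
            x[n] += v[n] * (t - t_node[n])
            t_node[n] = t
        s = k * (x[b] - x[a] - rest)           # spring tension
        v[a] += dts[e] * s / masses[a]         # impulse = local step * force
        v[b] -= dts[e] * s / masses[b]
        heapq.heappush(events, (t + dts[e], e))
    x += v * (t_end - t_node)                  # synchronise all nodes at t_end
    return x, v


def chain_energy(x, v, masses, k, rest):
    stretch = np.diff(x) - rest
    return 0.5 * np.sum(masses * v**2) + 0.5 * k * np.sum(stretch**2)


masses = np.ones(4)
x0 = np.array([0.0, 1.2, 2.0, 3.1])
v0 = np.zeros(4)
x, v = avi_spring_chain(x0, v0, masses, k=1.0, rest=1.0, dts=[0.005, 0.007, 0.009], t_end=5.0)
e0 = chain_energy(x0, v0, masses, 1.0, 1.0)
e1 = chain_energy(x, v, masses, 1.0, 1.0)
```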

03 Sep 2019

We demonstrate that photoacoustic microscopy (PAM) could serve as a novel imaging tool to investigate Li metal dendrite growth, a critical issue that can lead to short circuits and even explosion of Li metal batteries. Our results suggest several advantages of PAM imaging of Li metal batteries: high resolution (micrometers), 3D imaging capability, deep penetration in a separator, and high contrast from bulk Li metal. Furthermore, PAM has potential for in situ, real-time imaging of Li metal batteries.

19 Oct 2017

Dual energy CT (DECT) enhances tissue characterization because it can produce images of basis materials such as soft tissue and bone. DECT is of great interest in medical imaging, security inspection, and nondestructive testing. Theoretically, two materials with different linear attenuation coefficients can be accurately reconstructed using the DECT technique. However, the ability to reconstruct three or more basis materials is clinically and industrially important. Under the assumption that there are at most three materials in each pixel, a few methods estimate multiple material images from DECT measurements by enforcing a sum-to-one constraint and a box constraint ([0, 1]) derived from both volume and mass conservation assumptions. The recently proposed image-domain multi-material decomposition (MMD) method introduces edge-preserving regularization for each material image, which neglects the relations among material images, and enforces the assumption that there are at most three materials in each pixel using a time-consuming loop over all possible material triplets in each iteration of optimizing its cost function. We propose a new image-domain MMD method for DECT that considers the prior information that different material images have common edges and encourages sparsity of material composition in each pixel using regularization.
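Together, the sum-to-one and box constraints define the probability simplex, and one common way to enforce them per pixel is Euclidean projection onto that simplex. The sketch below uses the well-known sort-based projection, vectorized over pixels (one row per pixel, one column per material). It illustrates the constraint set only. It is not the proposed MMD method or its regularizers, and it does not enforce the at-most-three-materials assumption.

```python
import numpy as np


def project_rows_to_simplex(v):
    """Euclidean projection of each row of v onto {x : x >= 0, sum(x) = 1}.

    Sort-based algorithm; enforces the sum-to-one and [0, 1] box constraints jointly.
    """
    v = np.atleast_2d(np.asarray(v, dtype=float))
    n = v.shape[1]
    u = -np.sort(-v, axis=1)                       # each row sorted in decreasing order
    css = np.cumsum(u, axis=1) - 1.0
    idx = np.arange(1, n + 1)
    cond = u - css / idx > 0                       # true on a prefix of each row
    rho = cond.sum(axis=1) - 1                     # last index where cond holds
    theta = css[np.arange(v.shape[0]), rho] / (rho + 1)
    return np.maximum(v - theta[:, None], 0.0)


fractions = project_rows_to_simplex([[0.5, 0.5, 0.5], [2.0, 0.0, 0.0], [-1.0, 0.4, 2.0]])
```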

Decision support systems (e.g., for ecological conservation) and autonomous systems (e.g., adaptive controllers in smart cities) are starting to be deployed in real applications. Although their operations often impact many users or stakeholders, fairness is generally not taken into account in their design, which could lead to completely unfair outcomes for some users or stakeholders. To tackle this issue, we advocate the use of social welfare functions that encode fairness and present this novel, general problem in the context of (deep) reinforcement learning, although it could possibly be extended to other machine learning tasks.
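As one concrete example of a social welfare function that encodes fairness, the generalized Gini social welfare function sorts the users' utilities in increasing order and applies strictly decreasing positive weights, so worse-off users count more. The sketch below is illustrative. The weights are hypothetical, and in an RL setting the utilities would be, for instance, per-stakeholder expected returns.

```python
import numpy as np


def generalized_gini_welfare(utilities, weights):
    """Generalized Gini social welfare function.

    Utilities are sorted in increasing order and combined with strictly decreasing
    positive weights, so improving the worst-off user raises welfare the most.
    """
    u = np.sort(np.asarray(utilities, dtype=float))
    w = np.asarray(weights, dtype=float)
    return float(u @ w)


weights = [1.0, 0.5, 0.25]                             # hypothetical weights
print(generalized_gini_welfare([3, 1, 2], weights))   # 2.75
print(generalized_gini_welfare([2, 2, 2], weights))   # 3.5
```

Both utility vectors have the same total (6), so a plain utilitarian sum cannot distinguish them, whereas this welfare function prefers the equal allocation.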

Signal models based on sparse representations have received considerable attention in recent years. On the other hand, deep models consisting of a cascade of functional layers, commonly known as deep neural networks, have been highly successful for the task of object classification and have been recently introduced to image reconstruction. In this work, we develop a new image reconstruction approach based on a novel multi-layer model learned in an unsupervised manner by combining both sparse representations and deep models. The proposed framework extends the classical sparsifying transform model for images to a Multi-lAyer Residual Sparsifying transform (MARS) model, wherein the transform domain data are jointly sparsified over layers. We investigate the application of MARS models learned from limited regular-dose images for low-dose CT reconstruction using Penalized Weighted Least Squares (PWLS) optimization. We propose new formulations for multi-layer transform learning and image reconstruction. We derive an efficient block coordinate descent algorithm to learn the transforms across layers, in an unsupervised manner from limited regular-dose images. The learned model is then incorporated into the low-dose image reconstruction phase. Low-dose CT experimental results with both the XCAT phantom and Mayo Clinic data show that the MARS model outperforms conventional methods such as FBP and PWLS methods based on the edge-preserving (EP) regularizer in terms of two numerical metrics (RMSE and SSIM) and noise suppression. Compared with the single-layer learned transform (ST) model, the MARS model performs better in maintaining some subtle details.
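The layer-wise structure of a multi-layer residual sparsifying transform can be sketched as follows, assuming hard-thresholding sparse coding. Layer l applies W_l to its input R_l, keeps the coefficients above a threshold as the sparse code Z_l, and passes the residual W_l R_l - Z_l to the next layer. Random orthonormal matrices stand in for learned transforms here, and the transform learning and PWLS reconstruction are not shown.

```python
import numpy as np


def hard_threshold(a, gamma):
    """Keep entries with |a| >= gamma, zero the rest."""
    return a * (np.abs(a) >= gamma)


def mars_sparse_codes(patches, transforms, gammas):
    """Residual sparsification across layers: Z_l = H(W_l R_l), R_{l+1} = W_l R_l - Z_l."""
    residual = patches                      # R_1: vectorized image patches (columns)
    codes, residuals = [], [patches]
    for W, gamma in zip(transforms, gammas):
        coeffs = W @ residual
        Z = hard_threshold(coeffs, gamma)
        residual = coeffs - Z               # what this layer failed to sparsify
        codes.append(Z)
        residuals.append(residual)
    return codes, residuals


rng = np.random.default_rng(0)
patches = rng.standard_normal((16, 50))     # 16-pixel patches, 50 of them
transforms = [np.linalg.qr(rng.standard_normal((16, 16)))[0] for _ in range(2)]
codes, residuals = mars_sparse_codes(patches, transforms, gammas=[0.5, 0.3])
```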

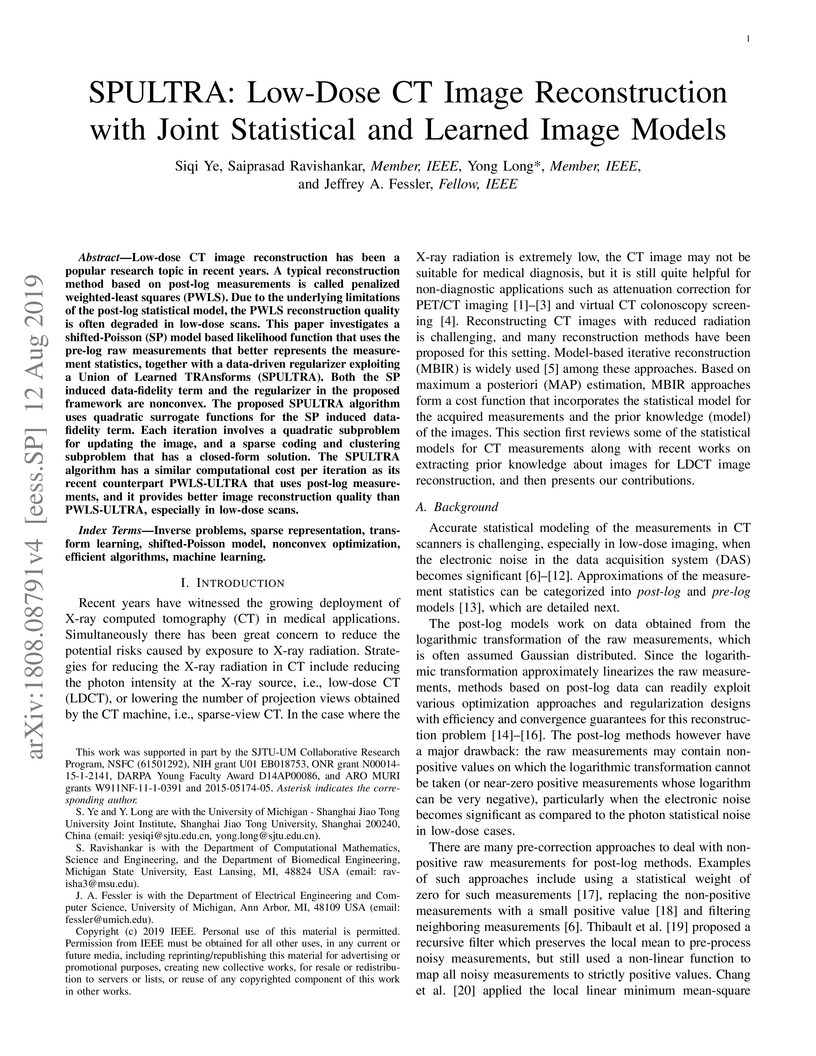

Low-dose CT image reconstruction has been a popular research topic in recent years. A typical reconstruction method based on post-log measurements is called penalized weighted least squares (PWLS). Due to the underlying limitations of the post-log statistical model, PWLS reconstruction quality is often degraded in low-dose scans. This paper investigates a shifted-Poisson (SP) model based likelihood function that uses the pre-log raw measurements, which better represents the measurement statistics, together with a data-driven regularizer exploiting a Union of Learned TRAnsforms (SPULTRA). Both the SP-induced data-fidelity term and the regularizer in the proposed framework are nonconvex. The proposed SPULTRA algorithm uses quadratic surrogate functions for the SP-induced data-fidelity term. Each iteration involves a quadratic subproblem for updating the image, and a sparse coding and clustering subproblem that has a closed-form solution. The SPULTRA algorithm has a computational cost per iteration similar to that of its recent counterpart PWLS-ULTRA, which uses post-log measurements, and it provides better image reconstruction quality than PWLS-ULTRA, especially in low-dose scans.
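For reference, the shifted-Poisson model treats y_i + σ² as Poisson with mean I0·exp(-l_i) + σ², where l_i = [Ax]_i is the line integral, I0 the incident intensity and σ² the electronic-noise variance. The sketch below evaluates the corresponding negative log-likelihood (up to constants) and its derivative with respect to l. It omits the quadratic surrogates and the ULTRA regularizer, and the numbers are illustrative. The derivative vanishes where I0·exp(-l) = y.

```python
import numpy as np


def sp_neg_log_likelihood(l, y, i0, sigma2):
    """Shifted-Poisson negative log-likelihood (up to constants) for line integrals l.

    Model: y + sigma2 ~ Poisson(i0 * exp(-l) + sigma2), elementwise.
    """
    ybar = i0 * np.exp(-l) + sigma2
    return np.sum(ybar - (y + sigma2) * np.log(ybar))


def sp_gradient(l, y, i0, sigma2):
    """Elementwise derivative of the SP negative log-likelihood with respect to l."""
    s = i0 * np.exp(-l)
    return s * ((y + sigma2) / (s + sigma2) - 1.0)


l = np.array([2.0, 3.0])
y = np.array([500.0, 400.0])
i0, sigma2 = 1.0e4, 25.0
print(sp_neg_log_likelihood(l, y, i0, sigma2), sp_gradient(l, y, i0, sigma2))
```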
