University of Peradeniya

Semantic segmentation has a broad range of applications in a variety of domains including land coverage analysis, autonomous driving, and medical image analysis. Convolutional neural networks (CNN) and Vision Transformers (ViTs) provide the architecture models for semantic segmentation. Even though ViTs have proven success in image classification, they cannot be directly applied to dense prediction tasks such as image segmentation and object detection since ViT is not a general purpose backbone due to its patch partitioning scheme. In this survey, we discuss some of the different ViT architectures that can be used for semantic segmentation and how their evolution managed the above-stated challenge. The rise of ViT and its performance with a high success rate motivated the community to slowly replace the traditional convolutional neural networks in various computer vision tasks. This survey aims to review and compare the performances of ViT architectures designed for semantic segmentation using benchmarking datasets. This will be worthwhile for the community to yield knowledge regarding the implementations carried out in semantic segmentation and to discover more efficient methodologies using ViTs.

Periodontitis, a chronic inflammatory disease causing alveolar bone loss, significantly affects oral health and quality of life. Accurate assessment of bone loss severity and pattern is critical for diagnosis and treatment planning. In this study, we propose a novel AI-based deep learning framework to automatically detect and quantify alveolar bone loss and its patterns using intraoral periapical (IOPA) radiographs. Our method combines YOLOv8 for tooth detection with Keypoint R-CNN models to identify anatomical landmarks, enabling precise calculation of bone loss severity. Additionally, YOLOv8x-seg models segment bone levels and tooth masks to determine bone loss patterns (horizontal vs. angular) via geometric analysis. Evaluated on a large, expertly annotated dataset of 1000 radiographs, our approach achieved high accuracy in detecting bone loss severity (intra-class correlation coefficient up to 0.80) and bone loss pattern classification (accuracy 87%). This automated system offers a rapid, objective, and reproducible tool for periodontal assessment, reducing reliance on subjective manual evaluation. By integrating AI into dental radiographic analysis, our framework has the potential to improve early diagnosis and personalized treatment planning for periodontitis, ultimately enhancing patient care and clinical outcomes.

17 Oct 2025

The clinical adoption of biomedical vision-language models is hindered by prompt optimization techniques that produce either uninterpretable latent vectors or single textual prompts. This lack of transparency and failure to capture the multi-faceted nature of clinical diagnosis, which relies on integrating diverse observations, limits their trustworthiness in high-stakes settings. To address this, we introduce BiomedXPro, an evolutionary framework that leverages a large language model as both a biomedical knowledge extractor and an adaptive optimizer to automatically generate a diverse ensemble of interpretable, natural-language prompt pairs for disease diagnosis. Experiments on multiple biomedical benchmarks show that BiomedXPro consistently outperforms state-of-the-art prompt-tuning methods, particularly in data-scarce few-shot settings. Furthermore, our analysis demonstrates a strong semantic alignment between the discovered prompts and statistically significant clinical features, grounding the model's performance in verifiable concepts. By producing a diverse ensemble of interpretable prompts, BiomedXPro provides a verifiable basis for model predictions, representing a critical step toward the development of more trustworthy and clinically-aligned AI systems.

Denoising Diffusion Probabilistic Models (DDPMs) have accomplished much in

the realm of generative AI. With the tremendous level of popularity the

Generative AI algorithms have achieved, the demand for higher levels of

performance continues to increase. Under this backdrop, careful scrutinization

of algorithm performance under sample fidelity type measures is essential to

ascertain how, effectively, the underlying structures of the data distribution

were learned. In this context, minimizing the mean squared error between the

additive and predicted noise alone does not impose structural integrity

constraints on the predicted noise, for instance, isotropic. Under this

premise, we were motivated to utilize the isotropy of the additive noise as a

constraint on the objective function to enhance the fidelity of DDPMs. Our

approach is simple and can be applied to any DDPM variant. We validate our

approach by presenting experiments conducted on four synthetic 2D datasets as

well as on unconditional image generation. As demonstrated by the results, the

incorporation of this constraint improves the fidelity metrics, Precision and

Density, and the results clearly indicate how the structural imposition was

effective.

Holistic understanding and reasoning in 3D scenes are crucial for the success

of autonomous driving systems. The evolution of 3D semantic occupancy

prediction as a pretraining task for autonomous driving and robotic

applications captures finer 3D details compared to traditional 3D detection

methods. Vision-based 3D semantic occupancy prediction is increasingly

overlooked in favor of LiDAR-based approaches, which have shown superior

performance in recent years. However, we present compelling evidence that there

is still potential for enhancing vision-based methods. Existing approaches

predominantly focus on spatial cues such as tri-perspective view (TPV)

embeddings, often overlooking temporal cues. This study introduces S2TPVFormer,

a spatiotemporal transformer architecture designed to predict temporally

coherent 3D semantic occupancy. By introducing temporal cues through a novel

Temporal Cross-View Hybrid Attention mechanism (TCVHA), we generate

Spatiotemporal TPV (S2TPV) embeddings that enhance the prior process.

Experimental evaluations on the nuScenes dataset demonstrate a significant

+4.1% of absolute gain in mean Intersection over Union (mIoU) for 3D semantic

occupancy compared to baseline TPVFormer, validating the effectiveness of

S2TPVFormer in advancing 3D scene perception.

Semantic Change Detection (SCD) from remote sensing imagery requires models balancing extensive spatial context, computational efficiency, and sensitivity to class-imbalanced land-cover transitions. While Convolutional Neural Networks excel at local feature extraction but lack global context, Transformers provide global modeling at high computational costs. Recent Mamba architectures based on state-space models offer compelling solutions through linear complexity and efficient long-range modeling. In this study, we introduce Mamba-FCS, a SCD framework built upon Visual State Space Model backbone incorporating, a Joint Spatio-Frequency Fusion block incorporating log-amplitude frequency domain features to enhance edge clarity and suppress illumination artifacts, a Change-Guided Attention (CGA) module that explicitly links the naturally intertwined BCD and SCD tasks, and a Separated Kappa (SeK) loss tailored for class-imbalanced performance optimization. Extensive evaluation on SECOND and Landsat-SCD datasets shows that Mamba-FCS achieves state-of-the-art metrics, 88.62% Overall Accuracy, 65.78% F_scd, and 25.50% SeK on SECOND, 96.25% Overall Accuracy, 89.27% F_scd, and 60.26% SeK on Landsat-SCD. Ablation analyses confirm distinct contributions of each novel component, with qualitative assessments highlighting significant improvements in SCD. Our results underline the substantial potential of Mamba architectures, enhanced by proposed techniques, setting a new benchmark for effective and scalable semantic change detection in remote sensing applications. The complete source code, configuration files, and pre-trained models will be publicly available upon publication.

Large Language Models are increasingly being used for various tasks including

content generation and as chatbots. Despite their impressive performances in

general tasks, LLMs need to be aligned when applying for domain specific tasks

to mitigate the problems of hallucination and producing harmful answers.

Retrieval Augmented Generation (RAG) allows to easily attach and manipulate a

non-parametric knowledgebases to LLMs. Applications of RAG in the field of

medical education are discussed in this paper. A combined extractive and

abstractive summarization method for large unstructured textual data using

representative vectors is proposed.

Synthetic network traffic generation has emerged as a promising alternative for various data-driven applications in the networking domain. It enables the creation of synthetic data that preserves real-world characteristics while addressing key challenges such as data scarcity, privacy concerns, and purity constraints associated with real data. In this survey, we provide a comprehensive review of synthetic network traffic generation approaches, covering essential aspects such as data types, generation models, and evaluation methods. With the rapid advancements in AI and machine learning, we focus particularly on deep learning-based techniques while also providing a detailed discussion of statistical methods and their extensions, including commercially available tools. Furthermore, we highlight open challenges in this domain and discuss potential future directions for further research and development. This survey serves as a foundational resource for researchers and practitioners, offering a structured analysis of existing methods, challenges, and opportunities in synthetic network traffic generation.

06 Oct 2025

In this letter, we investigate the design of chaotic signal-based transmit waveforms in a multi-functional reconfigurable intelligent surface (MF-RIS)-aided set-up for simultaneous wireless information and power transfer. We propose a differential chaos shift keying-based MF-RIS-aided set-up, where the MF-RIS is partitioned into three non-overlapping surfaces. The elements of the first sub-surface perform energy harvesting (EH), which in turn, provide the required power to the other two sub-surfaces responsible for transmission and reflection of the incident signal. By considering a frequency selective scenario and a realistic EH model, we characterize the chaotic MF-RIS-aided system in terms of its EH performance and the associated bit error rate. Thereafter, we characterize the harvested energy-bit error rate trade-off and derive a lower bound on the number of elements required to operate in the EH mode. Accordingly, we propose novel transmit waveform designs to demonstrate the importance of the choice of appropriate system parameters in the context of achieving self-sustainability.

Group activity detection in multi-person scenes is challenging due to complex human interactions, occlusions, and variations in appearance over time. This work presents a computer vision based framework for group activity recognition and action spotting using a combination of deep learning models and graph based relational reasoning. The system first applies Mask R-CNN to obtain accurate actor localization through bounding boxes and instance masks. Multiple backbone networks, including Inception V3, MobileNet, and VGG16, are used to extract feature maps, and RoIAlign is applied to preserve spatial alignment when generating actor specific features. The mask information is then fused with the feature maps to obtain refined masked feature representations for each actor. To model interactions between individuals, we construct Actor Relation Graphs that encode appearance similarity and positional relations using methods such as normalized cross correlation, sum of absolute differences, and dot product. Graph Convolutional Networks operate on these graphs to reason about relationships and predict both individual actions and group level activities. Experiments on the Collective Activity dataset demonstrate that the combination of mask based feature refinement, robust similarity search, and graph neural network reasoning leads to improved recognition performance across both crowded and non crowded scenarios. This approach highlights the potential of integrating segmentation, feature extraction, and relational graph reasoning for complex video understanding tasks.

Multiple string matching is known as locating all the occurrences of a given number of patterns in an arbitrary string. It is used in bio-computing applications where the algorithms are commonly used for retrieval of information such as sequence analysis and gene/protein identification. Extremely large amount of data in the form of strings has to be processed in such bio-computing applications. Therefore, improving the performance of multiple string matching algorithms is always desirable. Multicore architectures are capable of providing better performance by parallelizing the multiple string matching algorithms. The Aho-Corasick algorithm is the one that is commonly used in exact multiple string matching algorithms. The focus of this paper is the acceleration of Aho-Corasick algorithm through a multicore CPU based software implementation. Through our implementation and evaluation of results, we prove that our method performs better compared to the state of the art.

Damage Assessment after Natural Disasters with UAVs: Semantic Feature Extraction using Deep Learning

Damage Assessment after Natural Disasters with UAVs: Semantic Feature Extraction using Deep Learning

Unmanned aerial vehicle-assisted disaster recovery missions have been promoted recently due to their reliability and flexibility. Machine learning algorithms running onboard significantly enhance the utility of UAVs by enabling real-time data processing and efficient decision-making, despite being in a resource-constrained environment. However, the limited bandwidth and intermittent connectivity make transmitting the outputs to ground stations challenging. This paper proposes a novel semantic extractor that can be adopted into any machine learning downstream task for identifying the critical data required for decision-making. The semantic extractor can be executed onboard which results in a reduction of data that needs to be transmitted to ground stations. We test the proposed architecture together with the semantic extractor on two publicly available datasets, FloodNet and RescueNet, for two downstream tasks: visual question answering and disaster damage level classification. Our experimental results demonstrate the proposed method maintains high accuracy across different downstream tasks while significantly reducing the volume of transmitted data, highlighting the effectiveness of our semantic extractor in capturing task-specific salient information.

Tuberculosis (TB) is a contagious bacterial airborne disease, and is one of the top 10 causes of death worldwide. According to the World Health Organization (WHO), around 1.8 billion people are infected with TB and 1.6 million deaths were reported in 2018. More importantly,95% of cases and deaths were from developing countries. Yet, TB is a completely curable disease through early diagnosis. To achieve this goal one of the key requirements is efficient utilization of existing diagnostic technologies, among which chest X-ray is the first line of diagnostic tool used for screening for active TB. The presented deep learning pipeline consists of three different state of the art deep learning architectures, to generate, segment and classify lung X-rays. Apart from this image preprocessing, image augmentation, genetic algorithm based hyper parameter tuning and model ensembling were used to to improve the diagnostic process. We were able to achieve classification accuracy of 97.1% (Youden's index-0.941,sensitivity of 97.9% and specificity of 96.2%) which is a considerable improvement compared to the existing work in the literature. In our work, we present an highly accurate, automated TB screening system using chest X-rays, which would be helpful especially for low income countries with low access to qualified medical professionals.

Researchers at the University of Peradeniya developed BandRC, a novel activation function for Implicit Neural Representations that utilizes a frequency-shifted raised cosine filter and adaptive parameter adjustment. This approach effectively mitigates spectral bias and enhances robustness to noise, achieving state-of-the-art performance across multiple computer vision inverse problems.

04 Oct 2023

Multispectral imaging coupled with Artificial Intelligence, Machine Learning

and Signal Processing techniques work as a feasible alternative for laboratory

testing, especially in food quality control. Most of the recent related

research has been focused on reflectance multispectral imaging but a system

with both reflectance, transmittance capabilities would be ideal for a wide

array of specimen types including solid and liquid samples. In this paper, a

device which includes a dedicated reflectance mode and a dedicated

transmittance mode is proposed. Dual mode operation where fast switching

between two modes is facilitated. An innovative merged mode is introduced in

which both reflectance and transmittance information of a specimen are combined

to form a higher dimensional dataset with more features. Spatial and temporal

variations of measurements are analyzed to ensure the quality of measurements.

The concept is validated using a standard color palette and specific case

studies are done for standard food samples such as turmeric powder and coconut

oil proving the validity of proposed contributions. The classification accuracy

of standard color palette testing was over 90% and the accuracy of coconut oil

adulteration was over 95%. while the merged mode was able to provide the best

accuracy of 99% for the turmeric adulteration. A linear functional mapping was

done for coconut oil adulteration with an R2 value of 0.9558.

Tuberculosis (TB) is a contagious bacterial airborne disease, and is one of the top 10 causes of death worldwide. According to the World Health Organization (WHO), around 1.8 billion people are infected with TB and 1.6 million deaths were reported in 2018. More importantly,95% of cases and deaths were from developing countries. Yet, TB is a completely curable disease through early diagnosis. To achieve this goal one of the key requirements is efficient utilization of existing diagnostic technologies, among which chest X-ray is the first line of diagnostic tool used for screening for active TB. The presented deep learning pipeline consists of three different state of the art deep learning architectures, to generate, segment and classify lung X-rays. Apart from this image preprocessing, image augmentation, genetic algorithm based hyper parameter tuning and model ensembling were used to to improve the diagnostic process. We were able to achieve classification accuracy of 97.1% (Youden's index-0.941,sensitivity of 97.9% and specificity of 96.2%) which is a considerable improvement compared to the existing work in the literature. In our work, we present an highly accurate, automated TB screening system using chest X-rays, which would be helpful especially for low income countries with low access to qualified medical professionals.

13 Dec 2024

The study of biomechanics during locomotion provides valuable insights into

the effects of varying conditions on specific movement patterns. This research

focuses on examining the influence of different shoe parameters on walking

biomechanics, aiming to understand their impact on gait patterns. To achieve

this, various methodologies are explored to estimate human body biomechanics,

including computer vision techniques and wearable devices equipped with

advanced sensors. Given privacy considerations and the need for robust,

accurate measurements, this study employs wearable devices with Inertial

Measurement Unit (IMU) sensors. These devices offer a non-invasive, precise,

and high-resolution approach to capturing biomechanical data during locomotion.

Raw sensor data collected from wearable devices is processed using an Extended

Kalman Filter to reduce noise and extract meaningful information. This includes

calculating joint angles throughout the gait cycle, enabling a detailed

analysis of movement dynamics. The analysis identifies correlations between

shoe parameters and key gait characteristics, such as stability, mobility, step

time, and propulsion forces. The findings provide deeper insights into how

footwear design influences walking efficiency and biomechanics. This study

paves the way for advancements in footwear technology and contributes to the

development of personalized solutions for enhancing gait performance and

mobility.

02 Nov 2017

Non-orthogonal multiple access (NOMA) is an interesting concept to provide

higher capacity for future wireless communications. In this article, we

consider the feasibility and benefits of combining full-duplex operation with

NOMA for modern communication systems. Specifically, we provide a comprehensive

overview on application of full-duplex NOMA in cellular networks, cooperative

and cognitive radio networks, and characterize gains possible due to

full-duplex operation. Accordingly, we discuss challenges, particularly the

self-interference and inter-user interference and provide potential solutions

to interference mitigation and quality-of-service provision based on

beamforming, power control, and link scheduling. We further discuss future

research challenges and interesting directions to pursue to bring full-duplex

NOMA into maturity and use in practice.

27 Oct 2021

With the expansion of internet-based platforms, social media and sharing economy, most individuals are tempted to review products or services that they consume.

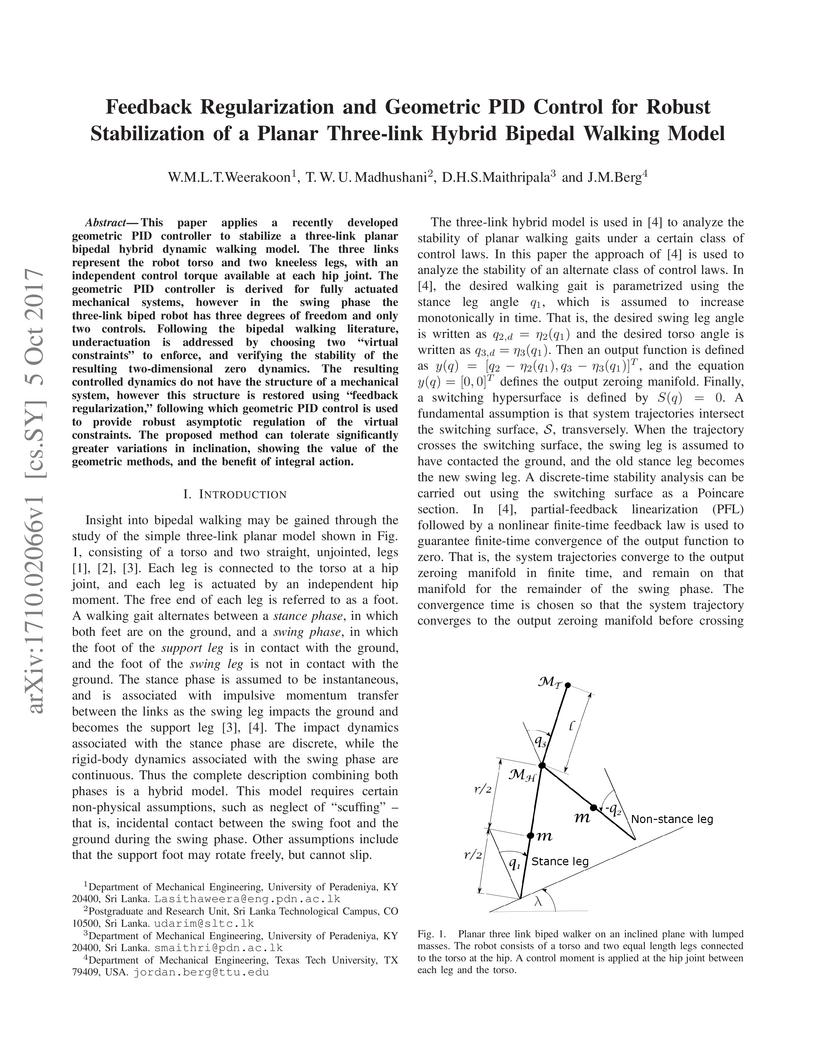

This paper applies a recently developed geometric PID controller to stabilize a three-link planar bipedal hybrid dynamic walking model. The three links represent the robot torso and two kneeless legs, with an independent control torque available at each hip joint. The geometric PID controller is derived for fully actuated mechanical systems, however in the swing phase the three-link biped robot has three degrees of freedom and only two controls. Following the bipedal walking literature, underactuation is addressed by choosing two "virtual constraints" to enforce, and verifying the stability of the resulting two-dimensional zero dynamics. The resulting controlled dynamics do not have the structure of a mechanical system, however this structure is restored using "feedback regularization," following which geometric PID control is used to provide robust asymptotic regulation of the virtual constraints. The proposed method can tolerate significantly greater variations in inclination, showing the value of the geometric methods, and the benefit of integral action.

There are no more papers matching your filters at the moment.