University of San Francisco

The natural world is full of complex systems characterized by intricate relations between their components: from social interactions between individuals in a social network to electrostatic interactions between atoms in a protein. Topological Deep Learning (TDL) provides a comprehensive framework to process and extract knowledge from data associated with these systems, such as predicting the social community to which an individual belongs or predicting whether a protein can be a reasonable target for drug development. TDL has demonstrated theoretical and practical advantages that hold the promise of breaking ground in the applied sciences and beyond. However, the rapid growth of the TDL literature for relational systems has also led to a lack of unification in notation and language across message-passing Topological Neural Network (TNN) architectures. This presents a real obstacle for building upon existing works and for deploying message-passing TNNs to new real-world problems. To address this issue, we provide an accessible introduction to TDL for relational systems, and compare the recently published message-passing TNNs using a unified mathematical and graphical notation. Through an intuitive and critical review of the emerging field of TDL, we extract valuable insights into current challenges and exciting opportunities for future development.
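The following is a minimal sketch of what higher-order message passing can look like. It is written in plain NumPy and does not reproduce any particular TNN from the review. Features flow from nodes to edges, back to nodes, and up to a 2-cell (a filled triangle) through incidence matrices. The toy complex, the sum aggregation, the ReLU nonlinearity, and the random weights `W_ve`, `W_ev`, `W_ef` are all illustrative assumptions.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

# Hypothetical toy complex: 4 nodes, 4 edges, and one filled triangle (0, 1, 2).
nodes = [0, 1, 2, 3]
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
faces = [(0, 1, 2)]

# Unsigned node-edge incidence (|V| x |E|): B1[v, j] = 1 if node v is an endpoint of edge j.
B1 = np.zeros((len(nodes), len(edges)))
for j, (u, v) in enumerate(edges):
    B1[u, j] = B1[v, j] = 1.0

# Unsigned edge-face incidence (|E| x |F|): B2[j, k] = 1 if edge j lies on face k.
B2 = np.zeros((len(edges), len(faces)))
for k, face in enumerate(faces):
    for j, (u, v) in enumerate(edges):
        if u in face and v in face:
            B2[j, k] = 1.0

rng = np.random.default_rng(0)
X_v = rng.normal(size=(len(nodes), 3))  # node features, 3 channels
W_ve = rng.normal(size=(3, 4))          # node -> edge weights (hypothetical)
W_ev = rng.normal(size=(4, 3))          # edge -> node weights (hypothetical)
W_ef = rng.normal(size=(4, 2))          # edge -> face weights (hypothetical)

# Each edge sums the transformed features of its two endpoints.
X_e = relu(B1.T @ X_v @ W_ve)           # shape (|E|, 4)
# Each node sums messages from its incident edges.
X_v_new = relu(B1 @ X_e @ W_ev)         # shape (|V|, 3)
# Each face sums messages from its boundary edges: a step plain graph message passing lacks.
X_f = relu(B2.T @ X_e @ W_ef)           # shape (|F|, 2)

print(X_e.shape, X_v_new.shape, X_f.shape)  # (4, 4) (4, 3) (1, 2)
```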

Universal Language Model Fine-tuning (ULMFiT) proposes a robust transfer learning method for NLP by fine-tuning a pretrained language model using specialized techniques such as discriminative fine-tuning and gradual unfreezing. This approach enables deep learning models to achieve state-of-the-art performance across various text classification tasks with significantly less labeled data.
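The two techniques named above can be sketched in a few lines of plain Python. This sketch rests on a few assumptions. Layers are indexed from 0 (closest to the input) to `n_layers - 1` (the top layer). The divisor 2.6 between adjacent layers is the value suggested in the ULMFiT paper and is used here only as a default. One additional layer is unfrozen per epoch. The function names are hypothetical. In a PyTorch setup, each layer's rate would go into its own optimizer parameter group.

```python
def discriminative_lrs(top_lr, n_layers, factor=2.6):
    """Per-layer learning rates; index 0 is the lowest layer, index -1 the top layer.
    Each layer gets the rate of the layer above it divided by `factor`."""
    return [top_lr / factor ** (n_layers - 1 - i) for i in range(n_layers)]

def gradual_unfreezing_schedule(n_layers, n_epochs):
    """Trainable layer indices per epoch: start with the top layer only,
    then unfreeze one more layer (moving downward) at each new epoch."""
    schedule = []
    for epoch in range(n_epochs):
        n_trainable = min(epoch + 1, n_layers)
        schedule.append(list(range(n_layers - n_trainable, n_layers)))
    return schedule

lrs = discriminative_lrs(0.01, n_layers=4)
for layer, lr in enumerate(lrs):
    print(f"layer {layer}: lr = {lr:.6f}")
print(gradual_unfreezing_schedule(n_layers=4, n_epochs=5))
# [[3], [2, 3], [1, 2, 3], [0, 1, 2, 3], [0, 1, 2, 3]]
```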

This paper is an attempt to explain all the matrix calculus you need in order to understand the training of deep neural networks. We assume no math knowledge beyond what you learned in calculus 1, and provide links to help you refresh the necessary math where needed. Note that you do not need to understand this material before you start learning to train and use deep learning in practice; rather, this material is for those who are already familiar with the basics of neural networks and wish to deepen their understanding of the underlying math. Don't worry if you get stuck at some point along the way; just go back and reread the previous section, and try writing down and working through some examples. And if you're still stuck, we're happy to answer your questions in the Theory category at forums.fast.ai. Note: There is a reference section at the end of the paper summarizing all the key matrix calculus rules and terminology discussed here. See related articles at http://explained.ai
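The sketch below is a small worked example of the kind of derivative the paper builds toward. It differentiates a single ReLU neuron, max(0, w·x + b), with respect to its weight vector using the chain rule. It then checks the result against central finite differences. The function names and the sample values of `w`, `x`, and `b` are illustrative.

```python
import numpy as np

def neuron(w, b, x):
    # Single ReLU unit: max(0, w.x + b)
    return max(0.0, float(np.dot(w, x) + b))

def grad_w_analytic(w, b, x):
    # Chain rule with z = w.x + b: d max(0, z)/dz is 1 for z > 0 (else 0), and dz/dw = x.
    z = np.dot(w, x) + b
    return x.copy() if z > 0 else np.zeros_like(x)

def grad_w_numeric(w, b, x, eps=1e-6):
    # Central finite differences, perturbing one coordinate of w at a time.
    g = np.zeros_like(w)
    for i in range(len(w)):
        step = np.zeros_like(w)
        step[i] = eps
        g[i] = (neuron(w + step, b, x) - neuron(w - step, b, x)) / (2 * eps)
    return g

w = np.array([0.5, -1.0, 2.0])
x = np.array([1.0, 0.5, 0.25])
b = 0.1
print(grad_w_analytic(w, b, x))
print(grad_w_numeric(w, b, x))
```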

Michigan State University; Google DeepMind; University of Cambridge; University of Utah; Imperial College London; University of Oxford; Tsinghua University; Stanford University; University of California, San Diego; UC Santa Barbara; The University of Manchester; Technical University of Munich; Helmholtz Munich; Sapienza University of Rome; University of Tennessee; University of South Florida; University at Albany; University of Salzburg; University of Hawai‘i at M; University of San Francisco; IBM Corporation; BlueLightAI Inc; RWTH Aachen University

Topological Deep Learning (TDL) is presented as a new frontier for relational learning, integrating topological concepts into deep learning models to address limitations of graph-based methods by capturing higher-order interactions and global data structures. A collaborative effort by 23 researchers from diverse institutions, this position paper defines the field's scope and outlines key advantages and future research directions for TDL.

Type Ia supernovae (SNe Ia) were instrumental in establishing the acceleration of the universe's expansion. By virtue of their combination of distance reach, precision, and prevalence, they continue to provide key cosmological constraints, complementing other cosmological probes. Individual SN surveys cover only about a factor of two in redshift, so compilations of multiple SN datasets are strongly beneficial. We assemble an up-to-date "Union" compilation of 2087 cosmologically useful SNe Ia from 24 datasets ("Union3"). We take care to put all SNe on the same distance scale and update the light-curve fitting with SALT3 to use the full rest-frame optical. Over the next few years, the number of cosmologically useful SNe Ia will increase by more than a factor of ten, and keeping systematic uncertainties subdominant will be more challenging than ever. We discuss the importance of treating outliers, selection effects, light-curve shape and color populations and standardization relations, unexplained dispersion, and heterogeneous observations simultaneously. We present an updated Bayesian framework, called UNITY1.5 (Unified Nonlinear Inference for Type-Ia cosmologY), that incorporates significant improvements in our ability to model selection effects, standardization, and systematic uncertainties compared to earlier analyses. As an analysis byproduct, we also recover the posterior of the SN-only peculiar-velocity field, although we do not interpret it in this work. We compute updated cosmological constraints with Union3 and UNITY1.5, finding weak 1.7–2.6σ tension with ΛCDM and possible evidence for thawing dark energy (w0 > −1, wa < 0). We release our SN distances, light-curve fits, and UNITY1.5 framework to the community.
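The standardization relations mentioned above are commonly written in the linear Tripp form, μ = m_B − M_B + α x1 − β c. Here x1 and c are the SALT light-curve stretch and color. The sketch below evaluates that relation and converts μ to a luminosity distance. It is not UNITY1.5, which is a full Bayesian hierarchical model that also handles selection effects and population distributions. The default values of `M_B`, `alpha`, and `beta` are illustrative placeholders, not fitted Union3 parameters.

```python
def tripp_distance_modulus(m_B, x1, c, M_B=-19.3, alpha=0.14, beta=3.1):
    """Standardized distance modulus: mu = m_B - M_B + alpha * x1 - beta * c.
    Default M_B, alpha, beta are illustrative placeholders, not Union3/UNITY1.5 values."""
    return m_B - M_B + alpha * x1 - beta * c

def luminosity_distance_mpc(mu):
    """Invert mu = 5 log10(d_L / 10 pc): d_L = 10**(mu/5 + 1) pc = 10**(mu/5 - 5) Mpc."""
    return 10.0 ** (mu / 5.0 - 5.0)

# Hypothetical light-curve fit values for one SN.
mu = tripp_distance_modulus(m_B=24.0, x1=0.5, c=-0.05)
print(f"mu = {mu:.3f}, d_L = {luminosity_distance_mpc(mu):.0f} Mpc")
```

With α > 0 and β > 0, broader (higher-x1) and bluer (lower-c) SNe are assigned larger distance moduli at fixed observed m_B. This reflects the brighter-broader and brighter-bluer trends that standardization corrects for.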

We present integral field spectroscopic observations of 75 strong gravitational lens candidates identified with a residual neural network in the DESI Legacy Imaging Surveys, obtained with the Multi Unit Spectroscopic Explorer (MUSE) on ESO's Very Large Telescope. These observations are part of an ongoing effort to build a large, spectroscopically confirmed sample of strong lensing systems for studies of dark matter, galaxy structure, and cosmology. Our MUSE program targets both lens and source redshifts, with particular emphasis on southern hemisphere systems. MUSE's wide spectral coverage and integral field capability allow for efficient identification of multiple sources, lens environments, and weak spectral features. Redshifts for lenses and sources were obtained via manual identification of spectral features in extracted 1D spectra. Our dataset includes systems with complex configurations, such as multiple source planes and group or cluster-scale environments. We extracted and analyzed 185 spectra, successfully determining both the lens and the source redshifts for 48 gravitational lens systems. For an additional 21 targets, we measured the redshifts of the lenses but were unable to determine the redshifts of the background sources. Six targets were confirmed to not be gravitational lenses. The results presented here complement space-based imaging from our HST SNAPshot program and spectroscopic follow-up with DESI and Keck, and have lasting legacy value for identifying interesting high-redshift sources and complex lensing configurations.

Researchers from Vinci4D, Stanford University, and Imperial College London introduced Copresheaf Topological Neural Networks (CTNNs), a generalized deep learning framework that unifies various existing architectures. This framework achieved over 50% reduction in Mean Squared Error for physics simulations and up to 12.1 percentage points improvement in structure recognition tasks across diverse data types.

Topotein introduces a topological deep learning framework for protein representation learning that explicitly models hierarchical protein organization from residues to secondary structures. Its Protein Combinatorial Complex and Topology-Complete Perceptron Network achieve state-of-the-art results in protein fold classification and demonstrate superior robustness when relying solely on structural information.

University of Pittsburgh; University of California, Santa Barbara; UCLA; Chinese Academy of Sciences; Université de Montréal; University of Chicago; UC Berkeley; University College London; University of Edinburgh; Boston University; Southern Methodist University; Johns Hopkins University; The University of Texas at Dallas; Lawrence Berkeley National Laboratory; University of Rochester; Fermi National Accelerator Laboratory; University of Portsmouth; The Ohio State University; Aix-Marseille Univ; Technical University Munich; University of Hawai’i; Universidad de Los Andes; Institut d’Estudis Espacials de Catalunya (IEEC); NSF’s National Optical-Infrared Astronomy Research Laboratory; University of San Francisco; Max Planck Institute for Astrophysics (MPA); Gemini Observatory; Kavli Institute for the Physics and Mathematics of the Universe, University of Tokyo; Ciela – Montreal Institute for Astrophysical Data Analysis and Machine Learning; Institute of Space Sciences (ICE–CSIC)

We present spectroscopic data of strong lenses and their source galaxies obtained with the Keck Near-Infrared Echellette Spectrometer (NIRES) and the Dark Energy Spectroscopic Instrument (DESI), providing redshifts necessary for nearly all strong-lensing applications with these systems, especially the extraction of physical parameters from lens modeling. These strong lenses were found in the DESI Legacy Imaging Surveys using Residual Neural Networks (ResNet) and followed up by our Hubble Space Telescope program, with all systems displaying unambiguous lensed arcs. With NIRES, we target eight lensed sources at redshifts difficult to measure in the optical range and determine the source redshifts for six, between z_s = 1.675 and 3.332. DESI measured the redshift of one of the two remaining sources, as well as one additional source redshift among the six systems. The two systems without NIRES detections were observed for a considerably shorter exposure time (600 s) at high airmass. Combining NIRES infrared spectroscopy with optical spectroscopy from our DESI Strong Lensing Secondary Target Program, these results provide complete lens and source redshifts for six systems, a resource for refining automated strong lens searches in future deep- and wide-field imaging surveys and for addressing a range of questions in astrophysics and cosmology.

Accurate multi-sensor calibration is essential for deploying robust perception systems in applications such as autonomous driving and intelligent transportation. Existing LiDAR-camera calibration methods often rely on manually placed targets, preliminary parameter estimates, or intensive data preprocessing, limiting their scalability and adaptability in real-world settings. In this work, we propose a fully automatic, targetless, and online calibration framework, CalibRefine, which directly processes raw LiDAR point clouds and camera images. Our approach is divided into four stages: (1) a Common Feature Discriminator that leverages relative spatial positions, visual appearance embeddings, and semantic class cues to identify and generate reliable LiDAR-camera correspondences, (2) a coarse homography-based calibration that uses the matched feature correspondences to estimate an initial transformation between the LiDAR and camera frames, serving as the foundation for further refinement, (3) an iterative refinement to incrementally improve alignment as additional data frames become available, and (4) an attention-based refinement that addresses non-planar distortions by leveraging a Vision Transformer and cross-attention mechanisms. Extensive experiments on two urban traffic datasets demonstrate that CalibRefine achieves high-precision calibration with minimal human input, outperforming state-of-the-art targetless methods and matching or surpassing manually tuned baselines. Our results show that robust object-level feature matching, combined with iterative refinement and self-supervised attention-based refinement, enables reliable sensor alignment in complex real-world conditions without ground-truth matrices or elaborate preprocessing. Code is available at this https URL
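The coarse homography stage can be illustrated with the textbook Direct Linear Transform (DLT). DLT estimates a 3×3 homography from four or more 2D point correspondences using an SVD. This is a minimal sketch. It assumes each LiDAR feature has already been reduced to a 2D coordinate (for example, on a ground or image-aligned plane) and matched to a pixel. It is not CalibRefine's code, and the function names are hypothetical. A homography models only a planar mapping, which is consistent with the abstract's need for a later stage to handle non-planar distortions.

```python
import numpy as np

def estimate_homography_dlt(src, dst):
    """Direct Linear Transform: find H (3x3) with dst ~ H @ [src, 1].
    src, dst: arrays of shape (n, 2), n >= 4, points in general position."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear constraints per correspondence on the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.array(rows)
    # Least-squares solution: right singular vector for the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    H = Vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (n, 2) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Synthetic check: project points with a known H, then recover it.
H_true = np.array([[1.2, 0.1, 5.0],
                   [-0.05, 0.9, -3.0],
                   [0.001, 0.002, 1.0]])
src = np.array([[0, 0], [10, 0], [10, 10], [0, 10], [5, 3], [2, 7]], dtype=float)
dst = apply_homography(H_true, src)
H_est = estimate_homography_dlt(src, dst)
print(np.round(H_est, 6))
```

With noisy real matches, one would normally normalize the coordinates first (Hartley normalization) and wrap the estimate in RANSAC to reject bad correspondences. Both steps are omitted here for brevity.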

For the centennial of quantum mechanics, we offer an overview of the central role played by quantum information and thermalization in problems involving fundamental properties of spacetime and gravitational physics. This is an open area of research still a century after the initial development of formal quantum mechanics, highlighting the effectiveness of quantum physics in the description of all natural phenomena. These remarkable connections can be highlighted with the tools of modern quantum optics, which effectively address the three-fold interplay of interacting atoms, fields, and spacetime backgrounds describing gravitational fields and noninertial systems. In this review article, we select aspects of these phenomena centered on quantum features of the acceleration radiation of particles in the presence of black holes. The ensuing horizon-brightened acceleration radiation (HBAR) provides a case study of the role played by quantum physics in nontrivial spacetime behavior, and also shows a fundamental correspondence with black hole thermodynamics.

CNRS; University of Pittsburgh; University of Cambridge; University of California, Santa Barbara; Harvard University; UCLA; University of Chicago; UC Berkeley; University College London; University of Edinburgh; Boston University; Southern Methodist University; The University of Texas at Dallas; Lawrence Berkeley National Laboratory; Perimeter Institute for Theoretical Physics; University of Rochester; University of Portsmouth; The Ohio State University; IN2P3; University of Hawai’i; Università degli Studi di Milano; Universidad de Los Andes; The University of Utah; Institut d’Estudis Espacials de Catalunya (IEEC); Center for Cosmology and AstroParticle Physics; Universidad de Guanajuato; NSF’s National Optical-Infrared Astronomy Research Laboratory; Siena College; University of San Francisco; Universidad Nacional Autonoma de Mexico; Gemini Observatory; Institute of Space Sciences; Space Sciences Laboratory; ICE-CSIC; Institute of Cosmology and Gravitation; Observatorio Astronómico; Quantum Science and Engineering; Sorbonne Université

We present the Dark Energy Spectroscopic Instrument (DESI) Strong Lensing Secondary Target Program. This is a spectroscopic follow-up program for strong gravitational lens candidates found in the DESI Legacy Imaging Surveys footprint. Spectroscopic redshifts for the lenses and lensed sources are crucial for lens modeling to obtain physical parameters. The spectroscopic catalog in this paper consists of 73 candidate systems from the DESI Early Data Release (EDR). We have confirmed 20 strong lensing systems and determined that four are not lenses. For the remaining systems, more spectroscopic data from ongoing and future observations will be presented in future publications. We discuss the implications of our results for lens searches with neural networks in existing and future imaging surveys, as well as for lens modeling. This Strong Lensing Secondary Target Program is part of the DESI Strong Lens Foundry project, and this is Paper II of a series on this project.

The rapid adoption of Large Language Models (LLMs) for code generation has transformed software development, yet little attention has been given to how security vulnerabilities evolve through iterative LLM feedback. This paper analyzes security degradation in AI-generated code through a controlled experiment with 400 code samples across 40 rounds of "improvements" using four distinct prompting strategies. Our findings show a 37.6% increase in critical vulnerabilities after just five iterations, with distinct vulnerability patterns emerging across different prompting approaches. This evidence challenges the assumption that iterative LLM refinement improves code security and highlights the essential role of human expertise in the loop. We propose practical guidelines for developers to mitigate these risks, emphasizing the need for robust human validation between LLM iterations to prevent the paradoxical introduction of new security issues during supposedly beneficial code "improvements".

University of California, Santa Barbara; University of Utah; Northeastern University; Imperial College London; University of Manchester; Louisiana State University; Delft University of Technology; Purdue University; Aalto University; Sapienza University of Rome; Australian National University; Universitat Politècnica de Catalunya; Universitat de Barcelona; Dartmouth College; University of Bergen; IBM; University of San Francisco; KTH; RWTH Aachen University

We introduce TopoX, a Python software suite that provides reliable and user-friendly building blocks for computing and machine learning on topological domains that extend graphs: hypergraphs, simplicial, cellular, path and combinatorial complexes. TopoX consists of three packages: TopoNetX facilitates constructing and computing on these domains, including working with nodes, edges and higher-order cells; TopoEmbedX provides methods to embed topological domains into vector spaces, akin to popular graph-based embedding algorithms such as node2vec; TopoModelX is built on top of PyTorch and offers a comprehensive toolbox of higher-order message passing functions for neural networks on topological domains. The extensively documented and unit-tested source code of TopoX is available under MIT license at this https URL.
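The structures that TopoNetX computes on, such as the boundary (incidence) matrices of a simplicial complex, can be illustrated without the library itself. The sketch below is plain NumPy and deliberately does not use the TopoX API. It builds the signed boundary matrices B1 (nodes × edges) and B2 (edges × triangles), checks that B1·B2 = 0 ("the boundary of a boundary is empty"), and forms the edge Hodge Laplacian. The function name and the toy complex are hypothetical.

```python
import numpy as np
from itertools import combinations

def boundary_matrices(n_nodes, extra_edges, triangles):
    """Signed boundary matrices of a simplicial complex on vertices 0..n_nodes-1.
    B1 is |V| x |E| (edge -> its endpoints), B2 is |E| x |T| (triangle -> its edges).
    Simplices are oriented by increasing vertex index."""
    edge_set = {tuple(sorted(e)) for e in extra_edges}
    for t in triangles:
        edge_set.update(combinations(sorted(t), 2))  # every triangle side is an edge
    edges = sorted(edge_set)
    edge_index = {e: j for j, e in enumerate(edges)}

    B1 = np.zeros((n_nodes, len(edges)))
    for j, (u, v) in enumerate(edges):
        B1[u, j] = -1.0  # boundary of [u, v] is [v] - [u]
        B1[v, j] = 1.0

    B2 = np.zeros((len(edges), len(triangles)))
    for k, t in enumerate(triangles):
        a, b, c = sorted(t)
        # boundary of [a, b, c] is [b, c] - [a, c] + [a, b]
        B2[edge_index[(b, c)], k] = 1.0
        B2[edge_index[(a, c)], k] = -1.0
        B2[edge_index[(a, b)], k] = 1.0
    return edges, B1, B2

# Two triangles sharing edge (1, 2), plus a dangling edge (3, 4).
edges, B1, B2 = boundary_matrices(5, extra_edges=[(3, 4)], triangles=[(0, 1, 2), (1, 2, 3)])
print(edges)
print(np.abs(B1 @ B2).max())  # 0.0
L1 = B1.T @ B1 + B2 @ B2.T    # Hodge Laplacian acting on edge signals
print(L1.shape)               # (6, 6)
```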

Wildfires have emerged as one of the most destructive natural disasters worldwide, causing catastrophic losses in both human lives and forest wildlife. Recently, the use of Artificial Intelligence (AI) in wildfires, propelled by the integration of Unmanned Aerial Vehicles (UAVs) and deep learning models, has created unprecedented momentum to develop and implement more effective wildfire management. Although some of the existing survey papers have explored various learning-based approaches, a comprehensive review emphasizing the application of AI-enabled UAV systems and their subsequent impact on multi-stage wildfire management is notably lacking. This survey aims to bridge these gaps by offering a systematic review of the recent state-of-the-art technologies, highlighting the advancements of UAV systems and AI models from pre-fire, through the active-fire stage, to post-fire management. To this end, we provide an extensive analysis of the existing remote sensing systems with a particular focus on the UAV advancements, device specifications, and sensor technologies relevant to wildfire management. We also examine the pre-fire and post-fire management approaches, including fuel monitoring, prevention strategies, as well as evacuation planning, damage assessment, and operation strategies. Additionally, we review and summarize a wide range of computer vision techniques in active-fire management, with an emphasis on Machine Learning (ML), Reinforcement Learning (RL), and Deep Learning (DL) algorithms for wildfire classification, segmentation, detection, and monitoring tasks. Ultimately, we underscore the substantial advancement in wildfire modeling through the integration of cutting-edge AI techniques and UAV-based data, providing novel insights and enhanced predictive capabilities to understand dynamic wildfire behavior.

University of Toronto; University of Pittsburgh; University of Waterloo; University College London; National Taiwan University; University of California, Irvine; University of Michigan; Boston University; Southern Methodist University; Lawrence Berkeley National Laboratory; Perimeter Institute for Theoretical Physics; Fermi National Accelerator Laboratory; University of Portsmouth; University of Virginia; Sejong University; Universitat Autònoma de Barcelona; San Diego State University; University of Hawai’i; Università degli Studi di Milano; NSF NOIRLab; Instituto de Astrofísica de Andalucía-CSIC; Universidad de Los Andes; Institut d’Estudis Espacials de Catalunya (IEEC); Universitat Politècnica de Catalunya; Siena College; University of San Francisco; Universidad Nacional Autonoma de Mexico; Waterloo Centre for Astrophysics; IRFU, CEA, Universite Paris-Saclay; Institut de Física d’Altes Energies (IFAE), The Barcelona Institute of Science and Technology; Sorbonne Université, CNRS/IN2P3; Institute of Space Sciences (ICE–CSIC); Observatorio Astronómico, Universidad de los Andes; Institució Catalana de Recerca i Estudis Avançats; INAF–Osservatorio Astronomico di Brera

We present a new method to search for strong gravitational lensing systems by pairing spectra that are close together on the sky in a spectroscopic survey. We visually inspect 26,621 spectra in the Dark Energy Spectroscopic Instrument (DESI) Data Release 1 that are selected in this way. We further inspect the 11,848 images corresponding to these spectra in the DESI Legacy Imaging Surveys Data Release 10, and obtain 2046 conventional strong gravitational lens candidates, of which 1906 are new. This constitutes the largest sample of lens candidates identified to date in spectroscopic data. Besides the conventional candidates, we identify a new class of systems that we term "dimple lenses". These systems have a low-mass foreground galaxy as a lens, typically smaller in angular extent and fainter compared with the lensed background source galaxy, producing subtle surface brightness indentations in the latter. We report the discovery of 318 of these "dimple-lens" candidates. We suspect that these represent dwarf galaxy lensing. With follow-up observations, they could offer a new avenue to test the cold dark matter model by probing their mass profiles, stellar mass-halo mass relation, and halo mass function for M_halo ≲ 10^13 M⊙. Thus, in total, we report 2164 new lens candidates. Our method demonstrates the power of pairwise spectroscopic analysis and provides a pathway complementary to imaging-based and single-spectrum lens searches.
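The pairing step can be sketched as a brute-force search for spectra whose sky positions lie within a chosen angular separation. The record layout (`id`, `ra`, `dec` in degrees), the function names, and the 5-arcsecond threshold are hypothetical; the abstract does not state the separation cut used for DESI DR1. For a survey-sized catalogue, a tree-based neighbour search (for example, astropy's `SkyCoord.search_around_sky`) would replace the O(n²) loop.

```python
import math
from itertools import combinations

def angular_separation_deg(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees (haversine formula); inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    h = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(h))))

def close_pairs(spectra, max_sep_arcsec):
    """Return (id_a, id_b, sep_arcsec) for every pair closer than max_sep_arcsec.
    Brute-force O(n^2): fine for a toy list, not for a full survey."""
    pairs = []
    for a, b in combinations(spectra, 2):
        sep = angular_separation_deg(a["ra"], a["dec"], b["ra"], b["dec"]) * 3600.0
        if sep < max_sep_arcsec:
            pairs.append((a["id"], b["id"], sep))
    return pairs

# Hypothetical toy catalogue: s1 and s2 are 3 arcsec apart in declination, s3 is far away.
spectra = [
    {"id": "s1", "ra": 150.0, "dec": 2.0},
    {"id": "s2", "ra": 150.0, "dec": 2.0 + 3.0 / 3600.0},
    {"id": "s3", "ra": 151.0, "dec": 2.0},
]
for a, b, sep in close_pairs(spectra, max_sep_arcsec=5.0):
    print(f"{a}-{b}: {sep:.2f} arcsec")  # s1-s2: 3.00 arcsec
```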

We introduce a general stochastic differential equation framework for modelling multi-objective optimization dynamics in iterative Large Language Model (LLM) interactions. Our framework captures the inherent stochasticity of LLM responses through explicit diffusion terms and reveals systematic interference patterns between competing objectives via an interference matrix formulation. We validate our theoretical framework using iterative code generation as a proof-of-concept application, analyzing 400 sessions across security, efficiency, and functionality objectives. Our results demonstrate strategy-dependent convergence behaviors with rates ranging from 0.33 to 1.29, and predictive accuracy achieving R² = 0.74 for balanced approaches. This work suggests the feasibility of dynamical systems analysis for multi-objective LLM interactions, with code generation serving as an initial validation domain.
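A toy version of such dynamics can be simulated with the Euler–Maruyama scheme. The linear drift −A(X − x_target), the constant diffusion σ, and the example matrix are assumptions made for illustration; they are not the paper's fitted model. Off-diagonal entries of `A` stand in for the interference between objectives. In this linear toy model, the asymptotic convergence rate of the noise-free dynamics is set by the smallest real part among the eigenvalues of `A`.

```python
import numpy as np

def simulate_objectives(A, x_target, x0, sigma, dt=0.1, n_steps=200, seed=0):
    """Euler-Maruyama integration of dX = -A (X - x_target) dt + sigma dW.
    Each component of X is one objective score (e.g. security, efficiency, functionality)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    path = [x.copy()]
    for _ in range(n_steps):
        drift = -A @ (x - x_target)
        # Brownian increment: independent N(0, dt) per objective, scaled by sigma.
        noise = sigma * np.sqrt(dt) * rng.standard_normal(x.shape)
        x = x + drift * dt + noise
        path.append(x.copy())
    return np.array(path)

# Hypothetical interference matrix: objectives 0 and 1 pull on each other; 1 also nudges 2.
A = np.array([[0.8, 0.2, 0.0],
              [-0.3, 0.5, 0.0],
              [0.0, 0.1, 1.0]])
x_target = np.array([0.8, 0.7, 0.9])
x0 = np.array([0.2, 0.9, 0.5])

noisy = simulate_objectives(A, x_target, x0, sigma=0.05)
rates = np.linalg.eigvals(A).real
print("final state:", noisy[-1])
print("slowest asymptotic rate:", rates.min())
```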

13 Sep 2025

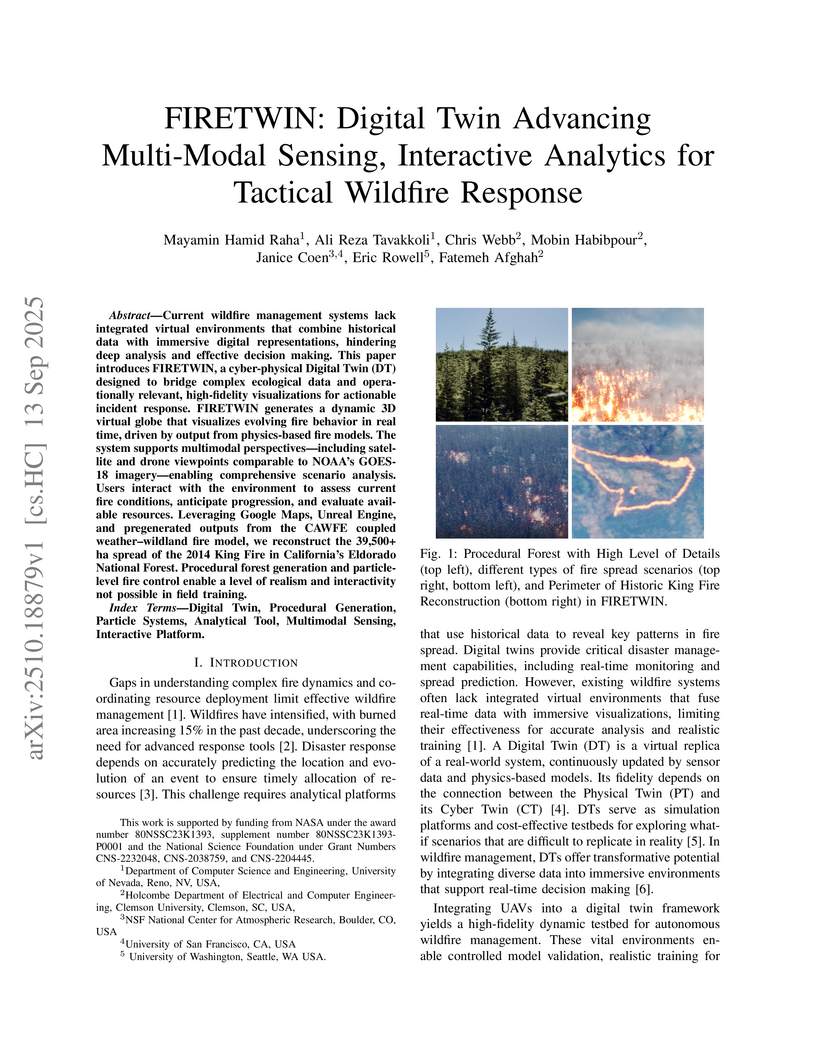

Current wildfire management systems lack integrated virtual environments that combine historical data with immersive digital representations, hindering deep analysis and effective decision making. This paper introduces FIRETWIN, a cyber-physical Digital Twin (DT) designed to bridge complex ecological data and operationally relevant, high-fidelity visualizations for actionable incident response. FIRETWIN generates a dynamic 3D virtual globe that visualizes evolving fire behavior in real time, driven by output from physics-based fire models. The system supports multimodal perspectives, including satellite and drone viewpoints comparable to NOAA GOES-18 imagery, enabling comprehensive scenario analysis. Users interact with the environment to assess current fire conditions, anticipate progression, and evaluate available resources. Leveraging Google Maps, Unreal Engine, and pre-generated outputs from the CAWFE coupled weather-wildland fire model, we reconstruct the spread of the 2014 King Fire in California's Eldorado National Forest. Procedural forest generation and particle-level fire control enable a level of realism and interactivity not possible in field training.

27 Jul 2023

Chinese Academy of Sciences; Université de Montréal; Technical University Munich; Mila - Quebec Artificial Intelligence Institute; University of San Francisco; Max Planck Institute for Astrophysics (MPA); Academia Sinica Institute of Astronomy and Astrophysics (ASIAA); Ciela – Montreal Institute for Astrophysical Data Analysis and Machine Learning

Understanding the evolution of galaxies provides crucial insights into a broad range of aspects in astrophysics, including structure formation and growth, the nature of dark energy and dark matter, baryonic physics, and more. It is, however, infeasible to track the evolutionary processes of individual galaxies in real time given their long timescales. As a result, galaxy evolution analyses have been mostly based on ensembles of galaxies that are assumed to be from the same population according to observational criteria that are usually basic and crude. We propose a new strategy of evaluating the evolution of an individual galaxy by identifying its descendant galaxies as guided by cosmological simulations. As a proof of concept, we examined the evolution of the total mass distribution of a target strong lensing galaxy at z = 0.884 using the proposed strategy. We selected 158 galaxies from the IllustrisTNG300 simulation that we identified as analogs of the target galaxy. We followed their descendants and found 11 observed strong lensing galaxies that match the descendants in stellar mass and size at their redshifts. The observed and simulated results are discussed, although no conclusive assessment is made given the low statistical significance due to the small sample size. Nevertheless, the test confirms that our proposed strategy is already feasible with existing data and simulations. We expect it to play an even more important role in studying galaxy evolution as more strong lens systems and larger simulations become available with the advent of next-generation survey programs and cosmological simulations.

Academia Sinica; California Institute of Technology; University of Cambridge; University of Chicago; University College London; University of Copenhagen; Space Telescope Science Institute; Johns Hopkins University; Arizona State University; The University of Hong Kong; Rutgers University; Stockholm University; University of Arizona; Technical University of Munich; University of Portsmouth; University of Illinois; Max-Planck-Institut für Astrophysik; INAF; University of California; Instituto de Astrofísica de Canarias; Swinburne University of Technology; University of Alabama; The University of Western Australia; Universidad de La Laguna; Oklahoma State University; University of Hawai’i; University of San Francisco; University of Nova Gorica; Observatories of the Carnegie Institution for Science; Università degli Studi di Ferrara; Università degli Studi di Milano; Università di Bologna

A bright (m_F150W,AB = 24 mag), z = 1.95 supernova (SN) candidate was discovered in JWST/NIRCam imaging acquired on 2023 November 17. The SN is quintuply imaged as a result of strong gravitational lensing by a foreground galaxy cluster, is detected in three locations, and is remarkably the second lensed SN found in the same host galaxy. The previous lensed SN was called "Requiem", and therefore the new SN is named "Encore". This makes the MACS J0138.0−2155 cluster the first known system to produce more than one multiply imaged SN. Moreover, both SN Requiem and SN Encore are Type Ia SNe (SNe Ia), making this the most distant case of a galaxy hosting two SNe Ia. Using parametric host fitting, we determine the probability of detecting two SNe Ia in this host galaxy over a ∼10 year window to be ≈3%. These observations have the potential to yield a Hubble constant (H0) measurement with ∼10% precision using the three visible images of the SN, making this only the third lensed SN capable of such a result. Both SN Requiem and SN Encore have a fourth image that is expected to appear within a few years of ∼2030, providing an unprecedented baseline for time-delay cosmography.
