University of Vaasa

Although Generative AI (GenAI) has potential for persona development, many challenges must be addressed. This research systematically reviews 52 articles published between 2022 and 2024 and reports four main findings. First, closed commercial models are frequently used in persona development, creating a monoculture. Second, GenAI is used in various stages of persona development (data collection, segmentation, enrichment, and evaluation). Third, similar to other quantitative persona development techniques, there are major gaps in persona evaluation for AI-generated personas. Fourth, human-AI collaboration models are underdeveloped, despite human oversight being crucial for maintaining ethical standards. These findings imply that realizing the full potential of AI-generated personas will require substantial efforts across academia and industry. To that end, we provide a list of research avenues to inspire future work.

AI-based systems, including Large Language Models (LLMs), impact millions by supporting diverse tasks but face issues like misinformation, bias, and misuse. AI ethics is crucial as new technologies and concerns emerge, but objective, practical guidance remains debated. This study examines the use of LLMs for AI ethics in practice, assessing how LLM trustworthiness-enhancing techniques affect software development in this context. Using the Design Science Research (DSR) method, we identify techniques for LLM trustworthiness: multi-agents, distinct roles, structured communication, and multiple rounds of debate. We design a multi-agent prototype, LLM-MAS, where agents engage in structured discussions on real-world AI ethics issues from the AI Incident Database. We evaluate the prototype across three case scenarios using thematic analysis, hierarchical clustering, comparative (baseline) studies, and running source code. The system generates approximately 2,000 lines of code per case, compared to only 80 lines in baseline trials. Discussions reveal terms like bias detection, transparency, accountability, user consent, GDPR compliance, fairness evaluation, and EU AI Act compliance, showing the prototype's ability to generate extensive source code and documentation addressing often overlooked AI ethics issues. However, practical challenges in source code integration and dependency management may limit its use by practitioners.

Extracting meaning from uncertain, noisy data is a fundamental problem across time series analysis, pattern recognition, and language modeling. This survey presents a unified mathematical framework that connects classical estimation theory, statistical inference, and modern machine learning, including deep learning and large language models. By analyzing how techniques such as maximum likelihood estimation, Bayesian inference, and attention mechanisms address uncertainty, the paper illustrates that many AI methods are rooted in shared probabilistic principles. Through illustrative scenarios including system identification, image classification, and language generation, we show how increasingly complex models build upon these foundations to tackle practical challenges like overfitting, data sparsity, and interpretability. In other words, the work demonstrates that maximum likelihood, MAP estimation, Bayesian classification, and deep learning all represent different facets of a shared goal: inferring hidden causes from noisy and/or biased observations. It serves as both a theoretical synthesis and a practical guide for students and researchers navigating the evolving landscape of machine learning.
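As a small illustration of how maximum likelihood and MAP estimation relate, the sketch below estimates the mean of Gaussian observations with known noise variance. The Gaussian prior, the function names, and the sample values are assumptions chosen for illustration; they are not taken from the survey.

```python
from statistics import fmean


def mle_mean(samples):
    """Maximum likelihood estimate of a Gaussian mean: the sample average."""
    return fmean(samples)


def map_mean(samples, noise_var, prior_mean, prior_var):
    """MAP estimate of a Gaussian mean under a Gaussian prior.

    The posterior is Gaussian, so its mode is the precision-weighted
    average of the prior mean and the data.
    """
    n = len(samples)
    precision = 1.0 / prior_var + n / noise_var
    return (prior_mean / prior_var + sum(samples) / noise_var) / precision


# Hypothetical noisy observations of a hidden constant.
observations = [2.1, 1.9, 2.4, 2.0]

mle = mle_mean(observations)
map_est = map_mean(observations, noise_var=1.0, prior_mean=0.0, prior_var=1.0)
# A very wide prior carries almost no information, so MAP approaches the MLE.
map_flat_prior = map_mean(observations, noise_var=1.0, prior_mean=0.0, prior_var=1e12)
```

With an informative prior centered at zero, the MAP estimate is pulled toward the prior mean; as the prior widens, the two estimates coincide, which is one concrete sense in which the methods share a probabilistic foundation.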

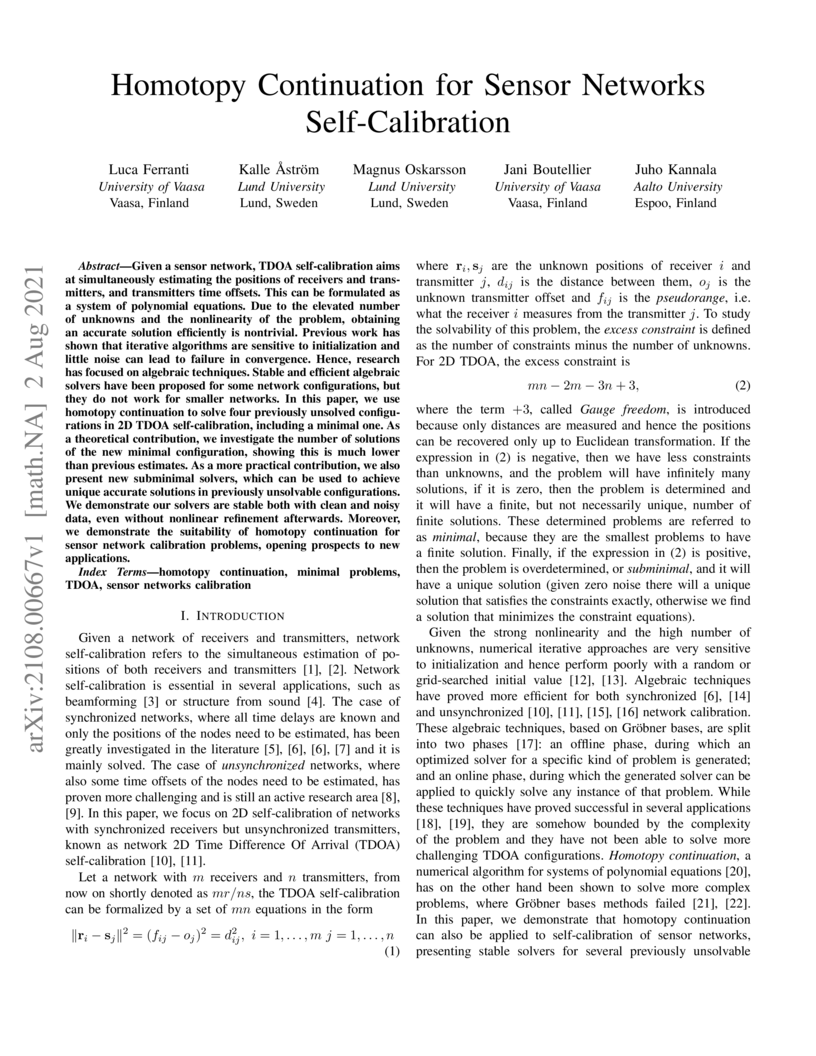

02 Aug 2021

Given a sensor network, TDOA self-calibration aims at simultaneously estimating the positions of receivers and transmitters, as well as the transmitters' time offsets. This can be formulated as a system of polynomial equations. Due to the large number of unknowns and the nonlinearity of the problem, obtaining an accurate solution efficiently is nontrivial. Previous work has shown that iterative algorithms are sensitive to initialization and that even a small amount of noise can prevent convergence. Hence, research has focused on algebraic techniques. Stable and efficient algebraic solvers have been proposed for some network configurations, but they do not work for smaller networks. In this paper, we use homotopy continuation to solve four previously unsolved configurations in 2D TDOA self-calibration, including a minimal one. As a theoretical contribution, we investigate the number of solutions of the new minimal configuration, showing that it is much lower than previous estimates. As a more practical contribution, we also present new subminimal solvers, which can be used to achieve unique, accurate solutions in previously unsolvable configurations. We demonstrate that our solvers are stable with both clean and noisy data, even without subsequent nonlinear refinement. Moreover, we demonstrate the suitability of homotopy continuation for sensor network calibration problems, opening prospects for new applications.

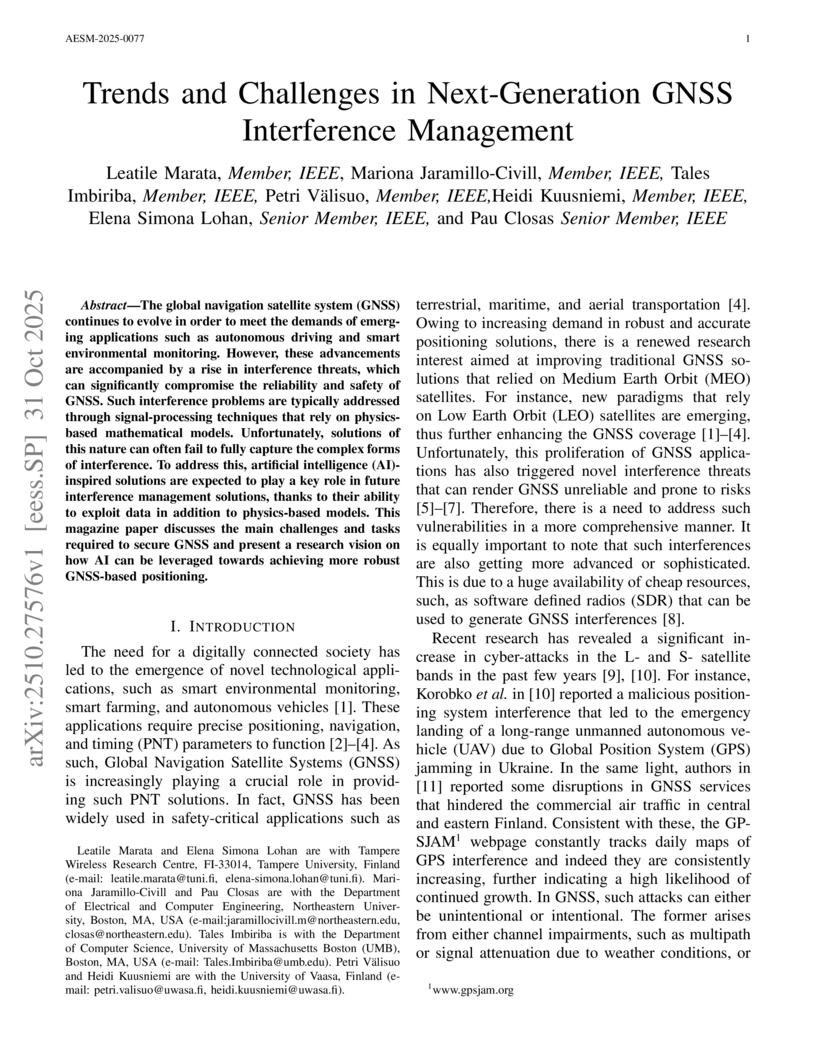

31 Oct 2025

The global navigation satellite system (GNSS) continues to evolve in order to meet the demands of emerging applications such as autonomous driving and smart environmental monitoring. However, these advancements are accompanied by a rise in interference threats, which can significantly compromise the reliability and safety of GNSS. Such interference problems are typically addressed through signal-processing techniques that rely on physics-based mathematical models. Unfortunately, solutions of this nature often fail to fully capture the complex forms of interference. To address this, artificial intelligence (AI)-inspired solutions are expected to play a key role in future interference management, thanks to their ability to exploit data in addition to physics-based models. This magazine paper discusses the main challenges and tasks required to secure GNSS and presents a research vision on how AI can be leveraged towards achieving more robust GNSS-based positioning.

Researchers at the University of Vaasa empirically investigated employee experiences with Emotion AI in a Finnish workplace environment. The study found that while employees generally welcomed wellbeing monitoring, persistent privacy concerns necessitate transparency and open communication regarding data practices to foster trust.

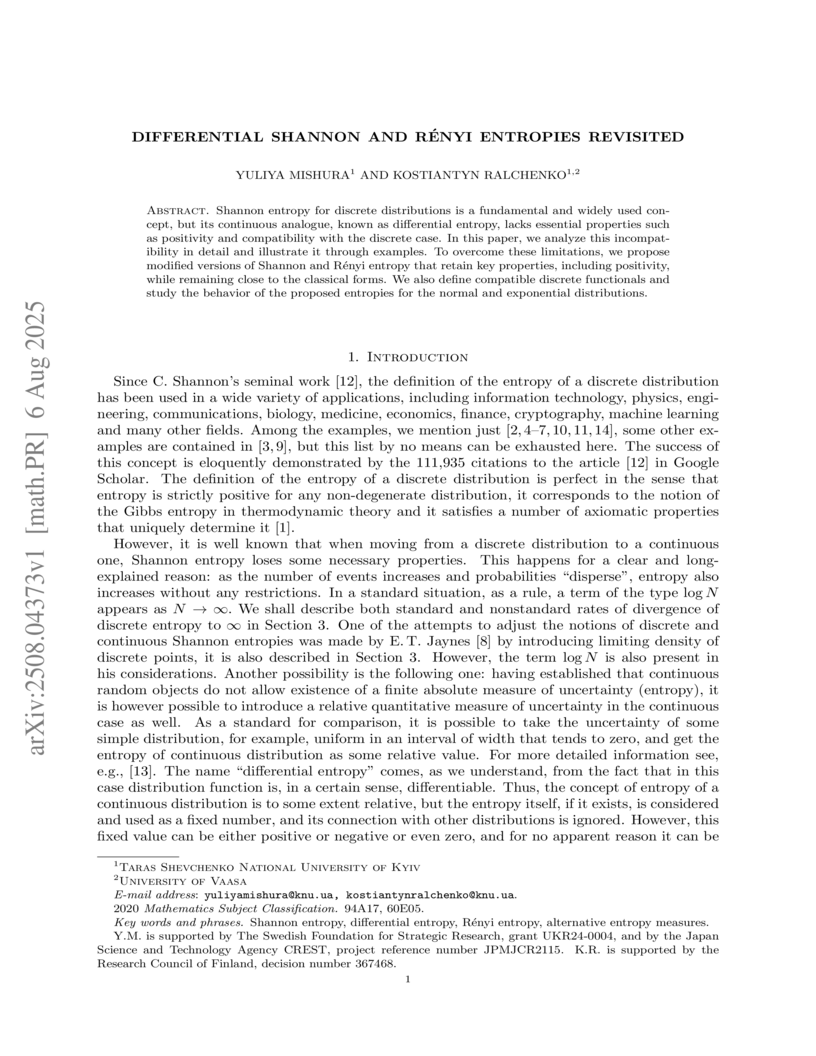

06 Aug 2025

Shannon entropy for discrete distributions is a fundamental and widely used concept, but its continuous analogue, known as differential entropy, lacks essential properties such as positivity and compatibility with the discrete case. In this paper, we analyze this incompatibility in detail and illustrate it through examples. To overcome these limitations, we propose modified versions of Shannon and Rényi entropy that retain key properties, including positivity, while remaining close to the classical forms. We also define compatible discrete functionals and study the behavior of the proposed entropies for the normal and exponential distributions.
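The incompatibility mentioned above can be seen with a uniform distribution. The sketch below uses only standard textbook formulas, not the modified entropies proposed in the paper, and the function names are hypothetical. It shows that differential entropy can be negative, and that the Shannon entropy of a discretized uniform variable differs from the differential entropy by a term that diverges as the bin width shrinks.

```python
import math


def shannon_entropy(probs):
    """Discrete Shannon entropy in nats; always non-negative."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def uniform_differential_entropy(width):
    """Differential entropy of Uniform(0, width) in nats: ln(width)."""
    return math.log(width)


def discretized_uniform_entropy(width, bin_width):
    """Shannon entropy of Uniform(0, width) after binning into equal cells."""
    n_bins = round(width / bin_width)
    return shannon_entropy([1.0 / n_bins] * n_bins)


width, bin_width = 0.5, 0.001
h_continuous = uniform_differential_entropy(width)          # negative for width < 1
h_discrete = discretized_uniform_entropy(width, bin_width)  # positive
# The gap between the two equals -ln(bin_width), which grows without
# bound as the discretization is refined.
gap = h_discrete - h_continuous
```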

31 Jan 2017

This study deals with the problem of pricing European currency options in a discrete-time setting, where prices follow the fractional Black-Scholes model with transaction costs. Both the pricing formula and the fractional partial differential equation for European call currency options are obtained by applying the delta-hedging strategy. Some Greeks and the estimator of volatility are also provided. The empirical studies and the simulation findings show that the fractional Black-Scholes model with transaction costs is a satisfactory model.

We calculate and analyze various entropy measures and their properties for selected probability distributions. The entropies considered include Shannon, Rényi, generalized Rényi, Tsallis, Sharma-Mittal, and modified Shannon entropy, along with the Kullback-Leibler divergence. These measures are examined for several distributions, including gamma, chi-squared, exponential, Laplace, and log-normal distributions. We investigate the dependence of the entropy on the parameters of the respective distribution. We also study the convergence of Shannon entropy for certain probability distributions. Furthermore, we identify the extreme values of Shannon entropy for Gaussian vectors.

22 Sep 2025

Fractional Brownian motion (fBm) is a canonical model for long-memory phenomena. In the presence of large amounts of potentially memory-bearing data, the data are often averaged, which can change the structure of the underlying relationships and affect standard estimation procedures. To address this, we introduce the normalized integrated fractional Brownian motion (nifBm), defined as the average of fBm over a fixed interval. We derive its covariance structure, investigate the stationarity and self-similarity, and extend the framework to linear combinations of independent nifBms and models with deterministic drift. For such linear combinations, we establish stationarity of increments, investigate the asymptotic behavior of the autocovariance function, and prove an ergodic theorem essential for statistical inference. We consider two statistical models: one driven by a single nifBm and another by a linear combination of two independent nifBms, including cases with deterministic drift. For both models, we propose estimators that are strongly consistent and asymptotically normal for both the drift and the full parameter set. Numerical simulations illustrate the theoretical findings, providing a foundation for modeling averaged fractional dynamics, with potential applications in finance, energy markets, and environmental studies.

We present this http URL, a compact (approx. 200 lines) Python script that automates the collection of key compiler metrics, i.e., gate depth, two-qubit-gate count, wall-clock compilation time, and memory footprint, across multiple open-source quantum circuit transpilers. The suite ships with six didactic circuits (3 to 8 qubits) implementing fundamental quantum algorithms and supports Qiskit, tket, Cirq, and the Qiskit-Braket provider; in this paper we showcase results for Qiskit 0.46 and Braket 1.16. The entire run completes in under three minutes on a laptop, emits a single CSV plus a publishable plot, and reproduces the figure here with one command. We release the code under the MIT licence to serve as a quick-start regression harness for NISQ compiler research.
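The following is a minimal sketch of how such a harness might time a compilation step, record peak memory, and emit CSV, using only the Python standard library. It is not the authors' script: `toy_transpile`, the list-of-layers circuit format, and the column names are hypothetical stand-ins, and a real harness would call an actual transpiler in place of `toy_transpile`.

```python
import csv
import io
import time
import tracemalloc


def toy_transpile(circuit):
    """Hypothetical stand-in for a real transpiler: drops empty layers."""
    return [layer for layer in circuit if layer]


def gate_depth(circuit):
    """Depth of a circuit stored as a list of layers."""
    return len(circuit)


def two_qubit_gate_count(circuit):
    """Count gates acting on exactly two qubits."""
    return sum(1 for layer in circuit for _name, qubits in layer if len(qubits) == 2)


def measure(name, circuit, transpile=toy_transpile):
    """Run one compilation and collect the four metrics as a dict."""
    tracemalloc.start()
    start = time.perf_counter()
    compiled = transpile(circuit)
    seconds = time.perf_counter() - start
    _current, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return {
        "circuit": name,
        "depth": gate_depth(compiled),
        "two_qubit_gates": two_qubit_gate_count(compiled),
        "seconds": seconds,
        "peak_bytes": peak_bytes,
    }


def to_csv(rows):
    """Serialize metric rows to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# A Bell-pair circuit as layers of (gate name, qubit indices), plus an empty layer.
bell = [[("h", (0,))], [("cx", (0, 1))], []]
row = measure("bell", bell)
csv_text = to_csv([row])
```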

26 May 2025

Governments around the world have increasingly adopted digital transformation (DT) initiatives to increase their strategic competitiveness in the global market. To support successful DT, governments have to introduce new governance logics and revise IT strategies to facilitate DT initiatives. In this study, we report a case study of how Enterprise Architecture (EA) concepts were introduced and translated into practices in Vietnamese government agencies over a span of 15 years. This translation process has enabled EA concepts to facilitate various DT initiatives such as e-government and digitalization, to name a few. Our findings suggest two mechanisms in the translation process: a theorization mechanism generalizes local practices into field-level abstract concepts, making them easier to spread, while a contextualization mechanism unpacks these concepts into practical, adaptable approaches, aligning EA with adopters' priorities and increasing its chances of dissemination. Furthermore, our findings illustrate how translation happens when the initial concepts are ambiguous and not well understood by adopters. In this situation, there is a need for widespread experiments and sense-making among pioneers before field- and organizational-level translation can occur.

13 Apr 2023

In an ever more connected world, awareness has grown of the hazards and vulnerabilities that the networking of sensitive digitized information poses for all parties involved. This vulnerability rests in a number of factors, both human and technical. From an ethical perspective, this means people seeking to maximise their own gain and accomplish their goals by exploiting information existing in cyber space at the expense of other individuals and parties. One matter that is yet to be fully explored is the eventuality of not only financial information and other sensitive material being globally connected on the information highways, but also the people themselves as physical beings. Humans are natural-born cyborgs who have integrated technology into their being throughout history. Issues of cyber security are extended to cybernetic security, which not only has severe ethical implications for how we (policy makers, academics, scientists, designers, etc.) define ethics in relation to humanity and human rights, but also for the security and safety of merged organic and artificial systems and ecosystems.

This paper introduces the Bi-linear consensus Alternating Direction Method of Multipliers (Bi-cADMM), aimed at solving large-scale regularized Sparse Machine Learning (SML) problems defined over a network of computational nodes. Mathematically, these are stated as minimization problems with convex local loss functions over a global decision vector, subject to an explicit ℓ0 norm constraint to enforce the desired sparsity. The considered SML problem generalizes different sparse regression and classification models, such as sparse linear and logistic regression, sparse softmax regression, and sparse support vector machines. Bi-cADMM leverages a bi-linear consensus reformulation of the original non-convex SML problem and a hierarchical decomposition strategy that divides the problem into smaller sub-problems amenable to parallel computing. In Bi-cADMM, this decomposition strategy is based on a two-phase approach. Initially, it performs a sample decomposition of the data and distributes local datasets across computational nodes. Subsequently, a delayed feature decomposition of the data is conducted on Graphics Processing Units (GPUs) available to each node. This methodology allows Bi-cADMM to undertake computationally intensive data-centric computations on GPUs, while CPUs handle more cost-effective computations. The proposed algorithm is implemented within an open-source Python package called Parallel Sparse Fitting Toolbox (PsFiT), which is publicly available. Finally, computational experiments demonstrate the efficiency and scalability of our algorithm through numerical benchmarks across various SML problems featuring distributed datasets.
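To make the explicit ℓ0 constraint concrete, the sketch below counts nonzero entries and projects a vector onto the set of vectors with at most `kappa` nonzeros by keeping the largest-magnitude entries (hard thresholding). This is only an illustration of the constraint set; Bi-cADMM itself relies on a bi-linear consensus reformulation, which is not reproduced here, and the function names are hypothetical.

```python
def l0_norm(vector, tol=0.0):
    """Number of entries whose magnitude exceeds tol."""
    return sum(1 for v in vector if abs(v) > tol)


def project_onto_l0_ball(vector, kappa):
    """Keep the kappa largest-magnitude entries and zero out the rest."""
    if kappa >= len(vector):
        return list(vector)
    order = sorted(range(len(vector)), key=lambda i: abs(vector[i]), reverse=True)
    keep = set(order[:kappa])
    return [v if i in keep else 0.0 for i, v in enumerate(vector)]


# Hypothetical dense coefficient vector and a sparsity budget of two.
weights = [0.1, -3.0, 0.5, 2.0]
sparse_weights = project_onto_l0_ball(weights, kappa=2)
```

Because the set of `kappa`-sparse vectors is non-convex, this projection is not unique when magnitudes tie, which is one reason the constrained problem is harder than its convex ℓ1-regularized relaxations.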

20 Aug 2024

We consider the jump-diffusion risky asset model and study its conditional prediction laws. Next, we explain the conditional least square hedging strategy and calculate its closed form for the jump-diffusion model, considering the Black-Scholes framework with interpretations related to investor priorities and transaction costs. We investigate the explicit form of this result for the particular case of the European call option under transaction costs and formulate recursive hedging strategies. Finally, we present a decision tree, table of values, and figures to support our results.

17 Oct 2024

This paper studies two related stochastic processes driven by Brownian motion: the Cox-Ingersoll-Ross (CIR) process and the Bessel process. We investigate their shared and distinct properties, focusing on time-asymptotic growth rates, distance between the processes in integral norms, and parameter estimation. The squared Bessel process is shown to be a phase transition of the CIR process and can be approximated by a sequence of CIR processes. Differences in stochastic stability are also highlighted, with the Bessel process displaying instability, while the CIR process remains ergodic and stable.

Dense point cloud generation from a sparse or incomplete point cloud is a crucial and challenging problem in 3D computer vision and computer graphics. So far, the existing methods are either computationally too expensive, suffer from limited resolution, or both. In addition, some methods are strictly limited to watertight surfaces, another major obstacle for a number of applications. To address these issues, we propose a lightweight Convolutional Neural Network that learns and predicts the unsigned distance field for arbitrary 3D shapes for dense point cloud generation using the recently emerged concept of implicit function learning. Experiments demonstrate that the proposed architecture outperforms the state of the art with 7.8x fewer model parameters, 2.4x faster inference, and up to 24.8% better generation quality.

The emergence of generative artificial intelligence (GAI) and large language models (LLMs) such as ChatGPT has enabled the realization of long-harbored desires in software and robotic development. The technology, however, has brought with it novel ethical challenges. These challenges are compounded by the application of LLMs in other machine learning systems, such as multi-robot systems. The objective of the study was to examine novel ethical issues arising from the application of LLMs in multi-robot systems. Unfolding ethical issues in GPT agent behavior (deliberation of ethical concerns) were observed, and GPT output was compared with that of human experts. The article also advances a model for ethical development of multi-robot systems. A qualitative workshop-based method was employed in three workshops for the collection of ethical concerns: two human expert workshops (N=16 participants) and one GPT-agent-based workshop (N=7 agents; two teams of 6 agents plus one judge). Thematic analysis was used to analyze the qualitative data. The results reveal differences between the human-produced and GPT-based ethical concerns. Human experts placed greater emphasis on new themes related to deviance, data privacy, bias, and unethical corporate conduct. GPT agents emphasized concerns present in existing AI ethics guidelines. The study contributes to a growing body of knowledge in context-specific AI ethics and GPT application. It demonstrates the gap between human expert thinking and LLM output, while emphasizing new ethical concerns emerging in novel technology.

The paper extends the analysis of the entropies of the Poisson distribution with parameter λ. It demonstrates that the Tsallis and Sharma-Mittal entropies exhibit monotonic behavior with respect to λ, whereas two generalized forms of the Rényi entropy may exhibit "anomalous" (non-monotonic) behavior. Additionally, we examine the asymptotic behavior of the entropies as λ→∞ and provide both lower and upper bounds for them.
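The monotonic behavior described for the Tsallis entropy can be checked numerically. The sketch below evaluates the Shannon entropy and the Tsallis entropy, using the standard definition (1 - Σ p^q)/(q - 1), on a truncated Poisson distribution. The truncation rule, the choice q = 2, and the grid of λ values are assumptions made for illustration and are not taken from the paper.

```python
import math


def poisson_pmf(lam, tail_sigmas=12.0):
    """Poisson probabilities p_0..p_K, truncated far in the upper tail."""
    k_max = int(lam + tail_sigmas * math.sqrt(lam) + 30)
    probs = [math.exp(-lam)]
    for k in range(1, k_max + 1):
        # Recurrence p_k = p_{k-1} * lam / k avoids large factorials.
        probs.append(probs[-1] * lam / k)
    return probs


def shannon_entropy(lam):
    """Shannon entropy of Poisson(lam) in nats, from the truncated pmf."""
    return -sum(p * math.log(p) for p in poisson_pmf(lam) if p > 0.0)


def tsallis_entropy(lam, q):
    """Tsallis entropy (1 - sum p^q) / (q - 1) of Poisson(lam), for q != 1."""
    return (1.0 - sum(p ** q for p in poisson_pmf(lam))) / (q - 1.0)


lambdas = [0.5, 1.0, 2.0, 4.0, 8.0]
shannon_values = [shannon_entropy(lam) for lam in lambdas]
tsallis_values = [tsallis_entropy(lam, q=2.0) for lam in lambdas]
# On this grid both sequences increase with lambda; the numerical check
# illustrates the monotonicity claim but does not prove it.
```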

31 Jan 2025

The paper is devoted to the properties of the entropy of the exponent-Wiener-integral fractional Gaussian process (EWIFG-process), that is, a Wiener integral of the exponent with respect to fractional Brownian motion. Unlike fractional Brownian motion, whose entropy has very simple monotonicity properties in the Hurst index, the behavior of the entropy of the EWIFG-process is much more involved and depends on the moment of time. We consider these monotonicity properties in great detail.
