University of Westminster

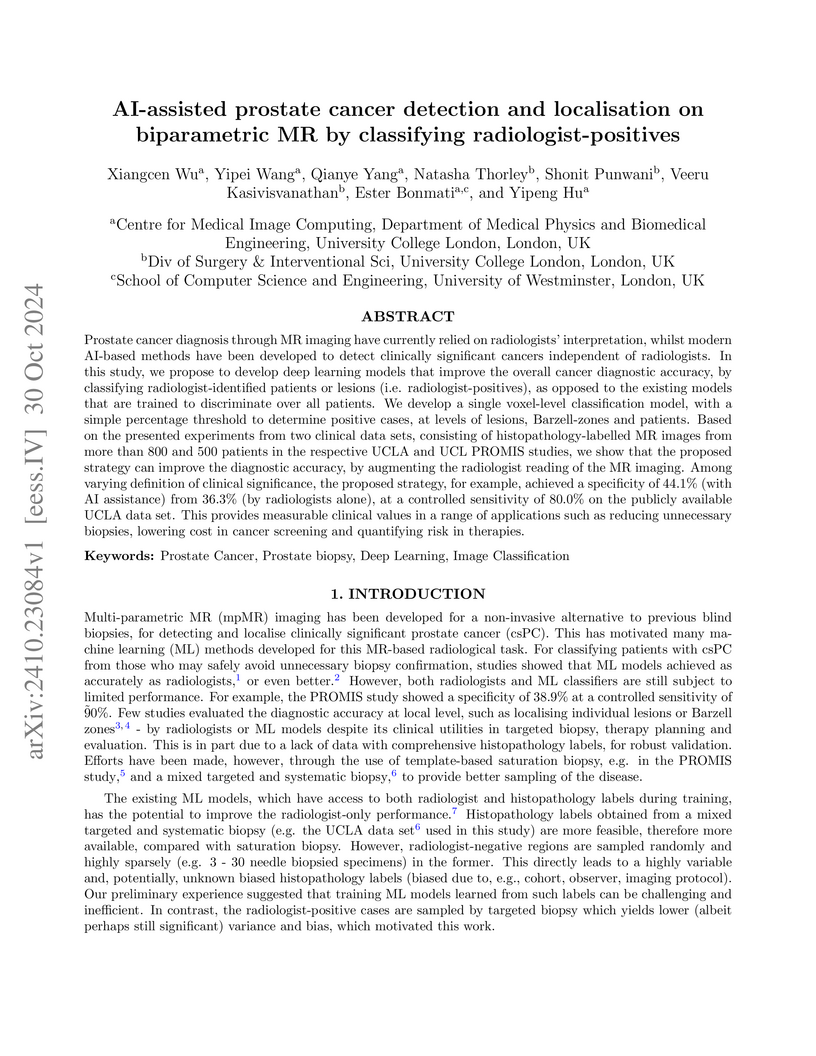

Prostate cancer diagnosis through MR imaging have currently relied on radiologists' interpretation, whilst modern AI-based methods have been developed to detect clinically significant cancers independent of radiologists. In this study, we propose to develop deep learning models that improve the overall cancer diagnostic accuracy, by classifying radiologist-identified patients or lesions (i.e. radiologist-positives), as opposed to the existing models that are trained to discriminate over all patients. We develop a single voxel-level classification model, with a simple percentage threshold to determine positive cases, at levels of lesions, Barzell-zones and patients. Based on the presented experiments from two clinical data sets, consisting of histopathology-labelled MR images from more than 800 and 500 patients in the respective UCLA and UCL PROMIS studies, we show that the proposed strategy can improve the diagnostic accuracy, by augmenting the radiologist reading of the MR imaging. Among varying definition of clinical significance, the proposed strategy, for example, achieved a specificity of 44.1% (with AI assistance) from 36.3% (by radiologists alone), at a controlled sensitivity of 80.0% on the publicly available UCLA data set. This provides measurable clinical values in a range of applications such as reducing unnecessary biopsies, lowering cost in cancer screening and quantifying risk in therapies.

The Modulation Transfer Function (MTF) and the Noise Power Spectrum (NPS)

characterize imaging system sharpness/resolution and noise, respectively. Both

measures are based on linear system theory but are applied routinely to systems

employing non-linear, content-aware image processing. For such systems,

MTFs/NPSs are derived inaccurately from traditional test charts containing

edges, sinusoids, noise or uniform tone signals, which are unrepresentative of

natural scene signals. The dead leaves test chart delivers improved

measurements, but still has limitations when describing the performance of

scene-dependent systems. In this paper, we validate several novel

scene-and-process-dependent MTF (SPD-MTF) and NPS (SPD-NPS) measures that

characterize, either: i) system performance concerning one scene, or ii)

average real-world performance concerning many scenes, or iii) the level of

system scene-dependency. We also derive novel SPD-NPS and SPD-MTF measures

using the dead leaves chart. We demonstrate that all the proposed measures are

robust and preferable for scene-dependent systems than current measures.

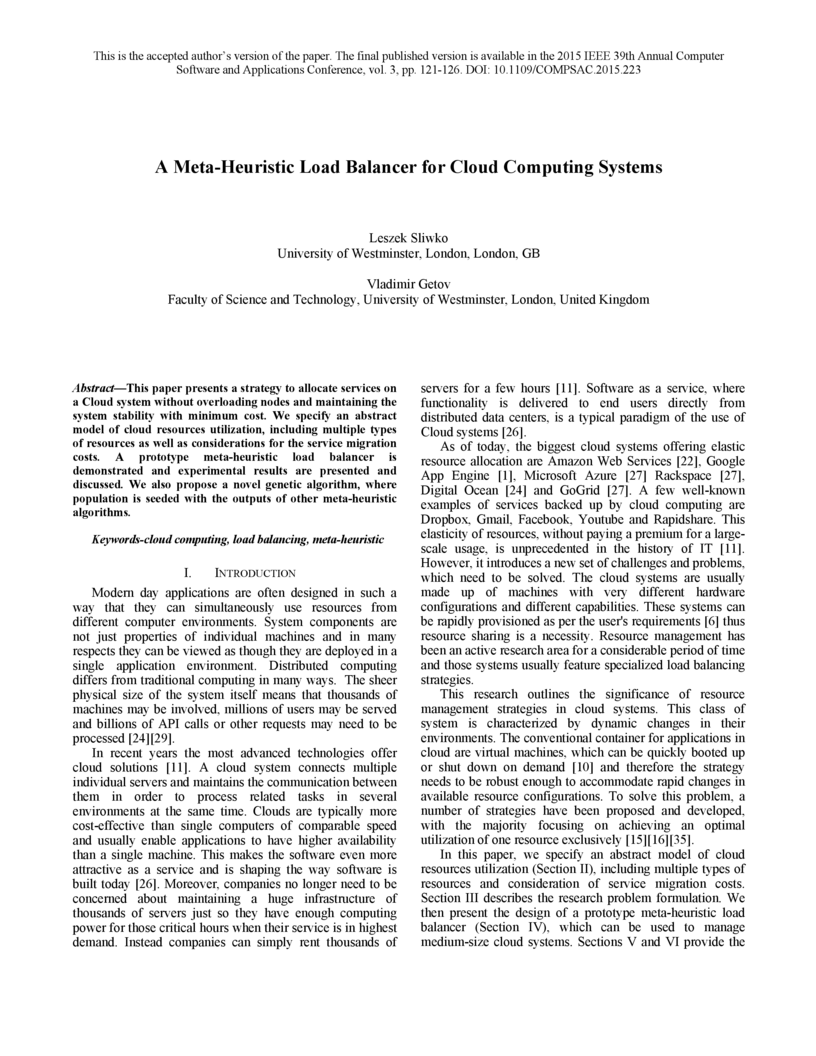

This paper presents a strategy to allocate services on a Cloud system without overloading nodes and maintaining the system stability with minimum cost. We specify an abstract model of cloud resources utilization, including multiple types of resources as well as considerations for the service migration costs. A prototype meta-heuristic load balancer is demonstrated and experimental results are presented and discussed. We also propose a novel genetic algorithm, where population is seeded with the outputs of other meta-heuristic algorithms.

15 Sep 2020

This article presents a new, holistic model for the air traffic management

system, built during the Vista project. The model is an agent-driven simulator,

featuring various stakeholders such as the Network Manager and airlines. It is

a microscopic model based on individual passenger itineraries in Europe during

one day of operations. The article focuses on the technical description of the

model, including data and calibration issues, and presents selected key results

for 2035 and 2050. In particular, we show clear trends regarding emissions,

delay reduction, uncertainty, and increasing airline schedule buffers.

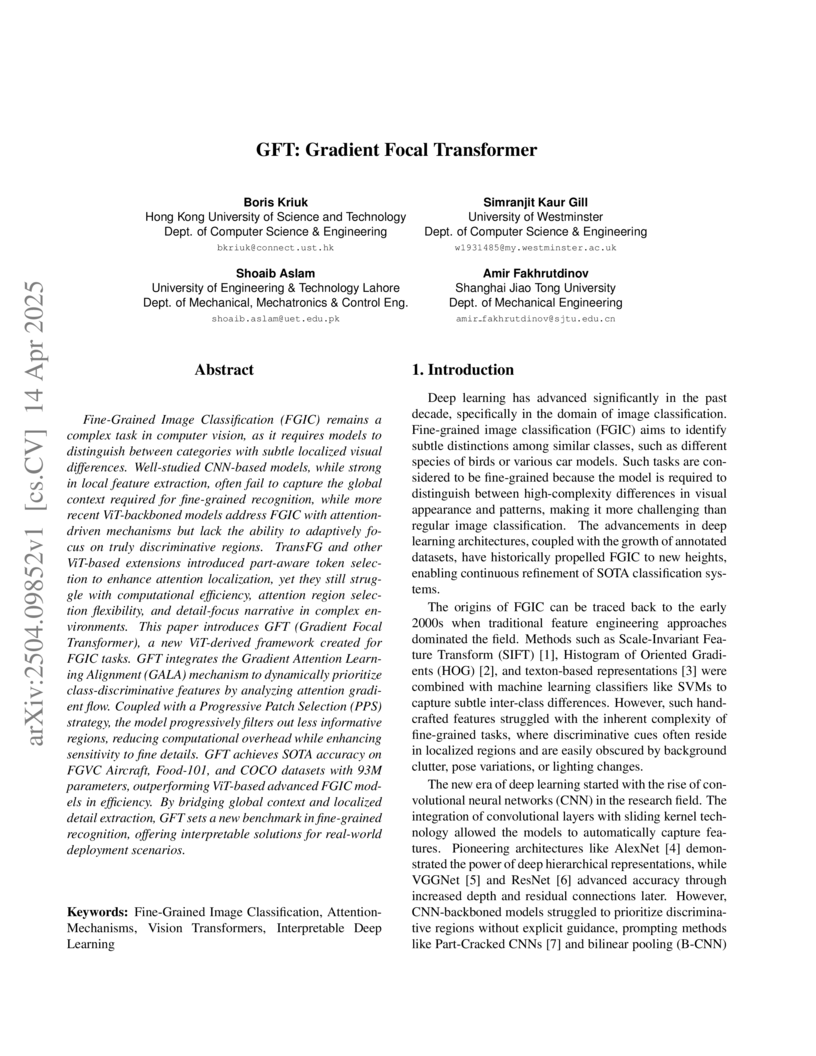

Fine-Grained Image Classification (FGIC) remains a complex task in computer

vision, as it requires models to distinguish between categories with subtle

localized visual differences. Well-studied CNN-based models, while strong in

local feature extraction, often fail to capture the global context required for

fine-grained recognition, while more recent ViT-backboned models address FGIC

with attention-driven mechanisms but lack the ability to adaptively focus on

truly discriminative regions. TransFG and other ViT-based extensions introduced

part-aware token selection to enhance attention localization, yet they still

struggle with computational efficiency, attention region selection flexibility,

and detail-focus narrative in complex environments. This paper introduces GFT

(Gradient Focal Transformer), a new ViT-derived framework created for FGIC

tasks. GFT integrates the Gradient Attention Learning Alignment (GALA)

mechanism to dynamically prioritize class-discriminative features by analyzing

attention gradient flow. Coupled with a Progressive Patch Selection (PPS)

strategy, the model progressively filters out less informative regions,

reducing computational overhead while enhancing sensitivity to fine details.

GFT achieves SOTA accuracy on FGVC Aircraft, Food-101, and COCO datasets with

93M parameters, outperforming ViT-based advanced FGIC models in efficiency. By

bridging global context and localized detail extraction, GFT sets a new

benchmark in fine-grained recognition, offering interpretable solutions for

real-world deployment scenarios.

01 Apr 2025

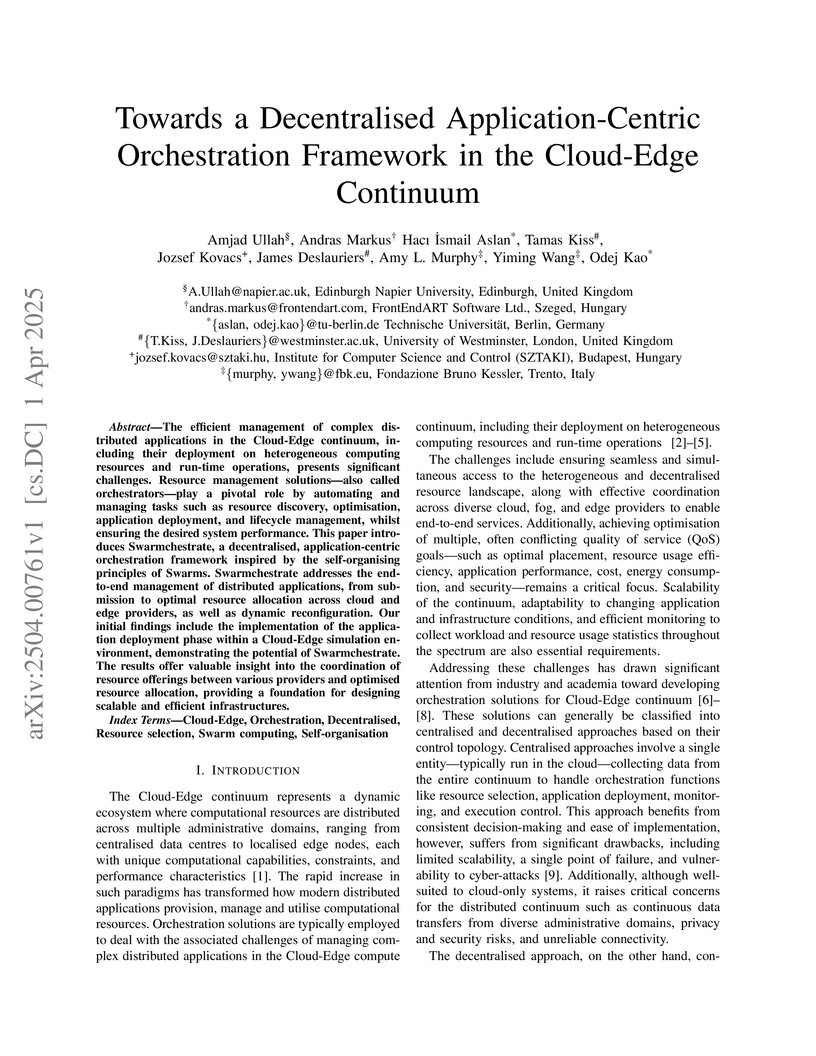

The efficient management of complex distributed applications in the

Cloud-Edge continuum, including their deployment on heterogeneous computing

resources and run-time operations, presents significant challenges. Resource

management solutions -- also called orchestrators -- play a pivotal role by

automating and managing tasks such as resource discovery, optimisation,

application deployment, and lifecycle management, whilst ensuring the desired

system performance. This paper introduces Swarmchestrate, a decentralised,

application-centric orchestration framework inspired by the self-organising

principles of Swarms. Swarmchestrate addresses the end-to-end management of

distributed applications, from submission to optimal resource allocation across

cloud and edge providers, as well as dynamic reconfiguration. Our initial

findings include the implementation of the application deployment phase within

a Cloud-Edge simulation environment, demonstrating the potential of

Swarmchestrate. The results offer valuable insight into the coordination of

resource offerings between various providers and optimised resource allocation,

providing a foundation for designing scalable and efficient infrastructures.

The escalating sophistication and volume of cyber threats in cloud environments necessitate a paradigm shift in strategies. Recognising the need for an automated and precise response to cyber threats, this research explores the application of AI and ML and proposes an AI-powered cyber incident response system for cloud environments. This system, encompassing Network Traffic Classification, Web Intrusion Detection, and post-incident Malware Analysis (built as a Flask application), achieves seamless integration across platforms like Google Cloud and Microsoft Azure. The findings from this research highlight the effectiveness of the Random Forest model, achieving an accuracy of 90% for the Network Traffic Classifier and 96% for the Malware Analysis Dual Model application. Our research highlights the strengths of AI-powered cyber security. The Random Forest model excels at classifying cyber threats, offering an efficient and robust solution. Deep learning models significantly improve accuracy, and their resource demands can be managed using cloud-based TPUs and GPUs. Cloud environments themselves provide a perfect platform for hosting these AI/ML systems, while container technology ensures both efficiency and scalability. These findings demonstrate the contribution of the AI-led system in guaranteeing a robust and scalable cyber incident response solution in the cloud.

Tiny Machine Learning (TinyML) is an upsurging research field that proposes

to democratize the use of Machine Learning and Deep Learning on highly

energy-efficient frugal Microcontroller Units. Considering the general

assumption that TinyML can only run inference, growing interest in the domain

has led to work that makes them reformable, i.e., solutions that permit models

to improve once deployed. This work presents a survey on reformable TinyML

solutions with the proposal of a novel taxonomy. Here, the suitability of each

hierarchical layer for reformability is discussed. Furthermore, we explore the

workflow of TinyML and analyze the identified deployment schemes, available

tools and the scarcely available benchmarking tools. Finally, we discuss how

reformable TinyML can impact a few selected industrial areas and discuss the

challenges and future directions.

This paper presents an effective color normalization method for thin blood

film images of peripheral blood specimens. Thin blood film images can easily be

separated to foreground (cell) and background (plasma) parts. The color of the

plasma region is used to estimate and reduce the differences arising from

different illumination conditions. A second stage normalization based on the

database-gray world algorithm transforms the color of the foreground objects to

match a reference color character. The quantitative experiments demonstrate the

effectiveness of the method and its advantages against two other general

purpose color correction methods: simple gray world and Retinex.

Spatial image quality metrics designed for camera systems generally employ

the Modulation Transfer Function (MTF), the Noise Power Spectrum (NPS), and a

visual contrast detection model. Prior art indicates that scene-dependent

characteristics of non-linear, content-aware image processing are unaccounted

for by MTFs and NPSs measured using traditional methods. We present two novel

metrics: the log Noise Equivalent Quanta (log NEQ) and Visual log NEQ. They

both employ scene-and-process-dependent MTF (SPD-MTF) and NPS (SPD-NPS)

measures, which account for signal-transfer and noise scene-dependency,

respectively. We also investigate implementing contrast detection and

discrimination models that account for scene-dependent visual masking. Also,

three leading camera metrics are revised that use the above scene-dependent

measures. All metrics are validated by examining correlations with the

perceived quality of images produced by simulated camera pipelines. Metric

accuracy improved consistently when the SPD-MTFs and SPD-NPSs were implemented.

The novel metrics outperformed existing metrics of the same genre.

In recent years, there has been an increasing interest in the use of artificial intelligence (AI) and extended reality (XR) in the beauty industry. In this paper, we present an AI-assisted skin care recommendation system integrated into an XR platform. The system uses a convolutional neural network (CNN) to analyse an individual's skin type and recommend personalised skin care products in an immersive and interactive manner. Our methodology involves collecting data from individuals through a questionnaire and conducting skin analysis using a provided facial image in an immersive environment. This data is then used to train the CNN model, which recognises the skin type and existing issues and allows the recommendation engine to suggest personalised skin care products. We evaluate our system in terms of the accuracy of the CNN model, which achieves an average score of 93% in correctly classifying existing skin issues. Being integrated into an XR system, this approach has the potential to significantly enhance the beauty industry by providing immersive and engaging experiences to users, leading to more efficient and consistent skincare routines.

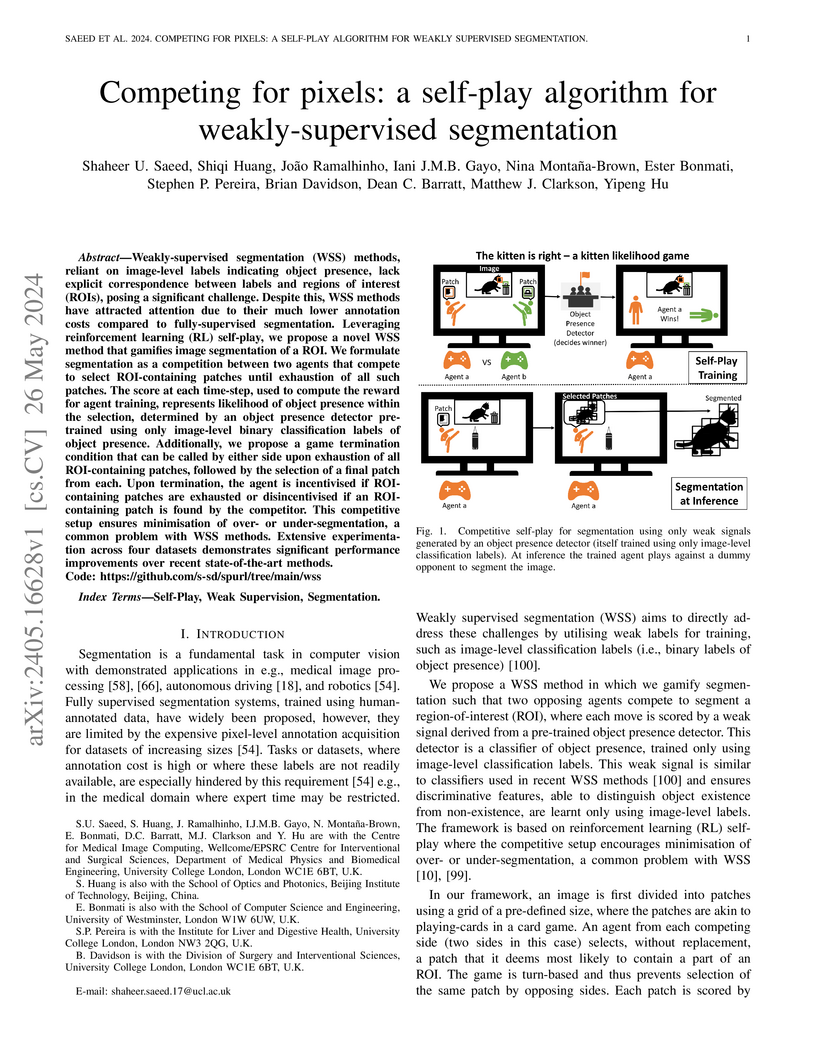

Weakly-supervised segmentation (WSS) methods, reliant on image-level labels

indicating object presence, lack explicit correspondence between labels and

regions of interest (ROIs), posing a significant challenge. Despite this, WSS

methods have attracted attention due to their much lower annotation costs

compared to fully-supervised segmentation. Leveraging reinforcement learning

(RL) self-play, we propose a novel WSS method that gamifies image segmentation

of a ROI. We formulate segmentation as a competition between two agents that

compete to select ROI-containing patches until exhaustion of all such patches.

The score at each time-step, used to compute the reward for agent training,

represents likelihood of object presence within the selection, determined by an

object presence detector pre-trained using only image-level binary

classification labels of object presence. Additionally, we propose a game

termination condition that can be called by either side upon exhaustion of all

ROI-containing patches, followed by the selection of a final patch from each.

Upon termination, the agent is incentivised if ROI-containing patches are

exhausted or disincentivised if an ROI-containing patch is found by the

competitor. This competitive setup ensures minimisation of over- or

under-segmentation, a common problem with WSS methods. Extensive

experimentation across four datasets demonstrates significant performance

improvements over recent state-of-the-art methods. Code:

this https URL

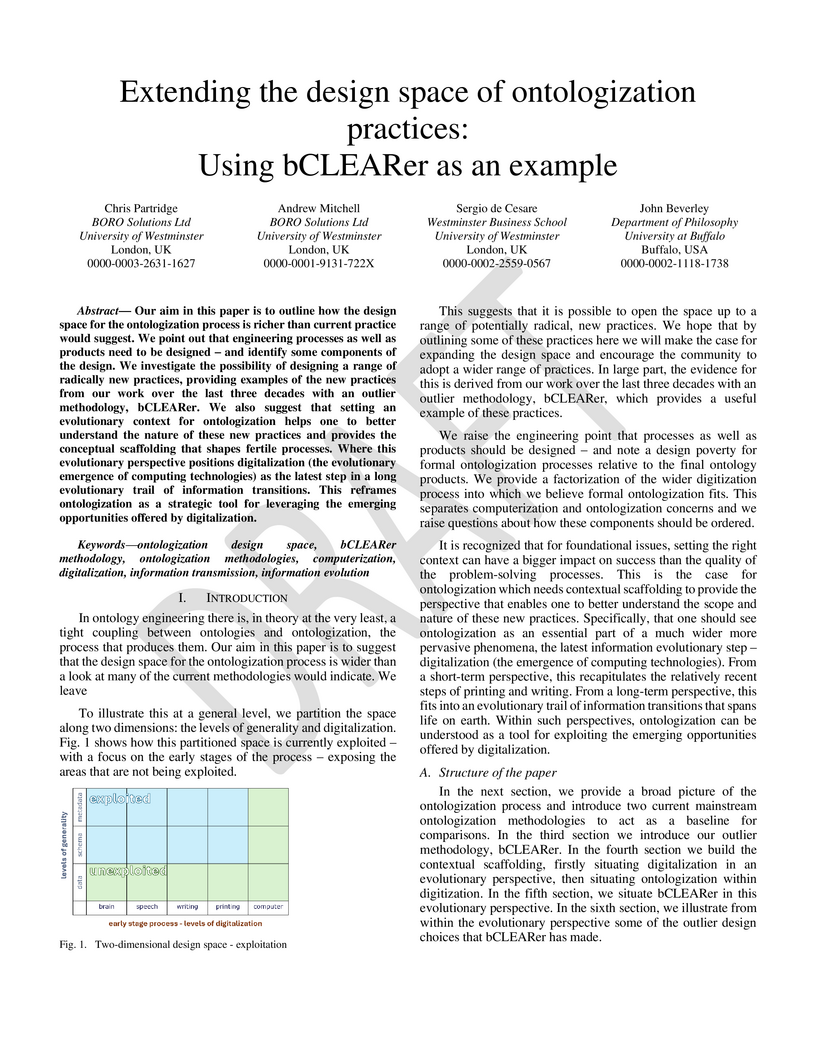

Our aim in this paper is to outline how the design space for the ontologization process is richer than current practice would suggest. We point out that engineering processes as well as products need to be designed - and identify some components of the design. We investigate the possibility of designing a range of radically new practices, providing examples of the new practices from our work over the last three decades with an outlier methodology, bCLEARer. We also suggest that setting an evolutionary context for ontologization helps one to better understand the nature of these new practices and provides the conceptual scaffolding that shapes fertile processes. Where this evolutionary perspective positions digitalization (the evolutionary emergence of computing technologies) as the latest step in a long evolutionary trail of information transitions. This reframes ontologization as a strategic tool for leveraging the emerging opportunities offered by digitalization.

Supervised machine learning-based medical image computing applications necessitate expert label curation, while unlabelled image data might be relatively abundant. Active learning methods aim to prioritise a subset of available image data for expert annotation, for label-efficient model training. We develop a controller neural network that measures priority of images in a sequence of batches, as in batch-mode active learning, for multi-class segmentation tasks. The controller is optimised by rewarding positive task-specific performance gain, within a Markov decision process (MDP) environment that also optimises the task predictor. In this work, the task predictor is a segmentation network. A meta-reinforcement learning algorithm is proposed with multiple MDPs, such that the pre-trained controller can be adapted to a new MDP that contains data from different institutes and/or requires segmentation of different organs or structures within the abdomen. We present experimental results using multiple CT datasets from more than one thousand patients, with segmentation tasks of nine different abdominal organs, to demonstrate the efficacy of the learnt prioritisation controller function and its cross-institute and cross-organ adaptability. We show that the proposed adaptable prioritisation metric yields converging segmentation accuracy for the novel class of kidney, unseen in training, using between approximately 40\% to 60\% of labels otherwise required with other heuristic or random prioritisation metrics. For clinical datasets of limited size, the proposed adaptable prioritisation offers a performance improvement of 22.6\% and 10.2\% in Dice score, for tasks of kidney and liver vessel segmentation, respectively, compared to random prioritisation and alternative active sampling strategies.

31 Aug 2023

Recently, several algorithms have been proposed for decomposing reactive synthesis specifications into independent and simpler sub-specifications. Being inspired by one of the approaches, developed by Antonio Iannopollo (2018), who designed the so-called (DC) algorithm, we present here our solution that takes his ideas further and provides mathematical formalisation of the strategy behind DC. We rigorously define the main notions involved in the algorithm, explain the technique, and demonstrate its application on examples. The core technique of DC is based on the detection of independent variables in linear temporal logic formulae by exploiting the power and efficiency of a model checker. Although the DC algorithm is sound, it is not complete, as its author already pointed out. In this paper, we provide a counterexample that shows this fact and propose relevant changes to adapt the original DC strategy to ensure its correctness. The modification of DC and the detailed proof of its soundness and completeness are the main contributions of this work.

Effective management of construction and demolition waste (C&DW) is crucial for sustainable development, as the industry accounts for 40% of the waste generated globally. The effectiveness of the C&DW management relies on the proper quantification of C&DW to be generated. Despite demolition activities having larger contributions to C&DW generation, extant studies have focused on construction waste. The few extant studies on demolition are often from the regional level perspective and provide no circularity insights. Thus, this study advances demolition quantification via Variable Modelling (VM) with Machine Learning (ML). The demolition dataset of 2280 projects were leveraged for the ML modelling, with XGBoost model emerging as the best (based on the Copeland algorithm), achieving R2 of 0.9977 and a Mean Absolute Error of 5.0910 on the testing dataset. Through the integration of the ML model with Building Information Modelling (BIM), the study developed a system for predicting quantities of recyclable and landfill materials from building demolitions. This provides detailed insights into the circularity of demolition waste and facilitates better planning and management. The SHapley Additive exPlanations (SHAP) method highlighted the implications of the features for demolition waste circularity. The study contributes to empirical studies on pre-demolition auditing at the project level and provides practical tools for implementation. Its findings would benefit stakeholders in driving a circular economy in the industry.

18 Oct 2024

For the first time in history, humankind might conceivably begin to imagine itself as a multi-planetary species. This goal will entail technical innovation in a number of contexts, including that of healthcare. All life on Earth shares an evolution that is coupled to specific environmental conditions, including gravitational and magnetic fields. While the human body may be able to adjust to short term disruption of these fields during space flights, any long term settlement would have to take into consideration the effects that different fields will have on biological systems, within the space of one lifetime, but also across generations. Magnetic fields, for example, influence the growth of stem cells in regenerative processes. Circadian rhythms are profoundly influenced by magnetic fields, a fact that will likely have an effect on mental as well as physical health. Even the brain responds to small perturbations of this field. One possible mechanism for the effects of weak magnetic fields on biological systems has been suggested to be the radical pair mechanism. The radical pair mechanism originated in the context of spin chemistry to describe how magnetic fields influence the yields of chemical reactions. This mechanism was subsequently incorporated into the field of quantum biology. Quantum biology, most generally, is the study of whether non-trivial quantum effects play any meaningful role in biological systems. The radical pair mechanism has been used most consistently in this context to describe the avian compass. Recently, however, a number of studies have investigated other biological contexts in which the radical pair might play a role, from the action of anaesthetics and antidepressants, to microtubule development and the proper function of the circadian clock... (full abstract in the manuscript)

The use of Air traffic management (ATM) simulators for planing and operations can be challenging due to their modelling complexity. This paper presents XALM (eXplainable Active Learning Metamodel), a three-step framework integrating active learning and SHAP (SHapley Additive exPlanations) values into simulation metamodels for supporting ATM decision-making. XALM efficiently uncovers hidden relationships among input and output variables in ATM simulators, those usually of interest in policy analysis. Our experiments show XALM's predictive performance comparable to the XGBoost metamodel with fewer simulations. Additionally, XALM exhibits superior explanatory capabilities compared to non-active learning metamodels.

Using the `Mercury' (flight and passenger) ATM simulator, XALM is applied to a real-world scenario in Paris Charles de Gaulle airport, extending an arrival manager's range and scope by analysing six variables. This case study illustrates XALM's effectiveness in enhancing simulation interpretability and understanding variable interactions. By addressing computational challenges and improving explainability, XALM complements traditional simulation-based analyses.

Lastly, we discuss two practical approaches for reducing the computational burden of the metamodelling further: we introduce a stopping criterion for active learning based on the inherent uncertainty of the metamodel, and we show how the simulations used for the metamodel can be reused across key performance indicators, thus decreasing the overall number of simulations needed.

The objective of augmented reality (AR) is to add digital content to natural images and videos to create an interactive experience between the user and the environment. Scene analysis and object recognition play a crucial role in AR, as they must be performed quickly and accurately. In this study, a new approach is proposed that involves using oriented bounding boxes with a detection and recognition deep network to improve performance and processing time. The approach is evaluated using two datasets: a real image dataset (DOTA dataset) commonly used for computer vision tasks, and a synthetic dataset that simulates different environmental, lighting, and acquisition conditions. The focus of the evaluation is on small objects, which are difficult to detect and recognise. The results indicate that the proposed approach tends to produce better Average Precision and greater accuracy for small objects in most of the tested conditions.

The increasing sophistication of modern cyber threats, particularly file-less

malware relying on living-off-the-land techniques, poses significant challenges

to traditional detection mechanisms. Memory forensics has emerged as a crucial

method for uncovering such threats by analysing dynamic changes in memory. This

research introduces SPECTRE (Snapshot Processing, Emulation, Comparison, and

Threat Reporting Engine), a modular Cyber Incident Response System designed to

enhance threat detection, investigation, and visualization. By adopting

Volatility JSON format as an intermediate output, SPECTRE ensures compatibility

with widely used DFIR tools, minimizing manual data transformations and

enabling seamless integration into established workflows. Its emulation

capabilities safely replicate realistic attack scenarios, such as credential

dumping and malicious process injections, for controlled experimentation and

validation. The anomaly detection module addresses critical attack vectors,

including RunDLL32 abuse and malicious IP detection, while the IP forensics

module enhances threat intelligence by integrating tools like Virus Total and

geolocation APIs. SPECTRE advanced visualization techniques transform raw

memory data into actionable insights, aiding Red, Blue and Purple teams in

refining strategies and responding effectively to threats. Bridging gaps

between memory and network forensics, SPECTRE offers a scalable, robust

platform for advancing threat detection, team training, and forensic research

in combating sophisticated cyber threats.

There are no more papers matching your filters at the moment.