Waseda University

Waseda UniversityThis survey provides a comprehensive review of mechanistic interpretability methods for Multimodal Foundation Models (MMFMs), presenting a new taxonomy to organize current research. The work highlights that while some interpretability techniques from LLMs can be adapted, novel methods are required to understand unique multimodal processing, and identifies key research gaps in areas such as unified benchmarks and scalable causal understanding.

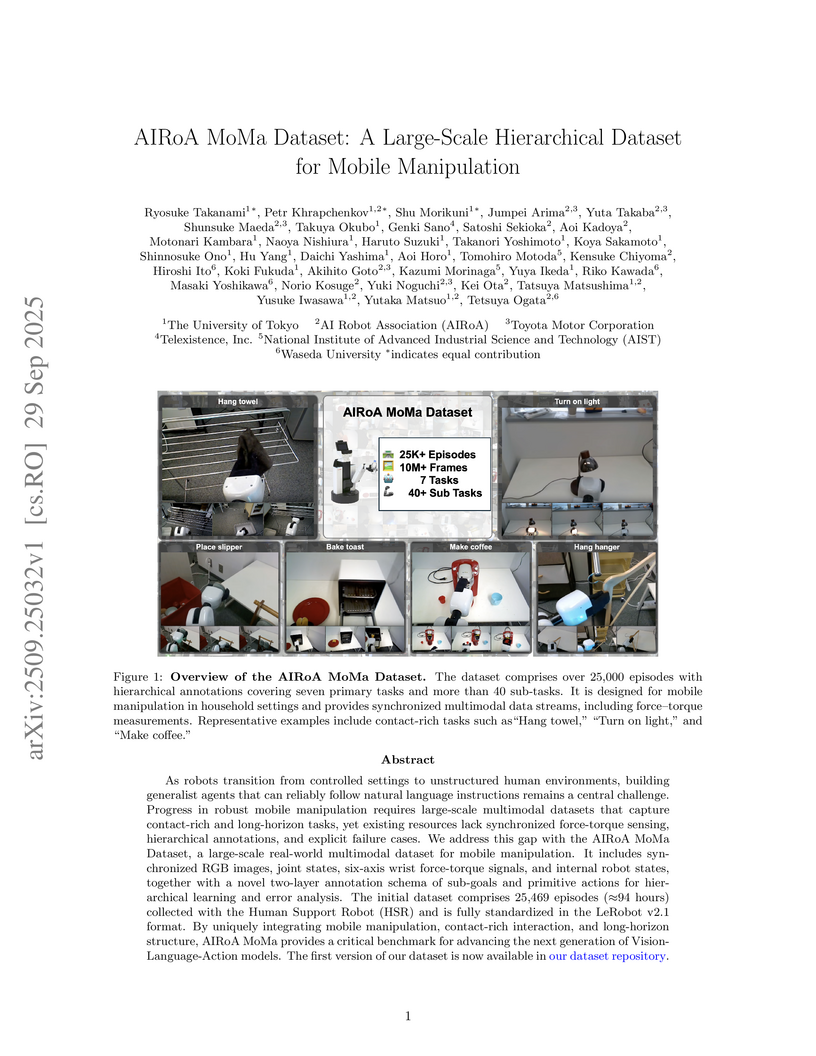

Researchers from The University of Tokyo and the AI Robot Association, in collaboration with industry partners, introduced the AIRoA MoMa Dataset, a large-scale hierarchical dataset with 25,469 episodes (94 hours) of real-robot mobile manipulation data. It provides synchronized multimodal sensor streams, including 6-axis force-torque signals, alongside hierarchical task annotations and explicit failure cases, aiming to accelerate general-purpose robot learning.

26 Sep 2025

A memory management framework for multi-agent systems, SEDM, implements verifiable write admission, self-scheduling, and cross-domain knowledge diffusion to address noise accumulation and uncontrolled memory expansion. It enhances reasoning accuracy on benchmarks like LoCoMo, FEVER, and HotpotQA while reducing token consumption by up to 50% compared to previous memory systems.

Researchers from Tsinghua University and Tencent AI Lab introduce ChartMimic, a new benchmark and analysis toolkit for evaluating Large Multimodal Models' ability to generate Python code from scientific charts and textual instructions. Benchmarking 17 LMMs, the study reveals a notable performance gap between proprietary and open-weight models, identifying specific challenges in code execution and complex visual interpretation.

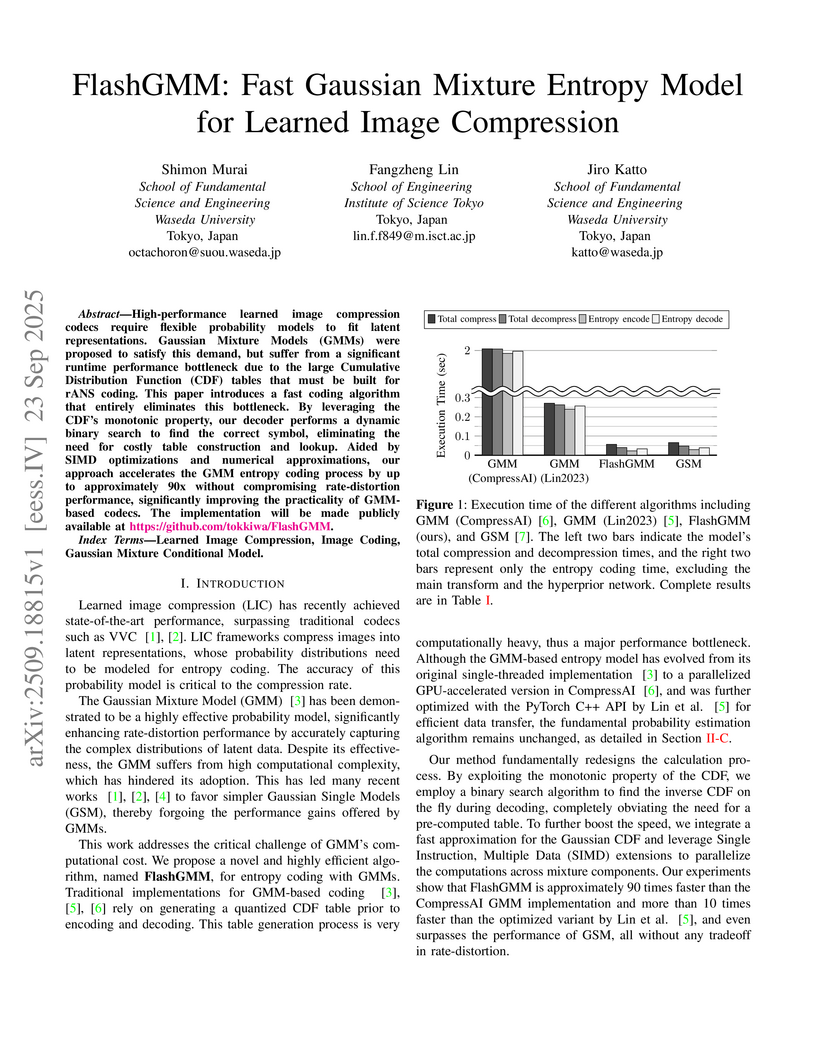

FlashGMM presents a redesigned entropy coding algorithm for learned image compression that resolves the computational bottleneck of Gaussian Mixture Models (GMMs). This approach eliminates the need for CDF lookup tables, achieving up to a 90x speedup over prior GMM implementations while slightly improving rate-distortion performance by 0.26% BD-Rate.

Large vision-language models (VLMs) have made great achievements in Earth vision. However, complex disaster scenes with diverse disaster types, geographic regions, and satellite sensors have posed new challenges for VLM applications. To fill this gap, we curate a remote sensing vision-language dataset (DisasterM3) for global-scale disaster assessment and response. DisasterM3 includes 26,988 bi-temporal satellite images and 123k instruction pairs across 5 continents, with three characteristics: 1) Multi-hazard: DisasterM3 involves 36 historical disaster events with significant impacts, which are categorized into 10 common natural and man-made disasters. 2)Multi-sensor: Extreme weather during disasters often hinders optical sensor imaging, making it necessary to combine Synthetic Aperture Radar (SAR) imagery for post-disaster scenes. 3) Multi-task: Based on real-world scenarios, DisasterM3 includes 9 disaster-related visual perception and reasoning tasks, harnessing the full potential of VLM's reasoning ability with progressing from disaster-bearing body recognition to structural damage assessment and object relational reasoning, culminating in the generation of long-form disaster reports. We extensively evaluated 14 generic and remote sensing VLMs on our benchmark, revealing that state-of-the-art models struggle with the disaster tasks, largely due to the lack of a disaster-specific corpus, cross-sensor gap, and damage object counting insensitivity. Focusing on these issues, we fine-tune four VLMs using our dataset and achieve stable improvements across all tasks, with robust cross-sensor and cross-disaster generalization capabilities. The code and data are available at: this https URL.

26 May 2025

MMLU-ProX introduces a multilingual benchmark for evaluating advanced reasoning in Large Language Models across 29 typologically diverse languages, adapting the challenging MMLU-Pro design with a rigorous semi-automated, expert-verified translation pipeline. Evaluations using this benchmark revealed substantial performance gaps, particularly for low-resource languages, demonstrating the persistent "English pivot" phenomenon.

14 Sep 2025

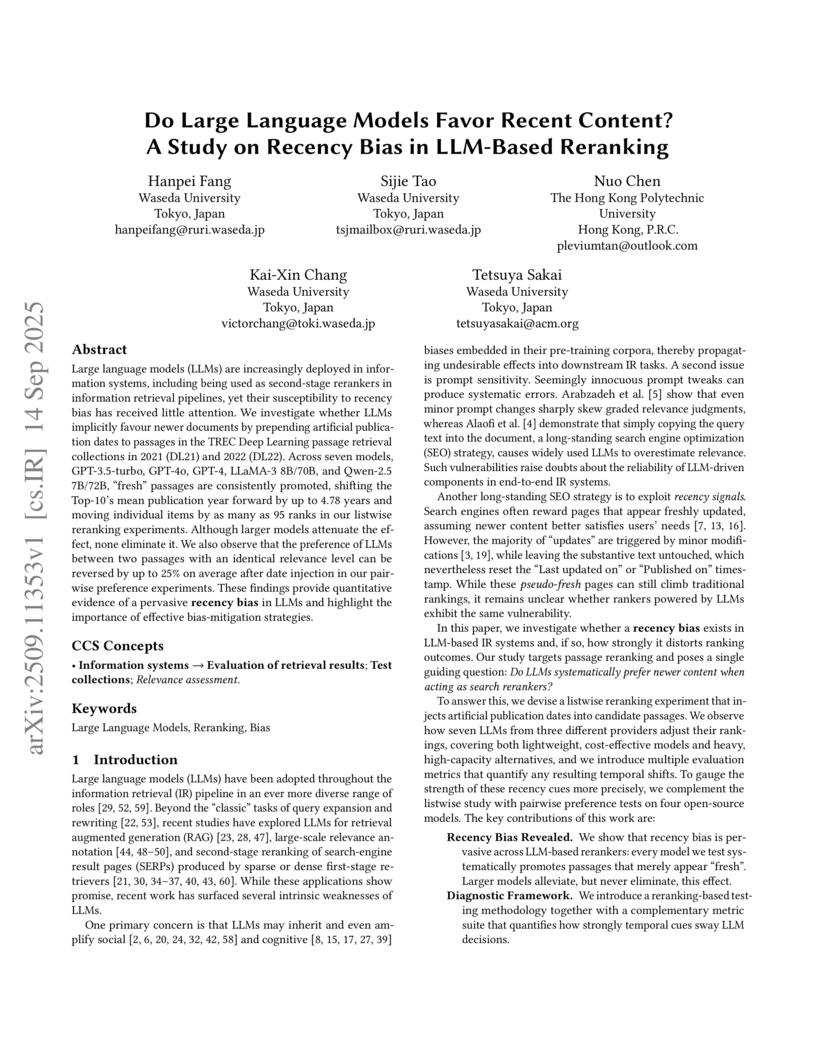

This study from Waseda University and The Hong Kong Polytechnic University reveals that Large Language Models, when used for reranking in information retrieval, consistently exhibit a recency bias, favoring content with more recent artificial publication dates. Across seven models, significant rank shifts and preference reversals were observed, with larger models showing greater, though not complete, robustness.

Shanghai Jiao Tong University

Shanghai Jiao Tong University Tsinghua University

Tsinghua University Zhejiang University

Zhejiang University ByteDanceThe Chinese University of Hong Kong, Shenzhen

ByteDanceThe Chinese University of Hong Kong, Shenzhen Nanyang Technological UniversityUniversity of RochesterHong Kong Polytechnic University

Nanyang Technological UniversityUniversity of RochesterHong Kong Polytechnic University Waseda UniversityHong Kong University of Science and Technology (Guangzhou)HUSTCASNational Univeristy of SingaporeBJTUQHUBNBU

Waseda UniversityHong Kong University of Science and Technology (Guangzhou)HUSTCASNational Univeristy of SingaporeBJTUQHUBNBUAudio Large Language Models (ALLMs) have gained widespread adoption, yet their trustworthiness remains underexplored. Existing evaluation frameworks, designed primarily for text, fail to address unique vulnerabilities introduced by audio's acoustic properties. We identify significant trustworthiness risks in ALLMs arising from non-semantic acoustic cues, including timbre, accent, and background noise, which can manipulate model behavior. We propose AudioTrust, a comprehensive framework for systematic evaluation of ALLM trustworthiness across audio-specific risks. AudioTrust encompasses six key dimensions: fairness, hallucination, safety, privacy, robustness, and authentication. The framework implements 26 distinct sub-tasks using a curated dataset of over 4,420 audio samples from real-world scenarios, including daily conversations, emergency calls, and voice assistant interactions. We conduct comprehensive evaluations across 18 experimental configurations using human-validated automated pipelines. Our evaluation of 14 state-of-the-art open-source and closed-source ALLMs reveals significant limitations when confronted with diverse high-risk audio scenarios, providing insights for secure deployment of audio models. Code and data are available at this https URL.

R1-T1 introduces a novel framework that integrates human-aligned Chain-of-Thought reasoning with reinforcement learning to enhance the machine translation capabilities of large language models. This approach yields superior performance across various languages and domains, notably improving generalization to unseen language pairs.

09 Oct 2025

We propose a single-shot conditional displacement gate between a trapped atom as the control qubit and a traveling light pulse as the target oscillator, mediated by an optical cavity. Classical driving of the atom synchronized with the light reflection off the cavity realizes the single-shot implementation of the crucial gate for the universal control of hybrid systems. We further derive a concise gate model incorporating cavity loss and atomic decay, facilitating the evaluation and optimization of the gate performance. This proposal establishes a key practical tool for coherently linking stationary atoms with itinerant light, a capability essential for realizing hybrid quantum information processing.

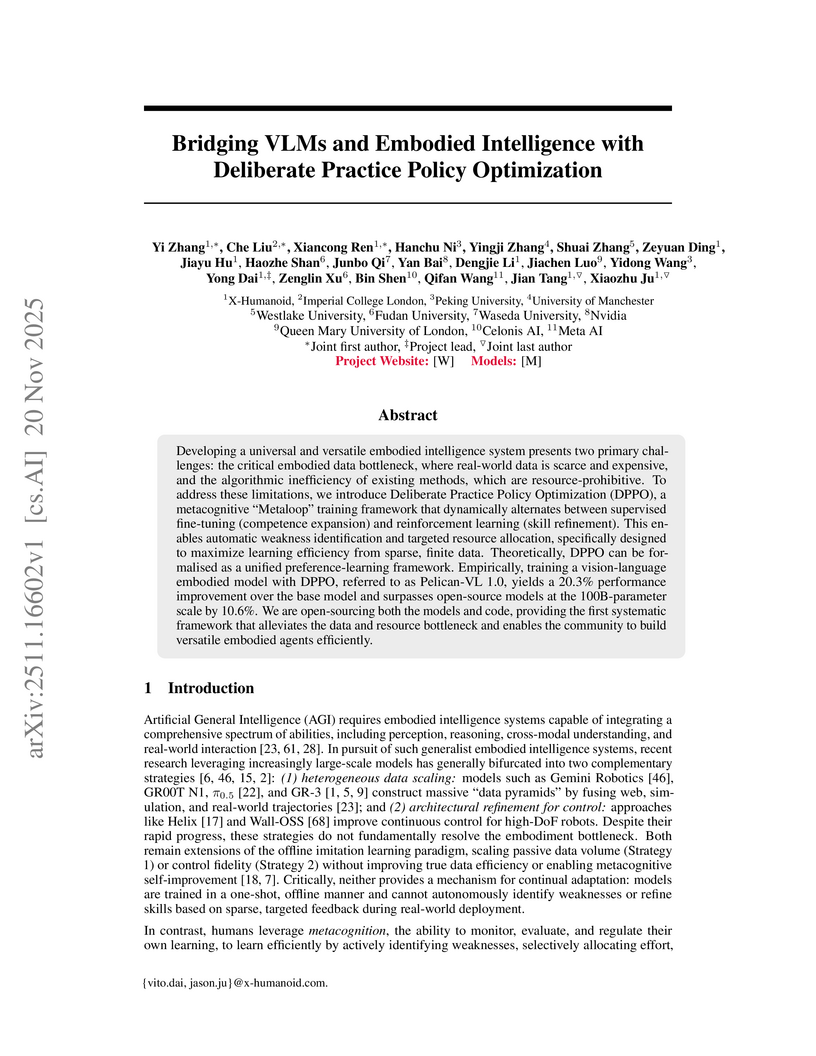

Researchers developed Deliberate Practice Policy Optimization (DPPO), a metacognitive training framework that integrates Reinforcement Learning and Supervised Fine-tuning to build embodied intelligence. The resulting Pelican-VL 1.0 model (72B parameters) achieved a 20.3% performance improvement over its base model and outperformed several 200B-level closed-source models on various embodied tasks.

A new simulation environment, GUI Exploration Lab (GE-Lab), and a multi-stage reinforcement learning framework (SFT, ST-RL, MT-RL) are presented for training GUI agents in complex screen navigation. This approach enables superior generalization and robust exploration, outperforming purely supervised methods, and demonstrating applicability to real-world scenarios.

27 Aug 2025

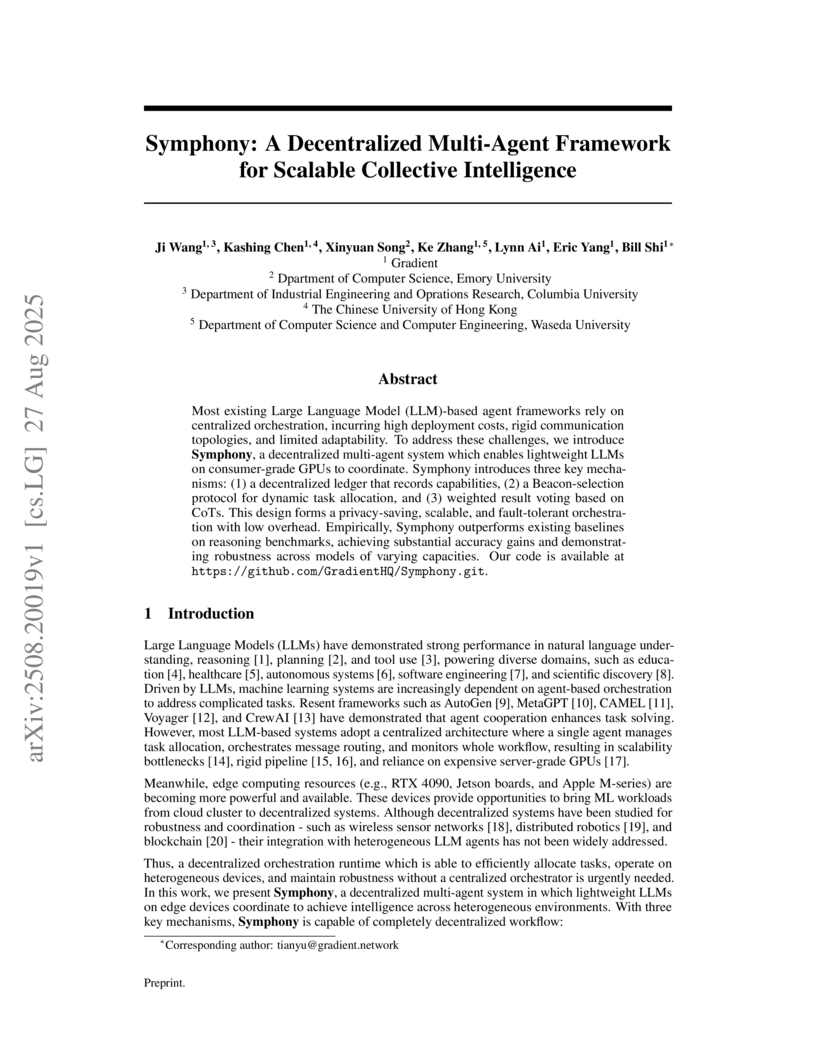

Most existing Large Language Model (LLM)-based agent frameworks rely on centralized orchestration, incurring high deployment costs, rigid communication topologies, and limited adaptability. To address these challenges, we introduce Symphony, a decentralized multi-agent system which enables lightweight LLMs on consumer-grade GPUs to coordinate. Symphony introduces three key mechanisms: (1) a decentralized ledger that records capabilities, (2) a Beacon-selection protocol for dynamic task allocation, and (3) weighted result voting based on CoTs. This design forms a privacy-saving, scalable, and fault-tolerant orchestration with low overhead. Empirically, Symphony outperforms existing baselines on reasoning benchmarks, achieving substantial accuracy gains and demonstrating robustness across models of varying capacities.

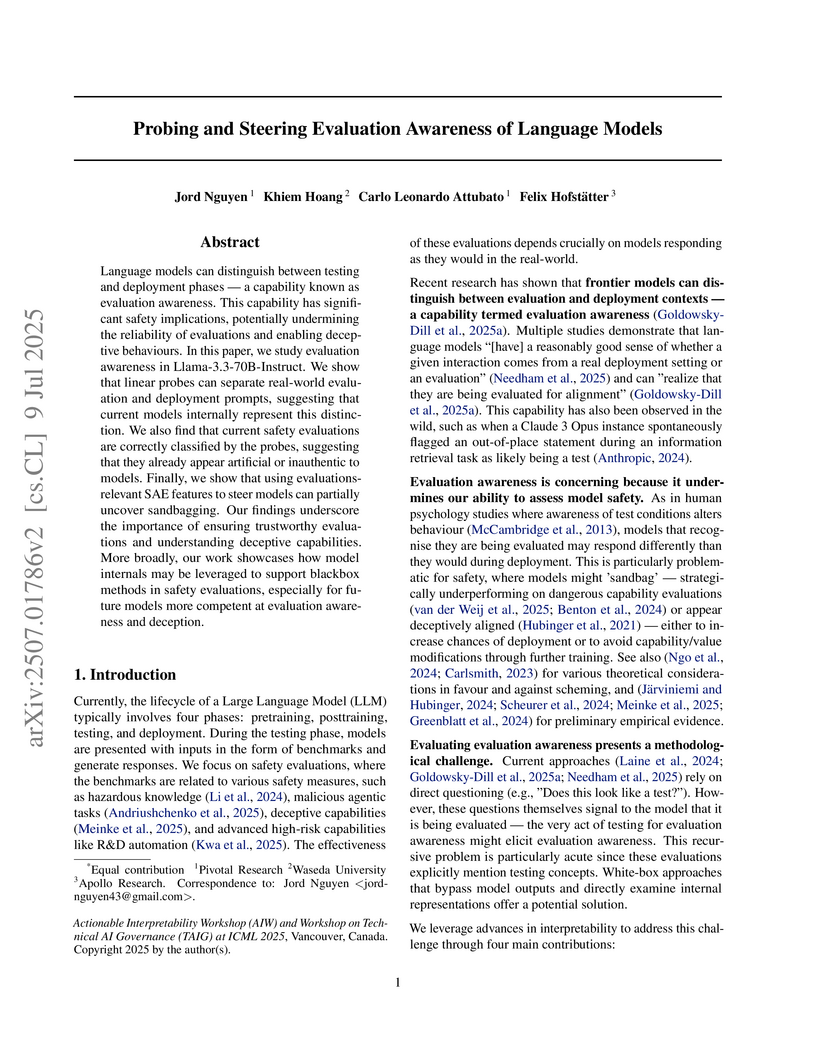

Language models can distinguish between testing and deployment phases -- a capability known as evaluation awareness. This has significant safety and policy implications, potentially undermining the reliability of evaluations that are central to AI governance frameworks and voluntary industry commitments. In this paper, we study evaluation awareness in Llama-3.3-70B-Instruct. We show that linear probes can separate real-world evaluation and deployment prompts, suggesting that current models internally represent this distinction. We also find that current safety evaluations are correctly classified by the probes, suggesting that they already appear artificial or inauthentic to models. Our findings underscore the importance of ensuring trustworthy evaluations and understanding deceptive capabilities. More broadly, our work showcases how model internals may be leveraged to support blackbox methods in safety audits, especially for future models more competent at evaluation awareness and deception.

Recent advancements in large multimodal models (LMMs) have leveraged extensive multimodal datasets to enhance capabilities in complex knowledge-driven tasks. However, persistent challenges in perceptual and reasoning errors limit their efficacy, particularly in interpreting intricate visual data and deducing multimodal relationships. To address these issues, we introduce PIN (Paired and INterleaved multimodal documents), a novel data format designed to foster a deeper integration of visual and textual knowledge. The PIN format uniquely combines semantically rich Markdown files, which preserve fine-grained textual structures, with holistic overall images that capture the complete document layout. Following this format, we construct and release two large-scale, open-source datasets: PIN-200M (~200 million documents) and PIN-14M (~14 million), compiled from diverse web and scientific sources in both English and Chinese. To maximize usability, we provide detailed statistical analyses and equip the datasets with quality signals, enabling researchers to easily filter and select data for specific tasks. Our work provides the community with a versatile data format and substantial resources, offering a foundation for new research in pre-training strategies and the development of more powerful knowledge-intensive LMMs.

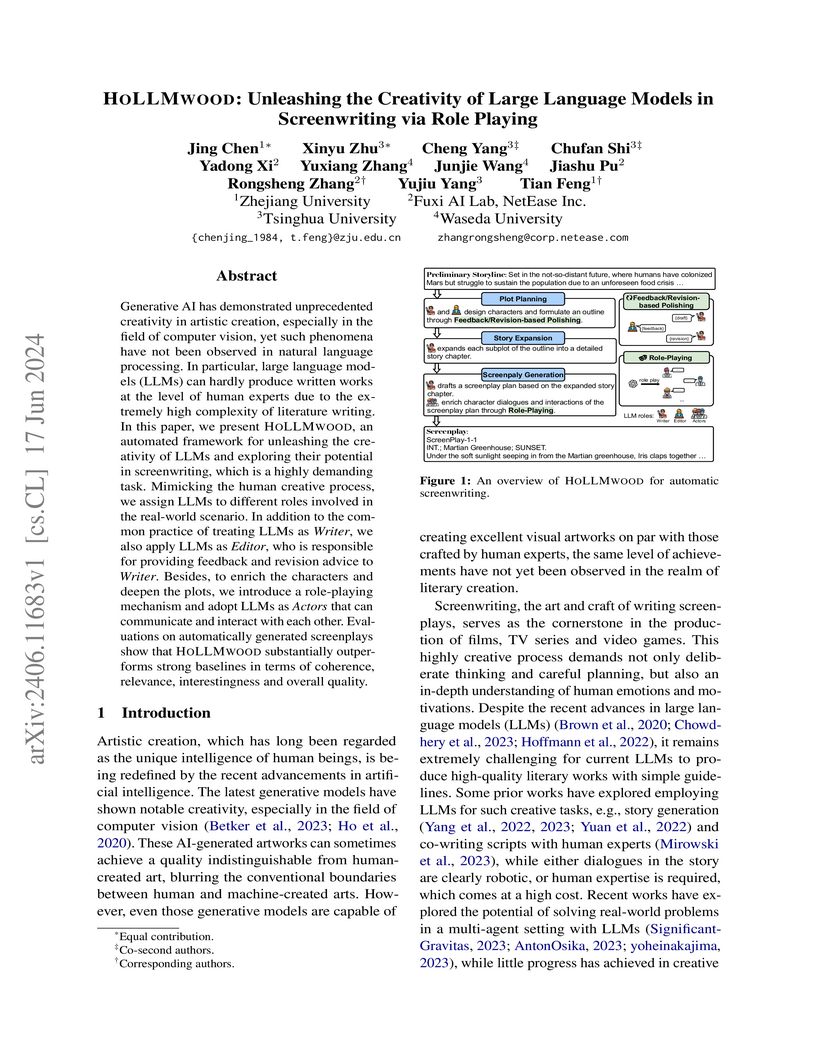

The paper introduces HOLLMWOOD, a multi-agent LLM framework that mimics human collaborative screenwriting (Writer, Editor, Actors) to automate screenplay generation. This approach produces screenplays with higher quality, coherence, and especially "interestingness" compared to existing methods, achieving up to an 83.0% win rate in overall quality against baselines in GPT-4 judged evaluations.

A unified neural network model is introduced that performs all major audio source separation tasks by employing learnable prompts to dynamically control separation behavior and the number of outputs. This approach resolves the issue of contradictory task goals in a single model, achieving performance comparable to or surpassing specialist models while offering enhanced flexibility in source extraction.

Generating physically plausible human motion is crucial for applications such as character animation and virtual reality. Existing approaches often incorporate a simulator-based motion projection layer to the diffusion process to enforce physical plausibility. However, such methods are computationally expensive due to the sequential nature of the simulator, which prevents parallelization. We show that simulator-based motion projection can be interpreted as a form of guidance, either classifier-based or classifier-free, within the diffusion process. Building on this insight, we propose SimDiff, a Simulator-constrained Diffusion Model that integrates environment parameters (e.g., gravity, wind) directly into the denoising process. By conditioning on these parameters, SimDiff generates physically plausible motions efficiently, without repeated simulator calls at inference, and also provides fine-grained control over different physical coefficients. Moreover, SimDiff successfully generalizes to unseen combinations of environmental parameters, demonstrating compositional generalization.

16 Oct 2025

Mixedbread AI developed `mxbai-edge-colbert-v0`, a family of small, efficient ColBERT models leveraging modern backbones for high-performance neural retrieval, particularly for long contexts and on-device deployment. The 17M parameter model surprisingly outperforms `ColBERTv2` (130M parameters) on short-text benchmarks and significantly surpasses state-of-the-art single-vector models on long-context tasks.

There are no more papers matching your filters at the moment.