Wrocław University of Science and Technology

The next generation of gaseous photon detectors is requested to overcome the

limitations of the available technology, in terms of resolution and robustness.

The quest for a novel photocathode, sensitive in the far vacuum ultra violet

wavelength range and more robust than present ones, motivated an R&D programme

to explore nanodiamond based photoconverters, which represent the most

promising alternative to cesium iodine. A procedure for producing the novel

photocathodes has been defined and applied on THGEMs samples. Systematic

measurements of the photo emission in different Ar/CH4 and Ar/CO2 gas mixtures

with various types of nanodiamond powders have been performed. A comparative

study of the response of THGEMs before and after coating demonstrated their

full compatibility with the novel photocathodes.

FactSelfCheck, developed by researchers at Wrocław University of Science and Technology and University of Technology Sydney, introduces a black-box, sampling-based method for detecting factual hallucinations in LLM outputs at a fine-grained, fact-level, which substantially improves the effectiveness of downstream hallucination correction compared to sentence-level detection.

Text-to-Speech (TTS) technology offers notable benefits, such as providing a voice for individuals with speech impairments, but it also facilitates the creation of audio deepfakes and spoofing attacks. AI-based detection methods can help mitigate these risks; however, the performance of such models is inherently dependent on the quality and diversity of their training data. Presently, the available datasets are heavily skewed towards English and Chinese audio, which limits the global applicability of these anti-spoofing systems.

To address this limitation, this paper presents the Multi-Language Audio Anti-Spoofing Dataset (MLAAD), version 8, created using 119 TTS models, comprising 58 different architectures, to generate 570.3 hours of synthetic voice in 40 different languages. We train and evaluate three state-of-the-art deepfake detection models with MLAAD and observe that it demonstrates superior performance over comparable datasets like InTheWild and Fake-Or-Real when used as a training resource. Moreover, compared to the renowned ASVspoof 2019 dataset, MLAAD proves to be a complementary resource. In tests across eight datasets, MLAAD and ASVspoof 2019 alternately outperformed each other, each excelling on four datasets. By publishing MLAAD and making a trained model accessible via an interactive webserver, we aim to democratize anti-spoofing technology, making it accessible beyond the realm of specialists, and contributing to global efforts against audio spoofing and deepfakes.

04 Sep 2025

We propose a practical benchmarking suite inspired by physical dynamics to challenge both quantum and classical computers. Using a parallel in time encoding, we convert the real-time propagator of an n-qubit, possibly non-Hermitian, Hamiltonian into a quadratic-unconstrained binary optimisation (QUBO) problem. The resulting QUBO instances are executed on D-Wave quantum annealers as well as using two classical solvers, Simulated Annealing and VeloxQ, a state-of-the-art classical heuristic solver. This enables a direct comparison. To stress-test the workflow, we use eight representative models, divided into three groups: (i)~single-qubit rotations, (ii)~multi-qubit entangling gates (Bell, GHZ, cluster), and (iii)~PT-symmetric, parity-conserving and other non-Hermitian generators. Across this diverse suite we track the success probability and time to solution, which are well established measures in the realm of heuristic combinatorial optimisation. Our results show that D-Wave Advantage2 consistently surpasses its predecessor, while VeloxQ presently retains the overall lead, reflecting the maturity of classical optimisers. We highlight the rapid progress of analog quantum optimisation, and suggest a clear trajectory toward quantum competitive dynamics simulation, by establishing the parallel in time QUBO framework as a versatile test-bed for tracking and evaluating that progress.

The OBSR benchmark provides a standardized, multi-task, and modality-agnostic framework for evaluating geospatial embedders and GeoAI models across 7 diverse datasets and 5 downstream tasks. Initial evaluations highlight the impact of spatial resolution on model performance and the current limitations of OpenStreetMap-based embeddings for dynamic trajectory predictions.

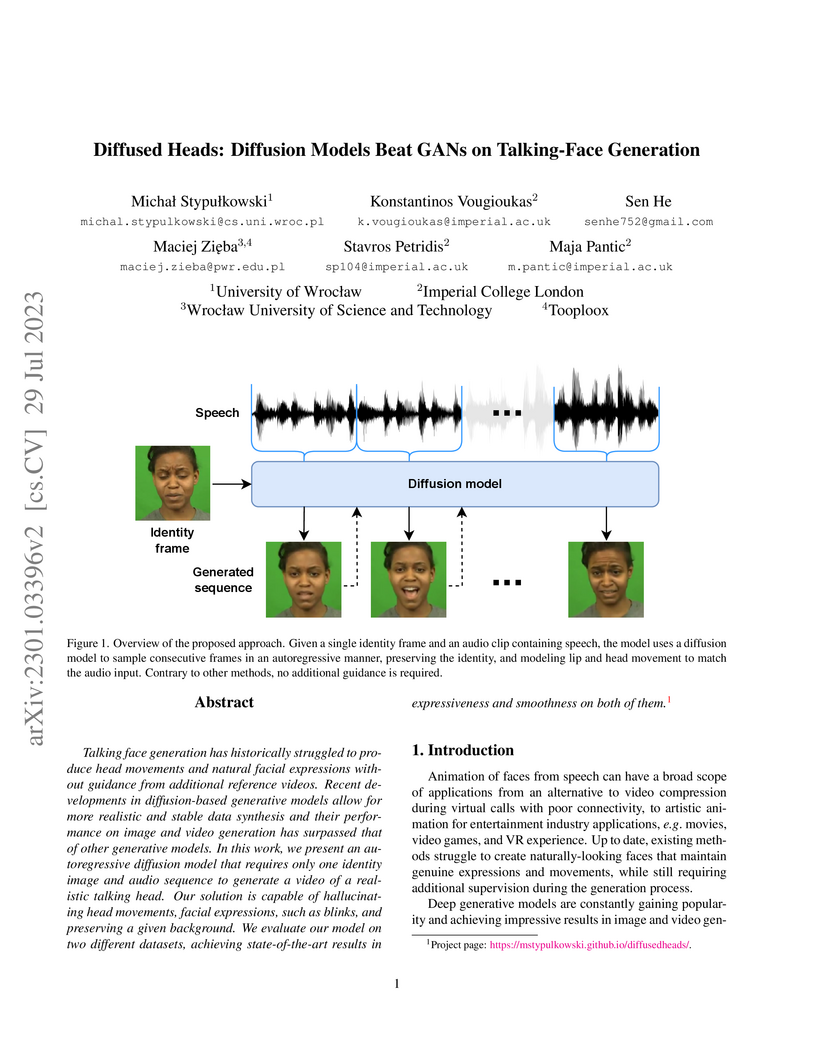

Talking face generation has historically struggled to produce head movements and natural facial expressions without guidance from additional reference videos. Recent developments in diffusion-based generative models allow for more realistic and stable data synthesis and their performance on image and video generation has surpassed that of other generative models. In this work, we present an autoregressive diffusion model that requires only one identity image and audio sequence to generate a video of a realistic talking human head. Our solution is capable of hallucinating head movements, facial expressions, such as blinks, and preserving a given background. We evaluate our model on two different datasets, achieving state-of-the-art results on both of them.

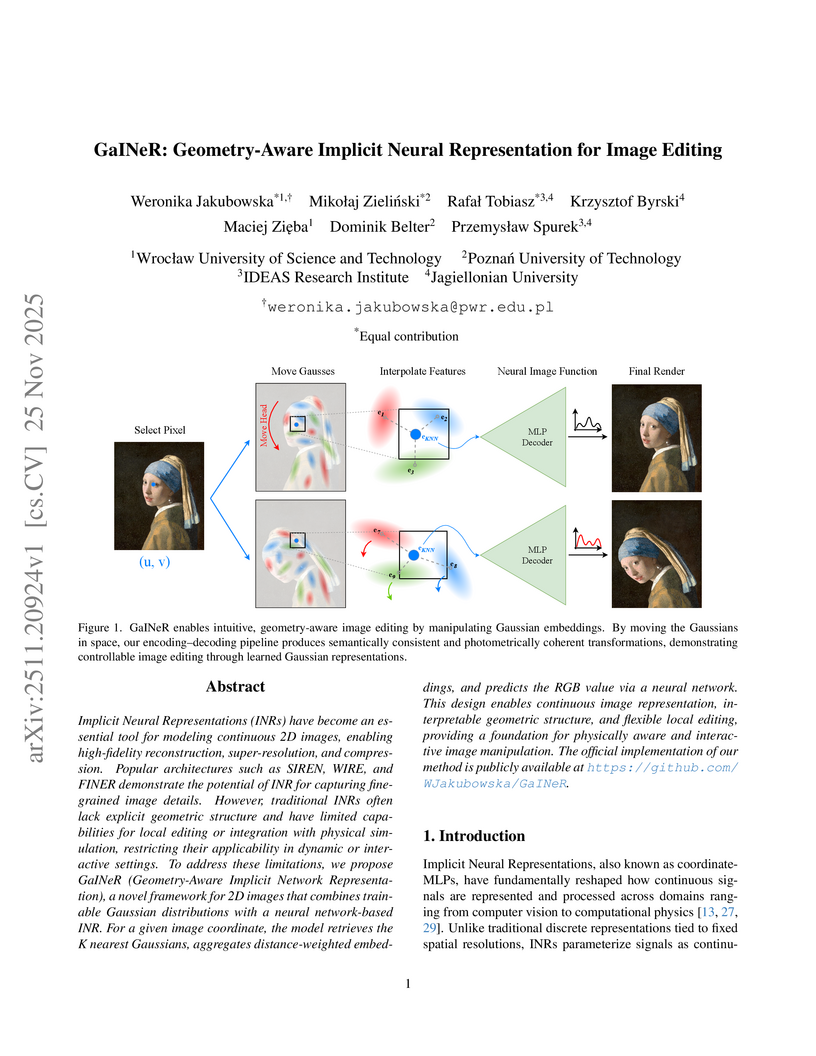

GaINeR introduces a framework that merges continuous implicit neural representations with trainable Gaussian embeddings for 2D images. This approach facilitates geometry-aware local editing and robust physical simulation integration without introducing visual artifacts, achieving a PSNR of 77.09 on the Kodak dataset.

06 Aug 2025

Since the majority of audio DeepFake (DF) detection methods are trained on English-centric datasets, their applicability to non-English languages remains largely unexplored. In this work, we present a benchmark for the multilingual audio DF detection challenge by evaluating various adaptation strategies. Our experiments focus on analyzing models trained on English benchmark datasets, as well as intra-linguistic (same-language) and cross-linguistic adaptation approaches. Our results indicate considerable variations in detection efficacy, highlighting the difficulties of multilingual settings. We show that limiting the dataset to English negatively impacts the efficacy, while stressing the importance of the data in the target language.

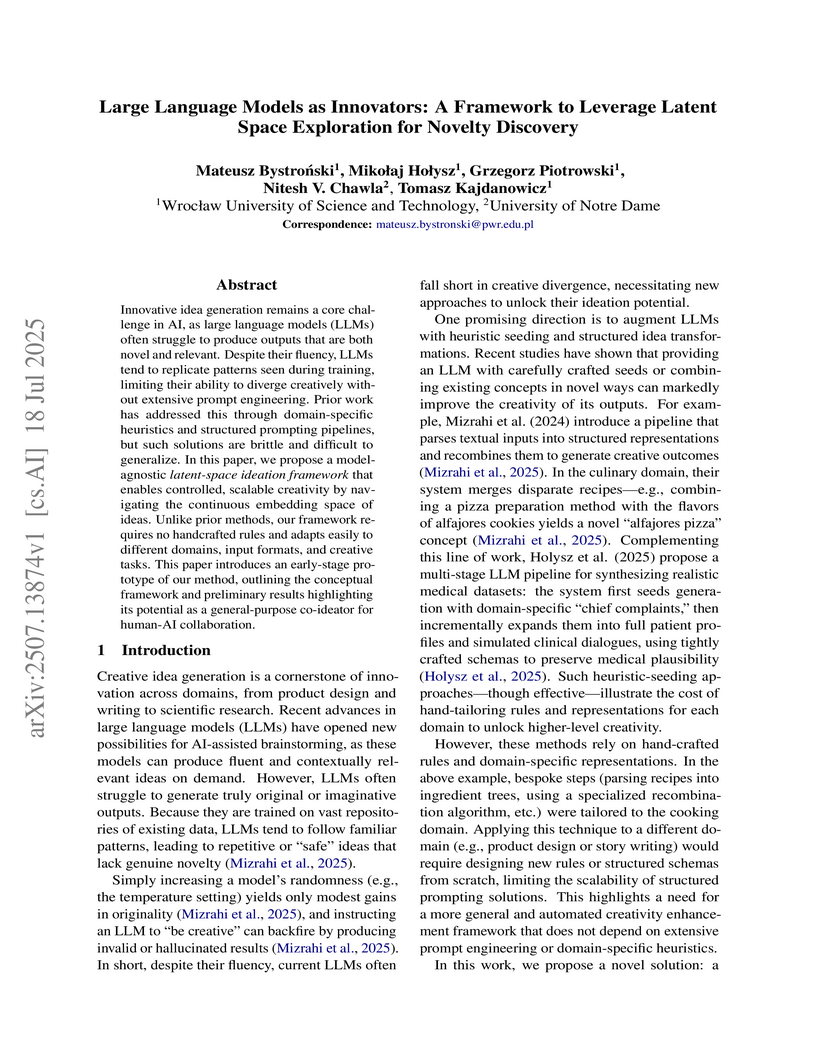

Researchers from Wrocław University of Science and Technology and the University of Notre Dame developed a model-agnostic latent-space ideation framework that enables Large Language Models to generate more novel and relevant ideas. The framework achieves modest improvements in originality and fluency by exploring the continuous conceptual space of ideas through operations like interpolation, rather than relying solely on explicit textual prompts.

26 May 2023

This work explores the use of gradient boosting in the context of classification. Four popular implementations, including original GBM algorithm and selected state-of-the-art gradient boosting frameworks (i.e. XGBoost, LightGBM and CatBoost), have been thoroughly compared on several publicly available real-world datasets of sufficient diversity. In the study, special emphasis was placed on hyperparameter optimization, specifically comparing two tuning strategies, i.e. randomized search and Bayesian optimization using the Tree-stuctured Parzen Estimator. The performance of considered methods was investigated in terms of common classification accuracy metrics as well as runtime and tuning time. Additionally, obtained results have been validated using appropriate statistical testing. An attempt was made to indicate a gradient boosting variant showing the right balance between effectiveness, reliability and ease of use.

We extend the neural basis expansion analysis (NBEATS) to incorporate

exogenous factors. The resulting method, called NBEATSx, improves on a well

performing deep learning model, extending its capabilities by including

exogenous variables and allowing it to integrate multiple sources of useful

information. To showcase the utility of the NBEATSx model, we conduct a

comprehensive study of its application to electricity price forecasting (EPF)

tasks across a broad range of years and markets. We observe state-of-the-art

performance, significantly improving the forecast accuracy by nearly 20% over

the original NBEATS model, and by up to 5% over other well established

statistical and machine learning methods specialized for these tasks.

Additionally, the proposed neural network has an interpretable configuration

that can structurally decompose time series, visualizing the relative impact of

trend and seasonal components and revealing the modeled processes' interactions

with exogenous factors. To assist related work we made the code available in

https://github.com/cchallu/nbeatsx.

We show how replay attacks undermine audio deepfake detection: By playing and

re-recording deepfake audio through various speakers and microphones, we make

spoofed samples appear authentic to the detection model. To study this

phenomenon in more detail, we introduce ReplayDF, a dataset of recordings

derived from M-AILABS and MLAAD, featuring 109 speaker-microphone combinations

across six languages and four TTS models. It includes diverse acoustic

conditions, some highly challenging for detection. Our analysis of six

open-source detection models across five datasets reveals significant

vulnerability, with the top-performing W2V2-AASIST model's Equal Error Rate

(EER) surging from 4.7% to 18.2%. Even with adaptive Room Impulse Response

(RIR) retraining, performance remains compromised with an 11.0% EER. We release

ReplayDF for non-commercial research use.

With a recent influx of voice generation methods, the threat introduced by audio DeepFake (DF) is ever-increasing. Several different detection methods have been presented as a countermeasure. Many methods are based on so-called front-ends, which, by transforming the raw audio, emphasize features crucial for assessing the genuineness of the audio sample. Our contribution contains investigating the influence of the state-of-the-art Whisper automatic speech recognition model as a DF detection front-end. We compare various combinations of Whisper and well-established front-ends by training 3 detection models (LCNN, SpecRNet, and MesoNet) on a widely used ASVspoof 2021 DF dataset and later evaluating them on the DF In-The-Wild dataset. We show that using Whisper-based features improves the detection for each model and outperforms recent results on the In-The-Wild dataset by reducing Equal Error Rate by 21%.

Recent advances have shown that optimizing prompts for Large Language Models (LLMs) can significantly improve task performance, yet many optimization techniques rely on heuristics or manual exploration. We present LatentPrompt, a model-agnostic framework for prompt optimization that leverages latent semantic space to automatically generate, evaluate, and refine candidate prompts without requiring hand-crafted rules. Beginning with a set of seed prompts, our method embeds them in a continuous latent space and systematically explores this space to identify prompts that maximize task-specific performance. In a proof-of-concept study on the Financial PhraseBank sentiment classification benchmark, LatentPrompt increased classification accuracy by approximately 3 percent after a single optimization cycle. The framework is broadly applicable, requiring only black-box access to an LLM and an automatic evaluation metric, making it suitable for diverse domains and tasks.

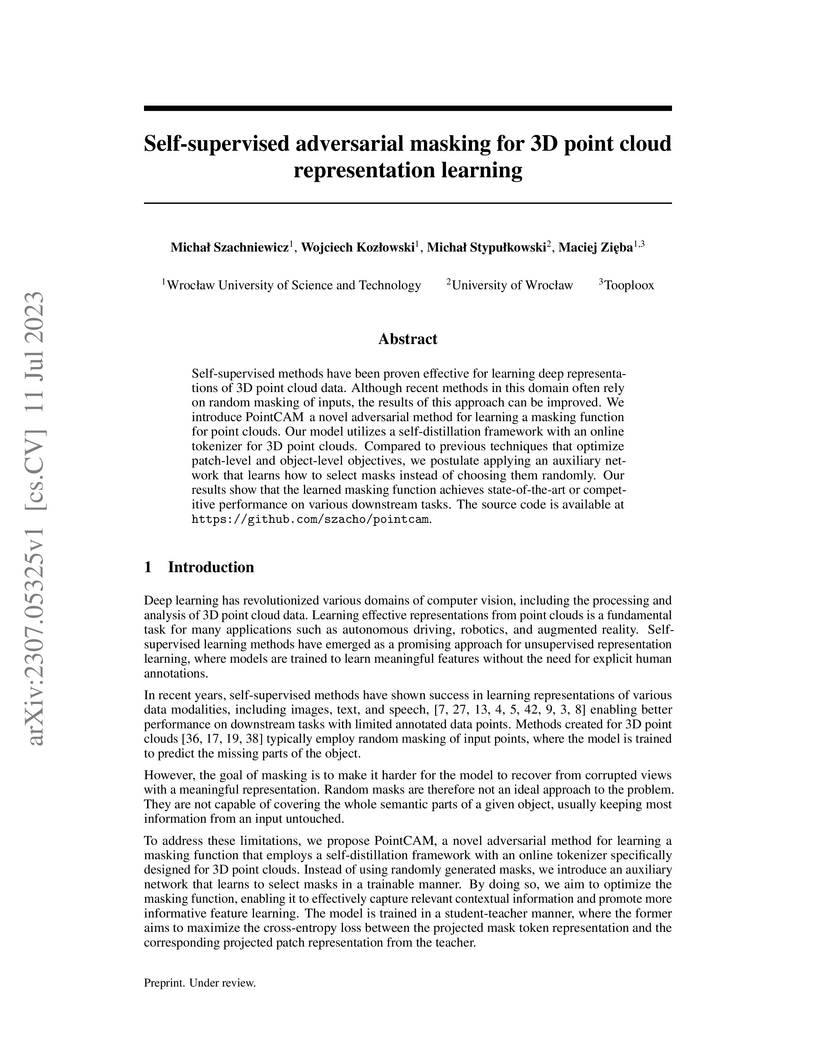

Self-supervised methods have been proven effective for learning deep

representations of 3D point cloud data. Although recent methods in this domain

often rely on random masking of inputs, the results of this approach can be

improved. We introduce PointCAM, a novel adversarial method for learning a

masking function for point clouds. Our model utilizes a self-distillation

framework with an online tokenizer for 3D point clouds. Compared to previous

techniques that optimize patch-level and object-level objectives, we postulate

applying an auxiliary network that learns how to select masks instead of

choosing them randomly. Our results show that the learned masking function

achieves state-of-the-art or competitive performance on various downstream

tasks. The source code is available at https://github.com/szacho/pointcam.

Representation learning of spatial and geographic data is a rapidly

developing field which allows for similarity detection between areas and

high-quality inference using deep neural networks. Past approaches however

concentrated on embedding raster imagery (maps, street or satellite photos),

mobility data or road networks. In this paper we propose the first approach to

learning vector representations of OpenStreetMap regions with respect to urban

functions and land-use in a micro-region grid. We identify a subset of OSM tags

related to major characteristics of land-use, building and urban region

functions, types of water, green or other natural areas. Through manual

verification of tagging quality, we selected 36 cities were for training region

representations. Uber's H3 index was used to divide the cities into hexagons,

and OSM tags were aggregated for each hexagon. We propose the hex2vec method

based on the Skip-gram model with negative sampling. The resulting vector

representations showcase semantic structures of the map characteristics,

similar to ones found in vector-based language models. We also present insights

from region similarity detection in six Polish cities and propose a region

typology obtained through agglomerative clustering.

20 Jun 2024

University of WashingtonUniversity of New South Wales

University of WashingtonUniversity of New South Wales Monash University

Monash University Beihang University

Beihang University Nanyang Technological University

Nanyang Technological University Purdue UniversityIndian Institute of Technology, BombaySingapore University of Technology and DesignS. N. Bose National Centre for Basic SciencesUniversity of WollongongUniversität WürzburgUniversity of Twente

Purdue UniversityIndian Institute of Technology, BombaySingapore University of Technology and DesignS. N. Bose National Centre for Basic SciencesUniversity of WollongongUniversität WürzburgUniversity of Twente University of VirginiaWrocław University of Science and TechnologyMax Planck Institute for Chemical Physics of SolidsJulius-Maximilians-Universität WürzburgAustralian Synchrotron

University of VirginiaWrocław University of Science and TechnologyMax Planck Institute for Chemical Physics of SolidsJulius-Maximilians-Universität WürzburgAustralian Synchrotron2D topological insulators promise novel approaches towards electronic,

spintronic, and quantum device applications. This is owing to unique features

of their electronic band structure, in which bulk-boundary correspondences

enforces the existence of 1D spin-momentum locked metallic edge states - both

helical and chiral - surrounding an electrically insulating bulk. Forty years

since the first discoveries of topological phases in condensed matter, the

abstract concept of band topology has sprung into realization with several

materials now available in which sizable bulk energy gaps - up to a few hundred

meV - promise to enable topology for applications even at room-temperature.

Further, the possibility of combining 2D TIs in heterostructures with

functional materials such as multiferroics, ferromagnets, and superconductors,

vastly extends the range of applicability beyond their intrinsic properties.

While 2D TIs remain a unique testbed for questions of fundamental condensed

matter physics, proposals seek to control the topologically protected bulk or

boundary states electrically, or even induce topological phase transitions to

engender switching functionality. Induction of superconducting pairing in 2D

TIs strives to realize non-Abelian quasiparticles, promising avenues towards

fault-tolerant topological quantum computing. This roadmap aims to present a

status update of the field, reviewing recent advances and remaining challenges

in theoretical understanding, materials synthesis, physical characterization

and, ultimately, device perspectives.

The emergence of Large Language Models (LLMs) has transformed information access, with current LLMs also powering deep research systems that can generate comprehensive report-style answers, through planned iterative search, retrieval, and reasoning. Still, current deep research systems lack the geo-temporal capabilities that are essential for answering context-rich questions involving geographic and/or temporal constraints, frequently occurring in domains like public health, environmental science, or socio-economic analysis. This paper reports our vision towards next generation systems, identifying important technical, infrastructural, and evaluative challenges in integrating geo-temporal reasoning into deep research pipelines. We argue for augmenting retrieval and synthesis processes with the ability to handle geo-temporal constraints, supported by open and reproducible infrastructures and rigorous evaluation protocols. Our vision outlines a path towards more advanced and geo-temporally aware deep research systems, of potential impact to the future of AI-driven information access.

15 Aug 2025

We introduce the concept of temporal hierarchy forecasting (THieF) in predicting day-ahead electricity prices and show that reconciling forecasts for hourly products, 2- to 12-hour blocks, and baseload contracts significantly (up to 13%) improves accuracy at all levels. These results remain consistent throughout a challenging 4-year test period (2021-2024) in the German power market and across model architectures, including linear regression, a shallow neural network, gradient boosting, and a state-of-the-art transformer. Given that (i) trading of block products is becoming more common and (ii) the computational cost of reconciliation is comparable to that of predicting hourly prices alone, we recommend using it in daily forecasting practice.

Implicit neural representations (INR) have gained prominence for efficiently encoding multimedia data, yet their applications in audio signals remain limited. This study introduces the Kolmogorov-Arnold Network (KAN), a novel architecture using learnable activation functions, as an effective INR model for audio representation. KAN demonstrates superior perceptual performance over previous INRs, achieving the lowest Log-SpectralDistance of 1.29 and the highest Perceptual Evaluation of Speech Quality of 3.57 for 1.5 s audio. To extend KAN's utility, we propose FewSound, a hypernetwork-based architecture that enhances INR parameter updates. FewSound outperforms the state-of-the-art HyperSound, with a 33.3% improvement in MSE and 60.87% in SI-SNR. These results show KAN as a robust and adaptable audio representation with the potential for scalability and integration into various hypernetwork frameworks. The source code can be accessed at this https URL.

There are no more papers matching your filters at the moment.