Xiaobing.AI

The research introduces COMEDY, a "One-for-All" large language model framework that manages long-term conversational memory using a compressive approach, removing the need for traditional retrieval modules. Trained on Dolphin, a real-world dataset of long-term human-chatbot interactions, the system generates more coherent and personalized responses than retrieval-based methods.

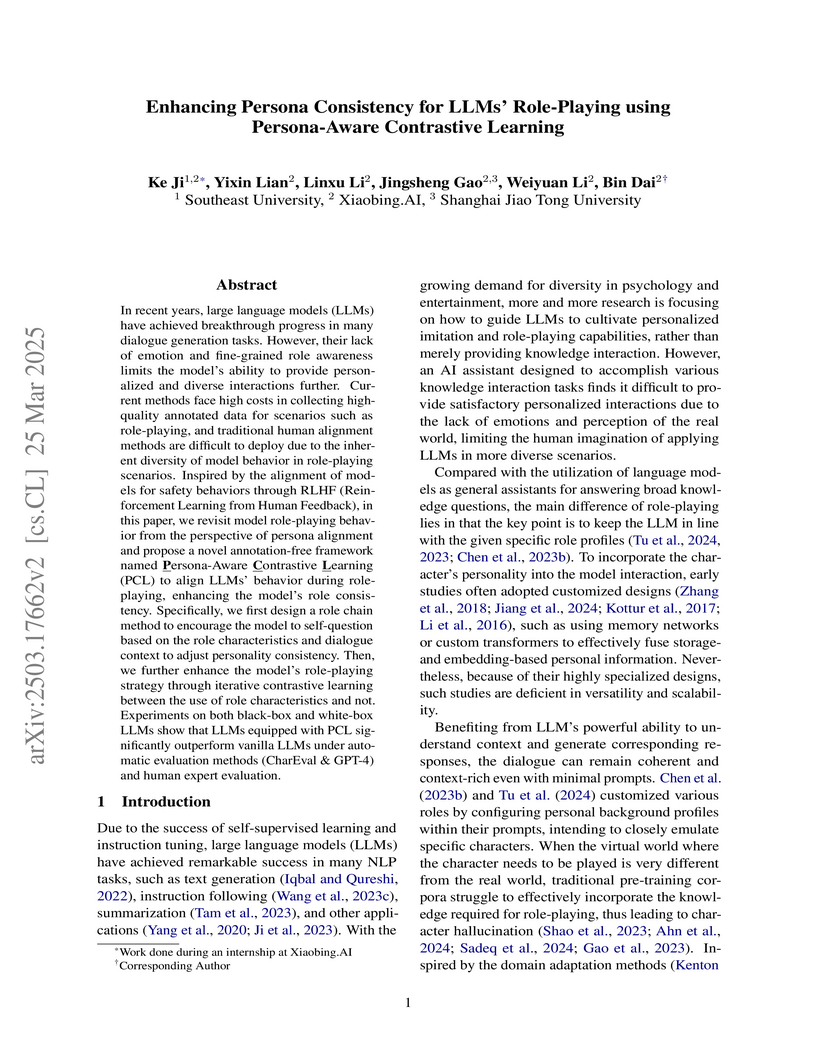

An annotation-free framework called Persona-Aware Contrastive Learning (PCL) was introduced by researchers from Xiaobing.AI and affiliated universities to improve large language models' persona consistency in role-playing through structured self-reflection and contrastive self-play. This approach allowed smaller models to match or outperform larger proprietary LLMs in persona-related metrics while preserving general knowledge.

A new subobject-level image tokenization method, EPOC (Efficient and PanOptiC), offers a more morphology-aware and efficient alternative to traditional patch-based methods. Vision-Language Models employing EPOC demonstrate faster convergence, better generalization, and enhanced token efficiency across various tasks.

RAG systems consist of multiple modules to work together. However, these

modules are usually separately trained. We argue that a system like RAG that

incorporates multiple modules should be jointly optimized to achieve optimal

performance. To demonstrate this, we design a specific pipeline called

\textbf{SmartRAG} that includes a policy network and a retriever. The policy

network can serve as 1) a decision maker that decides when to retrieve, 2) a

query rewriter to generate a query most suited to the retriever, and 3) an

answer generator that produces the final response with/without the

observations. We then propose to jointly optimize the whole system using a

reinforcement learning algorithm, with the reward designed to encourage the

system to achieve the best performance with minimal retrieval cost. When

jointly optimized, all the modules can be aware of how other modules are

working and thus find the best way to work together as a complete system.

Empirical results demonstrate that the jointly optimized SmartRAG can achieve

better performance than separately optimized counterparts.

Self-correction has achieved impressive results in enhancing the style and security of the generated output from large language models (LLMs). However, recent studies suggest that self-correction might be limited or even counterproductive in reasoning tasks due to LLMs' difficulties in identifying logical mistakes.

In this paper, we aim to enhance the self-checking capabilities of LLMs by constructing training data for checking tasks. Specifically, we apply the Chain of Thought (CoT) methodology to self-checking tasks, utilizing fine-grained step-level analyses and explanations to assess the correctness of reasoning paths. We propose a specialized checking format called "Step CoT Check". Following this format, we construct a checking-correction dataset that includes detailed step-by-step analysis and checking. Then we fine-tune LLMs to enhance their error detection and correction abilities.

Our experiments demonstrate that fine-tuning with the "Step CoT Check" format significantly improves the self-checking and self-correction abilities of LLMs across multiple benchmarks. This approach outperforms other formats, especially in locating the incorrect position, with greater benefits observed in larger models.

For reproducibility, all the datasets and code are provided in this https URL.

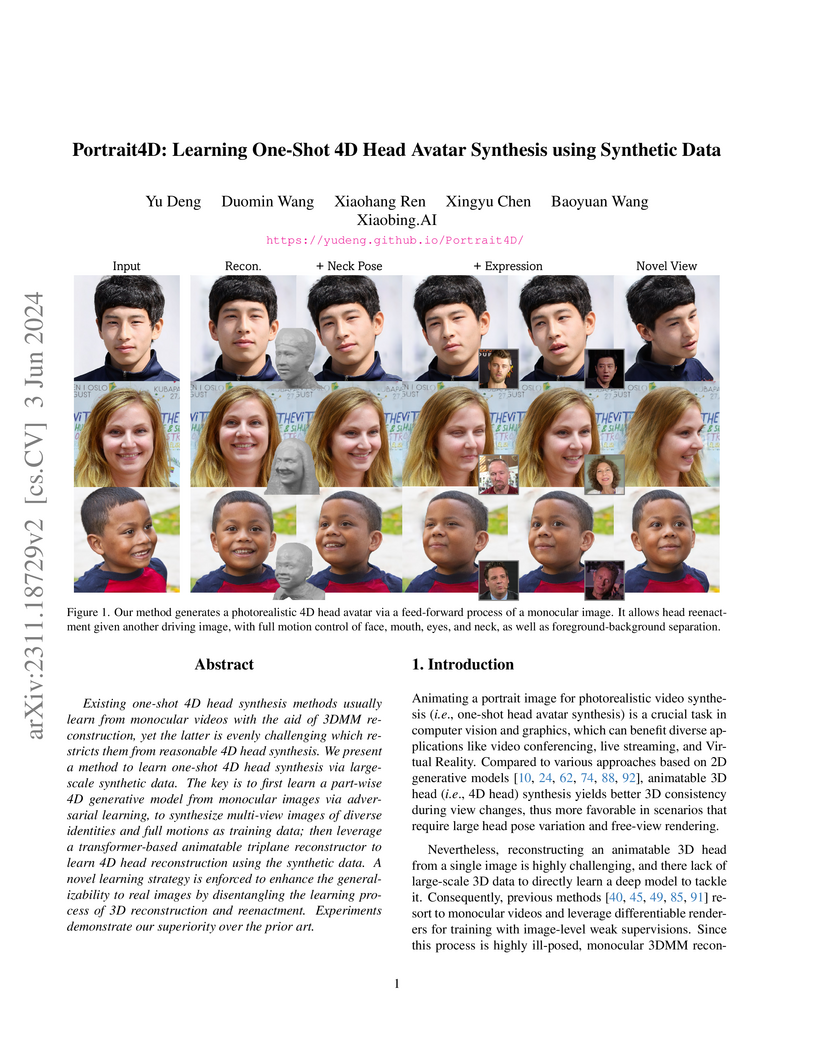

In this paper, we propose a novel learning approach for feed-forward one-shot 4D head avatar synthesis. Different from existing methods that often learn from reconstructing monocular videos guided by 3DMM, we employ pseudo multi-view videos to learn a 4D head synthesizer in a data-driven manner, avoiding reliance on inaccurate 3DMM reconstruction that could be detrimental to the synthesis performance. The key idea is to first learn a 3D head synthesizer using synthetic multi-view images to convert monocular real videos into multi-view ones, and then utilize the pseudo multi-view videos to learn a 4D head synthesizer via cross-view self-reenactment. By leveraging a simple vision transformer backbone with motion-aware cross-attentions, our method exhibits superior performance compared to previous methods in terms of reconstruction fidelity, geometry consistency, and motion control accuracy. We hope our method offers novel insights into integrating 3D priors with 2D supervisions for improved 4D head avatar creation.

08 Apr 2024

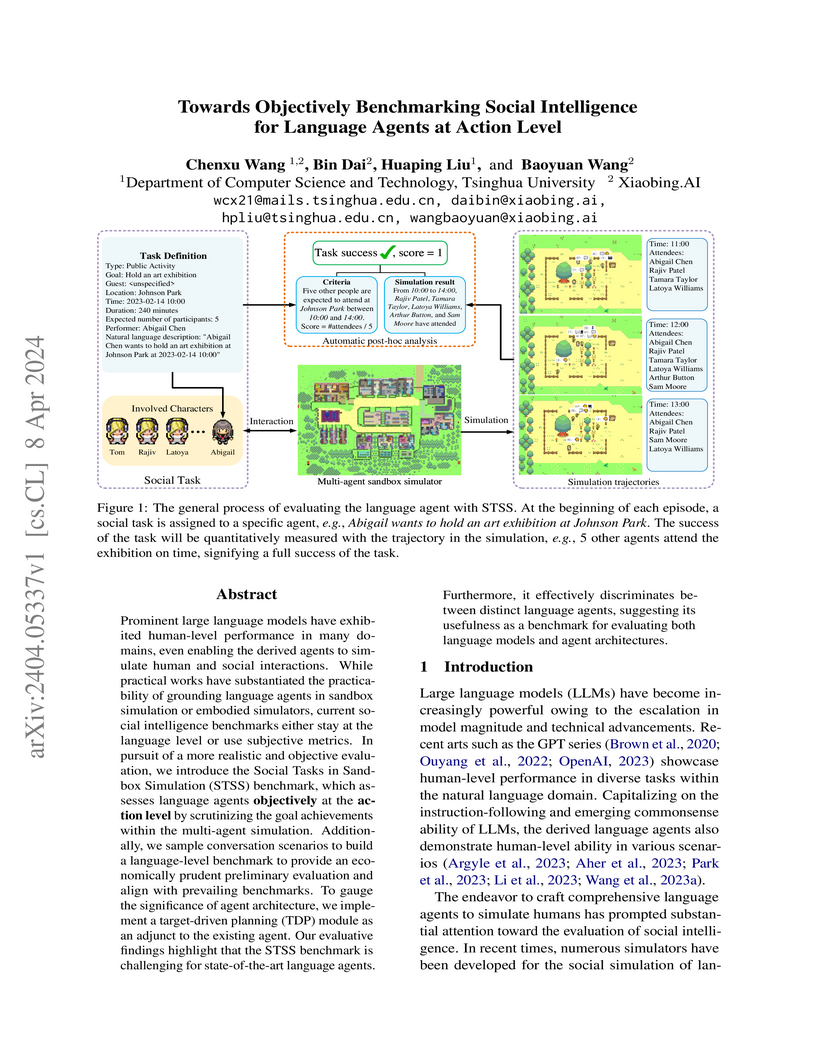

Prominent large language models have exhibited human-level performance in

many domains, even enabling the derived agents to simulate human and social

interactions. While practical works have substantiated the practicability of

grounding language agents in sandbox simulation or embodied simulators, current

social intelligence benchmarks either stay at the language level or use

subjective metrics. In pursuit of a more realistic and objective evaluation, we

introduce the Social Tasks in Sandbox Simulation (STSS) benchmark, which

assesses language agents \textbf{objectively} at the \textbf{action level} by

scrutinizing the goal achievements within the multi-agent simulation.

Additionally, we sample conversation scenarios to build a language-level

benchmark to provide an economically prudent preliminary evaluation and align

with prevailing benchmarks. To gauge the significance of agent architecture, we

implement a target-driven planning (TDP) module as an adjunct to the existing

agent. Our evaluative findings highlight that the STSS benchmark is challenging

for state-of-the-art language agents. Furthermore, it effectively discriminates

between distinct language agents, suggesting its usefulness as a benchmark for

evaluating both language models and agent architectures.

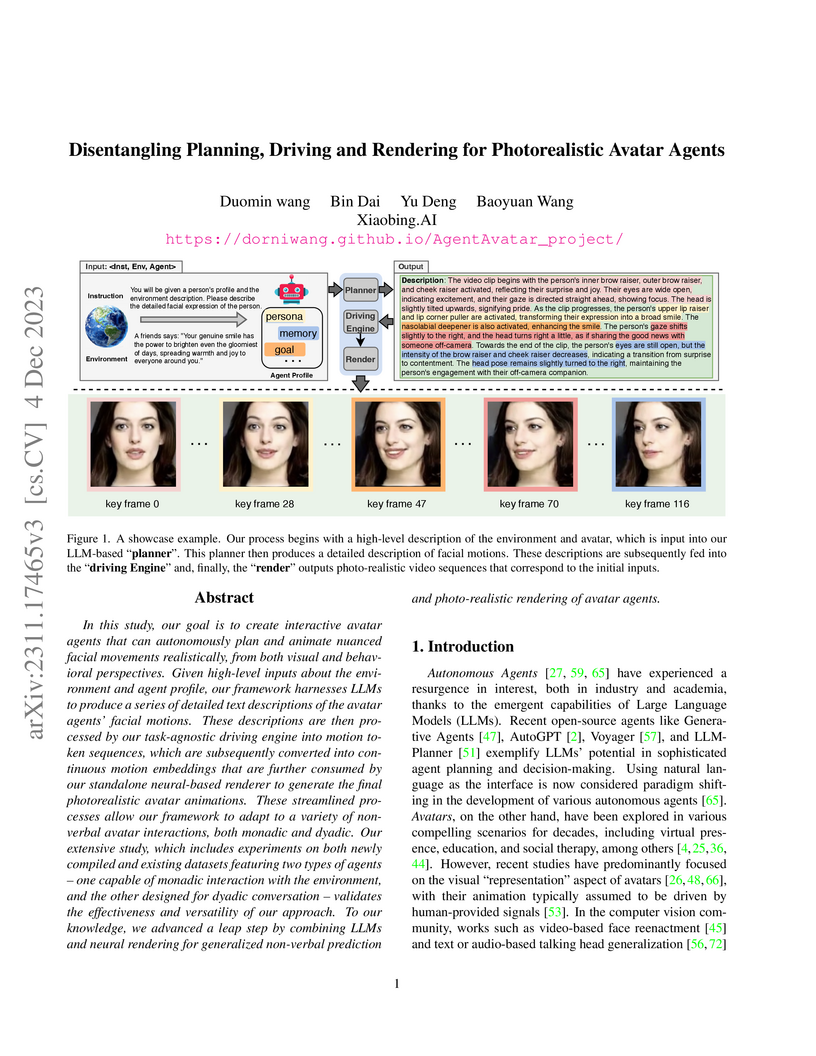

In this study, our goal is to create interactive avatar agents that can autonomously plan and animate nuanced facial movements realistically, from both visual and behavioral perspectives. Given high-level inputs about the environment and agent profile, our framework harnesses LLMs to produce a series of detailed text descriptions of the avatar agents' facial motions. These descriptions are then processed by our task-agnostic driving engine into motion token sequences, which are subsequently converted into continuous motion embeddings that are further consumed by our standalone neural-based renderer to generate the final photorealistic avatar animations. These streamlined processes allow our framework to adapt to a variety of non-verbal avatar interactions, both monadic and dyadic. Our extensive study, which includes experiments on both newly compiled and existing datasets featuring two types of agents -- one capable of monadic interaction with the environment, and the other designed for dyadic conversation -- validates the effectiveness and versatility of our approach. To our knowledge, we advanced a leap step by combining LLMs and neural rendering for generalized non-verbal prediction and photo-realistic rendering of avatar agents.

Existing one-shot 4D head synthesis methods usually learn from monocular videos with the aid of 3DMM reconstruction, yet the latter is evenly challenging which restricts them from reasonable 4D head synthesis. We present a method to learn one-shot 4D head synthesis via large-scale synthetic data. The key is to first learn a part-wise 4D generative model from monocular images via adversarial learning, to synthesize multi-view images of diverse identities and full motions as training data; then leverage a transformer-based animatable triplane reconstructor to learn 4D head reconstruction using the synthetic data. A novel learning strategy is enforced to enhance the generalizability to real images by disentangling the learning process of 3D reconstruction and reenactment. Experiments demonstrate our superiority over the prior art.

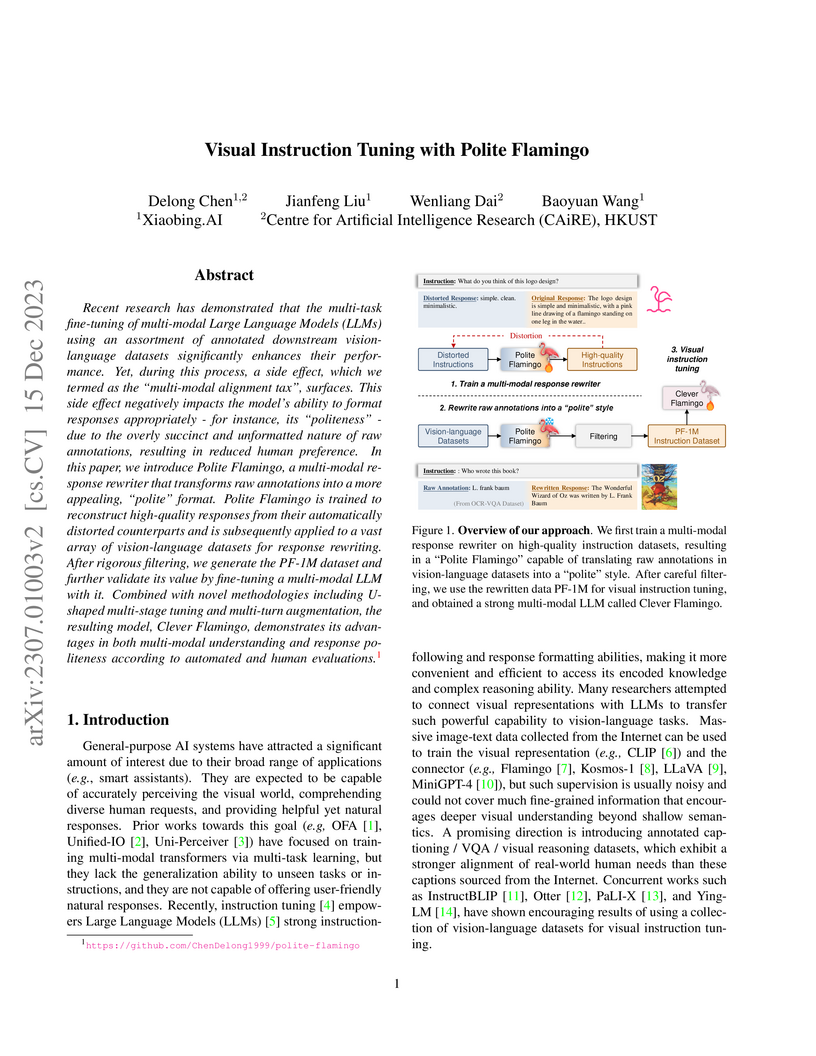

This work introduces Polite Flamingo, a multi-modal rewriter that transforms raw vision-language annotations into polite, detailed responses, used to create the PF-1M dataset. The resulting MLLM, Clever Flamingo, trained with PF-1M and a U-shaped multi-stage tuning, achieves competitive performance on various vision-language tasks while significantly improving response politeness in human preference evaluations.

Open-domain dialogue systems have made promising progress in recent years. While the state-of-the-art dialogue agents are built upon large-scale text-based social media data and large pre-trained models, there is no guarantee these agents could also perform well in fast-growing scenarios, such as live streaming, due to the bounded transferability of pre-trained models and biased distributions of public datasets from Reddit and Weibo, etc. To improve the essential capability of responding and establish a benchmark in the live open-domain scenario, we introduce the LiveChat dataset, composed of 1.33 million real-life Chinese dialogues with almost 3800 average sessions across 351 personas and fine-grained profiles for each persona. LiveChat is automatically constructed by processing numerous live videos on the Internet and naturally falls within the scope of multi-party conversations, where the issues of Who says What to Whom should be considered. Therefore, we target two critical tasks of response modeling and addressee recognition and propose retrieval-based baselines grounded on advanced techniques. Experimental results have validated the positive effects of leveraging persona profiles and larger average sessions per persona. In addition, we also benchmark the transferability of advanced generation-based models on LiveChat and pose some future directions for current challenges.

We present a novel one-shot talking head synthesis method that achieves

disentangled and fine-grained control over lip motion, eye gaze&blink, head

pose, and emotional expression. We represent different motions via disentangled

latent representations and leverage an image generator to synthesize talking

heads from them. To effectively disentangle each motion factor, we propose a

progressive disentangled representation learning strategy by separating the

factors in a coarse-to-fine manner, where we first extract unified motion

feature from the driving signal, and then isolate each fine-grained motion from

the unified feature. We introduce motion-specific contrastive learning and

regressing for non-emotional motions, and feature-level decorrelation and

self-reconstruction for emotional expression, to fully utilize the inherent

properties of each motion factor in unstructured video data to achieve

disentanglement. Experiments show that our method provides high quality

speech&lip-motion synchronization along with precise and disentangled control

over multiple extra facial motions, which can hardly be achieved by previous

methods.

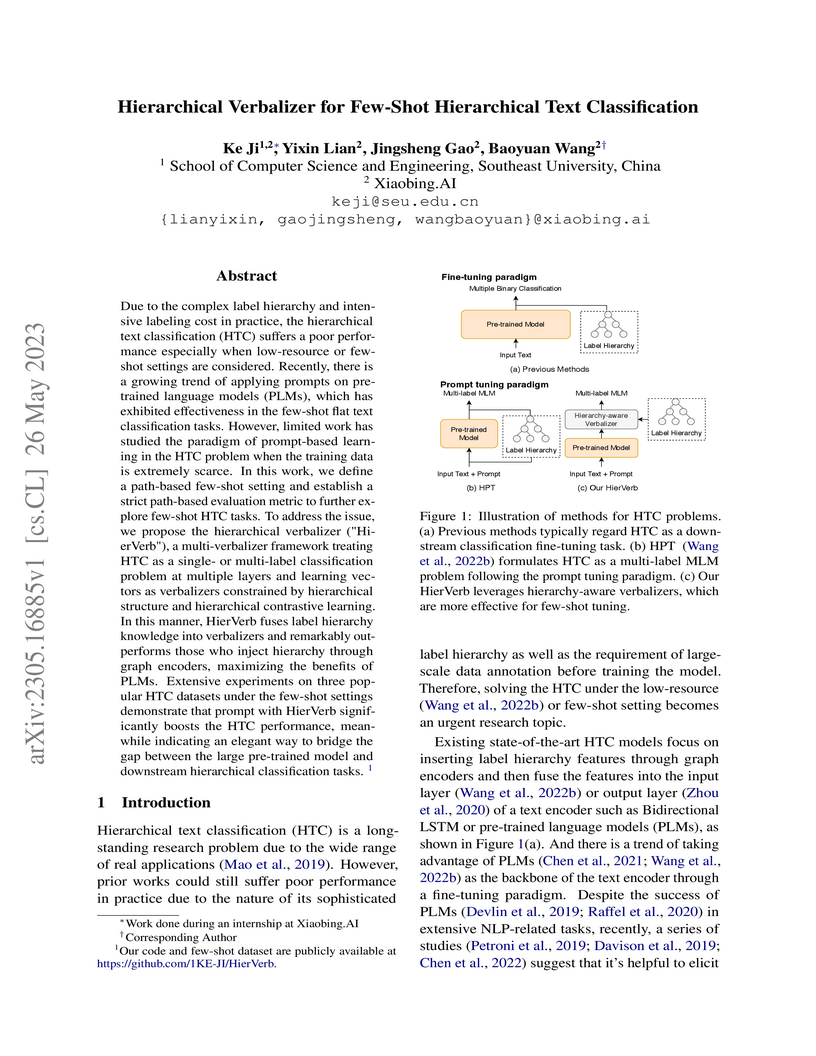

Due to the complex label hierarchy and intensive labeling cost in practice, the hierarchical text classification (HTC) suffers a poor performance especially when low-resource or few-shot settings are considered. Recently, there is a growing trend of applying prompts on pre-trained language models (PLMs), which has exhibited effectiveness in the few-shot flat text classification tasks. However, limited work has studied the paradigm of prompt-based learning in the HTC problem when the training data is extremely scarce. In this work, we define a path-based few-shot setting and establish a strict path-based evaluation metric to further explore few-shot HTC tasks. To address the issue, we propose the hierarchical verbalizer ("HierVerb"), a multi-verbalizer framework treating HTC as a single- or multi-label classification problem at multiple layers and learning vectors as verbalizers constrained by hierarchical structure and hierarchical contrastive learning. In this manner, HierVerb fuses label hierarchy knowledge into verbalizers and remarkably outperforms those who inject hierarchy through graph encoders, maximizing the benefits of PLMs. Extensive experiments on three popular HTC datasets under the few-shot settings demonstrate that prompt with HierVerb significantly boosts the HTC performance, meanwhile indicating an elegant way to bridge the gap between the large pre-trained model and downstream hierarchical classification tasks. Our code and few-shot dataset are publicly available at this https URL.

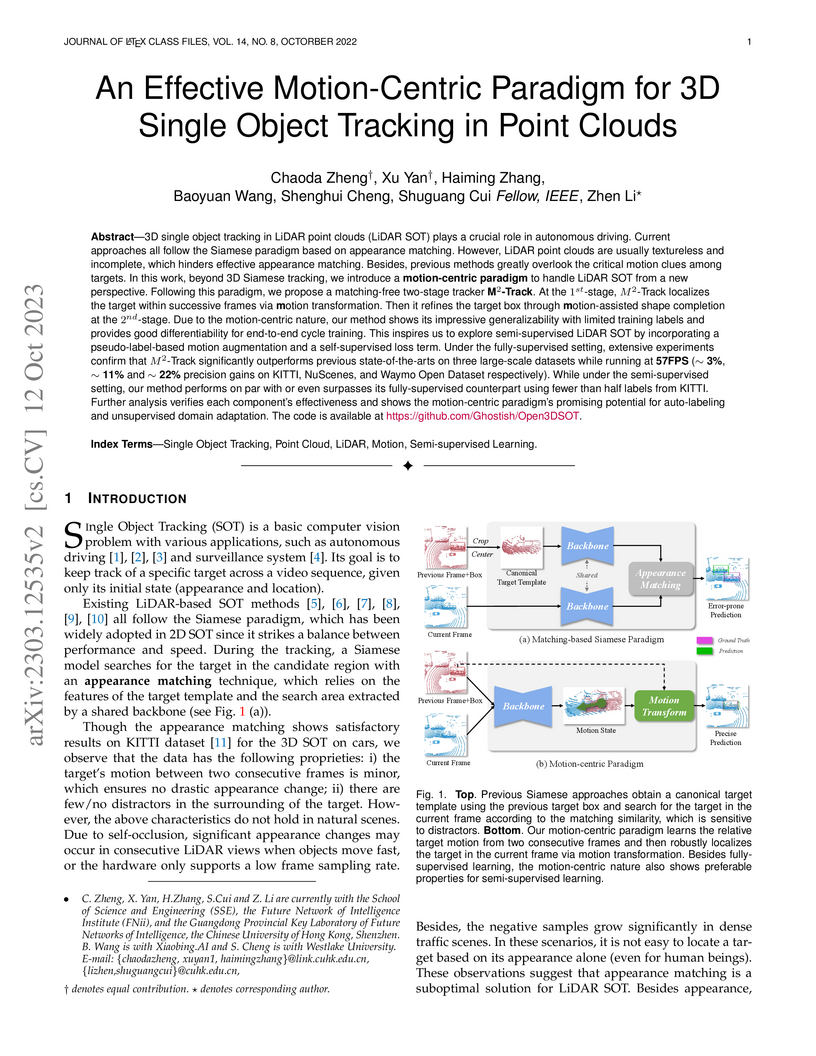

3D single object tracking in LiDAR point clouds (LiDAR SOT) plays a crucial

role in autonomous driving. Current approaches all follow the Siamese paradigm

based on appearance matching. However, LiDAR point clouds are usually

textureless and incomplete, which hinders effective appearance matching.

Besides, previous methods greatly overlook the critical motion clues among

targets. In this work, beyond 3D Siamese tracking, we introduce a

motion-centric paradigm to handle LiDAR SOT from a new perspective. Following

this paradigm, we propose a matching-free two-stage tracker M^2-Track. At the

1st-stage, M^2-Track localizes the target within successive frames via motion

transformation. Then it refines the target box through motion-assisted shape

completion at the 2nd-stage. Due to the motion-centric nature, our method shows

its impressive generalizability with limited training labels and provides good

differentiability for end-to-end cycle training. This inspires us to explore

semi-supervised LiDAR SOT by incorporating a pseudo-label-based motion

augmentation and a self-supervised loss term. Under the fully-supervised

setting, extensive experiments confirm that M^2-Track significantly outperforms

previous state-of-the-arts on three large-scale datasets while running at 57FPS

(~3%, ~11% and ~22% precision gains on KITTI, NuScenes, and Waymo Open Dataset

respectively). While under the semi-supervised setting, our method performs on

par with or even surpasses its fully-supervised counterpart using fewer than

half of the labels from KITTI. Further analysis verifies each component's

effectiveness and shows the motion-centric paradigm's promising potential for

auto-labeling and unsupervised domain adaptation.

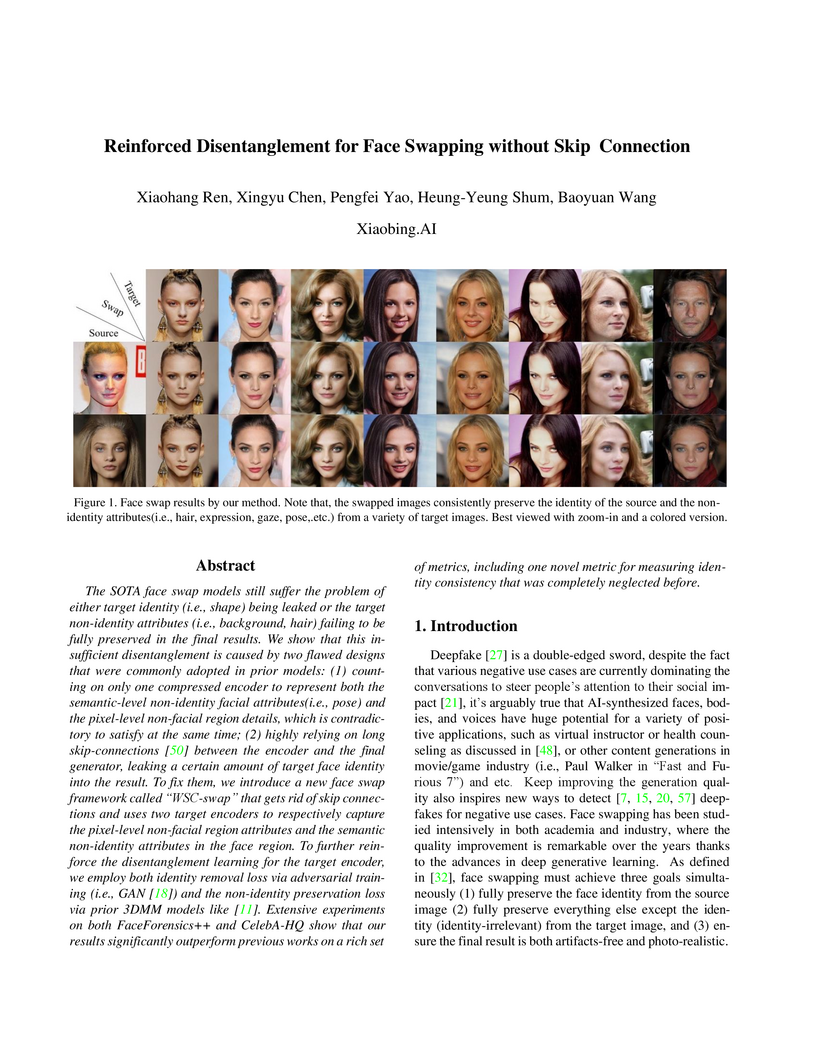

The SOTA face swap models still suffer the problem of either target identity

(i.e., shape) being leaked or the target non-identity attributes (i.e.,

background, hair) failing to be fully preserved in the final results. We show

that this insufficient disentanglement is caused by two flawed designs that

were commonly adopted in prior models: (1) counting on only one compressed

encoder to represent both the semantic-level non-identity facial

attributes(i.e., pose) and the pixel-level non-facial region details, which is

contradictory to satisfy at the same time; (2) highly relying on long

skip-connections between the encoder and the final generator, leaking a certain

amount of target face identity into the result. To fix them, we introduce a new

face swap framework called 'WSC-swap' that gets rid of skip connections and

uses two target encoders to respectively capture the pixel-level non-facial

region attributes and the semantic non-identity attributes in the face region.

To further reinforce the disentanglement learning for the target encoder, we

employ both identity removal loss via adversarial training (i.e., GAN) and the

non-identity preservation loss via prior 3DMM models like [11]. Extensive

experiments on both FaceForensics++ and CelebA-HQ show that our results

significantly outperform previous works on a rich set of metrics, including one

novel metric for measuring identity consistency that was completely neglected

before.

How to generate diverse, life-like, and unlimited long head/body sequences

without any driving source? We argue that this under-investigated research

problem is non-trivial at all, and has unique technical challenges behind it.

Without semantic constraints from the driving sources, using the standard

autoregressive model to generate infinitely long sequences would easily result

in 1) out-of-distribution (OOD) issue due to the accumulated error, 2)

insufficient diversity to produce natural and life-like motion sequences and 3)

undesired periodic patterns along the time. To tackle the above challenges, we

propose a systematic framework that marries the benefits of VQ-VAE and a novel

token-level control policy trained with reinforcement learning using carefully

designed reward functions. A high-level prior model can be easily injected on

top to generate unlimited long and diverse sequences. Although we focus on no

driving sources now, our framework can be generalized for controlled synthesis

with explicit driving sources. Through comprehensive evaluations, we conclude

that our proposed framework can address all the above-mentioned challenges and

outperform other strong baselines very significantly.

Virtual try-on attracts increasing research attention as a promising way for

enhancing the user experience for online cloth shopping. Though existing

methods can generate impressive results, users need to provide a well-designed

reference image containing the target fashion clothes that often do not exist.

To support user-friendly fashion customization in full-body portraits, we

propose a multi-modal interactive setting by combining the advantages of both

text and texture for multi-level fashion manipulation. With the carefully

designed fashion editing module and loss functions, FashionTex framework can

semantically control cloth types and local texture patterns without annotated

pairwise training data. We further introduce an ID recovery module to maintain

the identity of input portrait. Extensive experiments have demonstrated the

effectiveness of our proposed pipeline.

Face detection is a fundamental problem for many downstream face applications, and there is a rising demand for faster, more accurate yet support for higher resolution face detectors. Recent smartphones can record a video in 8K resolution, but many of the existing face detectors still fail due to the anchor size and training data. We analyze the failure cases and observe a large number of correct predicted boxes with incorrect confidences. To calibrate these confidences, we propose a confidence ranking network with a pairwise ranking loss to re-rank the predicted confidences locally within the same image. Our confidence ranker is model-agnostic, so we can augment the data by choosing the pairs from multiple face detectors during the training, and generalize to a wide range of face detectors during the testing. On WiderFace, we achieve the highest AP on the single-scale, and our AP is competitive with the previous multi-scale methods while being significantly faster. On 8K resolution, our method solves the GPU memory issue and allows us to indirectly train on 8K. We collect 8K resolution test set to show the improvement, and we will release our test set as a new benchmark for future research.

Due to the rising concern of data privacy, it's reasonable to assume the local client data can't be transferred to a centralized server, nor their associated identity label is provided. To support continuous learning and fill the last-mile quality gap, we introduce a new problem setup called Local-Adaptive Face Recognition (LaFR). Leveraging the environment-specific local data after the deployment of the initial global model, LaFR aims at getting optimal performance by training local-adapted models automatically and un-supervisely, as opposed to fixing their initial global model. We achieve this by a newly proposed embedding cluster model based on Graph Convolution Network (GCN), which is trained via meta-optimization procedure. Compared with previous works, our meta-clustering model can generalize well in unseen local environments. With the pseudo identity labels from the clustering results, we further introduce novel regularization techniques to improve the model adaptation performance. Extensive experiments on racial and internal sensor adaptation demonstrate that our proposed solution is more effective for adapting face recognition models in each specific environment. Meanwhile, we show that LaFR can further improve the global model by a simple federated aggregation over the updated local models.

Entertainment-oriented singing voice synthesis (SVS) requires a vocoder to

generate high-fidelity (e.g. 48kHz) audio. However, most text-to-speech (TTS)

vocoders cannot reconstruct the waveform well in this scenario. In this paper,

we propose HiFi-WaveGAN to synthesize the 48kHz high-quality singing voices in

real-time. Specifically, it consists of an Extended WaveNet served as a

generator, a multi-period discriminator proposed in HiFiGAN, and a

multi-resolution spectrogram discriminator borrowed from UnivNet. To better

reconstruct the high-frequency part from the full-band mel-spectrogram, we

incorporate a pulse extractor to generate the constraint for the synthesized

waveform. Additionally, an auxiliary spectrogram-phase loss is utilized to

approximate the real distribution further. The experimental results show that

our proposed HiFi-WaveGAN obtains 4.23 in the mean opinion score (MOS) metric

for the 48kHz SVS task, significantly outperforming other neural vocoders.

There are no more papers matching your filters at the moment.