Yahoo Japan Corporation

We tackle the problem of machine translation of manga, Japanese comics. Manga translation involves two important problems in machine translation: context-aware and multimodal translation. Since text and images are mixed up in an unstructured fashion in Manga, obtaining context from the image is essential for manga translation. However, it is still an open problem how to extract context from image and integrate into MT models. In addition, corpus and benchmarks to train and evaluate such model is currently unavailable. In this paper, we make the following four contributions that establishes the foundation of manga translation research. First, we propose multimodal context-aware translation framework. We are the first to incorporate context information obtained from manga image. It enables us to translate texts in speech bubbles that cannot be translated without using context information (e.g., texts in other speech bubbles, gender of speakers, etc.). Second, for training the model, we propose the approach to automatic corpus construction from pairs of original manga and their translations, by which large parallel corpus can be constructed without any manual labeling. Third, we created a new benchmark to evaluate manga translation. Finally, on top of our proposed methods, we devised a first comprehensive system for fully automated manga translation.

Modeling and predicting human mobility trajectories in urban areas is an essential task for various applications. The recent availability of large-scale human movement data collected from mobile devices have enabled the development of complex human mobility prediction models. However, human mobility prediction methods are often trained and tested on different datasets, due to the lack of open-source large-scale human mobility datasets amid privacy concerns, posing a challenge towards conducting fair performance comparisons between methods. To this end, we created an open-source, anonymized, metropolitan scale, and longitudinal (90 days) dataset of 100,000 individuals' human mobility trajectories, using mobile phone location data. The location pings are spatially and temporally discretized, and the metropolitan area is undisclosed to protect users' privacy. The 90-day period is composed of 75 days of business-as-usual and 15 days during an emergency. To promote the use of the dataset, we will host a human mobility prediction data challenge (`HuMob Challenge 2023') using the human mobility dataset, which will be held in conjunction with ACM SIGSPATIAL 2023.

Ranking interfaces are everywhere in online platforms. There is thus an ever growing interest in their Off-Policy Evaluation (OPE), aiming towards an accurate performance evaluation of ranking policies using logged data. A de-facto approach for OPE is Inverse Propensity Scoring (IPS), which provides an unbiased and consistent value estimate. However, it becomes extremely inaccurate in the ranking setup due to its high variance under large action spaces. To deal with this problem, previous studies assume either independent or cascade user behavior, resulting in some ranking versions of IPS. While these estimators are somewhat effective in reducing the variance, all existing estimators apply a single universal assumption to every user, causing excessive bias and variance. Therefore, this work explores a far more general formulation where user behavior is diverse and can vary depending on the user context. We show that the resulting estimator, which we call Adaptive IPS (AIPS), can be unbiased under any complex user behavior. Moreover, AIPS achieves the minimum variance among all unbiased estimators based on IPS. We further develop a procedure to identify the appropriate user behavior model to minimize the mean squared error (MSE) of AIPS in a data-driven fashion. Extensive experiments demonstrate that the empirical accuracy improvement can be significant, enabling effective OPE of ranking systems even under diverse user behavior.

Sequence-to-sequence (S2S) modeling is becoming a popular paradigm for automatic speech recognition (ASR) because of its ability to jointly optimize all the conventional ASR components in an end-to-end (E2E) fashion. This report investigates the ability of E2E ASR from standard close-talk to far-field applications by encompassing entire multichannel speech enhancement and ASR components within the S2S model. There have been previous studies on jointly optimizing neural beamforming alongside E2E ASR for denoising. It is clear from both recent challenge outcomes and successful products that far-field systems would be incomplete without solving both denoising and dereverberation simultaneously. This report uses a recently developed architecture for far-field ASR by composing neural extensions of dereverberation and beamforming modules with the S2S ASR module as a single differentiable neural network and also clearly defining the role of each subnetwork. The original implementation of this architecture was successfully applied to the noisy speech recognition task (CHiME-4), while we applied this implementation to noisy reverberant tasks (DIRHA and REVERB). Our investigation shows that the method achieves better performance than conventional pipeline methods on the DIRHA English dataset and comparable performance on the REVERB dataset. It also has additional advantages of being neither iterative nor requiring parallel noisy and clean speech data.

Researchers from Tokyo Institute of Technology, Cornell University, Yale University, and Yahoo Japan Corporation developed Cascade Doubly Robust (Cascade-DR), an off-policy evaluation method for ranking policies that interprets user interactions under the cascade assumption as a Markov Decision Process. This approach reduces variance and improves the worst-case performance of offline policy estimates by over 50% on real-world e-commerce data compared to existing methods.

Neural end-to-end (E2E) models have become a promising technique to realize

practical automatic speech recognition (ASR) systems. When realizing such a

system, one important issue is the segmentation of audio to deal with streaming

input or long recording. After audio segmentation, the ASR model with a small

real-time factor (RTF) is preferable because the latency of the system can be

faster. Recently, E2E ASR based on non-autoregressive models becomes a

promising approach since it can decode an N-length token sequence with less

than N iterations. We propose a system to concatenate audio segmentation and

non-autoregressive ASR to realize high accuracy and low RTF ASR. As a

non-autoregressive ASR, the insertion-based model is used. In addition, instead

of concatenating separated models for segmentation and ASR, we introduce a new

architecture that realizes audio segmentation and non-autoregressive ASR by a

single neural network. Experimental results on Japanese and English dataset

show that the method achieved a reasonable trade-off between accuracy and RTF

compared with baseline autoregressive Transformer and connectionist temporal

classification.

Self-supervised learning (SSL) of speech has shown impressive results in speech-related tasks, particularly in automatic speech recognition (ASR). While most methods employ the output of intermediate layers of the SSL model as real-valued features for downstream tasks, there is potential in exploring alternative approaches that use discretized token sequences. This approach offers benefits such as lower storage requirements and the ability to apply techniques from natural language processing. In this paper, we propose a new protocol that utilizes discretized token sequences in ASR tasks, which includes de-duplication and sub-word modeling to enhance the input sequence. It reduces computational cost by decreasing the length of the sequence. Our experiments on the LibriSpeech dataset demonstrate that our proposed protocol performs competitively with conventional ASR systems using continuous input features, while reducing computational and storage costs.

This work presents our end-to-end (E2E) automatic speech recognition (ASR)

model targetting at robust speech recognition, called Integraded speech

Recognition with enhanced speech Input for Self-supervised learning

representation (IRIS). Compared with conventional E2E ASR models, the proposed

E2E model integrates two important modules including a speech enhancement (SE)

module and a self-supervised learning representation (SSLR) module. The SE

module enhances the noisy speech. Then the SSLR module extracts features from

enhanced speech to be used for speech recognition (ASR). To train the proposed

model, we establish an efficient learning scheme. Evaluation results on the

monaural CHiME-4 task show that the IRIS model achieves the best performance

reported in the literature for the single-channel CHiME-4 benchmark (2.0% for

the real development and 3.9% for the real test) thanks to the powerful

pre-trained SSLR module and the fine-tuned SE module.

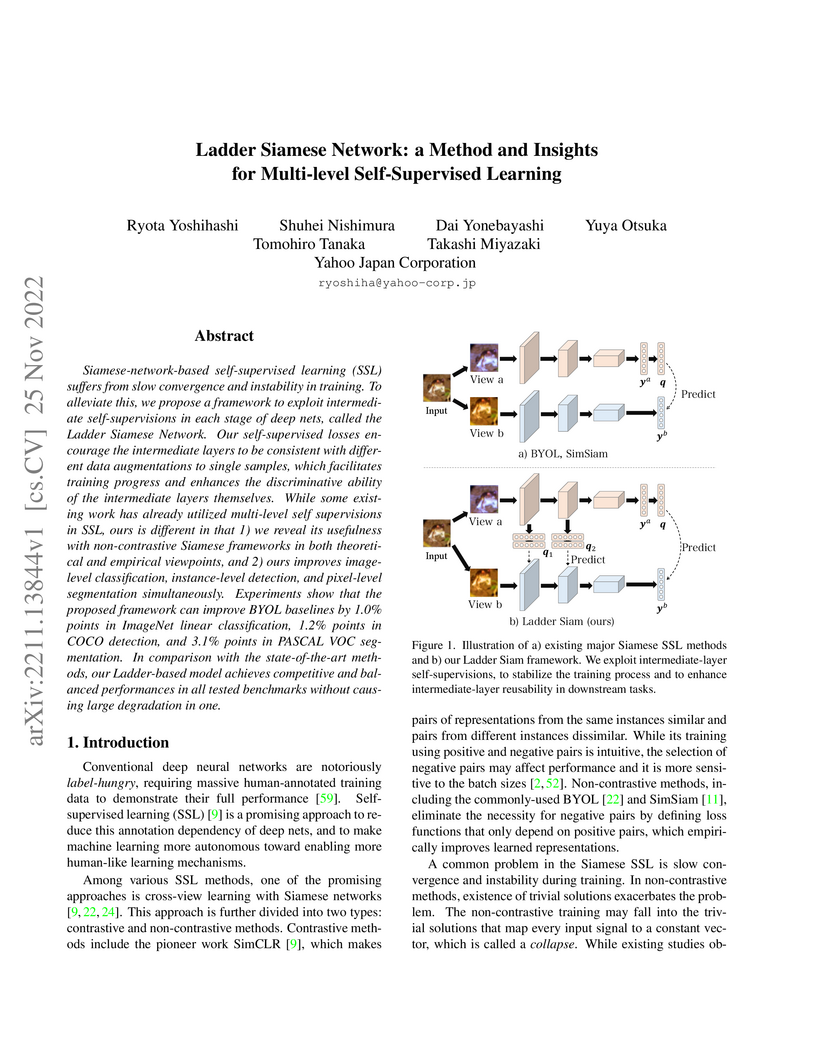

Siamese-network-based self-supervised learning (SSL) suffers from slow convergence and instability in training. To alleviate this, we propose a framework to exploit intermediate self-supervisions in each stage of deep nets, called the Ladder Siamese Network. Our self-supervised losses encourage the intermediate layers to be consistent with different data augmentations to single samples, which facilitates training progress and enhances the discriminative ability of the intermediate layers themselves. While some existing work has already utilized multi-level self supervisions in SSL, ours is different in that 1) we reveal its usefulness with non-contrastive Siamese frameworks in both theoretical and empirical viewpoints, and 2) ours improves image-level classification, instance-level detection, and pixel-level segmentation simultaneously. Experiments show that the proposed framework can improve BYOL baselines by 1.0% points in ImageNet linear classification, 1.2% points in COCO detection, and 3.1% points in PASCAL VOC segmentation. In comparison with the state-of-the-art methods, our Ladder-based model achieves competitive and balanced performances in all tested benchmarks without causing large degradation in one.

Non-autoregressive (NAR) models simultaneously generate multiple outputs in a

sequence, which significantly reduces the inference speed at the cost of

accuracy drop compared to autoregressive baselines. Showing great potential for

real-time applications, an increasing number of NAR models have been explored

in different fields to mitigate the performance gap against AR models. In this

work, we conduct a comparative study of various NAR modeling methods for

end-to-end automatic speech recognition (ASR). Experiments are performed in the

state-of-the-art setting using ESPnet. The results on various tasks provide

interesting findings for developing an understanding of NAR ASR, such as the

accuracy-speed trade-off and robustness against long-form utterances. We also

show that the techniques can be combined for further improvement and applied to

NAR end-to-end speech translation. All the implementations are publicly

available to encourage further research in NAR speech processing.

The advance of generative models for images has inspired various training

techniques for image recognition utilizing synthetic images. In semantic

segmentation, one promising approach is extracting pseudo-masks from attention

maps in text-to-image diffusion models, which enables

real-image-and-annotation-free training. However, the pioneering training

method using the diffusion-synthetic images and pseudo-masks, i.e., DiffuMask

has limitations in terms of mask quality, scalability, and ranges of applicable

domains. To overcome these limitations, this work introduces three techniques

for diffusion-synthetic semantic segmentation training. First,

reliability-aware robust training, originally used in weakly supervised

learning, helps segmentation with insufficient synthetic mask quality. %Second,

large-scale pretraining of whole segmentation models, not only backbones, on

synthetic ImageNet-1k-class images with pixel-labels benefits downstream

segmentation tasks. Second, we introduce prompt augmentation, data augmentation

to the prompt text set to scale up and diversify training images with a limited

text resources. Finally, LoRA-based adaptation of Stable Diffusion enables the

transfer to a distant domain, e.g., auto-driving images. Experiments in PASCAL

VOC, ImageNet-S, and Cityscapes show that our method effectively closes gap

between real and synthetic training in semantic segmentation.

11 Sep 2017

The BM25 ranking function is one of the most well known query relevance

document scoring functions and many variations of it are proposed. The BM25F

function is one of its adaptations designed for modeling documents with

multiple fields. The Expanded Span method extends a BM25-like function by

taking into considerations of the proximity between term occurrences. In this

note, we combine these two variations into one scoring method in view of

proximity-based scoring of documents with multiple fields.

Despite the importance of predicting evacuation mobility dynamics after large

scale disasters for effective first response and disaster relief, our general

understanding of evacuation behavior remains limited because of the lack of

empirical evidence on the evacuation movement of individuals across multiple

disaster instances. Here we investigate the GPS trajectories of a total of more

than 1 million anonymized mobile phone users whose positions are tracked for a

period of 2 months before and after four of the major earthquakes that occurred

in Japan. Through a cross comparative analysis between the four disaster

instances, we find that in contrast with the assumed complexity of evacuation

decision making mechanisms in crisis situations, the individuals' evacuation

probability is strongly dependent on the seismic intensity that they

experience. In fact, we show that the evacuation probabilities in all

earthquakes collapse into a similar pattern, with a critical threshold at

around seismic intensity 5.5. This indicates that despite the diversity in the

earthquakes profiles and urban characteristics, evacuation behavior is

similarly dependent on seismic intensity. Moreover, we found that probability

density functions of the distances that individuals evacuate are not dependent

on seismic intensities that individuals experience. These insights from

empirical analysis on evacuation from multiple earthquake instances using large

scale mobility data contributes to a deeper understanding of how people react

to earthquakes, and can potentially assist decision makers to simulate and

predict the number of evacuees in urban areas with little computational time

and cost, by using population density information and seismic intensity which

can be observed instantaneously after the shock.

End-to-end (E2E) automatic speech recognition (ASR) with sequence-to-sequence models has gained attention because of its simple model training compared with conventional hidden Markov model based ASR. Recently, several studies report the state-of-the-art E2E ASR results obtained by Transformer. Compared to recurrent neural network (RNN) based E2E models, training of Transformer is more efficient and also achieves better performance on various tasks. However, self-attention used in Transformer requires computation quadratic in its input length. In this paper, we propose to apply lightweight and dynamic convolution to E2E ASR as an alternative architecture to the self-attention to make the computational order linear. We also propose joint training with connectionist temporal classification, convolution on the frequency axis, and combination with self-attention. With these techniques, the proposed architectures achieve better performance than RNN-based E2E model and performance competitive to state-of-the-art Transformer on various ASR benchmarks including noisy/reverberant tasks.

In community-based question answering (CQA) platforms, it takes time for a user to get useful information from among many answers. Although one solution is an answer ranking method, the user still needs to read through the top-ranked answers carefully. This paper proposes a new task of selecting a diverse and non-redundant answer set rather than ranking the answers. Our method is based on determinantal point processes (DPPs), and it calculates the answer importance and similarity between answers by using BERT. We built a dataset focusing on a Japanese CQA site, and the experiments on this dataset demonstrated that the proposed method outperformed several baseline methods.

24 Jul 2018

Recently emerged intelligent assistants on smartphones and home electronics

(e.g., Siri and Alexa) can be seen as novel hybrids of domain-specific

task-oriented spoken dialogue systems and open-domain non-task-oriented ones.

To realize such hybrid dialogue systems, this paper investigates determining

whether or not a user is going to have a chat with the system. To address the

lack of benchmark datasets for this task, we construct a new dataset consisting

of 15; 160 utterances collected from the real log data of a commercial

intelligent assistant (and will release the dataset to facilitate future

research activity). In addition, we investigate using tweets and Web search

queries for handling open-domain user utterances, which characterize the task

of chat detection. Experiments demonstrated that, while simple supervised

methods are effective, the use of the tweets and search queries further

improves the F1-score from 86.21 to 87.53.

This paper addresses the problem of automatic speech recognition (ASR) of a target speaker in background speech. The novelty of our approach is that we focus on a wakeup keyword, which is usually used for activating ASR systems like smart speakers. The proposed method firstly utilizes a DNN-based mask estimator to separate the mixture signal into the keyword signal uttered by the target speaker and the remaining background speech. Then the separated signals are used for calculating a beamforming filter to enhance the subsequent utterances from the target speaker. Experimental evaluations show that the trained DNN-based mask can selectively separate the keyword and background speech from the mixture signal. The effectiveness of the proposed method is also verified with Japanese ASR experiments, and we confirm that the character error rates are significantly improved by the proposed method for both simulated and real recorded test sets.

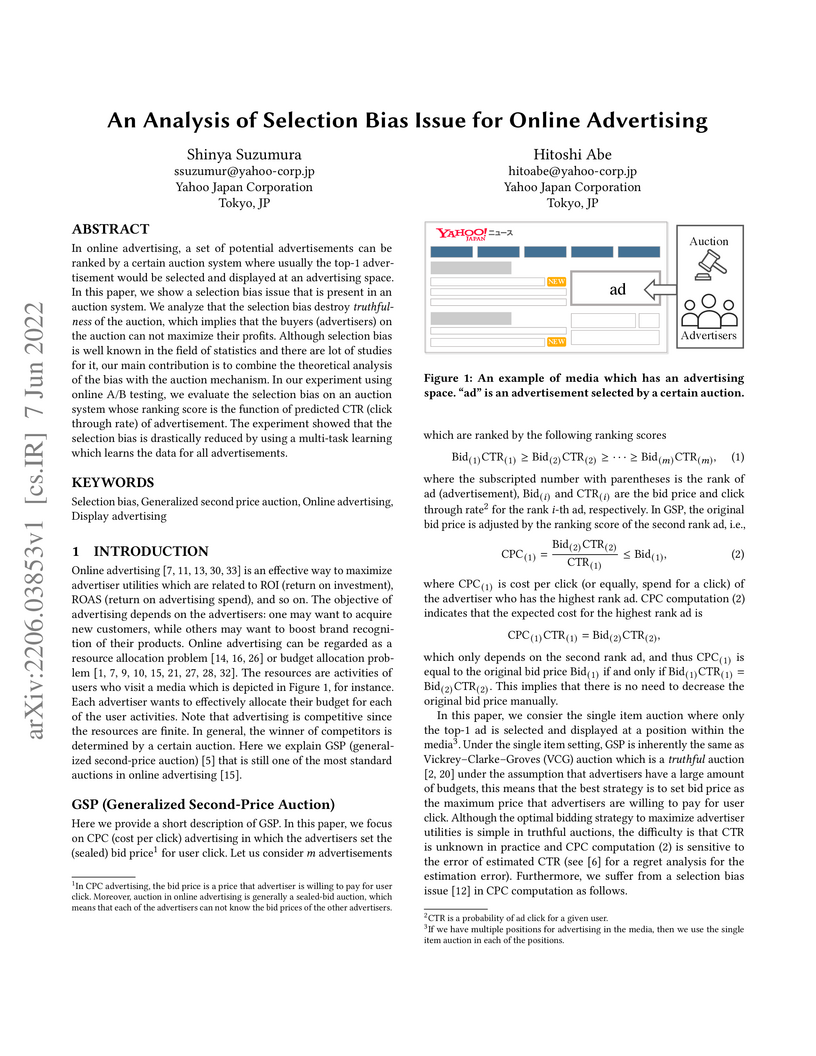

In online advertising, a set of potential advertisements can be ranked by a

certain auction system where usually the top-1 advertisement would be selected

and displayed at an advertising space. In this paper, we show a selection bias

issue that is present in an auction system. We analyze that the selection bias

destroy truthfulness of the auction, which implies that the buyers

(advertisers) on the auction can not maximize their profits. Although selection

bias is well known in the field of statistics and there are lot of studies for

it, our main contribution is to combine the theoretical analysis of the bias

with the auction mechanism. In our experiment using online A/B testing, we

evaluate the selection bias on an auction system whose ranking score is the

function of predicted CTR (click through rate) of advertisement. The experiment

showed that the selection bias is drastically reduced by using a multi-task

learning which learns the data for all advertisements.

This paper explores an incremental training strategy for the skip-gram model with negative sampling (SGNS) from both empirical and theoretical perspectives. Existing methods of neural word embeddings, including SGNS, are multi-pass algorithms and thus cannot perform incremental model update. To address this problem, we present a simple incremental extension of SGNS and provide a thorough theoretical analysis to demonstrate its validity. Empirical experiments demonstrated the correctness of the theoretical analysis as well as the practical usefulness of the incremental algorithm.

COVID-19 has disrupted the global economy and well-being of people at an unprecedented scale and magnitude. To contain the disease, an effective early warning system that predicts the locations of outbreaks is of crucial importance. Studies have shown the effectiveness of using large-scale mobility data to monitor the impacts of non-pharmaceutical interventions (e.g., lockdowns) through population density analysis. However, predicting the locations of potential outbreak occurrence is difficult using mobility data alone. Meanwhile, web search queries have been shown to be good predictors of the disease spread. In this study, we utilize a unique dataset of human mobility trajectories (GPS traces) and web search queries with common user identifiers (> 450K users), to predict COVID-19 hotspot locations beforehand. More specifically, web search query analysis is conducted to identify users with high risk of COVID-19 contraction, and social contact analysis was further performed on the mobility patterns of these users to quantify the risk of an outbreak. Our approach is empirically tested using data collected from users in Tokyo, Japan. We show that by integrating COVID-19 related web search query analytics with social contact networks, we are able to predict COVID-19 hotspot locations 1-2 weeks beforehand, compared to just using social contact indexes or web search data analysis. This study proposes a novel method that can be used in early warning systems for disease outbreak hotspots, which can assist government agencies to prepare effective strategies to prevent further disease spread.

There are no more papers matching your filters at the moment.