Yerevan State University

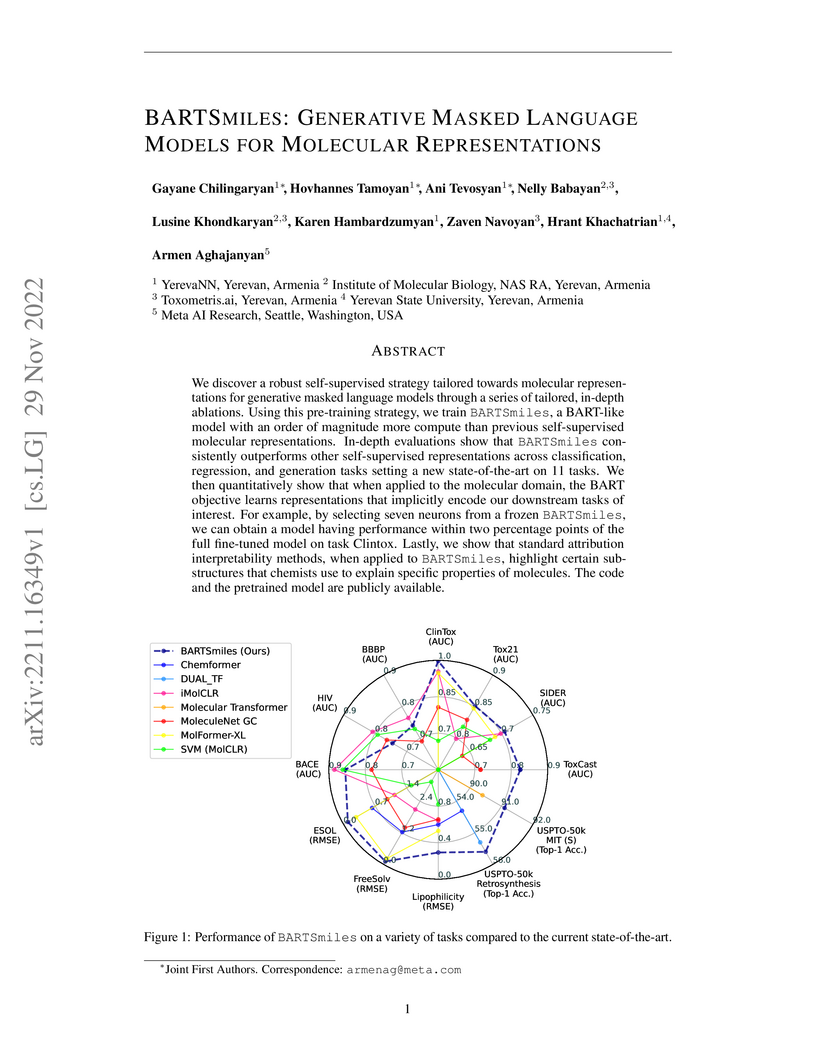

We discover a robust self-supervised strategy tailored towards molecular

representations for generative masked language models through a series of

tailored, in-depth ablations. Using this pre-training strategy, we train

BARTSmiles, a BART-like model with an order of magnitude more compute than

previous self-supervised molecular representations. In-depth evaluations show

that BARTSmiles consistently outperforms other self-supervised representations

across classification, regression, and generation tasks setting a new

state-of-the-art on 11 tasks. We then quantitatively show that when applied to

the molecular domain, the BART objective learns representations that implicitly

encode our downstream tasks of interest. For example, by selecting seven

neurons from a frozen BARTSmiles, we can obtain a model having performance

within two percentage points of the full fine-tuned model on task Clintox.

Lastly, we show that standard attribution interpretability methods, when

applied to BARTSmiles, highlight certain substructures that chemists use to

explain specific properties of molecules. The code and the pretrained model are

publicly available.

Recent advancements in large language models have opened new possibilities

for generative molecular drug design. We present Chemlactica and Chemma, two

language models fine-tuned on a novel corpus of 110M molecules with computed

properties, totaling 40B tokens. These models demonstrate strong performance in

generating molecules with specified properties and predicting new molecular

characteristics from limited samples. We introduce a novel optimization

algorithm that leverages our language models to optimize molecules for

arbitrary properties given limited access to a black box oracle. Our approach

combines ideas from genetic algorithms, rejection sampling, and prompt

optimization. It achieves state-of-the-art performance on multiple molecular

optimization benchmarks, including an 8% improvement on Practical Molecular

Optimization compared to previous methods. We publicly release the training

corpus, the language models and the optimization algorithm.

01 Oct 2025

Recently, LHAASO detected a gamma-ray emission extending beyond 100TeV from 4 sources associated to powerful microquasars. We propose that such sources are the main Galactic PeVatrons and investigate their contribution to the proton and gamma-ray fluxes by modeling their entire population. We find that the presence of only ∼10 active powerful microquasars in the Galaxy at any given time is sufficient to account for the proton flux around the knee and to provide a very good explanation of cosmic-ray and gamma-ray data in a self-consistent picture. The 10TeV bump and the 300TeV hardening in the cosmic-ray spectrum naturally appear, and the diffuse background measured by LHAASO above a few tens of TeV is accounted for. This supports the paradigm in which cosmic rays around the knee are predominantly accelerated in a very limited number of powerful microquasars.

07 Oct 2025

University of Science and Technology of ChinaTianfu Cosmic Ray Research CenterDublin Institute for Advanced StudiesYerevan State UniversityKonan UniversityInstitute for Cosmic Ray Research, University of TokyoColumbia Astrophysics Laboratory, Columbia UniversityMax-Planck-Institut f¨ur KernphysikKavli Institute for the Physics and Mathematics of the Universe (WPI), The University of TokyoRemote Sensing Technology Center of JapanDepartment of Physics, Rikkyo UniversityDepartment of Earth and Space Science, Graduate School of Science, Osaka UniversityKey Laboratory of Particle Astrophyics, Institute of High Energy Physics, Chinese Academy of SciencesFaculty of Science, Kanagawa UniversityInterdisciplinary Theoretical & Mathematical Science Center (iTHEMS), RIKENDepartment of Physics and Astronomy, University of ManitobaDepartment of Physics, Graduate School of Science, Kyoto University

University of Science and Technology of ChinaTianfu Cosmic Ray Research CenterDublin Institute for Advanced StudiesYerevan State UniversityKonan UniversityInstitute for Cosmic Ray Research, University of TokyoColumbia Astrophysics Laboratory, Columbia UniversityMax-Planck-Institut f¨ur KernphysikKavli Institute for the Physics and Mathematics of the Universe (WPI), The University of TokyoRemote Sensing Technology Center of JapanDepartment of Physics, Rikkyo UniversityDepartment of Earth and Space Science, Graduate School of Science, Osaka UniversityKey Laboratory of Particle Astrophyics, Institute of High Energy Physics, Chinese Academy of SciencesFaculty of Science, Kanagawa UniversityInterdisciplinary Theoretical & Mathematical Science Center (iTHEMS), RIKENDepartment of Physics and Astronomy, University of ManitobaDepartment of Physics, Graduate School of Science, Kyoto UniversityNaomi Tsuji et al. conducted the first direct proper motion measurements of X-ray knots in the large-scale jets of the SS 433 microquasar, finding these acceleration regions to be largely stationary. This observational constraint supports the presence of standing recollimation shocks and implies highly efficient particle acceleration to PeV energies.

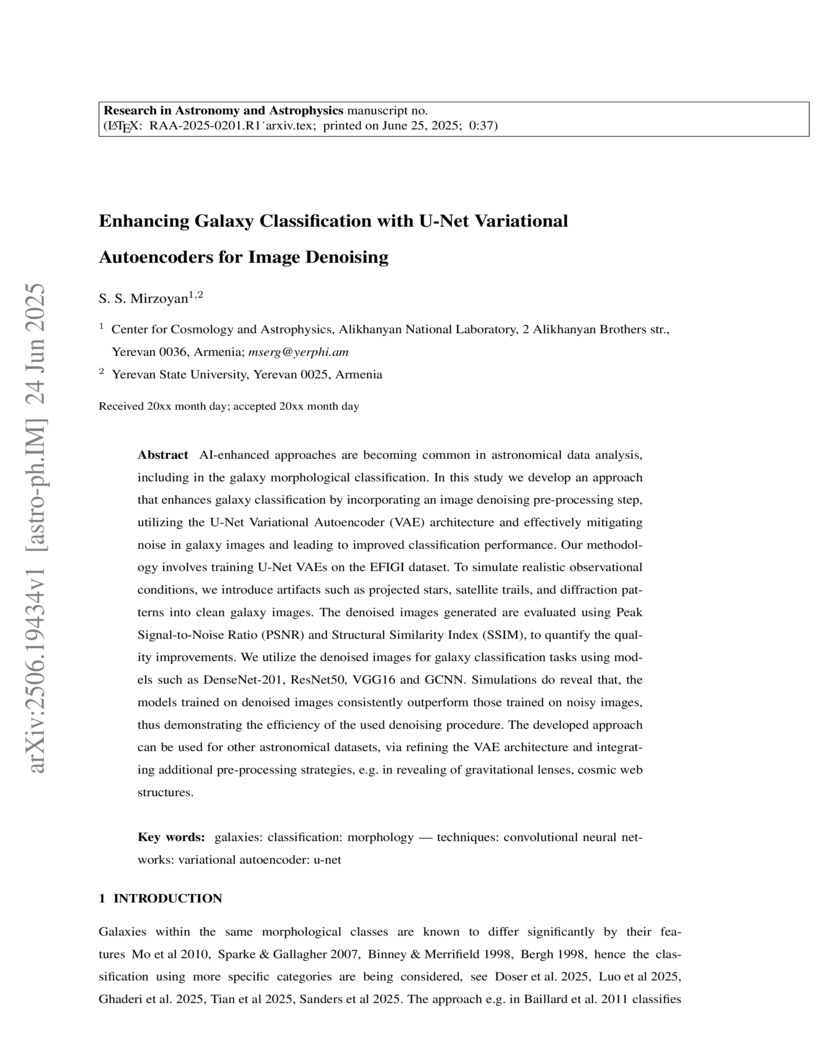

AI-enhanced approaches are becoming common in astronomical data analysis, including in the galaxy morphological classification. In this study we develop an approach that enhances galaxy classification by incorporating an image denoising pre-processing step, utilizing the U-Net Variational Autoencoder (VAE) architecture and effectively mitigating noise in galaxy images and leading to improved classification performance. Our methodology involves training U-Net VAEs on the EFIGI dataset. To simulate realistic observational conditions, we introduce artifacts such as projected stars, satellite trails, and diffraction patterns into clean galaxy images. The denoised images generated are evaluated using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM), to quantify the quality improvements. We utilize the denoised images for galaxy classification tasks using models such as DenseNet-201, ResNet50, VGG16 and GCNN. Simulations do reveal that, the models trained on denoised images consistently outperform those trained on noisy images, thus demonstrating the efficiency of the used denoising procedure. The developed approach can be used for other astronomical datasets, via refining the VAE architecture and integrating additional pre-processing strategies, e.g. in revealing of gravitational lenses, cosmic web structures.

Transfer learning from pretrained language models recently became the

dominant approach for solving many NLP tasks. A common approach to transfer

learning for multiple tasks that maximize parameter sharing trains one or more

task-specific layers on top of the language model. In this paper, we present an

alternative approach based on adversarial reprogramming, which extends earlier

work on automatic prompt generation. Adversarial reprogramming attempts to

learn task-specific word embeddings that, when concatenated to the input text,

instruct the language model to solve the specified task. Using up to 25K

trainable parameters per task, this approach outperforms all existing methods

with up to 25M trainable parameters on the public leaderboard of the GLUE

benchmark. Our method, initialized with task-specific human-readable prompts,

also works in a few-shot setting, outperforming GPT-3 on two SuperGLUE tasks

with just 32 training samples.

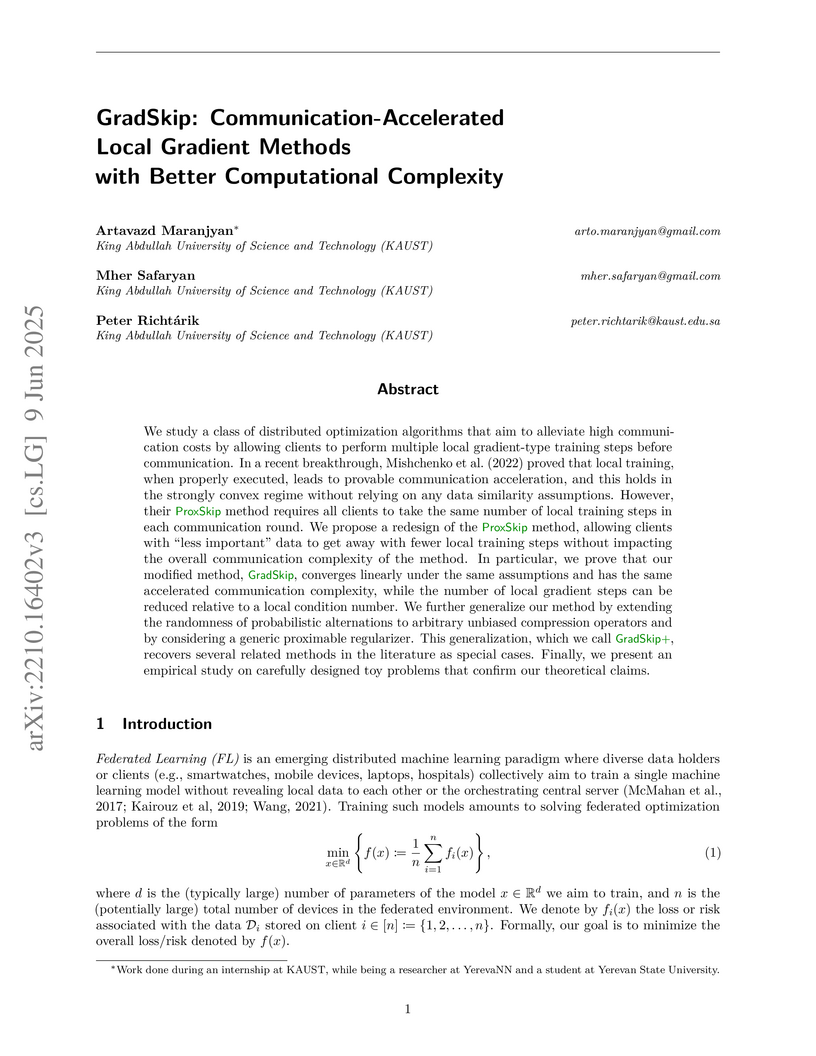

We study a class of distributed optimization algorithms that aim to alleviate high communication costs by allowing clients to perform multiple local gradient-type training steps before communication. In a recent breakthrough, Mishchenko et al. (2022) proved that local training, when properly executed, leads to provable communication acceleration, and this holds in the strongly convex regime without relying on any data similarity assumptions. However, their ProxSkip method requires all clients to take the same number of local training steps in each communication round. We propose a redesign of the ProxSkip method, allowing clients with ``less important'' data to get away with fewer local training steps without impacting the overall communication complexity of the method. In particular, we prove that our modified method, GradSkip, converges linearly under the same assumptions and has the same accelerated communication complexity, while the number of local gradient steps can be reduced relative to a local condition number. We further generalize our method by extending the randomness of probabilistic alternations to arbitrary unbiased compression operators and by considering a generic proximable regularizer. This generalization, which we call GradSkip+, recovers several related methods in the literature as special cases. Finally, we present an empirical study on carefully designed toy problems that confirm our theoretical claims.

12 Nov 2025

National University of SingaporeYerevan State UniversityNational Research University “Higher School of Economics”Institute for Functional Intelligent MaterialsMoscow Center for Advanced StudiesInstitute of Microelectronics Technology and High Purity Materials, Russian Academy of SciencesCenter for Neurophysics and Neuromorphic TechnologiesInstitute of Microelectronics Technology and High Purity MaterialsInstitute for SpectroscopyInstitute for Spectroscopy, Russian Academy of SciencesProgrammable Functional Materials Lab, Center for Neurophysics and Neuromorphic Technologies

National University of SingaporeYerevan State UniversityNational Research University “Higher School of Economics”Institute for Functional Intelligent MaterialsMoscow Center for Advanced StudiesInstitute of Microelectronics Technology and High Purity Materials, Russian Academy of SciencesCenter for Neurophysics and Neuromorphic TechnologiesInstitute of Microelectronics Technology and High Purity MaterialsInstitute for SpectroscopyInstitute for Spectroscopy, Russian Academy of SciencesProgrammable Functional Materials Lab, Center for Neurophysics and Neuromorphic TechnologiesTunneling conductance between two bilayer graphene (BLG) sheets separated by 2 nm-thick insulating barrier was measured in two devices with the twist angles between BLGs less than 1°. At small bias voltages, the tunneling occurs with conservation of energy and momentum at the points of intersection between two relatively shifted Fermi circles. Here, we experimentally found and theoretically described signatures of electron-hole asymmetric band structure of BLG: since holes are heavier, the tunneling conductance is enhanced at the hole doping due to the higher density of states. Another key feature of BLG that we explore is gap opening in a vertical electric field with a strong polarization of electron wave function at van Hove singularities near the gap edges. This polarization, by shifting electron wave function in one BLG closer to or father from the other BLG, gives rise to asymmetric tunneling resonances in the conductance around charge neutrality points, which result in strong sensitivity of the tunneling current to minor changes of the gate voltages. The observed phenomena are reproduced by our theoretical model taking into account electrostatics of the dual-gated structure, quantum capacitance effects, and self-consistent gap openings in both BLGs.

29 Sep 2025

Quasicrystals occupy a unique position between periodic and disordered systems, where localization phenomena such as Anderson transitions and mobility edges can emerge even in the absence of disorder. This distinctive behavior motivates the development of robust, all-optical diagnostic tools capable of probing the structural, topological, and dynamical properties of such systems. In this work, focusing on generalized Aubry-André-Harper models and on an incommensurate potential in the continuum limit, we demonstrate that high-harmonic generation phenomenon serves as a powerful probe of localization transitions and mobility edges in quasicrystals. We introduce a new parameter--dipole mobility--which captures the impact of intraband dipole transitions and enables classification of nonlinear optical regimes, where excitation and high-harmonic generation yield can differ by orders of magnitude. We show that the cutoff frequency of harmonics is strongly influenced by the position of the mobility edge, providing a robust and experimentally accessible signature of localization transitions in quasicrystals.

19 Dec 2024

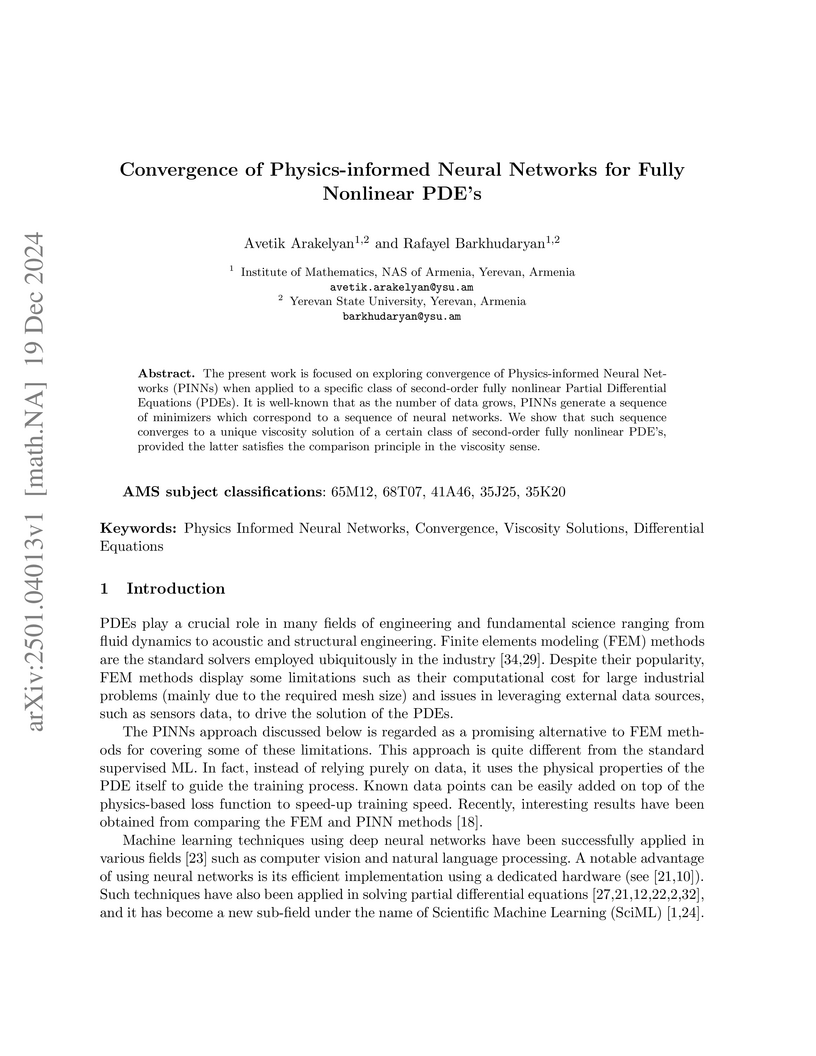

The present work is focused on exploring convergence of Physics-informed Neural Networks (PINNs) when applied to a specific class of second-order fully nonlinear Partial Differential Equations (PDEs). It is well-known that as the number of data grows, PINNs generate a sequence of minimizers which correspond to a sequence of neural networks. We show that such sequence converges to a unique viscosity solution of a certain class of second-order fully nonlinear PDE's, provided the latter satisfies the comparison principle in the viscosity sense.

In this paper, we present a comparative analysis of various self-supervised Vision Transformers (ViTs), focusing on their local representative power. Inspired by large language models, we examine the abilities of ViTs to perform various computer vision tasks with little to no fine-tuning. We design evaluation framework to analyze the quality of local, i.e.\ patch-level, representations in the context of few-shot semantic segmentation, instance identification, object retrieval and tracking. We discover that contrastive learning based methods like DINO produce more universal patch representations that can be immediately applied for downstream tasks with no parameter tuning, compared to masked image modeling. The embeddings learned using the latter approach, e.g. in masked autoencoders, have high variance features that harm distance-based algorithms, such as k-NN, and do not contain useful information for most downstream tasks. Furthermore, we demonstrate that removing these high-variance features enhances k-NN for MAE, as well as for its recent extension Scale-MAE. Finally, we find an object instance retrieval setting where DINOv2, a model pretrained on two orders of magnitude more data, falls short of its less compute intensive counterpart DINO.

The number and diversity of remote sensing satellites grows over time, while the vast majority of labeled data comes from older satellites. As the foundation models for Earth observation scale up, the cost of (re-)training to support new satellites grows too, so the generalization capabilities of the models towards new satellites become increasingly important. In this work we introduce GeoCrossBench, an extension of the popular GeoBench benchmark with a new evaluation protocol: it tests the in-distribution performance; generalization to satellites with no band overlap; and generalization to satellites with additional bands with respect to the training set. We also develop a self-supervised extension of ChannelViT, ChiViT, to improve its cross-satellite performance. First, we show that even the best foundation models for remote sensing (DOFA, TerraFM) do not outperform general purpose models like DINOv3 in the in-distribution setting. Second, when generalizing to new satellites with no band overlap, all models suffer 2-4x drop in performance, and ChiViT significantly outperforms the runner-up DINOv3. Third, the performance of all tested models drops on average by 5-25\% when given additional bands during test time. Finally, we show that fine-tuning just the last linear layer of these models using oracle labels from all bands can get relatively consistent performance across all satellites, highlighting that the benchmark is far from being saturated. We publicly release the code and the datasets to encourage the development of more future-proof remote sensing models with stronger cross-satellite generalization.

A deep learning approach for outdoor environment reconstruction, developed at Yerevan State University and CNR Pisa, exclusively leverages ambient radio frequency signals, demonstrating a viable alternative to vision-based methods. The transformer-based model achieved an IoU of 42.2% and a Chamfer distance of 18.3m on a synthetic dataset, showing improved resilience and scalability for mapping solutions.

The vacuum expectation value of the current density for a charged scalar field is investigated in Rindler spacetime with a part of spatial dimensions compactified to a torus. It is assumed that the field is prepared in the Fulling-Rindler vacuum state. For general values of the phases in the periodicity conditions and the lengths of compact dimensions, the expressions are provided for the Hadamard function and vacuum currents. The current density along compact dimensions is a periodic function of the magnetic flux enclosed by those dimensions and vanishes on the Rindler horizon. The obtained results are compared with the corresponding currents in the Minkowski vacuum. The near-horizon and large-distance asymptotics are discussed for the vacuum currents around cylindrical black holes. In the near-horizon approximation the lengths of compact dimensions are determined by the horizon radius. At large distances from the horizon the geometry is approximated by a locally anti-de Sitter spacetime with toroidally compact dimensions and the lengths of compact dimensions are determined by negative cosmological constant.

17 May 2024

Mixed spin-(1/2,1/2,1) trimer with two different Landé g-factors and two different exchange couplings is considered. The main feature of the model is non-conserving magnetization. The Hamiltonian of the system is diagonalized analytically. We presented a detailed analysis of the ground state properties, revealing several possible ground state phase diagrams and magnetization profiles. The main focus is on how non-conserving magnetization affects quantum entanglement. We have found that non-conserving magnetization can bring to the continuous dependence of the entanglement quantifying parameter (negativity) on magnetic field within the same eigenstate, while for the case of uniform g-factors it is a constant. The main result is an essential enhancement of the entanglement in case of uniform couplings for one pair of spins caused by an arbitrary small difference in the values of g-factors. This enhancement is robust and brings to almost 7-fold increasing of the negativity. We have also found weakening of entanglement for other cases. Thus, non-conserving magnetization offers a broad opportunity to manipulate the entanglement by means of magnetic field.

Digital twin networks (DTNs) serve as an emerging facilitator in the

industrial networking sector, enabling the management of new classes of

services, which require tailored support for improved resource utilization, low

latencies and accurate data fidelity. In this paper, we explore the

intersection between theoretical recommendations and practical implications of

applying DTNs to industrial networked environments, sharing empirical findings

and lessons learned from our ongoing work. To this end, we first provide

experimental examples from selected aspects of data representations and

fidelity, mixed-criticality workload support, and application-driven services.

Then, we introduce an architectural framework for DTNs, exposing a more

practical extension of existing standards; notably the ITU-T Y.3090 (2022)

recommendation. Specifically, we explore and discuss the dual nature of DTNs,

meant as a digital twin of the network and a network of digital twins, allowing

the co-existence of both paradigms.

25 Feb 2010

For i=2,3 and a cubic graph G let νi(G) denote the maximum number of edges that can be covered by i matchings. We show that ν2(G)≥4/5∣V(G)∣ and ν3(G)≥7/6∣V(G)∣. Moreover, it turns out that ν2(G)≤4∣V(G)∣+2ν3(G).

06 Feb 2018

We construct topological defects in the Liouville field theory producing jump in the value of cosmological constant. We construct them using the Cardy-Lewellen equation for the two-point function with defect. We show that there are continuous and discrete families of such kind of defects. For the continuous family of defects we also find the Lagrangian description and check its agreement with the solution of the Cardy-Lewellen equation using the heavy asymptotic semiclasscial limit.

The formation of the cosmic structures in the late Universe is considered using Vlasov kinetic approach. The crucial point is the use of the gravitational potential with repulsive term of the cosmological constant which provides a solution to the Hubble tension, that is the Hubble parameter for the late Universe has to differ from its global cosmological value. This also provides a mechanism of formation of stationary semi-periodic gravitating structures of voids and walls, so that the cosmological constant has a role of the scaling and hence can be compared with the observational data for given regions. The considered mechanism of the structure formation in late cosmological epoch then is succeeding the epoch described by the evolution of primordial density fluctuations.

29 Jan 2025

Causal Bayesian optimisation (CaBO) combines causality with Bayesian

optimisation (BO) and shows that there are situations where the optimal reward

is not achievable if causal knowledge is ignored. While CaBO exploits causal

relations to determine the set of controllable variables to intervene on, it

does not exploit purely observational variables and marginalises them. We show

that, in general, utilising a subset of observational variables as a context to

choose the values of interventional variables leads to lower cumulative

regrets. We propose a general framework of contextual causal Bayesian

optimisation that efficiently searches through combinations of controlled and

contextual variables, known as policy scopes, and identifies the one yielding

the optimum. We highlight the difficulties arising from the application of the

causal acquisition function currently used in CaBO to select the policy scope

in contextual settings and propose a multi-armed bandits based selection

mechanism. We analytically show that well-established methods, such as

contextual BO (CoBO) or CaBO, are not able to achieve the optimum in some

cases, and empirically show that the proposed method achieves sub-linear regret

in various environments and under different configurations.

There are no more papers matching your filters at the moment.