BIGAI

Vision language models (VLMs) have achieved impressive performance across a variety of computer vision tasks. However, the multimodal reasoning capability has not been fully explored in existing models. In this paper, we propose a Chain-of-Focus (CoF) method that allows VLMs to perform adaptive focusing and zooming in on key image regions based on obtained visual cues and the given questions, achieving efficient multimodal reasoning. To enable this CoF capability, we present a two-stage training pipeline, including supervised fine-tuning (SFT) and reinforcement learning (RL). In the SFT stage, we construct the MM-CoF dataset, comprising 3K samples derived from a visual agent designed to adaptively identify key regions to solve visual tasks with different image resolutions and questions. We use MM-CoF to fine-tune the Qwen2.5-VL model for cold start. In the RL stage, we leverage the outcome accuracies and formats as rewards to update the Qwen2.5-VL model, enabling further refining the search and reasoning strategy of models without human priors. Our model achieves significant improvements on multiple benchmarks. On the V* benchmark that requires strong visual reasoning capability, our model outperforms existing VLMs by 5% among 8 image resolutions ranging from 224 to 4K, demonstrating the effectiveness of the proposed CoF method and facilitating the more efficient deployment of VLMs in practical applications.

PiSSA (Principal Singular Values and Singular Vectors Adaptation) introduces an SVD-based initialization strategy for low-rank adapters in Large Language Models, directly tuning the principal components of pre-trained weight matrices. This approach consistently outperforms LoRA in fine-tuning performance across diverse models and tasks, achieving faster convergence and significantly reducing quantization error in its QPiSSA variant, for example, improving Gemma-7B's GSM8K accuracy by 3.25% over LoRA.

26 Oct 2025

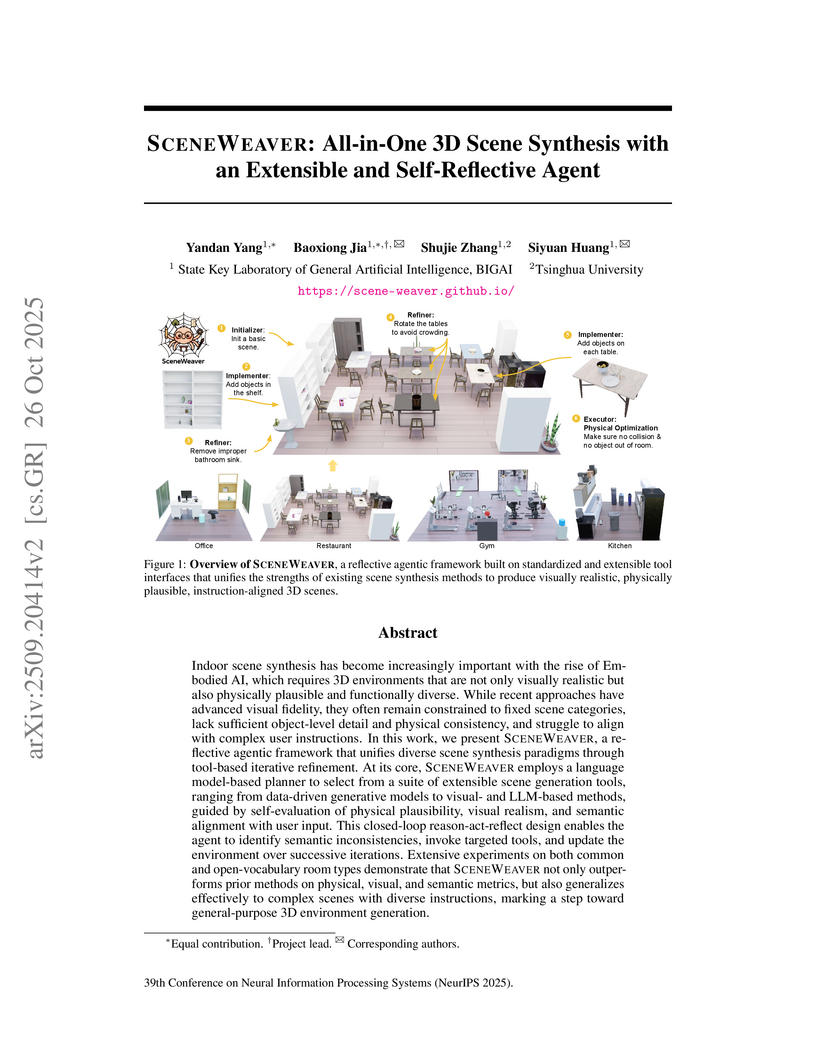

SCENEWEAVER is a reflective agentic framework that utilizes Multimodal Large Language Models (MLLMs) for feedback-guided, all-in-one 3D scene synthesis. It unifies diverse synthesis methods through a standardized tool interface and a "reason-act-reflect" paradigm, achieving state-of-the-art results in visual realism, physical plausibility, and semantic alignment across various scene types.

Researchers from BIGAI and Tsinghua University introduce MANIPTRANS, a two-stage framework that enables efficient transfer of human bimanual manipulation skills to robotic hands through combined trajectory imitation and residual learning, while generating DEXMANIPNET - a dataset of 3.3K manipulation episodes demonstrating generalization across multiple robotic hand designs.

Researchers developed COLA, a framework enabling humanoid robots to compliantly and coordinately carry objects with humans, leveraging a proprioception-only policy learned through a three-step training process. The system achieved a 24.7% reduction in human effort and demonstrated stable performance across diverse objects and terrains in real-world scenarios.

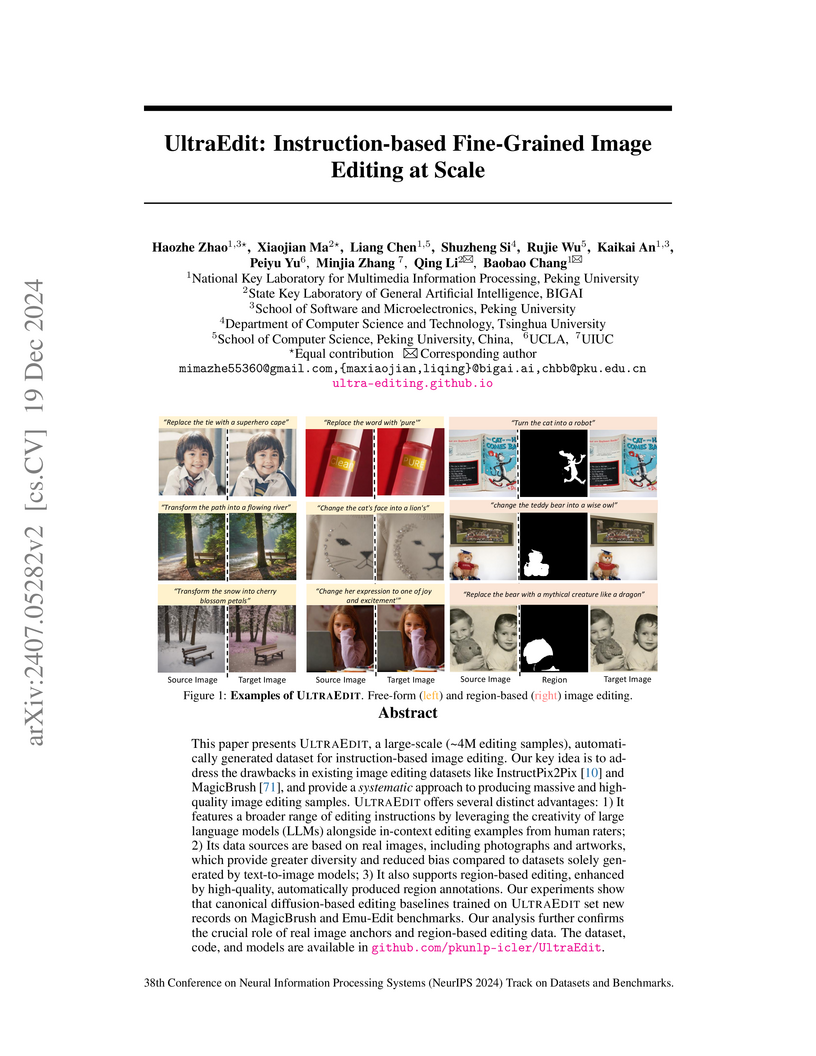

UltraEdit introduces a large-scale (over 4 million samples), high-quality dataset for instruction-based image editing, designed to mitigate common biases and enhance fine-grained control. Models trained on UltraEdit demonstrate improved performance on established benchmarks like MagicBrush and Emu Edit, particularly for region-based and multi-turn editing tasks.

26 Apr 2025

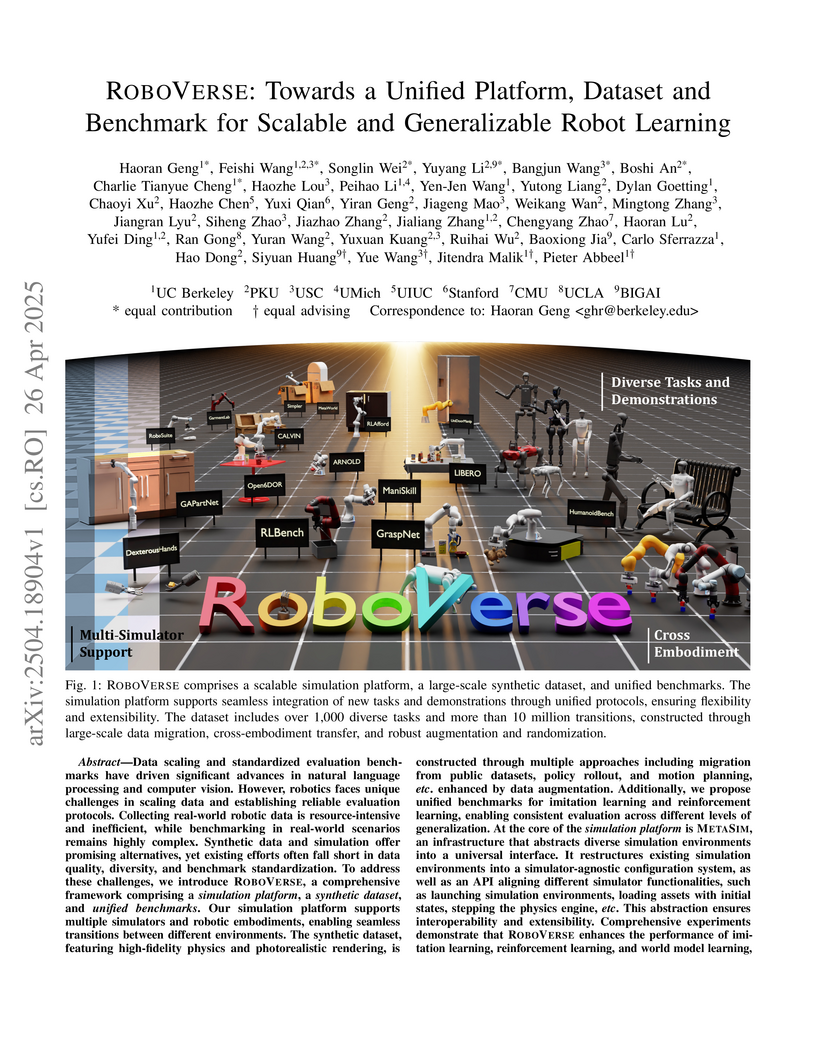

RoboVerse introduces a unified robotics platform combining high-fidelity simulation environments, a large-scale synthetic dataset, and standardized benchmarks for imitation and reinforcement learning, enabling cross-simulator integration and improved sim-to-real transfer through its METASIM infrastructure and diverse data generation approaches.

Researchers developed an automated pipeline to generate a large-scale multi-modal tool-usage dataset (MM-Traj), which was then used to fine-tune Vision-Language Models (VLMs) as agents, resulting in enhanced multi-step reasoning and tool-usage capabilities across various multi-modal tasks. The T3-Agent, trained on MM-Traj, demonstrated improved accuracy and tool utilization on challenging multi-modal benchmarks like GTA and GAIA, closing the performance gap with larger, closed-source models.

Building Graphical User Interface (GUI) agents is a promising research direction, which simulates human interaction with computers or mobile phones to perform diverse GUI tasks. However, a major challenge in developing generalized GUI agents is the lack of sufficient trajectory data across various operating systems and applications, mainly due to the high cost of manual annotations. In this paper, we propose the TongUI framework that builds generalized GUI agents by learning from rich multimodal web tutorials. Concretely, we crawl and process online GUI tutorials (such as videos and articles) into GUI agent trajectory data, through which we produce the GUI-Net dataset containing 143K trajectory data across five operating systems and more than 200 applications. We develop the TongUI agent by fine-tuning Qwen2.5-VL-3B/7B models on GUI-Net, which show remarkable performance improvements on commonly used grounding and navigation benchmarks, outperforming baseline agents about 10\% on multiple benchmarks, showing the effectiveness of the GUI-Net dataset and underscoring the significance of our TongUI framework. We will fully open-source the code, the GUI-Net dataset, and the trained models soon.

24 Oct 2025

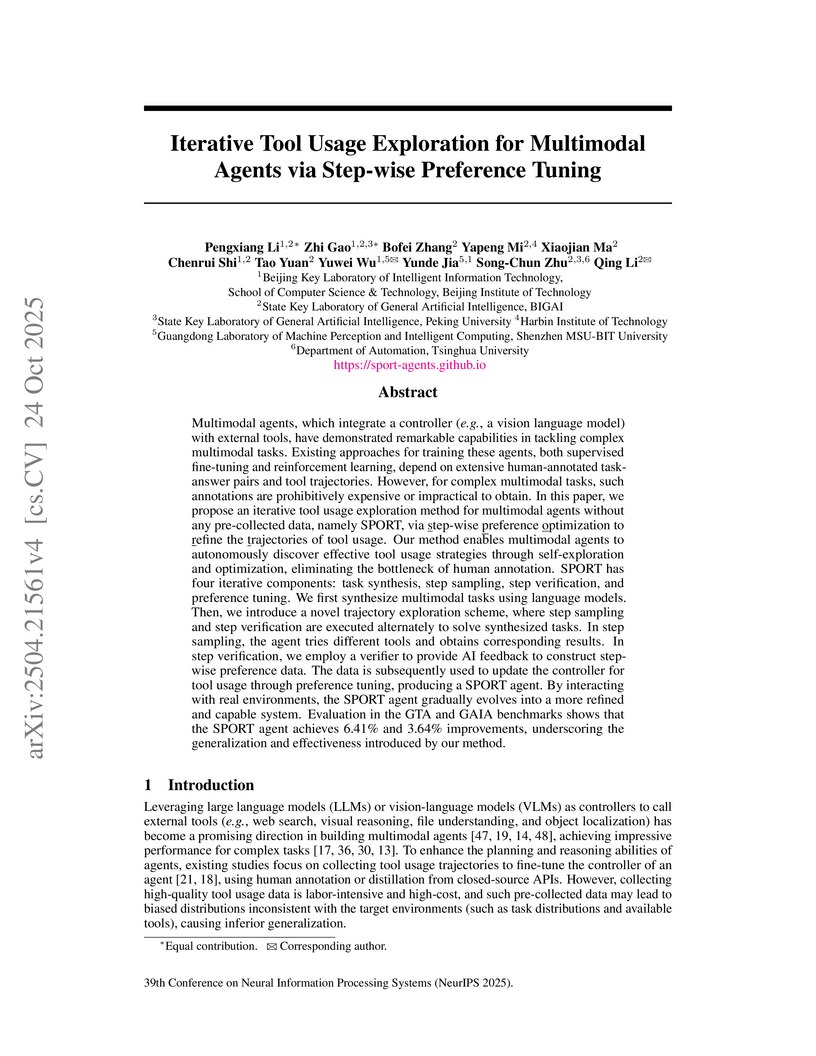

Researchers developed SPORT, an iterative framework enabling multimodal agents to autonomously enhance tool usage without human-annotated data. This method employs step-wise AI feedback and direct preference optimization, achieving up to 6.41% higher answer accuracy on the GTA benchmark and 3.64% higher answer accuracy on the GAIA benchmark compared to strong baselines.

PoliCon introduces a benchmark to assess large language models' capabilities in facilitating and achieving political consensus under diverse real-world objectives. Experiments revealed that while models like Gemini-2.5-Flash perform well on straightforward consensus tasks, all tested LLMs struggle with complex goals such as Rawlsianism and exhibit inherent partisan biases, highlighting limitations in sophisticated coalition-building.

30 Aug 2025

CLONE introduces a closed-loop system for whole-body humanoid teleoperation that achieves precise control for long-horizon tasks, virtually eliminating positional drift using only head and hand tracking. The system demonstrated a mean tracking error of 5.1 cm over 8.9 meters on a Unitree G1 robot, effectively enabling robust and coordinated execution of diverse dynamic skills.

JARVIS-VLA, a Vision Language Action model, is introduced for playing visual games in Minecraft using keyboards and mouse, leveraging ActVLP, a three-stage post-training paradigm that improves world knowledge and visual grounding before action tuning. This enabled JARVIS-VLA-Qwen2-VL to achieve state-of-the-art performance on over 1,000 diverse atomic tasks in Minecraft, demonstrating a 40% improvement over prior baselines.

10 Oct 2025

Large language model based multi-agent systems (MAS) have unlocked significant advancements in tackling complex problems, but their increasing capability introduces a structural fragility that makes them difficult to debug. A key obstacle to improving their reliability is the severe scarcity of large-scale, diverse datasets for error attribution, as existing resources rely on costly and unscalable manual annotation. To address this bottleneck, we introduce Aegis, a novel framework for Automated error generation and attribution for multi-agent systems. Aegis constructs a large dataset of 9,533 trajectories with annotated faulty agents and error modes, covering diverse MAS architectures and task domains. This is achieved using a LLM-based manipulator that can adaptively inject context-aware errors into successful execution trajectories. Leveraging fine-grained labels and the structured arrangement of positive-negative sample pairs, Aegis supports three different learning paradigms: Supervised Fine-Tuning, Reinforcement Learning, and Contrastive Learning. We develop learning methods for each paradigm. Comprehensive experiments show that trained models consistently achieve substantial improvements in error attribution. Notably, several of our fine-tuned LLMs demonstrate performance competitive with or superior to proprietary models an order of magnitude larger, validating our automated data generation framework as a crucial resource for developing more robust and interpretable multi-agent systems. Our project website is available at this https URL.

Embodied VideoAgent develops a multimodal agent that uses persistent object memory from egocentric videos and embodied sensors to understand dynamic 3D scenes. The system achieved an 85.37% success rate for 3D object localization on Ego4D-VQ3D and showed improved performance on embodied question answering benchmarks compared to existing models.

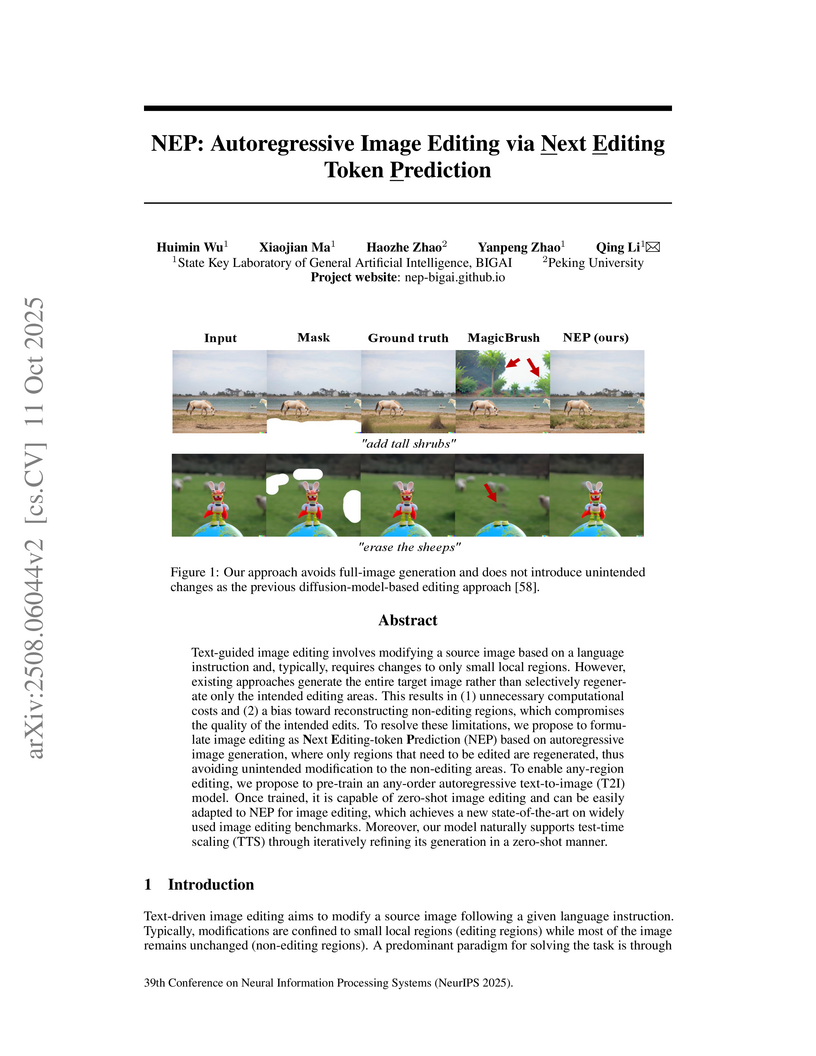

Text-guided image editing involves modifying a source image based on a language instruction and, typically, requires changes to only small local regions. However, existing approaches generate the entire target image rather than selectively regenerate only the intended editing areas. This results in (1) unnecessary computational costs and (2) a bias toward reconstructing non-editing regions, which compromises the quality of the intended edits. To resolve these limitations, we propose to formulate image editing as Next Editing-token Prediction (NEP) based on autoregressive image generation, where only regions that need to be edited are regenerated, thus avoiding unintended modification to the non-editing areas. To enable any-region editing, we propose to pre-train an any-order autoregressive text-to-image (T2I) model. Once trained, it is capable of zero-shot image editing and can be easily adapted to NEP for image editing, which achieves a new state-of-the-art on widely used image editing benchmarks. Moreover, our model naturally supports test-time scaling (TTS) through iteratively refining its generation in a zero-shot manner. The project page is: this https URL

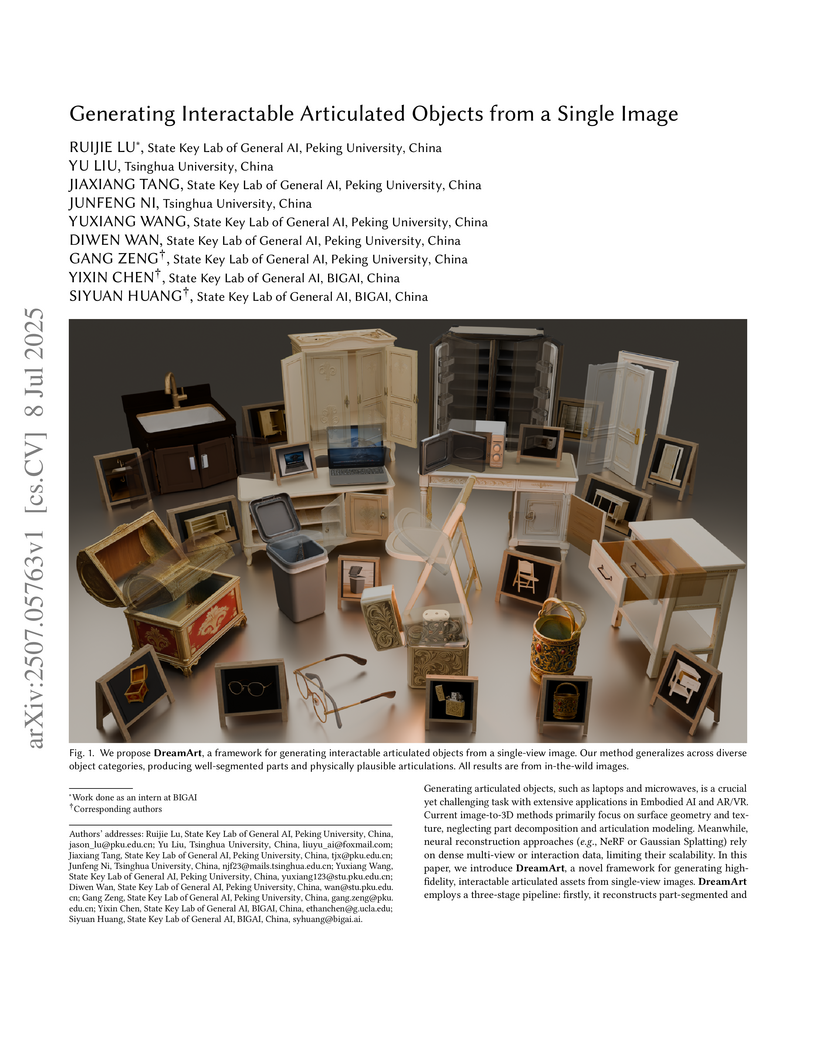

DreamArt generates high-fidelity, interactable articulated 3D objects from a single image by integrating part-aware 3D reconstruction, conditional video diffusion with novel prompts, and physics-informed articulation optimization. The system demonstrated superior visual quality and articulation plausibility compared to existing methods, achieving higher PSNR, SSIM, and FVD for video synthesis, and significantly better user study scores for overall asset quality.

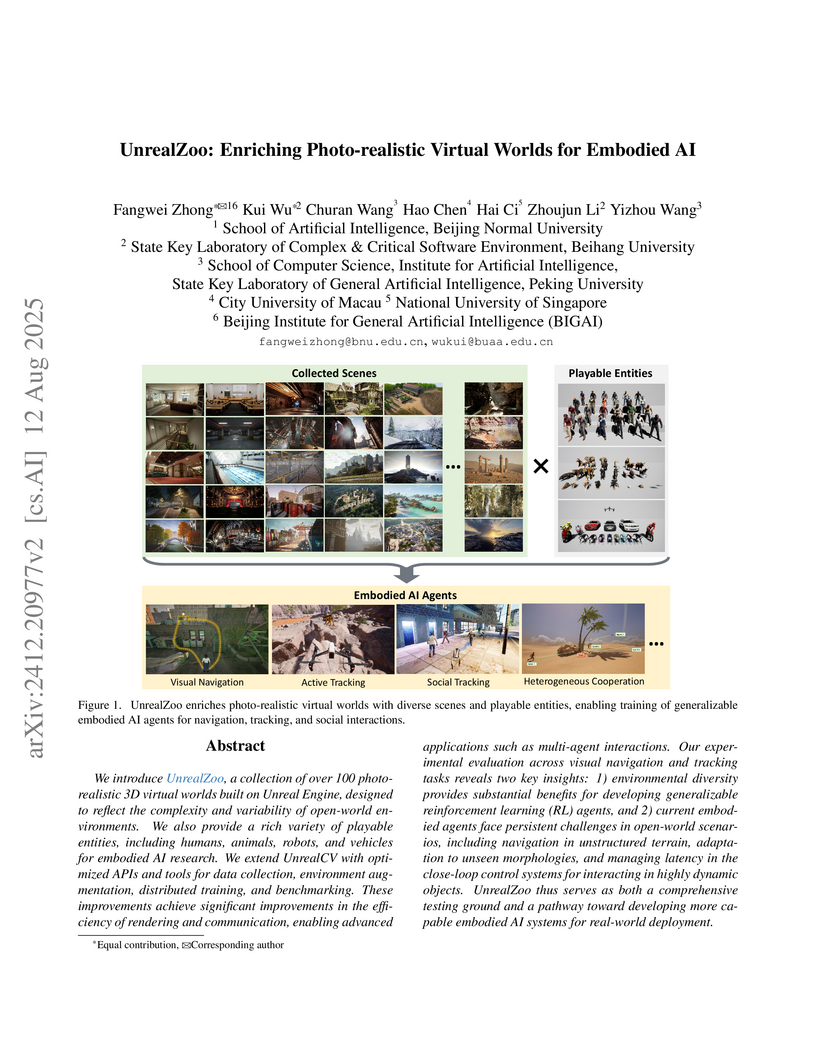

We introduce UnrealZoo, a collection of over 100 photo-realistic 3D virtual worlds built on Unreal Engine, designed to reflect the complexity and variability of open-world environments. We also provide a rich variety of playable entities, including humans, animals, robots, and vehicles for embodied AI research. We extend UnrealCV with optimized APIs and tools for data collection, environment augmentation, distributed training, and benchmarking. These improvements achieve significant improvements in the efficiency of rendering and communication, enabling advanced applications such as multi-agent interactions. Our experimental evaluation across visual navigation and tracking tasks reveals two key insights: 1) environmental diversity provides substantial benefits for developing generalizable reinforcement learning (RL) agents, and 2) current embodied agents face persistent challenges in open-world scenarios, including navigation in unstructured terrain, adaptation to unseen morphologies, and managing latency in the close-loop control systems for interacting in highly dynamic objects. UnrealZoo thus serves as both a comprehensive testing ground and a pathway toward developing more capable embodied AI systems for real-world deployment.

A systematic review comprehensively organizes the field of safe reinforcement learning, analyzing its methods, theories, and applications through a proposed '2H3W' framework. It provides a detailed examination of sample complexity, benchmarks, and emerging challenges in multi-agent and human-compatible safe RL.

Multi-Head Latent Attention (MLA), introduced in DeepSeek-V2, compresses key-value states into a low-rank latent vector, caching only this vector to reduce memory. In tensor parallelism (TP), however, attention heads are computed across multiple devices, and each device must load the full cache, eroding the advantage of MLA over Grouped Query Attention (GQA). We propose Tensor-Parallel Latent Attention (TPLA): a scheme that partitions both the latent representation and each head's input dimension across devices, performs attention independently per shard, and then combines results with an all-reduce. TPLA preserves the benefits of a compressed KV cache while unlocking TP efficiency. Unlike Grouped Latent Attention (GLA), every head in TPLA still leverages the full latent representation, maintaining stronger representational capacity. TPLA is drop-in compatible with models pre-trained using MLA: it supports MLA-style prefilling and enables efficient tensor-parallel decoding without retraining. Applying simple orthogonal transforms -- e.g., the Hadamard transform or PCA -- before TP slicing further mitigates cross-shard interference, yielding minimal accuracy degradation. By reducing the per-device KV cache for DeepSeek-V3 and Kimi-K2, we achieve 1.79x and 1.93x speedups, respectively, at a 32K-token context length while maintaining performance on commonsense and LongBench benchmarks. TPLA can be implemented with FlashAttention-3, enabling practical end-to-end acceleration.

There are no more papers matching your filters at the moment.