Centrale Lille

A Vision Transformer-based framework streamlines Diffuse Large B-Cell Lymphoma (DLBCL) subtyping by transferring knowledge from multi-modal (HES + IHC) training to an efficient HES-only inference model. The approach achieves 75% accuracy, surpassing current deep learning techniques and HES-based human pathologist assessments.

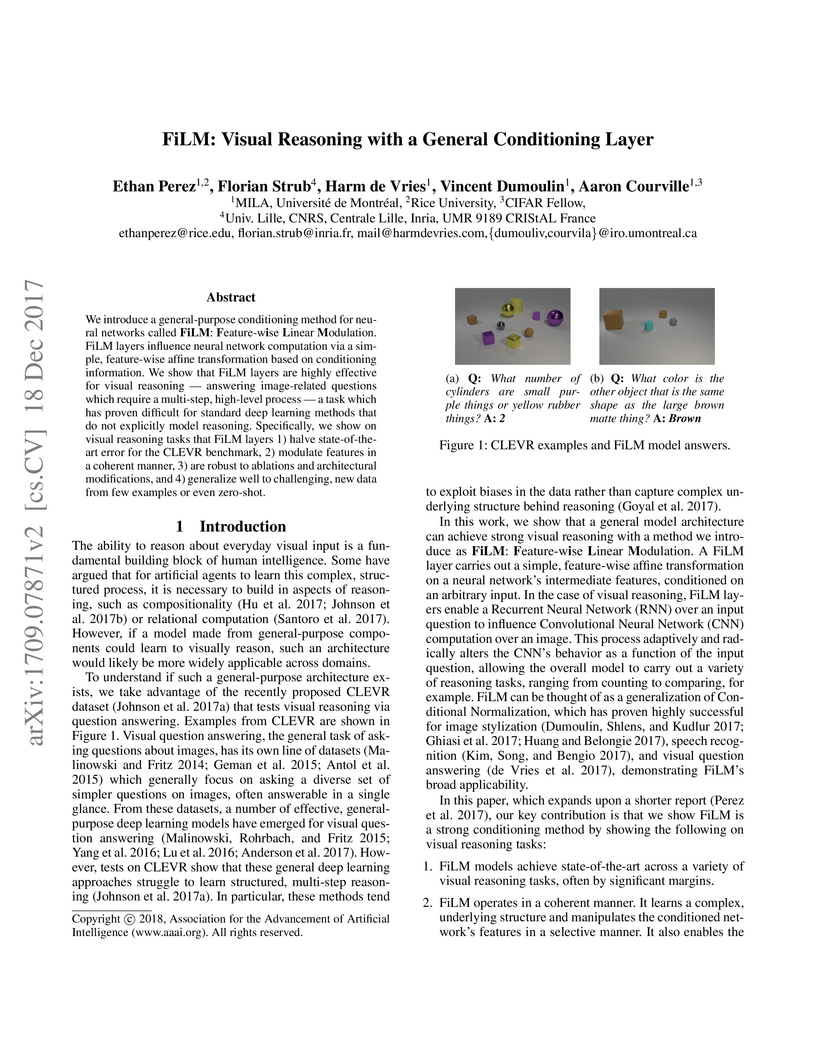

MILA researchers propose FiLM (Feature-wise Linear Modulation), a general conditioning layer that enables neural networks to dynamically adapt their computation based on conditioning input. Applied to visual reasoning, FiLM achieves 97.7% accuracy on the CLEVR benchmark, significantly reducing the error rate for models without explicit architectural priors or extra supervision.

22 Sep 2025

We introduce Reasoning Core, a new scalable environment for Reinforcement Learning with Verifiable Rewards (RLVR), designed to advance foundational symbolic reasoning in Large Language Models (LLMs). Unlike existing benchmarks that focus on games or isolated puzzles, Reasoning Core procedurally generates problems across core formal domains, including PDDL planning, first-order logic, context-free grammar parsing, causal reasoning, and system equation solving. The environment is built on key design principles of high-generality problem distributions, verification via external tools, and continuous difficulty control, which together provide a virtually infinite supply of novel training instances. Initial zero-shot evaluations with frontier LLMs confirm the difficulty of Reasoning Core's tasks, positioning it as a promising resource to improve the reasoning capabilities of future models.

This survey provides a comprehensive analysis of recent advancements in medical image segmentation by focusing on emerging approaches like generative AI, few-shot learning, foundation models, and universal models. It details how these methods address persistent challenges such as data scarcity, annotation costs, and model generalization to facilitate wider adoption in clinical settings.

In this paper, we consider the problem of computing the integral of a function on the unit sphere, in any dimension, using Monte Carlo methods. Although the methods we present are general, our guiding thread is the sliced Wasserstein distance between two measures on Rd, which is precisely an integral on the d-dimensional sphere. The sliced Wasserstein distance (SW) has gained momentum in machine learning either as a proxy to the less computationally tractable Wasserstein distance, or as a distance in its own right, due in particular to its built-in alleviation of the curse of dimensionality. There has been recent numerical benchmarks of quadratures for the sliced Wasserstein, and our viewpoint differs in that we concentrate on quadratures where the nodes are repulsive, i.e. negatively dependent. Indeed, negative dependence can bring variance reduction when the quadrature is adapted to the integration task. Our first contribution is to extract and motivate quadratures from the recent literature on determinantal point processes (DPPs) and repelled point processes, as well as repulsive quadratures from the literature specific to the sliced Wasserstein distance. We then numerically benchmark these quadratures. Moreover, we analyze the variance of the UnifOrtho estimator, an orthogonal Monte Carlo estimator. Our analysis sheds light on UnifOrtho's success for the estimation of the sliced Wasserstein in large dimensions, as well as counterexamples from the literature. Our final recommendation for the computation of the sliced Wasserstein distance is to use randomized quasi-Monte Carlo in low dimensions and \emph{UnifOrtho} in large dimensions. DPP-based quadratures only shine when quasi-Monte Carlo also does, while repelled quadratures show moderate variance reduction in general, but more theoretical effort is needed to make them robust.

07 Aug 2025

Dealing with uncertainty in optimization parameters is an important and longstanding challenge. Typically, uncertain parameters are predicted accurately, and then a deterministic optimization problem is solved. However, the decisions produced by this so-called predict-then-optimize procedure can be highly sensitive to uncertain parameters. In this work, we contribute to recent efforts in producing decision-focused predictions, i.e., to build predictive models that are constructed with the goal of minimizing a regret measure on the decisions taken with them. We begin by formulating the exact expected regret minimization as a pessimistic bilevel optimization model. Then, we show computational complexity results of this problem, including its membership in NP. In combination with a known NP-hardness result, this establishes NP-completeness and discards its hardness in higher complexity classes. Using duality arguments, we reformulate it as a non-convex quadratic optimization problem. Finally, leveraging the quadratic reformulation, we show various computational techniques to achieve empirical tractability. We report extensive computational results on shortest-path and bipartite matching instances with uncertain cost vectors. Our results indicate that our approach can improve training performance over the approach of Elmachtoub and Grigas (2022), a state-of-the-art method for decision-focused learning.

Federated Learning (FL) is a paradigm for large-scale distributed learning which faces two key challenges: (i) efficient training from highly heterogeneous user data, and (ii) protecting the privacy of participating users. In this work, we propose a novel FL approach (DP-SCAFFOLD) to tackle these two challenges together by incorporating Differential Privacy (DP) constraints into the popular SCAFFOLD algorithm. We focus on the challenging setting where users communicate with a "honest-but-curious" server without any trusted intermediary, which requires to ensure privacy not only towards a third-party with access to the final model but also towards the server who observes all user communications. Using advanced results from DP theory, we establish the convergence of our algorithm for convex and non-convex objectives. Our analysis clearly highlights the privacy-utility trade-off under data heterogeneity, and demonstrates the superiority of DP-SCAFFOLD over the state-of-the-art algorithm DP-FedAvg when the number of local updates and the level of heterogeneity grow. Our numerical results confirm our analysis and show that DP-SCAFFOLD provides significant gains in practice.

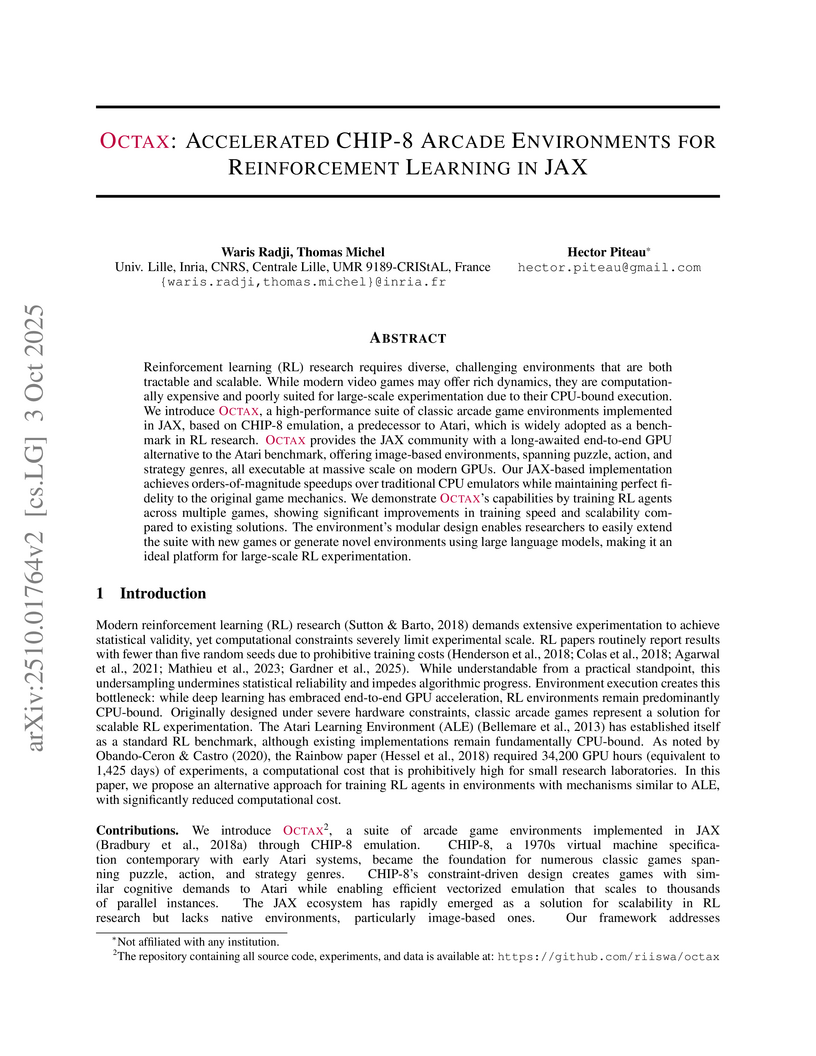

Reinforcement learning (RL) research requires diverse, challenging environments that are both tractable and scalable. While modern video games may offer rich dynamics, they are computationally expensive and poorly suited for large-scale experimentation due to their CPU-bound execution. We introduce Octax, a high-performance suite of classic arcade game environments implemented in JAX, based on CHIP-8 emulation, a predecessor to Atari, which is widely adopted as a benchmark in RL research. Octax provides the JAX community with a long-awaited end-to-end GPU alternative to the Atari benchmark, offering image-based environments, spanning puzzle, action, and strategy genres, all executable at massive scale on modern GPUs. Our JAX-based implementation achieves orders-of-magnitude speedups over traditional CPU emulators while maintaining perfect fidelity to the original game mechanics. We demonstrate Octax's capabilities by training RL agents across multiple games, showing significant improvements in training speed and scalability compared to existing solutions. The environment's modular design enables researchers to easily extend the suite with new games or generate novel environments using large language models, making it an ideal platform for large-scale RL experimentation.

Stabilized Supervised STDP (S2-STDP) and a Paired Competing Neurons (PCN) architecture are introduced to improve local learning in single-spike Spiking Neural Networks for classification. These methods enhance accuracy by up to 2.34 percentage points on MNIST and significantly increase the frequency of weight updates, addressing key limitations of prior supervised STDP techniques.

18 Mar 2024

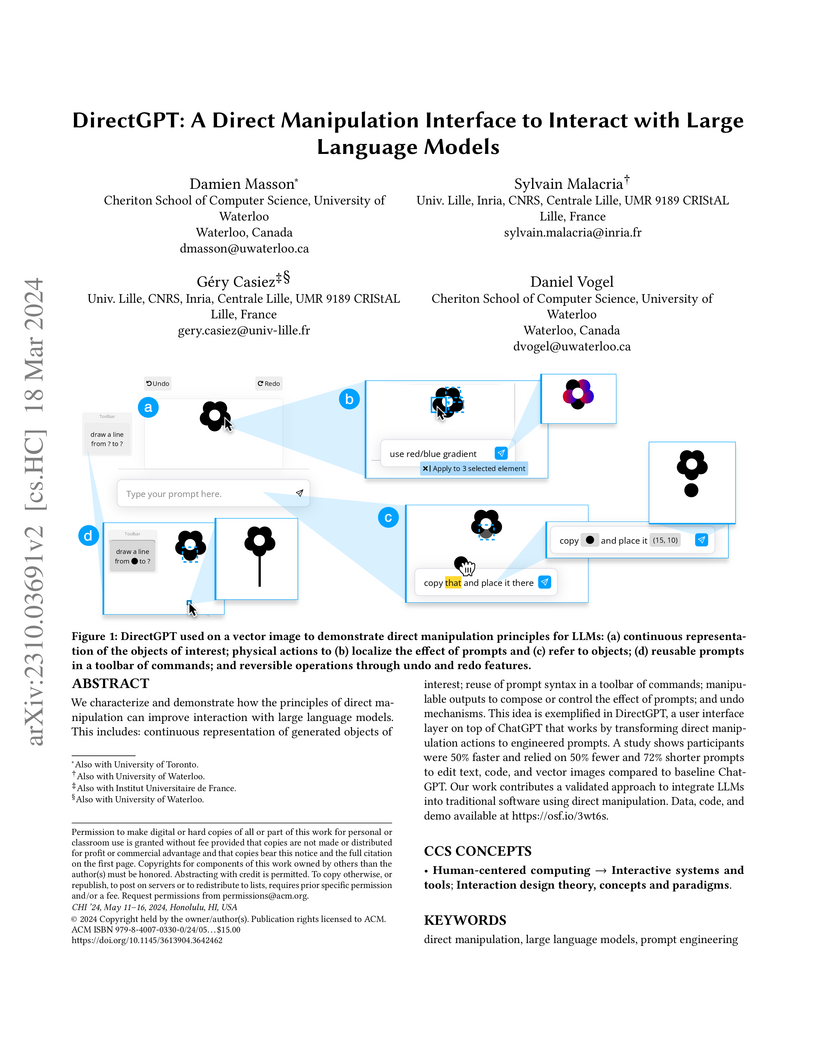

We characterize and demonstrate how the principles of direct manipulation can improve interaction with large language models. This includes: continuous representation of generated objects of interest; reuse of prompt syntax in a toolbar of commands; manipulable outputs to compose or control the effect of prompts; and undo mechanisms. This idea is exemplified in DirectGPT, a user interface layer on top of ChatGPT that works by transforming direct manipulation actions to engineered prompts. A study shows participants were 50% faster and relied on 50% fewer and 72% shorter prompts to edit text, code, and vector images compared to baseline ChatGPT. Our work contributes a validated approach to integrate LLMs into traditional software using direct manipulation. Data, code, and demo available at this https URL.

This paper offers a comprehensive review of the integration of Reinforcement Learning (RL) with Mean Field Games (MFGs), synthesizing diverse theoretical settings and algorithmic approaches. It clarifies how RL enables model-free learning for large-scale multi-agent systems, addressing computational challenges and highlighting key solution techniques.

Assessing whether a sample survey credibly represents the population is a critical question for ensuring the validity of downstream research. Generally, this problem reduces to estimating the distance between two high-dimensional distributions, which typically requires a number of samples that grows exponentially with the dimension. However, depending on the model used for data analysis, the conclusions drawn from the data may remain consistent across different underlying distributions. In this context, we propose a task-based approach to assess the credibility of sampled surveys. Specifically, we introduce a model-specific distance metric to quantify this notion of credibility. We also design an algorithm to verify the credibility of survey data in the context of regression models. Notably, the sample complexity of our algorithm is independent of the data dimension. This efficiency stems from the fact that the algorithm focuses on verifying the credibility of the survey data rather than reconstructing the underlying regression model. Furthermore, we show that if one attempts to verify credibility by reconstructing the regression model, the sample complexity scales linearly with the dimensionality of the data. We prove the theoretical correctness of our algorithm and numerically demonstrate our algorithm's performance.

17 Oct 2025

Critical reading is a primary way through which researchers develop their critical thinking skills. While exchanging thoughts and opinions with peers can strengthen critical reading, junior researchers often lack access to peers who can offer diverse perspectives. To address this gap, we designed an in-situ thought exchange interface informed by peer feedback from a formative study (N=8) to support junior researchers' critical paper reading. We evaluated the effects of thought exchanges under three conditions (no-agent, single-agent, and multi-agent) with 46 junior researchers over two weeks. Our results showed that incorporating agent-mediated thought exchanges during paper reading significantly improved participants' critical thinking scores compared to the no-agent condition. In the single-agent condition, participants more frequently made reflective annotations on the paper content. In the multi-agent condition, participants engaged more actively with agents' responses. Our qualitative analysis further revealed that participants compared and analyzed multiple perspectives in the multi-agent condition. This work contributes to understanding in-situ AI-based support for critical paper reading through thought exchanges and offers design implications for future research.

23 Sep 2025

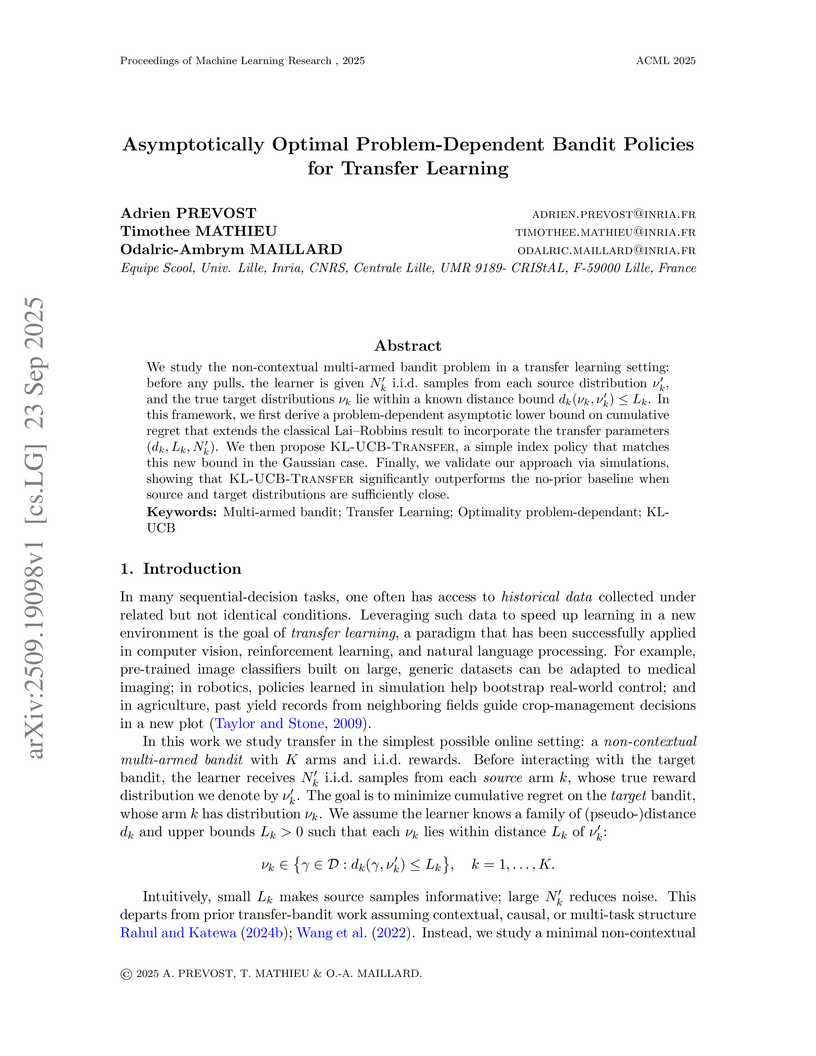

We study the non-contextual multi-armed bandit problem in a transfer learning setting: before any pulls, the learner is given N'_k i.i.d. samples from each source distribution nu'_k, and the true target distributions nu_k lie within a known distance bound d_k(nu_k, nu'_k) <= L_k. In this framework, we first derive a problem-dependent asymptotic lower bound on cumulative regret that extends the classical Lai-Robbins result to incorporate the transfer parameters (d_k, L_k, N'_k). We then propose KL-UCB-Transfer, a simple index policy that matches this new bound in the Gaussian case. Finally, we validate our approach via simulations, showing that KL-UCB-Transfer significantly outperforms the no-prior baseline when source and target distributions are sufficiently close.

The problem of identifying the best answer among a collection of items having real-valued distribution is well-understood.

Despite its practical relevance for many applications, fewer works have studied its extension when multiple and potentially conflicting metrics are available to assess an item's quality.

Pareto set identification (PSI) aims to identify the set of answers whose means are not uniformly worse than another.

This paper studies PSI in the transductive linear setting with potentially correlated objectives.

Building on posterior sampling in both the stopping and the sampling rules, we propose the PSIPS algorithm that deals simultaneously with structure and correlation without paying the computational cost of existing oracle-based algorithms.

Both from a frequentist and Bayesian perspective, PSIPS is asymptotically optimal.

We demonstrate its good empirical performance in real-world and synthetic instances.

Federated Learning (FL) is a collaborative machine learning (ML) approach, where multiple clients participate in training an ML model without exposing their private data. Fair and accurate assessment of client contributions facilitates incentive allocation in FL and encourages diverse clients to participate in a unified model training. Existing methods for contribution assessment adopts a co-operative game-theoretic concept, called Shapley value, but under restricted assumptions, e.g., all clients' participating in all epochs or at least in one epoch of FL.

We propose a history-aware client contribution assessment framework, called FLContrib, where client-participation is dynamic, i.e., a subset of clients participates in each epoch. The theoretical underpinning of FLContrib is based on the Markovian training process of FL. Under this setting, we directly apply the linearity property of Shapley value and compute a historical timeline of client contributions. Considering the possibility of a limited computational budget, we propose a two-sided fairness criteria to schedule Shapley value computation in a subset of epochs. Empirically, FLContrib is efficient and consistently accurate in estimating contribution across multiple utility functions. As a practical application, we apply FLContrib to detect dishonest clients in FL based on historical Shaplee values.

In reinforcement learning (RL) theory, the concept of most confusing instances is central to establishing regret lower bounds, that is, the minimal exploration needed to solve a problem. Given a reference model and its optimal policy, a most confusing instance is the statistically closest alternative model that makes a suboptimal policy optimal. While this concept is well-studied in multi-armed bandits and ergodic tabular Markov decision processes, constructing such instances remains an open question in the general case. In this paper, we formalize this problem for neural network world models as a constrained optimization: finding a modified model that is statistically close to the reference one, while producing divergent performance between optimal and suboptimal policies. We propose an adversarial training procedure to solve this problem and conduct an empirical study across world models of varying quality. Our results suggest that the degree of achievable confusion correlates with uncertainty in the approximate model, which may inform theoretically-grounded exploration strategies for deep model-based RL.

Bandits serve as the theoretical foundation of sequential learning and an

algorithmic foundation of modern recommender systems. However, recommender

systems often rely on user-sensitive data, making privacy a critical concern.

This paper contributes to the understanding of Differential Privacy (DP) in

bandits with a trusted centralised decision-maker, and especially the

implications of ensuring zero Concentrated Differential Privacy (zCDP). First,

we formalise and compare different adaptations of DP to bandits, depending on

the considered input and the interaction protocol. Then, we propose three

private algorithms, namely AdaC-UCB, AdaC-GOPE and AdaC-OFUL, for three bandit

settings, namely finite-armed bandits, linear bandits, and linear contextual

bandits. The three algorithms share a generic algorithmic blueprint, i.e. the

Gaussian mechanism and adaptive episodes, to ensure a good privacy-utility

trade-off. We analyse and upper bound the regret of these three algorithms. Our

analysis shows that in all of these settings, the prices of imposing zCDP are

(asymptotically) negligible in comparison with the regrets incurred oblivious

to privacy. Next, we complement our regret upper bounds with the first minimax

lower bounds on the regret of bandits with zCDP. To prove the lower bounds, we

elaborate a new proof technique based on couplings and optimal transport. We

conclude by experimentally validating our theoretical results for the three

different settings of bandits.

As sequential learning algorithms are increasingly applied to real life,

ensuring data privacy while maintaining their utilities emerges as a timely

question. In this context, regret minimisation in stochastic bandits under

ϵ-global Differential Privacy (DP) has been widely studied. Unlike

bandits without DP, there is a significant gap between the best-known regret

lower and upper bound in this setting, though they "match" in order. Thus, we

revisit the regret lower and upper bounds of ϵ-global DP algorithms

for Bernoulli bandits and improve both. First, we prove a tighter regret lower

bound involving a novel information-theoretic quantity characterising the

hardness of ϵ-global DP in stochastic bandits. Our lower bound

strictly improves on the existing ones across all ϵ values. Then, we

choose two asymptotically optimal bandit algorithms, i.e. DP-KLUCB and DP-IMED,

and propose their DP versions using a unified blueprint, i.e., (a) running in

arm-dependent phases, and (b) adding Laplace noise to achieve privacy. For

Bernoulli bandits, we analyse the regrets of these algorithms and show that

their regrets asymptotically match our lower bound up to a constant arbitrary

close to 1. This refutes the conjecture that forgetting past rewards is

necessary to design optimal bandit algorithms under global DP. At the core of

our algorithms lies a new concentration inequality for sums of Bernoulli

variables under Laplace mechanism, which is a new DP version of the Chernoff

bound. This result is universally useful as the DP literature commonly treats

the concentrations of Laplace noise and random variables separately, while we

couple them to yield a tighter bound.

26 Jul 2025

Negative dependence is becoming a key driver in advancing learning capabilities beyond the limits of traditional independence. Recent developments have evidenced support towards negatively dependent systems as a learning paradigm in a broad range of fundamental machine learning challenges including optimization, sampling, dimensionality reduction and sparse signal recovery, often surpassing the performance of current methods based on statistical independence. The most popular negatively dependent model has been that of determinantal point processes (DPPs), which have their origins in quantum theory. However, other models, such as perturbed lattice models, strongly Rayleigh measures, zeros of random functions have gained salience in various learning applications. In this article, we review this burgeoning field of research, as it has developed over the past two decades or so. We also present new results on applications of DPPs to the parsimonious representation of neural networks. In the limited scope of the article, we mostly focus on aspects of this area to which the authors contributed over the recent years, including applications to Monte Carlo methods, coresets and stochastic gradient descent, stochastic networks, signal processing and connections to quantum computation. However, starting from basics of negative dependence for the uninitiated reader, extensive references are provided to a broad swath of related developments which could not be covered within our limited scope. While existing works and reviews generally focus on specific negatively dependent models (e.g. DPPs), a notable feature of this article is that it addresses negative dependence as a machine learning methodology as a whole. In this vein, it covers within its span an array of negatively dependent models and their applications well beyond DPPs, thereby putting forward a very general and rather unique perspective.

There are no more papers matching your filters at the moment.