Centre for Perceptual and Interactive Intelligence

This research introduces Human Preference Score v2 (HPS v2), a new evaluation metric for text-to-image synthesis models built on Human Preference Dataset v2 (HPD v2), a large-scale dataset featuring images from diverse generative models and prompts cleaned by ChatGPT. HPS v2 achieves an 83.3% accuracy in predicting human preferences, demonstrating improved generalization and providing a robust benchmark for comparing T2I models.

Beihang University and CUHK's MMLab introduce OpenUAV, an open-source platform, and a comprehensive dataset for realistic UAV Vision-Language Navigation, featuring continuous 6 DoF trajectories and an MLLM-based navigation agent. Their method consistently outperforms baselines, achieving an average of 5% higher success rate on seen environments and demonstrating strong generalization on unseen maps and objects.

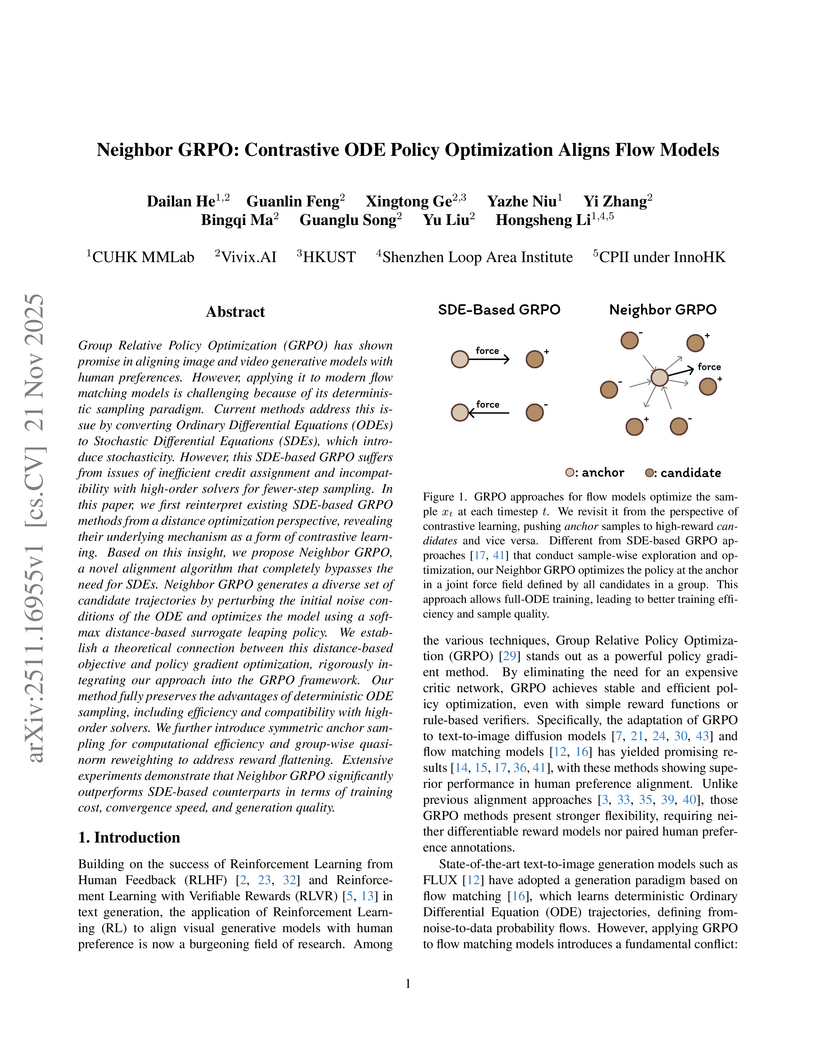

Neighbor GRPO introduces an SDE-free method for aligning flow matching models with human preferences, enabling up to 5x faster training while improving generation quality and robustness against reward hacking. The approach fully leverages efficient high-order ODE solvers for visual generative models.

25 Sep 2025

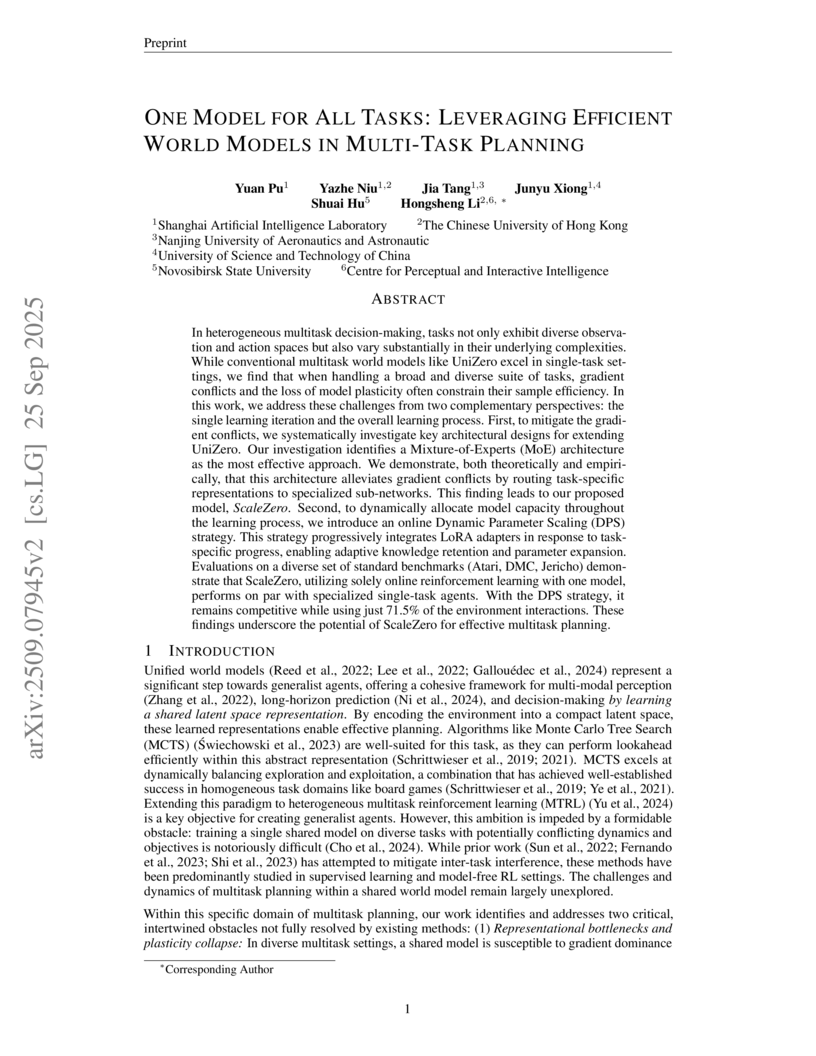

In heterogeneous multi-task decision-making, tasks not only exhibit diverse observation and action spaces but also vary substantially in their underlying complexities. While conventional multi-task world models like UniZero excel in single-task settings, we find that when handling a broad and diverse suite of tasks, gradient conflicts and the loss of model plasticity often constrain their sample efficiency. In this work, we address these challenges from two complementary perspectives: the single learning iteration and the overall learning process. First, to mitigate the gradient conflicts, we systematically investigate key architectural designs for extending UniZero. Our investigation identifies a Mixture-of-Experts (MoE) architecture as the most effective approach. We demonstrate, both theoretically and empirically, that this architecture alleviates gradient conflicts by routing task-specific representations to specialized sub-networks. This finding leads to our proposed model, \textit{ScaleZero}. Second, to dynamically allocate model capacity throughout the learning process, we introduce an online Dynamic Parameter Scaling (DPS) strategy. This strategy progressively integrates LoRA adapters in response to task-specific progress, enabling adaptive knowledge retention and parameter expansion. Evaluations on a diverse set of standard benchmarks (Atari, DMC, Jericho) demonstrate that ScaleZero, utilizing solely online reinforcement learning with one model, performs on par with specialized single-task agents. With the DPS strategy, it remains competitive while using just 71.5% of the environment interactions. These findings underscore the potential of ScaleZero for effective multi-task planning. Our code is available at \textcolor{magenta}{this https URL}.

Researchers from Alibaba, The Chinese University of Hong Kong, and other institutions developed LLaVA-MoD, a framework that leverages Mixture-of-Experts (MoE) architecture and progressive knowledge distillation to create efficient small-scale Multimodal Large Language Models (s-MLLMs). The resulting 2-billion-parameter model achieves state-of-the-art performance among similarly sized models and significantly reduces hallucination, even surpassing larger teacher models and RLHF-based systems, while using only 0.3% of the training data compared to some large MLLMs.

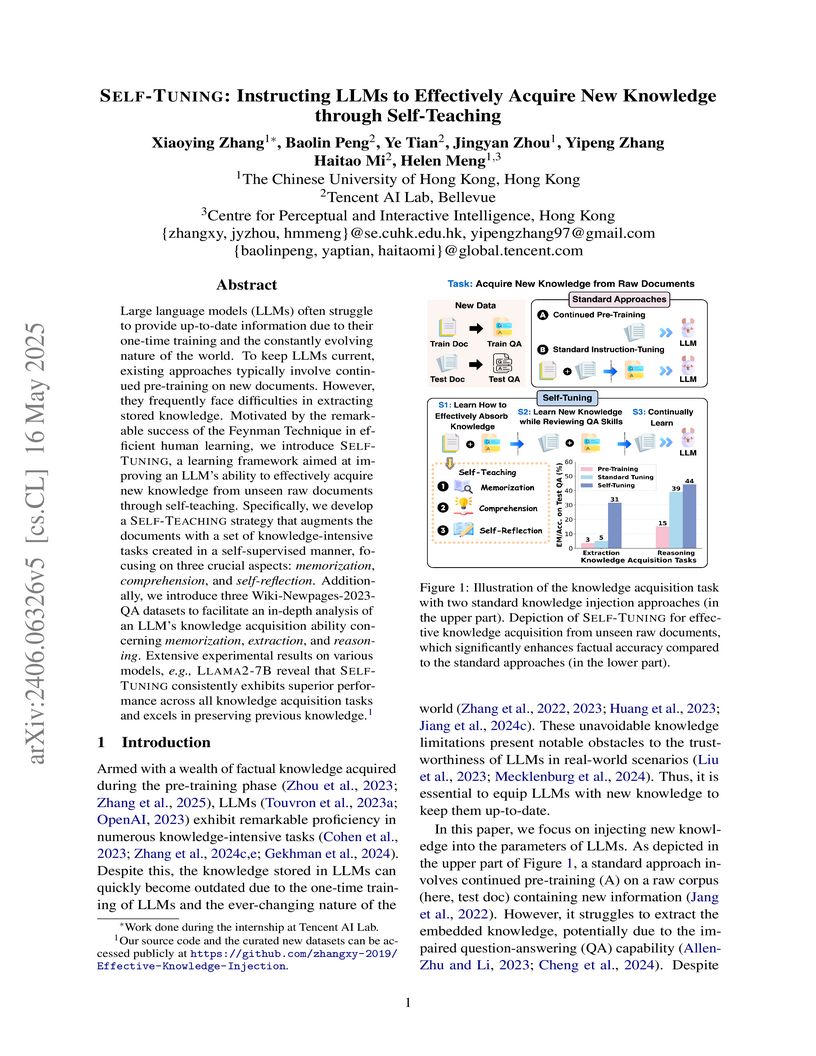

The SELF-TUNING framework improves large language models' capacity to internalize new, up-to-date knowledge from raw documents by employing a self-teaching strategy inspired by the Feynman Technique. This method yields superior memorization, extraction, and reasoning, outperforming prior approaches while effectively mitigating catastrophic forgetting.

M3Net is a unified multi-task learning framework for autonomous driving that jointly handles 3D object detection, BEV map segmentation, and 3D occupancy prediction using multimodal camera and LiDAR inputs. The system achieves state-of-the-art performance across all three tasks on the nuScenes dataset, including a 5-6% IoU improvement for occupancy prediction, by employing adaptive feature integration, task-specific query initialization, and task-oriented channel scaling.

VLM-Grounder introduces a method for zero-shot 3D visual grounding using 2D image sequences and a VLM agent, achieving improved accuracy on ScanRefer and Nr3D datasets without relying on 3D point clouds or object priors. The system from The Chinese University of Hong Kong, Zhejiang University, and Shanghai AI Laboratory integrates dynamic image stitching and a feedback-driven agentic framework to localize objects with natural language.

4D video control is essential in video generation as it enables the use of sophisticated lens techniques, such as multi-camera shooting and dolly zoom, which are currently unsupported by existing methods. Training a video Diffusion Transformer (DiT) directly to control 4D content requires expensive multi-view videos. Inspired by Monocular Dynamic novel View Synthesis (MDVS) that optimizes a 4D representation and renders videos according to different 4D elements, such as camera pose and object motion editing, we bring pseudo 4D Gaussian fields to video generation. Specifically, we propose a novel framework that constructs a pseudo 4D Gaussian field with dense 3D point tracking and renders the Gaussian field for all video frames. Then we finetune a pretrained DiT to generate videos following the guidance of the rendered video, dubbed as GS-DiT. To boost the training of the GS-DiT, we also propose an efficient Dense 3D Point Tracking (D3D-PT) method for the pseudo 4D Gaussian field construction. Our D3D-PT outperforms SpatialTracker, the state-of-the-art sparse 3D point tracking method, in accuracy and accelerates the inference speed by two orders of magnitude. During the inference stage, GS-DiT can generate videos with the same dynamic content while adhering to different camera parameters, addressing a significant limitation of current video generation models. GS-DiT demonstrates strong generalization capabilities and extends the 4D controllability of Gaussian splatting to video generation beyond just camera poses. It supports advanced cinematic effects through the manipulation of the Gaussian field and camera intrinsics, making it a powerful tool for creative video production. Demos are available at this https URL.

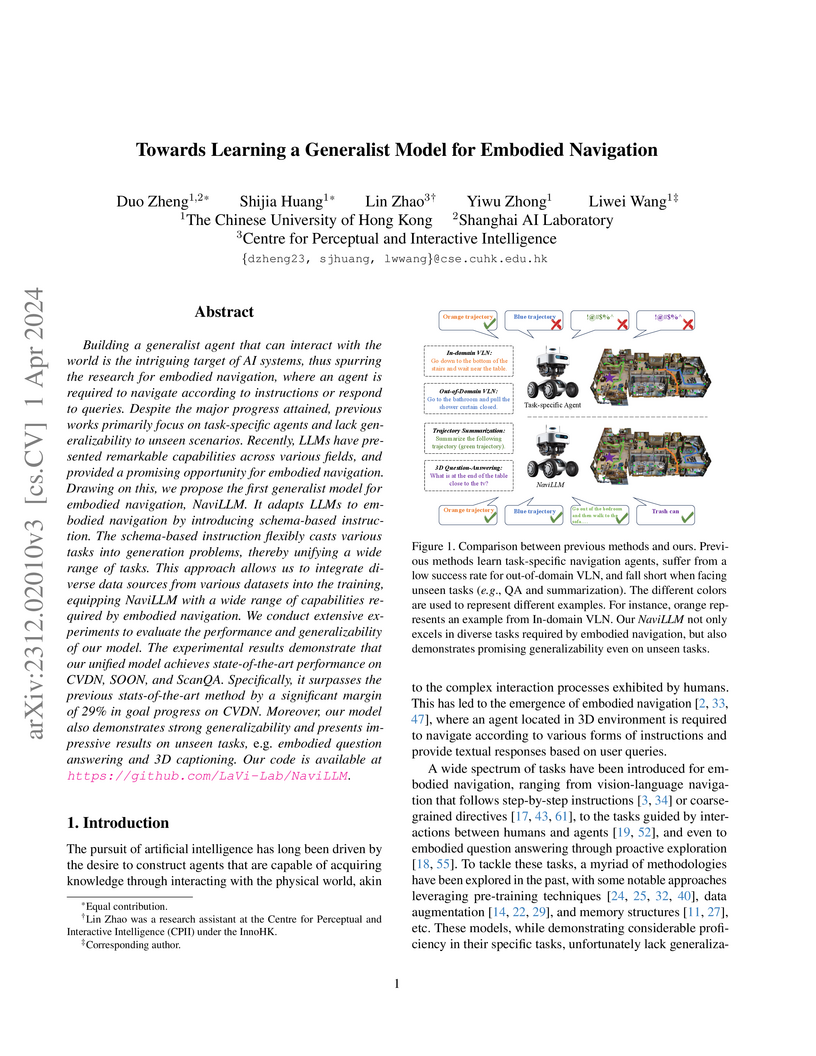

This paper presents NaviLLM, a generalist model for embodied navigation that adapts Large Language Models (LLMs) to unify a wide range of tasks within 3D environments using a schema-based instruction framework. The model achieved state-of-the-art results on several benchmarks, including CVDN, SOON, and ScanQA, and demonstrated strong zero-shot generalization capabilities on unseen tasks and environments.

Visual grounding (VG) occupies a pivotal position in multi-modality vision-language models. In this study, we propose ViLaM, a large multi-modality model, that supports multi-tasks of VG using the cycle training strategy, with abundant interaction instructions. The cycle training between referring expression generation (REG) and referring expression comprehension (REC) is introduced. It enhances the consistency between visual location and referring expressions, and addresses the need for high-quality, multi-tasks VG datasets. Moreover, multi-tasks of VG are promoted in our model, contributed by the cycle training strategy. The multi-tasks in REC encompass a range of granularities, from region-level to pixel-level, which include referring bbox detection, referring keypoints detection, and referring image segmentation. In REG, referring region classification determines the fine-grained category of the target, while referring region captioning generates a comprehensive description. Meanwhile, all tasks participate in the joint training, synergistically enhancing one another and collectively improving the overall performance of the model. Furthermore, leveraging the capabilities of large language models, ViLaM extends a wide range of instructions, thereby significantly enhancing its generalization and interaction potentials. Extensive public datasets corroborate the superior capabilities of our model in VG with muti-tasks. Additionally, validating its robust generalization, ViLaM is validated under open-set and few-shot scenarios. Especially in the medical field, our model demonstrates cross-domain robust generalization capabilities. Furthermore, we contribute a VG dataset, especially with multi-tasks. To support and encourage the community focused on VG, we have made both the dataset and our code public: this https URL.

Driving safely requires multiple capabilities from human and intelligent

agents, such as the generalizability to unseen environments, the safety

awareness of the surrounding traffic, and the decision-making in complex

multi-agent settings. Despite the great success of Reinforcement Learning (RL),

most of the RL research works investigate each capability separately due to the

lack of integrated environments. In this work, we develop a new driving

simulation platform called MetaDrive to support the research of generalizable

reinforcement learning algorithms for machine autonomy. MetaDrive is highly

compositional, which can generate an infinite number of diverse driving

scenarios from both the procedural generation and the real data importing.

Based on MetaDrive, we construct a variety of RL tasks and baselines in both

single-agent and multi-agent settings, including benchmarking generalizability

across unseen scenes, safe exploration, and learning multi-agent traffic. The

generalization experiments conducted on both procedurally generated scenarios

and real-world scenarios show that increasing the diversity and the size of the

training set leads to the improvement of the RL agent's generalizability. We

further evaluate various safe reinforcement learning and multi-agent

reinforcement learning algorithms in MetaDrive environments and provide the

benchmarks. Source code, documentation, and demo video are available at \url{

this https URL}.

01 Nov 2025

GraphRAG enhances large language models (LLMs) to generate quality answers for user questions by retrieving related facts from external knowledge graphs. However, current GraphRAG methods are primarily evaluated on and overly tailored for knowledge graph question answering (KGQA) benchmarks, which are biased towards a few specific question patterns and do not reflect the diversity of real-world questions. To better evaluate GraphRAG methods, we propose a complete four-class taxonomy to categorize the basic patterns of knowledge graph questions and use it to create PolyBench, a new GraphRAG benchmark encompassing a comprehensive set of graph questions. With the new benchmark, we find that existing GraphRAG methods fall short in effectiveness (i.e., quality of the generated answers) and/or efficiency (i.e., response time or token usage) because they adopt either a fixed graph traversal strategy or free-form exploration by LLMs for fact retrieval. However, different question patterns require distinct graph traversal strategies and context formation. To facilitate better retrieval, we propose PolyG, an adaptive GraphRAG approach by decomposing and categorizing the questions according to our proposed question taxonomy. Built on top of a unified interface and execution engine, PolyG dynamically prompts an LLM to generate a graph database query to retrieve the context for each decomposed basic question. Compared with SOTA GraphRAG methods, PolyG achieves a higher win rate in generation quality and has a low response latency and token cost. Our code and benchmark are open-source at this https URL.

In this study, we delve into the generation of high-resolution images from pre-trained diffusion models, addressing persistent challenges, such as repetitive patterns and structural distortions, that emerge when models are applied beyond their trained resolutions. To address this issue, we introduce an innovative, training-free approach FouriScale from the perspective of frequency domain analysis. We replace the original convolutional layers in pre-trained diffusion models by incorporating a dilation technique along with a low-pass operation, intending to achieve structural consistency and scale consistency across resolutions, respectively. Further enhanced by a padding-then-crop strategy, our method can flexibly handle text-to-image generation of various aspect ratios. By using the FouriScale as guidance, our method successfully balances the structural integrity and fidelity of generated images, achieving an astonishing capacity of arbitrary-size, high-resolution, and high-quality generation. With its simplicity and compatibility, our method can provide valuable insights for future explorations into the synthesis of ultra-high-resolution images. The code will be released at this https URL.

In this paper, we present GaussianPainter, the first method to paint a point cloud into 3D Gaussians given a reference image. GaussianPainter introduces an innovative feed-forward approach to overcome the limitations of time-consuming test-time optimization in 3D Gaussian splatting. Our method addresses a critical challenge in the field: the non-uniqueness problem inherent in the large parameter space of 3D Gaussian splatting. This space, encompassing rotation, anisotropic scales, and spherical harmonic coefficients, introduces the challenge of rendering similar images from substantially different Gaussian fields. As a result, feed-forward networks face instability when attempting to directly predict high-quality Gaussian fields, struggling to converge on consistent parameters for a given output. To address this issue, we propose to estimate a surface normal for each point to determine its Gaussian rotation. This strategy enables the network to effectively predict the remaining Gaussian parameters in the constrained space. We further enhance our approach with an appearance injection module, incorporating reference image appearance into Gaussian fields via a multiscale triplane representation. Our method successfully balances efficiency and fidelity in 3D Gaussian generation, achieving high-quality, diverse, and robust 3D content creation from point clouds in a single forward pass.

FlexDrive improves 3D driving scene reconstruction by enabling high-quality photorealistic rendering from arbitrary out-of-path viewpoints using 3D Gaussian Splatting. It introduces a novel pseudo-ground truth generation method and dense depth refinement, achieving superior performance on a new CARLA benchmark and enhanced qualitative results on the Waymo Open Dataset compared to existing methods.

Transformer architecture has emerged to be successful in a number of natural language processing tasks. However, its applications to medical vision remain largely unexplored. In this study, we present UTNet, a simple yet powerful hybrid Transformer architecture that integrates self-attention into a convolutional neural network for enhancing medical image segmentation. UTNet applies self-attention modules in both encoder and decoder for capturing long-range dependency at different scales with minimal overhead. To this end, we propose an efficient self-attention mechanism along with relative position encoding that reduces the complexity of self-attention operation significantly from O(n2) to approximate O(n). A new self-attention decoder is also proposed to recover fine-grained details from the skipped connections in the encoder. Our approach addresses the dilemma that Transformer requires huge amounts of data to learn vision inductive bias. Our hybrid layer design allows the initialization of Transformer into convolutional networks without a need of pre-training. We have evaluated UTNet on the multi-label, multi-vendor cardiac magnetic resonance imaging cohort. UTNet demonstrates superior segmentation performance and robustness against the state-of-the-art approaches, holding the promise to generalize well on other medical image segmentations.

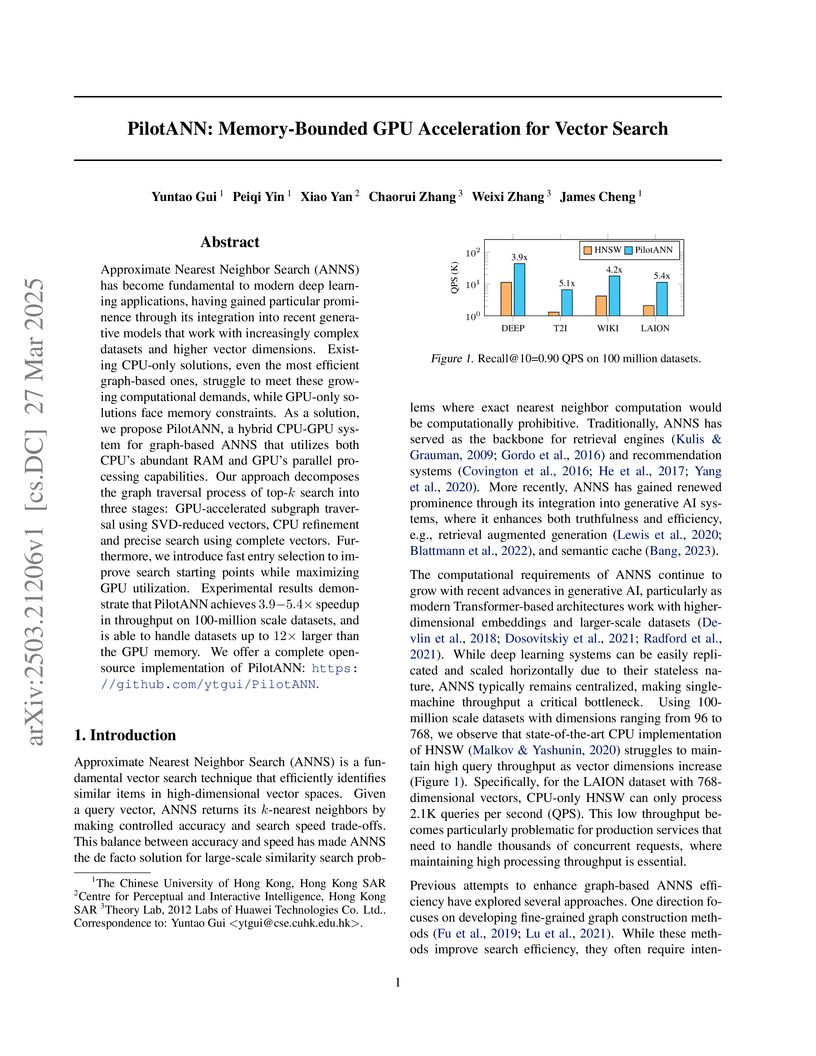

Approximate Nearest Neighbor Search (ANNS) has become fundamental to modern

deep learning applications, having gained particular prominence through its

integration into recent generative models that work with increasingly complex

datasets and higher vector dimensions. Existing CPU-only solutions, even the

most efficient graph-based ones, struggle to meet these growing computational

demands, while GPU-only solutions face memory constraints. As a solution, we

propose PilotANN, a hybrid CPU-GPU system for graph-based ANNS that utilizes

both CPU's abundant RAM and GPU's parallel processing capabilities. Our

approach decomposes the graph traversal process of top-k search into three

stages: GPU-accelerated subgraph traversal using SVD-reduced vectors, CPU

refinement and precise search using complete vectors. Furthermore, we introduce

fast entry selection to improve search starting points while maximizing GPU

utilization. Experimental results demonstrate that PilotANN achieves $3.9 - 5.4

\times$ speedup in throughput on 100-million scale datasets, and is able to

handle datasets up to 12× larger than the GPU memory. We offer a

complete open-source implementation at this https URL

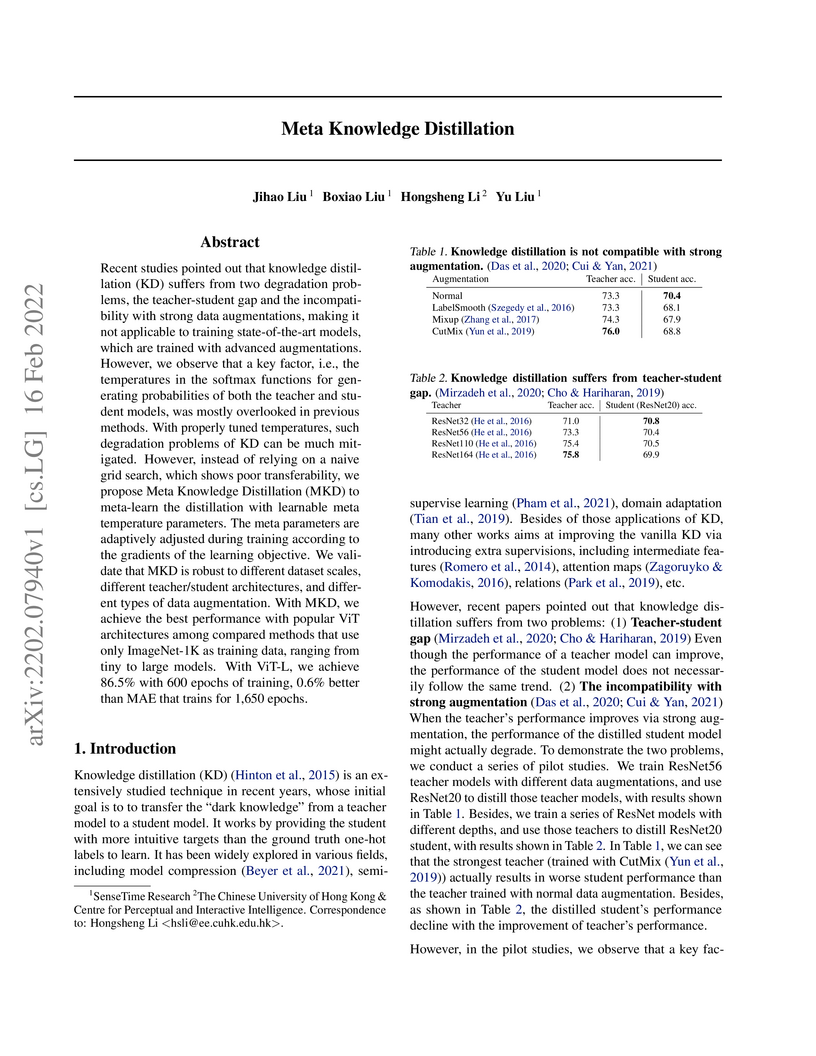

Recent studies pointed out that knowledge distillation (KD) suffers from two degradation problems, the teacher-student gap and the incompatibility with strong data augmentations, making it not applicable to training state-of-the-art models, which are trained with advanced augmentations. However, we observe that a key factor, i.e., the temperatures in the softmax functions for generating probabilities of both the teacher and student models, was mostly overlooked in previous methods. With properly tuned temperatures, such degradation problems of KD can be much mitigated. However, instead of relying on a naive grid search, which shows poor transferability, we propose Meta Knowledge Distillation (MKD) to meta-learn the distillation with learnable meta temperature parameters. The meta parameters are adaptively adjusted during training according to the gradients of the learning objective. We validate that MKD is robust to different dataset scales, different teacher/student architectures, and different types of data augmentation. With MKD, we achieve the best performance with popular ViT architectures among compared methods that use only ImageNet-1K as training data, ranging from tiny to large models. With ViT-L, we achieve 86.5% with 600 epochs of training, 0.6% better than MAE that trains for 1,650 epochs.

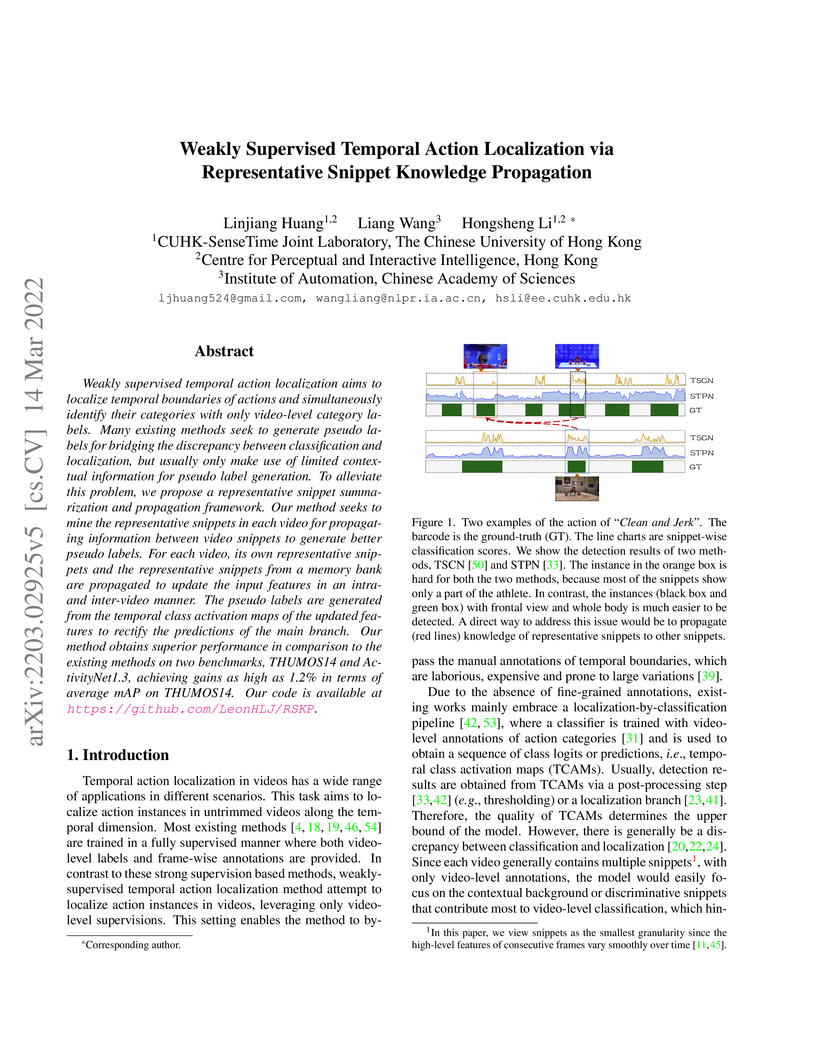

Weakly supervised temporal action localization aims to localize temporal

boundaries of actions and simultaneously identify their categories with only

video-level category labels. Many existing methods seek to generate pseudo

labels for bridging the discrepancy between classification and localization,

but usually only make use of limited contextual information for pseudo label

generation. To alleviate this problem, we propose a representative snippet

summarization and propagation framework. Our method seeks to mine the

representative snippets in each video for propagating information between video

snippets to generate better pseudo labels. For each video, its own

representative snippets and the representative snippets from a memory bank are

propagated to update the input features in an intra- and inter-video manner.

The pseudo labels are generated from the temporal class activation maps of the

updated features to rectify the predictions of the main branch. Our method

obtains superior performance in comparison to the existing methods on two

benchmarks, THUMOS14 and ActivityNet1.3, achieving gains as high as 1.2% in

terms of average mAP on THUMOS14.

There are no more papers matching your filters at the moment.