Chongqing Jiaotong University

17 Sep 2025

Non-monotone trust-region methods are known to provide additional benefits for scalar and multi-objective optimization, such as enhancing the probability of convergence and improving the speed of convergence. For the optimization of set-valued maps, non-monotone trust-region methods have not yet been investigated to determine whether they offer similar benefits. Thus, in this article, we propose two non-monotone trust-region schemes for set-valued optimization: a max-type scheme and an average-type scheme. Using these methods, we aim to find K-critical points of a non-convex unconstrained set optimization problem through vectorization and oriented-distance scalarization. The main modification to the existing trust-region method for set optimization lies in the reduction ratios: the max-type scheme uses the maximum of the function values from the last few iterations, and the avg-type scheme uses an exponentially weighted moving average of the successive previous function values up to the current iteration. Under appropriate assumptions, we show the global convergence of the proposed methods. To verify their effectiveness, we numerically compare their performance with the existing trust-region method, the steepest descent method, and the conjugate gradient method using performance profiles in terms of three metrics: number of non-convergent runs, number of iterations, and computation time.
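The role of the reference value in the reduction ratio can be illustrated in the scalar case. The sketch below assumes a Grippo-style maximum over the last few values for the max-type scheme and a Zhang-Hager-style weighted average for the avg-type scheme; these exact formulas, the parameter names `memory` and `eta`, and the helper names are assumptions, and the vectorization and oriented-distance scalarization used for set-valued maps are not reproduced.

```python
def max_type_reference(history, memory):
    """Max-type reference: the largest of the last `memory` objective values."""
    return max(history[-memory:])


class AverageReference:
    """Average-type reference (Zhang-Hager style, assumed here).

    C_{k+1} = (eta * Q_k * C_k + f_{k+1}) / Q_{k+1},  Q_{k+1} = eta * Q_k + 1,
    so older values are discounted geometrically by eta in [0, 1].
    eta = 0 recovers the monotone case; eta = 1 gives the arithmetic mean.
    """

    def __init__(self, f0, eta):
        self.eta = eta
        self.q = 1.0
        self.c = f0

    def update(self, f_new):
        q_new = self.eta * self.q + 1.0
        self.c = (self.eta * self.q * self.c + f_new) / q_new
        self.q = q_new
        return self.c


def reduction_ratio(reference, f_trial, predicted_decrease):
    """Actual decrease is measured from a reference value instead of f(x_k)."""
    return (reference - f_trial) / predicted_decrease


# Objective values at iterations k-2, k-1, k, and a trial step whose model decrease is 1.0.
history = [5.0, 3.0, 4.0]
f_trial, pred = 4.5, 1.0
print(reduction_ratio(history[-1], f_trial, pred))                     # monotone: -0.5
print(reduction_ratio(max_type_reference(history, 3), f_trial, pred))  # max-type: 0.5
```

The monotone ratio rejects this trial step, whereas the max-type ratio can accept it; this tolerance of temporary increases is what the non-monotone schemes exploit.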

Hawking radiation reveals black holes to be quantum thermodynamic systems, thereby establishing a conceptual bridge between general relativity and quantum mechanics through particle emission. While conventional theoretical frameworks predominantly focus on classical spacetime configurations, recent advances in extended phase space thermodynamics have redefined cosmological parameters (such as the Λ-term) as dynamical variables. Notably, the thermodynamics of Anti-de Sitter (AdS) black holes has been successfully extended to incorporate a thermodynamic pressure P. Although numerous intriguing physical phenomena have been identified within this extended phase space framework, the tunneling of particles in the presence of pressure and volume remains unexplored. This study investigates Hawking radiation through particle tunneling in Schwarzschild Anti-de Sitter black holes within the extended phase space, where the thermodynamic pressure P is introduced via a dynamical cosmological constant Λ. By employing semi-classical tunneling calculations with self-gravitation corrections, we demonstrate that the emission probability is directly related to the change in Bekenstein-Hawking entropy. Significantly, the radiation spectrum deviates from pure thermality, in line with unitary quantum evolution, while remaining consistent with standard phase space results. Moreover, through thermodynamic analysis, we verify that the emission rate is related to the difference in the black hole's Bekenstein-Hawking entropy before and after the particles tunnel through the potential barrier. These findings establish particle tunneling as a unified probe of quantum gravitational effects in black hole thermodynamics.
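The entropy relation can be checked numerically in a simple setting. The sketch assumes geometric units (G = c = ħ = k_B = 1), the Schwarzschild-AdS lapse f(r) = 1 - 2M/r + r²/l² with P = 3/(8πl²) (so M plays the role of enthalpy), and the standard tunneling result Γ ~ exp(ΔS_BH) for emission of energy ω at fixed P. These conventions and the function names are assumptions, not the paper's derivation. To first order, ΔS_BH reduces to the thermal exponent -ω/T; the remainder is the non-thermal correction.

```python
import math


def horizon_radius(mass, pressure):
    """Solve 2M = r + (8*pi*P/3) r^3 for r > 0 by bisection (left side is monotone in r)."""
    inv_l2 = 8.0 * math.pi * pressure / 3.0
    g = lambda r: r + inv_l2 * r**3 - 2.0 * mass
    lo, hi = 0.0, 2.0 * mass  # g(0) = -2M < 0 and g(2M) >= 0 bracket the root
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def bh_entropy(mass, pressure):
    """Bekenstein-Hawking entropy S = A/4 = pi r_h^2."""
    return math.pi * horizon_radius(mass, pressure) ** 2


def hawking_temperature(mass, pressure):
    """T = f'(r_h) / (4 pi) = (1 + 3 r_h^2 / l^2) / (4 pi r_h)."""
    r_h = horizon_radius(mass, pressure)
    inv_l2 = 8.0 * math.pi * pressure / 3.0
    return (1.0 + 3.0 * inv_l2 * r_h**2) / (4.0 * math.pi * r_h)


def emission_log_rate(mass, pressure, omega):
    """ln Gamma = Delta S_BH = S(M - omega) - S(M), with self-gravitation included."""
    return bh_entropy(mass - omega, pressure) - bh_entropy(mass, pressure)


M, P, omega = 1.0, 0.01, 0.01
dS = emission_log_rate(M, P, omega)
print(dS, -omega / hawking_temperature(M, P))  # close but not identical: non-thermal correction
```

In the limit P → 0 this reproduces the Schwarzschild result ΔS = -8πω(M - ω/2), whose ω² term is the familiar deviation from a purely thermal spectrum.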

This research investigates and addresses feature misalignment in Feature Pyramid Networks, arguing that conventional feature fusion can degrade performance. The paper introduces techniques such as Soft Nearest Neighbor Interpolation and an Independent Hierarchy Pyramid to enhance feature alignment and representation, resulting in improved object detection accuracy on benchmarks such as MS COCO.
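For reference, the conventional FPN top-down merge can be sketched as follows: the coarser map is upsampled 2x by hard nearest-neighbour copying and added to a 1x1 lateral projection of the finer map. This is a generic numpy illustration of the baseline fusion, not the paper's Soft Nearest Neighbor Interpolation, whose form the abstract does not give. The function names and shapes are hypothetical.

```python
import numpy as np


def upsample_nearest_2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map: each cell is copied to a 2x2 block."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def lateral_1x1(x, weight):
    """1x1 convolution of a (C_in, H, W) map; weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def top_down_fuse(coarse, fine, weight):
    """Conventional FPN merge: upsampled coarse features plus projected fine features."""
    return upsample_nearest_2x(coarse) + lateral_1x1(fine, weight)


coarse = np.random.default_rng(0).normal(size=(4, 8, 8))   # deeper level, 4 channels
fine = np.random.default_rng(1).normal(size=(8, 16, 16))   # shallower level, 8 channels
fused = top_down_fuse(coarse, fine, np.eye(4, 8))
print(fused.shape)  # (4, 16, 16)
```

Because every coarse value is copied unchanged into a 2x2 block, the upsampled features carry no sub-cell spatial information when they are summed with the finer map.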

We investigate the imaging properties of spherically symmetric Konoplya-Zhidenko (KZ) black holes surrounded by geometrically thick accretion flows, adopting a phenomenological radiatively inefficient accretion flow (RIAF) model and an analytical ballistic approximation accretion flow (BAAF) model. General relativistic radiative transfer is employed to compute synchrotron emission from thermal electrons and generate horizon-scale images. For the RIAF model, we analyze the dependence of image morphology on the deformation parameter, observing frequency, and flow dynamics. The photon ring and central dark region expand with increasing deformation parameter, with brightness asymmetries arising at high inclinations and depending on flow dynamics and emission anisotropy. The BAAF disk produces narrower rings and darker centers, while polarization patterns trace the brightness distribution and vary with viewing angle and deformation, revealing spacetime structure. These results demonstrate that intensity and polarization in thick-disk models provide probes of KZ black holes and near-horizon accretion physics.
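The growth of the dark region with the deformation parameter can be checked at the level of the photon sphere. Assuming the static KZ lapse f(r) = 1 - 2M/r - η/r³ with η ≥ 0 (the sign convention is an assumption), the condition r f'(r) = 2 f(r) reduces to 2r³ - 6Mr² - 5η = 0, and the critical impact parameter is b_c = r_ph / √f(r_ph). This is a vacuum geometric quantity, not a RIAF or BAAF image, and the function names are hypothetical.

```python
import math


def kz_lapse(r, eta, mass=1.0):
    """Static Konoplya-Zhidenko lapse f(r) = 1 - 2M/r - eta/r^3 (assumed form)."""
    return 1.0 - 2.0 * mass / r - eta / r**3


def kz_photon_sphere(eta, mass=1.0):
    """Largest root of 2 r^3 - 6 M r^2 - 5 eta = 0, found by bisection for eta >= 0."""
    if eta < 0:
        raise ValueError("this sketch assumes eta >= 0")
    g = lambda r: 2.0 * r**3 - 6.0 * mass * r**2 - 5.0 * eta
    lo, hi = 3.0 * mass, 3.0 * mass     # g(3M) = -5 eta <= 0
    while g(hi) < 0.0:                  # expand the bracket until g changes sign
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def kz_shadow_radius(eta, mass=1.0):
    """Critical impact parameter b_c = r_ph / sqrt(f(r_ph))."""
    r_ph = kz_photon_sphere(eta, mass)
    return r_ph / math.sqrt(kz_lapse(r_ph, eta, mass))


for eta in (0.0, 0.5, 1.0):
    print(eta, kz_photon_sphere(eta), kz_shadow_radius(eta))  # eta = 0 gives 3M and 3*sqrt(3)M
```

Substituting the photon-sphere condition gives b_c² = 5r²/(3 - 4M/r), which increases with r_ph, so a larger η yields a larger shadow in this parametrization.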

Real-time object detection is significant for industrial and research fields. On edge devices, large models struggle to meet real-time detection requirements, while lightweight models built from large numbers of depth-wise separable convolutions cannot achieve sufficient accuracy. We introduce a new lightweight convolution technique, GSConv, to lighten the model while maintaining accuracy. GSConv achieves an excellent trade-off between accuracy and speed. Furthermore, based on GSConv, we provide a design scheme, Slim-Neck (SNs), to achieve higher computational cost-effectiveness in real-time detectors. The effectiveness of the SNs was robustly demonstrated in over twenty sets of comparative experiments. In particular, real-time detectors improved by the SNs achieve state-of-the-art results (70.9% AP50 on SODA10M at ~100 FPS on a Tesla T4) compared with the baselines. Code is available at this https URL
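A rough parameter count shows where GSConv's savings come from. The sketch assumes GSConv's commonly described structure: a dense convolution produces half of the output channels, a depthwise convolution (assumed here to be 5x5) produces the other half from them, and a channel shuffle interleaves the two halves. This structure is not spelled out in the abstract. Bias and batch-norm parameters are ignored, and the function names are hypothetical.

```python
import numpy as np


def conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (no bias)."""
    return k * k * c_in * c_out


def gsconv_params(c_in, c_out, k, k_dw=5):
    """Dense conv to c_out/2 channels plus a depthwise k_dw x k_dw conv on those channels."""
    half = c_out // 2
    return conv_params(c_in, half, k) + k_dw * k_dw * half


def channel_shuffle(x, groups=2):
    """ShuffleNet-style shuffle of a (C, H, W) map: interleave channels across groups."""
    c, h, w = x.shape
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


print(conv_params(128, 256, 3), gsconv_params(128, 256, 3))  # 294912 150656
```

For a 128-to-256-channel 3x3 layer the count roughly halves, because the depthwise branch adds only k_dw² weights per channel.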

21 Aug 2025

DeepMEL introduces a multi-agent collaboration framework for Multimodal Entity Linking (MEL) that leverages specialized Large Language Models (LLMs) and Large Visual Models (LVMs) to process both text and images. The framework achieves state-of-the-art accuracy across five benchmark datasets, improving performance by 1% to 57% over previous methods by enabling fine-grained cross-modal fusion and adaptive candidate generation.

Temporal knowledge graph reasoning (TKGR) is gaining increasing attention for its ability to extrapolate new events from historical data, thereby enriching inherently incomplete temporal knowledge graphs. Existing graph-based representation learning frameworks have made significant strides in developing evolving representations of both entities and relations. Despite these achievements, these models tend to inadvertently learn biased data representations and mine spurious correlations, consequently failing to discern the causal relationships between events. This often leads to incorrect predictions based on these false correlations. To address this, we propose an innovative Causal Enhanced Graph Representation Learning framework for TKGR (named CEGRL-TKGR). This framework introduces causal structures into graph-based representation learning to unveil the essential causal relationships between events, ultimately enhancing performance on the TKGR task. Specifically, we first disentangle the evolutionary representations of entities and relations in a temporal knowledge graph sequence into two distinct components, namely causal representations and confounding representations. Then, drawing on causal intervention theory, we advocate using the causal representations for prediction, aiming to mitigate the effects of erroneous correlations caused by confounding features and thus achieving more robust and accurate predictions. Finally, extensive experimental results on six benchmark datasets demonstrate the superior performance of our model on the link prediction task.

This study investigates the optical imaging characteristics of massive boson stars based on a model with Einstein's nonlinear electrodynamics. Under asymptotically flat boundary conditions, the field equations are solved numerically to obtain the spacetime metric of the massive boson stars. Employing the ray-tracing method, we analyze the optical images of the massive boson stars under two illumination conditions: a celestial light source and a thin accretion disk. The research reveals that the configurations and optical images of the massive boson stars can be tuned via the initial parameter ϕ0 and the coupling constant Λ. The absence of the event horizon in the massive boson stars results in distinct optical image characteristics compared to those of black holes.

In this paper, based on the action of a complex scalar field minimally coupled to a gravitational field, we numerically obtain a series of massive boson star solutions in a spherically symmetric background with a quartic-order self-interaction potential. Then, considering a thin accretion flow with a certain four-velocity, we further investigate the observable appearance of the boson star using the ray-tracing method and the stereographic projection technique. As horizonless compact objects, boson stars produce thin-disk images that clearly exhibit multiple light rings and a dark central region, with up to five bright rings. As the observer's position changes, the light rings of some boson stars deform into a symmetric "horseshoe" or "crescent" shape. When the emission profile varies, the images may display distinct observational signatures of a "Central Emission Region". Meanwhile, the corresponding polarized images not only reveal the spacetime features of boson stars but also reflect the properties of the accretion disk and its magnetic field structure. By comparison with black holes, we find that both the polarized signatures and the thin-disk images can provide a possible basis for distinguishing boson stars from black holes. However, within the current resolution limits of the Event Horizon Telescope (EHT), boson stars may still closely mimic the appearance of black holes, making them challenging to distinguish at this stage.

07 Jan 2025

As the span and width of continuous rigid bridges increase, the spatial forces acting on these structures become more complex, challenging traditional design methods. Primary beam theory often fails to accurately predict the stresses in the bridge girder, potentially leading to an overestimation of the ultimate capacity of these bridges. This study addresses this gap by developing a detailed finite element (FE) model of a continuous rigid bridge in ABAQUS that accounts for the complex 3D geometry, construction procedures, and nonlinear material interactions. A loading test on a 210-meter continuous rigid bridge is performed to validate the model, and the measured strain and deflection data closely match the FE simulation results. The study also examines stress distributions under dead and live loads, as well as the shear lag coefficients of the main girder. Through parametric analysis, we explore the effects of varying the width-to-span and height-to-width ratios. The results reveal that both a wider box girder and a larger span significantly amplify the shear lag effect in the bridge girder. The findings enhance the understanding of stress distribution in large-scale rigid bridges and provide critical insights for more accurate design and assessment of such structures.
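A common definition of the shear lag coefficient is the ratio of the actual normal stress at a point of the flange to the stress predicted by primary (elementary) beam theory, σ = My/I. The abstract does not say which definition the study uses, so the sketch below assumes this one. The moment, section properties, and flange stresses are hypothetical numbers in consistent units, chosen only to show the calculation.

```python
def beam_theory_stress(moment, y, inertia):
    """Primary (elementary) beam theory: sigma = M * y / I, in consistent units."""
    return moment * y / inertia


def shear_lag_coefficients(flange_stresses, sigma_beam):
    """Ratio of the actual flange stress to the beam-theory stress at each measuring point."""
    return [s / sigma_beam for s in flange_stresses]


# Hypothetical values; stresses are in the same units as sigma_beam.
sigma_beam = beam_theory_stress(moment=2.0e6, y=1.5, inertia=3.0e5)   # 10.0
# Hypothetical stresses across the top slab, from one web to the other.
coeffs = shear_lag_coefficients([12.0, 10.5, 8.5, 10.5, 12.0], sigma_beam)
print(coeffs)        # above 1 near the webs, below 1 at mid-flange (positive shear lag)
print(max(coeffs))   # peak coefficient, governing for design checks
```

A coefficient above 1 at the web-flange junction means that beam theory underestimates the peak stress there, which is why neglecting shear lag can overestimate capacity.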

We investigate the imaging and polarization properties of Kerr-MOG black holes surrounded by geometrically thick accretion flows. The MOG parameter α introduces deviations from the Kerr metric, providing a means to test modified gravity in the strong-field regime. Two representative accretion models are considered: the phenomenological radiatively inefficient accretion flow (RIAF) and the analytical ballistic approximation accretion flow (BAAF). Using general relativistic radiative transfer, we compute synchrotron emission and polarization maps for different spins, MOG parameters, inclinations, and observing frequencies. In both models, the photon ring and central dark region expand with increasing α, whereas frame dragging produces a pronounced brightness asymmetry. The BAAF model predicts a narrower bright ring and a distinct polarization morphology near the event horizon. By introducing the net polarization angle χ_net and the phase ∠β_2 of the second Fourier mode, we quantify inclination- and frame-dragging-induced polarization features. Our results reveal that both α and spin significantly influence the near-horizon polarization patterns, suggesting that high-resolution polarimetric imaging could serve as a promising probe of modified gravity in the strong-field regime.
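The two polarization diagnostics can be computed from Stokes maps as sketched below. The sketch assumes the definitions common in EHT analyses: χ_net = ½ arg(ΣQ + iΣU) and β_2 = Σ(Q + iU)e^(-2iφ) / ΣI over image pixels. Both the EVPA χ and the pixel azimuth φ are measured counterclockwise from the +x axis, which simplifies the east-of-north convention. `ring_image` is a hypothetical toy source, not a RIAF or BAAF model.

```python
import numpy as np


def polarization_metrics(I, Q, U, x, y):
    """Return (chi_net, |beta_2|, arg beta_2) for Stokes maps on a pixel grid."""
    P = Q + 1j * U                                     # complex linear polarization
    phi = np.arctan2(y, x)                             # azimuth of each pixel
    beta2 = np.sum(P * np.exp(-2j * phi)) / np.sum(I)  # m = 2 azimuthal mode
    chi_net = 0.5 * np.angle(np.sum(Q) + 1j * np.sum(U))
    return chi_net, np.abs(beta2), np.angle(beta2)


def ring_image(chi_of_phi, n=64, r_in=0.4, r_out=0.8, frac=0.5):
    """Toy ring of uniform intensity, polarization fraction `frac`, EVPA chi(phi)."""
    coords = np.linspace(-1.0, 1.0, n)
    x, y = np.meshgrid(coords, coords)
    r = np.hypot(x, y)
    I = ((r > r_in) & (r < r_out)).astype(float)
    chi = chi_of_phi(np.arctan2(y, x))
    Q = frac * I * np.cos(2 * chi)
    U = frac * I * np.sin(2 * chi)
    return I, Q, U, x, y


radial = polarization_metrics(*ring_image(lambda phi: phi))
toroidal = polarization_metrics(*ring_image(lambda phi: phi + np.pi / 2))
print(radial[2], toroidal[2])  # radial EVPA: arg beta_2 = 0; azimuthal EVPA: +/- pi
```

With this convention, ∠β_2 tracks how the EVPA pattern twists relative to the radial direction, while χ_net captures only the image-integrated orientation.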

To address the low detection accuracy and high false-positive rates of transmission-line detection in UAV (Unmanned Aerial Vehicle) images, we explore the linear features and spatial distribution of transmission lines. We introduce an enhanced stochastic Hough transform technique tailored to detecting transmission lines in complex backgrounds. By using the Hessian matrix for initial preprocessing of transmission lines, together with boundary search and pixel-row segmentation, our approach distinguishes transmission-line areas from the background. We significantly reduce both false positives and missed detections, thereby improving the accuracy of transmission-line identification. Experiments demonstrate that our method not only processes images more rapidly but also yields superior detection results compared with conventional and random Hough transform methods.
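As a baseline for comparison, a plain randomized Hough transform for lines samples pairs of edge points and votes for the line through each pair in (ρ, θ) space. The sketch below implements only this baseline. The paper's enhancements (Hessian preprocessing, boundary search, pixel-row segmentation) are not reproduced, and the bin resolutions, sample count, and toy points are assumptions.

```python
import math
import random
from collections import Counter


def randomized_hough_lines(points, n_samples=2000, rho_res=1.0,
                           theta_res=math.pi / 180, top_k=3, seed=0):
    """Vote in (rho, theta) space for the line through randomly sampled point pairs.

    Lines use the normal form rho = x*cos(theta) + y*sin(theta) with theta in [0, pi).
    Near theta = pi the bins wrap to theta = 0 with flipped rho; this sketch ignores that.
    """
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(n_samples):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        dx, dy = x2 - x1, y2 - y1
        if dx == 0 and dy == 0:
            continue                    # coincident points define no line
        theta = math.atan2(dx, -dy)     # angle of the line normal (-dy, dx)
        if theta < 0:
            theta += math.pi
        elif theta >= math.pi:
            theta -= math.pi
        rho = x1 * math.cos(theta) + y1 * math.sin(theta)
        votes[(round(rho / rho_res), round(theta / theta_res))] += 1
    return [(kr * rho_res, kt * theta_res, count)
            for (kr, kt), count in votes.most_common(top_k)]


line = [(i, i) for i in range(20)]                      # pixels on the line y = x
noise = [(3, 17), (15, 2), (8, 1), (19, 6), (1, 12)]    # background clutter
print(randomized_hough_lines(line + noise)[0])          # strongest line: rho ~ 0, theta ~ 3*pi/4
```

Because each sample votes for a single (ρ, θ) bin instead of a full sinusoid, the randomized variant is cheap, but clutter pairs still cast spurious votes. The abstract targets these false positives through preprocessing.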

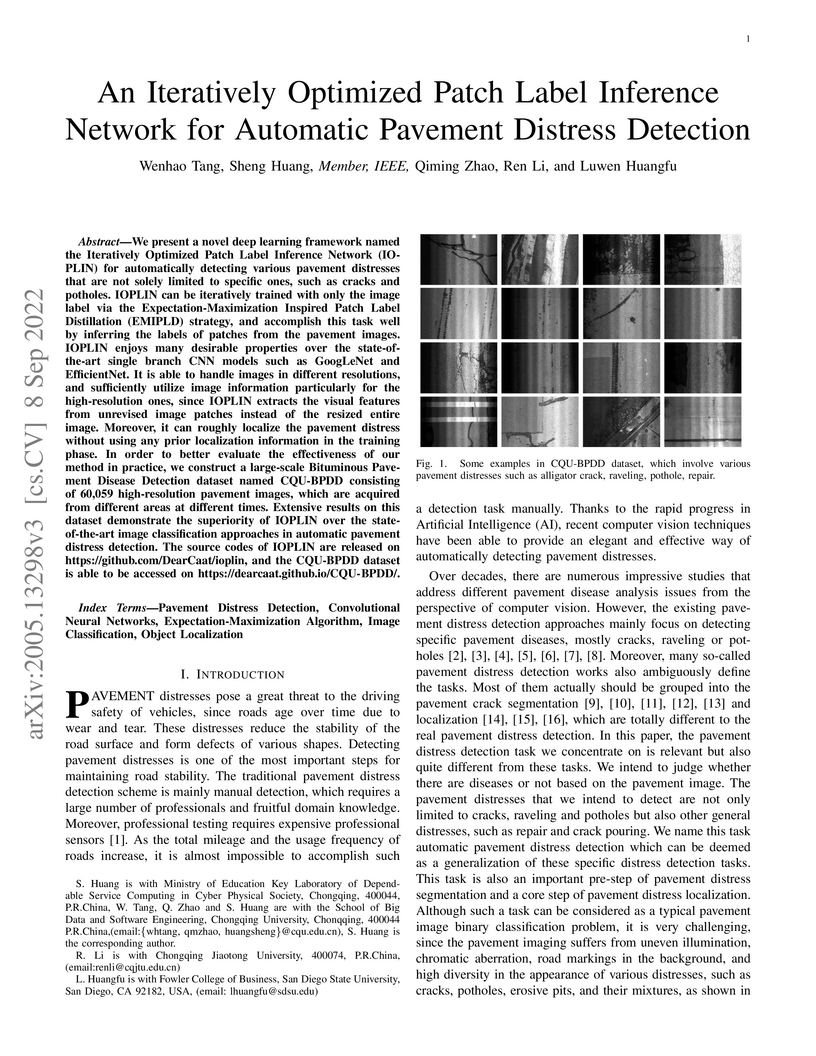

We present a novel deep learning framework named the Iteratively Optimized Patch Label Inference Network (IOPLIN) for automatically detecting various pavement distresses, not limited to specific ones such as cracks and potholes. IOPLIN can be iteratively trained with only image-level labels via the Expectation-Maximization Inspired Patch Label Distillation (EMIPLD) strategy, and it accomplishes this task by inferring the labels of patches from the pavement images. IOPLIN has several advantages over state-of-the-art single-branch CNN models such as GoogLeNet and EfficientNet. It can handle images of different resolutions and make full use of image information, particularly for high-resolution images, since IOPLIN extracts visual features from unrevised image patches instead of the resized entire image. Moreover, it can roughly localize pavement distress without using any prior localization information in the training phase. To better evaluate the effectiveness of our method in practice, we construct a large-scale Bituminous Pavement Disease Detection dataset named CQU-BPDD, consisting of 60,059 high-resolution pavement images acquired from different areas at different times. Extensive results on this dataset demonstrate the superiority of IOPLIN over state-of-the-art image classification approaches in automatic pavement distress detection. The source code of IOPLIN is released at this https URL, and the CQU-BPDD dataset can be accessed at this https URL.
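The patch-based input handling can be sketched in numpy. A high-resolution image is tiled into fixed-size patches at native resolution instead of being resized, and an image-level distress score is derived from per-patch scores. The 300-pixel patch size, the max-pooling aggregation, and the function names are assumptions; the EMIPLD training loop is not reproduced.

```python
import numpy as np


def split_into_patches(image, patch):
    """Non-overlapping patch x patch tiles of an (H, W, C) image, without resizing.

    Edge strips that do not fill a whole tile are dropped in this sketch.
    """
    h, w = image.shape[:2]
    rows, cols = h // patch, w // patch
    cropped = image[: rows * patch, : cols * patch]
    tiles = cropped.reshape(rows, patch, cols, patch, -1).swapaxes(1, 2)
    return tiles.reshape(rows * cols, patch, patch, -1)   # row-major tile order


def image_score_from_patches(patch_scores):
    """Image-level score as the max over patch distress probabilities (assumed MIL rule)."""
    return float(np.max(patch_scores))


image = np.zeros((900, 1200, 3))            # stand-in for a high-resolution pavement image
patches = split_into_patches(image, 300)
print(patches.shape)                        # (12, 300, 300, 3)
```

Keeping the per-patch scores in tile order also yields a coarse distress map, which matches the rough localization the abstract mentions.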

To resolve semantic ambiguity in texts, we propose a model that innovatively combines a knowledge graph with an improved attention mechanism. An existing knowledge base is used to enrich the text with relevant contextual concepts. The model operates at both the character and word levels to deepen its understanding by integrating these concepts. We first adopt information gain to select important words. Then an encoder-decoder framework is used to encode the text along with the related concepts. The local attention mechanism adjusts the weight of each concept, reducing the influence of irrelevant or noisy concepts during classification. We improve the formula for computing attention scores in the local self-attention mechanism, ensuring that words with different frequencies of occurrence in the text receive higher attention scores. Finally, the model employs a Bi-directional Gated Recurrent Unit (Bi-GRU), which is effective at extracting features from text, to improve classification accuracy. Its performance is demonstrated on datasets such as AGNews, Ohsumed, and TagMyNews, where it achieves accuracies of 75.1%, 58.7%, and 68.5%, respectively, showing its effectiveness in text classification tasks.
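The information-gain step for word selection can be sketched with IG(w) = H(C) - [P(w)H(C | w) + P(¬w)H(C | ¬w)], treating each document as a set of tokens. The toy corpus, the function names, and the top-k selection rule are hypothetical; the abstract does not say how many words are kept.

```python
import math
from collections import Counter


def label_entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(docs, labels, word):
    """IG of the binary feature 'word occurs in the document'."""
    with_w = [y for d, y in zip(docs, labels) if word in d]
    without_w = [y for d, y in zip(docs, labels) if word not in d]
    n = len(labels)
    conditional = (len(with_w) / n) * label_entropy(with_w) \
        + (len(without_w) / n) * label_entropy(without_w)
    return label_entropy(labels) - conditional


def select_top_words(docs, labels, k):
    """Keep the k words with the highest IG (ties broken alphabetically)."""
    vocab = sorted(set().union(*docs))
    return sorted(vocab, key=lambda w: information_gain(docs, labels, w), reverse=True)[:k]


docs = [{"goal", "match"}, {"goal", "team"}, {"stock", "market"}, {"stock", "bank"}]
labels = ["sport", "sport", "finance", "finance"]
print(select_top_words(docs, labels, 2))  # ['goal', 'stock']
```

Words that split the classes perfectly reach the maximum gain H(C), here 1 bit, while words that occur in a single document gain much less.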

28 Sep 2020

Many filters have been proposed in recent decades for the nonlinear state estimation problem. The linearization-based extended Kalman filter (EKF) is widely applied to nonlinear industrial systems. While the EKF is limited in accuracy and reliability, sequential Monte Carlo methods, or particle filters (PF), can obtain superior accuracy at the cost of a huge number of random samples. The unscented Kalman filter (UKF) can achieve adequate accuracy more efficiently by using deterministic samples, but its weights may be negative, which can cause instability. Among Gaussian filters, the cubature Kalman filter (CKF) and the Gauss-Hermite filter (GHF) employ cubature rules and Gauss-Hermite rules, respectively, to approximate the statistics of random variables, and they exhibit impressive performance in practical problems. Inspired by this work, this paper presents a new nonlinear estimation scheme named the geometric unscented Kalman filter (GUF). The GUF adopts the filtering framework of the CKF for updating data and develops a geometric unscented sampling (GUS) strategy for approximating random variables. The main feature of GUS is that it selects uniformly distributed samples according to probability and geometric location, similar to the UKF and CKF, while keeping positive weights like the PF. In this way, the GUF can maintain accuracy comparable to the GHF with reasonable efficiency and good stability. The GUF suffers neither from the exponential growth in sample size seen in the PF nor from the failure to converge caused by non-positive weights in high-order CKF and UKF.
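The contrast between the sampling rules can be made concrete. The sketch computes the third-degree spherical-radial cubature points of the CKF (2n points at ±√n times the columns of a Cholesky factor, all weights 1/(2n)) and the scaled unscented weights, whose center weight becomes negative for common parameter choices. The GUS strategy itself is not reproduced, and the alpha, beta, and kappa defaults are assumptions.

```python
import numpy as np


def cubature_points(mean, cov):
    """Third-degree cubature rule: 2n points, equal positive weights 1/(2n)."""
    n = mean.size
    s = np.linalg.cholesky(cov)                   # cov = s @ s.T
    offsets = np.sqrt(n) * np.hstack([s, -s])     # shape (n, 2n)
    points = mean[:, None] + offsets
    weights = np.full(2 * n, 1.0 / (2 * n))
    return points, weights


def unscented_weights(n, alpha=1e-3, beta=2.0, kappa=0.0):
    """Scaled unscented-transform weights for the mean (wm) and covariance (wc)."""
    lam = alpha**2 * (n + kappa) - n
    wm = np.full(2 * n + 1, 1.0 / (2 * (n + lam)))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = lam / (n + lam) + (1.0 - alpha**2 + beta)
    return wm, wc


mean = np.array([1.0, -2.0, 0.5])
cov = np.array([[2.0, 0.3, 0.0], [0.3, 1.0, 0.2], [0.0, 0.2, 0.5]])
pts, w = cubature_points(mean, cov)
centered = pts - mean[:, None]
print(np.allclose(pts @ w, mean), np.allclose((centered * w) @ centered.T, cov))  # True True
print(unscented_weights(3)[0][0])  # large negative center weight
```

Both rules match the first two moments exactly, but only the cubature weights are guaranteed positive. That positivity is the property the abstract says GUS keeps while placing samples differently.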

18 Mar 2025

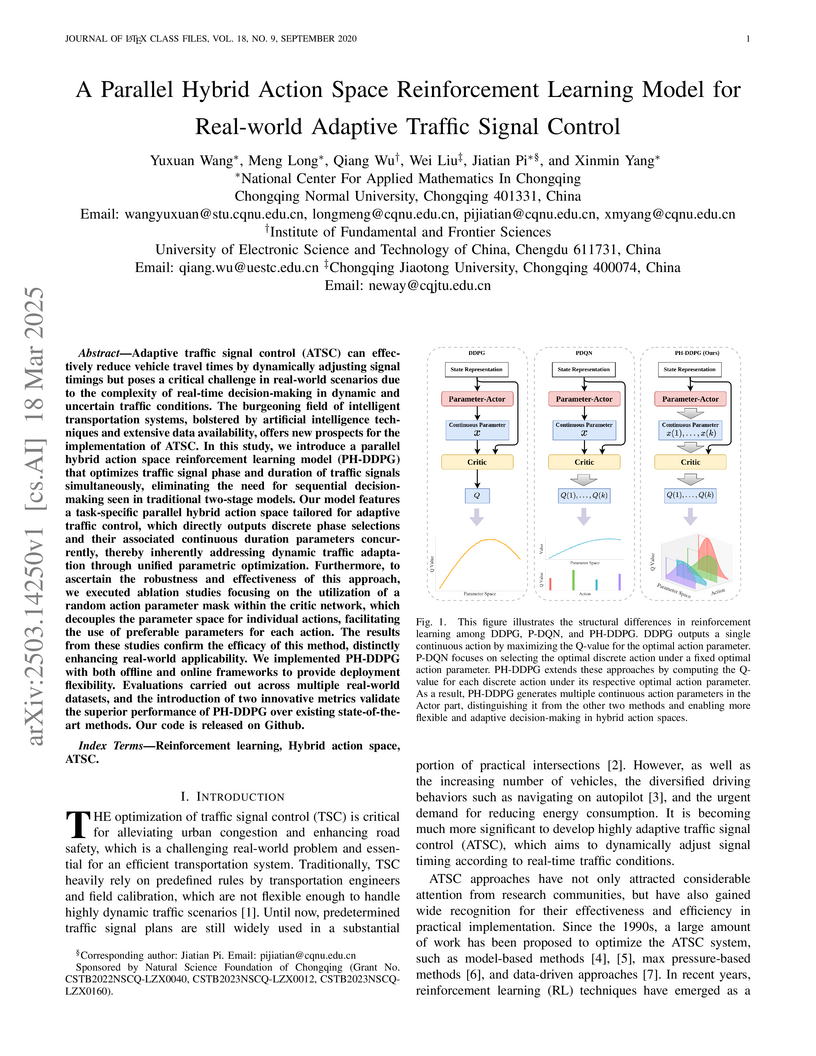

Researchers from multiple Chinese universities develop PH-DDPG, a reinforcement learning framework for adaptive traffic signal control that combines discrete phase selection with continuous duration parameters, achieving superior performance across real-world datasets from Jinan, Hangzhou, and New York while enabling both offline and online deployment through novel action parameter masking techniques.
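A hybrid phase-plus-duration action with parameter masking can be sketched in the style of parameterized-action methods such as P-DQN. A discrete phase is chosen by the largest Q-value, the actor's continuous outputs for all phases are masked so that only the chosen phase's parameter survives, and that parameter is mapped to a green duration. PH-DDPG's actual networks and masking rule are not given here; the function names, the [-1, 1] actor range, and the 5 to 60 s green bounds are hypothetical.

```python
import numpy as np


def scale_duration(a, min_green=5.0, max_green=60.0):
    """Map an actor output in [-1, 1] (e.g. from tanh) to a green duration in seconds."""
    return min_green + (np.clip(a, -1.0, 1.0) + 1.0) * 0.5 * (max_green - min_green)


def select_hybrid_action(q_values, raw_params):
    """Pick the phase with the largest Q-value and zero the other phases' parameters."""
    phase = int(np.argmax(q_values))
    mask = np.zeros_like(raw_params)
    mask[phase] = 1.0
    masked = raw_params * mask          # only the selected phase's parameter is kept
    return phase, float(scale_duration(masked[phase])), masked


q = np.array([0.1, 0.7, 0.3])           # critic scores for three signal phases
params = np.array([0.2, 0.0, 1.0])      # actor outputs, one duration parameter per phase
print(select_hybrid_action(q, params))  # phase 1, 32.5 s, masked [0, 0, 0]
```

Masking keeps gradients and stored transitions tied to the parameter that was actually executed, which is the usual motivation for this design in hybrid action spaces.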

The Johannsen-Psaltis (JP) metric provides a robust framework for testing the no-hair theorem of astrophysical black holes due to the regular spacetime configuration around JP black holes. Verification of this theorem through electromagnetic spectra often involves analyzing the photon sphere near black holes, which is intrinsically linked to black hole shadows. Investigating the shadow of JP black holes therefore offers an effective approach for assessing the validity of the theorem. Since the Hamilton-Jacobi equation in the JP metric does not permit exact separation of variables, an approximate analytical approach is employed to calculate the shadow, while the backward ray-tracing numerical method serves as a rigorous alternative. For JP black holes with closed event horizons, the approximate analytical approach reliably reproduces the results of the numerical computation. However, significant discrepancies emerge for black holes with non-closed event horizons. As the deviation parameter ϵ3 increases, certain regions of the critical curve become non-smooth. Analysis of photon trajectories in these regions reveals chaotic dynamics, which accounts for the failure of the approximate analytical method to accurately describe the shadows of JP black holes with non-closed event horizons.
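In the non-rotating limit the JP metric is spherically symmetric, with -g_tt = (1 - 2M/r)(1 + h) and h = ϵ3 M³/r³. The shadow is then a circle whose radius is the minimum of b(r) = r / √(-g_tt) outside the horizon at r = 2M. The sketch performs this one-dimensional minimization on a dense grid, assuming ϵ3 ≥ 0 so that the horizon stays at 2M. It is not the paper's backward ray tracer, which is needed once spin breaks separability; the grid range and resolution are assumptions.

```python
import numpy as np


def jp_static_minus_gtt(r, eps3, mass=1.0):
    """-g_tt of the non-rotating JP metric: (1 - 2M/r)(1 + eps3 M^3 / r^3)."""
    h = eps3 * mass**3 / r**3
    return (1.0 - 2.0 * mass / r) * (1.0 + h)


def jp_static_shadow_radius(eps3, mass=1.0, r_max=20.0, n=400_001):
    """Critical impact parameter min_r r / sqrt(-g_tt), minimized on a dense grid."""
    if eps3 < 0:
        raise ValueError("this sketch assumes eps3 >= 0 (horizon at r = 2M)")
    r = np.linspace(2.0 * mass * (1.0 + 1e-6), r_max * mass, n)
    b = r / np.sqrt(jp_static_minus_gtt(r, eps3, mass))
    i = int(np.argmin(b))
    return float(b[i]), float(r[i])     # (shadow radius, photon-sphere radius)


for eps3 in (0.0, 1.0, 3.0):
    print(eps3, jp_static_shadow_radius(eps3))  # eps3 = 0 gives b ~ 3*sqrt(3) M at r ~ 3M
```

A positive ϵ3 deepens the effective potential and shrinks the static shadow. The non-smooth, chaotic features described in the abstract appear only in the rotating case.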

Low-light image enhancement (LLIE) is critical in computer vision. Existing LLIE methods often fail to discover the underlying relationships between different sub-components, causing the loss of complementary information between multiple modules and network layers and ultimately resulting in the loss of image details. To overcome this shortcoming, we design a hierarchical mutual Enhancement via a Cross Attention transformer (ECAFormer), which introduces an architecture that enables concurrent propagation and interaction of multiple features. The model preserves detailed information by introducing a Dual Multi-head Self-Attention (DMSA), which leverages visual and semantic features across different scales, allowing them to guide and complement each other. In addition, a Cross-Scale DMSA block is introduced to capture the residual connection, integrating cross-layer information to further enhance image detail. Experimental results show that ECAFormer achieves competitive performance across multiple benchmarks, yielding a nearly 3% improvement in PSNR over the second-best method and demonstrating the effectiveness of information interaction in LLIE.
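The cross-attention idea behind mutual enhancement can be sketched generically in numpy. Visual tokens attend to semantic tokens and vice versa, and each stream keeps a residual connection. The exact DMSA and Cross-Scale DMSA designs are not given in the abstract; the single-head form, weight shapes, and function names are assumptions.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(x_q, x_kv, w_q, w_k, w_v):
    """Tokens in x_q attend to tokens in x_kv (single-head scaled dot-product attention)."""
    q, k, v = x_q @ w_q, x_kv @ w_k, x_kv @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return attn @ v, attn


def mutual_enhance(visual, semantic, params):
    """Each stream is refined by attending to the other, plus a residual connection."""
    v_out, _ = cross_attention(visual, semantic, *params["v2s"])
    s_out, _ = cross_attention(semantic, visual, *params["s2v"])
    return visual + v_out, semantic + s_out


rng = np.random.default_rng(0)
d = 8
params = {key: tuple(rng.normal(size=(d, d)) * 0.1 for _ in range(3)) for key in ("v2s", "s2v")}
visual = rng.normal(size=(16, d))    # e.g. 16 visual tokens (flattened patches)
semantic = rng.normal(size=(4, d))   # e.g. 4 semantic tokens
v_new, s_new = mutual_enhance(visual, semantic, params)
print(v_new.shape, s_new.shape)      # (16, 8) (4, 8)
```

Running the attention in both directions lets each feature type guide the other. This is the complementary interaction the abstract credits for preserving detail.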

Deep neural networks often struggle to generalize to out-of-distribution (OOD) data, and a notable theoretical gap remains regarding the contributing factors and their respective impacts. Evidence from the literature on in-distribution data suggests that the generalization error can shrink as the size of the mixed training data increases. However, for OOD samples, this conventional understanding no longer holds: increasing the size of the training data does not always reduce the test generalization error. In fact, diverse error trends have been found across various shift scenarios, including decreasing trends following a power law, initial declines followed by increases, and continuously stable patterns. Previous work has treated OOD data qualitatively, merely as samples unseen during training, which makes these complicated non-monotonic trends hard to explain. In this work, we quantitatively redefine OOD data as those lying outside the convex hull of the mixed training data and establish novel generalization error bounds to better understand these counterintuitive observations. Our new risk bound shows that the efficacy of well-trained models can be guaranteed for unseen data within the convex hull. More interestingly, for OOD data beyond this coverage, generalization cannot be ensured, which aligns with our observations. Furthermore, we evaluate various OOD techniques to show that our results not only explain insightful observations in recent OOD generalization work, such as the significance of diverse data and the sensitivity of existing algorithms to unseen shifts, but also inspire a novel and effective data selection strategy.
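Membership in the convex hull of the training data, which is the criterion used here to define OOD samples, can be tested as a linear-programming feasibility problem. A point x is inside the hull if there are weights λ_i ≥ 0 with Σλ_i = 1 and Σλ_i x_i = x. The sketch uses SciPy's `linprog` and toy 2-D data. It illustrates the definition only, not the paper's bound or data selection strategy, and the function name is hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def in_convex_hull(train, point):
    """True if `point` is a convex combination of the rows of `train` (LP feasibility)."""
    n = train.shape[0]
    a_eq = np.vstack([train.T, np.ones((1, n))])   # sum_i lam_i x_i = point, sum_i lam_i = 1
    b_eq = np.append(point, 1.0)
    res = linprog(np.zeros(n), A_eq=a_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    return res.status == 0                         # 0: feasible optimum found; 2: infeasible


train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
print(in_convex_hull(train, np.array([0.2, 0.2])))   # inside the hull
print(in_convex_hull(train, np.array([1.0, 1.0])))   # outside: OOD under this definition
```

Because the test needs only a linear program over the training points, it can be applied to learned feature vectors as well as to raw inputs.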

This study investigates the astronomical implications of the Ghosh-Kumar rotating black hole (BH), particularly its shadow images illuminated by celestial light sources and equatorial thin accretion disks. Our research delineates a crucial correlation between the shadow images and the parameters a and q and the observation angle θ_obs, which reflects the influence of the model parameters on the optical features of the shadow images. First, increasing a and q transforms the geometry of the shadow images from perfect circles to oval shapes and draws them toward the center of the screen. Using the backward ray-tracing method, we demonstrate the optical appearance of the shadow images of the BH spacetime under consideration, illuminated by a celestial light source. The results demonstrate that the Einstein ring transitions from an axisymmetric closed circle to an arc-like shape on the screen, and that the shadow shape deforms as the spacetime parameters are modified at a fixed observational position. Next, we examine how the attributes of the accretion disk and the relevant parameters affect the shadow images illuminated by both prograde and retrograde accretion flows. Our study reveals how the accretion disk transitions from a disk-like structure to a hat-like shape as the observational angle changes. Moreover, as q increases, the observed flux of both the direct and lensed images of the accretion disk gradually moves toward the lower part of the screen. Furthermore, we present the distribution of the redshift factors on the screen. Our analysis suggests that the observer can see both redshift and blueshift on the screen at higher observational angles, and that increasing both a and q enhances the redshift effect on the screen.
