Cranfield University

Researchers from the University of Warwick, Cranfield University, University of Oxford, and University of Cambridge propose KG4Diagnosis, a hierarchical multi-agent LLM framework enhanced by a knowledge graph for medical diagnosis. The system integrates automated knowledge graph construction with a two-tier agent architecture to address complex medical scenarios and mitigate LLM hallucination, covering 362 common diseases.

MedHallBench introduces a novel benchmark framework for evaluating hallucinations in Medical Large Language Models (MLLMs), accompanied by a specialized ACHMI metric. The work demonstrates that medically fine-tuned models like LLaVA-Med (SF) exhibit lower hallucination rates when assessed using this new framework, providing a more precise quantification of medically inaccurate information.

The increasing automation of navigation for unmanned aerial vehicles (UAVs) has exposed them to adversarial attacks that exploit vulnerabilities in reinforcement learning (RL) through sensor manipulation. Although existing robust RL methods aim to mitigate such threats, they generalize poorly to out-of-distribution shifts from the optimal value distribution, as they are primarily designed to handle fixed perturbations. To address this limitation, this paper introduces an antifragile RL framework that enhances adaptability to broader distributional shifts by incorporating a switching mechanism based on discounted Thompson sampling (DTS). This mechanism dynamically selects among multiple robust policies to minimize adversarially induced state-action-value distribution shifts. The proposed approach first derives a diverse ensemble of action-robust policies by accounting for a range of perturbations in the policy space. Selection among these policies is then modeled as a multiarmed bandit (MAB) problem, where DTS optimally selects policies in response to nonstationary Bernoulli rewards, effectively adapting to evolving adversarial strategies. A theoretical framework is also provided, showing that optimizing the DTS to minimize the overall regret due to distributional shift results in effective adaptation against unseen adversarial attacks, thus inducing antifragility. Extensive numerical simulations validate the effectiveness of the proposed framework in complex navigation environments with multiple dynamic three-dimensional obstacles and with stronger projected gradient descent (PGD) and spoofing attacks. Compared to conventional robust, non-adaptive RL methods, the antifragile approach achieves superior performance, demonstrating shorter navigation path lengths and a higher rate of conflict-free navigation trajectories.
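The policy-switching idea can be illustrated with a minimal sketch of discounted Thompson sampling over Bernoulli rewards. This is not the paper's implementation: the class name, the discount factor `gamma`, the symmetric Beta prior, and the rule of discounting every arm before crediting the chosen one are assumptions made for illustration. Each arm would stand for one robust policy in the ensemble.

```python
import numpy as np


class DiscountedThompsonSampler:
    """Bernoulli bandit with exponentially discounted Beta posteriors (illustrative)."""

    def __init__(self, n_arms, gamma=0.95, prior=1.0, seed=0):
        self.gamma = gamma                  # assumed discount factor in (0, 1]
        self.prior = prior                  # assumed Beta(prior, prior) prior per arm
        self.successes = np.zeros(n_arms)   # discounted success counts
        self.failures = np.zeros(n_arms)    # discounted failure counts
        self.rng = np.random.default_rng(seed)

    def select(self):
        # Draw one sample per arm from its Beta posterior and pick the largest.
        samples = self.rng.beta(self.successes + self.prior,
                                self.failures + self.prior)
        return int(np.argmax(samples))

    def update(self, arm, reward):
        # Discount all past evidence so older rewards fade under nonstationarity.
        self.successes *= self.gamma
        self.failures *= self.gamma
        # Credit the chosen arm with the new Bernoulli outcome (0 or 1).
        self.successes[arm] += reward
        self.failures[arm] += 1.0 - reward


if __name__ == "__main__":
    sampler = DiscountedThompsonSampler(n_arms=3)
    env = np.random.default_rng(1)
    for step in range(400):
        # Hypothetical environment: the best arm changes halfway through.
        probs = [0.8, 0.3, 0.2] if step < 200 else [0.2, 0.3, 0.8]
        arm = sampler.select()
        sampler.update(arm, float(env.random() < probs[arm]))
    print("discounted successes:", sampler.successes)
```

Because old counts decay geometrically, an arm that stops paying off loses its posterior mass over time, which is what lets the selector follow a changing adversary.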

Super-resolution reconstruction techniques entail the utilization of software algorithms to transform one or more sets of low-resolution images captured from the same scene into high-resolution images. In recent years, considerable advancement has been observed in the domain of single-image super-resolution algorithms, particularly those based on deep learning techniques. Nevertheless, the extraction of image features and nonlinear mapping methods in the reconstruction process remain challenging for existing algorithms. These issues result in the network architecture being unable to effectively utilize the diverse range of information at different levels. The loss of high-frequency details is significant, and the final reconstructed image features are overly smooth, with a lack of fine texture details. This negatively impacts the subjective visual quality of the image. The objective is to recover high-quality, high-resolution images from low-resolution images. In this work, an enhanced deep convolutional neural network model is employed, comprising multiple convolutional layers, each of which is configured with specific filters and activation functions to effectively capture the diverse features of the image. Furthermore, a residual learning strategy is employed to accelerate training and enhance the convergence of the network, while sub-pixel convolutional layers are utilized to refine the high-frequency details and textures of the image. The experimental analysis demonstrates the superior performance of the proposed model on multiple public datasets when compared with the traditional bicubic interpolation method and several other learning-based super-resolution methods. Furthermore, it proves the model's efficacy in maintaining image edges and textures.
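The rearrangement step at the heart of a sub-pixel convolutional layer can be sketched in a few lines of NumPy. This is a sketch of the generic "pixel shuffle" operation only, not the paper's network: the function name, the channel-first layout, and the channel ordering (the same convention as common deep learning libraries) are assumptions.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) feature map into a (C, H*r, W*r) image.

    Each group of r*r channels supplies the r x r block of output pixels
    that corresponds to one low-resolution location.
    """
    c_r2, h, w = x.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w)      # split channels into (c, row offset, col offset)
    x = x.transpose(0, 3, 1, 4, 2)    # reorder to (c, h, row offset, w, col offset)
    return x.reshape(c, h * r, w * r)


if __name__ == "__main__":
    features = np.arange(4 * 2 * 2).reshape(4, 2, 2)
    print(pixel_shuffle(features, 2).shape)  # one channel, spatial size doubled
```

The convolution that precedes this step produces the `C*r*r` channels at low resolution, so the upscaling itself costs only a reshape.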

While the existing stochastic control theory is well equipped to handle dynamical systems with stochastic uncertainties, a paradigm shift using distance measure based decision making is required for the effective further exploration of the field. As a first step, a distance measure between two stochastic linear time invariant systems is proposed here, extending the existing distance metrics between deterministic linear dynamical systems. In the frequency domain, the proposed distance measure corresponds to the worst-case point-wise in frequency Wasserstein distance between distributions characterising the uncertainties using inverse stereographic projection on the Riemann sphere. For the time domain setting, the proposed distance corresponds to the gap metric induced type-q Wasserstein distance between the distributions characterising the uncertainty of plant models. Apart from providing lower and upper bounds for the proposed distance measures in both frequency and time domain settings, it is proved that the former never exceeds the latter. The proposed distance measures will facilitate the provision of probabilistic guarantees on system robustness and controller performances.
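As a much simpler point of reference for the type-q Wasserstein distance mentioned above, the following sketch computes it between two equal-size empirical samples of a scalar quantity, where the optimal coupling pairs the sorted values. It does not involve the gap metric or the stereographic projection used in the paper; the function name and the equal-size restriction are assumptions made for illustration.

```python
import numpy as np


def wasserstein_q(samples_a, samples_b, q=2):
    """Type-q Wasserstein distance between two equal-size 1D empirical samples."""
    a = np.sort(np.asarray(samples_a, dtype=float))
    b = np.sort(np.asarray(samples_b, dtype=float))
    if a.shape != b.shape:
        raise ValueError("this sketch assumes equal sample sizes")
    # In one dimension the optimal transport plan matches order statistics.
    return float(np.mean(np.abs(a - b) ** q) ** (1.0 / q))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    nominal = rng.normal(1.0, 0.1, size=1000)    # hypothetical uncertain parameter
    perturbed = rng.normal(1.3, 0.1, size=1000)  # hypothetical perturbed parameter
    print(wasserstein_q(nominal, perturbed, q=2))
```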

In this work we demonstrate the possibility of estimating the wind environment of a UAV without specialised sensors, using only the UAV's trajectory and applying a causal machine learning approach. We implement the causal curiosity method, which combines machine learning time series classification and clustering with a causal framework. We analyse three distinct wind environments: constant wind, shear wind, and turbulence, and explore different optimisation strategies for optimal UAV manoeuvres to estimate the wind conditions. The proposed approach can be used to design optimal trajectories in challenging weather conditions, and to avoid specialised sensors that add to the UAV's weight and compromise its functionality.

Drone detection has benefited from improvements in deep neural networks but, like many other applications, suffers from the limited availability of accurate training data. Synthetic data offers a route to low-cost data generation and has been shown to improve data availability and quality. However, models trained on synthetic datasets need to prove their ability to perform on real-world data, known as the problem of sim-to-real transferability. Here, we present a drone detection Faster-RCNN model trained on a purely synthetic dataset that transfers to real-world data. We found that it achieves an AP_50 of 97.0% when evaluated on MAV-Vid, a real dataset of flying drones, compared with 97.8% for an equivalent model trained on real-world data. Our results show that using synthetic data for drone detection has the potential to reduce data collection costs and improve labelling quality. These findings could be a starting point for more elaborate synthetic drone datasets. For example, realistic recreations of specific scenarios could de-risk the dataset generation of safety-critical applications such as the detection of drones at airports. Further, synthetic data may enable reliable drone detection systems, which could benefit other areas, such as unmanned traffic management systems. The code is available at this https URL alongside the datasets at this https URL.
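For readers unfamiliar with the AP_50 metric, the sketch below shows the matching criterion it is conventionally built on: a predicted box counts as a true positive when its intersection over union (IoU) with a ground-truth box reaches 0.5. The function names and the `(x1, y1, x2, y2)` box format are assumptions, the inclusive comparison at the threshold is one common choice, and the full average-precision computation (ranking by confidence and integrating the precision-recall curve) is not shown.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region, clamped at zero for disjoint boxes.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """Matching rule assumed here for AP_50: IoU at or above the threshold."""
    return iou(pred_box, gt_box) >= threshold


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(is_true_positive((0, 0, 2, 2), (0, 0, 2, 1)))
```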

Recent advancements in computer vision and deep learning have enhanced disaster-response capabilities, particularly in the rapid assessment of earthquake-affected urban environments. Timely identification of accessible entry points and structural obstacles is essential for effective search-and-rescue (SAR) operations. To address this need, we introduce DRespNeT, a high-resolution dataset specifically developed for aerial instance segmentation of post-earthquake structural environments. Unlike existing datasets, which rely heavily on satellite imagery or coarse semantic labeling, DRespNeT provides detailed polygon-level instance segmentation annotations derived from high-definition (1080p) aerial footage captured in disaster zones, including the 2023 Turkiye earthquake and other impacted regions. The dataset comprises 28 operationally critical classes, including structurally compromised buildings, access points such as doors, windows, and gaps, multiple debris levels, rescue personnel, vehicles, and civilian visibility. A distinctive feature of DRespNeT is its fine-grained annotation detail, enabling differentiation between accessible and obstructed areas, thereby improving operational planning and response efficiency. Performance evaluations using YOLO-based instance segmentation models, specifically YOLOv8-seg, demonstrate significant gains in real-time situational awareness and decision-making. Our optimized YOLOv8-DRN model achieves 92.7% mAP50 with an inference speed of 27 FPS on an RTX-4090 GPU for multi-target detection, meeting real-time operational requirements. The dataset and models support SAR teams and robotic systems, providing a foundation for enhancing human-robot collaboration, streamlining emergency response, and improving survivor outcomes.

Researchers from Cranfield University created a synthetic social media environment that combines agent-based modeling with a reinforcement learning agent and large language models for ethical experimentation on online influence. The system demonstrated that an RL opinion leader could consistently acquire followers, achieving a 10% gain, with performance optimized by a constrained action space and the agent's self-observation.

In a modern power system with an increasing proportion of renewable energy, wind power prediction is crucial to the arrangement of power grid dispatching plans due to the volatility of wind power. However, traditional centralized forecasting methods raise concerns regarding data privacy and the data island problem. To address data privacy and openness, we propose a forecasting scheme that combines federated learning and deep reinforcement learning (DRL) for ultra-short-term wind power forecasting, called federated deep reinforcement learning (FedDRL). Firstly, this paper uses the deep deterministic policy gradient (DDPG) algorithm as the basic forecasting model to improve prediction accuracy. Secondly, we integrate the DDPG forecasting model into the framework of federated learning. The designed FedDRL can obtain an accurate prediction model in a decentralized way by sharing model parameters instead of private data, which avoids sensitive privacy issues. The simulation results show that the proposed FedDRL outperforms the traditional prediction methods in terms of forecasting accuracy. More importantly, while ensuring the forecasting performance, FedDRL can effectively protect data privacy and relieve communication pressure compared with the traditional centralized forecasting method. In addition, a simulation with different federated learning parameters is conducted to confirm the robustness of the proposed scheme.
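The parameter-sharing step can be sketched as a weighted average of client model parameters. The abstract does not state which aggregation rule FedDRL uses, so the sample-size-weighted averaging below (in the style of federated averaging) is an assumption, as are the function name and the dictionary-of-arrays parameter format.

```python
import numpy as np


def federated_average(client_params, client_sizes):
    """Average per-client parameter dicts, weighted by local sample counts.

    Only parameters leave each client; the raw local data is never shared.
    """
    total = float(sum(client_sizes))
    averaged = {}
    for name in client_params[0]:
        averaged[name] = sum(
            params[name] * (size / total)
            for params, size in zip(client_params, client_sizes)
        )
    return averaged


if __name__ == "__main__":
    # Hypothetical actor weights from two wind farms with different data volumes.
    farm_a = {"actor.w": np.array([1.0, 1.0]), "actor.b": np.array([0.0])}
    farm_b = {"actor.w": np.array([3.0, 3.0]), "actor.b": np.array([4.0])}
    print(federated_average([farm_a, farm_b], client_sizes=[100, 300]))
```

In a full round, each client would train its local forecasting model, upload the parameters, and receive the averaged parameters back before the next round.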

08 Apr 2020

This paper develops a new exponential forgetting algorithm that can prevent the so-called estimator windup problem while retaining fast convergence speed. To investigate the properties of the proposed forgetting algorithm, boundedness of the covariance matrix is first analysed and compared with various exponential and directional forgetting algorithms. Then, stability of the estimation error with and without the persistent excitation condition is theoretically analysed in comparison with the existing benchmark algorithms. Numerical simulations on wing rock motion validate the analysis results.
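To make the windup problem concrete, the sketch below implements standard recursive least squares with a constant exponential forgetting factor. This is the textbook baseline, not the new algorithm proposed in the paper; the class name, the forgetting factor `forgetting`, and the initial covariance scale `p0` are assumptions.

```python
import numpy as np


class ForgettingRLS:
    """Textbook recursive least squares with exponential forgetting (baseline only)."""

    def __init__(self, n_params, forgetting=0.98, p0=1e3):
        self.theta = np.zeros(n_params)   # parameter estimate
        self.P = p0 * np.eye(n_params)    # covariance-like matrix
        self.lam = forgetting             # forgetting factor in (0, 1]

    def update(self, phi, y):
        phi = np.asarray(phi, dtype=float)
        P_phi = self.P @ phi
        gain = P_phi / (self.lam + phi @ P_phi)
        self.theta = self.theta + gain * (y - phi @ self.theta)
        # Dividing by the forgetting factor inflates P at every step.
        self.P = (self.P - np.outer(gain, P_phi)) / self.lam
        return self.theta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    true_theta = np.array([2.0, -1.0])
    rls = ForgettingRLS(n_params=2)
    for _ in range(200):
        phi = rng.normal(size=2)
        rls.update(phi, phi @ true_theta)
    print("estimate:", rls.theta)
    for _ in range(200):
        rls.update(np.zeros(2), 0.0)      # no excitation at all
    print("trace of P without excitation:", np.trace(rls.P))
```

With a zero regressor the gain vanishes and the update reduces to `P = P / lam`, so `P` grows geometrically while no new information arrives. That unbounded growth is the estimator windup that motivates bounded-covariance forgetting schemes.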

22 Sep 2025

This paper presents a new cooperative guidance algorithm based on Distributed Nonlinear Dynamic Inversion (DNDI) to demonstrate a coordinated missile attack.

Flight delays are a significant challenge in the aviation industry, causing major financial and operational disruptions. To improve passenger experience and reduce revenue loss, flight delay prediction models must be both precise and generalizable across different networks. This paper introduces a novel approach that combines Queue-Theory with a simple attention model, referred to as the Queue-Theory SimAM (QT-SimAM). To validate our model, we used data from the US Bureau of Transportation Statistics, where our proposed QT-SimAM (Bidirectional) model outperformed existing methods with an accuracy of 0.927 and an F1 score of 0.932. To assess transferability, we tested the model on the EUROCONTROL dataset, where it achieved an accuracy of 0.826 and an F1 score of 0.791. Ultimately, this paper outlines an effective, end-to-end methodology for predicting flight delays. The proposed model's ability to forecast delays with high accuracy across different networks can help reduce passenger anxiety and improve operational decision-making.

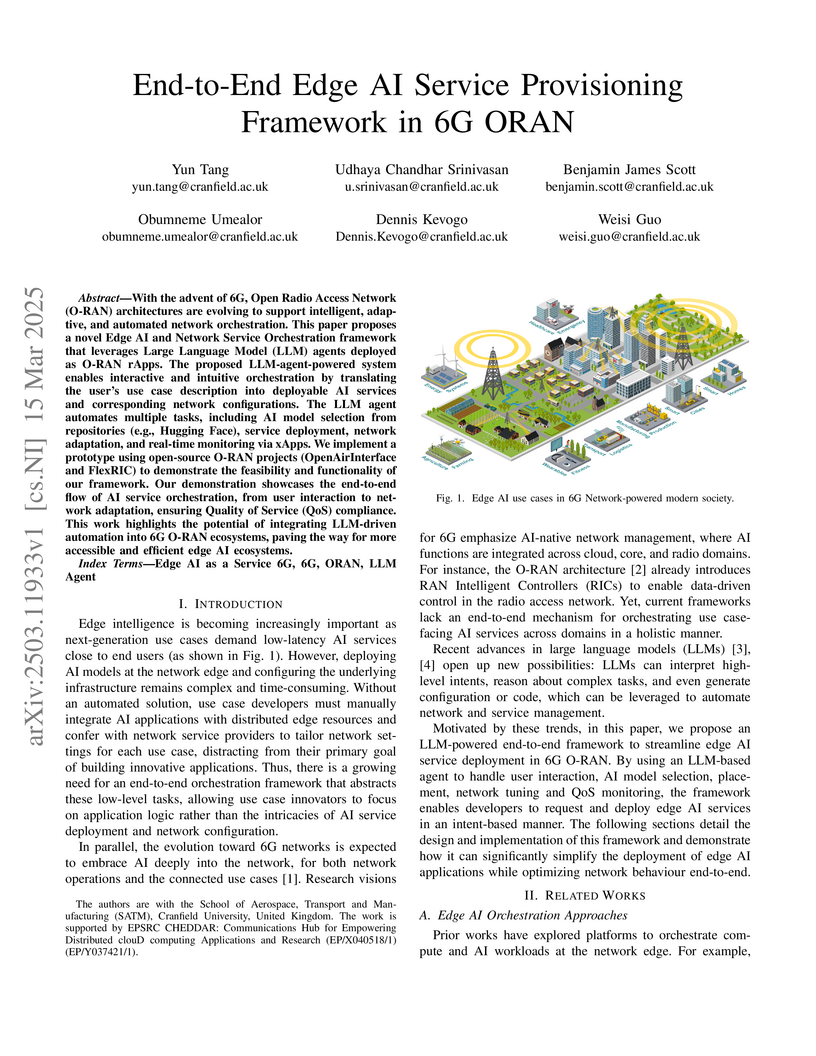

With the advent of 6G, Open Radio Access Network (O-RAN) architectures are evolving to support intelligent, adaptive, and automated network orchestration. This paper proposes a novel Edge AI and Network Service Orchestration framework that leverages Large Language Model (LLM) agents deployed as O-RAN rApps. The proposed LLM-agent-powered system enables interactive and intuitive orchestration by translating the user's use case description into deployable AI services and corresponding network configurations. The LLM agent automates multiple tasks, including AI model selection from repositories (e.g., Hugging Face), service deployment, network adaptation, and real-time monitoring via xApps. We implement a prototype using open-source O-RAN projects (OpenAirInterface and FlexRIC) to demonstrate the feasibility and functionality of our framework. Our demonstration showcases the end-to-end flow of AI service orchestration, from user interaction to network adaptation, ensuring Quality of Service (QoS) compliance. This work highlights the potential of integrating LLM-driven automation into 6G O-RAN ecosystems, paving the way for more accessible and efficient edge AI ecosystems.

Stereo rectification is the determination of two image transformations (or homographies) that map corresponding points on the two images, projections of the same point in 3D space, onto the same horizontal line in the transformed images. Rectification is used to simplify the subsequent stereo correspondence problem and speed up the matching process. Rectifying transformations, in general, introduce perspective distortion in the resulting images, which should be minimised to improve the accuracy of the subsequent algorithm dealing with the stereo correspondence problem. The search for the optimal transformations is usually carried out using numerical optimisation. This work proposes a closed-form solution for the rectifying homographies that minimise perspective distortion. The experimental comparison confirms its capability to solve the convergence issues of the previous formulation. Its Python implementation is provided.
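The defining property of a rectified pair, that corresponding points land on the same horizontal line, can be checked with a short sketch. This is not the closed-form solution proposed in the paper or its published implementation; the function names and the toy homographies and points are hypothetical.

```python
import numpy as np


def apply_homography(H, points):
    """Map an (N, 2) array of pixel coordinates through a 3x3 homography."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homogeneous @ np.asarray(H, dtype=float).T
    return mapped[:, :2] / mapped[:, 2:3]   # divide by the projective scale


def max_vertical_disparity(H_left, H_right, pts_left, pts_right):
    """Largest row mismatch between corresponding points after rectification."""
    y_left = apply_homography(H_left, pts_left)[:, 1]
    y_right = apply_homography(H_right, pts_right)[:, 1]
    return float(np.max(np.abs(y_left - y_right)))


if __name__ == "__main__":
    # Toy pair in which the right image is simply shifted down by 5 pixels.
    H_left = np.eye(3)
    H_right = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, -5.0], [0.0, 0.0, 1.0]])
    pts_left = [[10.0, 20.0], [30.0, 40.0]]
    pts_right = [[7.0, 25.0], [22.0, 45.0]]
    print(max_vertical_disparity(H_left, H_right, pts_left, pts_right))
```

A value near zero indicates that the pair of homographies aligns the epipolar lines with image rows; it says nothing about how much perspective distortion the homographies introduce, which is the quantity the paper minimises.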

Although much political capital has been made regarding the war on terrorism, and while appropriations have gotten underway, there has been a dearth of deep work on counter-terrorism, and despite massive efforts by the federal government, most cities and states do not have a robust response system. In fact, most do not yet have a robust audit system with which to evaluate their vulnerabilities or their responses. At the federal level there remain many unresolved problems of coordination. One reason for this is the shift of much of federal spending to war-fighting in Afghanistan and Iraq. While this approach has drawn deep and lasting criticism, it is, in fact, in accord with many principles of both military and corporate strategy. In the following paper we explore several models of terrorist networks and the implications of both the models and their substantive conclusions for combating terrorism.

Future airports are becoming more complex and congested as the number of travellers increases. As a result, airports are more likely to become hotspots for conflicts, which can cause serious flight delays and safety issues. An intelligent algorithm that makes security surveillance more effective at detecting conflicts would benefit passengers in terms of safety, finance, and travelling efficiency. This paper details the development of a machine learning model to classify conflicting behaviour in a crowd. HRNet is used to segment the images, and two approaches are then taken to classify the poses of people in the frame via multiple classifiers. Among them, the support vector machine (SVM) performed best, achieving a precision of 94.37%. The model falls short on ambiguous behaviour, such as a hug, and when it loses track of a subject in the frame. The resulting model has potential for deployment within an airport if improvements are made to cope with the vast number of potential passengers in view, and if it is trained against further ambiguous behaviours that will arise in an airport setting. In turn, this would provide the capability to enhance security surveillance and improve airport safety.

The capital market plays a vital role in marketing operations for the aerospace industry. However, due to the uncertainty and complexity of the stock market and many cyclical factors, the stock prices of listed aerospace companies fluctuate significantly. This makes share price prediction challenging. To improve share price prediction for the aerospace industry sector and better understand the impact of various indicators on stock prices, we provide a hybrid prediction model that combines Principal Component Analysis (PCA) and Recurrent Neural Networks (RNNs). We investigated two types of aerospace industries (manufacturer and operator). The experimental results show that PCA could improve both the accuracy and efficiency of prediction. Various factors could influence the performance of prediction models, such as finance data, extracted features, optimisation algorithms, and parameters of the prediction model. The selection of features may depend on the stability of historical data: technical features could be the first option when the share price is stable, whereas fundamental features could be better when the share price fluctuates strongly. The delays of the RNN also depend on the stability of historical data for different types of companies. Prediction is more accurate when using short-term historical data for aerospace manufacturers and long-term historical data for aerospace operating airlines. The developed model could serve as an intelligent agent in an automatic stock prediction system, with which the financial industry could make prompt decisions on economic strategies and business activities based on the predicted future share price, thus improving the return on investment. Currently, COVID-19 severely influences aerospace industries. The developed approach can be used to predict the share price of aerospace industries in the post-COVID-19 period.

Nowadays, large-scale foundation models are being increasingly integrated into numerous safety-critical applications, including human-autonomy teaming (HAT) within transportation, medical, and defence domains. Consequently, the inherent 'black-box' nature of these sophisticated deep neural networks heightens the significance of fostering mutual understanding and trust between humans and autonomous systems. To tackle the transparency challenges in HAT, this paper conducts a thoughtful study on the underexplored domain of Explainable Interface (EI) in HAT systems from a human-centric perspective, thereby enriching the existing body of research in Explainable Artificial Intelligence (XAI). We explore the design, development, and evaluation of EI within XAI-enhanced HAT systems. To do so, we first clarify the distinctions between three concepts (EI, explanations, and model explainability), aiming to provide researchers and practitioners with a structured understanding. Second, we contribute a novel framework for EI, addressing the unique challenges in HAT. Last, our summarized evaluation framework for ongoing EI offers a holistic perspective, encompassing model performance, human-centered factors, and group task objectives. Based on extensive surveys across XAI, HAT, psychology, and Human-Computer Interaction (HCI), this review offers multiple novel insights into incorporating XAI into HAT systems and outlines future directions.

An accurate system to study the stability of pipe flow that ensures regularity is presented. The system produces a spectrum that is as accurate as that of Meseguer & Trefethen (2000), while providing flexibility to amend the boundary conditions without a need to modify the formulation. The accuracy is achieved by formulating the state variables to behave as analytic functions. We show that the resulting system retains the regular singularity at the pipe centre with a multiplicity of poles such that the wall boundary conditions are complemented with precisely the needed number of regularity conditions for obtaining unique solutions. In the case of axisymmetric and axially constant perturbations the computed eigenvalues match, to double precision accuracy, the values predicted by the analytical characteristic relations. The derived system is used to obtain the optimal inviscid disturbance pattern, which is found to have a structure similar to that in plane shear flows.