Deakin University

A comprehensive review highlights how Artificial Intelligence, particularly deep learning, can predict various cardiovascular diseases and their risk factors using non-invasive retinal fundus images. It synthesizes a decade of research demonstrating AI's capability to assess demographic, metabolic, and traditional risk factors directly from eye scans.

This paper presents a unified taxonomy for understanding LLM-based agents, categorizing LLM functionalities into three task-agnostic roles and four universal workflows. The work provides a structured analysis of current agent designs, highlighting commonalities, differences, and limitations across tool use, planning, and feedback learning paradigms.

Visual Question Answering (VQA) is an evolving research field aimed at

enabling machines to answer questions about visual content by integrating image

and language processing techniques such as feature extraction, object

detection, text embedding, natural language understanding, and language

generation. With the growth of multimodal data research, VQA has gained

significant attention due to its broad applications, including interactive

educational tools, medical image diagnosis, customer service, entertainment,

and social media captioning. Additionally, VQA plays a vital role in assisting

visually impaired individuals by generating descriptive content from images.

This survey introduces a taxonomy of VQA architectures, categorizing them based

on design choices and key components to facilitate comparative analysis and

evaluation. We review major VQA approaches, focusing on deep learning-based

methods, and explore the emerging field of Large Visual Language Models (LVLMs)

that have demonstrated success in multimodal tasks like VQA. The paper further

examines available datasets and evaluation metrics essential for measuring VQA

system performance, followed by an exploration of real-world VQA applications.

Finally, we highlight ongoing challenges and future directions in VQA research,

presenting open questions and potential areas for further development. This

survey serves as a comprehensive resource for researchers and practitioners

interested in the latest advancements and future

Cross-domain offline reinforcement learning (RL) seeks to enhance sample efficiency in offline RL by utilizing additional offline source datasets. A key challenge is to identify and utilize source samples that are most relevant to the target domain. Existing approaches address this challenge by measuring domain gaps through domain classifiers, target transition dynamics modeling, or mutual information estimation using contrastive loss. However, these methods often require large target datasets, which is impractical in many real-world scenarios. In this work, we address cross-domain offline RL under a limited target data setting, identifying two primary challenges: (1) Dataset imbalance, which is caused by large source and small target datasets and leads to overfitting in neural network-based domain gap estimators, resulting in uninformative measurements; and (2) Partial domain overlap, where only a subset of the source data is closely aligned with the target domain. To overcome these issues, we propose DmC, a novel framework for cross-domain offline RL with limited target samples. Specifically, DmC utilizes k-nearest neighbor (k-NN) based estimation to measure domain proximity without neural network training, effectively mitigating overfitting. Then, by utilizing this domain proximity, we introduce a nearest-neighbor-guided diffusion model to generate additional source samples that are better aligned with the target domain, thus enhancing policy learning with more effective source samples. Through theoretical analysis and extensive experiments in diverse MuJoCo environments, we demonstrate that DmC significantly outperforms state-of-the-art cross-domain offline RL methods, achieving substantial performance gains.

While most generative models show achievements in image data generation, few are developed for tabular data generation. Recently, due to success of large language models (LLM) in diverse tasks, they have also been used for tabular data generation. However, these methods do not capture the correct correlation between the features and the target variable, hindering their applications in downstream predictive tasks. To address this problem, we propose a LLM-based method with three important improvements to correctly capture the ground-truth feature-class correlation in the real data. First, we propose a novel permutation strategy for the input data in the fine-tuning phase. Second, we propose a feature-conditional sampling approach to generate synthetic samples. Finally, we generate the labels by constructing prompts based on the generated samples to query our fine-tuned LLM. Our extensive experiments show that our method significantly outperforms 10 SOTA baselines on 20 datasets in downstream tasks. It also produces highly realistic synthetic samples in terms of quality and diversity. More importantly, classifiers trained with our synthetic data can even compete with classifiers trained with the original data on half of the benchmark datasets, which is a significant achievement in tabular data generation.

University of TorontoUniversity of Stuttgart

University of TorontoUniversity of Stuttgart UC BerkeleyKorea University

UC BerkeleyKorea University National University of SingaporeChonnam National University

National University of SingaporeChonnam National University Stanford UniversityJapan Advanced Institute of Science and Technology

Stanford UniversityJapan Advanced Institute of Science and Technology University of California, San Diego

University of California, San Diego University of Texas at AustinConcordia UniversityMBZUAISingapore University of Technology and DesignDeakin UniversityNew Jersey Institute of TechnologyKnovel Engineering LabAuburn UniversityGerman Research Centre for Artificial IntelligenceOldenburg UniversityJustus Liebig University GiessenCollege of William & MaryBucknell UniversityMax Planck Research School for Intelligent Systems (IMPRS-IS)Hue UniversityUniversity Medical Center G

bottingenUniversity Medical Center G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

ottingen

University of Texas at AustinConcordia UniversityMBZUAISingapore University of Technology and DesignDeakin UniversityNew Jersey Institute of TechnologyKnovel Engineering LabAuburn UniversityGerman Research Centre for Artificial IntelligenceOldenburg UniversityJustus Liebig University GiessenCollege of William & MaryBucknell UniversityMax Planck Research School for Intelligent Systems (IMPRS-IS)Hue UniversityUniversity Medical Center G

bottingenUniversity Medical Center G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

G

ottingenFaithful reasoning in medical vision-language models (VLMs) requires not only accurate predictions but also transparent alignment between textual rationales and visual evidence. While Chain-of-Thought (CoT) prompting has shown promise in medical visual question answering (VQA), no large-scale expert-level dataset has captured stepwise reasoning with precise visual grounding. We introduce S-Chain, the first large-scale dataset of 12,000 expert-annotated medical images with bounding boxes and structured visual CoT (SV-CoT), explicitly linking visual regions to reasoning steps. The dataset further supports 16 languages, totaling over 700k VQA pairs for broad multilingual applicability. Using S-Chain, we benchmark state-of-the-art medical VLMs (ExGra-Med, LLaVA-Med) and general-purpose VLMs (Qwen2.5-VL, InternVL2.5), showing that SV-CoT supervision significantly improves interpretability, grounding fidelity, and robustness. Beyond benchmarking, we study its synergy with retrieval-augmented generation, revealing how domain knowledge and visual grounding interact during autoregressive reasoning. Finally, we propose a new mechanism that strengthens the alignment between visual evidence and reasoning, improving both reliability and efficiency. S-Chain establishes a new benchmark for grounded medical reasoning and paves the way toward more trustworthy and explainable medical VLMs.

Contrastive vision-language models excel in zero-shot image recognition but face challenges in few-shot scenarios due to computationally intensive offline fine-tuning using prompt learning, which risks overfitting. To overcome these limitations, we propose Attn-Adapter, a novel online few-shot learning framework that enhances CLIP's adaptability via a dual attention mechanism. Our design incorporates dataset-specific information through two components: the Memory Attn-Adapter, which refines category embeddings using support examples, and the Local-Global Attn-Adapter, which enriches image embeddings by integrating local and global features. This architecture enables dynamic adaptation from a few labeled samples without retraining the base model. Attn-Adapter outperforms state-of-the-art methods in cross-category and cross-dataset generalization, maintaining efficient inference and scaling across CLIP backbones.

Research interest in autonomous agents is on the rise as an emerging topic.

The notable achievements of Large Language Models (LLMs) have demonstrated the

considerable potential to attain human-like intelligence in autonomous agents.

However, the challenge lies in enabling these agents to learn, reason, and

navigate uncertainties in dynamic environments. Context awareness emerges as a

pivotal element in fortifying multi-agent systems when dealing with dynamic

situations. Despite existing research focusing on both context-aware systems

and multi-agent systems, there is a lack of comprehensive surveys outlining

techniques for integrating context-aware systems with multi-agent systems. To

address this gap, this survey provides a comprehensive overview of

state-of-the-art context-aware multi-agent systems. First, we outline the

properties of both context-aware systems and multi-agent systems that

facilitate integration between these systems. Subsequently, we propose a

general process for context-aware systems, with each phase of the process

encompassing diverse approaches drawn from various application domains such as

collision avoidance in autonomous driving, disaster relief management, utility

management, supply chain management, human-AI interaction, and others. Finally,

we discuss the existing challenges of context-aware multi-agent systems and

provide future research directions in this field.

SuperRAG, developed by Cinnamon AI, Hung Yen University of Technology and Education, and Deakin University, improves Retrieval-Augmented Generation for complex, multimodal documents by representing their layout and structure as a property graph. This approach leads to more accurate information retrieval and answer generation, outperforming traditional RAG systems on benchmark datasets like DOCBENCH and SPIQA.

Deakin University researchers analyze 3 million text samples generated by 12 different LLMs across 5,000 prompts, revealing distinct patterns in output similarity, stylistic markers, and bias levels while demonstrating that proprietary models produce more consistent outputs than open-source alternatives and certain models like Gemma-7B show reduced gender and racial bias compared to others.

Deakin University researchers develop Memory-R+, a memory-augmented reinforcement learning framework that enables small language models (≤1B parameters) to achieve improved reasoning capabilities through episodic memory storage and intrinsic motivation mechanisms, making advanced language model capabilities more accessible while reducing computational requirements.

08 Jul 2024

This paper presents a comprehensive review of differentiable physics simulators, detailing their foundational components for gradient computation, dynamics and contact modeling, and integration schemes. It surveys current open-source tools and prominent applications, illustrating how these simulators enable efficient gradient-based optimization across scientific and engineering domains like robotics and computational physics.

Video event detection has become a cornerstone of modern sports analytics, powering automated performance evaluation, content generation, and tactical decision-making. Recent advances in deep learning have driven progress in related tasks such as Temporal Action Localization (TAL), which detects extended action segments; Action Spotting (AS), which identifies a representative timestamp; and Precise Event Spotting (PES), which pinpoints the exact frame of an event. Although closely connected, their subtle differences often blur the boundaries between them, leading to confusion in both research and practical applications. Furthermore, prior surveys either address generic video event detection or broader sports video tasks, but largely overlook the unique temporal granularity and domain-specific challenges of event spotting. In addition, most existing sports video surveys focus on elite-level competitions while neglecting the wider community of everyday practitioners. This survey addresses these gaps by: (i) clearly delineating TAL, AS, and PES and their respective use cases; (ii) introducing a structured taxonomy of state of the art approaches including temporal modeling strategies, multimodal frameworks, and data-efficient pipelines tailored for AS and PES; and (iii) critically assessing benchmark datasets and evaluation protocols, highlighting limitations such as reliance on broadcast quality footage and metrics that over reward permissive multilabel predictions. By synthesizing current research and exposing open challenges, this work provides a comprehensive foundation for developing temporally precise, generalizable, and practically deployable sports event detection systems for both the research and industry communities.

In recent years, the abuse of a face swap technique called deepfake has

raised enormous public concerns. So far, a large number of deepfake videos

(known as "deepfakes") have been crafted and uploaded to the internet, calling

for effective countermeasures. One promising countermeasure against deepfakes

is deepfake detection. Several deepfake datasets have been released to support

the training and testing of deepfake detectors, such as DeepfakeDetection and

FaceForensics++. While this has greatly advanced deepfake detection, most of

the real videos in these datasets are filmed with a few volunteer actors in

limited scenes, and the fake videos are crafted by researchers using a few

popular deepfake softwares. Detectors developed on these datasets may become

less effective against real-world deepfakes on the internet. To better support

detection against real-world deepfakes, in this paper, we introduce a new

dataset WildDeepfake which consists of 7,314 face sequences extracted from 707

deepfake videos collected completely from the internet. WildDeepfake is a small

dataset that can be used, in addition to existing datasets, to develop and test

the effectiveness of deepfake detectors against real-world deepfakes. We

conduct a systematic evaluation of a set of baseline detection networks on both

existing and our WildDeepfake datasets, and show that WildDeepfake is indeed a

more challenging dataset, where the detection performance can decrease

drastically. We also propose two (eg. 2D and 3D) Attention-based Deepfake

Detection Networks (ADDNets) to leverage the attention masks on real/fake faces

for improved detection. We empirically verify the effectiveness of ADDNets on

both existing datasets and WildDeepfake. The dataset is available at:

https://github.com/OpenTAI/wild-deepfake.

MissHDD introduces a hybrid deterministic diffusion framework for heterogeneous incomplete tabular data, employing a two-channel architecture that handles numerical and categorical features distinctly. This approach achieves consistently lower imputation errors and maintains downstream task performance, while delivering up to a 5x inference speed-up and enhancing imputation stability compared to existing stochastic diffusion models.

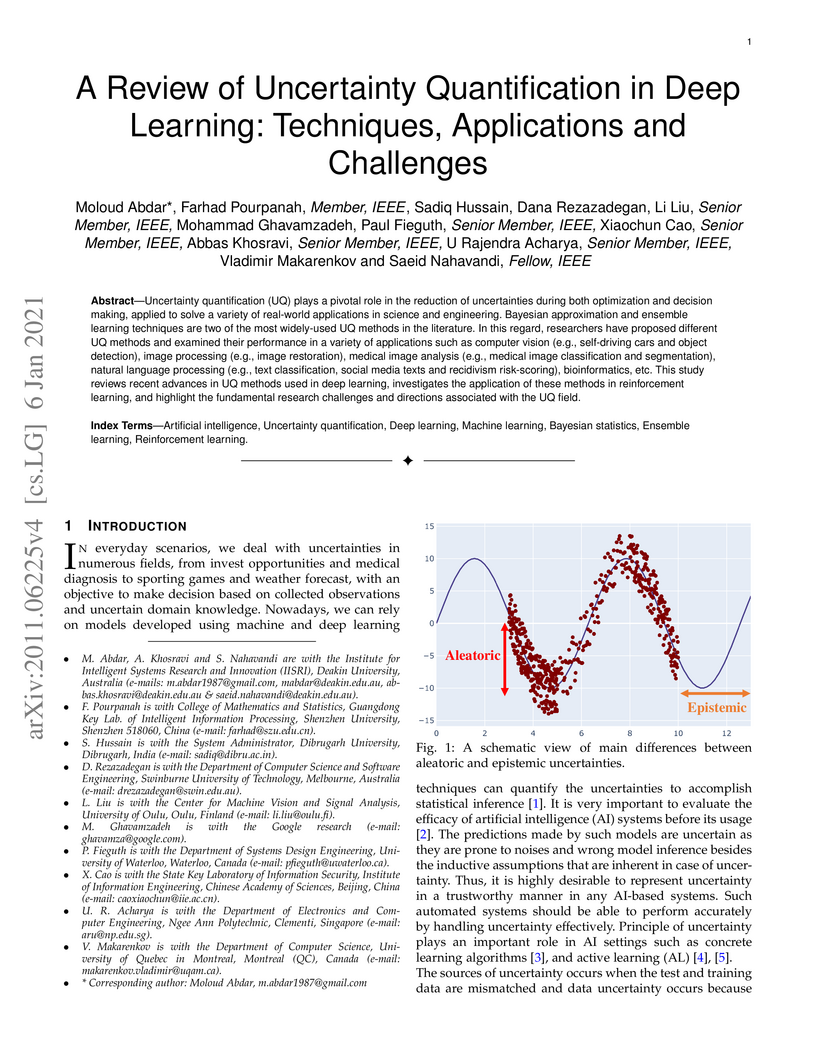

Uncertainty quantification (UQ) plays a pivotal role in reduction of uncertainties during both optimization and decision making processes. It can be applied to solve a variety of real-world applications in science and engineering. Bayesian approximation and ensemble learning techniques are two most widely-used UQ methods in the literature. In this regard, researchers have proposed different UQ methods and examined their performance in a variety of applications such as computer vision (e.g., self-driving cars and object detection), image processing (e.g., image restoration), medical image analysis (e.g., medical image classification and segmentation), natural language processing (e.g., text classification, social media texts and recidivism risk-scoring), bioinformatics, etc. This study reviews recent advances in UQ methods used in deep learning. Moreover, we also investigate the application of these methods in reinforcement learning (RL). Then, we outline a few important applications of UQ methods. Finally, we briefly highlight the fundamental research challenges faced by UQ methods and discuss the future research directions in this field.

Knowledge graph completion (KGC) can predict missing links and is crucial for real-world knowledge graphs, which widely suffer from incompleteness. KGC methods assume a knowledge graph is static, but that may lead to inaccurate prediction results because many facts in the knowledge graphs change over time. Recently, emerging methods have shown improved predictive results by further incorporating the timestamps of facts; namely, temporal knowledge graph completion (TKGC). With this temporal information, TKGC methods can learn the dynamic evolution of the knowledge graph that KGC methods fail to capture. In this paper, for the first time, we summarize the recent advances in TKGC research. First, we detail the background of TKGC, including the problem definition, benchmark datasets, and evaluation metrics. Then, we summarize existing TKGC methods based on how timestamps of facts are used to capture the temporal dynamics. Finally, we conclude the paper and present future research directions of TKGC.

This paper presents a comprehensive survey of Physics-Informed Medical Image Analysis (PIMIA), providing a unified taxonomy for how physics knowledge is incorporated and quantitatively assessing the performance enhancements from integrating physics into various medical imaging tasks. It delineates current research trends, challenges, and future directions in this rapidly growing field.

02 Mar 2025

Researchers at Fudan University and collaborators introduce HUMAN SIMULACRA, a psychology-guided benchmark and methodology to enhance large language model personification, aiming for their use as substitutes for human participants in social science experiments. The work constructs virtual characters with detailed life stories and proposes a Multi-Agent Cognitive Mechanism (MACM) for more consistent, personality-aligned responses, achieving up to 88.00% in self-reports and 77.50% in observer reports on GPT-4-Turbo.

Referring Medical Image Sequence Segmentation (Ref-MISS) is a novel and

challenging task that aims to segment anatomical structures in medical image

sequences (\emph{e.g.} endoscopy, ultrasound, CT, and MRI) based on natural

language descriptions. This task holds significant clinical potential and

offers a user-friendly advancement in medical imaging interpretation. Existing

2D and 3D segmentation models struggle to explicitly track objects of interest

across medical image sequences, and lack support for nteractive, text-driven

guidance. To address these limitations, we propose Text-Promptable Propagation

(TPP), a model designed for referring medical image sequence segmentation. TPP

captures the intrinsic relationships among sequential images along with their

associated textual descriptions. Specifically, it enables the recognition of

referred objects through cross-modal referring interaction, and maintains

continuous tracking across the sequence via Transformer-based triple

propagation, using text embeddings as queries. To support this task, we curate

a large-scale benchmark, Ref-MISS-Bench, which covers 4 imaging modalities and

20 different organs and lesions. Experimental results on this benchmark

demonstrate that TPP consistently outperforms state-of-the-art methods in both

medical segmentation and referring video object segmentation.

There are no more papers matching your filters at the moment.