Democritus University of Thrace

The visual examination of tissue biopsy sections is fundamental for cancer diagnosis, with pathologists analyzing sections at multiple magnifications to discern tumor cells and their subtypes. However, existing attention-based multiple instance learning (MIL) models used for analyzing Whole Slide Images (WSIs) in cancer diagnostics often overlook the contextual information of tumor and neighboring tiles, leading to misclassifications. To address this, we propose the Context-Aware Multiple Instance Learning (CAMIL) architecture. CAMIL incorporates neighbor-constrained attention to consider dependencies among tiles within a WSI and integrates contextual constraints as prior knowledge into the MIL model. We evaluated CAMIL on subtyping non-small cell lung cancer (TCGA-NSCLC) and detecting lymph node (CAMELYON16 and CAMELYON17) metastasis, achieving test AUCs of 97.5\%, 95.9\%, and 88.1\%, respectively, outperforming other state-of-the-art methods. Additionally, CAMIL enhances model interpretability by identifying regions of high diagnostic value.

Attention is a core operation in numerous machine learning and artificial intelligence models. This work focuses on the acceleration of attention kernel using FlashAttention algorithm, in vector processors, particularly those based on the RISC-V instruction set architecture (ISA). This work represents the first effort to vectorize FlashAttention, minimizing scalar code and simplifying the computational complexity of evaluating exponentials needed by softmax used in attention. By utilizing a low-cost approximation for exponentials in floating-point arithmetic, we reduce the cost of computing the exponential function without the need to extend baseline vector ISA with new custom instructions. Also, appropriate tiling strategies are explored with the goal to improve memory locality. Experimental results highlight the scalability of our approach, demonstrating significant performance gains with the vectorized implementations when processing attention layers in practical applications.

08 Mar 2025

Accurate hand pose estimation is vital in robotics, advancing dexterous

manipulation in human-computer interaction. Toward this goal, this paper

presents ReJSHand (which stands for Refined Joint and Skeleton Features), a

cutting-edge network formulated for real-time hand pose estimation and mesh

reconstruction. The proposed framework is designed to accurately predict 3D

hand gestures under real-time constraints, which is essential for systems that

demand agile and responsive hand motion tracking. The network's design

prioritizes computational efficiency without compromising accuracy, a

prerequisite for instantaneous robotic interactions. Specifically, ReJSHand

comprises a 2D keypoint generator, a 3D keypoint generator, an expansion block,

and a feature interaction block for meticulously reconstructing 3D hand poses

from 2D imagery. In addition, the multi-head self-attention mechanism and a

coordinate attention layer enhance feature representation, streamlining the

creation of hand mesh vertices through sophisticated feature mapping and linear

transformation. Regarding performance, comprehensive evaluations on the

FreiHand dataset demonstrate ReJSHand's computational prowess. It achieves a

frame rate of 72 frames per second while maintaining a PA-MPJPE

(Position-Accurate Mean Per Joint Position Error) of 6.3 mm and a PA-MPVPE

(Position-Accurate Mean Per Vertex Position Error) of 6.4 mm. Moreover, our

model reaches scores of 0.756 for F@05 and 0.984 for F@15, surpassing modern

pipelines and solidifying its position at the forefront of robotic hand pose

estimators. To facilitate future studies, we provide our source code at

~\url{this https URL}.

17 Jul 2025

The Number Theoretic Transform (NTT) is a fundamental operation in privacy-preserving technologies, particularly within fully homomorphic encryption (FHE). The efficiency of NTT computation directly impacts the overall performance of FHE, making hardware acceleration a critical technology that will enable realistic FHE applications. Custom accelerators, in FPGAs or ASICs, offer significant performance advantages due to their ability to exploit massive parallelism and specialized optimizations. However, the operation of NTT over large moduli requires large word-length modulo arithmetic that limits achievable clock frequencies in hardware and increases hardware area costs. To overcome such deficits, digit-serial arithmetic has been explored for modular multiplication and addition independently. The goal of this work is to leverage digit-serial modulo arithmetic combined with appropriate redundant data representation to design modular pipelined NTT accelerators that operate uniformly on arbitrary small digits, without the need for intermediate (de)serialization. The proposed architecture enables high clock frequencies through regular pipelining while maintaining parallelism. Experimental results demonstrate that the proposed approach outperforms state-of-the-art implementations and reduces hardware complexity under equal performance and input-output bandwidth constraints.

17 Jan 2025

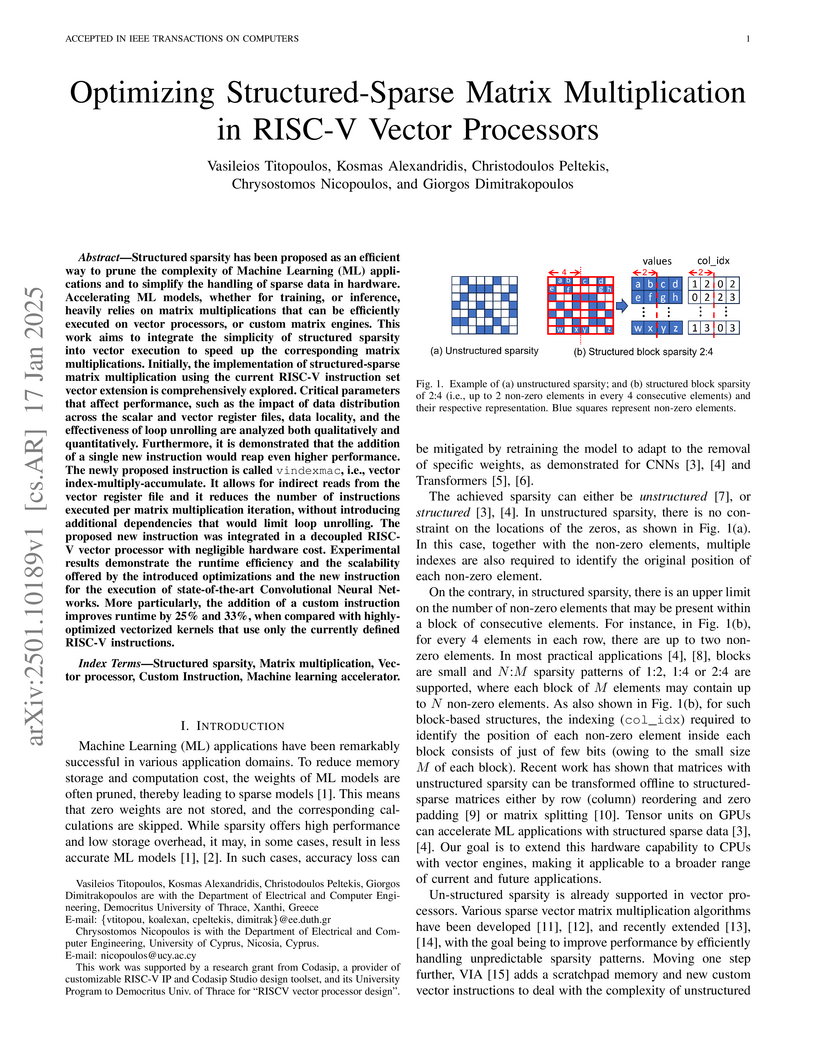

Structured sparsity has been proposed as an efficient way to prune the complexity of Machine Learning (ML) applications and to simplify the handling of sparse data in hardware. Accelerating ML models, whether for training, or inference, heavily relies on matrix multiplications that can be efficiently executed on vector processors, or custom matrix engines. This work aims to integrate the simplicity of structured sparsity into vector execution to speed up the corresponding matrix multiplications. Initially, the implementation of structured-sparse matrix multiplication using the current RISC-V instruction set vector extension is comprehensively explored. Critical parameters that affect performance, such as the impact of data distribution across the scalar and vector register files, data locality, and the effectiveness of loop unrolling are analyzed both qualitatively and quantitatively. Furthermore, it is demonstrated that the addition of a single new instruction would reap even higher performance. The newly proposed instruction is called vindexmac, i.e., vector index-multiply-accumulate. It allows for indirect reads from the vector register file and it reduces the number of instructions executed per matrix multiplication iteration, without introducing additional dependencies that would limit loop unrolling. The proposed new instruction was integrated in a decoupled RISC-V vector processor with negligible hardware cost. Experimental results demonstrate the runtime efficiency and the scalability offered by the introduced optimizations and the new instruction for the execution of state-of-the-art Convolutional Neural Networks. More particularly, the addition of a custom instruction improves runtime by 25% and 33% when compared with highly-optimized vectorized kernels that use only the currently defined RISC-V instructions.

17 Sep 2025

Membership Inference Attacks (MIAs) pose a significant privacy risk, as they enable adversaries to determine whether a specific data point was included in the training dataset of a model. While Machine Unlearning is primarily designed as a privacy mechanism to efficiently remove private data from a machine learning model without the need for full retraining, its impact on the susceptibility of models to MIA remains an open question. In this study, we systematically assess the vulnerability of models to MIA after applying state-of-art Machine Unlearning algorithms. Our analysis spans four diverse datasets (two from the image domain and two in tabular format), exploring how different unlearning approaches influence the exposure of models to membership inference. The findings highlight that while Machine Unlearning is not inherently a countermeasure against MIA, the unlearning algorithm and data characteristics can significantly affect a model's vulnerability. This work provides essential insights into the interplay between Machine Unlearning and MIAs, offering guidance for the design of privacy-preserving machine learning systems.

Clinical trials are a critical component of evaluating the effectiveness of

new medical interventions and driving advancements in medical research.

Therefore, timely enrollment of patients is crucial to prevent delays or

premature termination of trials. In this context, Electronic Health Records

(EHRs) have emerged as a valuable tool for identifying and enrolling eligible

participants. In this study, we propose an automated approach that leverages

ChatGPT, a large language model, to extract patient-related information from

unstructured clinical notes and generate search queries for retrieving

potentially eligible clinical trials. Our empirical evaluation, conducted on

two benchmark retrieval collections, shows improved retrieval performance

compared to existing approaches when several general-purposed and task-specific

prompts are used. Notably, ChatGPT-generated queries also outperform

human-generated queries in terms of retrieval performance. These findings

highlight the potential use of ChatGPT to enhance clinical trial enrollment

while ensuring the quality of medical service and minimizing direct risks to

patients.

Attention mechanisms, particularly within Transformer architectures and large language models (LLMs), have revolutionized sequence modeling in machine learning and artificial intelligence applications. To compute attention for increasingly long sequences, specialized accelerators have been proposed to execute key attention steps directly in hardware. Among the various recently proposed architectures, those based on variants of the FlashAttention algorithm, originally designed for GPUs, stand out due to their optimized computation, tiling capabilities, and reduced memory traffic. In this work, we focus on optimizing the kernel of floating-point-based FlashAttention using new hardware operators that fuse the computation of exponentials and vector multiplications, e.g., e^x, V. The proposed ExpMul hardware operators significantly reduce the area and power costs of FlashAttention-based hardware accelerators. When implemented in a 28nm ASIC technology, they achieve improvements of 28.8% in area and 17.6% in power, on average, compared to state-of-the-art hardware architectures with separate exponentials and vector multiplications hardware operators.

24 Jul 2025

Multi-UAV Coverage Path Planning (mCPP) algorithms in popular commercial software typically treat a Region of Interest (RoI) only as a 2D plane, ignoring important3D structure characteristics. This leads to incomplete 3Dreconstructions, especially around occluded or vertical surfaces. In this paper, we propose a modular algorithm that can extend commercial two-dimensional path planners to facilitate terrain-aware planning by adjusting altitude and camera orientations. To demonstrate it, we extend the well-known DARP (Divide Areas for Optimal Multi-Robot Coverage Path Planning) algorithm and produce DARP-3D. We present simulation results in multiple 3D environments and a real-world flight test using DJI hardware. Compared to baseline, our approach consistently captures improved 3D reconstructions, particularly in areas with significant vertical features. An open-source implementation of the algorithm is available here:this https URL

The increasing demand for efficient resource allocation in mobile networks has catalyzed the exploration of innovative solutions that could enhance the task of real-time cellular traffic prediction. Under these circumstances, federated learning (FL) stands out as a distributed and privacy-preserving solution to foster collaboration among different sites, thus enabling responsive near-the-edge solutions. In this paper, we comprehensively study the potential benefits of FL in telecommunications through a case study on federated traffic forecasting using real-world data from base stations (BSs) in Barcelona (Spain). Our study encompasses relevant aspects within the federated experience, including model aggregation techniques, outlier management, the impact of individual clients, personalized learning, and the integration of exogenous sources of data. The performed evaluation is based on both prediction accuracy and sustainability, thus showcasing the environmental impact of employed FL algorithms in various settings. The findings from our study highlight FL as a promising and robust solution for mobile traffic prediction, emphasizing its twin merits as a privacy-conscious and environmentally sustainable approach, while also demonstrating its capability to overcome data heterogeneity and ensure high-quality predictions, marking a significant stride towards its integration in mobile traffic management systems.

21 Sep 2023

University of Science and Technology of ChinaAristotle University of ThessalonikiUniversity of IoanninaUniversity of PatrasKuwait UniversityDemocritus University of ThraceAstronomical Institute of the Czech Academy of SciencesNational Observatory of AthensEuropean University CyprusAcademy of AthensSPACE ASICS S.A.Institute of Astrophysics, Foundation for Research and Innovation - Hellas* National and Kapodistrian University of Athens

University of Science and Technology of ChinaAristotle University of ThessalonikiUniversity of IoanninaUniversity of PatrasKuwait UniversityDemocritus University of ThraceAstronomical Institute of the Czech Academy of SciencesNational Observatory of AthensEuropean University CyprusAcademy of AthensSPACE ASICS S.A.Institute of Astrophysics, Foundation for Research and Innovation - Hellas* National and Kapodistrian University of AthensThe Laser Interferometer Space Antenna (LISA) mission, scheduled for launch

in the mid-2030s, is a gravitational wave observatory in space designed to

detect sources emitting in the millihertz band. LISA is an ESA flagship

mission, currently entering the Phase B development phase. It is expected to

help us improve our understanding about our Universe by measuring gravitational

wave sources of different types, with some of the sources being at very high

redshifts z∼20. On the 23rd of February 2022 we organized the

1st {\it LISA in Greece Workshop}. This workshop aimed to inform

the Greek scientific and tech industry community about the possibilities of

participating in LISA science and LISA mission, with the support of the

Hellenic Space Center (HSC). In this white paper, we summarize the outcome of

the workshop, the most important aspect of it being the inclusion of 15 Greek

researchers to the LISA Consortium, raising our total number to 22. At the

same time, we present a road-map with the future steps and actions of the Greek

Gravitational Wave community with respect to the future LISA mission.

This paper introduces RobotIQ, a framework that empowers mobile robots with

human-level planning capabilities, enabling seamless communication via natural

language instructions through any Large Language Model. The proposed framework

is designed in the ROS architecture and aims to bridge the gap between humans

and robots, enabling robots to comprehend and execute user-expressed text or

voice commands. Our research encompasses a wide spectrum of robotic tasks,

ranging from fundamental logical, mathematical, and learning reasoning for

transferring knowledge in domains like navigation, manipulation, and object

localization, enabling the application of learned behaviors from simulated

environments to real-world operations. All encapsulated within a modular

crafted robot library suite of API-wise control functions, RobotIQ offers a

fully functional AI-ROS-based toolset that allows researchers to design and

develop their own robotic actions tailored to specific applications and robot

configurations. The effectiveness of the proposed system was tested and

validated both in simulated and real-world experiments focusing on a home

service scenario that included an assistive application designed for elderly

people. RobotIQ with an open-source, easy-to-use, and adaptable robotic library

suite for any robot can be found at this https URL

This paper is an initial endeavor to bridge the gap between powerful Deep Reinforcement Learning methodologies and the problem of exploration/coverage of unknown terrains. Within this scope, MarsExplorer, an openai-gym compatible environment tailored to exploration/coverage of unknown areas, is presented. MarsExplorer translates the original robotics problem into a Reinforcement Learning setup that various off-the-shelf algorithms can tackle. Any learned policy can be straightforwardly applied to a robotic platform without an elaborate simulation model of the robot's dynamics to apply a different learning/adaptation phase. One of its core features is the controllable multi-dimensional procedural generation of terrains, which is the key for producing policies with strong generalization capabilities. Four different state-of-the-art RL algorithms (A3C, PPO, Rainbow, and SAC) are trained on the MarsExplorer environment, and a proper evaluation of their results compared to the average human-level performance is reported. In the follow-up experimental analysis, the effect of the multi-dimensional difficulty setting on the learning capabilities of the best-performing algorithm (PPO) is analyzed. A milestone result is the generation of an exploration policy that follows the Hilbert curve without providing this information to the environment or rewarding directly or indirectly Hilbert-curve-like trajectories. The experimental analysis is concluded by evaluating PPO learned policy algorithm side-by-side with frontier-based exploration strategies. A study on the performance curves revealed that PPO-based policy was capable of performing adaptive-to-the-unknown-terrain sweeping without leaving expensive-to-revisit areas uncovered, underlying the capability of RL-based methodologies to tackle exploration tasks efficiently. The source code can be found at: this https URL.

This paper presents the development of a new class of algorithms that

accurately implement the preferential attachment mechanism of the

Barab\'asi-Albert (BA) model to generate scale-free graphs. Contrary to

existing approximate preferential attachment schemes, our methods are exact in

terms of the proportionality of the vertex selection probabilities to their

degree and run in linear time with respect to the order of the generated graph.

Our algorithms utilize a series of precise, diverse, weighted and unweighted

random sampling steps to engineer the desired properties of the graph

generator. We analytically show that they obey the definition of the original

BA model that generates scale-free graphs and discuss their higher-order

properties. The proposed methods additionally include options to manipulate one

dimension of control over the joint inclusion of groups of vertices.

One of the most prominent attributes of Neural Networks (NNs) constitutes their capability of learning to extract robust and descriptive features from high dimensional data, like images. Hence, such an ability renders their exploitation as feature extractors particularly frequent in an abundant of modern reasoning systems. Their application scope mainly includes complex cascade tasks, like multi-modal recognition and deep Reinforcement Learning (RL). However, NNs induce implicit biases that are difficult to avoid or to deal with and are not met in traditional image descriptors. Moreover, the lack of knowledge for describing the intra-layer properties -- and thus their general behavior -- restricts the further applicability of the extracted features. With the paper at hand, a novel way of visualizing and understanding the vector space before the NNs' output layer is presented, aiming to enlighten the deep feature vectors' properties under classification tasks. Main attention is paid to the nature of overfitting in the feature space and its adverse effect on further exploitation. We present the findings that can be derived from our model's formulation, and we evaluate them on realistic recognition scenarios, proving its prominence by improving the obtained results.

Convolutional Neural Networks (CNNs) are the state-of-the-art solution for

many deep learning applications. For maximum scalability, their computation

should combine high performance and energy efficiency. In practice, the

convolutions of each CNN layer are mapped to a matrix multiplication that

includes all input features and kernels of each layer and is computed using a

systolic array. In this work, we focus on the design of a systolic array with

configurable pipeline with the goal to select an optimal pipeline configuration

for each CNN layer. The proposed systolic array, called ArrayFlex, can operate

in normal, or in shallow pipeline mode, thus balancing the execution time in

cycles and the operating clock frequency. By selecting the appropriate pipeline

configuration per CNN layer, ArrayFlex reduces the inference latency of

state-of-the-art CNNs by 11%, on average, as compared to a traditional

fixed-pipeline systolic array. Most importantly, this result is achieved while

using 13%-23% less power, for the same applications, thus offering a combined

energy-delay-product efficiency between 1.4x and 1.8x.

The purpose of the study is to analyse and compare the most common machine learning and deep learning techniques used for computer vision 2D object classification tasks. Firstly, we will present the theoretical background of the Bag of Visual words model and Deep Convolutional Neural Networks (DCNN). Secondly, we will implement a Bag of Visual Words model, the VGG16 CNN Architecture. Thirdly, we will present our custom and novice DCNN in which we test the aforementioned implementations on a modified version of the Belgium Traffic Sign dataset. Our results showcase the effects of hyperparameters on traditional machine learning and the advantage in terms of accuracy of DCNNs compared to classical machine learning methods. As our tests indicate, our proposed solution can achieve similar - and in some cases better - results than existing DCNNs architectures. Finally, the technical merit of this article lies in the presented computationally simpler DCNN architecture, which we believe can pave the way towards using more efficient architectures for basic tasks.

This paper presents a distributed algorithm applicable to a wide range of

practical multi-robot applications. In such multi-robot applications, the

user-defined objectives of the mission can be cast as a general optimization

problem, without explicit guidelines of the subtasks per different robot. Owing

to the unknown environment, unknown robot dynamics, sensor nonlinearities,

etc., the analytic form of the optimization cost function is not available a

priori. Therefore, standard gradient-descent-like algorithms are not applicable

to these problems. To tackle this, we introduce a new algorithm that carefully

designs each robot's subcost function, the optimization of which can accomplish

the overall team objective. Upon this transformation, we propose a distributed

methodology based on the cognitive-based adaptive optimization (CAO) algorithm,

that is able to approximate the evolution of each robot's cost function and to

adequately optimize its decision variables (robot actions). The latter can be

achieved by online learning only the problem-specific characteristics that

affect the accomplishment of mission objectives. The overall, low-complexity

algorithm can straightforwardly incorporate any kind of operational constraint,

is fault-tolerant, and can appropriately tackle time-varying cost functions. A

cornerstone of this approach is that it shares the same convergence

characteristics as those of block coordinate descent algorithms. The proposed

algorithm is evaluated in three heterogeneous simulation set-ups under multiple

scenarios, against both general-purpose and problem-specific algorithms. Source

code is available at

this https URL

03 Feb 2025

The ongoing electrification of the transportation sector requires the deployment of multiple Electric Vehicle (EV) charging stations across multiple locations. However, the EV charging stations introduce significant cyber-physical and privacy risks, given the presence of vulnerable communication protocols, like the Open Charge Point Protocol (OCPP). Meanwhile, the Federated Learning (FL) paradigm showcases a novel approach for improved intrusion detection results that utilize multiple sources of Internet of Things data, while respecting the confidentiality of private information. This paper proposes the adoption of the FL architecture for the monitoring of the EV charging infrastructure and the detection of cyberattacks against the OCPP 1.6 protocol. The evaluation results showcase high detection performance of the proposed FL-based solution.

08 Aug 2023

Reference-guided DNA sequencing and alignment is an important process in computational molecular biology. The amount of DNA data grows very fast, and many new genomes are waiting to be sequenced while millions of private genomes need to be re-sequenced. Each human genome has 3.2 B base pairs, and each one could be stored with 2 bits of information, so one human genome would take 6.4 B bits or about 760 MB of storage (National Institute of General Medical Sciences). Today most powerful tensor processing units cannot handle the volume of DNA data necessitating a major leap in computing power. It is, therefore, important to investigate the usefulness of quantum computers in genomic data analysis, especially in DNA sequence alignment. Quantum computers are expected to be involved in DNA sequencing, initially as parts of classical systems, acting as quantum accelerators. The number of available qubits is increasing annually, and future quantum computers could conduct DNA sequencing, taking the place of classical computing systems. We present a novel quantum algorithm for reference-guided DNA sequence alignment modeled with gate-based quantum computing. The algorithm is scalable, can be integrated into existing classical DNA sequencing systems and is intentionally structured to limit computational errors. The quantum algorithm has been tested using the quantum processing units and simulators provided by IBM Quantum, and its correctness has been confirmed.

There are no more papers matching your filters at the moment.