Ask or search anything...

the University of Tokyo

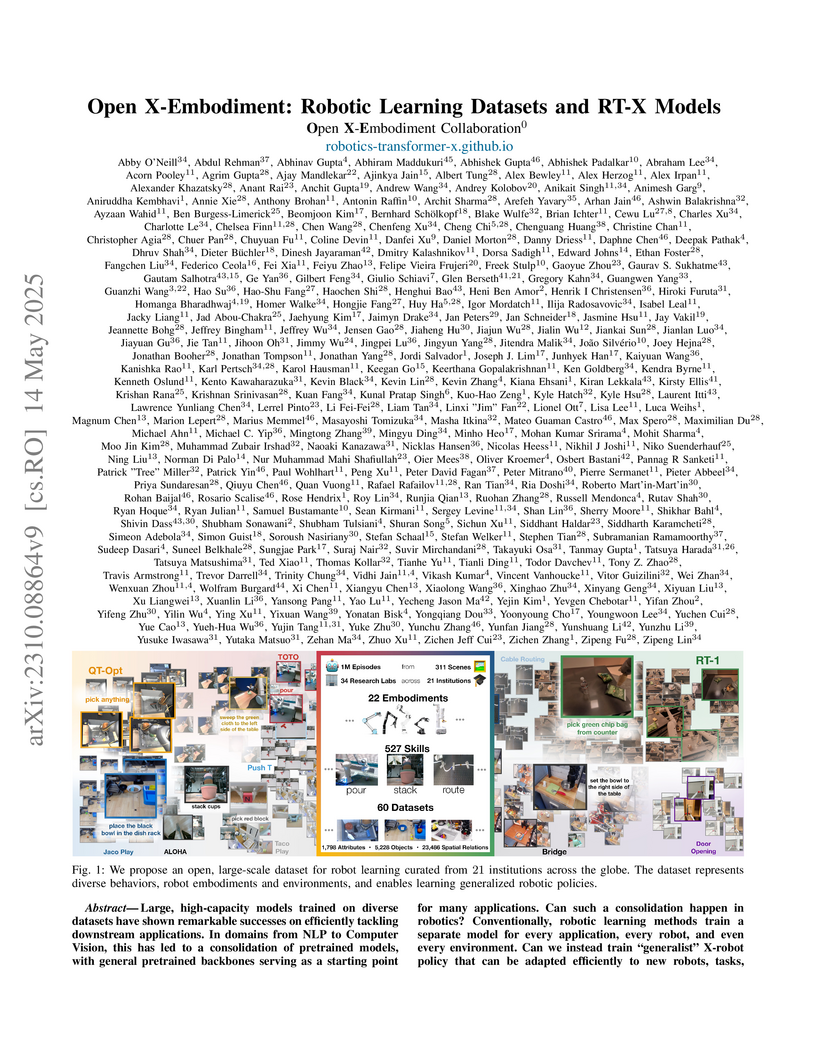

the University of TokyoThe OpenX-Embodiment Collaboration released the Open X-Embodiment (OXE) Dataset, a consolidated collection of over 1 million real robot trajectories from 22 embodiments. This work demonstrates that large RT-X models trained on such diverse data achieve positive transfer and emergent skills across different robot platforms.

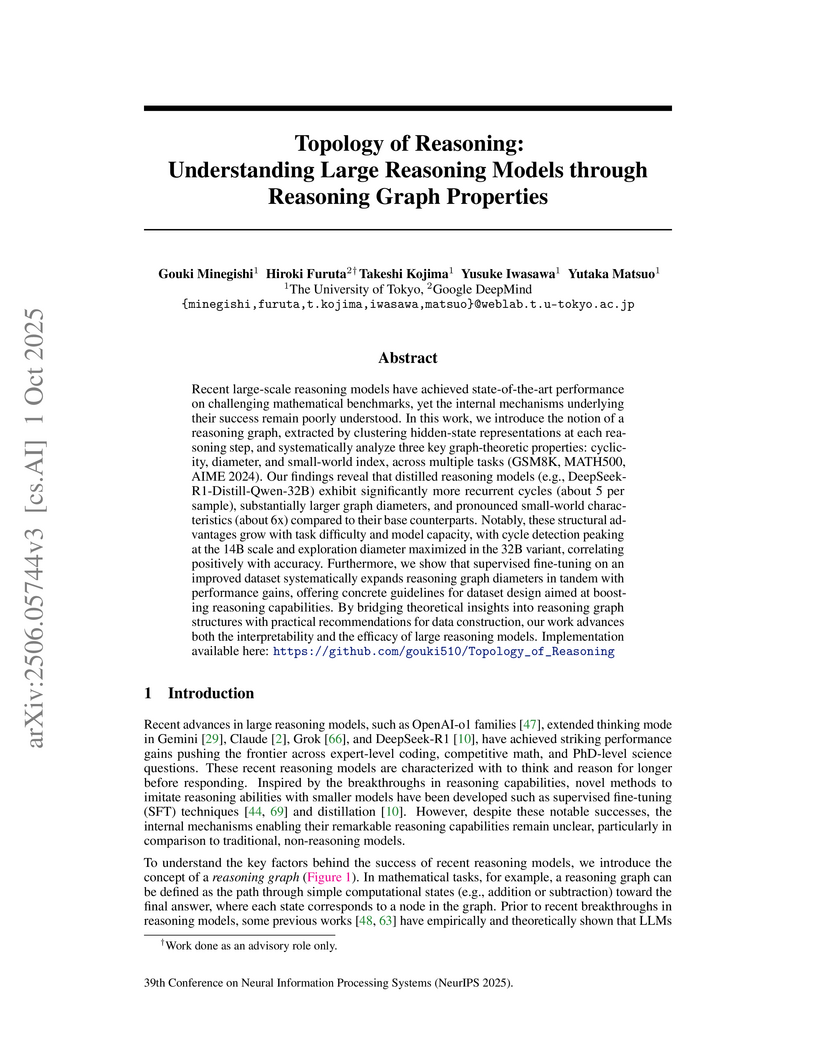

View blogResearchers from The University of Tokyo quantitatively compared reasoning in large language models after Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL), establishing that RL prunes incorrect reasoning paths and concentrates functionality, while SFT expands the diversity of correct trajectories and distributes functional importance. This work provides a mechanistic explanation for the empirical success of combined SFT+RL training strategies in enhancing LLM reasoning.

View blogA simple prompting technique called Zero-shot-CoT, using a generic phrase like "Let's think step by step", enables large language models to perform multi-step reasoning without requiring any task-specific examples. This approach significantly boosts performance on challenging arithmetic and symbolic reasoning tasks, such as improving InstructGPT's accuracy on MultiArith from 17.7% to 78.7% and Coin Flip from 12.8% to 91.4%.

View blogThis survey paper by researchers from Tsinghua University, University of Illinois Chicago, and other institutions provides a comprehensive overview of Retrieval-Augmented Generation (RAG) and reasoning integration in Large Language Models. It maps the evolution of these systems from one-way enhancements to synergistic, iterative frameworks, culminating in agentic RAG-Reasoning systems for complex knowledge-intensive tasks.

View blogResearchers at The University of Tokyo established a general algebraic-topological framework to analyze the entanglement structure of quantum many-body states possessing Rep(G) loop symmetries. This method determines the full entanglement spectrum across arbitrary dimensions and manifold topologies, including a direct verification of the Li-Haldane conjecture for Kitaev quantum double models.

View blogA new comprehensive video dataset named Sekai, curated by Shanghai AI Laboratory, supports advanced world exploration models with over 5,000 hours of videos from real-world and game sources, annotated with explicit camera trajectories and extensive metadata. This data effectively improved model performance in text-to-video, image-to-video, and camera-controlled video generation tasks.

View blogThis paper establishes an exact correspondence between free-fermion systems with quadratic band touching (QBT) and the c=-2 symplectic fermion conformal field theory (CFT). It reveals that gapless QBT systems host anyonic excitations, demonstrating topological ground-state degeneracy and non-diagonalizable spin, which are direct manifestations of logarithmic CFT characteristics.

View blogAn independent analysis of 15 years of Fermi-LAT data identifies a statistically significant halo-like gamma-ray excess in the Milky Way, peaking around 20 GeV. This excess exhibits a spherically symmetric morphology consistent with a smooth NFW profile and remains robust under various systematic checks, providing a new candidate for indirect dark matter detection.

View blogAn analysis of large language models' internal reasoning processes, conducted by researchers at The University of Tokyo and Google DeepMind, reveals that high-performing reasoning models possess unique graph-theoretic properties within their hidden states. These models consistently show higher cyclicity, larger reasoning graph diameters, and pronounced small-world network characteristics, which correlate with enhanced task accuracy and offer insights for designing more effective supervised fine-tuning datasets.

View blogResearchers from Microsoft Research and collaborating institutions developed NaturalSpeech 3, a text-to-speech system that achieves human-level naturalness in zero-shot multi-speaker synthesis. It employs a Factorized Neural Speech Codec (FACodec) to disentangle speech into content, prosody, timbre, and acoustic details, which are then generated sequentially by a Factorized Diffusion Model, enabling precise control and leading to state-of-the-art quality and intelligibility on diverse datasets like LibriSpeech.

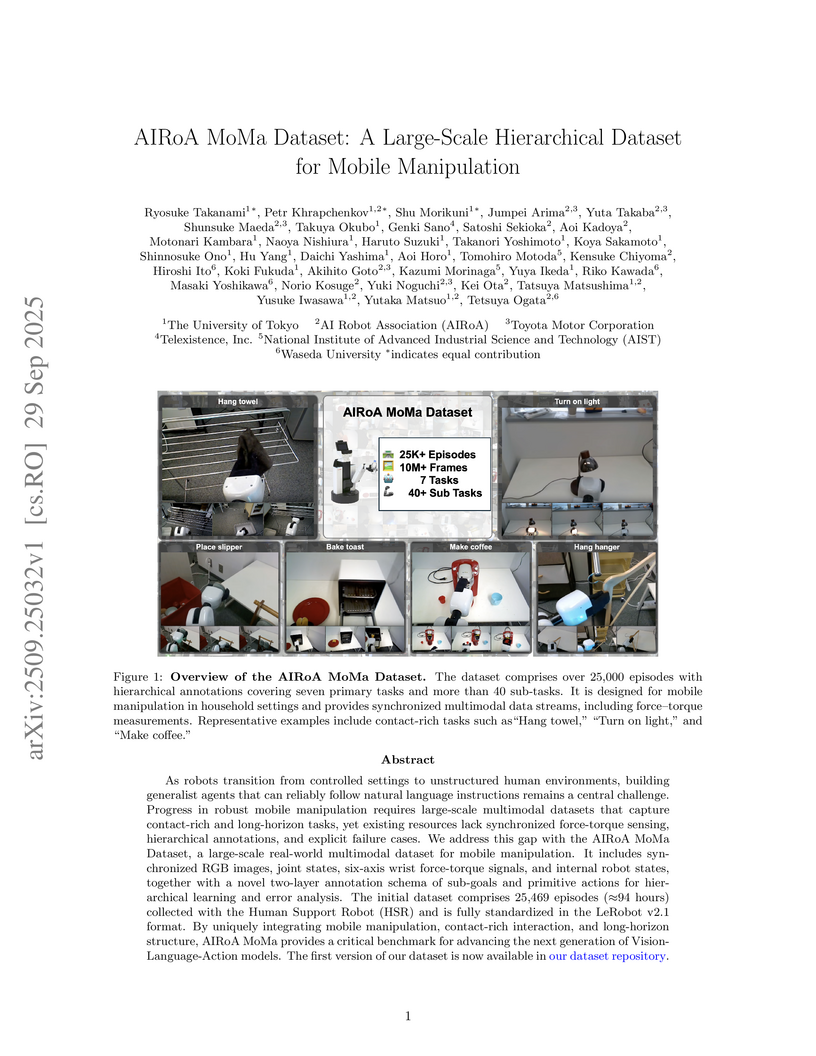

View blogResearchers from The University of Tokyo and the AI Robot Association, in collaboration with industry partners, introduced the AIRoA MoMa Dataset, a large-scale hierarchical dataset with 25,469 episodes (94 hours) of real-robot mobile manipulation data. It provides synchronized multimodal sensor streams, including 6-axis force-torque signals, alongside hierarchical task annotations and explicit failure cases, aiming to accelerate general-purpose robot learning.

View blogThis comprehensive survey systematically reviews current safety research across six major large AI model paradigms and autonomous agents, presenting a detailed taxonomy of 10 attack types and corresponding defense strategies. The review identifies a predominant focus on attack methodologies (60% of papers) over defenses and outlines key open challenges for advancing AI safety.

View blog Georgia Institute of Technology

Georgia Institute of Technology

Google DeepMind

Google DeepMind University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign

University of Cambridge

University of Cambridge

Google Research

Google Research

UCLA

UCLA

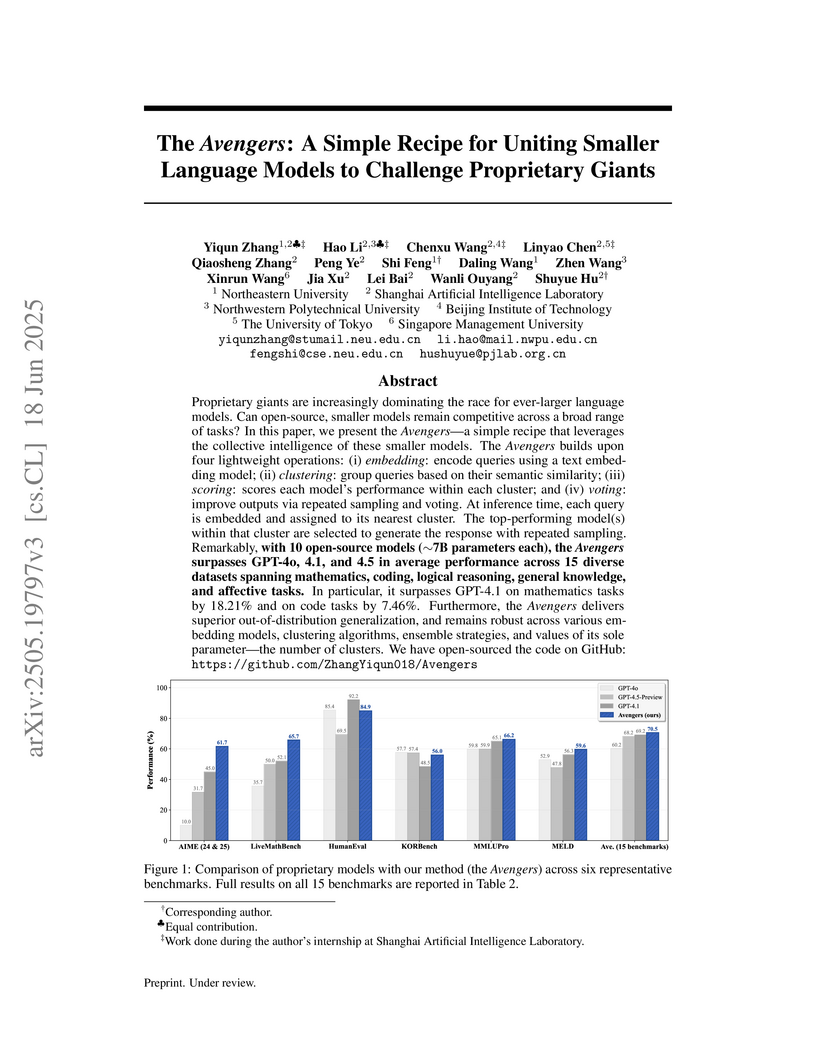

Northeastern University

Northeastern University

University of Science and Technology of China

University of Science and Technology of China

Waseda University

Waseda University

University of Oxford

University of Oxford