ENSTA

As artificial intelligence systems become increasingly integrated into daily life, the field of explainability has gained significant attention. This trend is particularly driven by the complexity of modern AI models and their decision-making processes. The advent of foundation models, characterized by their extensive generalization capabilities and emergent uses, has further complicated this landscape. Foundation models occupy an ambiguous position in the explainability domain: their complexity makes them inherently challenging to interpret, yet they are increasingly leveraged as tools to construct explainable models. In this survey, we explore the intersection of foundation models and eXplainable AI (XAI) in the vision domain. We begin by compiling a comprehensive corpus of papers that bridge these fields. Next, we categorize these works based on their architectural characteristics. We then discuss the challenges faced by current research in integrating XAI within foundation models. Furthermore, we review common evaluation methodologies for these combined approaches. Finally, we present key observations and insights from our survey, offering directions for future research in this rapidly evolving field.

This paper presents a comprehensive survey and conceptual framework for Explainable Artificial Intelligence (XAI). It introduces a novel definition of explainability centered on the audience and purpose, provides detailed taxonomies for XAI techniques including a dedicated one for Deep Learning, and integrates XAI within the broader paradigm of Responsible Artificial Intelligence, addressing its ethical implications.

16 Oct 2025

We study a limiting absorption principle for the boundary-value problem describing a hybrid plasma resonance, with a regular coefficient in the principal part of the operator that vanishes on a curve inside the domain and changes its sign across this curve. We prove the limiting absorption principle by establishing a priori bounds on the solution in certain weighted Sobolev spaces. Next, we show that the solution can be decomposed into regular and singular parts. A peculiar property of this decomposition enables us to introduce a radiation-like condition in a bounded domain and to state a well-posed problem satisfied by the limiting absorption solution.

Deep Neural Networks (DNNs) have demonstrated remarkable performance across various domains, including computer vision and natural language processing. However, they often struggle to accurately quantify the uncertainty of their predictions, limiting their broader adoption in critical real-world applications. Uncertainty Quantification (UQ) for Deep Learning seeks to address this challenge by providing methods to improve the reliability of uncertainty estimates. Although numerous techniques have been proposed, a unified tool offering a seamless workflow to evaluate and integrate these methods remains lacking. To bridge this gap, we introduce Torch-Uncertainty, a PyTorch and Lightning-based framework designed to streamline DNN training and evaluation with UQ techniques and metrics. In this paper, we outline the foundational principles of our library and present comprehensive experimental results that benchmark a diverse set of UQ methods across classification, segmentation, and regression tasks. Our library is available at this https URL

A new framework, BAGEL, developed by researchers at U2IS, ENSTA, Institut Polytechnique de Paris, and EPFL, offers global, concept-based mechanistic interpretability for deep neural networks. It systematically quantifies how high-level semantic concepts are represented across all internal layers and compares dataset biases with model-learned biases, enhancing understanding of model generalization and trustworthiness.

18 Sep 2025

We introduce SamBa-GQW, a novel quantum algorithm for solving binary combinatorial optimization problems of arbitrary degree with no use of any classical optimizer. The algorithm is based on a continuous-time quantum walk on the solution space represented as a graph. The walker explores the solution space to find its way to vertices that minimize the cost function of the optimization problem. The key novelty of our algorithm is an offline classical sampling protocol that gives information about the spectrum of the problem Hamiltonian. Then, the extracted information is used to guide the walker to high quality solutions via a quantum walk with a time-dependent hopping rate. We investigate the performance of SamBa-GQW on several quadratic problems, namely MaxCut, maximum independent set, portfolio optimization, and higher-order polynomial problems such as LABS, MAX-k-SAT and a quartic reformulation of the travelling salesperson problem. We empirically demonstrate that SamBa-GQW finds high quality approximate solutions on problems up to a size of n=20 qubits by only sampling n2 states among 2n possible decisions. SamBa-GQW compares very well also to other guided quantum walks and QAOA.

13 May 2020

Robots are still limited to controlled conditions, that the robot designer knows with enough details to endow the robot with the appropriate models or behaviors. Learning algorithms add some flexibility with the ability to discover the appropriate behavior given either some demonstrations or a reward to guide its exploration with a reinforcement learning algorithm. Reinforcement learning algorithms rely on the definition of state and action spaces that define reachable behaviors. Their adaptation capability critically depends on the representations of these spaces: small and discrete spaces result in fast learning while large and continuous spaces are challenging and either require a long training period or prevent the robot from converging to an appropriate behavior. Beside the operational cycle of policy execution and the learning cycle, which works at a slower time scale to acquire new policies, we introduce the redescription cycle, a third cycle working at an even slower time scale to generate or adapt the required representations to the robot, its environment and the task. We introduce the challenges raised by this cycle and we present DREAM (Deferred Restructuring of Experience in Autonomous Machines), a developmental cognitive architecture to bootstrap this redescription process stage by stage, build new state representations with appropriate motivations, and transfer the acquired knowledge across domains or tasks or even across robots. We describe results obtained so far with this approach and end up with a discussion of the questions it raises in Neuroscience.

RETENTION: Resource-Efficient Tree-Based Ensemble Model Acceleration with Content-Addressable Memory

RETENTION: Resource-Efficient Tree-Based Ensemble Model Acceleration with Content-Addressable Memory

Although deep learning has demonstrated remarkable capabilities in learning from unstructured data, modern tree-based ensemble models remain superior in extracting relevant information and learning from structured datasets. While several efforts have been made to accelerate tree-based models, the inherent characteristics of the models pose significant challenges for conventional accelerators. Recent research leveraging content-addressable memory (CAM) offers a promising solution for accelerating tree-based models, yet existing designs suffer from excessive memory consumption and low utilization. This work addresses these challenges by introducing RETENTION, an end-to-end framework that significantly reduces CAM capacity requirement for tree-based model inference. We propose an iterative pruning algorithm with a novel pruning criterion tailored for bagging-based models (e.g., Random Forest), which minimizes model complexity while ensuring controlled accuracy degradation. Additionally, we present a tree mapping scheme that incorporates two innovative data placement strategies to alleviate the memory redundancy caused by the widespread use of don't care states in CAM. Experimental results show that implementing the tree mapping scheme alone achieves 1.46× to 21.30× better space efficiency, while the full RETENTION framework yields 4.35× to 207.12× improvement with less than 3% accuracy loss. These results demonstrate that RETENTION is highly effective in reducing CAM capacity requirement, providing a resource-efficient direction for tree-based model acceleration.

This research evaluates the capacity of Large Language Models (LLMs) to translate human natural language instructions into the emergent symbolic representations learned by developmental reinforcement learning agents. The study reveals that while LLMs can achieve a surface-level translation, they exhibit limitations in robustly aligning with an agent's true internal symbolic structure, particularly in dynamic environments or with increasing symbolic granularity.

We study data-driven least squares (LS) problems with semidefinite (SD) constraints and derive finite-sample guarantees on the spectrum of their optimal solutions when these constraints are relaxed. In particular, we provide a high confidence bound allowing one to solve a simpler program in place of the full SDLS problem, while ensuring that the eigenvalues of the resulting solution are ε-close of those enforced by the SD constraints. The developed certificate, which consistently shrinks as the number of data increases, turns out to be easy-to-compute, distribution-free, and only requires independent and identically distributed samples. Moreover, when the SDLS is used to learn an unknown quadratic function, we establish bounds on the error between a gradient descent iterate minimizing the surrogate cost obtained with no SD constraints and the true minimizer.

While automatic monitoring and coaching of exercises are showing encouraging

results in non-medical applications, they still have limitations such as errors

and limited use contexts. To allow the development and assessment of physical

rehabilitation by an intelligent tutoring system, we identify in this article

four challenges to address and propose a medical dataset of clinical patients

carrying out low back-pain rehabilitation exercises. The dataset includes 3D

Kinect skeleton positions and orientations, RGB videos, 2D skeleton data, and

medical annotations to assess the correctness, and error classification and

localisation of body part and timespan. Along this dataset, we perform a

complete research path, from data collection to processing, and finally a small

benchmark. We evaluated on the dataset two baseline movement recognition

algorithms, pertaining to two different approaches: the probabilistic approach

with a Gaussian Mixture Model (GMM), and the deep learning approach with a

Long-Short Term Memory (LSTM).

This dataset is valuable because it includes rehabilitation relevant motions

in a clinical setting with patients in their rehabilitation program, using a

cost-effective, portable, and convenient sensor, and because it shows the

potential for improvement on these challenges.

01 Oct 2025

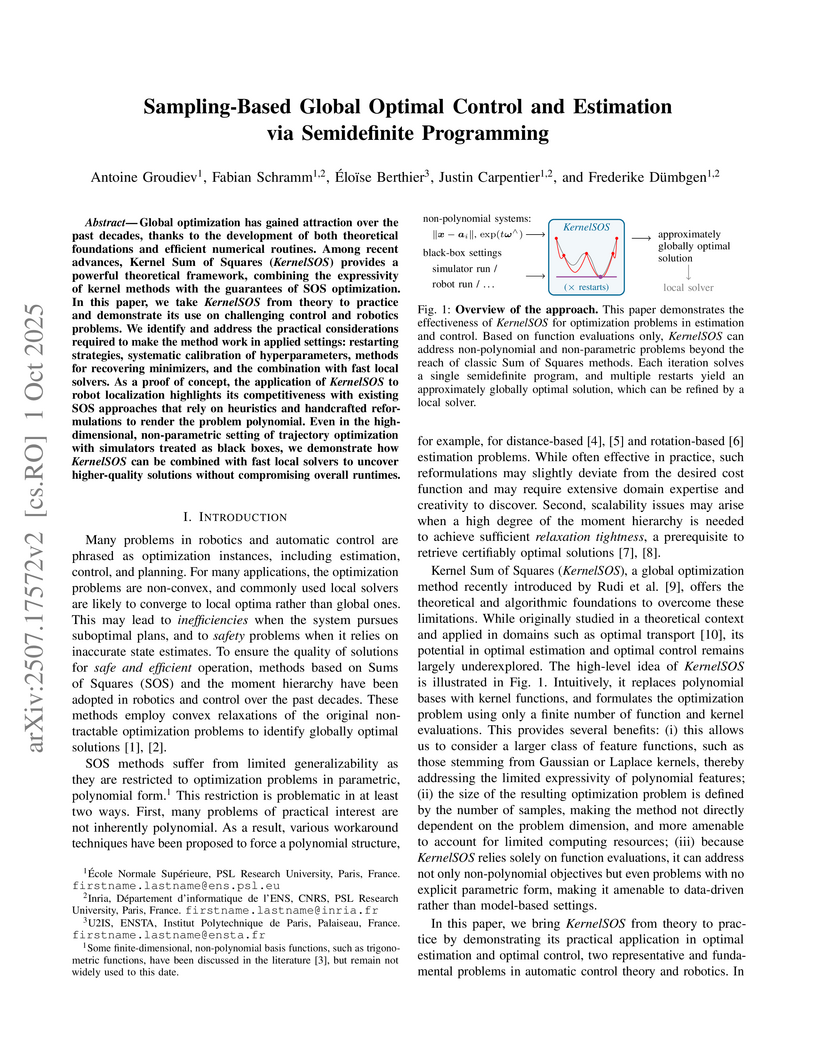

Global optimization has gained attraction over the past decades, thanks to the development of both theoretical foundations and efficient numerical routines. Among recent advances, Kernel Sum of Squares (KernelSOS) provides a powerful theoretical framework, combining the expressivity of kernel methods with the guarantees of SOS optimization. In this paper, we take KernelSOS from theory to practice and demonstrate its use on challenging control and robotics problems. We identify and address the practical considerations required to make the method work in applied settings: restarting strategies, systematic calibration of hyperparameters, methods for recovering minimizers, and the combination with fast local solvers. As a proof of concept, the application of KernelSOS to robot localization highlights its competitiveness with existing SOS approaches that rely on heuristics and handcrafted reformulations to render the problem polynomial. Even in the high-dimensional, non-parametric setting of trajectory optimization with simulators treated as black boxes, we demonstrate how KernelSOS can be combined with fast local solvers to uncover higher-quality solutions without compromising overall runtimes.

26 Sep 2024

The rise of Large Language Models (LLMs) has triggered legal and ethical concerns, especially regarding the unauthorized use of copyrighted materials in their training datasets. This has led to lawsuits against tech companies accused of using protected content without permission. Membership Inference Attacks (MIAs) aim to detect whether specific documents were used in a given LLM pretraining, but their effectiveness is undermined by biases such as time-shifts and n-gram overlaps.

This paper addresses the evaluation of MIAs on LLMs with partially inferable training sets, under the ex-post hypothesis, which acknowledges inherent distributional biases between members and non-members datasets. We propose and validate algorithms to create ``non-biased'' and ``non-classifiable'' datasets for fairer MIA assessment. Experiments using the Gutenberg dataset on OpenLamma and Pythia show that neutralizing known biases alone is insufficient. Our methods produce non-biased ex-post datasets with AUC-ROC scores comparable to those previously obtained on genuinely random datasets, validating our approach. Globally, MIAs yield results close to random, with only one being effective on both random and our datasets, but its performance decreases when bias is removed.

Beam-driven plasma-wakefield acceleration (PWFA) has emerged as a

transformative technology with the potential to revolutionize the field of

particle acceleration, especially toward compact accelerators for high-energy

and high-power applications. Charged particle beams are used to excite density

waves in plasma with accelerating fields reaching up to 100 GV/m, thousands of

times stronger than the fields provided by radio-frequency cavities.

Plasma-wakefield-accelerator research has matured over the span of four decades

from basic concepts and proof-of-principle experiments to a rich and rapidly

progressing sub-field with dedicated experimental facilities and

state-of-the-art simulation codes. We review the physics, including theory of

linear and nonlinear plasma wakefields as well as beam dynamics of both the

wakefield driver and trailing bunches accelerating in the plasma wake, and

address challenges associated with energy efficiency and preservation of beam

quality. Advanced topics such as positron acceleration, self-modulation,

internal injection, long-term plasma evolution and multistage acceleration are

discussed. Simulation codes and major experiments are surveyed, spanning the

use of electron, positron and proton bunches as wakefield drivers. Finally, we

look ahead to future particle colliders and light sources based on plasma

technology.

Image captioning models have been able to generate grammatically correct and

human understandable sentences. However most of the captions convey limited

information as the model used is trained on datasets that do not caption all

possible objects existing in everyday life. Due to this lack of prior

information most of the captions are biased to only a few objects present in

the scene, hence limiting their usage in daily life. In this paper, we attempt

to show the biased nature of the currently existing image captioning models and

present a new image captioning dataset, Egoshots, consisting of 978 real life

images with no captions. We further exploit the state of the art pre-trained

image captioning and object recognition networks to annotate our images and

show the limitations of existing works. Furthermore, in order to evaluate the

quality of the generated captions, we propose a new image captioning metric,

object based Semantic Fidelity (SF). Existing image captioning metrics can

evaluate a caption only in the presence of their corresponding annotations;

however, SF allows evaluating captions generated for images without

annotations, making it highly useful for real life generated captions.

19 Jan 2018

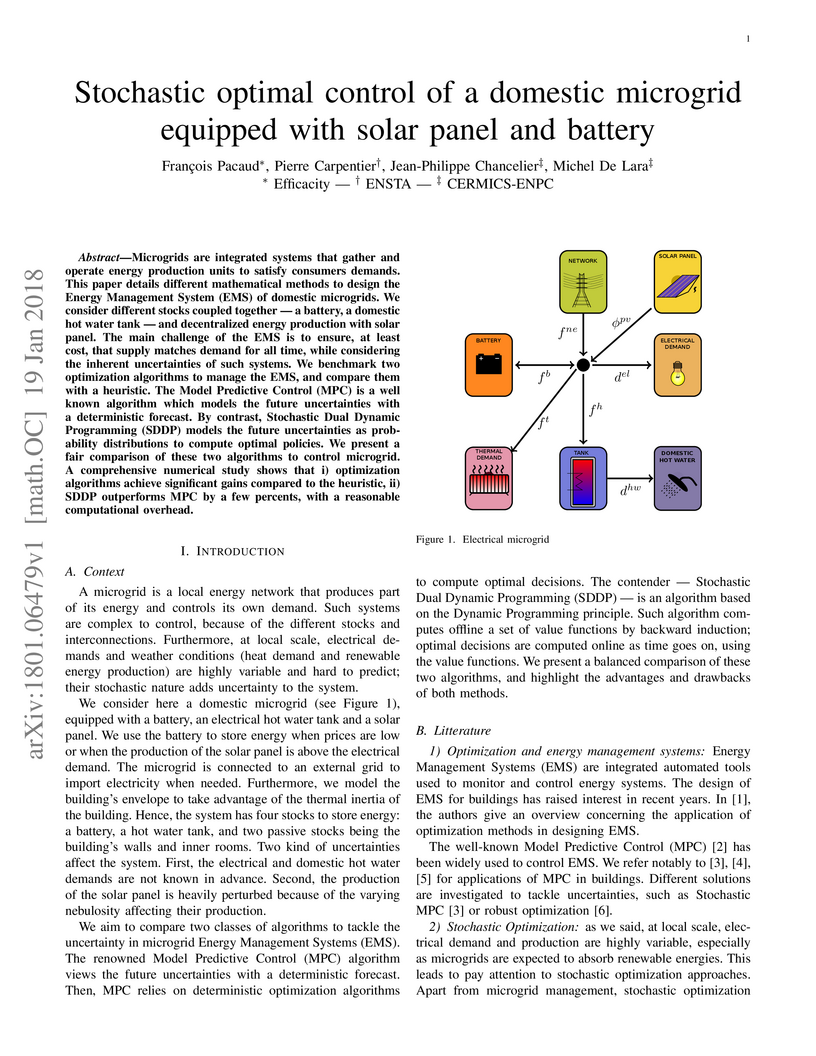

Microgrids are integrated systems that gather and operate energy production

units to satisfy consumers demands. This paper details different mathematical

methods to design the Energy Management System (EMS) of domestic microgrids. We

consider different stocks coupled together - a battery, a domestic hot water

tank - and decentralized energy production with solar panel. The main challenge

of the EMS is to ensure, at least cost, that supply matches demand for all

time, while considering the inherent uncertainties of such systems. We

benchmark two optimization algorithms to manage the EMS, and compare them with

a heuristic. The Model Predictive Control (MPC) is a well known algorithm which

models the future uncertainties with a deterministic forecast. By contrast,

Stochastic Dual Dynamic Programming (SDDP) models the future uncertainties as

probability distributions to compute optimal policies. We present a fair

comparison of these two algorithms to control microgrid. A comprehensive

numerical study shows that i) optimization algorithms achieve significant gains

compared to the heuristic, ii) SDDP outperforms MPC by a few percents, with a

reasonable computational overhead.

This work presents a new approach to the guidance and control of marine craft

via HEOL, i.e., a new way of combining flatness-based and model-free

controllers. Its goal is to develop a general regulator for Unmanned Surface

Vehicles (USV). To do so, the well-known USV maneuvering model is simplified

into a nominal Hovercraft model which is flat. A flatness-based controller is

derived for the simplified USV model and the loop is closed via an intelligent

proportional-derivative (iPD) regulator. We thus associate the well-documented

natural robustness of flatness-based control and adaptivity of iPDs. The

controller is applied in simulation to two surface vessels, one meeting the

simplifying hypotheses, the other one being a generic USV of the literature. It

is shown to stabilize both systems even in the presence of unmodeled

environmental disturbances.

The latest Deep Learning (DL) models for detection and classification have

achieved an unprecedented performance over classical machine learning

algorithms. However, DL models are black-box methods hard to debug, interpret,

and certify. DL alone cannot provide explanations that can be validated by a

non technical audience. In contrast, symbolic AI systems that convert concepts

into rules or symbols -- such as knowledge graphs -- are easier to explain.

However, they present lower generalisation and scaling capabilities. A very

important challenge is to fuse DL representations with expert knowledge. One

way to address this challenge, as well as the performance-explainability

trade-off is by leveraging the best of both streams without obviating domain

expert knowledge. We tackle such problem by considering the symbolic knowledge

is expressed in form of a domain expert knowledge graph. We present the

eXplainable Neural-symbolic learning (X-NeSyL) methodology, designed to learn

both symbolic and deep representations, together with an explainability metric

to assess the level of alignment of machine and human expert explanations. The

ultimate objective is to fuse DL representations with expert domain knowledge

during the learning process to serve as a sound basis for explainability.

X-NeSyL methodology involves the concrete use of two notions of explanation at

inference and training time respectively: 1) EXPLANet: Expert-aligned

eXplainable Part-based cLAssifier NETwork Architecture, a compositional CNN

that makes use of symbolic representations, and 2) SHAP-Backprop, an

explainable AI-informed training procedure that guides the DL process to align

with such symbolic representations in form of knowledge graphs. We showcase

X-NeSyL methodology using MonuMAI dataset for monument facade image

classification, and demonstrate that our approach improves explainability and

performance.

22 May 2025

In this work we study how the convergence rate of GMRES is influenced by the

properties of linear systems arising from Helmholtz problems near resonances or

quasi-resonances. We extend an existing convergence bound to demonstrate that

the approximation of small eigenvalues by harmonic Ritz values plays a key role

in convergence behavior. Next, we analyze the impact of deflation using

carefully selected vectors and combine this with a Complex Shifted Laplacian

preconditioner. Finally, we apply these tools to two numerical examples near

(quasi-)resonant frequencies, using them to explain how the convergence rate

evolves.

18 Jul 2025

We establish improved convergence rates for curved boundary element methods applied to the three-dimensional (3D) Laplace and Helmholtz equations with smooth geometry and data. Our analysis relies on a precise analysis of the consistency errors introduced by the perturbed bilinear and sesquilinear forms. We illustrate our results with numerical experiments in 3D based on basis functions and curved triangular elements up to order four.

There are no more papers matching your filters at the moment.