East China Jiaotong University

SAGE proposes a framework for self-evolving Large Language Model agents by integrating iterative feedback, reflection, and a novel memory optimization system inspired by the Ebbinghaus forgetting curve. This approach improves performance for both proprietary and open-source models, notably enabling Qwen-1.8B to achieve results comparable to GPT-3.5 and reducing memory consumption by nearly 50% in RAG tasks.

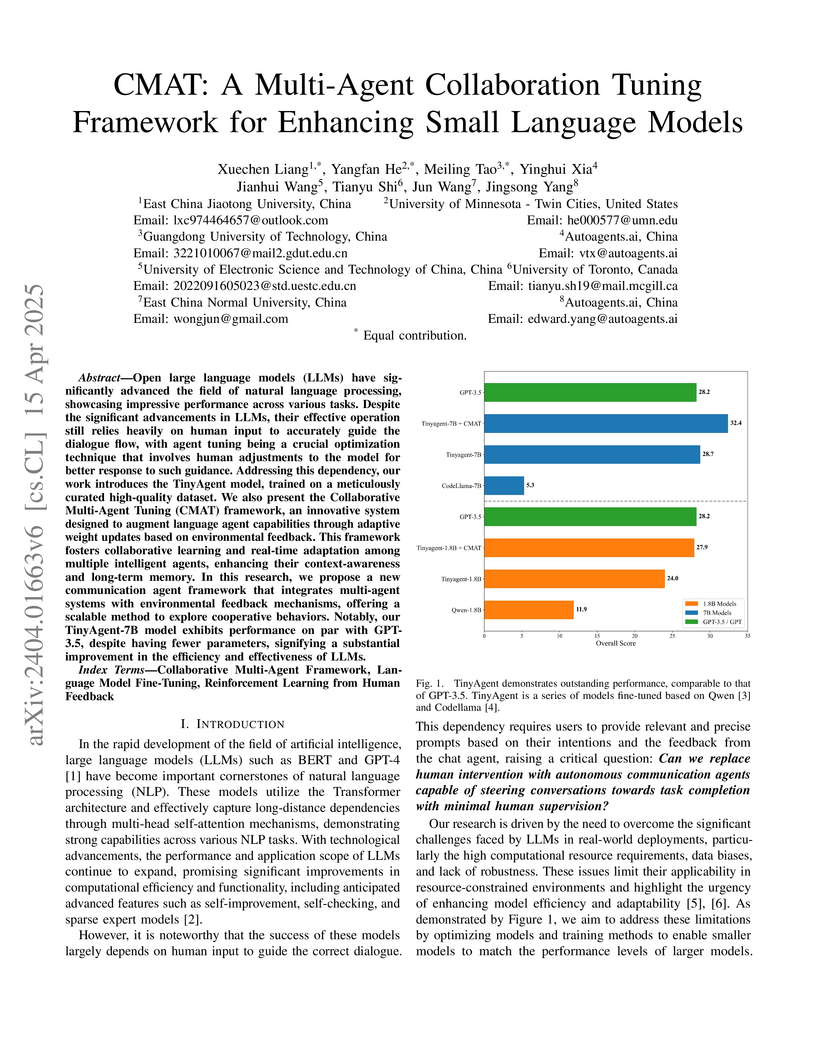

Open large language models (LLMs) have significantly advanced the field of

natural language processing, showcasing impressive performance across various

tasks.Despite the significant advancements in LLMs, their effective operation

still relies heavily on human input to accurately guide the dialogue flow, with

agent tuning being a crucial optimization technique that involves human

adjustments to the model for better response to such guidance.Addressing this

dependency, our work introduces the TinyAgent model, trained on a meticulously

curated high-quality dataset. We also present the Collaborative Multi-Agent

Tuning (CMAT) framework, an innovative system designed to augment language

agent capabilities through adaptive weight updates based on environmental

feedback. This framework fosters collaborative learning and real-time

adaptation among multiple intelligent agents, enhancing their context-awareness

and long-term memory. In this research, we propose a new communication agent

framework that integrates multi-agent systems with environmental feedback

mechanisms, offering a scalable method to explore cooperative behaviors.

Notably, our TinyAgent-7B model exhibits performance on par with GPT-3.5,

despite having fewer parameters, signifying a substantial improvement in the

efficiency and effectiveness of LLMs.

Image deraining is crucial for improving visual quality and supporting reliable downstream vision tasks. Although Mamba-based models provide efficient sequence modeling, their limited ability to capture fine-grained details and lack of frequency-domain awareness restrict further improvements. To address these issues, we propose DeRainMamba, which integrates a Frequency-Aware State-Space Module (FASSM) and Multi-Directional Perception Convolution (MDPConv). FASSM leverages Fourier transform to distinguish rain streaks from high-frequency image details, balancing rain removal and detail preservation. MDPConv further restores local structures by capturing anisotropic gradient features and efficiently fusing multiple convolution branches. Extensive experiments on four public benchmarks demonstrate that DeRainMamba consistently outperforms state-of-the-art methods in PSNR and SSIM, while requiring fewer parameters and lower computational costs. These results validate the effectiveness of combining frequency-domain modeling and spatial detail enhancement within a state-space framework for single image deraining.

14 Sep 2025

This paper investigates the efficacy of quantum computing in two distinct machine learning tasks: feature selection for credit risk assessment and image classification for handwritten digit recognition. For the first task, we address the feature selection challenge of the German Credit Dataset by formulating it as a Quadratic Unconstrained Binary Optimization (QUBO) problem, which is solved using quantum annealing to identify the optimal feature subset. Experimental results show that the resulting credit scoring model maintains high classification precision despite using a minimal number of features. For the second task, we focus on classifying handwritten digits 3 and 6 in the MNIST dataset using Quantum Neural Networks (QNNs). Through meticulous data preprocessing (downsampling, binarization), quantum encoding (FRQI and compressed FRQI), and the design of QNN architectures (CRADL and CRAML), we demonstrate that QNNs can effectively handle high-dimensional image data. Our findings highlight the potential of quantum computing in solving practical machine learning problems while emphasizing the need to balance resource expenditure and model efficacy.

Recently, graph-based and Transformer-based deep learning networks have demonstrated excellent performances on various point cloud tasks. Most of the existing graph methods are based on static graph, which take a fixed input to establish graph relations. Moreover, many graph methods apply maximization and averaging to aggregate neighboring features, so that only a single neighboring point affects the feature of centroid or different neighboring points have the same influence on the centroid's feature, which ignoring the correlation and difference between points. Most Transformer-based methods extract point cloud features based on global attention and lack the feature learning on local neighbors. To solve the problems of these two types of models, we propose a new feature extraction block named Graph Transformer and construct a 3D point point cloud learning network called GTNet to learn features of point clouds on local and global patterns. Graph Transformer integrates the advantages of graph-based and Transformer-based methods, and consists of Local Transformer and Global Transformer modules. Local Transformer uses a dynamic graph to calculate all neighboring point weights by intra-domain cross-attention with dynamically updated graph relations, so that every neighboring point could affect the features of centroid with different weights; Global Transformer enlarges the receptive field of Local Transformer by a global self-attention. In addition, to avoid the disappearance of the gradient caused by the increasing depth of network, we conduct residual connection for centroid features in GTNet; we also adopt the features of centroid and neighbors to generate the local geometric descriptors in Local Transformer to strengthen the local information learning capability of the model. Finally, we use GTNet for shape classification, part segmentation and semantic segmentation tasks in this paper.

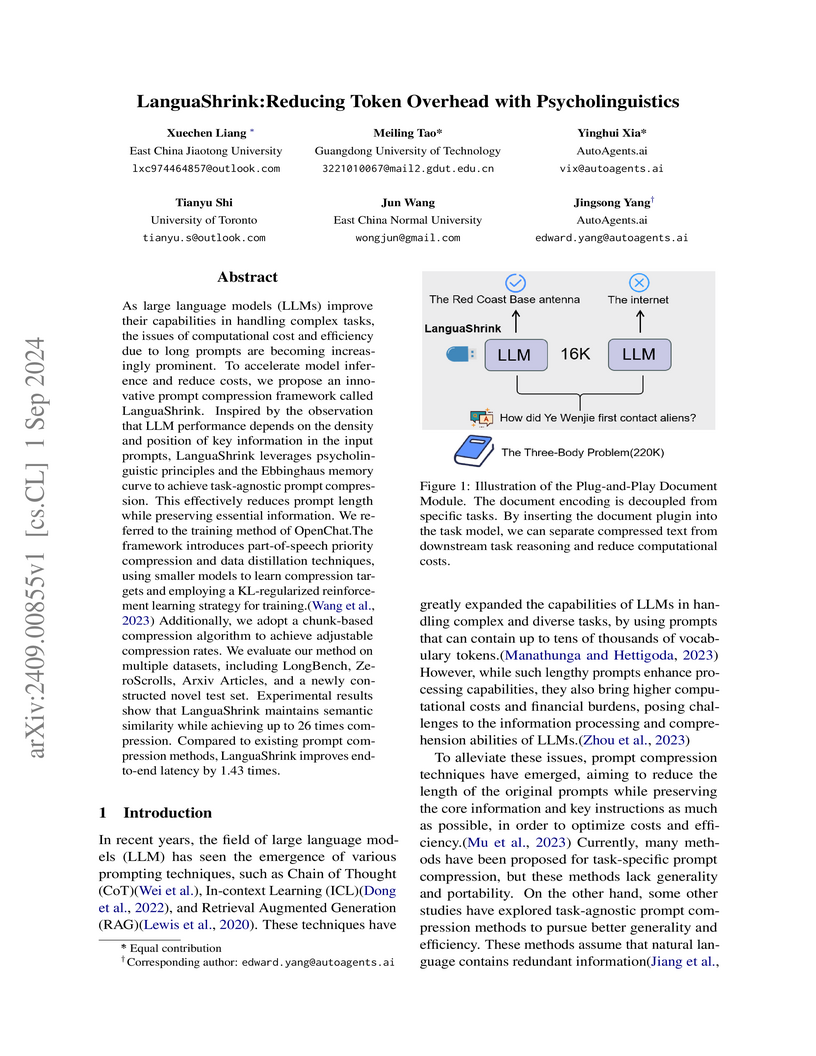

As large language models (LLMs) improve their capabilities in handling complex tasks, the issues of computational cost and efficiency due to long prompts are becoming increasingly prominent. To accelerate model inference and reduce costs, we propose an innovative prompt compression framework called LanguaShrink. Inspired by the observation that LLM performance depends on the density and position of key information in the input prompts, LanguaShrink leverages psycholinguistic principles and the Ebbinghaus memory curve to achieve task-agnostic prompt compression. This effectively reduces prompt length while preserving essential information. We referred to the training method of this http URL framework introduces part-of-speech priority compression and data distillation techniques, using smaller models to learn compression targets and employing a KL-regularized reinforcement learning strategy for training.\cite{wang2023openchat} Additionally, we adopt a chunk-based compression algorithm to achieve adjustable compression rates. We evaluate our method on multiple datasets, including LongBench, ZeroScrolls, Arxiv Articles, and a newly constructed novel test set. Experimental results show that LanguaShrink maintains semantic similarity while achieving up to 26 times compression. Compared to existing prompt compression methods, LanguaShrink improves end-to-end latency by 1.43 times.

Recently, machine learning techniques, particularly deep learning, have

demonstrated superior performance over traditional time series forecasting

methods across various applications, including both single-variable and

multi-variable predictions. This study aims to investigate the capability of i)

Next Generation Reservoir Computing (NG-RC) ii) Reservoir Computing (RC) iii)

Long short-term Memory (LSTM) for predicting chaotic system behavior, and to

compare their performance in terms of accuracy, efficiency, and robustness.

These methods are applied to predict time series obtained from four

representative chaotic systems including Lorenz, R\"ossler, Chen, Qi systems.

In conclusion, we found that NG-RC is more computationally efficient and offers

greater potential for predicting chaotic system behavior.

Researchers developed a modular framework combining Large Language Models with Reinforcement Learning and Theory of Mind to generate sophisticated commentary for the imperfect information card game Guandan. This framework significantly outperformed commercial models such as GPT-4 in human evaluations, demonstrating superior consistency and fluency.

Expressway traffic congestion severely reduces travel efficiency and hinders regional connectivity. Existing "detection-prediction" systems have critical flaws: low vehicle perception accuracy under occlusion and loss of long-sequence dependencies in congestion forecasting. This study proposes an integrated technical framework to resolve these this http URL traffic flow perception, two baseline algorithms were optimized. Traditional YOLOv11 was upgraded to YOLOv11-DIoU by replacing GIoU Loss with DIoU Loss, and DeepSort was improved by fusing Mahalanobis (motion) and cosine (appearance) distances. Experiments on Chang-Shen Expressway videos showed YOLOv11-DIoU achieved 95.7\% mAP (6.5 percentage points higher than baseline) with 5.3\% occlusion miss rate. DeepSort reached 93.8\% MOTA (11.3 percentage points higher than SORT) with only 4 ID switches. Using the Greenberg model (for 10-15 vehicles/km high-density scenarios), speed and density showed a strong negative correlation (r=-0.97), conforming to traffic flow theory. For congestion warning, a GRU-Attention model was built to capture congestion precursors. Trained 300 epochs with flow, density, and speed, it achieved 99.7\% test accuracy (7-9 percentage points higher than traditional GRU). In 10-minute advance warnings for 30-minute congestion, time error was ≤ 1 minute. Validation with an independent video showed 95\% warning accuracy, over 90\% spatial overlap of congestion points, and stable performance in high-flow (>5 vehicles/second) this http URL framework provides quantitative support for expressway congestion control, with promising intelligent transportation applications.

11 Mar 2023

Ultra-dense networks are widely regarded as a promising solution to explosively growing applications of Internet-of-Things (IoT) mobile devices (IMDs). However, complicated and severe interferences need to be tackled properly in such networks. To this end, both orthogonal multiple access (OMA) and non-orthogonal multiple access (NOMA) are utilized at first. Then, in order to attain a goal of green and secure computation offloading, under the proportional allocation of computational resources and the constraints of latency and security cost, joint device association, channel selection, security service assignment, power control and computation offloading are done for minimizing the overall energy consumed by all IMDs. It is noteworthy that multi-step computation offloading is concentrated to balance the network loads and utilize computing resources fully. Since the finally formulated problem is in a nonlinear mixed-integer form, it may be very difficult to find its closed-form solution. To solve it, an improved whale optimization algorithm (IWOA) is designed. As for this algorithm, the convergence, computational complexity and parallel implementation are analyzed in detail. Simulation results show that the designed algorithm may achieve lower energy consumption than other existing algorithms under the constraints of latency and security cost.

AD-Det: Boosting Object Detection in UAV Images with Focused Small Objects and Balanced Tail Classes

AD-Det: Boosting Object Detection in UAV Images with Focused Small Objects and Balanced Tail Classes

Object detection in Unmanned Aerial Vehicle (UAV) images poses significant

challenges due to complex scale variations and class imbalance among objects.

Existing methods often address these challenges separately, overlooking the

intricate nature of UAV images and the potential synergy between them. In

response, this paper proposes AD-Det, a novel framework employing a coherent

coarse-to-fine strategy that seamlessly integrates two pivotal components:

Adaptive Small Object Enhancement (ASOE) and Dynamic Class-balanced Copy-paste

(DCC). ASOE utilizes a high-resolution feature map to identify and cluster

regions containing small objects. These regions are subsequently enlarged and

processed by a fine-grained detector. On the other hand, DCC conducts

object-level resampling by dynamically pasting tail classes around the cluster

centers obtained by ASOE, main-taining a dynamic memory bank for each tail

class. This approach enables AD-Det to not only extract regions with small

objects for precise detection but also dynamically perform reasonable

resampling for tail-class objects. Consequently, AD-Det enhances the overall

detection performance by addressing the challenges of scale variations and

class imbalance in UAV images through a synergistic and adaptive framework. We

extensively evaluate our approach on two public datasets, i.e., VisDrone and

UAVDT, and demonstrate that AD-Det significantly outperforms existing

competitive alternatives. Notably, AD-Det achieves a 37.5% Average Precision

(AP) on the VisDrone dataset, surpassing its counterparts by at least 3.1%.

This study presents RoleCraft-GLM, an innovative framework aimed at enhancing

personalized role-playing with Large Language Models (LLMs). RoleCraft-GLM

addresses the key issue of lacking personalized interactions in conversational

AI, and offers a solution with detailed and emotionally nuanced character

portrayals. We contribute a unique conversational dataset that shifts from

conventional celebrity-centric characters to diverse, non-celebrity personas,

thus enhancing the realism and complexity of language modeling interactions.

Additionally, our approach includes meticulous character development, ensuring

dialogues are both realistic and emotionally resonant. The effectiveness of

RoleCraft-GLM is validated through various case studies, highlighting its

versatility and skill in different scenarios. Our framework excels in

generating dialogues that accurately reflect characters' personality traits and

emotions, thereby boosting user engagement. In conclusion, RoleCraft-GLM marks

a significant leap in personalized AI interactions, and paves the way for more

authentic and immersive AI-assisted role-playing experiences by enabling more

nuanced and emotionally rich dialogues

Recently, vision transformers have performed well in various computer vision tasks, including voxel 3D reconstruction. However, the windows of the vision transformer are not multi-scale, and there is no connection between the windows, which limits the accuracy of voxel 3D reconstruction. Therefore, we propose a voxel 3D reconstruction network based on shifted window attention. To the best of our knowledge, this is the first work to apply shifted window attention to voxel 3D reconstruction. Experimental results on ShapeNet verify our method achieves SOTA accuracy in single-view reconstruction.

14 Sep 2025

This paper investigates the efficacy of quantum computing in two distinct machine learning tasks: feature selection for credit risk assessment and image classification for handwritten digit recognition. For the first task, we address the feature selection challenge of the German Credit Dataset by formulating it as a Quadratic Unconstrained Binary Optimization (QUBO) problem, which is solved using quantum annealing to identify the optimal feature subset. Experimental results show that the resulting credit scoring model maintains high classification precision despite using a minimal number of features. For the second task, we focus on classifying handwritten digits 3 and 6 in the MNIST dataset using Quantum Neural Networks (QNNs). Through meticulous data preprocessing (downsampling, binarization), quantum encoding (FRQI and compressed FRQI), and the design of QNN architectures (CRADL and CRAML), we demonstrate that QNNs can effectively handle high-dimensional image data. Our findings highlight the potential of quantum computing in solving practical machine learning problems while emphasizing the need to balance resource expenditure and model efficacy.

To solve the problem that the low capacity in hot-spots and coverage holes of

conventional cellular networks, the base stations (BSs) having lower transmit

power are deployed to form heterogeneous cellular networks (HetNets). However,

because of these introduced disparate power BSs, the user distributions among

them looked fairly unbalanced if an appropriate user association scheme hasn't

been provided. For effectively tackling this problem, we jointly consider the

load of each BS and user's achievable rate instead of only utilizing the latter

when designing an association algorithm, and formulate it as a network-wide

weighted utility maximization problem. Note that, the load mentioned above

relates to the amount of required subbands decided by actual rate requirements,

i.e., QoS, but the number of associated users, thus it can reflect user's

actual load level. As for the proposed problem, we give a maximum probability

(max-probability) algorithm by relaxing variables as well as a low-complexity

distributed algorithm with a near-optimal solution that provides a theoretical

performance guarantee. Experimental results show that, compared with the

association strategy advocated by Ye, our strategy has a speeder convergence

rate, a lower call blocking probability and a higher load balancing level.

03 Feb 2016

We present quantum algorithms to realize geometric transformations (two-point

swappings, symmetric flips, local flips, orthogonal rotations, and

translations) based on an n-qubit normal arbitrary superposition state

(NASS). These transformations are implemented using quantum circuits consisting

of basic quantum gates, which are constructed with polynomial numbers of

single-qubit and two-qubit gates. Complexity analysis shows that the global

operators (symmetric flips, local flips, orthogonal rotations) can be

implemented with O(n) gates. The proposed geometric transformations are used

to facilitate applications of quantum images with low complexity.

20 Sep 2018

We propose to manipulate the statistic properties of the photons transport

nonreciprocally via quadratic optomechanical coupling. We present a scheme to

generate quadratic optomechanical interactions in the normal optical modes of a

whispering-gallery-mode (WGM) optomechanical system by eliminating the linear

optomechanical couplings via anticrossing of different modes. By optically

pumping the WGM optomechanical system in one direction, the effective quadratic

optomechanical coupling in that direction will be enhanced significantly, and

nonreciprocal photon blockade will be observed consequently. Our proposal has

potential applications for the on-chip nonreciprocal single-photon devices.

26 Sep 2019

We propose how to realize nonreciprocity for a weak input optical field via nonlinearity and synthetic magnetism. We show that the photons transmitting from a linear cavity to a nonlinear cavity (i.e., an asymmetric nonlinear optical molecule) exhibit nonreciprocal photon blockade but no clear nonreciprocal transmission. Both nonreciprocal transmission and nonreciprocal photon blockade can be observed, when one or two auxiliary modes are coupled to the asymmetric nonlinear optical molecule to generate an artificial magnetic field. Similar method can be used to create and manipulate nonreciprocal transmission and nonreciprocal photon blockade for photons bi-directionally transport in a symmetric nonlinear optical molecule. Additionally, a photon circulator with nonreciprocal photon blockade is designed based on nonlinearity and synthetic magnetism. The combination of nonlinearity and synthetic magnetism provides us an effective way towards the realization of quantum nonreciprocal devices, e.g., nonreciprocal single-photon sources and single-photon diodes.

24 Mar 2020

We propose to periodically modulate the onsite energy via two-tone drives, which can be furthermore used to engineer artificial gauge potential. As an example, we show that the fermionic ladder model penetrated with effective magnetic flux can be constructed by superconducting flux qubits using such two-tone-drive-engineered artificial gauge potential. In this superconducting system, the single-particle ground state can range from vortex phase to Meissner phase due to the competition between the interleg coupling strength and the effective magnetic flux. We also present the method to experimentally measure the chiral currents by the single-particle Rabi oscillations between adjacent qubits. In contrast to previous methods of generating artifical gauge potential, our proposal does not need the aid of auxiliary couplers and in principle remains valid only if the qubit circuit maintains enough anharmonicity. The fermionic ladder model with effective magnetic flux can also be interpreted as one-dimensional spin-orbit-coupled model, which thus lay a foundation towards the realization of quantum spin Hall effect.

08 Oct 2022

The principle of entropy increase is not only the basis of statistical

mechanics, but also closely related to the irreversibility of time, the origin

of life, chaos and turbulence. In this paper, we first discuss the dynamic

system definition of entropy from the perspective of symbol and partition of

information, and propose the entropy transfer characteristics based on the set

partition. By introducing the hypothesis of limited accuracy of measurement

into the continuous dynamical system, two necessary mechanisms for the

formation of chaos are obtained: the transfer of entropy from small scale to

macro scale (i.e. the increase of local entropy) and the dissipation of macro

information. The relationship between the local entropy increase and Lyapunov

exponent of dynamical system is established. And then the entropy increase and

abnormal dissipation mechanism in physical system are analyzed and discussed.

There are no more papers matching your filters at the moment.