Ecole Centrale Nantes

The rapid advances in the Internet of Things (IoT) have promoted a revolution in communication technology and offered various customer services. Artificial intelligence (AI) techniques have been exploited to facilitate IoT operations and maximize their potential in modern application scenarios. In particular, the convergence of IoT and AI has led to a new networking paradigm called Intelligent IoT (IIoT), which has the potential to significantly transform businesses and industrial domains. This paper presents a comprehensive survey of IIoT by investigating its significant applications in mobile networks, as well as its associated security and privacy issues. Specifically, we explore and discuss the roles of IIoT in a wide range of key application domains, from smart healthcare and smart cities to smart transportation and smart industries. Through such extensive discussions, we investigate important security issues in IIoT networks, where network attacks, confidentiality, integrity, and intrusion are analyzed, along with a discussion of potential countermeasures. Privacy issues in IIoT networks are also surveyed and discussed, including data, location, and model privacy leakage. Finally, we outline several key challenges and highlight potential research directions in this important area.

21 Oct 2025

This paper presents a novel framework for real-time human action recognition in industrial contexts using standard 2D cameras. We introduce a complete pipeline for robust, real-time estimation of human joint kinematics, which are fed to a temporally smoothed Transformer-based network for action recognition. We evaluate our approach on a new dataset of 11 subjects performing various actions. Unlike most of the literature, which relies on joint center positions (JCP) and runs offline, our method uses biomechanical priors, e.g., joint angles, for fast and robust real-time recognition. In addition, joint angles make the proposed method agnostic to sensor and subject poses as well as to anthropometric differences, and ensure robustness across environments and subjects. Our proposed learning model outperforms the best baseline model, which also runs in real time, across various metrics. It achieves 88% accuracy and generalizes well to subjects not facing the cameras. Finally, we demonstrate the robustness and usefulness of our technique through an online interaction experiment in which a simulated robot is controlled in real time via the recognized actions.
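To illustrate why joint angles can be less sensitive to subject size and position than raw joint positions, here is a minimal sketch. It assumes planar 2D keypoints and a hypothetical `joint_angle` helper; the abstract does not describe the actual kinematics pipeline, which may well differ (for instance by working with a 3D biomechanical model).

```python
import math

def joint_angle(a, b, c):
    """Unsigned angle (radians) at point b between segments b->a and b->c.

    Hypothetical helper for illustration only; points are (x, y) tuples.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return abs(math.atan2(cross, dot))

def scale_and_shift(p, scale, shift):
    """Uniformly scale a point and translate it (a larger subject standing elsewhere)."""
    return (scale * p[0] + shift[0], scale * p[1] + shift[1])

# Made-up keypoints: an arm bent at a right angle at the elbow.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
angle = joint_angle(shoulder, elbow, wrist)

# The same posture for a subject 1.7x larger and standing at a different place.
moved = [scale_and_shift(p, 1.7, (3.0, -2.0)) for p in (shoulder, elbow, wrist)]
angle_moved = joint_angle(*moved)
```

The keypoint coordinates change completely under scaling and translation, while the elbow angle stays the same up to floating-point error, which is the property the abstract attributes to angle-based features.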

01 Apr 2025

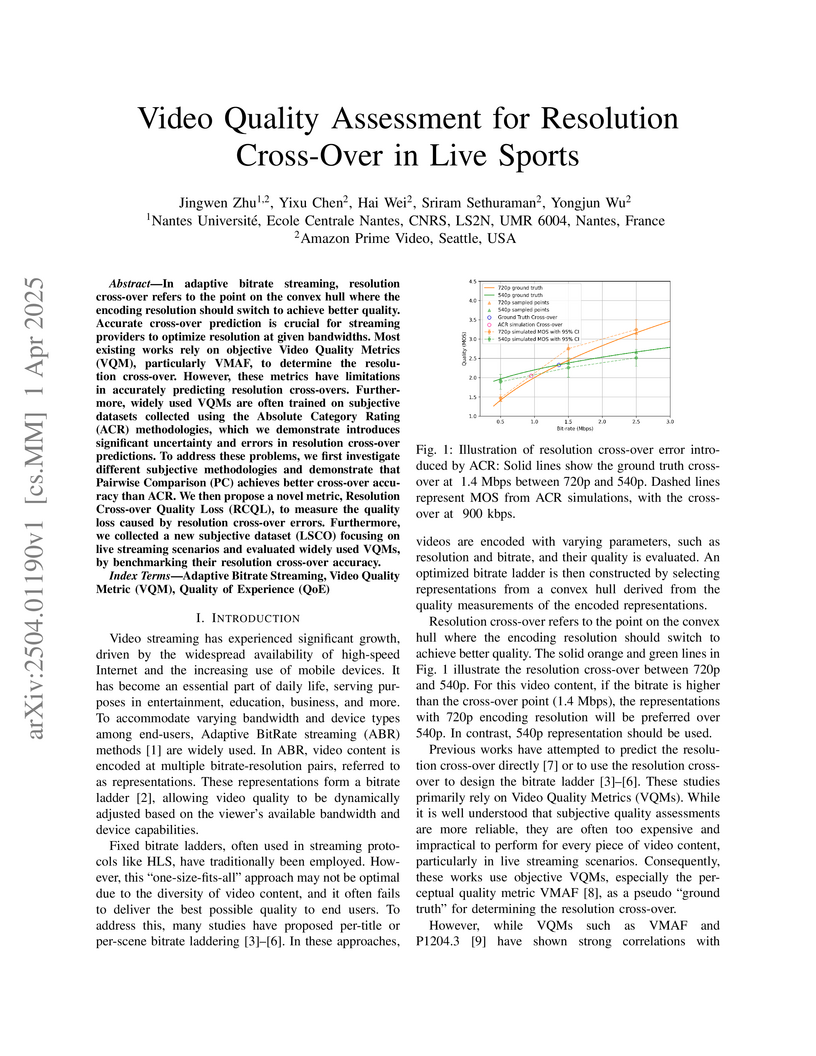

Researchers from Nantes Université and Amazon Prime Video developed a novel metric, Resolution Cross-Over Quality Loss (RCQL), and the Live Sport Cross-Over (LSCO) dataset to precisely identify optimal resolution switching points in adaptive bitrate streaming for live sports content. Their work demonstrates that pairwise comparison subjective tests provide more accurate cross-over determinations than traditional Absolute Category Rating, and highlights that VQMs designed for overall quality do not reliably predict these specific transition points.

The passivity property gives a sense of energy balance. The classical definitions and theorems of passivity in dynamical systems require time invariance and locally Lipschitz functions. However, these conditions are not met in many systems. A characteristic example is systems that are nonautonomous and discontinuous due to the presence of Coulomb friction. This paper presents an extended result for the negative feedback connection of two passive nonautonomous systems with set-valued right-hand sides, based on an invariance-like principle. This extension is the basis of a structural passivity-based control synthesis for underactuated mechanical systems with Coulomb friction. The first step consists in designing a control able to restore passivity in the considered friction law, achieving stabilization of the system trajectories to a domain with zero velocities. Then, an integral action is included to improve the latter result and track a constant reference (regulation). Finally, the control is designed considering dynamics in the actuation. These control objectives are obtained using fewer control inputs than degrees of freedom, as a result of the underactuated nature of the plant. The presented control strategy is implemented in an earthquake prevention scenario, where a mature seismogenic fault represents the considered frictional underactuated mechanical system. Simulations are performed to show how the seismic energy can be slowly dissipated by tracking a slow reference, thanks to fluid injection far from the fault, accounting also for the slow dynamics of the fluid's diffusion.

In the past years, AI has seen many advances in the field of NLP. This has led to the emergence of LLMs, such as the now famous GPT-3.5, which revolutionise the way humans can access or generate content. Current studies on LLM-based generative tools are mainly interested in the performance of such tools in generating relevant content (code, text or image). However, ethical concerns related to the design and use of generative tools seem to be growing, impacting the public acceptability for specific tasks. This paper presents a questionnaire survey to identify the intention to use generative tools by employees of an IT company in the context of their work. This survey is based on empirical models measuring intention to use (TAM by Davis, 1989, and UTAUT2 by Venkatesh et al., 2008). Our results indicate a rather average acceptability of generative tools, although the more useful the tool is perceived to be, the higher the intention to use seems to be. Furthermore, our analyses suggest that the frequency of use of generative tools is likely to be a key factor in understanding how employees perceive these tools in the context of their work. Following on from this work, we plan to investigate the nature of the requests that may be made to these tools by specific audiences.

Speckle based imaging consists of forming a super-resolved reconstruction of an unknown sample from low-resolution images obtained under random inhomogeneous illuminations (speckles). In a blind context where the illuminations are unknown, we study the intrinsic capacity of speckle-based imagers to recover spatial frequencies outside the frequency support of the data, with minimal assumptions about the sample. We demonstrate that, under physically realistic conditions, the covariance of the data has a super-resolution power corresponding to the squared magnitude of the imager point spread function. This theoretical result is important for many practical imaging systems such as acoustic and electromagnetic tomographs, fluorescence and photoacoustic microscopes, or synthetic aperture radar imaging. A numerical validation is presented in the case of fluorescence microscopy.

Anatomical segmentation of organs in ultrasound images is essential to many clinical applications, particularly for diagnosis and monitoring. Existing deep neural networks require a large amount of labeled data for training in order to achieve clinically acceptable performance. Yet, in ultrasound, due to characteristic properties such as speckle and clutter, it is challenging to obtain accurate segmentation boundaries, and precise pixel-wise labeling of images is highly dependent on the expertise of physicians. In contrast, CT scans have higher resolution and improved contrast, easing organ identification. In this paper, we propose a novel approach for learning to optimize task-based ultrasound image representations. Given annotated CT segmentation maps as a simulation medium, we model acoustic propagation through tissue via ray-casting to generate ultrasound training data. Our ultrasound simulator is fully differentiable and learns to optimize the parameters for generating physics-based ultrasound images guided by the downstream segmentation task. In addition, we train an image adaptation network between real and simulated images to achieve simultaneous image synthesis and automatic segmentation on US images in an end-to-end training setting. The proposed method is evaluated on aorta and vessel segmentation tasks and shows promising quantitative results. Furthermore, we also present qualitative results of optimized image representations on other organs.

In this paper, we present a novel approach to Handwritten Mathematical Expression Recognition (HMER) by leveraging graph-based modeling techniques. We introduce an End-to-end model with an Edge-weighted Graph Attention Mechanism (EGAT), designed to perform simultaneous node and edge classification. This model effectively integrates node and edge features, facilitating the prediction of symbol classes and their relationships within mathematical expressions. Additionally, we propose a stroke-level Graph Modeling method for both local (LGM) and global (GGM) information, which applies an end-to-end model to Online HMER tasks, transforming the recognition problem into node and edge classification tasks in graph structure. By capturing both local and global graph features, our method ensures comprehensive understanding of the expression structure. Through the combination of these components, our system demonstrates superior performance in symbol detection, relation classification, and expression-level recognition.

03 Jul 2024

Many environmental processes such as rainfall, wind or snowfall are inherently spatial, and the modelling of extremes has to take that feature into account. In addition, environmental processes are often associated with an angle, e.g., wind speed and direction, or extreme snowfall and time of occurrence in the year. This article proposes a Bayesian hierarchical model with a conditional independence assumption that aims at simultaneously modelling spatial extremes and an angular component. The proposed model relies on extreme value theory as well as recent developments for handling directional statistics over a continuous domain. Working within a Bayesian setting, a Gibbs sampler is introduced, whose performance is analysed through a simulation study. The paper ends with an application to extreme wind speed in France. Results show that extreme wind events in France mainly come from the west, apart from the Mediterranean part of France and the Alps.

07 Feb 2024

Due to the energy transition, today's electrical networks include synchronous machines and inverter-based resources interfacing renewable energies such as wind turbines, solar panels, and Battery Energy Storage Systems to the grid. In such systems, interactions known as coupling modes or dynamic interactions, between synchronous machines and inverter-based resources may arise. This paper conducts a clear and exhaustive study on a proposed benchmark, in order to analyze, quantify and classify these new types of modes. Detailed models representing electromagnetic transient phenomena are developed and linearized, then used for conducting modal analysis to fully characterize the small-signal stability of the system. Also, a sensitivity analysis is presented to evaluate the impact of key parameters on the detected modes of oscillation. Besides the exhaustive classification of the possible coupling modes, the proposed benchmark and methodology can be used to study any given power system in a minimal order modeling. The case of a fully detailed power grid based on the IEEE 39 bus system was studied as an illustrative example.

With the ever-rising quality of deep generative models, it is increasingly important to be able to discern whether the audio data at hand have been recorded or synthesized. Although the detection of fake speech signals has been studied extensively, this is not the case for the detection of fake environmental audio.

We propose a simple and efficient pipeline for detecting fake environmental sounds based on the CLAP audio embedding. We evaluate this detector using audio data from the 2023 DCASE challenge task on Foley sound synthesis.

Our experiments show that fake sounds generated by 44 state-of-the-art synthesizers can be detected on average with 98% accuracy. We show that using an audio embedding learned on environmental audio is beneficial over a standard VGGish one as it provides a 10% increase in detection performance. Informal listening to Incorrect Negative examples demonstrates audible features of fake sounds missed by the detector such as distortion and implausible background noise.

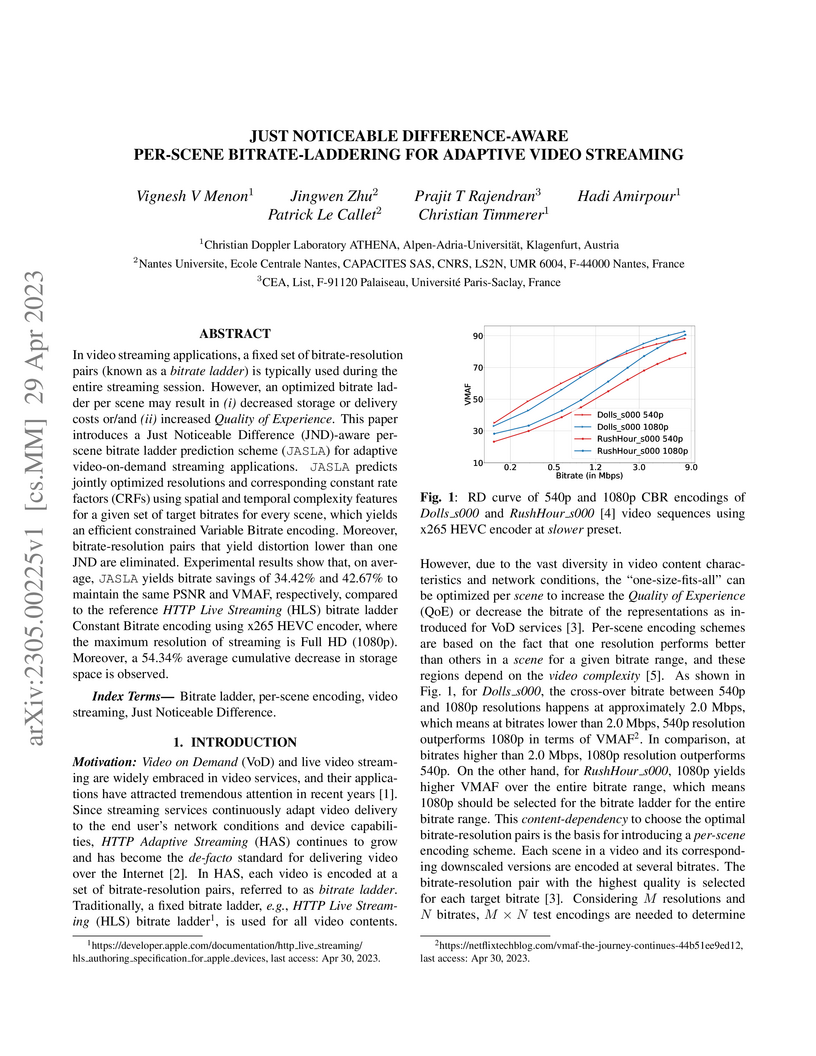

In video streaming applications, a fixed set of bitrate-resolution pairs (known as a bitrate ladder) is typically used during the entire streaming session. However, an optimized bitrate ladder per scene may result in (i) decreased storage or delivery costs and/or (ii) increased Quality of Experience. This paper introduces a Just Noticeable Difference (JND)-aware per-scene bitrate ladder prediction scheme (JASLA) for adaptive video-on-demand streaming applications. JASLA predicts jointly optimized resolutions and corresponding constant rate factors (CRFs) using spatial and temporal complexity features for a given set of target bitrates for every scene, which yields an efficient constrained Variable Bitrate encoding. Moreover, bitrate-resolution pairs that yield distortion lower than one JND are eliminated. Experimental results show that, on average, JASLA yields bitrate savings of 34.42% and 42.67% to maintain the same PSNR and VMAF, respectively, compared to the reference HTTP Live Streaming (HLS) bitrate ladder Constant Bitrate encoding using the x265 HEVC encoder, where the maximum resolution of streaming is Full HD (1080p). Moreover, a 54.34% average cumulative decrease in storage space is observed.
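The JND-based elimination step mentioned above can be pictured with a small sketch. This is not JASLA itself: the `prune_ladder` function, the use of a VMAF difference as the JND proxy, the 6-point threshold, and the example rungs are all assumptions made for illustration.

```python
def prune_ladder(rungs, jnd_threshold=6.0):
    """Drop rungs that are less than one JND better than the last kept rung.

    rungs: list of (bitrate_kbps, resolution, vmaf) tuples.
    jnd_threshold: assumed quality gap (in VMAF points) treated as one JND;
    a placeholder value, not a figure taken from the paper.
    """
    kept = []
    for rung in sorted(rungs, key=lambda r: r[0]):
        # Always keep the lowest rung; keep later rungs only if the
        # quality gain over the last kept rung reaches one JND.
        if not kept or rung[2] - kept[-1][2] >= jnd_threshold:
            kept.append(rung)
    return kept

# Made-up ladder for a single scene.
ladder = [
    (500, "360p", 60.0),
    (1000, "540p", 64.0),
    (2000, "720p", 75.0),
    (4000, "1080p", 78.0),
    (6000, "1080p", 85.0),
]
pruned = prune_ladder(ladder)
kept_bitrates = [r[0] for r in pruned]
```

With these made-up numbers the 1000 kbps and 4000 kbps rungs are dropped, since each improves on the previously kept rung by less than the assumed threshold. Fewer stored renditions is one way a per-scene ladder can reduce storage.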

23 Nov 2024

Reduced-scale experiments offer a controlled and safe environment for studying the effects of blasts on structures. Traditionally, these experiments rely on the detonation of solid or gaseous explosive mixtures, with only limited understanding of alternative explosive sources. This paper presents a detailed investigation of the blasts produced by exploding aluminum wires for generating shock waves of controlled energy levels. We meticulously design the experiments to ensure a precise quantification of the underlying uncertainties and conduct comprehensive parametric studies. We draw practical relationships of the blast intensity with respect to the stand-off distance and the stored energy levels. The analysis demonstrates self-similarity of blasts with respect to the conventional concept of the scaled distance, a desirable degree of sphericity of the generated shock waves, and high repeatability. Finally, we quantify the equivalence of the reduced-scale blasts from exploding wires with high explosives, including TNT. The present experimental setup and study demonstrate the high degree of robustness and effectiveness of exploding aluminum wires as a tool for controlled blast generation and reduced-scale structural testing.
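As a reminder of what the scaled distance refers to, the sketch below uses the standard Hopkinson-Cranz form, Z = R / W^(1/3), with R the stand-off distance and W an equivalent charge mass. Expressing the exploding-wire energy as a TNT-equivalent mass, and the numbers used, are assumptions for illustration; the abstract does not give the paper's exact formulation.

```python
def scaled_distance(standoff_m, charge_kg):
    """Hopkinson-Cranz scaled distance Z = R / W**(1/3), in m/kg^(1/3).

    standoff_m: stand-off distance R in metres.
    charge_kg: equivalent charge mass W in kilograms (assumed TNT equivalent).
    """
    if standoff_m <= 0 or charge_kg <= 0:
        raise ValueError("stand-off distance and charge mass must be positive")
    return standoff_m / charge_kg ** (1.0 / 3.0)

# Two made-up configurations: eight times the charge at twice the distance.
z_small = scaled_distance(1.0, 1.0)
z_large = scaled_distance(2.0, 8.0)
```

Both configurations share the same scaled distance, so under blast self-similarity they would be expected to produce the same peak overpressure, which is what makes reduced-scale testing informative about larger events.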

In this paper, we target the problem of fracture classification from clinical X-ray images towards an automated Computer Aided Diagnosis (CAD) system. Although primarily dealing with an image classification problem, we argue that localizing the fracture in the image is crucial to make good class predictions. Therefore, we propose and thoroughly analyze several schemes for simultaneous fracture localization and classification. We show that using an auxiliary localization task, in general, improves the classification performance. Moreover, it is possible to avoid the need for additional localization annotations thanks to recent advancements in weakly-supervised deep learning approaches. Among such approaches, we investigate and adapt Spatial Transformers (ST), Self-Transfer Learning (STL), and localization from global pooling layers. We provide a detailed quantitative and qualitative validation on a dataset of 1347 femur fracture images and report high accuracy with regard to inter-expert correlation values reported in the literature. Our investigations show that i) lesion localization improves the classification outcome, ii) weakly-supervised methods improve baseline classification without any additional cost, and iii) STL guides feature activations and boosts performance. We plan to make both the dataset and code available.

A Just Noticeable Difference (JND) model developed from the Human Vision System (HVS) through subjective studies is valuable for many multimedia use cases. In the streaming industry, it is commonly applied to reach a good balance between compression efficiency and perceptual quality when selecting video encoding recipes. Nevertheless, recent state-of-the-art deep-learning-based JND prediction models rely on large-scale JND ground truth that is expensive and time-consuming to collect. Most existing JND datasets contain a limited number of contents and are limited to a certain codec (e.g., H264). As a result, JND prediction models trained on such datasets are normally not agnostic to the codec. To this end, in order to decouple encoding recipes and JND estimation, we propose a novel framework to map the difference of objective Video Quality Assessment (VQA) scores, i.e., VMAF, between two given videos encoded with different encoding recipes from the same content to the probability of having a just noticeable difference between them. The proposed probability mapping model learns from DCR test data, which is significantly cheaper to collect than standard JND subjective test data. As we utilize an objective VQA metric (e.g., VMAF, which is trained with contents encoded with different codecs) as a proxy to estimate JND, our model is agnostic to codecs and computationally efficient. Through extensive experiments, it is demonstrated that the proposed model is able to estimate JND values efficiently.

04 Mar 2025

This paper explores the role of generalized continuum mechanics, and the feasibility of model-free data-driven computing approaches thereof, in solids undergoing failure by strain localization. Specifically, we set forth a methodology for capturing material instabilities using data-driven mechanics without prior information regarding the failure mode. We show numerically that, in problems involving strain localization, the standard data-driven framework for Cauchy/Boltzmann continua fails to capture the length scale of the material, as expected. We address this shortcoming by formulating a generalized data-driven framework for micromorphic continua that effectively captures both stiffness and length-scale information, as encoded in the material data, in a model-free manner. These properties are exhibited systematically in a one-dimensional softening bar problem and further verified through selected plane-strain problems.

21 Jun 2024

Multi-label imbalanced classification poses a significant challenge in machine learning, particularly evident in bioacoustics, where animal sounds often co-occur and certain sounds are much less frequent than others. This paper focuses on the specific case of classifying anuran species sounds using the AnuraSet dataset, which contains both class imbalance and multi-label examples. To address these challenges, we introduce Mixture of Mixups (Mix2), a framework that leverages the mixing regularization methods Mixup, Manifold Mixup, and MultiMix. Experimental results show that these methods, individually, may lead to suboptimal results; however, when applied randomly, with one selected at each training iteration, they prove effective in addressing the mentioned challenges, particularly for rare classes with few occurrences. Further analysis reveals that Mix2 is also proficient in classifying sounds across various levels of class co-occurrences.
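The idea of picking one mixing method per training iteration can be sketched as follows. This is only an illustration under stated assumptions: `pick_method`, uniform selection, and the Beta parameter 0.4 are hypothetical, and only input-level Mixup is implemented, since Manifold Mixup and MultiMix operate on hidden representations of a network that is not modelled here.

```python
import random

# Names of the three mixing methods listed in the abstract.
METHODS = ("mixup", "manifold_mixup", "multimix")

def pick_method(rng):
    """Choose one mixing method for the current iteration (uniformly, by assumption)."""
    return rng.choice(METHODS)

def mixup(x1, y1, x2, y2, lam):
    """Input-level Mixup: convex combination of two examples and their label vectors."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y1, y2)]
    return x, y

rng = random.Random(0)

# One method per iteration over five hypothetical training steps.
schedule = [pick_method(rng) for _ in range(5)]

# Mixing coefficient drawn from Beta(alpha, alpha); alpha = 0.4 is a placeholder.
lam = rng.betavariate(0.4, 0.4)

# Multi-label targets mix just like single-label ones: a rare class that is
# present in only one of the two examples keeps a fractional target.
x_mixed, y_mixed = mixup([1.0, 0.0], [1, 0, 0], [0.0, 1.0], [0, 1, 1], 0.25)
```

Here `x_mixed` is `[0.25, 0.75]` and `y_mixed` is `[0.25, 0.75, 0.75]`, so every label present in either source example contributes to the training signal.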

12 Apr 2024

The propagation path of quasistatic cracks under monotonic loading is known to be strongly influenced by the anisotropy of the fracture energy in crystalline solids or engineered materials with a regular microstructure. Such cracks generally follow directions close to minima of the fracture energy. Here we demonstrate both experimentally and computationally that fatigue cracks under cyclic loading follow dramatically different paths that are predominantly dictated by the symmetry of the loading with the microstructure playing a negligible or subdominant role.

The main goal of this paper is to study the geometric structures associated with the representation of tensors in subspace based formats. To do this we use a property of the so-called minimal subspaces which allows us to describe the tensor representation by means of a rooted tree. By using the tree structure and the dimensions of the associated minimal subspaces, we introduce, in the underlying algebraic tensor space, the set of tensors in a tree-based format with either bounded or fixed tree-based rank. This class contains the Tucker format and the Hierarchical Tucker format (including the Tensor Train format). In particular, we show that the set of tensors in the tree-based format with bounded (respectively, fixed) tree-based rank of an algebraic tensor product of normed vector spaces is an analytic Banach manifold. Indeed, the manifold geometry for the set of tensors with fixed tree-based rank is induced by a fibre bundle structure and the manifold geometry for the set of tensors with bounded tree-based rank is given by a finite union of connected components. In order to describe the relationship between these manifolds and the natural ambient space, we introduce the definition of topological tensor spaces in the tree-based format. We prove under natural conditions that any tensor of the topological tensor space under consideration admits best approximations in the manifold of tensors in the tree-based format with bounded tree-based rank. In this framework, we also show that the tangent (Banach) space at a given tensor is a complemented subspace in the natural ambient tensor Banach space and hence the set of tensors in the tree-based format with bounded (respectively, fixed) tree-based rank is an immersed submanifold. This fact allows us to extend the Dirac-Frenkel variational principle in the framework of topological tensor spaces.

17 Oct 2022

Topological data analysis has recently been applied to the study of dynamic networks. In this context, an algorithm was introduced that helps, among other things, to detect early warning signals of abnormal changes in the dynamic network under study. However, the complexity of this algorithm increases significantly as the database under study grows. In this paper, we propose a simplification of the algorithm that does not affect its performance. We give various applications and simulations of the new algorithm on some weighted networks. The results clearly show the efficiency of the introduced approach. Moreover, in some cases, the proposed algorithm makes it possible to highlight local information and sometimes early warning signals of local abnormal changes.
