Fraunhofer Center for Machine Learning

Flatness of the loss curve is conjectured to be connected to the generalization ability of machine learning models, in particular neural networks. While it has been empirically observed that flatness measures consistently correlate strongly with generalization, it is still an open theoretical problem why and under which circumstances flatness is connected to generalization, in particular in light of reparameterizations that change certain flatness measures but leave generalization unchanged. We investigate the connection between flatness and generalization by relating it to the interpolation from representative data, deriving notions of representativeness, and feature robustness. The notions allow us to rigorously connect flatness and generalization and to identify conditions under which the connection holds. Moreover, they give rise to a novel, but natural relative flatness measure that correlates strongly with generalization, simplifies to ridge regression for ordinary least squares, and solves the reparameterization issue.

Just as user preferences change with time, item reviews also reflect those same preference changes. In a nutshell, if one is to sequentially incorporate review content knowledge into recommender systems, one is naturally led to dynamical models of text. In the present work we leverage the known power of reviews to enhance rating predictions in a way that (i) respects the causality of review generation and (ii) includes, in a bidirectional fashion, the ability of ratings to inform language review models and vice-versa, language representations that help predict ratings end-to-end. Moreover, our representations are time-interval aware and thus yield a continuous-time representation of the dynamics. We provide experiments on real-world datasets and show that our methodology is able to outperform several state-of-the-art models. Source code for all models can be found at [1].

The paper introduces 'informed machine learning' and presents a novel three-dimensional taxonomy for classifying approaches that integrate formal prior knowledge into machine learning systems. This framework unifies fragmented terminology in the field and provides a structured overview, identifying common patterns and guiding principles for leveraging domain knowledge to address limitations of purely data-driven models.

An appropriate data basis grants one of the most important aspects for

training and evaluating probabilistic trajectory prediction models based on

neural networks. In this regard, a common shortcoming of current benchmark

datasets is their limitation to sets of sample trajectories and a lack of

actual ground truth distributions, which prevents the use of more expressive

error metrics, such as the Wasserstein distance for model evaluation. Towards

this end, this paper proposes a novel approach to synthetic dataset generation

based on composite probabilistic B\'ezier curves, which is capable of

generating ground truth data in terms of probability distributions over full

trajectories. This allows the calculation of arbitrary posterior distributions.

The paper showcases an exemplary trajectory prediction model evaluation using

generated ground truth distribution data.

We propose DenseHMM - a modification of Hidden Markov Models (HMMs) that allows to learn dense representations of both the hidden states and the observables. Compared to the standard HMM, transition probabilities are not atomic but composed of these representations via kernelization. Our approach enables constraint-free and gradient-based optimization. We propose two optimization schemes that make use of this: a modification of the Baum-Welch algorithm and a direct co-occurrence optimization. The latter one is highly scalable and comes empirically without loss of performance compared to standard HMMs. We show that the non-linearity of the kernelization is crucial for the expressiveness of the representations. The properties of the DenseHMM like learned co-occurrences and log-likelihoods are studied empirically on synthetic and biomedical datasets.

Researchers from Fraunhofer IAIS, University of Bonn, and Volkswagen Group Research extended dynamic model averaging to non-convex deep learning objectives, developing a protocol that reduces communication by an order of magnitude compared to periodic methods while maintaining predictive performance and adapting rapidly to concept drifts.

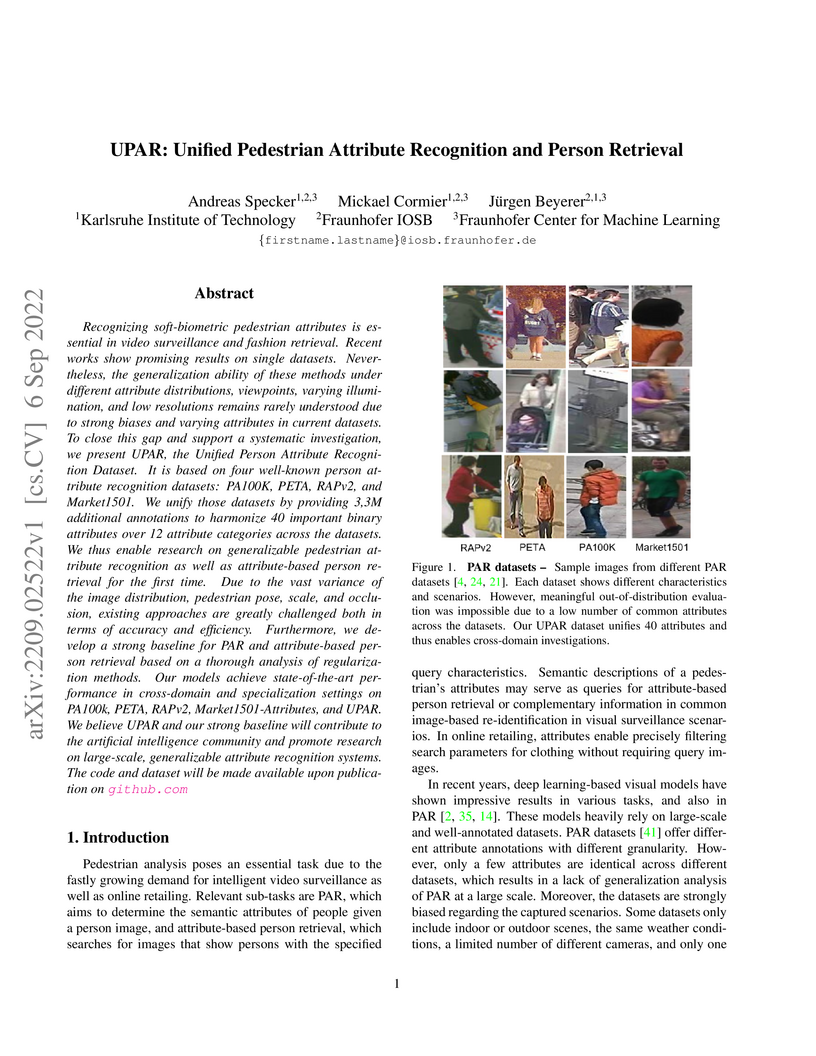

Recognizing soft-biometric pedestrian attributes is essential in video surveillance and fashion retrieval. Recent works show promising results on single datasets. Nevertheless, the generalization ability of these methods under different attribute distributions, viewpoints, varying illumination, and low resolutions remains rarely understood due to strong biases and varying attributes in current datasets. To close this gap and support a systematic investigation, we present UPAR, the Unified Person Attribute Recognition Dataset. It is based on four well-known person attribute recognition datasets: PA100K, PETA, RAPv2, and Market1501. We unify those datasets by providing 3,3M additional annotations to harmonize 40 important binary attributes over 12 attribute categories across the datasets. We thus enable research on generalizable pedestrian attribute recognition as well as attribute-based person retrieval for the first time. Due to the vast variance of the image distribution, pedestrian pose, scale, and occlusion, existing approaches are greatly challenged both in terms of accuracy and efficiency. Furthermore, we develop a strong baseline for PAR and attribute-based person retrieval based on a thorough analysis of regularization methods. Our models achieve state-of-the-art performance in cross-domain and specialization settings on PA100k, PETA, RAPv2, Market1501-Attributes, and UPAR. We believe UPAR and our strong baseline will contribute to the artificial intelligence community and promote research on large-scale, generalizable attribute recognition systems.

We present an improved approach for 3D object detection in point cloud data based on the Frustum PointNet (F-PointNet). Compared to the original F-PointNet, our newly proposed method considers the point neighborhood when computing point features. The newly introduced local neighborhood embedding operation mimics the convolutional operations in 2D neural networks. Thus features of each point are not only computed with the features of its own or of the whole point cloud but also computed especially with respect to the features of its neighbors. Experiments show that our proposed method achieves better performance than the F-Pointnet baseline on 3D object detection tasks.

Machine learning methods have been remarkably successful for a wide range of

application areas in the extraction of essential information from data. An

exciting and relatively recent development is the uptake of machine learning in

the natural sciences, where the major goal is to obtain novel scientific

insights and discoveries from observational or simulated data. A prerequisite

for obtaining a scientific outcome is domain knowledge, which is needed to gain

explainability, but also to enhance scientific consistency. In this article we

review explainable machine learning in view of applications in the natural

sciences and discuss three core elements which we identified as relevant in

this context: transparency, interpretability, and explainability. With respect

to these core elements, we provide a survey of recent scientific works that

incorporate machine learning and the way that explainable machine learning is

used in combination with domain knowledge from the application areas.

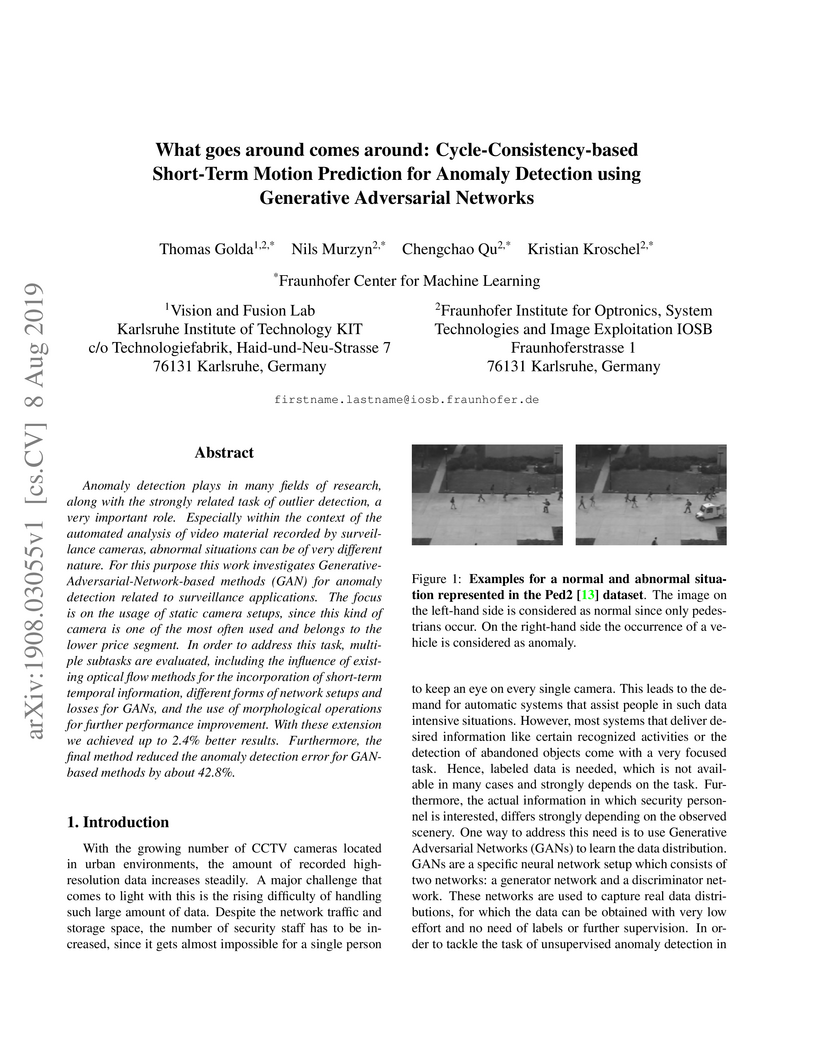

Anomaly detection plays in many fields of research, along with the strongly

related task of outlier detection, a very important role. Especially within the

context of the automated analysis of video material recorded by surveillance

cameras, abnormal situations can be of very different nature. For this purpose

this work investigates Generative-Adversarial-Network-based methods (GAN) for

anomaly detection related to surveillance applications. The focus is on the

usage of static camera setups, since this kind of camera is one of the most

often used and belongs to the lower price segment. In order to address this

task, multiple subtasks are evaluated, including the influence of existing

optical flow methods for the incorporation of short-term temporal information,

different forms of network setups and losses for GANs, and the use of

morphological operations for further performance improvement. With these

extension we achieved up to 2.4% better results. Furthermore, the final method

reduced the anomaly detection error for GAN-based methods by about 42.8%.

Monte Carlo (MC) dropout is one of the state-of-the-art approaches for

uncertainty estimation in neural networks (NNs). It has been interpreted as

approximately performing Bayesian inference. Based on previous work on the

approximation of Gaussian processes by wide and deep neural networks with

random weights, we study the limiting distribution of wide untrained NNs under

dropout more rigorously and prove that they as well converge to Gaussian

processes for fixed sets of weights and biases. We sketch an argument that this

property might also hold for infinitely wide feed-forward networks that are

trained with (full-batch) gradient descent. The theory is contrasted by an

empirical analysis in which we find correlations and non-Gaussian behaviour for

the pre-activations of finite width NNs. We therefore investigate how

(strongly) correlated pre-activations can induce non-Gaussian behavior in NNs

with strongly correlated weights.

As neural networks become able to generate realistic artificial images, they have the potential to improve movies, music, video games and make the internet an even more creative and inspiring place. Yet, the latest technology potentially enables new digital ways to lie. In response, the need for a diverse and reliable method toolbox arises to identify artificial images and other content. Previous work primarily relies on pixel-space CNNs or the Fourier transform. To the best of our knowledge, synthesized fake image analysis and detection methods based on a multi-scale wavelet representation, localized in both space and frequency, have been absent thus far. The wavelet transform conserves spatial information to a degree, which allows us to present a new analysis. Comparing the wavelet coefficients of real and fake images allows interpretation. Significant differences are identified. Additionally, this paper proposes to learn a model for the detection of synthetic images based on the wavelet-packet representation of natural and GAN-generated images. Our lightweight forensic classifiers exhibit competitive or improved performance at comparatively small network sizes, as we demonstrate on the FFHQ, CelebA and LSUN source identification problems. Furthermore, we study the binary FaceForensics++ fake-detection problem.

One of the central problems studied in the theory of machine learning is the question of whether, for a given class of hypotheses, it is possible to efficiently find a {consistent} hypothesis, i.e., which has zero training error. While problems involving {\em convex} hypotheses have been extensively studied, the question of whether efficient learning is possible for non-convex hypotheses composed of possibly several disconnected regions is still less understood. Although it has been shown quite a while ago that efficient learning of weakly convex hypotheses, a parameterized relaxation of convex hypotheses, is possible for the special case of Boolean functions, the question of whether this idea can be developed into a generic paradigm has not been studied yet. In this paper, we provide a positive answer and show that the consistent hypothesis finding problem can indeed be solved in polynomial time for a broad class of weakly convex hypotheses over metric spaces. To this end, we propose a general domain-independent algorithm for finding consistent weakly convex hypotheses and prove sufficient conditions for its efficiency that characterize the corresponding hypothesis classes. To illustrate our general algorithm and its properties, we discuss several non-trivial learning examples to demonstrate how it can be used to efficiently solve the corresponding consistent hypothesis finding problem. Without the weak convexity constraint, these problems are known to be computationally intractable. We then proceed to show that the general idea of our algorithm can even be extended to the case of extensional weakly convex hypotheses, as it naturally arise, e.g., when performing vertex classification in graphs. We prove that using our extended algorithm, the problem can be solved in polynomial time provided the distances in the domain can be computed efficiently.

Despite ongoing research on the topic of adversarial examples in deep

learning for computer vision, some fundamentals of the nature of these attacks

remain unclear. As the manifold hypothesis posits, high-dimensional data tends

to be part of a low-dimensional manifold. To verify the thesis with adversarial

patches, this paper provides an analysis of a set of adversarial patches and

investigates the reconstruction abilities of three different dimensionality

reduction methods. Quantitatively, the performance of reconstructed patches in

an attack setting is measured and the impact of sampled patches from the latent

space during adversarial training is investigated. The evaluation is performed

on two publicly available datasets for person detection. The results indicate

that more sophisticated dimensionality reduction methods offer no advantages

over a simple principal component analysis.

In this paper, we develop a novel benchmark suite including both a 2D synthetic image dataset and a 3D synthetic point cloud dataset. Our work is a sub-task in the framework of a remanufacturing project, in which small electric motors are used as fundamental objects. Apart from the given detection, classification, and segmentation annotations, the key objects also have multiple learnable attributes with ground truth provided. This benchmark can be used for computer vision tasks including 2D/3D detection, classification, segmentation, and multi-attribute learning. It is worth mentioning that most attributes of the motors are quantified as continuously variable rather than binary, which makes our benchmark well-suited for the less explored regression tasks. In addition, appropriate evaluation metrics are adopted or developed for each task and promising baseline results are provided. We hope this benchmark can stimulate more research efforts on the sub-domain of object attribute learning and multi-task learning in the future.

On robotics computer vision tasks, generating and annotating large amounts of data from real-world for the use of deep learning-based approaches is often difficult or even impossible. A common strategy for solving this problem is to apply simulation-to-reality (sim2real) approaches with the help of simulated scenes. While the majority of current robotics vision sim2real work focuses on image data, we present an industrial application case that uses sim2real transfer learning for point cloud data. We provide insights on how to generate and process synthetic point cloud data in order to achieve better performance when the learned model is transferred to real-world data. The issue of imbalanced learning is investigated using multiple strategies. A novel patch-based attention network is proposed additionally to tackle this problem.

Adversarial patches are still a simple yet powerful white box attack that can be used to fool object detectors by suppressing possible detections. The patches of these so-called evasion attacks are computational expensive to produce and require full access to the attacked detector. This paper addresses the problem of computational expensiveness by analyzing 375 generated patches, calculating the principal components of these and show, that linear combinations of the resulting "eigenpatches" can be used to fool object detections successfully.

To ensure the security of airports, it is essential to protect the airside from unauthorized access. For this purpose, security fences are commonly used, but they require regular inspection to detect damages. However, due to the growing shortage of human specialists and the large manual effort, there is the need for automated methods. The aim is to automatically inspect the fence for damage with the help of an autonomous robot. In this work, we explore object detection methods to address the fence inspection task and localize various types of damages. In addition to evaluating four State-of-the-Art (SOTA) object detection models, we analyze the impact of several design criteria, aiming at adapting to the task-specific challenges. This includes contrast adjustment, optimization of hyperparameters, and utilization of modern backbones. The experimental results indicate that our optimized You Only Look Once v5 (YOLOv5) model achieves the highest accuracy of the four methods with an increase of 6.9% points in Average Precision (AP) compared to the baseline. Moreover, we show the real-time capability of the model. The trained models are published on GitHub: this https URL.

Many modern-day applications require the development of new materials with specific properties. In particular, the design of new glass compositions is of great industrial interest. Current machine learning methods for learning the composition-property relationship of glasses promise to save on expensive trial-and-error approaches. Even though quite large datasets on the composition of glasses and their properties already exist (i.e., with more than 350,000 samples), they cover only a very small fraction of the space of all possible glass compositions. This limits the applicability of purely data-driven models for property prediction purposes and necessitates the development of models with high extrapolation power. In this paper, we propose a neural network model which incorporates prior scientific and expert knowledge in its learning pipeline. This informed learning approach leads to an improved extrapolation power compared to blind (uninformed) neural network models. To demonstrate this, we train our models to predict three different material properties, that is, the glass transition temperature, the Young's modulus (at room temperature), and the shear modulus of binary oxide glasses which do not contain sodium. As representatives for conventional blind neural network approaches we use five different feed-forward neural networks of varying widths and depths. For each property, we set up model ensembles of multiple trained models and show that, on average, our proposed informed model performs better in extrapolating the three properties of previously unseen sodium borate glass samples than all five conventional blind models.

Using data from a simulated cup drawing process, we demonstrate how the inherent geometrical structure of cup meshes can be used to effectively prune an artificial neural network in a straightforward way.

There are no more papers matching your filters at the moment.